Abstract

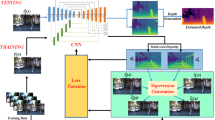

Depth estimation is an important task for autonomous driving, 3D object detection and recognition, scene understanding, and other fields. To improve the quality of depth estimation in high-frequency edge-detail areas, this paper presents DSS-Net, a dual-stream stereo network that combines a bottom-up stream based on scene understanding with a top-down stream based on local disparity optimization. In the bottom-up stream, a deep network extracts deep features containing high-level semantic information, and a coarse disparity estimate is computed by classifying pixels into depth intervals based on these features. In the top-down stream, the model uses high-resolution shallow features with rich detail, together with the initial coarse disparity, to construct a local dense matching cost in the disparity neighborhood of each pixel of the initial disparity map, and then uses multiple stacked hourglass networks to refine the disparity map in several stages. Experiments on the Scene Flow, KITTI 2012, and KITTI 2015 datasets show that our method achieves high precision.
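
The coarse-to-fine idea in the abstract can be illustrated with a small NumPy sketch. It is not the DSS-Net implementation: the equal-width disparity intervals, the correlation-based cost, and the winner-take-all selection (standing in for the learned stacked hourglass refinement) are all assumptions made for illustration, and the function names `coarse_disparity`, `local_cost_volume`, and `refine_wta` are hypothetical.

```python
import numpy as np


def coarse_disparity(interval_probs, max_disp):
    """Expected disparity from per-pixel probabilities over K intervals.

    interval_probs: array (K, H, W) that sums to 1 over axis 0.
    Assumption: the K intervals are equal-width and cover [0, max_disp].
    """
    k = interval_probs.shape[0]
    width = max_disp / k
    centers = (np.arange(k) + 0.5) * width  # interval midpoints, shape (K,)
    return np.tensordot(centers, interval_probs, axes=(0, 0))  # (H, W)


def local_cost_volume(left_feat, right_feat, coarse, radius):
    """Matching cost only for candidates within `radius` of the coarse estimate.

    left_feat, right_feat: arrays (C, H, W).
    Assumption: cost is the negative mean feature correlation.
    Returns (cost, candidates), both of shape (2 * radius + 1, H, W).
    """
    _, h, w = left_feat.shape
    offsets = np.arange(-radius, radius + 1)
    base = np.rint(coarse).astype(int)               # (H, W)
    cands = base[None] + offsets[:, None, None]      # (D, H, W)
    # Column in the right image that each candidate disparity points to.
    xs = np.arange(w)[None, None, :] - cands
    valid = (xs >= 0) & (xs < w) & (cands >= 0)
    xs_clipped = np.clip(xs, 0, w - 1)
    rows = np.broadcast_to(np.arange(h)[None, :, None], xs_clipped.shape)
    sampled = right_feat[:, rows, xs_clipped]        # (C, D, H, W)
    cost = -(left_feat[:, None] * sampled).mean(axis=0)
    # Out-of-image candidates can never win.
    return np.where(valid, cost, np.inf), cands


def refine_wta(cost, cands):
    """Pick the lowest-cost candidate per pixel (winner-take-all).

    This replaces the paper's learned multi-stage refinement with a
    simple argmin, purely for illustration.
    """
    idx = cost.argmin(axis=0)
    return np.take_along_axis(cands, idx[None], axis=0)[0]
```

Restricting the search to a few candidates around the coarse estimate keeps the cost volume small (here `2 * radius + 1` slices rather than one per possible disparity), which is what makes it affordable to match on high-resolution shallow features.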

Funding

This research is supported by the National Natural Science Foundation of China under Grant 62173083, and partly by the Major Program of the National Natural Science Foundation of China (71790614) and the 111 Project (B16009).

Ethics declarations

Conflict of Interest

The authors declare that they have no conflicts of interest related to this work.

About this article

Cite this article

Zhong, Y., Jia, T., Xi, K. et al. Dual-stream stereo network for depth estimation. Vis Comput 39, 5343–5357 (2023). https://doi.org/10.1007/s00371-022-02663-3

DOI: https://doi.org/10.1007/s00371-022-02663-3