Abstract

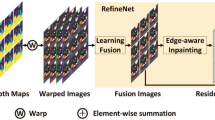

Compared with hardware-dependent methods, light field (LF) reconstruction algorithms make acquiring a densely sampled light field (DSLF) more economical and convenient. Most current LF reconstruction methods either apply multiple convolutional layers directly to the input views to generate the DSLF, or warp the input views to novel viewpoints based on estimated depth and then blend the warped views to obtain the DSLF. The former tend to produce blurry results in textured regions, whereas the latter introduce distortions near depth discontinuities. In this paper, we propose an end-to-end learning-based approach that combines the strengths of both types of methods in a parallel and complementary manner to reconstruct a high-quality light field. Our method consists of three sub-networks: two parallel sub-networks, termed ASR-Net and Warp-Net, and a refinement sub-network, termed Refine-Net. ASR-Net learns an intermediate DSLF directly through deep convolutional layers, while Warp-Net warps the input views based on the estimated depth to obtain further intermediate DSLFs that preserve high-frequency texture information. These intermediate DSLFs are adaptively fused through learned attention maps, yielding a fused DSLF that combines the advantages of both types of intermediate DSLFs. Finally, a residual map learned from the intermediate DSLFs is added to the fused DSLF to further improve its quality. Comprehensive experiments on several LF datasets demonstrate the superiority of the proposed method over state-of-the-art approaches.
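The data flow described in the abstract can be illustrated with a small sketch of its three generic operations: backward warping of a source view by a per-pixel disparity map (a common stand-in for depth-based warping), per-pixel softmax attention that fuses several candidate views, and addition of a residual map. This is not the authors' implementation. The function names, the single-channel list-of-lists image representation, the disparity sign convention (`du`, `dv` taken as source position minus novel-view position), border clamping, and the use of a softmax over attention logits are all hypothetical choices made for illustration. In the actual method, the disparity, attention maps, and residual are predicted by the learned sub-networks, which are not modeled here.

```python
import math
from typing import List

# Hypothetical representation: one view as an H x W grid of single-channel intensities.
Image = List[List[float]]


def bilinear_sample(img: Image, x: float, y: float) -> float:
    """Sample img at continuous coordinates (x, y), clamping to the image border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bottom


def backward_warp(src: Image, disparity: Image, du: float, dv: float) -> Image:
    """Warp a source view to a novel viewpoint offset by (du, dv) angular steps.

    Assumed convention: pixel (x, y) of the novel view, with disparity d, is
    fetched from (x + d * du, y + d * dv) in the source view.
    """
    h, w = len(src), len(src[0])
    return [
        [bilinear_sample(src, x + disparity[y][x] * du, y + disparity[y][x] * dv)
         for x in range(w)]
        for y in range(h)
    ]


def attention_fuse(candidates: List[Image], logits: List[Image]) -> Image:
    """Fuse K candidate views with a per-pixel softmax over K attention logit maps."""
    h, w = len(candidates[0]), len(candidates[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            scores = [lg[y][x] for lg in logits]
            m = max(scores)  # subtract the max for numerical stability
            exps = [math.exp(s - m) for s in scores]
            total = sum(exps)
            fused[y][x] = sum(e / total * c[y][x] for e, c in zip(exps, candidates))
    return fused


def refine(fused: Image, residual: Image) -> Image:
    """Add a residual map to the fused view, element by element."""
    return [[f + r for f, r in zip(frow, rrow)] for frow, rrow in zip(fused, residual)]


if __name__ == "__main__":
    # Toy 1 x 4 views (hypothetical data for illustration only).
    src = [[0.0, 1.0, 2.0, 3.0]]
    disp = [[1.0, 1.0, 1.0, 1.0]]
    warped = backward_warp(src, disp, du=1.0, dv=0.0)  # stands in for a warping-branch output
    direct = [[0.5, 1.5, 2.5, 3.5]]                    # stands in for a direct-synthesis output
    logits = [[[0.0] * 4], [[0.0] * 4]]                # equal logits give a plain average
    fused = attention_fuse([direct, warped], logits)
    print(refine(fused, [[0.1] * 4]))
```

With equal logits the fusion reduces to averaging; as one logit map dominates, the fused value approaches that candidate, which is the behavior a learned attention map can exploit to favor the warped views in textured regions and the directly synthesized views near occlusion boundaries.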

Funding

This study was supported in part by the National Key Research and Development Program of China (grant number 2016YFB0700802) and in part by the Innovative Youth Fund Program of the State Oceanic Administration of China (grant number 2015001).

Ethics declarations

Conflict of interest

The authors have no conflict of interest.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liu, X., Wang, M., Wang, A. et al. Light field reconstruction via attention maps of hybrid networks. Vis Comput 39, 5027–5040 (2023). https://doi.org/10.1007/s00371-022-02644-6
