Abstract

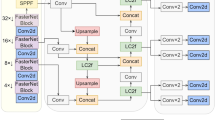

Underwater image enhancement (UIE) is an image processing technique that plays a vital role in computer vision. However, existing approaches treat the restoration process as a whole and therefore cannot adequately handle color distortion and low contrast in the enhanced images. To alleviate these issues, we propose a global–local-guided model that performs UIE in a coarse-to-fine manner. The model consists of two paths: the global path estimates the basic structure and color information, while the local path removes undesirable artifacts such as noise, over-exposed regions, and blurred edges. By integrating these two neural networks into one model, we can recover underwater images with clear textural details and vivid color. In addition, a learning-based weight map is introduced to reconcile the global and local paths; it balances the pixel intensity distributions from both sides and removes redundant information to a certain degree. Qualitative and quantitative experiments on various benchmarks demonstrate that our method effectively tackles color distortion and blurred edges, outperforming several state-of-the-art methods by a large margin. Finally, we conduct experiments showing that our method can be applied to various computer vision tasks, e.g., object detection, matching, and edge detection.
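The abstract does not state how the learned weight map combines the two paths, so the following is only a minimal Python sketch under an explicit assumption: the weight map W holds one value in (0, 1) per pixel, obtained by passing a network's output logits through a sigmoid, and the final image is the convex blend W * G + (1 - W) * L of the global-path output G and the local-path output L. The names `fuse_global_local` and `sigmoid` are hypothetical, and the logit array stands in for whatever the paper's network actually predicts.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    """Logistic function: maps arbitrary logits into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def fuse_global_local(global_out: np.ndarray,
                      local_out: np.ndarray,
                      weight_logits: np.ndarray) -> np.ndarray:
    """Blend global-path and local-path outputs with a per-pixel weight map.

    Hypothetical formulation (an assumption, not taken from the paper):
    a network head predicts `weight_logits`, the weight map is
    W = sigmoid(weight_logits), and the fused image is
    W * global_out + (1 - W) * local_out.

    Images are float arrays of shape (H, W, C) with values in [0, 1];
    `weight_logits` has shape (H, W, 1) so that one weight is shared by
    all color channels of a pixel (or (H, W, C) for per-channel weights).
    """
    if global_out.shape != local_out.shape:
        raise ValueError("global and local outputs must have the same shape")
    w = sigmoid(weight_logits)
    # Convex per-pixel blend: W near 1 trusts the global (structure/color)
    # estimate, W near 0 trusts the local (artifact-removal) estimate.
    fused = w * global_out + (1.0 - w) * local_out
    # Guard against small numerical excursions outside the valid range.
    return np.clip(fused, 0.0, 1.0)


if __name__ == "__main__":
    g = np.full((2, 2, 3), 0.8)      # stand-in global-path output
    l = np.full((2, 2, 3), 0.2)      # stand-in local-path output
    logits = np.zeros((2, 2, 1))     # sigmoid(0) = 0.5, an even blend
    print(fuse_global_local(g, l, logits)[0, 0])  # every channel is 0.5
```

A convex blend keeps every fused pixel between the two path outputs, which is one simple way a weight map could "balance the pixel intensity distributions from both sides": where W approaches 1 the result follows the global structure and color estimate, and where it approaches 0 it follows the local, artifact-removing path.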

Funding

This work was supported by the National Natural Science Foundation of China (Grant Nos. 61922019, 62027826, 61722105, 61672125).

Ethics declarations

Conflict of interest

We declare that we have no financial or personal relationships with other people or organizations that could inappropriately influence our work, and that we have no professional or other personal interest of any nature or kind in any product, service, and/or company that could be construed as influencing the position presented in, or the review of, the manuscript entitled “Global Structure-Guided Learning Framework for Underwater Image Enhancement.”

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Lin, R., Liu, J., Liu, R. et al. Global structure-guided learning framework for underwater image enhancement. Vis Comput 38, 4419–4434 (2022). https://doi.org/10.1007/s00371-021-02305-0

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00371-021-02305-0