Abstract

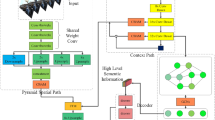

In semantic segmentation, self-attention modules are widely used to capture long-range contextual information, and they are often effective. Their main drawback is a heavy consumption of computing resources. Reducing that consumption while preserving segmentation performance is therefore a meaningful research topic. In this paper, we propose a Sparse Attention Module combined with a powerful multi-task feature extraction network for semantic segmentation. Unlike the classic self-attention model, our Sparse Attention Module does not compute the inner product between every pair of vectors. Instead, we first sparsify the Query and Key feature blocks defined in the self-attention module using a credit matrix generated from the pre-output, and then perform similarity modeling on the two sparse feature blocks. To ensure that the vectors in Query can still capture dense contextual information, we design a Class Attention Module and embed it into the Sparse Attention Module. Compared with the Dual Attention Network for scene segmentation, our attention module greatly reduces the consumption of computing resources while maintaining accuracy. Furthermore, downsampling during feature extraction causes a serious loss of detailed information and degrades the segmentation performance of the network, so we adopt a multi-task feature extraction network. It learns semantic features and edge features in parallel, and we feed the learned edge features into the deep layers of the network to help restore detailed information and capture high-quality semantic features. Rather than using pure concatenation, we extract the edge features related to each channel by element-wise multiplication before concatenation. Finally, we conduct experiments on three datasets, Cityscapes, PASCAL VOC2012 and ADE20K, and obtain competitive results.
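
The sparsification idea described in the abstract can be illustrated with a minimal sketch. The abstract does not specify how the credit matrix is computed or how positions are selected, so everything below is an assumption made for illustration: the credit of a position is taken to be the maximum softmax probability of the pre-output at that position, the `k` positions with the highest credit are kept for both Query and Key, and unselected positions simply pass their Value through unchanged. The function names (`credit_scores`, `top_k_indices`, `sparse_attention`) are hypothetical and are not taken from the paper, and the Class Attention Module is not modeled here.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def credit_scores(pre_output):
    """ASSUMPTION: credit of a position = max class probability of the pre-output there."""
    return [max(softmax(logits)) for logits in pre_output]


def top_k_indices(scores, k):
    """Indices of the k largest scores, highest score first."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sparse_attention(query, key, value, credit, k):
    """Attention restricted to the k highest-credit positions.

    query, key, value: lists of N feature vectors (one per spatial position).
    Returns the output features and the number of Query-Key inner products used.
    ASSUMPTION: unselected positions keep their original value vector.
    """
    keep = sorted(top_k_indices(credit, k))
    k_sparse = [key[i] for i in keep]
    v_sparse = [value[i] for i in keep]
    channels = len(value[0])

    out = [list(v) for v in value]  # pass-through for unselected positions
    for i in keep:
        # Similarity is computed only between the sparse Query and sparse Key.
        weights = softmax([dot(query[i], kj) for kj in k_sparse])
        out[i] = [sum(w * v[c] for w, v in zip(weights, v_sparse))
                  for c in range(channels)]
    return out, len(keep) * len(keep)


if __name__ == "__main__":
    # Four positions, two classes in the pre-output, two feature channels.
    pre_output = [[4.0, 0.0], [0.1, 0.0], [0.0, 3.0], [0.2, 0.0]]
    feats = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
    credit = credit_scores(pre_output)
    out, n_products = sparse_attention(feats, feats, feats, credit, k=2)
    print("inner products:", n_products, "instead of", len(feats) ** 2)
```

Under these assumptions, keeping `k` of `N` positions reduces the number of Query-Key inner products from `N * N` to `k * k`, which is the source of the resource savings the abstract refers to. The selection rule actually used in the paper may differ.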

Acknowledgements

This work is partially supported by the Fundamental Research Funds for the Central Universities (JUSRP41908), National Natural Science Foundation of China (61201429, 61362030), China Postdoctoral Science Foundation (2015M581720, 2016M600360) and Jiangsu Postdoctoral Science Foundation (1601216C).

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Jiang, M., Zhai, F. & Kong, J. Sparse Attention Module for optimizing semantic segmentation performance combined with a multi-task feature extraction network. Vis Comput 38, 2473–2488 (2022). https://doi.org/10.1007/s00371-021-02124-3

DOI: https://doi.org/10.1007/s00371-021-02124-3