Abstract

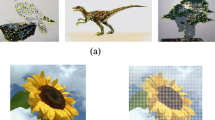

We propose a higher-level visual representation, the visual synset, for object-based image retrieval that goes beyond visual appearance. It improves on the traditional part-based bag-of-words image representation in two ways. First, it strengthens the discriminative power of visual words by constructing an intermediate descriptor, the visual phrase, from frequently co-occurring sets of visual words. Second, to bridge differences in visual appearance and achieve better intra-class invariance, it clusters visual words and phrases into visual synsets based on their class probability distributions. The rationale is that the class distribution of a visual word or phrase tends to peak around the object classes to which it belongs. Experiments on the Caltech-256 data set show that the visual synset can partially bridge the visual differences among images of the same class and deliver satisfactory retrieval of relevant images with different visual appearances.
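The two steps described in the abstract can be illustrated with a toy sketch. It is not the authors' implementation: the abstract names neither the mining algorithm nor the clustering criterion, so this example assumes the simplest stand-ins, namely co-occurring word pairs as candidate visual phrases and a greedy threshold on Jensen-Shannon divergence for grouping units into synsets. All function names and the toy data are hypothetical.

```python
from collections import Counter
from itertools import combinations
from math import log2


def frequent_pairs(images, min_support):
    """Candidate visual phrases: word pairs co-occurring in >= min_support images.

    Assumption: pairs only; the source does not say how word-sets are mined.
    """
    counts = Counter()
    for words in images:
        for pair in combinations(sorted(set(words)), 2):
            counts[pair] += 1
    return {pair for pair, n in counts.items() if n >= min_support}


def class_distribution(images, labels, classes):
    """Estimate P(class | unit) for each unit from labeled training images."""
    counts = {}
    for units, label in zip(images, labels):
        for unit in set(units):
            counts.setdefault(unit, Counter())[label] += 1
    return {
        unit: [c[k] / sum(c.values()) for k in classes]
        for unit, c in counts.items()
    }


def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2) between two discrete distributions."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(x, y):
        return sum(a * log2(a / b) for a, b in zip(x, y) if a > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def group_into_synsets(dists, threshold):
    """Greedy grouping: a unit joins the first synset whose seed distribution
    lies within `threshold`; otherwise it starts a new synset.

    Assumption: a stand-in for whatever clustering the paper actually uses.
    """
    synsets = []  # list of (seed_distribution, members)
    for unit in sorted(dists, key=str):
        for seed, members in synsets:
            if js_divergence(dists[unit], seed) <= threshold:
                members.append(unit)
                break
        else:
            synsets.append((dists[unit], [unit]))
    return [members for _, members in synsets]


# Toy data: each image is a bag of visual-word ids with a class label.
images = [["a", "b"], ["a", "b", "c"], ["d", "e"], ["d", "e", "c"]]
labels = ["car", "car", "dog", "dog"]
classes = ["car", "dog"]

phrases = frequent_pairs(images, min_support=2)
dists = class_distribution(images, labels, classes)
synsets = group_into_synsets(dists, threshold=0.1)
```

In this toy setting, words `a` and `b` occur only in "car" images and so share a class distribution, which places them in one synset even though nothing about their appearance was compared; word `c` occurs equally in both classes and stays on its own. Mined phrases could be added to each image's unit list before estimating the distributions, so that words and phrases are grouped together.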




Cite this article

Zheng, YT., Neo, SY., Chua, TS. et al. Toward a higher-level visual representation for object-based image retrieval. TVC 25, 13–23 (2009). https://doi.org/10.1007/s00371-008-0294-0


DOI: https://doi.org/10.1007/s00371-008-0294-0