Abstract

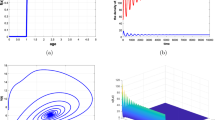

Ecological trade-offs between species are often invoked to explain species coexistence in ecological communities. However, few mathematical models have been proposed for which coexistence conditions can be characterized explicitly in terms of a trade-off. Here we present a model of a plant community which allows such a characterization. In the model plant species compete for sites where each site has a fixed stress condition. Species differ both in stress tolerance and competitive ability. Stress tolerance is quantified as the fraction of sites with stress conditions low enough to allow establishment. Competitive ability is quantified as the propensity to win the competition for empty sites. We derive the deterministic, discrete-time dynamical system for the species abundances. We prove the conditions under which plant species can coexist in a stable equilibrium. We show that the coexistence conditions can be characterized graphically, clearly illustrating the trade-off between stress tolerance and competitive ability. We compare our model with a recently proposed, continuous-time dynamical system for a tolerance-fecundity trade-off in plant communities, and we show that this model is a special case of the continuous-time version of our model.

References

Cadotte MW, Mai DV, Jantz S, Collins MD, Keele M, Drake JA (2006) On testing the competition-colonization trade-off in a multispecies assemblage. Am Nat 168:704–709

Chase JM, Leibold MA (2003) Ecological Niches: linking classical and contemporary approaches. University of Chicago Press, Chicago

D’Andrea R, Barabás G, Ostling A (2013) Revising the tolerance-fecundity trade-off; or, on the consequences of discontinuous resource use for limiting similarity, species diversity, and trait dispersion. Am Nat 181:E91–E101

Ellenberg H (1979) Zeigerwerte der Gefässpflanzen Mitteleuropas. Scripta Geobotanica 9:1–122

Greiner La Peyre MK, Grace JB, Hahn E, Mendelssohn IA (2001) The importance of competition in regulating plant species abundance along a salinity gradient. Ecology 82:62–69

Holt RD, Grover J, Tilman D (1994) Simple rules for interspecific dominance in systems with exploitative and apparent competition. Am Nat 144:741–777

Hutchinson GE (1957) Concluding remarks. Population Studies: Animal Ecology and Demography. Cold Spring Harbor Symposium on Quantitative Biology 22:415–457

Jung V, Mony C, Hoffmann L, Muller S (2009) Impact of competition on plant performances along a flooding gradient: a multi-species experiment. J Veg Sci 20:433–441

Kneitel JM, Chase JM (2004) Trade-offs in community ecology: linking spatial scales and species coexistence. Ecol Lett 7:69–80

MacArthur RH (1972) Geographical ecology. Princeton University Press, Princeton

McPeek MA (1996) Trade-offs, food web structure, and the coexistence of habitat specialists and generalists. Am Nat 148:S124–S138

Muller-Landau HC (2010) The tolerance-fecundity trade-off and the maintenance of diversity in seed size. Proc Natl Acad Sci USA 107:4242–4247

Rees M (1993) Trade-offs among dispersal strategies in British plants. Nature 366:150–152

Tilman D (1982) Resource Competition and Community Structure. Princeton University Press, Princeton

Tilman D, Pacala S (1993) The maintenance of species richness in plant communities. In: Ricklefs RE, Schluter D (eds) Species Diversity in Ecological Communities. University of Chicago Press, Chicago, pp 13–25

Uriarte M, Canham CD, Root RB (2002) A model of simultaneous evolution of competitive ability and herbivore resistance in a perennial plant. Ecology 83:2649–2663

Veliz-Cuba A, Jarrah AS, Laubenbacher R (2010) Polynomial algebra of discrete models in systems biology. Bioinformatics 26:1637–1643

Viola DV, Mordecai EA, Jaramillo AG, Sistla SA, Albertson LK, Gosnell JS, Cardinale BJ, Levine JM (2010) Competition-defense tradeoffs and the maintenance of plant diversity. Proc Natl Acad Sci USA 107:17217–17222

Weiner J, Xiao S (2012) Variation in the degree of specialization can maintain local diversity in model communities. Theor Ecol 5:161–166

Zelený D, Schaffers AP (2012) Too good to be true: pitfalls of using mean Ellenberg indicator values in vegetation analyses. J Veg Sci 23:419–431

Acknowledgments

We thank S. Schreiber and two anonymous reviewers for constructive comments. BH thanks L. Gironne for a helpful suggestion. Financial support was provided for BH by the TULIP Laboratory of Excellence (ANR-10-LABX-41), for RSE by a VIDI grant from the Netherlands Scientific Organization (NWO) and for BH and RSE by an exchange grant from the Van Gogh programme.

Appendices

Appendix 1: Recruitment probability

Here we derive the probability that species \(i\) in competition with \(n\) other species recruits a vacant site. The derivation, which is based on standard properties of Poisson random variables, is not new, but seems to be unfamiliar to many ecologists.

We consider a vacant site. The number of propagules (e.g., seeds) of species \(i\) reaching the vacant site, denoted by \(s_i\), is modelled by a Poisson random variable with parameter \(f_i p_i\). That is, the probability of dispersing \(s_i\) seeds to the vacant site is

\[ \frac{(f_i p_i)^{s_i}}{s_i!}\, \mathrm{e}^{-f_i p_i}. \]

Species \(i\) competes with \(n\) other species. We label these species by \(j_1,j_2,\ldots ,j_n\). Conditional on the number of propagules \(s_i, s_{j_1}, \ldots , s_{j_n}\), the probability that species \(i\) wins the competition for the vacant site is

We average this expression with respect to the Poisson distributions for \(s_i\) and \(s_{j_k}\),

where we have used, in the second and in the last equality, that the sum of independent Poisson random variables is again a Poisson random variable. Because

where the last line is the definition of the function \(L\), we have

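The probability that no species occupies the vacant site, that is, the probability that no propagule of any species reaches the site and the vacant site remains empty, is

\[ \mathrm{e}^{-\left( f_i p_i + \sum_{k=1}^{n} f_{j_k} p_{j_k} \right)}. \]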
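The averaging step can be checked numerically. The sketch below assumes (this is an assumption of the example, suggested by the form of \(L\) but not restated in this appendix) that the winner is drawn in proportion to seed numbers, so that the conditional winning probability is \(s_i/(s_i + s_{j_1} + \cdots + s_{j_n})\). The competitors are pooled into a single Poisson variable, and a truncated double sum is compared with the closed form \(f_i p_i\, L\big (f_i p_i + \sum_k f_{j_k} p_{j_k}\big )\), where \(L(x) = (1-\mathrm{e}^{-x})/x\). The function names and the numerical rates are illustrative only.

```python
import math


def L(x):
    """L(x) = (1 - exp(-x)) / x, extended by continuity with L(0) = 1."""
    return 1.0 if x == 0 else -math.expm1(-x) / x


def poisson_pmf(k, lam):
    """Probability that a Poisson variable with parameter lam equals k."""
    return math.exp(-lam) * lam**k / math.factorial(k)


def recruit_closed_form(lam_i, lam_others):
    """Closed form lam_i * L(total rate) for the recruitment probability."""
    return lam_i * L(lam_i + sum(lam_others))


def recruit_by_summation(lam_i, lam_others, kmax=60):
    """Average s / (s + r) over the Poisson laws of s (focal species)
    and r (all competitors pooled), truncating both sums at kmax."""
    lam_rest = sum(lam_others)  # pooled competitors are again Poisson
    total = 0.0
    for s in range(1, kmax):  # s = 0 contributes nothing
        for r in range(kmax):
            weight = poisson_pmf(s, lam_i) * poisson_pmf(r, lam_rest)
            total += weight * s / (s + r)
    return total


# Hypothetical rates f_i * p_i for a focal species and two competitors.
print(recruit_closed_form(0.8, [1.0, 0.5]))
print(recruit_by_summation(0.8, [1.0, 0.5]))
```

Under the same assumption, the recruitment probabilities of all competing species and the probability \(\mathrm{e}^{-\sum f p}\) of an empty site add up to one.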

Appendix 2: Proof of Result 1

We consider a subset \(\mathcal{C }\) of the set of all species \(\mathcal{S }\), and study whether there exists an equilibrium of dynamical system (6) in which the species in \(\mathcal{C }\) are present and the species in \(\mathcal{S }{\setminus }\mathcal{C }\) are absent. We write the dynamical system as

with

and

An equilibrium \(\widetilde{\varvec{p}}\) of (23) has to satisfy

Equation (24) is trivially satisfied for \(i \in \mathcal{S }{\setminus }\mathcal{C }\), that is, for species that are absent at equilibrium (\(\widetilde{p}_i = 0\)). For species that are present at equilibrium (\(\widetilde{p}_i > 0\)), Eq. (24) simplifies to

1.1 Preliminary computation

We derive an expression for \(F_i(\widetilde{\varvec{p}})\). As we will need a more general result below, we consider the quantity \(G_i\) which is equal to \(F_i(\widetilde{\varvec{p}})\) in which the function \(L\) is replaced by an arbitrary function \(K\),

For a species \(i\) with \(i < i_C\), we determine the index \(k\) such that \(i_{k} \le i < i_{k+1}\) with \(k \in \{1,2,\ldots ,C-1\}\) and set \(k=0\) if \(i < i_1\). We prove that

For \(i < i_C\) we determine the index \(k\) as above and we compute

A similar computation holds for \(i \ge i_C\), proving (27).

1.2 Equilibrium conditions

Putting \(K=L\) and \(i=i_k\) in (27) we get

Hence, the equilibrium conditions (25) read

Note that the parameters \(h_i\) and \(f_i\) with \(i \in \mathcal{S }{\setminus }\mathcal{C }\) have been eliminated from (29).

Rearranging (29) we get

We have to invert the function \(L\) to solve for the occupancies \(\widetilde{p}_i\). Because \(L\) maps the positive real line \((0,\infty )\) to the real interval \((0,1)\), the inversion is possible if and only if

where we have used Assumption 1 to discard parameter combinations for which one or more of these inequalities is replaced by an equality.

After inverting the function \(L\), the solutions for the occupancies \(\widetilde{p}_i\) should be positive. Because \(L\) is decreasing, this condition is satisfied if and only if

where we have used Assumption 1 to discard cases in which equality rather than inequality holds.

Combining (31) and (32) we get the following conditions:

which proves the first statement of Result 1.

If these conditions are satisfied, the equilibrium occupancies \(\widetilde{p}_i\) are given by

with \(L^{-1}\) the inverse function of \(L\). Rewriting Eqs. (34) we get

Recall the definition \(L(x) = \frac{1-\mathrm{e}^{-x}}{x}\). The function \(\Lambda \) is defined as the inverse function of \(x \mapsto \frac{x}{1-\mathrm{e}^{-x}}\). We have

Hence, \(L^{-1}(x) = \Lambda \big (\frac{1}{x}\big )\). Using this identity in (35), we obtain the second statement of Result 1.

The function \(\Lambda \) can be expressed in terms of the Lambert \(W\) function. We have

with \(W_0\) the upper branch of the Lambert \(W\) function. Hence,

Similarly, for the function \(L^{-1}\),

so that

Note that Eqs. (36) and (37) satisfy \(L^{-1}(x) = \Lambda \big (\frac{1}{x}\big )\).
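The identity \(L^{-1}(x) = \Lambda \big (\frac{1}{x}\big )\) can be checked numerically. The sketch below uses a closed form that is derived here from the definitions rather than copied from Eqs. (36) and (37), so it should be compared with them: writing \(y = x/(1-\mathrm{e}^{-x})\) and \(w = x - y\) gives \(w\,\mathrm{e}^{w} = -y\,\mathrm{e}^{-y}\), hence \(\Lambda (y) = y + W_0(-y\,\mathrm{e}^{-y})\) for \(y > 1\). The branch \(W_0\) is evaluated by a plain Newton iteration; all function names are illustrative.

```python
import math


def L(x):
    """L(x) = (1 - exp(-x)) / x, with L(0) = 1 by continuity."""
    return 1.0 if x == 0 else -math.expm1(-x) / x


def lambert_w0(z, tol=1e-14):
    """Upper branch W_0 for -1/e < z <= 0, by Newton iteration on w*exp(w) = z.

    Starting from w = 0 the iterates decrease monotonically to the root,
    because w*exp(w) is increasing and convex on (-1, 0].
    """
    if not (-1.0 / math.e < z <= 0.0):
        raise ValueError("this sketch only handles -1/e < z <= 0")
    w = 0.0
    for _ in range(200):
        ew = math.exp(w)
        step = (w * ew - z) / (ew * (w + 1.0))
        w -= step
        if abs(step) < tol:
            break
    return w


def Lambda(y):
    """Inverse of x -> x / (1 - exp(-x)), for y > 1."""
    return y + lambert_w0(-y * math.exp(-y))


def L_inverse(x):
    """Inverse of L on (0, 1), using L^{-1}(x) = Lambda(1/x)."""
    return Lambda(1.0 / x)


for x in (0.2, 0.5, 0.8):
    print(x, L_inverse(x), L(L_inverse(x)))
```

Each printed row should show the last column reproducing the first up to rounding error.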

Because we have derived an explicit expression for the equilibrium \(\widetilde{\varvec{p}}\), we have also established the third statement of Result 1.

Appendix 3: Proof of Result 2

We consider an equilibrium \(\widetilde{\varvec{p}}\) of dynamical system (6) and study its local stability. We denote by \(\mathcal{C }\) the subset of the set of all species \(\mathcal{S }\) that are present in the equilibrium \(\widetilde{\varvec{p}}\).

The equilibrium \(\widetilde{\varvec{p}}\) is locally asymptotically stable if and only if the eigenvalues of the Jacobian matrix evaluated at \(\widetilde{\varvec{p}}\) lie inside the unit circle of the complex plane. Using the notation of (23), the Jacobian matrix \(J\) evaluated at \(\widetilde{\varvec{p}}\) is

Consider a species \(j\) that is absent at equilibrium, \(j \in \mathcal{S }{\setminus }\mathcal{C }\) and \(\widetilde{p}_j = 0\). Row \(j\) of the second term of (38) is zero, so that row \(j\) of the Jacobian matrix \(J\) is zero except for the diagonal element \(f_j F_j(\widetilde{\varvec{p}})\). Hence, by applying the permutation \(P\) that shifts the indices in \(\mathcal{C }\) in front of the indices in \(\mathcal{S }{\setminus }\mathcal{C }\), we get a block matrix

with \(J_\mathcal{C }\) a \(C \times C\) matrix, \(K\) a \(C \times (S\!-\!C)\) matrix, and \(J_{\mathcal{S }{\setminus }\mathcal{C }}\) a diagonal \((S\!-\!C) \times (S\!-\!C)\) matrix. It follows that the eigenvalues of \(J\) are equal to the union of the eigenvalues of \(J_\mathcal{C }\) and the eigenvalues of \(J_{\mathcal{S }{\setminus }\mathcal{C }}\).

1.1 Eigenvalues of \(J_{\mathcal{S }{\setminus }\mathcal{C }}\)

Because the matrix \(J_{\mathcal{S }{\setminus }\mathcal{C }}\) is diagonal, its eigenvalues are given by its diagonal elements. The diagonal elements are equal to \(f_j F_j(\widetilde{\varvec{p}})\) with \(j \in \mathcal{S }{\setminus }\mathcal{C }\), implying that the eigenvalues of \(J_{\mathcal{S }{\setminus }\mathcal{C }}\) are real and positive. To obtain the stability conditions, we look for conditions equivalent with \(f_j F_j(\widetilde{\varvec{p}}) < 1\) for all \(j \in \mathcal{S }{\setminus }\mathcal{C }\), that is, for all \(j \in \mathcal{S }\) excluding \(i_1,i_2,\ldots ,i_C\).

First, we consider a species \(j\) for which \(i_k < j < i_{k+1}\) with \(k = 1,2,\ldots ,C-1\). Expression (27) with \(K=L\) and \(i=j\) gives

We use Eq. (30a) for the first term of the right-hand side and Eq. (29a) for the second and third term of the right-hand side. This leads to

so that the condition \(f_j F_j(\widetilde{\varvec{p}}) < 1\) is equivalent with

Using that \(h_{i_k} > h_j > h_{i_{k+1}}\) (because \(i_k < j < i_{k+1}\)), we see that the latter condition is equivalent with

Second, we consider a species \(j\) for which \(j < i_1\). Expression (27) with \(K=L\), \(i=j\) and \(k=0\) gives

We use \(L(0)=1\) for the first term and Eq. (29a) for the second and third term of the right-hand side. This leads to

so that the condition \(f_j F_j(\widetilde{\varvec{p}}) < 1\) is equivalent with

Using that \(h_j > h_{i_1}\) (because \(j < i_1\)), we see that the latter condition is equivalent with

Third, we consider a species \(j\) for which \(i_C < j\). Expression (27) with \(K=L\), \(i=j\) and \(k=C\) gives

where we have used Eq. (29b). Hence, the condition \(f_j F_j(\widetilde{\varvec{p}}) < 1\) is equivalent with

Using that \(h_{i_C} > h_j\) (because \(i_C < j\)), we see that the latter condition is equivalent with

The set of conditions (40) for all \(j \in \mathcal{S }{\setminus }\mathcal{C }\) is equivalent with conditions (16). Note that Assumption 1 allows us to discard parameter combinations for which one or more of these inequalities is replaced by an equality. Hence, when one of the conditions (16) is violated, the matrix \(J_{\mathcal{S }{\setminus }\mathcal{C }}\) has an eigenvalue \(\lambda >1\) and the equilibrium \(\widetilde{\varvec{p}}\) is unstable. As a result, we have proved that conditions (16) are necessary for local stability. To prove that conditions (16) are also sufficient for local stability, it suffices to prove that the eigenvalues of \(J_\mathcal{C }\) lie inside the unit circle.

1.2 Eigenvalues of \(J_\mathcal{C }\)

The matrix \(J_\mathcal{C }\) is

with \(1\!\!1\) the identity matrix.

We compute the partial derivatives,

Using (27) with \(K=L^{\prime }\) and \(i = \max (i_k,i_m) = i_{\max (k,m)}\), we get

Note that the parameters \(h_i\) and \(f_i\) with \(i \in \mathcal{S }{\setminus }\mathcal{C }\) do not appear in these partial derivatives.

We can write the matrix \(J_\mathcal{C }\) as

with \(G\) a diagonal matrix,

and \(H\) a symmetric matrix,

where we have used that \(L^{\prime }(x) = -|L^{\prime }(x)|\) because \(L\) is decreasing. The vectors \(\varvec{v}_k\) are

where the vectors \(\varvec{e}_j\) are the standard basis vectors in \(\mathbb{R}^C\).

First we establish that all eigenvalues of the matrix \(G H\) are real and positive. Then we show that all eigenvalues of the matrix \(G H\) lie inside the unit circle. As a result, all eigenvalues of \(J_\mathcal{C }= 1\!\!1- GH\) belong to the real interval \([0,1)\).

To show that all eigenvalues of the matrix \(G H\) are real and positive, we first consider the matrix \(H\),

a linear combination of symmetric rank-one matrices \(\varvec{v}_k \varvec{v}_k^\top \), with vectors \(\varvec{v}_k\) given by (43) and coefficients \(a_k\) given by (42). Because the coefficients \(a_k\) are positive, the matrix \(H\) is a sum of positive-semidefinite matrices, and therefore positive-semidefinite.

In fact, the matrix \(H\) is positive-definite. To establish this, we have to prove that \(H\) is not singular. Assume that \(H\) is singular. Then there exists a vector \(\varvec{x} \ne \varvec{0}\) for which \(H\varvec{x} = \varvec{0}\). Then also \(\varvec{x}^\top H\varvec{x} = 0\) and therefore,

Because \(a_k > 0\), this implies that

Because the set of vectors \(\varvec{v}_k\) spans \(\mathbb{R}^C\),

This implies that \(\varvec{x} = \varvec{0}\), but this contradicts our assumption. We conclude that \(H\) is not singular, and thus positive-definite.

From (41) we construct the square root \(\sqrt{G}\),

Multiplying the matrix \(G H\) on the left by \(\sqrt{G}^{-1}\) and on the right by \(\sqrt{G}\), we get

Because the matrix \(H\) is positive-definite, the matrix \(\sqrt{G} \,H \sqrt{G}\) is also positive-definite, and therefore has positive real eigenvalues. Equation (44) expresses that the matrices \(G H\) and \(\sqrt{G} \,H \sqrt{G}\) are similar, and therefore have the same eigenvalues. As a result, all eigenvalues of the matrix \(G H\) are positive and real.
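The similarity argument can be illustrated on a small example. The sketch below uses arbitrary illustrative values for a positive diagonal \(G\) and a symmetric positive-definite \(H\) (these are not the matrices of (41) and (42)) and checks that the non-symmetric product \(G H\) has the same real, positive eigenvalues as the symmetric matrix \(\sqrt{G}\,H\sqrt{G}\).

```python
import math


def eigenvalues_2x2(m):
    """Eigenvalues of a real 2x2 matrix with real spectrum, in increasing order."""
    (a, b), (c, d) = m
    trace, det = a + d, a * d - b * c
    disc = trace * trace - 4.0 * det
    if disc < 0:
        raise ValueError("eigenvalues are complex")
    root = math.sqrt(disc)
    return ((trace - root) / 2.0, (trace + root) / 2.0)


# Illustrative values only: diagonal of G, and a positive-definite H.
g = (2.0, 0.5)
H = ((2.0, 1.0), (1.0, 3.0))

# G H scales row i of H by g[i]; the result is not symmetric.
GH = tuple(tuple(g[i] * H[i][j] for j in range(2)) for i in range(2))

# sqrt(G) H sqrt(G) scales entry (i, j) by sqrt(g[i] * g[j]); it is symmetric.
sym = tuple(
    tuple(math.sqrt(g[i]) * H[i][j] * math.sqrt(g[j]) for j in range(2))
    for i in range(2)
)

print(eigenvalues_2x2(GH))
print(eigenvalues_2x2(sym))
```

Both calls should print the same pair of positive numbers up to rounding error, as expected for similar matrices.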

To show that all eigenvalues of the matrix \(G H\) are inside the unit circle, we first note that the matrix \(H\) has positive components. We construct an auxiliary matrix \(H_+\) with components larger than \(H\),

Comparing (42) and (45) and using the inequality,

we see that

Because the matrix \(G\) is diagonal with positive components, both \(G H\) and \(G H_+\) are matrices with positive components, and

Hence, the spectral radius of \(G H\) is smaller than or equal to the spectral radius of \(G H_+\).

We show that the vector \(\varvec{w}\) with positive components,

is an eigenvector with eigenvalue one of the matrix \(G H_+\):

where we have used (27) with \(K=L\) and \(i=i_\ell \) in the fourth equality and (24) in the fifth equality. The matrix \(G H_+\) has positive components, so we can apply Perron-Frobenius theory. Because the vector \(\varvec{w}\) has positive components and is an eigenvector of \(G H_+\), we know that the corresponding eigenvalue equals the spectral radius of \(G H_+\). Hence, the spectral radius of \(G H_+\) is equal to one, and the spectral radius of \(G H\) is smaller than or equal to one. We have shown before that the eigenvalues of \(G H\) are real and positive, so they belong to the real interval \((0,1]\). We conclude that the eigenvalues of \(J_\mathcal{C }= 1\!\!1- G H\) belong to the real interval \([0,1)\). This finishes the proof that conditions (16) are necessary and sufficient conditions for local stability of the equilibrium \(\widetilde{\varvec{p}}\).

Appendix 4: Proof of Result 3

Consider a set \(\mathcal{S }\) of species with traits \((g_i,h_i)\) satisfying Assumption 1. We prove that there is one and only one subset \(\mathcal{C }\subset \mathcal{S }\) for which inequalities (12) and (16) are simultaneously satisfied.

In the main text we have given a graphical representation of the coexistence conditions (12) and the stability conditions (16). For a subset \(\mathcal{C }\subset \mathcal{S }\) we construct a broken line passing through the trait pairs \((g_i,h_i)\) of species \(i \in \mathcal{C }\). Conditions (12) impose that at each species in \(\mathcal{C }\) the slope of the broken line should decrease (note that the slope changes at a species in \(\mathcal{C }\) due to Assumption 1). Conditions (16) impose that each species in \(\mathcal{S }{\setminus } \mathcal{C }\) should lie to the right of and below the broken line (note that no species in \(\mathcal{S }{\setminus } \mathcal{C }\) lies on the broken line due to Assumption 1).
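The graphical conditions can be turned into a small checker. The sketch below is one possible reading of the description above, under stated assumptions: the broken line starts at the origin, passes through the species of the candidate set in order of increasing \(g\), and ends in a half-line of slope one; "slope decreases" is taken to include the final drop to slope one. Function names and trait values are hypothetical.

```python
def slopes_decrease(traits, chain):
    """Graphical reading of conditions (12): segment slopes strictly decrease
    along the chain and the last one exceeds the slope one of the half-line."""
    g_prev, h_prev = 0.0, 0.0
    slope_prev = float("inf")
    for i in chain:
        g, h = traits[i]
        if g <= g_prev:
            return False
        slope = (h - h_prev) / (g - g_prev)
        if not slope < slope_prev:
            return False
        slope_prev, g_prev, h_prev = slope, g, h
    return slope_prev > 1.0


def line_height(traits, chain, g):
    """Height of the broken line at abscissa g >= 0."""
    points = [(0.0, 0.0)] + [traits[i] for i in chain]
    for (g0, h0), (g1, h1) in zip(points, points[1:]):
        if g <= g1:
            return h0 + (h1 - h0) * (g - g0) / (g1 - g0)
    g_last, h_last = points[-1]
    return h_last + (g - g_last)  # final half-line with slope one


def others_below(traits, chain):
    """Graphical reading of conditions (16): excluded species lie below the line."""
    return all(
        h < line_height(traits, chain, g)
        for j, (g, h) in enumerate(traits)
        if j not in chain
    )


def satisfies_conditions(traits, chain):
    return slopes_decrease(traits, chain) and others_below(traits, chain)


# Hypothetical trait pairs (g_i, h_i) for five species.
traits = [(0.1, 0.4), (0.2, 0.45), (0.3, 0.9), (0.5, 0.95), (0.8, 0.6)]
print(satisfies_conditions(traits, [0, 2]))
print(satisfies_conditions(traits, [0]))
```

For these illustrative traits the candidate set `[0, 2]` passes both checks, whereas `[0]` fails because species 2 lies above the broken line.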

1.1 Existence

We present an explicit algorithm to construct a set \(\mathcal{C }\) satisfying conditions (12) and (16):

Species are added one by one to the set \(\mathcal{C }\) (lines 5 and 9). Which species \(i^*\) is added is determined by a maximization problem (lines 4 and 8) over a set of species \(\mathcal{A }\) (computed in lines 2, 6 and 10). Species are added in order of increasing \(g\) (and increasing \(h\)). Note that each maximization problem has a unique solution due to Assumption 1. The algorithm is illustrated in Fig. 5 for an example of \(S=5\) species.

The slope of the broken line decreases at each species \(i\) in the set \(\mathcal{C }\) constructed in the algorithm. Indeed, an increasing slope of the broken line at species \(i\) would contradict that species \(i\) was obtained by maximizing the slope. Hence, conditions (12) are satisfied. Similarly, each species \(j\) not belonging to the set \(\mathcal{C }\) lies to the right of and below the broken line corresponding to the set \(\mathcal{C }\). Indeed, if species \(j\) would lie to the left of and above the broken line, then there would be at least one maximization problem for which the line segment to species \(j\) has a larger slope than the maximal slope. From this contradiction we conclude that conditions (16) are satisfied for the set \(\mathcal{C }\) constructed in the algorithm.
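A greedy construction of this kind can be sketched in a few lines. This is not the article's algorithm listing, and its line numbers do not correspond to those cited above; it is a minimal reading of the description, assuming that the candidate set \(\mathcal{A }\) consists of the species to the right of the current end point whose connecting segment is steeper than slope one, and that the construction starts at the origin. Names and trait values are hypothetical.

```python
def coexistence_set(traits):
    """Greedy construction: repeatedly move to the candidate species that
    maximizes the slope of the segment from the current end point."""
    chain = []
    g_cur, h_cur = 0.0, 0.0  # the broken line starts at the origin
    while True:
        # Candidates lie strictly to the right and above the slope-one
        # half-line through the current end point.
        candidates = [
            ((h - h_cur) / (g - g_cur), i)
            for i, (g, h) in enumerate(traits)
            if g > g_cur and (h - h_cur) > (g - g_cur)
        ]
        if not candidates:
            return chain
        _, best = max(candidates)  # unique maximum for generic traits
        chain.append(best)
        g_cur, h_cur = traits[best]


# Hypothetical trait pairs (g_i, h_i) for five species.
traits = [(0.1, 0.4), (0.2, 0.45), (0.3, 0.9), (0.5, 0.95), (0.8, 0.6)]
print(coexistence_set(traits))  # [0, 2]
```

For these traits the first maximization selects species 0 (slope 4), the second selects species 2 (slope 2.5), and no remaining species is steeper than slope one, so the loop stops.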

1.2 Uniqueness

We show that there is only one set \(\mathcal{C }\) for which conditions (12) and (16) are satisfied.

Suppose that there are two sets \(\mathcal{C }_a\) and \(\mathcal{C }_b\) for which conditions (12) and (16) hold. Assume \(\mathcal{C }_a\) and \(\mathcal{C }_b\) have \(C_a\) and \(C_b\) elements, respectively,

We assume without loss of generality that \(C_a \le C_b\).

We consider the broken lines corresponding to \(\mathcal{C }_a\) and \(\mathcal{C }_b\). Both broken lines start at the origin \(0\). We compare the first species \(i_1\) and \(j_1\) of the broken lines. Suppose \(g_{i_1} > g_{j_1}\). Species \(i_1\) cannot lie above the broken line of \(\mathcal{C }_b\) (because (16) holds for \(\mathcal{C }_b\)). Hence, species \(i_1\) lies on or below the broken line of \(\mathcal{C }_b\). But then species \(j_1\) lies above the segment \([0,i_1]\) which is not possible (because (16) holds for \(\mathcal{C }_a\)). Hence, \(g_{i_1} \le g_{j_1}\). By symmetry we also have \(g_{i_1} \ge g_{j_1}\), so that \(g_{i_1} = g_{j_1}\). By Assumption 1 this implies that \(i_1 = j_1\). Next, we compare the second species \(i_2\) and \(j_2\) of the broken lines. A similar argument leads to \(i_2 = j_2\). By repeating the same argument, we obtain \(i_3 = j_3\), ..., \(i_{C_a} = j_{C_a}\). Left of species \(i_{C_a} = j_{C_a}\) the broken line of \(\mathcal{C }_a\) is a half-line with slope one. Species \(j_{C_a+1}\) cannot lie above the half-line (because (16) holds for \(\mathcal{C }_a\)). Species \(j_{C_a+1}\) cannot lie below the half-line because then the segment \([j_{C_a},j_{C_a+1}]\) would have slope smaller than one. Assumption 1 excludes that \(j_{C_a+1}\) lies on the half-line. Hence, the broken line of \(\mathcal{C }_b\) left of \(i_{C_a} = j_{C_a}\) is also a half-line with slope one. We conclude that \(C_a = C_b\) and that the sets \(\mathcal{C }_a\) and \(\mathcal{C }_b\) are identical.

Finally, we note that alternative (but equivalent) constructions of the set \(\mathcal{C }\) exist. We mention a construction in terms of the convex hull. For each species \(i\) let \(D_i\) be the half-line \(h=h_i+g-g_i\), \(g\ge g_i\). Let \(D\) be the convex hull of the half-line \(h=0\), \(g\ge 0\) and the half-lines \(D_i\). The set \(\mathcal{C }\) satisfying conditions (12) and (16) is the set of species lying on the boundary of \(D\). The species not belonging to \(\mathcal{C }\) lie in the interior of \(D\). This construction can also be used to prove existence and uniqueness of the set \(\mathcal{C }\) of coexisting species.

Appendix 5: Model with overlapping generations

We demonstrate how our analysis for the discrete-time system (6) can be extended to the continuous-time system (20). We consider the conditions for the existence of an equilibrium with a specific coexistence set, and the corresponding conditions for local stability.

Dynamical system (20) can be written as

with \(m\) the mortality rate and \(F_i(\varvec{p})\) defined in (23b). The equilibrium conditions, obtained by setting the right-hand side of the latter equations equal to zero, are identical to the equilibrium conditions (24) for the discrete-time system. Hence, the conditions (12) for the existence of an equilibrium with a specific coexistence set and the explicit expressions (13) for the equilibrium occupancies also hold for the continuous-time system.

Concerning the stability conditions, note that the Jacobian matrix \(J_\text{ cont }\) (evaluated at an equilibrium) of the continuous-time system (20) is related to the Jacobian matrix \(J\) (evaluated at an equilibrium) of the discrete-time system (6) by

\[ J_\text{ cont } = m\,(J - 1\!\!1). \]

We have shown that conditions (16) guarantee that all eigenvalues of \(J\) belong to the real interval \([0,1)\). This implies that the eigenvalues of \(J_\text{ cont }\) belong to the real interval \([-m,0)\), so that the equilibrium of the continuous-time system is locally stable. Conversely, we have shown that if one of conditions (16) is violated, then there exists an eigenvalue \(\lambda >1\) of \(J\) (assuming genericity of parameters). This implies that there exists an eigenvalue \(m(\lambda -1)>0\) of \(J_\text{ cont }\), so that the equilibrium of the continuous-time system is unstable. This proves that conditions (16) are necessary and sufficient for local stability of an equilibrium of the continuous-time system as well.

1.1 Note on Muller-Landau (2010)

Muller-Landau (2010) conjectured that the following conditions are necessary and sufficient for the existence of an equilibrium in which all \(S\) species coexist:

We compare conditions (46) with the exact necessary and sufficient conditions, which follow from Result 1,

Inequality (46a) is equivalent to inequality (47a). Inequality (46b) with the last term on the right-hand side dropped is equivalent to inequality (47b). Inequality (46c) with the last term dropped is equivalent to inequality (47c). Hence, conditions (46) are too strong.

Cite this article

Haegeman, B., Sari, T. & Etienne, R.S. Predicting coexistence of plants subject to a tolerance-competition trade-off. J. Math. Biol. 68, 1815–1847 (2014). https://doi.org/10.1007/s00285-013-0692-4

DOI: https://doi.org/10.1007/s00285-013-0692-4