Abstract

We develop a full theory for the new class of Optimal Entropy-Transport problems between nonnegative and finite Radon measures in general topological spaces. These problems arise quite naturally by relaxing the marginal constraints typical of Optimal Transport problems: given a pair of finite measures (with possibly different total mass), one looks for minimizers of the sum of a linear transport functional and two convex entropy functionals, which quantify in some way the deviation of the marginals of the transport plan from the assigned measures. As a powerful application of this theory, we study the particular case of Logarithmic Entropy-Transport problems and introduce the new Hellinger–Kantorovich distance between measures in metric spaces. The striking connection between these two seemingly far topics allows for a deep analysis of the geometric properties of the new geodesic distance, which lies somehow between the well-known Hellinger–Kakutani and Kantorovich–Wasserstein distances.


When no ambiguity is possible, we will often adopt the convention to write the integral of a composition of functions as

1 Introduction

The aim of the present paper is twofold: In Part I we develop a full theory of the new class of Optimal Entropy-Transport problems between nonnegative and finite Radon measures in general topological spaces. As a powerful application of this theory, in Part II we study the particular case of Logarithmic Entropy-Transport problems and introduce the new Hellinger–Kantorovich distance \(\mathsf {HK}\) between measures in metric spaces. The striking connection between these two seemingly far topics is our main focus, and it paves the way for a beautiful and deep analysis of the geometric properties of the geodesic \(\mathsf {HK}\) distance, which (as our proposed name suggests) can be understood as an inf-convolution of the well-known Hellinger–Kakutani and the Kantorovich–Wasserstein distances, see Remark 8.19 for a discussion of inf-convolutions of distances. In fact, our approach to the theory was the opposite one: in trying to characterize \(\mathsf {HK}\), we were first led to the Logarithmic Entropy-Transport problem, see Appendix A.

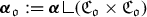

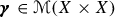

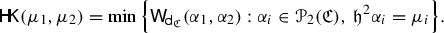

From Transport to Entropy-Transport problems. In the classical Kantorovich formulation, Optimal Transport problems [2, 40, 49, 50] deal with minimization of a linear cost functional

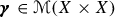

among all the transport plans, i.e. probability measures in  , \({\varvec{\gamma }}\) whose marginals

, \({\varvec{\gamma }}\) whose marginals  are prescribed. Typically, \(X_1,X_2\) are Polish spaces, \(\mu _i\) are given Borel measures (but the case of Radon measures in Hausdorff topological spaces has also been considered, see [26, 40]), the cost function \(\mathsf{c}\) is a lower semicontinuous (or even Borel) function, possibly assuming the value \(+\infty \), and \(\pi ^i(x_1,x_2)=x_i\) are the projections on the i-th coordinate, so that

are prescribed. Typically, \(X_1,X_2\) are Polish spaces, \(\mu _i\) are given Borel measures (but the case of Radon measures in Hausdorff topological spaces has also been considered, see [26, 40]), the cost function \(\mathsf{c}\) is a lower semicontinuous (or even Borel) function, possibly assuming the value \(+\infty \), and \(\pi ^i(x_1,x_2)=x_i\) are the projections on the i-th coordinate, so that

Starting from the pioneering work of Kantorovich, an impressive theory has been developed in the last two decades. On the one hand, typical intrinsic questions of linear programming problems concerning duality, optimality, uniqueness and structural properties of optimal transport plans have been addressed and fully analyzed. On the other hand, this rich general theory has been applied to many challenging problems in a variety of fields (probability and statistics, functional analysis, PDEs, Riemannian geometry, nonsmooth analysis in metric spaces, to mention just a few). Since it is impossible to give here even a partial account of the main contributions, we refer to the books [42, 50] for a more detailed overview and a complete list of references.

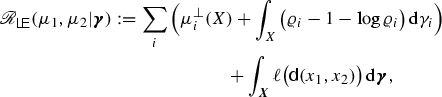

The class of Entropy-Transport problems we are going to study arises quite naturally if one tries to relax the marginal constraints \(\pi ^i_\sharp {\varvec{\gamma }}=\mu _i\) by introducing suitable penalizing functionals \(\mathscr {F}_i\) that quantify in some way the deviation from \(\mu _i\) of the marginals \(\gamma _i:=\pi ^i_\sharp {\varvec{\gamma }}\) of \({\varvec{\gamma }}\). In this paper we consider the general case of integral functionals (also called Csiszár f-divergences [17]) of the form

$$\begin{aligned} \mathscr {F}_i(\gamma _i|\mu _i):=\int _{X_i}F_i(\sigma _i)\,\mathrm {d}\mu _i+{(F_{i})'_\infty }\,\gamma _i^\perp (X_i),\qquad \gamma _i=\sigma _i\mu _i+\gamma _i^\perp , \end{aligned}$$(1.3)

where \(F_i:[0,\infty )\rightarrow [0,\infty ]\) are given convex entropy functions and \( {(F_{i})'_\infty }\) are their recession constants, see (2.15). Typical examples are the logarithmic or power-like entropies

$$\begin{aligned} U_p(s):=\frac{1}{p(p-1)}\big (s^p-p(s-1)-1\big )\ \ (p\in \mathbb {R}\setminus \{0,1\}),\qquad U_0(s):=s-1-\log s,\qquad U_1(s):=s\log s-s+1, \end{aligned}$$(1.4)

or the total variation functional corresponding to the nonsmooth entropy \(V(s):=|s-1|\), considered in [38]. We shall see that the presence of the singular part \(\gamma _i^\perp \) in the Lebesgue decomposition of \(\gamma _i\) in (1.3) does not force \(F_i(s)\) to be superlinear as \(s\uparrow \infty \) and allows for all the exponents p in (1.4).
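The entropies of the power-like family and the total variation entropy V are easy to tabulate. The sketch below assumes the normalization \(U_p(s)=\frac{1}{p(p-1)}(s^p-p(s-1)-1)\) for \(p\ne 0,1\), with \(U_0\) and \(U_1\) as the limiting cases; the helper names `U`, `V` and `recession` are ours. It lets one check numerically that \(U_2(s)=\frac12(s-1)^2\), that \(U_p\to U_1\) as \(p\to 1\) and \(U_p\to U_0\) as \(p\to 0\), and that for \(p<1\) the recession constant \(\lim_{s\to\infty}U_p(s)/s=1/(1-p)\) is finite. This linear-growth regime is exactly where the singular part in (1.3) matters.

```python
import math


def U(p: float, s: float) -> float:
    """Power-like entropy U_p(s) for s >= 0 (assumed normalization, see lead-in)."""
    if s < 0:
        raise ValueError("entropy functions are defined on [0, inf)")
    if p == 1:  # logarithmic entropy, with the convention 0 log 0 = 0
        return 1.0 if s == 0 else s * math.log(s) - s + 1.0
    if p == 0:  # reversed logarithmic entropy, U_0(0) = +inf
        return math.inf if s == 0 else s - 1.0 - math.log(s)
    if s == 0 and p < 0:  # s**p blows up at s = 0 for negative exponents
        return math.inf
    return (s**p - p * (s - 1.0) - 1.0) / (p * (p - 1.0))


def V(s: float) -> float:
    """Nonsmooth total-variation entropy V(s) = |s - 1|."""
    return abs(s - 1.0)


def recession(F, s_big: float = 1e10) -> float:
    """Crude estimate of the recession constant F'_inf = lim_{s -> inf} F(s) / s."""
    return F(s_big) / s_big


if __name__ == "__main__":
    for p in (-1.0, 0.0, 0.5, 1.0, 2.0):
        # finite recession constants for p < 1, huge values (superlinear growth) for p >= 1
        print(p, U(p, 2.0), recession(lambda s: U(p, s)))
```

The estimate in `recession` is only a finite-s proxy for the limit; it is meant for quick sanity checks, not as a replacement for the definition referenced in (2.15).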

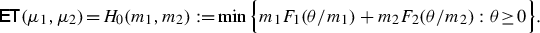

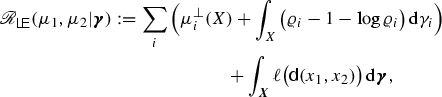

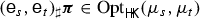

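Once a specific choice of entropies \(F_i\) and of finite nonnegative Radon measures \(\mu _i\in \mathcal {M}(X_i)\) is given, the Entropy-Transport problem can be formulated as

$$\begin{aligned} \mathsf {ET}(\mu _1,\mu _2):=\inf _{{\varvec{\gamma }}\in \mathcal {M}(X_1\times X_2)}\mathscr {E}({\varvec{\gamma }}|\mu _1,\mu _2), \end{aligned}$$(1.5)

where \(\mathscr {E}\) is the convex functional

$$\begin{aligned} \mathscr {E}({\varvec{\gamma }}|\mu _1,\mu _2):=\mathscr {F}_1(\gamma _1|\mu _1)+\mathscr {F}_2(\gamma _2|\mu _2)+\int _{X_1\times X_2}\mathsf{c}(x_1,x_2)\,\mathrm {d}{\varvec{\gamma }}(x_1,x_2). \end{aligned}$$(1.6)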

Notice that the entropic formulation allows for measures \(\mu _1,\mu _2\) and \({\varvec{\gamma }}\) with possibly different total mass.

The flexibility in the choice of the entropy functions \(F_i\) (which may also take the value \(+\infty \)) covers a wide spectrum of situations (see Sect. 3.3 for various examples) and in particular guarantees that (1.5) is a real generalization of the classical optimal transport problem, which can be recovered as a particular case of (1.6) when \(F_i(s)\) is the indicator function of \(\{1\}\) (i.e. \(F_i(s)\) always takes the value \(+\infty \) with the only exception of \(s=1\), where it vanishes).
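On finite spaces, where measures are vectors and plans are matrices, the functional (1.6) can be evaluated directly. The sketch below is a hypothetical helper (`entropy_transport_cost` and the other names are ours), assuming the discrete reading of (1.3): the density \(\sigma_i\) is the ratio of masses where \(\mu_i>0\), and any mass of \(\gamma_i\) sitting where \(\mu_i=0\) is the singular part \(\gamma_i^\perp\), charged with the recession constant. Choosing the indicator of \(\{1\}\) (whose recession constant is \(+\infty\)) gives the classical transport cost for plans with the prescribed marginals and \(+\infty\) for all other plans.

```python
import math
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]
Entropy = Callable[[float], float]


def relative_entropy(F: Entropy, F_rec: float, gamma_i: Vector, mu_i: Vector) -> float:
    """Discrete version of (1.3): sum of F(sigma) * mu plus F'_inf times the singular mass."""
    total = 0.0
    for g, m in zip(gamma_i, mu_i):
        if m > 0:
            total += F(g / m) * m  # absolutely continuous part, sigma = g / m
        elif g > 0:
            total += F_rec * g  # singular part: gamma charges a point where mu = 0
    return total


def entropy_transport_cost(gamma: Matrix, mu1: Vector, mu2: Vector, cost: Matrix,
                           F1: Entropy, F1_rec: float,
                           F2: Entropy, F2_rec: float) -> float:
    """Discrete version of E(gamma | mu1, mu2) in (1.6)."""
    gamma1 = [sum(row) for row in gamma]  # first marginal
    gamma2 = [sum(col) for col in zip(*gamma)]  # second marginal
    # linear transport term, with the convention c * 0 = 0 also when c = +inf
    transport = sum(c * g for c_row, g_row in zip(cost, gamma)
                    for c, g in zip(c_row, g_row) if g > 0)
    return (relative_entropy(F1, F1_rec, gamma1, mu1)
            + relative_entropy(F2, F2_rec, gamma2, mu2) + transport)


def U1(s: float) -> float:
    """Logarithmic entropy s log s - s + 1 (its recession constant is +inf)."""
    return 1.0 if s == 0 else s * math.log(s) - s + 1.0


def indicator_of_one(s: float) -> float:
    """Convex indicator of {1}: forces gamma_i = mu_i, i.e. classical transport."""
    return 0.0 if s == 1.0 else math.inf
```

For instance, with \(\mu_1=\mu_2=(\frac12,\frac12)\) and a plan whose marginals differ from \(\mu_i\), the indicator choice returns \(+\infty\), while the logarithmic choice returns a finite value that trades the mismatch against the transport cost.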

Since we think that the structure (1.6) of Entropy-Transport problems will lead to new and interesting models and applications, we have tried to establish their basic theory in the greatest generality, following the same line of development as for Transport problems: in particular, we will obtain general results concerning existence, duality and optimality conditions.

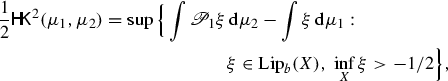

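Considering e.g. the Logarithmic Entropy case, where \(F_i(s)=s\log s-s+1\), the dual formulation of (1.5) is given by

$$\begin{aligned} \mathsf {ET}(\mu _1,\mu _2)=\sup \Big \{\sum _{i=1}^2\int _{X_i}\big (1-\mathrm {e}^{-\varphi _i}\big )\,\mathrm {d}\mu _i:\ \varphi _i\in \mathrm {C}_b(X_i),\ \varphi _1\oplus \varphi _2\le \mathsf{c}\Big \}, \end{aligned}$$(1.7)

where one can immediately recognize the same convex constraint as in Transport problems: the pair of dual potentials \(\varphi _i\) should satisfy \(\varphi _1\oplus \varphi _2\le \mathsf{c}\) on \(X_1\times X_2\). The main difference is due to the concavity of the objective functional

$$\begin{aligned} (\varphi _1,\varphi _2)\mapsto \sum _{i=1}^2\int _{X_i}\big (1-\mathrm {e}^{-\varphi _i}\big )\,\mathrm {d}\mu _i, \end{aligned}$$(1.8)

whose form can be explicitly calculated in terms of the Lagrangian conjugates \(F_i^*\) of the entropy functions (here \(F_i^*(\varphi )=\mathrm {e}^{\varphi }-1\), so that \(1-\mathrm {e}^{-\varphi _i}=-F_i^*(-\varphi _i)\)). Thus (1.7) is the supremum of a concave functional on a convex set described by a system of affine inequalities.

The change of variables \(\psi _i:=1-\mathrm {e}^{-\varphi _i}\) transforms (1.7) into the equivalent problem of maximizing the linear functional

$$\begin{aligned} (\psi _1,\psi _2)\mapsto \sum _{i=1}^2\int _{X_i}\psi _i\,\mathrm {d}\mu _i \end{aligned}$$(1.9)

on the more complicated convex set

$$\begin{aligned} \Big \{(\psi _1,\psi _2):\ \psi _i<1,\ \big (1-\psi _1(x_1)\big )\big (1-\psi _2(x_2)\big )\ge \mathrm {e}^{-\mathsf{c}(x_1,x_2)}\ \text {in }X_1\times X_2\Big \}. \end{aligned}$$(1.10)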

It will be useful to have both representations at our disposal: (1.7) naturally appears from the application of the von Neumann min–max principle to a saddle point formulation of the primal problem (1.5). Moreover, (1.8)–(1.10) will play an important role in the dynamic version of a particular case of \(\mathsf {ET}\), the Hellinger–Kantorovich distance that we will introduce later on.
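The passage from (1.7) to the linear problem on the convex set (1.10) is a pointwise statement, so it can be checked numerically. The sketch below (helper names are ours) verifies, for the logarithmic entropy, that the Legendre conjugate is \(F^*(\varphi)=\mathrm e^{\varphi}-1\) by brute-force maximization on a grid, that \(-F^*(-\varphi)=1-\mathrm e^{-\varphi}=\psi\), and that \(\varphi_1+\varphi_2\le\mathsf c\) holds exactly when \(\psi_i<1\) and \((1-\psi_1)(1-\psi_2)\ge\mathrm e^{-\mathsf c}\). Random sampling only illustrates the equivalence; the proof is the one-line identity \(1-\psi_i=\mathrm e^{-\varphi_i}\).

```python
import math
import random


def U1(s: float) -> float:
    """Logarithmic entropy s log s - s + 1."""
    return 1.0 if s == 0 else s * math.log(s) - s + 1.0


def conjugate_numeric(F, phi: float, s_max: float = 50.0, n: int = 100_000) -> float:
    """Brute-force Legendre conjugate F*(phi) = sup_{s >= 0} (s * phi - F(s)) on a grid."""
    return max(s * phi - F(s) for s in (s_max * k / n for k in range(n + 1)))


def psi_of_phi(phi: float) -> float:
    """Change of variables psi = 1 - exp(-phi)."""
    return 1.0 - math.exp(-phi)


def admissible_phi(phi1: float, phi2: float, c: float) -> bool:
    """Constraint of (1.7) at one pair (x1, x2)."""
    return phi1 + phi2 <= c


def admissible_psi(psi1: float, psi2: float, c: float) -> bool:
    """Constraint defining the convex set (1.10) at one pair (x1, x2)."""
    return psi1 < 1.0 and psi2 < 1.0 and (1.0 - psi1) * (1.0 - psi2) >= math.exp(-c)


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(5):
        p1, p2, c = rng.uniform(-3, 3), rng.uniform(-3, 3), rng.uniform(0, 5)
        print(admissible_phi(p1, p2, c), admissible_psi(psi_of_phi(p1), psi_of_phi(p2), c))
```

The grid bound `s_max = 50` is an illustrative choice that is large enough for the values of \(\varphi\le 2\) used here, since the maximizer is \(s=\mathrm e^{\varphi}\).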

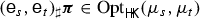

We will calculate the dual problem for every choice of \(F_i\) and show that its value always coincides with \(\mathsf {ET}(\mu _1,\mu _2)\). The dual problem also provides optimality conditions that involve the pair of potentials \((\varphi _1,\varphi _2)\), the support of the optimal plan \({\varvec{\gamma }}\) and the densities \(\sigma _i\) of its marginals \(\gamma _i\) w.r.t. \(\mu _i\). For the Logarithmic Entropy-Transport problem above, they read

$$\begin{aligned} \sigma _i=\mathrm {e}^{-\varphi _i}\ \ \mu _i\text {-a.e. in }X_i,\qquad \varphi _1\oplus \varphi _2\le \mathsf{c}\ \text {in }X_1\times X_2,\qquad \varphi _1\oplus \varphi _2=\mathsf{c}\ \ {\varvec{\gamma }}\text {-a.e. in }X_1\times X_2, \end{aligned}$$

and they are necessary and sufficient for optimality.
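The optimality conditions can be tested on the simplest possible data, two Dirac masses \(\mu_1=a_1\delta_{x_1}\) and \(\mu_2=a_2\delta_{x_2}\) with finite cost \(\mathsf c=\mathsf c(x_1,x_2)\). Since the logarithmic entropy has infinite recession constant, a plan with finite energy must have the form \(\theta\,\delta_{(x_1,x_2)}\), so (1.5) reduces to a one-dimensional convex minimization in \(\theta\). The sketch below (our own helper names) solves it by golden-section search, builds the potentials \(\varphi_i=-\log\sigma_i\) from the densities \(\sigma_i=\theta/a_i\), and lets one check that \(\varphi_1+\varphi_2=\mathsf c\) and that the dual value \(\sum_i a_i(1-\mathrm e^{-\varphi_i})\) matches the primal one.

```python
import math


def U1(s: float) -> float:
    """Logarithmic entropy s log s - s + 1."""
    return 1.0 if s == 0 else s * math.log(s) - s + 1.0


def golden_min(f, lo: float, hi: float, tol: float = 1e-10) -> float:
    """Golden-section search for the minimizer of a convex function on [lo, hi]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0  # 1 / golden ratio
    a, b = lo, hi
    x1, x2 = b - inv_phi * (b - a), a + inv_phi * (b - a)
    f1, f2 = f(x1), f(x2)
    while b - a > tol:
        if f1 <= f2:  # a minimizer lies in [a, x2]
            b, x2, f2 = x2, x1, f1
            x1 = b - inv_phi * (b - a)
            f1 = f(x1)
        else:  # a minimizer lies in [x1, b]
            a, x1, f1 = x1, x2, f2
            x2 = a + inv_phi * (b - a)
            f2 = f(x2)
    return 0.5 * (a + b)


def let_two_diracs(a1: float, a2: float, c: float):
    """Primal value, optimal theta and potentials for mu_i = a_i * delta_{x_i}."""
    def energy(theta: float) -> float:
        return a1 * U1(theta / a1) + a2 * U1(theta / a2) + theta * c

    theta = golden_min(energy, 0.0, a1 + a2)  # the minimizer is at most sqrt(a1 a2)
    phi1, phi2 = -math.log(theta / a1), -math.log(theta / a2)  # phi_i = -log sigma_i
    return energy(theta), theta, phi1, phi2


if __name__ == "__main__":
    value, theta, phi1, phi2 = let_two_diracs(1.0, 2.0, 0.7)
    dual = 1.0 * (1 - math.exp(-phi1)) + 2.0 * (1 - math.exp(-phi2))
    print(value, dual, phi1 + phi2)
```

Golden-section search only locates the minimizer up to roughly the square root of machine precision, which is why the checks compare potentials and values with a tolerance rather than exactly.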

The study of optimality conditions reveals a different behavior between pure transport problems and entropic ones. In particular, the \(\mathsf{c}\)-cyclical monotonicity of the optimal plan \({\varvec{\gamma }}\) (which is still satisfied in the entropic case) does not play a crucial role in the construction of the potentials \(\varphi _i\). When \(F_i(0)\) are finite (as in the logarithmic case) it is possible to obtain a general existence result of (generalized) optimal potentials even when \(\mathsf{c}\) takes the value \(+\infty \).

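A crucial feature of Entropy-Transport problems (which is not shared by the pure transport ones) concerns a third homogeneous formulation, which exhibits new and unexpected properties, in particular concerning the metric and dynamical aspects of such problems. It is related to the 1-homogeneous Marginal Perspective function

$$\begin{aligned} H(x_1,r_1;x_2,r_2):=\inf _{\theta >0}\Big (r_1F_1\big (\theta /r_1\big )+r_2F_2\big (\theta /r_2\big )+\theta \,\mathsf{c}(x_1,x_2)\Big ) \end{aligned}$$(1.11)

and to the corresponding integral functional

$$\begin{aligned} \mathscr {H}(\mu _1,\mu _2|{\varvec{\gamma }}):=\int _{X_1\times X_2}H\big (x_1,\varrho _1(x_1);x_2,\varrho _2(x_2)\big )\,\mathrm {d}{\varvec{\gamma }}+\sum _{i=1}^2F_i(0)\,\mu _i^\perp (X_i), \end{aligned}$$(1.12)

where \(\mu _i=\varrho _i\gamma _i+\mu _i^\perp \) is the “reverse” Lebesgue decomposition of \(\mu _i\) w.r.t. the marginals \(\gamma _i\) of \({\varvec{\gamma }}\). We will prove that

$$\begin{aligned} \mathsf {ET}(\mu _1,\mu _2)=\inf _{{\varvec{\gamma }}\in \mathcal {M}(X_1\times X_2)}\mathscr {H}(\mu _1,\mu _2|{\varvec{\gamma }}), \end{aligned}$$(1.13)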

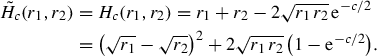

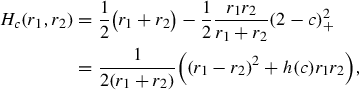

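with a precise relation between optimal plans. In the Logarithmic Entropy case \(F_i(s)=s\log s-(s-1)\) the marginal perspective function H takes the particular form

$$\begin{aligned} H(x_1,r_1;x_2,r_2)=r_1+r_2-2\sqrt{r_1r_2}\,\mathrm {e}^{-\mathsf{c}(x_1,x_2)/2}, \end{aligned}$$(1.14)

which will be the starting point for understanding the deep connection with the Hellinger–Kantorovich distance. Notice that in the case when \(X_1=X_2\) and \(\mathsf{c}\) is the singular cost

$$\begin{aligned} \mathsf{c}(x_1,x_2):=\begin{cases} 0 &{} \text {if }x_1=x_2,\\ +\infty &{} \text {otherwise,}\end{cases} \end{aligned}$$(1.15)

(1.13) provides an equivalent formulation of the Hellinger–Kakutani distance [22, 25], see also Example E.5 in Sect. 3.3.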
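The logarithmic marginal perspective follows from (1.11) by minimizing an explicit convex function of \(\theta\); the minimizer is \(\theta=\sqrt{r_1r_2}\,\mathrm e^{-\mathsf c/2}\) and the minimum is \(r_1+r_2-2\sqrt{r_1r_2}\,\mathrm e^{-\mathsf c/2}\). The sketch below (our names; a plain grid search stands in for the exact minimization) evaluates (1.11) for a given entropy and compares it with that closed form. It also illustrates the singular cost (1.15): on the diagonal \(H(x,r_1;x,r_2)=(\sqrt{r_1}-\sqrt{r_2})^2\), while off the diagonal only \(\theta=0\) is admissible and \(H=r_1+r_2\). Summing the diagonal terms therefore gives the squared Hellinger–Kakutani distance between discrete measures with positive masses.

```python
import math


def U1(s: float) -> float:
    """Logarithmic entropy s log s - s + 1."""
    return 1.0 if s == 0 else s * math.log(s) - s + 1.0


def marginal_perspective(F, r1: float, r2: float, c: float, n: int = 100_000) -> float:
    """Grid approximation of (1.11): inf over theta of r1 F(theta/r1) + r2 F(theta/r2) + theta c.

    Assumes r1, r2 > 0 and that a minimizer lies in [0, r1 + r2] (true for the examples here).
    """
    if math.isinf(c):  # only theta = 0 keeps the transport term finite
        return r1 * F(0.0) + r2 * F(0.0)
    hi = r1 + r2
    return min(r1 * F(t / r1) + r2 * F(t / r2) + t * c
               for t in (hi * k / n for k in range(n + 1)))


def H_log(r1: float, r2: float, c: float) -> float:
    """Closed form of the marginal perspective for the logarithmic entropy."""
    return r1 + r2 - 2.0 * math.sqrt(r1 * r2) * math.exp(-c / 2.0)


def hellinger_sq(mu1, mu2) -> float:
    """Squared Hellinger-Kakutani distance between positive vectors on a common finite set."""
    return sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(mu1, mu2))
```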

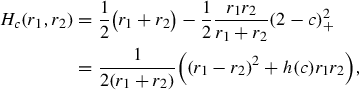

Other choices, still in the simple class (1.4), give rise to “transport” versions of well-known functionals (see e.g. [31] for a systematic presentation): starting from the reversed entropies \(F_i(s)=s-1-\log s\) one gets

$$\begin{aligned} H(x_1,r_1;x_2,r_2)=r_1\log \frac{\big (2+\mathsf{c}(x_1,x_2)\big )r_1}{r_1+r_2}+r_2\log \frac{\big (2+\mathsf{c}(x_1,x_2)\big )r_2}{r_1+r_2}, \end{aligned}$$(1.16)

which in the extreme case (1.15) reduces to the Jensen–Shannon divergence [32], a squared distance between measures derived from the celebrated Kullback–Leibler divergence [28]. The quadratic entropy \(F_i(s)=\frac{1}{2}(s-1)^2\) produces

$$\begin{aligned} H(x_1,r_1;x_2,r_2)=\frac{1}{2(r_1+r_2)}\Big ((r_1-r_2)^2+h\big (\mathsf{c}(x_1,x_2)\big )\,r_1r_2\Big ), \end{aligned}$$(1.17)

where \(h(c)=c(4-c)\) if \(0\le c\le 2\) and \(h(c)=4\) if \(c\ge 2\): Equation (1.17) can be seen as the transport variant of the triangular discrimination (also called symmetric \(\chi ^2\)-measure), based on the Pearson \(\chi ^2\)-divergence [31], and still obtained by (1.12) when \(\mathsf{c}\) has the form (1.15).
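The marginal perspective functions for \(U_0\) and \(U_2\) can be checked against (1.11) directly. Minimizing in \(\theta\) (our own short computation) gives \(\theta=(r_1+r_2)/(2+\mathsf c)\) and \(H=\sum_i r_i\log\big((2+\mathsf c)r_i/(r_1+r_2)\big)\) for \(U_0\), and \(\theta=(2-\mathsf c)_+\,r_1r_2/(r_1+r_2)\) and \(H=\big((r_1-r_2)^2+h(\mathsf c)\,r_1r_2\big)/\big(2(r_1+r_2)\big)\) for \(U_2\), with h as above. The sketch below, with hypothetical helper names, compares these expressions with a brute-force grid minimization. On the diagonal (\(\mathsf c=0\)) the quadratic case reduces to \((r_1-r_2)^2/(2(r_1+r_2))\), one half of the triangular discrimination.

```python
import math


def reversed_entropy(s: float) -> float:
    """U_0(s) = s - 1 - log s, with U_0(0) = +inf."""
    return math.inf if s == 0 else s - 1.0 - math.log(s)


def quadratic_entropy(s: float) -> float:
    """U_2(s) = (s - 1)^2 / 2."""
    return 0.5 * (s - 1.0) ** 2


def h(c: float) -> float:
    """The function h from the text: c(4 - c) on [0, 2] and 4 for c >= 2."""
    return c * (4.0 - c) if c <= 2.0 else 4.0


def H_reversed(r1: float, r2: float, c: float) -> float:
    """Closed form for U_0, attained at theta = (r1 + r2) / (2 + c)."""
    return sum(r * math.log((2.0 + c) * r / (r1 + r2)) for r in (r1, r2))


def H_quadratic(r1: float, r2: float, c: float) -> float:
    """Closed form for U_2, attained at theta = (2 - c)_+ r1 r2 / (r1 + r2)."""
    return ((r1 - r2) ** 2 + h(c) * r1 * r2) / (2.0 * (r1 + r2))


def perspective_by_grid(F, r1: float, r2: float, c: float, n: int = 100_000) -> float:
    """Brute-force (1.11) over theta in [0, r1 + r2]; assumes r1, r2 > 0 and finite c."""
    hi = r1 + r2
    return min(r1 * F(t / r1) + r2 * F(t / r2) + t * c
               for t in (hi * k / n for k in range(n + 1)))
```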

Also nonsmooth cases, such as \(V(s)=|s-1|\) associated to the total variation distance (or nonsymmetric choices of \(F_i\)), can be covered by the general theory. In the case of \(F_i(s)=V(s)\) the marginal perspective function is

$$\begin{aligned} H(x_1,r_1;x_2,r_2)=r_1+r_2-\big (2-\mathsf{c}(x_1,x_2)\big )_+\,(r_1\wedge r_2); \end{aligned}$$

when \(X_1=X_2=\mathbb {R}^d\) with \(\mathsf{c}(x_1,x_2):=|x_1{-}x_2|\) we recover the generalized Wasserstein distance \(W^{1,1}_1\) introduced and studied by [38]; it provides an equivalent variational characterization of the flat metric [39].
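For \(F_i=V\) the function minimized in (1.11) is piecewise linear in \(\theta\), so the infimum is attained at some \(\theta\in\{0,r_1,r_2\}\), which gives \(H=r_1+r_2-(2-\mathsf c)_+\,(r_1\wedge r_2)\). In words: moving the common mass \(r_1\wedge r_2\) is cheaper than destroying it and creating it again exactly when \(\mathsf c<2\). The sketch below (our own names) compares this expression with a grid minimization; the test masses are chosen so that the kinks of the piecewise linear function fall on grid points.

```python
def V(s: float) -> float:
    """Total-variation entropy V(s) = |s - 1|."""
    return abs(s - 1.0)


def H_total_variation(r1: float, r2: float, c: float) -> float:
    """Marginal perspective for F_i = V: r1 + r2 - (2 - c)_+ * min(r1, r2)."""
    return r1 + r2 - max(2.0 - c, 0.0) * min(r1, r2)


def perspective_by_grid(F, r1: float, r2: float, c: float, n: int = 100_000) -> float:
    """Brute-force (1.11) over theta in [0, r1 + r2]; assumes r1, r2 > 0 and finite c."""
    hi = r1 + r2
    return min(r1 * F(t / r1) + r2 * F(t / r2) + t * c
               for t in (hi * k / n for k in range(n + 1)))


if __name__ == "__main__":
    for c in (0.0, 1.0, 2.0, 3.0):
        print(c, H_total_variation(1.0, 3.0, c), perspective_by_grid(V, 1.0, 3.0, c))
```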

However, because of our original motivation (see Appendix A), Part II will focus on the case of the logarithmic entropy \(F_i=U_1\), where H is given by (1.14). We will exploit its relevant geometric applications, reserving the other examples for future investigations.

From the Kantorovich–Wasserstein distance to the Hellinger–Kantorovich distance. From the analytic-geometric point of view, one of the most interesting cases of transport problems occurs when \(X_1=X_2=X\) coincide and the cost functional \(\mathscr {C}\) is induced by a distance \(\mathsf{d}\) on X: in the quadratic case, the minimum value of (1.1) for given measures \(\mu _1,\mu _2\) in the space \(\mathcal {P}_2(X)\) of probability measures with finite quadratic moment defines the so-called \(L^2\)-Kantorovich–Wasserstein distance

$$\begin{aligned} \mathsf {W}_2^2(\mu _1,\mu _2):=\min \Big \{\int _{X\times X}\mathsf{d}^2(x_1,x_2)\,\mathrm {d}{\varvec{\gamma }}(x_1,x_2):\ {\varvec{\gamma }}\in \mathcal {P}(X\times X),\ \pi ^i_\sharp {\varvec{\gamma }}=\mu _i\Big \}, \end{aligned}$$(1.18)

which metrizes the weak convergence (with quadratic moments) of probability measures. The metric space \((\mathcal {P}_2(X),\mathsf {W}_2)\) inherits many geometric features from the underlying \((X,\mathsf{d})\) (such as separability, completeness, length and geodesic properties, positive curvature in the Alexandrov sense, see [2]). Its dynamic characterization in terms of the continuity equation [7] and its dual formulation in terms of the Hopf–Lax formula and the corresponding (sub-)solutions of the Hamilton–Jacobi equation [37] lie at the core of the applications to gradient flows and partial differential equations of diffusion type [2]. Finally, the behavior of entropy functionals as in (1.3) along geodesics in \((\mathcal {P}_2(X),\mathsf {W}_2)\) [16, 35, 37] encodes valuable geometric information, with relevant applications to Riemannian geometry and to the recent theory of metric-measure spaces with Ricci curvature bounded from below [3,4,5, 21, 34, 47, 48].

It has been a challenging question to find a corresponding distance (enjoying analogous deep geometric properties) between finite positive Borel measures with arbitrary mass in  . In the present paper we will show that by choosing the particular cost function

. In the present paper we will show that by choosing the particular cost function

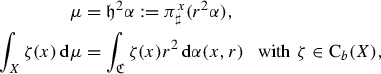

the corresponding Logarithmic-Entropy Transport problem

coincides with a (squared) distance in  (which we will call Hellinger–Kantorovich distance and denote by

(which we will call Hellinger–Kantorovich distance and denote by  ) that can play the same fundamental role like the Kantorovich–Wasserstein distance for

) that can play the same fundamental role like the Kantorovich–Wasserstein distance for  .

.
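The cost (1.19) is tailored to the logarithmic marginal perspective (1.14): \(\mathrm e^{-\mathsf c/2}=\cos(\mathsf d\wedge\pi/2)\). Hence two Dirac masses at distance \(\mathsf d<\pi/2\) interact through a cosine, while for \(\mathsf d\ge\pi/2\) transport is forbidden and the cheapest option is to destroy \(a_1\delta_{x_1}\) and create \(a_2\delta_{x_2}\), at cost \(a_1+a_2\). For small distances \(\mathsf c=\mathsf d^2+\mathsf d^4/6+O(\mathsf d^6)\), consistent with quadratic Kantorovich–Wasserstein behavior at small scales. A minimal sketch (function names are ours; `let_diracs` assumes the optimal plan is concentrated on the single pair \((x_1,x_2)\), as it must be for the logarithmic entropy):

```python
import math


def hk_cost(d: float) -> float:
    """Cost (1.19): -log cos^2(d) for d < pi/2 and +inf otherwise."""
    if d >= math.pi / 2:  # explicit check: math.cos(math.pi / 2) is not exactly 0
        return math.inf
    return -2.0 * math.log(math.cos(d))


def let_diracs(a1: float, a2: float, d: float) -> float:
    """LET between a1 * delta_x1 and a2 * delta_x2 at distance d, via (1.14) and (1.19)."""
    return a1 + a2 - 2.0 * math.sqrt(a1 * a2) * math.exp(-hk_cost(d) / 2.0)


if __name__ == "__main__":
    for d in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(d, hk_cost(d), let_diracs(1.0, 4.0, d))
```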

Here is a (still non-exhaustive) list of our main results of Part II concerning the Hellinger–Kantorovich distance.


(i)

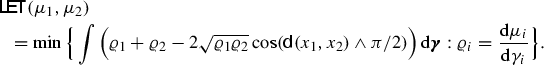

The representation (1.13) based on the Marginal Perspective function (1.14) yields

(1.21)

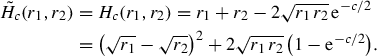

(1.21)By performing the rescaling \(r_i\mapsto r_i^2\) we realize that the function \(H(x_1,r_1^2;x_2,r_2^2)\) is strictly related to the squared (semi)-distance

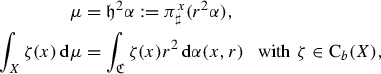

$$\begin{aligned} \mathsf{d}_\mathfrak {C}^2(x_1,r_1;x_2,r_2):= r_1^2+r_2^2-2r_1r_2\cos (\mathsf{d}(x_1,x_2)\wedge \pi ),\quad (x_i,r_i)\in X\times \mathbb {R}_+ \end{aligned}$$(1.22)which is the so-called cone distance in the metric cone \(\mathfrak {C}\) over X, cf. [10]. The latter is the quotient space of \(X\times \mathbb {R}_+\) obtained by collapsing all the points (x, 0), \(x\in X\), in a single point \(\mathfrak {o}\), called the vertex of the cone. We introduce the notion of “2-homogeneous marginal”

(1.23)

(1.23)to “project” measures

on measures

on measures  . Conversely, there are many ways to “lift” a measure

. Conversely, there are many ways to “lift” a measure  to

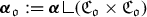

to  (e.g. by taking \(\alpha :=\mu \otimes \delta _1\)). The Hellinger–Kantorovich distance

(e.g. by taking \(\alpha :=\mu \otimes \delta _1\)). The Hellinger–Kantorovich distance  can then be defined by taking the best Kantorovich–Wasserstein distance between all the possible lifts of \(\mu _1,\mu _2\) in

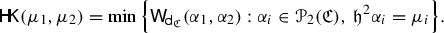

can then be defined by taking the best Kantorovich–Wasserstein distance between all the possible lifts of \(\mu _1,\mu _2\) in  , i.e.

, i.e.  (1.24)

(1.24)It turns out that (the square of) (1.24) yields an equivalent variational representation of the

functional. In particular, (1.24) shows that in the case of concentrated measures

functional. In particular, (1.24) shows that in the case of concentrated measures  (1.25)

(1.25)Notice that (1.24) resembles the very definition (1.18) of the Kantorovich–Wasserstein distance, where now the role of the marginals \(\pi ^i_\sharp \) is replaced by the homogeneous marginals

. It is a nontrivial part of the equivalence statement to check that the difference between the cut-off thresholds (\(\pi /2\) in (1.21) and \(\pi \) in (1.22) does not affect the identity

. It is a nontrivial part of the equivalence statement to check that the difference between the cut-off thresholds (\(\pi /2\) in (1.21) and \(\pi \) in (1.22) does not affect the identity  .

. -

(ii)

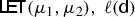

By refining the representation formula (1.24) by a suitable rescaling and gluing technique, we can prove that

is a metric space, a property that is not obvious from the

is a metric space, a property that is not obvious from the  -representation and depends on a subtle interplay of the entropy functions \(F_i(\sigma )=\sigma \log \sigma - \sigma +1\) and the cost function \(\mathsf{c}\) from (1.19). We show that the metric induces the weak convergence of measures in duality with bounded and continuous functions, thus it is topologically equivalent to the flat or Bounded Lipschitz distance [19, Sect. 11.3], see also [27, Thm. 3]. It also inherits the separability, completeness, length and geodesic properties from the correspondent ones of the underlying space \((X,\mathsf{d})\). On top of that, we will prove a precise superposition principle (in the same spirit of the Kantorovich–Wasserstein one [2, Sect. 8], [33]) for general absolutely continuous curves in

-representation and depends on a subtle interplay of the entropy functions \(F_i(\sigma )=\sigma \log \sigma - \sigma +1\) and the cost function \(\mathsf{c}\) from (1.19). We show that the metric induces the weak convergence of measures in duality with bounded and continuous functions, thus it is topologically equivalent to the flat or Bounded Lipschitz distance [19, Sect. 11.3], see also [27, Thm. 3]. It also inherits the separability, completeness, length and geodesic properties from the correspondent ones of the underlying space \((X,\mathsf{d})\). On top of that, we will prove a precise superposition principle (in the same spirit of the Kantorovich–Wasserstein one [2, Sect. 8], [33]) for general absolutely continuous curves in  in terms of dynamic plans in \(\mathfrak {C}\): as a byproduct, we can give a precise characterization of absolutely continuous curves and geodesics as homogeneous marginals of corresponding curves in

in terms of dynamic plans in \(\mathfrak {C}\): as a byproduct, we can give a precise characterization of absolutely continuous curves and geodesics as homogeneous marginals of corresponding curves in  . An interesting consequence of these results concerns the lower curvature bound of

. An interesting consequence of these results concerns the lower curvature bound of  in the sense of Alexandrov: it is a positively curved space if and only if \((X,\mathsf{d})\) is a geodesic space with curvature \(\ge 1\).

in the sense of Alexandrov: it is a positively curved space if and only if \((X,\mathsf{d})\) is a geodesic space with curvature \(\ge 1\). -

(iii)

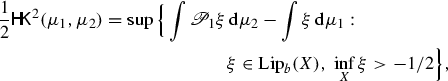

The dual formulation of the

problem provides a dual characterization of

problem provides a dual characterization of  , viz.

, viz.  (1.26)

(1.26)where \((\mathscr {P}_t)_{0\le t\le 1}\) is given by the inf-convolution

$$\begin{aligned} \mathscr {P}_{t}\xi (x):= & {} \inf _{x'\in X} \frac{\xi (x')}{1+2t\xi (x')}+ \frac{\sin ^2(\mathsf{d}_{\pi /2}(x,x'))}{2+4t\xi (x')}\\= & {} \inf _{x'\in X} \frac{1}{t}\Big (1-\frac{\cos ^2(\mathsf{d}_{\pi /2}(x,x'))}{ 1+2t\xi (x')}\Big ). \end{aligned}$$ -

(iv)

By exploiting the Hopf–Lax representation formula for the Hamilton–Jacobi equation in \(\mathfrak {C}\), we will show that for arbitrary initial data \(\xi \in \mathop {\mathrm{Lip}}\nolimits _b(X)\) with \(\inf \xi >-1/2\) the function \(\xi _t:=\mathscr {P}_t\xi \) is a subsolution (a solution, if \((X,\mathsf{d})\) is a length space) of

$$\begin{aligned} \partial ^+_t \xi _t(x)+\frac{1}{2}|\mathrm {D}_X\xi _t|^2(x)+2\xi _t^2(x)\le 0\quad \text {pointwise in }X\times (0,1). \end{aligned}$$

If \((X,\mathsf{d})\) is a length space we thus obtain the characterization

(1.27)

which reproduces, at the level of \(\mathsf {HK}\), the nice link between \(\mathsf {W}_2\) and Hamilton–Jacobi equations. One of the direct applications of (1.27) is a sharp contraction property w.r.t. \(\mathsf {HK}\) for the Heat flow in \(\mathrm {RCD}(0,\infty )\) metric measure spaces (and therefore in every Riemannian manifold with nonnegative Ricci curvature).

(v)

Formula (1.27) clarifies that the \(\mathsf {HK}\) distance can be interpreted as a sort of inf-convolution (see Remark 8.19) between the Hellinger distance (in duality with solutions to the ODE \(\partial _t \xi _t+2\xi _t^2=0\)) and the Kantorovich–Wasserstein distance (in duality with (sub-)solutions to \(\partial _t\xi _t(x)+\frac{1}{2}|\mathrm {D}_X \xi _t|^2(x)\le 0\)). The Hellinger distance

$$\begin{aligned} \mathsf {He}^2(\mu _1,\mu _2)=\int _X\big (\sqrt{\varrho _1}-\sqrt{\varrho _2}\big )^2\,\mathrm {d}\gamma ,\quad \mu _i=\varrho _i\gamma , \end{aligned}$$

corresponds to the \(\mathsf {LET}\) functional generated by the discrete distance (\(\mathsf{d}(x_1,x_2)=\pi /2\) if \(x_1\ne x_2\)). We will prove that

$$\begin{aligned} \mathsf {He}(\mu _1,\mu _2)=\lim _{n\rightarrow \infty }\mathsf {HK}_{n\mathsf{d}}(\mu _1,\mu _2),\qquad \mathsf {W}_2(\mu _1,\mu _2)=\lim _{n\rightarrow \infty }n\,\mathsf {HK}_{\mathsf{d}/n}(\mu _1,\mu _2), \end{aligned}$$

where \(\mathsf {HK}_{n\mathsf{d}}\) (resp. \(\mathsf {HK}_{\mathsf{d}/n}\)) is the \(\mathsf {HK}\) distance induced by \(n\mathsf{d}\) (resp. \(\mathsf{d}/n\)).

(vi)

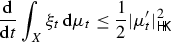

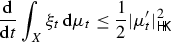

Combining the superposition principle and the duality with Hamilton–Jacobi equations, we eventually prove that

admits an equivalent dynamic characterization “à la Benamou-Brenier” [7, 18] (see also the recent [27]) in \(X=\mathbb {R}^d\):

admits an equivalent dynamic characterization “à la Benamou-Brenier” [7, 18] (see also the recent [27]) in \(X=\mathbb {R}^d\):  (1.28)

(1.28)Moreover, for the length space \(X=\mathbb {R}^d\) a curve \([0,1]\ni t\mapsto \mu (t)\) is geodesic curve w.r.t.

if and only if the coupled system $$\begin{aligned} \partial _t \mu _t+\nabla \cdot (\mathrm {D}_x \xi _t \mu _t)=4\xi _t\mu _t, \quad \partial _t\xi _t + \frac{1}{2}|\mathrm {D}_x\xi ^2|^2 + 2 \xi _t^2=0 \end{aligned}$$(1.29)

if and only if the coupled system $$\begin{aligned} \partial _t \mu _t+\nabla \cdot (\mathrm {D}_x \xi _t \mu _t)=4\xi _t\mu _t, \quad \partial _t\xi _t + \frac{1}{2}|\mathrm {D}_x\xi ^2|^2 + 2 \xi _t^2=0 \end{aligned}$$(1.29)holds for a suitable solution \(\xi _t=\mathscr {P}_{t}\xi _0\). The representation (1.28) is the starting point for further investigations concerning the link to gradient systems and reaction-diffusion equations, the cone geometry, the representation of geodesics and of \(\lambda \)-convex integral functionals: we refer the interested reader to the examples collected in [30].
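The cone picture behind items (i) and (ii) can be explored on Dirac masses in \(X=\mathbb R\) with \(\mathsf d(x,y)=|x-y|\). The sketch below (all helper names are ours; cone points are encoded as pairs (x, r), and the vertex as any (x, 0)) computes the cone distance (1.22), the 2-homogeneous marginal of a discrete measure on \(\mathfrak C\), and the cost of an explicit coupling. For \(\mathsf d<\pi/2\) the naive lifts \(\delta_{(x_i,\sqrt{a_i})}\) already reproduce (1.25). For \(\pi/2<\mathsf d<\pi\) they are too expensive, but lifts that send half of their mass through the vertex reach the value \(a_1+a_2\) given by (1.25). This illustrates why the cut-off \(\pi\) in (1.22) is compatible with the cut-off \(\pi/2\) in (1.21); since only particular lifts are exhibited, the computation gives upper bounds for (1.24), not its minimum.

```python
import math
from collections import defaultdict


def dist(x: float, y: float) -> float:
    """Underlying metric on X = R (an illustrative choice)."""
    return abs(x - y)


def cone_dist_sq(p, q) -> float:
    """Squared cone distance (1.22) between cone points p = (x1, r1) and q = (x2, r2)."""
    (x1, r1), (x2, r2) = p, q
    return r1**2 + r2**2 - 2.0 * r1 * r2 * math.cos(min(dist(x1, x2), math.pi))


def hk_sq_diracs(a1: float, x1: float, a2: float, x2: float) -> float:
    """Formula (1.25) for HK^2 between a1 * delta_x1 and a2 * delta_x2 (cut-off at pi/2)."""
    return a1 + a2 - 2.0 * math.sqrt(a1 * a2) * math.cos(min(dist(x1, x2), math.pi / 2))


def homogeneous_marginal(alpha):
    """2-homogeneous marginal (1.23) of a discrete cone measure [(weight, (x, r)), ...]."""
    mu = defaultdict(float)
    for w, (x, r) in alpha:
        if r > 0:  # atoms at the vertex carry no mass in X
            mu[x] += w * r**2
    return dict(mu)


def coupling_cost(plan) -> float:
    """Cost of a discrete coupling [(weight, p, q), ...] w.r.t. the squared cone distance."""
    return sum(w * cone_dist_sq(p, q) for w, p, q in plan)


if __name__ == "__main__":
    a1, a2, far = 1.0, 2.0, 2.5  # pi/2 < 2.5 < pi
    naive = cone_dist_sq((0.0, math.sqrt(a1)), (far, math.sqrt(a2)))
    vertex_plan = [(0.5, (0.0, math.sqrt(2 * a1)), (far, 0.0)),
                   (0.5, (0.0, 0.0), (far, math.sqrt(2 * a2)))]
    print(naive, coupling_cost(vertex_plan), hk_sq_diracs(a1, 0.0, a2, far))
```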

Recall that the \(\mathsf {LET}\) variational problem is just one example in the realm of Entropy-Transport problems, and we think that other interesting applications can arise from different choices of entropies and cost. One of the simplest variations is to choose the (seemingly more natural) quadratic cost function \(\mathsf{c}(x_1,x_2):=\mathsf{d}^2(x_1,x_2)\) instead of the more “exotic” (1.19). The resulting functional is still associated to a distance expressed by

$$\begin{aligned} \mathsf {GHK}^2(\mu _1,\mu _2):=\min \int _{\mathfrak {C}\times \mathfrak {C}}\Big (r_1^2+r_2^2-2r_1r_2\,\mathrm {e}^{-\mathsf{d}^2(x_1,x_2)/2}\Big )\,\mathrm {d}{\varvec{\beta }}(x_1,r_1;x_2,r_2), \end{aligned}$$

where the minimum runs among all the plans \({\varvec{\beta }}\in \mathcal {P}_2(\mathfrak {C}\times \mathfrak {C})\) such that \(\mathfrak {h}^2(\pi ^i_\sharp {\varvec{\beta }})=\mu _i\) (we propose the name Gaussian Hellinger–Kantorovich distance). If \((X,\mathsf{d})\) is a complete, separable and length metric space, \((\mathcal {M}(X),\mathsf {GHK})\) is a complete and separable metric space, inducing the same weak topology as \(\mathsf {HK}\). However, it is not a length space in general, and we will show that the length distance generated by \(\mathsf {GHK}\) is precisely \(\mathsf {HK}\).

The plan of the paper is as follows.

Part I develops the general theory of Optimal Entropy-Transport problems. Section 2 collects some preliminary material, in particular concerning the measure-theoretic setting in arbitrary Hausdorff topological spaces (here we follow [44]) and entropy functionals. We devote some effort to dealing with general functionals (allowing a singular part in definition (1.3)) in order to include entropies which may have only linear growth. The extension of the duality Theorem 2.7 (well known in Polish topologies) to this general framework requires some care and the use of lower semicontinuous test functions instead of continuous ones.

Section 3 introduces the class of Entropy-Transport problems, discussing some examples and proving a general existence result for optimal plans. The “reverse” formulation of Theorem 3.11, though simple, justifies the importance of dealing with the largest class of entropies and will play a crucial role in Sect. 5.

Section 4 is devoted to finding the dual formulation, proving its equivalence with the primal problem (cf. Theorem 4.11), deriving sharp optimality conditions (cf. Theorem 4.6) and proving the existence of optimal potentials in a suitable generalized sense (cf. Theorem 4.15). The particular class of “regular” problems (where the results are richer) is also studied in some detail.

Section 5 introduces the third formulation (1.12) based on the marginal perspective function (1.11) and its “homogeneous” version (Sect. 5.2). The proof of the equivalence with the previous formulations is presented in Theorem 5.5 and Theorem 5.8. This part provides the crucial link for the further development in the cone setting.

Part II is devoted to Logarithmic Entropy-Transport (\(\mathsf {LET}\)) problems (Sect. 6) and to their applications to the Hellinger–Kantorovich distance \(\mathsf {HK}\) on \(\mathcal {M}(X)\).

The Hellinger–Kantorovich distance is introduced by the lifting technique in the cone space in Sect. 7, where we try to follow a presentation modeled on the standard one for the Kantorovich–Wasserstein distance, independently of the results on the \(\mathsf {LET}\)-problems. After a brief review of the cone geometry (Sect. 7.1) we discuss in some detail the crucial notion of homogeneous marginals in Sect. 7.2 and the useful tightness conditions (Lemma 7.3) for plans with prescribed homogeneous marginals. Section 7.3 introduces the definition of the \(\mathsf {HK}\) distance and its basic properties. The crucial rescaling and gluing techniques are discussed in Sect. 7.4: they lie at the core of the main metric properties of \(\mathsf {HK}\), leading to the proof of the triangle inequality and to the characterizations of various metric and topological properties in Sect. 7.5. The equivalence with the \(\mathsf {LET}\) formulation is the main achievement of Sect. 7.6 (Theorem 7.20), with applications to the duality formula (Theorem 7.21), to the comparisons with the classical Hellinger and Kantorovich distances (Sect. 7.7) and with the Gaussian Hellinger–Kantorovich distance (Sect. 7.8).

The last section of the paper collects various important properties of \(\mathsf {HK}\) that share a common “dynamic” flavor. After a preliminary discussion of absolutely continuous curves and geodesics in the cone space \(\mathfrak {C}\) in Sect. 8.1, we derive the basic superposition principle in Theorem 8.4. This is the cornerstone to obtain a precise characterization of geodesics (Theorem 8.6), a sharp lower curvature bound in the Alexandrov sense (Theorem 8.8), and to prove the dynamic characterization à la Benamou-Brenier of Sect. 8.5. The other powerful tool is provided by the duality with subsolutions to the Hamilton–Jacobi equation (Theorem 8.12), which we derive after a preliminary characterization of metric slopes for a suitable class of test functions in \(\mathfrak {C}\). One of the most striking results of Sect. 8.4 is the explicit representation formula for solutions to the Hamilton–Jacobi equation in X, which we obtain by a careful reduction technique from the Hopf–Lax formula in \(\mathfrak {C}\). In this respect, we think that Theorem 8.11 is interesting in itself and could find important applications in different contexts. From the point of view of Entropy-Transport problems, Theorem 8.11 is particularly relevant since it provides a dynamic interpretation of the dual characterization of the \(\mathsf {LET}\) functional. In Sect. 8.6 we show that in the Euclidean case \(X=\mathbb {R}^d\) all geodesic curves are characterized by the system (1.29). The last Sect. 8.7 provides various contraction results: in particular, we extend the well-known contraction property of the Heat flow in spaces with nonnegative Riemannian Ricci curvature to \(\mathsf {HK}\).

Note during final preparation. The earliest parts of the work developed here were first presented at the ERC Workshop on Optimal Transportation and Applications in Pisa in 2012. Since then the authors have continuously developed the theory further and presented results at different workshops and seminars; see Appendix A for some remarks concerning the chronological development of our theory.

In June 2015 the authors became aware of the parallel work [27], which mainly concerns the dynamical approach to the Hellinger–Kantorovich distance discussed in Sect. 8.5 and the metric-topological properties of Sect. 7.5 in the Euclidean case.

In mid-August 2015 they also became aware of the works [13, 14], which start from the dynamical formulation of the Hellinger–Kantorovich distance in the Euclidean case, prove existence of geodesics and sufficient optimality and uniqueness conditions (which we state in a stronger form in Sect. 8.6), and give a precise characterization in the case of a pair of Dirac masses. Moreover, they provide a detailed discussion of curvature properties following Otto’s formalism [36], and study more general dynamic costs on the cone space with their equivalent primal and dual static formulations (leading to characterizations analogous to (7.1) and (6.14) in the Hellinger–Kantorovich case).

Apart from the few remarks above, these independent works did not influence the first version (cf. arXiv1508.07941v1) or the present version of this manuscript, which is essentially a minor modification and correction of the first one. In the final Appendix A we give a brief account of the chronological development of our theory.

Part I. Optimal Entropy-Transport problems

2 Preliminaries

2.1 Measure theoretic notation

Positive Radon measures, narrow and weak convergence, tightness. Let \((X,\tau )\) be a Hausdorff topological space. We will denote by \(\mathscr {B}(X)\) the \(\sigma \)-algebra of its Borel sets and by \(\mathcal {M}(X)\) the set of finite nonnegative Radon measures on X [44], i.e. \(\sigma \)-additive set functions \(\mu :\mathscr {B}(X)\rightarrow [0,\infty )\) such that

\[\forall \, B\in \mathscr {B}(X),\ \forall \, \varepsilon >0\quad \exists \, K_\varepsilon \subset B \text { compact}:\quad \mu (B\setminus K_\varepsilon )\le \varepsilon . \tag{2.1}\]

The restriction \(B\mapsto \mu (B\cap A)\) of a Radon measure \(\mu \) to a Borel set A will be denoted by \(\mu \llcorner A\).

Radon measures have strong continuity properties with respect to monotone convergence. For this, denote by \(\mathrm {LSC}(X)\) the space of all lower semicontinuous real-valued functions on X and consider a nondecreasing directed family \((f_\lambda )_{\lambda \in \mathbb {L}}\subset \mathrm {LSC}(X)\) (where \(\mathbb {L}\) is a possibly uncountable directed set) of nonnegative and lower semicontinuous functions \(f_\lambda \) converging to f. Then for every \(\mu \in \mathcal {M}(X)\) we have (cf. [44, Prop. 5, p. 42])

\[\lim _{\lambda \in \mathbb {L}}\int _X f_\lambda \,\mathrm {d}\mu =\int _X f\,\mathrm {d}\mu . \tag{2.2}\]

We endow \(\mathcal {M}(X)\) with the narrow topology, the coarsest (Hausdorff) topology for which all the maps \( \mu \mapsto \int _X \varphi \,\mathrm {d}\mu \) are lower semicontinuous, as \(\varphi :X\rightarrow \mathbb {R}\) varies among the set \(\mathrm {LSC}_b(X)\) of all bounded lower semicontinuous functions [44, p. 370, Def. 1].

Remark 2.1

(Radon versus Borel, narrow versus weak) When \((X,\tau )\) is a Radon space (in particular a Polish, Lusin, or Souslin space [44, p. 122]), every Borel measure satisfies (2.1), so that \(\mathcal {M}(X)\) coincides with the set of all nonnegative and finite Borel measures. The narrow topology is in general stronger than the standard weak topology induced by the duality with the continuous and bounded functions of \(\mathrm {C}_b(X)\). However, when \((X,\tau )\) is completely regular, i.e.

\[\text {for every closed set } C\subset X \text { and every } x_0\in X\setminus C \text { there exists } f\in \mathrm {C}_b(X) \text { with } f(x_0)>0 \text { and } f\equiv 0 \text { on } C \tag{2.3}\]

(in particular when \(\tau \) is metrizable), the narrow and weak topologies coincide [44, p. 371]. Therefore, when \((X,\tau )\) is a Polish space we recover the usual setting of Borel measures endowed with the weak topology.

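We now turn to the compactness properties of subsets of \(\mathcal {M}(X)\). Let us first recall that a set \(\mathscr {K}\subset \mathcal {M}(X)\) is bounded if \(\sup _{\mu \in \mathscr {K}}\mu (X)<\infty \); it is equally tight if

\[\forall \, \varepsilon >0\quad \exists \, K_\varepsilon \subset X \text { compact}:\quad \sup _{\mu \in \mathscr {K}}\mu (X\setminus K_\varepsilon )\le \varepsilon .\]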

Compactness with respect to the narrow topology is guaranteed by an extended version of Prokhorov’s Theorem [44, Thm. 3, p. 379]. Tightness of weakly convergent sequences in metrizable spaces is due to Le Cam [29].

Theorem 2.2

If a subset \(\mathscr {K}\subset \mathcal {M}(X)\) is bounded and equally tight, then it is relatively compact with respect to the narrow topology. The converse is also true in the following cases:

(i) \((X,\tau )\) is a locally compact or a Polish space;

(ii) \((X,\tau )\) is metrizable and \(\mathscr {K}=\{\mu _n:n\in \mathbb {N}\}\) for a given weakly convergent sequence \((\mu _n)\).

If \(\mu \in \mathcal {M}(X)\) and Y is another Hausdorff topological space, a map \(T:X\rightarrow Y\) is Lusin \(\mu \)-measurable [44, Ch. I, Sect. 5] if for every \(\varepsilon >0\) there exists a compact set \(K_\varepsilon \subset X\) such that \(\mu (X\setminus K_\varepsilon )\le \varepsilon \) and the restriction of T to \(K_\varepsilon \) is continuous. We denote by \(T_\sharp \mu \) the push-forward measure defined by

\[T_\sharp \mu (B):=\mu (T^{-1}(B))\qquad \text {for every } B\in \mathscr {B}(Y).\]

For \(\mu \in \mathcal {M}(X)\) and a Lusin \(\mu \)-measurable \(T:X\rightarrow Y\), we have \(T_\sharp \mu \in \mathcal {M}(Y)\). The linear space \(\mathrm {B}(X)\) (resp. \(\mathrm {B}_b(X)\)) denotes the space of real Borel (resp. bounded Borel) functions. If \(\mu \in \mathcal {M}(X)\) and \(p\in [1,\infty ]\), we will denote by \(\mathrm {L}^p(X,\mu )\) the subspace of Borel p-integrable functions w.r.t. \(\mu \), without identifying \(\mu \)-almost equal functions.

Lebesgue decomposition. Given \(\gamma ,\mu \in \mathcal {M}(X)\), we write \(\gamma \ll \mu \) if \(\mu (A)=0\) implies \(\gamma (A)=0\) for every \(A\in \mathscr {B}(X)\). We say that \(\gamma \perp \mu \) if there exists \(B\in \mathscr {B}(X)\) such that \(\mu (B)=0=\gamma (X\setminus B)\).

Lemma 2.3

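(Lebesgue decomposition) For every \(\gamma ,\mu \in \mathcal {M}(X)\) with \(\gamma (X)+\mu (X)>0\) there exist Borel functions \(\sigma ,\varrho :X\rightarrow [0,\infty )\) and a Borel partition \((A,A_\gamma ,A_\mu )\) of X with the following properties:

\[A=\{x\in X:\sigma (x)>0\}=\{x\in X:\varrho (x)>0\},\qquad \sigma (x)\varrho (x)=1\quad \text {for every } x\in A, \tag{2.6}\]

\[\gamma =\sigma \mu +\gamma ^\perp ,\qquad \gamma ^\perp :=\gamma (\,\cdot \,\cap A_\gamma ),\qquad \mu (A_\gamma )=0, \tag{2.7}\]

\[\mu =\varrho \gamma +\mu ^\perp ,\qquad \mu ^\perp :=\mu (\,\cdot \,\cap A_\mu ),\qquad \gamma (A_\mu )=0. \tag{2.8}\]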

Moreover, the sets \(A,A_\gamma ,A_\mu \) and the densities \(\sigma ,\varrho \) are uniquely determined up to \((\mu +\gamma )\)-negligible sets.

Proof

Let \(\theta \in \mathrm {B}(X;[0,1])\) be the Lebesgue density of \(\gamma \) w.r.t. \(\nu :=\mu +\gamma \). Thus, \(\theta \) is uniquely determined up to \(\nu \)-negligible sets. The Borel partition can be defined by setting \(A:=\{x\in X:0<\theta (x)<1\}\), \(A_\gamma :=\{x\in X:\theta (x)=1\}\) and \(A_\mu :=\{x\in X:\theta (x)=0\}\). By defining \(\sigma :=\theta /(1-\theta )\), \(\varrho :=1/\sigma =(1-\theta )/\theta \) for every \(x\in A\) and \(\sigma =\varrho \equiv 0\) in \(X\setminus A\), we obtain Borel functions satisfying (2.7) and (2.8).

Conversely, it is not difficult to check that starting from a decomposition as in (2.6), (2.7), and (2.8) and defining \(\theta \equiv 0\) in \(A_\mu \), \(\theta \equiv 1\) in \(A_\gamma \) and \(\theta :=\sigma /(1+\sigma )\) in A we obtain a Borel function with values in [0, 1] such that \(\gamma =\theta (\mu +\gamma )\). \(\square \)
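For finitely supported measures the construction in the proof can be carried out point by point. The following minimal Python sketch assumes that measures are given as dictionaries mapping points to nonnegative masses (a representation chosen only for illustration; the function name is hypothetical). On an atom x the density \(\theta =\gamma (\{x\})/(\mu +\gamma )(\{x\})\) equals 1 exactly when \(\mu (\{x\})=0\) and 0 exactly when \(\gamma (\{x\})=0\), so the partition \((A,A_\gamma ,A_\mu )\) and the densities \(\sigma =\theta /(1-\theta )\), \(\varrho =1/\sigma \) can be read off directly from the masses; \((\mu +\gamma )\)-null points are put in \(A_\mu \), following the convention \(\theta \equiv 0\) there.

```python
def lebesgue_decomposition(gamma, mu):
    """Discrete analogue of Lemma 2.3 for finitely supported measures.

    gamma, mu: dicts mapping points to nonnegative masses (illustrative format).
    Returns the partition (A, A_gamma, A_mu) and the densities sigma, rho on A.
    """
    A, A_gamma, A_mu = set(), set(), set()
    sigma, rho = {}, {}
    for x in set(gamma) | set(mu):
        g, m = gamma.get(x, 0.0), mu.get(x, 0.0)
        # theta = g / (g + m) is 1 iff m == 0 and 0 iff g == 0
        if m == 0 and g > 0:
            A_gamma.add(x)      # mu(A_gamma) = 0: support of gamma_perp
        elif g == 0:
            A_mu.add(x)         # gamma(A_mu) = 0: support of mu_perp (and null points)
        else:
            A.add(x)
            sigma[x] = g / m    # = theta / (1 - theta), density of gamma w.r.t. mu
            rho[x] = m / g      # = (1 - theta) / theta = 1 / sigma
    return A, A_gamma, A_mu, sigma, rho


gamma = {"a": 2.0, "b": 1.0, "c": 0.0}
mu = {"a": 1.0, "c": 3.0, "d": 0.5}
A, A_gamma, A_mu, sigma, rho = lebesgue_decomposition(gamma, mu)
print(sorted(A), sorted(A_gamma), sorted(A_mu))  # ['a'] ['b'] ['c', 'd']
```

Pointwise, the output satisfies \(\gamma =\sigma \mu +\gamma ^\perp \) and \(\mu =\varrho \gamma +\mu ^\perp \), as in (2.7) and (2.8).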

2.2 Min–max and duality

We now recall a powerful form of von Neumann’s Theorem concerning minimax properties of convex-concave functions on convex subsets of vector spaces, and refer to [20, Prop. 1.2+3.2, Chap. VI] for a general exposition.

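Let A, B be nonempty convex sets of some vector spaces and let us suppose that A is endowed with a Hausdorff topology. Let \(L:A\times B\rightarrow \mathbb {R}\) be a function such that

\[a\mapsto L(a,b)\ \text { is convex and lower semicontinuous in } A \text { for every } b\in B, \tag{2.9a}\]

\[b\mapsto L(a,b)\ \text { is concave in } B \text { for every } a\in A. \tag{2.9b}\]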

Notice that for arbitrary functions L one always has

\[\inf _{a\in A}\sup _{b\in B}L(a,b)\ge \sup _{b\in B}\inf _{a\in A}L(a,b), \tag{2.10}\]

so that equality holds in (2.10) if \(\sup _{b\in B}\inf _{a\in A}L(a,b)=+\infty \). When \(\sup _{b\in B}\inf _{a\in A}L(a,b)\) is finite, we can still have equality thanks to the following result.
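Inequality (2.10) needs no structure at all, which can be seen on finite “games” where a indexes the rows and b the columns of a payoff matrix L[a][b]. The sketch below (plain Python; the matrix encoding and the function names are illustrative assumptions) checks (2.10) on random matrices and exhibits a strict gap on the 2×2 matching-pennies matrix. Finite sets of pure strategies are not convex, so this gap does not contradict Theorem 2.4: convexity (here it would be obtained by passing to mixed strategies) is exactly what equality requires.

```python
import random


def inf_sup(L):
    """inf over rows a of sup over columns b of L[a][b]."""
    return min(max(row) for row in L)


def sup_inf(L):
    """sup over columns b of inf over rows a of L[a][b]."""
    return max(min(row[j] for row in L) for j in range(len(L[0])))


def weak_duality_holds(trials=1000, seed=0):
    """Check (2.10) on random 3x4 payoff matrices."""
    rng = random.Random(seed)
    for _ in range(trials):
        M = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)]
        if inf_sup(M) < sup_inf(M):
            return False
    return True


# Matching pennies on pure strategies: the inequality (2.10) is strict.
L = [[1.0, 0.0],
     [0.0, 1.0]]
print(inf_sup(L), sup_inf(L))  # 1.0 0.0
```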

The statement has the advantage of involving a minimal set of topological assumptions (we refer to [45, Thm. 3.1] for the proof; see also [9, Chapter 1, Prop. 1.1]).

Theorem 2.4

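(Minimax duality) Assume that (2.9a) and (2.9b) hold. If there exist \(b_\star \in B\) and \(C>\sup _{b\in B}\inf _{a\in A}L(a,b)\) such that

\[\{a\in A:\ L(a,b_\star )\le C\}\quad \text {is compact in } A,\]

then

\[\inf _{a\in A}\sup _{b\in B}L(a,b)=\sup _{b\in B}\inf _{a\in A}L(a,b).\]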

2.3 Entropy functions and their conjugates

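Entropy functions in \([0,\infty )\). We say that \(F:[0,\infty )\rightarrow [0,\infty ]\) belongs to the class \(\Gamma (\mathbb {R}_+)\) of admissible entropy functions if it satisfies

\[F \text { is convex and lower semicontinuous, and } {\text {D}}(F)\cap (0,\infty )\ne \emptyset , \tag{2.13}\]

where

\[{\text {D}}(F):=\{s\ge 0:\ F(s)<\infty \}. \tag{2.14}\]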

It is useful to recall that for every \(x_0\in {\text {D}}(F)\) the map \(x\mapsto \frac{F(x)-F(x_0)}{x-x_0}\) is increasing in \({\text {D}}(F)\setminus \{x_0\}\), thanks to the convexity of F. The recession constant \({F'_\infty }\), the right derivative \({F_0'}\) at 0, and the asymptotic affine coefficient \({{\mathrm {aff}} {F}_\infty }\) are defined by

To avoid trivial cases, we assumed in (2.13) that the proper domain \({\text {D}}(F)\) contains at least one strictly positive real number. By convexity, \({\text {D}}(F)\) is a subinterval of \([0,\infty )\), and we will mainly focus on the case when \({\text {D}}(F)\) has nonempty interior and F has superlinear growth, i.e. \({F'_\infty } =+\infty \). Still it will be useful to deal with the general class defined by (2.13).

Legendre duality. The Legendre conjugate function \(F^*:\mathbb {R}\rightarrow (-\infty ,+\infty ]\) is defined by

\[F^*(\phi ):=\sup _{s\ge 0}\big (s\phi -F(s)\big ), \tag{2.16}\]

with proper domain \({\text {D}}(F^*):=\{\phi \in \mathbb {R}:F^*(\phi )\in \mathbb {R}\}\); we will also denote by \(\mathring{{\text {D}}}(F^*)\) the interior of \({\text {D}}(F^*)\). Strictly speaking, \(F^*\) is the conjugate of the convex function \({\tilde{F}}:\mathbb {R}\rightarrow (-\infty ,+\infty ]\), obtained by extending F to \(+\infty \) for negative arguments, and it is related to the subdifferential \(\partial F:\mathbb {R}\rightarrow 2^{\mathbb {R}}\) by

\[\phi \in \partial F(s)\quad \Longleftrightarrow \quad s\in \partial F^*(\phi )\quad \Longleftrightarrow \quad F(s)+F^*(\phi )=s\phi . \tag{2.17}\]

Notice that

so that \(F^*\) is finite and continuous in \((-\infty ,{F'_\infty } )\), nondecreasing, and satisfies

Concerning the behavior of \(F^*\) at the boundary of its proper domain we can distinguish a few cases depending on the behavior of F at 0 and \(+\infty \):

- If \({F_0'}=-\infty \) (in particular if \(F(0)=+\infty \)) then \(F^*\) is strictly increasing in \({\text {D}}(F^*)\).
- If \({F_0'} \) is finite, then \(F^*\) is strictly increasing in \([{F_0'},{F'_\infty })\) and takes the constant value \(-F(0)\) in \((-\infty ,{F_0'}]\). Thus \(-F(0)\) belongs to the range of \(F^*\) only if \({F_0'}>-\infty \).
- If \({F'_\infty }\) is finite, then \(\lim _{\phi \uparrow {F'_\infty }}F^*(\phi )={{\mathrm {aff}} {F}_\infty }\). Thus \({F'_\infty }\in {\text {D}}(F^*)\) only if \({{\mathrm {aff}} {F}_\infty }<\infty \).
- The degenerate case when \({F'_\infty }={F_0'}\) occurs only when F is linear.

If F is not linear, we always have

with the obvious extensions to the boundaries of the intervals when \({F_0'}\) or \({{\mathrm {aff}} {F}_\infty }\) are finite.

We introduce the closed convex subset \(\mathfrak {F}\) of \(\mathbb {R}^2\) associated to the epigraph of \(F^*\)

\[\mathfrak {F}:=\{(\phi ,\psi )\in \mathbb {R}^2:\ \psi \le -F^*(\phi )\}; \tag{2.21}\]

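since \({\text {D}}(F^*)\) has nonempty interior, \(\mathfrak {F}\) has nonempty interior \(\mathring{\mathfrak {F}}\) as well, with

\[\mathring{\mathfrak {F}}=\{(\phi ,\psi )\in \mathbb {R}^2:\ \phi \in \mathring{{\text {D}}}(F^*),\ \psi <-F^*(\phi )\}, \tag{2.22}\]

and \(\mathfrak {F}=\overline{\mathring{\mathfrak {F}}}.\) The function F can be recovered from \(F^*\) and from \(\mathfrak {F}\) through the dual Fenchel–Moreau formula

\[F(s)=\sup _{\phi \in \mathbb {R}}\big (s\phi -F^*(\phi )\big )=\sup _{(\phi ,\psi )\in \mathfrak {F}}\big (\psi +s\phi \big )\qquad \text {for every } s\ge 0. \tag{2.23}\]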
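The Fenchel–Moreau formula (2.23) can be observed numerically on the logarithmic entropy \(U_1(s)=s\log s-s+1\), whose conjugate is \(U_1^*(\phi )=\mathrm {e}^\phi -1\). The sketch below is illustrative only: it maximizes the concave map \(\phi \mapsto s\phi -U_1^*(\phi )\) by golden-section search on an assumed bracket \([-30,10]\) (which contains the maximizer \(\phi =\log s\) for the test values) and compares the result with \(U_1(s)\); the helper names are hypothetical. The value \(s=0\) is left out because there the supremum is only approached as \(\phi \rightarrow -\infty \).

```python
import math


def golden_max(f, lo, hi, iters=200):
    """Maximum value of a concave function f on [lo, hi] by golden-section search."""
    g = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = f(c), f(d)
    for _ in range(iters):
        if fc < fd:              # maximizer lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = f(d)
        else:                    # maximizer lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = f(c)
    return f((a + b) / 2)


def U1(s):
    """Logarithmic entropy U_1(s) = s log s - s + 1, with U_1(0) = 1."""
    return 1.0 if s == 0 else s * math.log(s) - s + 1


def biconjugate_U1(s):
    """sup over phi in [-30, 10] of s*phi - U_1^*(phi), with U_1^*(phi) = exp(phi) - 1."""
    return golden_max(lambda phi: s * phi - (math.exp(phi) - 1), -30.0, 10.0)


for s in (0.2, 1.0, 3.0):
    print(s, U1(s), biconjugate_U1(s))
```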

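Notice that \(\mathfrak {F}\) satisfies the obvious monotonicity property

\[(\phi ,\psi )\in \mathfrak {F},\quad \phi '\le \phi ,\quad \psi '\le \psi \quad \Longrightarrow \quad (\phi ',\psi ')\in \mathfrak {F}. \tag{2.24}\]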

If F is finite in a neighborhood of \(+\infty \), then \(F^*\) is superlinear as \(\phi \uparrow \infty \). More precisely, its asymptotic behavior as \(\phi \rightarrow \pm \infty \) is related to the proper domain of F by

We will also use the duality formula

and we adopt the notation \(\phi _-\) and \(\phi _+\) to denote the negative and the positive part of a function \(\phi \), where \(\phi _-(x):=\max \{-\phi (x), 0\}\) and \(\phi _+(x):=\max \{\phi (x),0\}\), so that \(\phi =\phi _+-\phi _-\) with \(\phi _\pm \ge 0\).

Example 2.5

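(Power-like entropies) An important class of entropy functions is provided by the power-like functions \(U_p:[0,\infty )\rightarrow [0,\infty ]\) with \(p\in \mathbb {R}\) characterized by

\[U_p\in \mathrm {C}^\infty (0,\infty ),\qquad U_p(1)=U_p'(1)=0,\qquad U_p''(s)=s^{p-2},\qquad U_p(0)=\lim _{s\downarrow 0}U_p(s).\]

Equivalently, we have the explicit formulas

\[U_0(s)=s-1-\log s,\qquad U_1(s)=s\log s-s+1,\qquad U_p(s)=\frac{s^p-p(s-1)-1}{p(p-1)}\quad \text {if } p\ne 0,1,\]

with \(U_p(0)=1/p\) if \(p>0\) and \(U_p(0)=+\infty \) if \(p\le 0\).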

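Using the dual exponent \(q=p/(p-1)\), the corresponding Legendre conjugates read

\[U_0^*(\phi )=-\log (1-\phi )\ \ (\phi <1),\qquad U_1^*(\phi )=\mathrm {e}^\phi -1,\qquad U_p^*(\phi )=\frac{1}{p}\Big (\big (1+(p-1)\phi \big )^q-1\Big )\ \ \text {if } p\ne 0,1 \text { and } 1+(p-1)\phi >0,\]

while \(U_0^*(\phi )=+\infty \) for \(\phi \ge 1\); moreover, \(U_p^*(\phi )=-1/p\) for \(\phi \le 1/(1-p)\) if \(p>1\), and \(U_p^*(\phi )=+\infty \) for \(\phi >1/(1-p)\) if \(p<1\), with \(U_p^*(1/(1-p))=-1/p\) if \(p<0\) and \(U_p^*(1/(1-p))=+\infty \) if \(0<p<1\).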
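The conjugates of \(U_p\) can be double-checked numerically. The sketch below assumes the closed form \(U_p^*(\phi )=\frac{1}{p}\big ((1+(p-1)\phi )^q-1\big )\) in the region \(1+(p-1)\phi >0\) for \(p\ne 0,1\) (obtained by solving \(U_p'(s)=\phi \), i.e. \(s=(1+(p-1)\phi )^{1/(p-1)}\)), together with \(U_1^*(\phi )=\mathrm {e}^\phi -1\) and \(U_0^*(\phi )=-\log (1-\phi )\), and compares it with a brute-force evaluation of \(\sup _{s\ge 0}(s\phi -U_p(s))\). The brute force uses golden-section search, which is legitimate because \(s\mapsto s\phi -U_p(s)\) is concave; the bracket \([10^{-12},50]\) is an assumption that contains the maximizer for the test values. Function names are illustrative.

```python
import math


def U(p, s):
    """Power-like entropy U_p(s), s >= 0 (math.inf where U_p is +infinity)."""
    if p == 1:
        return 1.0 if s == 0 else s * math.log(s) - s + 1
    if p == 0:
        return math.inf if s == 0 else s - 1 - math.log(s)
    if s == 0:
        return 1 / p if p > 0 else math.inf
    return (s ** p - p * (s - 1) - 1) / (p * (p - 1))


def U_star_closed(p, phi):
    """Closed form of U_p^*(phi), valid where 1 + (p - 1) * phi > 0."""
    if p == 1:
        return math.exp(phi) - 1
    if p == 0:
        return -math.log(1 - phi)
    q = p / (p - 1)
    return ((1 + (p - 1) * phi) ** q - 1) / p


def U_star_numeric(p, phi, lo=1e-12, hi=50.0, iters=200):
    """sup over lo <= s <= hi of s*phi - U_p(s), by golden-section search."""
    f = lambda s: s * phi - U(p, s)
    g = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = f(c), f(d)
    for _ in range(iters):
        if fc < fd:              # maximizer lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = f(d)
        else:                    # maximizer lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = f(c)
    return f((a + b) / 2)


for p, phi in [(2, 0.5), (3, 1.0), (0.5, 1.0), (-1, 0.2), (1, 0.3), (0, 0.5)]:
    print(p, phi, U_star_closed(p, phi), U_star_numeric(p, phi))
```

For instance, \(p=2\) gives \(U_2(s)=(s-1)^2/2\) and \(U_2^*(\phi )=\phi +\phi ^2/2\) for \(\phi \ge -1\).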

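Reverse entropies. Let us now introduce the reverse density function \(R:[0,\infty )\rightarrow [0,\infty ]\) as

\[R(r):={\left\{ \begin{array}{ll} rF(1/r)&{}\text {if } r>0,\\ {F'_\infty }&{}\text {if } r=0.\end{array}\right. } \tag{2.28}\]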

It is not difficult to check that \(R\) is a proper, convex and lower semicontinuous function, with

so that \(R\in \Gamma (\mathbb {R}_+)\) and the map \(F\mapsto R\) is an involution on \(\Gamma (\mathbb {R}_+)\). A further remarkable involution property is enjoyed by the dual convex set \(\mathfrak {R}:=\{(\psi ,\phi )\in \mathbb {R}^2:R^*(\psi )+\phi \le 0\}\) defined as in (2.21): it is easy to check that

\[(\phi ,\psi )\in \mathfrak {F}\quad \Longleftrightarrow \quad (\psi ,\phi )\in \mathfrak {R}, \tag{2.30}\]

a relation that obviously holds for the interiors of \(\mathfrak {F}\) and \(\mathfrak {R}\) as well. It follows that the Legendre transforms of \(R\) and F are related by

and, recalling (2.22),

Both the above conditions characterize the interior of \(\mathfrak {F}\). As in (2.20) we have

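with \(\mathring{{\text {D}}}(R^*)=(-\infty ,F(0))\). A last useful identity involves the subdifferentials of F and \(R\): for every \(s,r>0\) with \(sr=1\) and every \(\phi ,\psi \in \mathbb {R}\) we have

\[\phi \in \partial F(s),\ \ \psi =-F^*(\phi )\quad \Longleftrightarrow \quad \psi \in \partial R(r),\ \ \phi =-R^*(\psi ). \tag{2.34}\]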

It is not difficult to check that the reverse entropy associated to \(U_p\) is \(U_{1-p}\).
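The identity \(rU_p(1/r)=U_{1-p}(r)\), including the value \(R(0)={F'_\infty }\) at \(r=0\), is easy to confirm numerically. In the sketch below (illustrative names; the recession constant \({F'_\infty }=1/(1-p)\) for \(p<1\) and \(+\infty \) for \(p\ge 1\) follows from \(U_p'(s)=(s^{p-1}-1)/(p-1)\)), the function `reverse_entropy` builds \(R\) from F according to (2.28).

```python
import math


def U(p, s):
    """Power-like entropy U_p(s), s >= 0 (math.inf where U_p is +infinity)."""
    if p == 1:
        return 1.0 if s == 0 else s * math.log(s) - s + 1
    if p == 0:
        return math.inf if s == 0 else s - 1 - math.log(s)
    if s == 0:
        return 1 / p if p > 0 else math.inf
    return (s ** p - p * (s - 1) - 1) / (p * (p - 1))


def reverse_entropy(F, F_slope_inf):
    """Return R with R(r) = r * F(1/r) for r > 0 and R(0) = F'_inf, cf. (2.28)."""
    def R(r):
        return F_slope_inf if r == 0 else r * F(1 / r)
    return R


def U_slope_inf(p):
    """Recession constant of U_p: 1/(1-p) if p < 1, +inf otherwise."""
    return 1 / (1 - p) if p < 1 else math.inf


for p in [2, 1, 0.5, 0, -1]:
    R = reverse_entropy(lambda s, p=p: U(p, s), U_slope_inf(p))
    print(p, [R(r) for r in (0, 0.3, 1, 2.5)], [U(1 - p, r) for r in (0, 0.3, 1, 2.5)])
```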

2.4 Relative entropy integral functionals

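For \(F\in \Gamma (\mathbb {R}_+)\) we consider the functional \(\mathscr {F}:\mathcal {M}(X)\times \mathcal {M}(X)\rightarrow [0,\infty ]\) defined by

\[\mathscr {F}(\gamma |\mu ):=\int _X F(\sigma )\,\mathrm {d}\mu +{F'_\infty }\,\gamma ^\perp (X),\]

where \(\gamma =\sigma \mu +\gamma ^\perp \) is the Lebesgue decomposition of \(\gamma \) w.r.t. \(\mu \), see Lemma 2.3. Notice that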

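and, whenever \(\eta _0\) is the null measure, we have

\[\mathscr {F}(\gamma |\eta _0)={F'_\infty }\,\gamma (X),\qquad \mathscr {F}(\eta _0|\mu )=F(0)\,\mu (X),\]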

where, as usual in measure theory, we adopt the convention \(0\cdot \infty =0\).
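For finitely supported measures the relative entropy reduces to a finite sum: atoms charged by \(\mu \) contribute \(\mu (\{x\})F(\sigma (x))\), atoms charged only by \(\gamma \) form \(\gamma ^\perp \) and contribute \({F'_\infty }\gamma (\{x\})\), and the convention \(0\cdot \infty =0\) applies to atoms carrying no mass. A minimal sketch, assuming a dictionary representation of measures (hypothetical format and function name), with the logarithmic entropy \(U_1\) (\({F'_\infty }=+\infty \)) and the Hellinger entropy \(U_{1/2}(s)=2(\sqrt{s}-1)^2\) (\({F'_\infty }=2\)) as examples:

```python
import math


def relative_entropy(F, F_slope_inf, gamma, mu):
    """Discrete F(gamma|mu) = sum of F(sigma) * mu + F'_inf * gamma_perp(X).

    gamma, mu: dicts point -> nonnegative mass (illustrative format).
    Points with no mass are skipped (convention 0 * inf = 0).
    """
    total = 0.0
    for x in set(gamma) | set(mu):
        g, m = gamma.get(x, 0.0), mu.get(x, 0.0)
        if m > 0:
            total += m * F(g / m)        # absolutely continuous part, sigma = g/m
        elif g > 0:
            total += F_slope_inf * g     # singular part gamma_perp
    return total


def U1(s):
    """Logarithmic entropy U_1(s) = s log s - s + 1, with U_1(0) = 1."""
    return 1.0 if s == 0 else s * math.log(s) - s + 1


mu = {"a": 1.0, "b": 1.0}
print(relative_entropy(U1, math.inf, {"a": 2.0}, mu))   # = 2*log(2), about 1.386
print(relative_entropy(U1, math.inf, {}, mu))           # 2.0 = F(0) * mu(X)
print(relative_entropy(U1, math.inf, {"c": 1.0}, mu))   # inf, since F'_inf = +inf
```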

Because of our applications in Sect. 3, our next lemma deals with Borel functions \(\varphi \in \mathrm {B}(X;{\bar{\mathbb {R}}})\) taking values in the extended real line \({\bar{\mathbb {R}}}:=\mathbb {R}\cup \{\pm \infty \}\). By \({\bar{\mathfrak {F}}}\) we denote the closure of \(\mathfrak {F}\) in \({\bar{\mathbb {R}}}\times {\bar{\mathbb {R}}}\), i.e.

and, symmetrically by (2.29) and (2.30),

In particular, we have

Lemma 2.6

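If \(\gamma ,\mu \in \mathcal {M}(X)\) and \( (\phi ,\psi ) \in \mathrm {B}(X;{\bar{\mathfrak {F}}})\) satisfy

\[\psi _-\in \mathrm {L}^1(X,\mu )\qquad (\text {resp. } \phi _-\in \mathrm {L}^1(X,\gamma )),\]

then \(\phi _+\in \mathrm {L}^1(X,\gamma )\) (resp. \(\psi _+\in \mathrm {L}^1(X,\mu )\)) and

\[\mathscr {F}(\gamma |\mu )\ge \int _X\psi \,\mathrm {d}\mu +\int _X\phi \,\mathrm {d}\gamma . \tag{2.41}\]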

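If \(\psi \in \mathrm {L}^1(X,\mu )\) or \(\phi \in \mathrm {L}^1(X,\gamma )\), then equality holds in (2.41) if and only if, for the Lebesgue decomposition given by Lemma 2.3, one has

\[\phi \in \partial F(\sigma ),\quad \psi =-F^*(\phi )\qquad (\mu +\gamma )\text {-a.e. in } A, \tag{2.42}\]

\[\psi =F(0)\quad \mu \text {-a.e. in } A_\mu ,\qquad \phi ={F'_\infty }\quad \gamma \text {-a.e. in } A_\gamma . \tag{2.43}\]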

Equation (2.42) can equivalently be formulated as \(\psi \in \partial R(\varrho )\) and \(\phi =-R^*(\psi )\).

Proof

Let us first show that in both cases the two integrals of (2.41) are well defined (possibly taking the value \(-\infty \)). If \(\psi _-\in \mathrm {L}^1(X,\mu )\) (in particular \(\psi >-\infty \) \(\mu \)-a.e.) with \((\phi ,\psi )\in {\bar{\mathfrak {F}}}\) we use the pointwise bound \(s\phi \le F(s)-\psi \) that yields \(s\phi _+\le (F(s)-\psi )_+\le F(s)+\psi _-\) obtaining \(\phi _+\in \mathrm {L}^1(X,\gamma )\), since \((\phi ,\psi )\in {\bar{\mathfrak {F}}}\) yields \(\phi _+\le {F'_\infty } \).

If \(\phi _-\in \mathrm {L}^1(X,\gamma )\) (and thus \(\phi >-\infty \) \(\gamma \)-a.e.) the analogous inequality \(\psi _+\le F(s)+s\phi _-\) yields \(\psi _+\in \mathrm {L}^1(X,\mu )\). Then, (2.41) follows from (2.21) and (2.40).

Once \(\psi \in \mathrm {L}^1(X,\mu )\) (or \(\phi \in \mathrm {L}^1(X,\gamma )\)), estimate (2.41) can be written as

and by (2.21) and (2.40) the equality case immediately yields that each of the three integrals of the previous formula vanishes. Since \((\phi ,\psi )\) is finite and thus lies in \(\mathfrak {F}\subset \mathbb {R}^2\) \((\mu +\gamma )\)-a.e. in A, the vanishing of the first integrand yields \(\psi =-F^*(\phi )\) and \(\phi \in \partial F(\sigma )\) by (2.17) for \((\mu +\gamma )\)-almost every point in A. The equivalence (2.34) provides the reversed identities \(\psi \in \partial R(\varrho )\), \(\phi =-R^*(\psi )\).

The relations in (2.43) follow easily by the vanishing of the last two integrals and the fact that \(\psi \) is finite \(\mu \)-a.e. and \(\phi \) is finite \(\gamma \)-a.e. \(\square \)
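A discrete check of (2.41) and of its equality case can be made with the logarithmic entropy \(F=U_1\), for which \(F^*(\phi )=\mathrm {e}^\phi -1\) and \(\partial F(\sigma )=\{\log \sigma \}\) for \(\sigma >0\). The sketch assumes that \(\gamma \) and \(\mu \) have the same finite support, so that A is the whole support and no singular parts occur; the helper names are illustrative. Random pairs with \(\psi \le -F^*(\phi )\) never exceed \(\mathscr {F}(\gamma |\mu )\), while the pair \(\phi =\log \sigma \), \(\psi =-F^*(\phi )=1-\sigma \) prescribed by (2.42) attains it.

```python
import math
import random


def U1(s):
    """Logarithmic entropy U_1(s) = s log s - s + 1, with U_1(0) = 1."""
    return 1.0 if s == 0 else s * math.log(s) - s + 1


def U1_star(phi):
    """Legendre conjugate of U_1: U_1^*(phi) = exp(phi) - 1."""
    return math.exp(phi) - 1


def primal(gamma, mu):
    """F(gamma|mu) for measures with the same finite support (so A = support)."""
    return sum(mu[x] * U1(gamma[x] / mu[x]) for x in mu)


def dual(phi, psi, gamma, mu):
    """Right-hand side of (2.41): int psi dmu + int phi dgamma."""
    return sum(psi[x] * mu[x] + phi[x] * gamma[x] for x in mu)


def max_dual_gap(gamma, mu, trials=1000, seed=0):
    """Largest dual - primal over random pairs satisfying psi <= -U1_star(phi)."""
    rng = random.Random(seed)
    worst = -math.inf
    for _ in range(trials):
        phi = {x: rng.uniform(-3, 3) for x in mu}
        psi = {x: -U1_star(phi[x]) - rng.uniform(0, 1) for x in mu}
        worst = max(worst, dual(phi, psi, gamma, mu) - primal(gamma, mu))
    return worst


mu = {"a": 1.0, "b": 2.0, "c": 0.5}
gamma = {"a": 0.5, "b": 3.0, "c": 0.5}
# Equality case: phi = U_1'(sigma) = log(sigma), psi = -U_1^*(phi) = 1 - sigma.
phi_opt = {x: math.log(gamma[x] / mu[x]) for x in mu}
psi_opt = {x: -U1_star(phi_opt[x]) for x in mu}
print(max_dual_gap(gamma, mu), dual(phi_opt, psi_opt, gamma, mu), primal(gamma, mu))
```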

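A simple application of (2.41) yields the following variant of Jensen’s inequality:

\[\mathscr {F}(\gamma |\mu )\ge \mu (X)\,F\Big (\frac{\gamma (X)}{\mu (X)}\Big )\qquad \text {if } \mu (X)>0. \tag{2.44}\]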

In order to prove it, we first choose arbitrary \(({\bar{\phi }},{\bar{\psi }})\in \mathfrak {F}\) and constant functions \(\phi (x)\equiv {\bar{\phi }}\), \(\psi (x)\equiv {\bar{\psi }}\) in (2.41), obtaining

\[\mathscr {F}(\gamma |\mu )\ge {\bar{\psi }}\,\mu (X)+{\bar{\phi }}\,\gamma (X);\]

we then take the supremum with respect to \(({\bar{\phi }},{\bar{\psi }})\in \mathfrak {F}\), recalling (2.23).
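Inequality (2.44) can be tested in the simplest setting: finitely many points, \(\mu \) with positive mass at each of them, and \(F=U_1\). The sketch below is illustrative (measures are encoded as lists of masses on common points, an assumption made for brevity) and evaluates the difference between the two sides over random measures; equality holds, in particular, when \(\gamma \) is a constant multiple of \(\mu \), since then \(\sigma \) is constant.

```python
import math
import random


def U1(s):
    """Logarithmic entropy U_1(s) = s log s - s + 1, with U_1(0) = 1."""
    return 1.0 if s == 0 else s * math.log(s) - s + 1


def jensen_gap(gamma, mu):
    """F(gamma|mu) - mu(X) F(gamma(X)/mu(X)) for masses on common points, mu > 0."""
    lhs = sum(m * U1(g / m) for g, m in zip(gamma, mu))
    rhs = sum(mu) * U1(sum(gamma) / sum(mu))
    return lhs - rhs


def min_jensen_gap(trials=1000, seed=0):
    """Smallest gap over random pairs of measures on five points."""
    rng = random.Random(seed)
    return min(
        jensen_gap([rng.uniform(0, 2) for _ in range(5)],
                   [rng.uniform(0.1, 2) for _ in range(5)])
        for _ in range(trials)
    )


print(min_jensen_gap(), jensen_gap([2.0, 4.0], [1.0, 2.0]))
```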

The next theorem gives a characterization of the relative entropy \(\mathscr {F}\), which is the main result of this section. Its proof is a careful adaptation of [2, Lemma 9.4.4] to the present more general setting, which includes the sublinear case when \({F'_\infty }<\infty \) and the lack of complete regularity of the space. The latter suggests working with lower semicontinuous functions instead of continuous ones. Whenever \(A\subset \mathbb {R}\), we denote by \(\mathrm {LSC}_s(X;A)\) the class of lower semicontinuous simple real functions

by omitting A when \(A=\mathbb {R}\); we introduce the notation \(\varphi =-\phi \) and the concave increasing function

\[F^{\circ }(\varphi ):=-F^*(-\varphi ),\qquad \varphi \in \mathbb {R};\]

by (2.18) and (2.19) the interior of the proper domain of \(F^{\circ }\) is \(\mathring{{\text {D}}}(F^{\circ })=(-{F'_\infty },+\infty )\) and \(\lim _{\varphi \downarrow -{F'_\infty }}F^{\circ }(\varphi )=-\infty \), \(\lim _{\varphi \uparrow +\infty }F^{\circ }(\varphi )=F(0)\).

Theorem 2.7

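(Duality and lower semicontinuity) For every \(\gamma ,\mu \in \mathcal {M}(X)\) we have

\[\mathscr {F}(\gamma |\mu )=\sup \Big \{\int _X\psi \,\mathrm {d}\mu +\int _X\phi \,\mathrm {d}\gamma :\ \phi ,\psi \in \mathrm {LSC}_s(X),\ (\phi (x),\psi (x))\in \mathring{\mathfrak {F}}\ \text {for every } x\in X\Big \}, \tag{2.46}\]

\[\mathscr {F}(\gamma |\mu )=\sup \Big \{\int _X\psi \,\mathrm {d}\mu -\int _X R^*(\psi )\,\mathrm {d}\gamma :\ \psi \in \mathrm {LSC}_s(X,\mathring{{\text {D}}}(R^*))\Big \}, \tag{2.47}\]

\[\mathscr {F}(\gamma |\mu )=\sup \Big \{\int _X F^{\circ }(\varphi )\,\mathrm {d}\mu -\int _X\varphi \,\mathrm {d}\gamma :\ \varphi \in \mathrm {LSC}_s(X,\mathring{{\text {D}}}(F^{\circ }))\Big \}. \tag{2.48}\]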

Moreover, the space \(\mathrm {LSC}_s(X)\) in the supremum of (2.46), can also be replaced by the space \(\mathrm {LSC}_b(X)\) of bounded l.s.c. functions or by the space \(\mathrm {B}_b(X)\) of bounded Borel functions and the constraint \( (\phi (x),\psi (x))\in \mathring{\mathfrak {F}}\) in (2.46) can also be relaxed to \( (\phi (x),\psi (x))\in \mathfrak {F}\) for every \(x\in X\). Similarly, the spaces \(\mathrm {LSC}_s(X,\mathring{{\text {D}}}(R^*))\) (resp. \(\mathrm {LSC}_s(X,\mathring{{\text {D}}}(F^{\circ })) \)) of (2.47) (resp. (2.48)) can be replaced by \(\mathrm {LSC}_b(X,{\text {D}}(R^*))\) or \(\mathrm {B}_b(X,{\text {D}}(R^*))\) (resp. \(\mathrm {LSC}_b(X,{\text {D}}(F^{\circ }))\) or \(\mathrm {B}_b(X,{\text {D}}(F^{\circ }))\)).

Remark 2.8

If \((X,\tau )\) is completely regular (recall (2.3)), then we can equivalently replace lower semicontinuous functions by continuous ones in (2.46), (2.47) and (2.48). E.g. in the case of (2.46) we have

whereas (2.47) and (2.48) become

In fact, considering first (2.46), by complete regularity it is possible to express every pair \(\phi ,\psi \) of bounded lower semicontinuous functions with values in \(\mathring{\mathfrak {F}}\) as the supremum of a directed family of continuous and bounded functions \((\phi _\alpha ,\psi _\alpha )_{\alpha \in \mathbb {A}}\) which still satisfy the constraint given by \(\mathring{\mathfrak {F}}\) due to (2.24). We can then apply the continuity (2.2) of the integrals with respect to the Radon measures \(\mu \) and \(\gamma \).

In order to replace l.s.c. functions with continuous ones in (2.47) we can approximate \(\psi \) by an increasing directed family of continuous functions \((\psi _\alpha )_{\alpha \in \mathbb {A}}\). By truncation, one can always assume that \(\max \psi \ge \sup \psi _\alpha \ge \inf \psi _\alpha \ge \min \psi \). Since \(R^*(\psi )\) is bounded, it is easy to check that also \(R^*(\psi _\alpha )\) is bounded and it is an increasing directed family converging to \(R^*(\psi )\). An analogous argument works for (2.49).

Proof

Since the statements are trivial when \(\mu =\gamma =\eta _0\) is the null measure, it is not restrictive to assume \((\mu +\gamma )(X)>0\). Let us prove (2.46): denoting by \(\mathscr {F}'\) its right-hand side, Lemma 2.6 yields \(\mathscr {F}\ge \mathscr {F}'\). In order to prove the opposite inequality we consider the Lebesgue decomposition given by Lemma 2.3: let \(A_\gamma \in \mathscr {B}(X)\) be a \(\mu \)-negligible Borel set where \(\gamma ^\perp \) is concentrated, let \({\tilde{A}}:=X\setminus A_\gamma =A\cup A_\mu \) and let \(\sigma :X\rightarrow [0,\infty )\) be a Borel density for \(\gamma \) w.r.t. \(\mu \). We consider a countable subset \((\phi _n,\psi _n)_{n=1}^\infty \) with \(\psi _1=\phi _1=0\), which is dense in \(\mathring{\mathfrak {F}}\), and an increasing sequence \({\bar{\phi }}_n\in (-\infty ,{F'_\infty })\) converging to \({F'_\infty }\), with \({\bar{\psi }}_n := -F^*({\bar{\phi }}_n)\). By (2.23) we have

Hence, Beppo Levi’s monotone convergence theorem (notice that \(F_N\ge F_1=0\)) implies \(\mathscr {F}(\gamma |\mu )=\lim _{N\uparrow \infty } \mathscr {F}_N'(\gamma |\mu )\), where

It is therefore sufficient to prove that

We fix \(N\in \mathbb {N}\), set \(\phi _{0}:={\bar{\phi }}_N\), \(\psi _{0}:={\bar{\psi }}_N\), and recursively define the Borel sets \(A_j\), for \(j=0,\ldots ,N\), with \(A_0:=A_\gamma \) and

Since \(F_1\le F_2\le \cdots \le F_N\), the sets \(A_i\), \(i=1,\ldots ,N\), form a Borel partition of \({\tilde{A}}\). As \(\mu \) and \(\gamma \) are Radon measures, for every \(\varepsilon >0\) we find disjoint compact sets \(K_j\subset A_j\) and disjoint open sets (by the Hausdorff separation property of X) \(G_j\supset K_j\) such that

where

Since \((\phi _n,\psi _n)\in \mathfrak {F}\) for every \(n\in \mathbb {N}\) and \(\mathring{\mathfrak {F}}\) satisfies the monotonicity property (2.24), we have \((\phi _{\mathrm{min}}^N,\psi _{\mathrm{min}}^N)\in \mathring{\mathfrak {F}}\); since the sets \(G_n\) are disjoint, the functions

take values in \(\mathring{\mathfrak {F}}\) and are lower semicontinuous thanks to the representation formula

Moreover, they satisfy

Since \(\varepsilon \) is arbitrary we obtain (2.50).

Equation (2.47) follows directly from (2.46) and the previous Lemma 2.6. In fact, denoting by \(\mathscr {F}''\) the right-hand side of (2.47), Lemma 2.6 shows that \(\mathscr {F}''(\gamma |\mu )\le \mathscr {F}(\gamma |\mu )=\mathscr {F}'(\gamma |\mu )\). On the other hand, if \(\phi ,\psi \in \mathrm {LSC}_s(X)\) with \((\phi ,\psi )\in \mathring{\mathfrak {F}}\) then \(\psi \) takes values in \(\mathring{{\text {D}}}(R^*)\) and \(-R^*(\psi )\ge \phi \). Hence, the map \(x\mapsto R^*(\psi (x))\) belongs to \(\mathrm {LSC}_s(X)\) since \(R^*\) is real valued and nondecreasing in the interior of its domain, and it is bounded from above by \(-\phi \). We thus get \(\mathscr {F}''(\gamma |\mu )\ge \mathscr {F}'(\gamma |\mu )\).

In order to show (2.48), we observe that for every \(\psi \in \mathrm {LSC}_s(X,\mathring{{\text {D}}}(R^*))\) and \(\varepsilon >0\) we can set \(\varphi :=R^*(\psi )+\varepsilon \in \mathrm {LSC}_s(X,\mathring{{\text {D}}}(F^{\circ }));\) since \((\psi ,-R^*(\psi )-\varepsilon )\in \mathring{\mathfrak {R}}\), (2.31) yields \(\psi < -F^*(-\varphi )=F^{\circ }(\varphi )\) so that \(\int F^{\circ }(\varphi )\,\mathrm {d}\mu -\int \varphi \,\mathrm {d}\gamma \ge \int \psi \,\mathrm {d}\mu -\int R^*(\psi )\,\mathrm {d}\gamma -\varepsilon \gamma (X)\). By construction and (2.30) we also have \((-\varphi ,F^{\circ }(\varphi ))\in \mathring{\mathfrak {F}}\) so that \(\int F^{\circ }(\varphi )\,\mathrm {d}\mu -\int \varphi \,\mathrm {d}\gamma \le \mathscr {F}(\gamma |\mu )\) by Lemma 2.6. Passing to the limit as \(\varepsilon \downarrow 0\) and recalling (2.47) we obtain (2.48).

When one replaces \(\mathrm {LSC}_s(X)\) with \(\mathrm {LSC}_b(X)\) or \(\mathrm {B}_b(X)\) in (2.46) and the constraint \((\phi (x),\psi (x))\in \mathring{\mathfrak {F}}\) with \((\phi (x),\psi (x))\in \mathfrak {F}\) (or even \({\bar{\mathfrak {F}}}\)), the supremum is taken on a larger set, so that the right-hand side of (2.46) cannot decrease. On the other hand, Lemma 2.6 shows that \(\mathscr {F}(\gamma |\mu )\) still provides an upper bound even if \(\phi ,\psi \) are in \(\mathrm {B}_b(X)\); thus duality also holds in this case. The same argument applies to (2.47) or (2.48). \(\square \)
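On a finite set X with the discrete topology every function is simple and lower semicontinuous, so the suprema in (2.47) and (2.48) decouple into one-dimensional concave maximizations at each point. The sketch below assumes \(F=U_1\), whose reverse entropy is \(R=U_0\), so that \(R^*(\psi )=-\log (1-\psi )\) for \(\psi <1\) and \(F^{\circ }(\varphi )=-F^*(-\varphi )=1-\mathrm {e}^{-\varphi }\). It maximizes each pointwise term by golden-section search over fixed brackets (an assumption: they contain the maximizers \(\psi =1-\sigma \) and \(\varphi =-\log \sigma \) for the data used) and recovers \(\mathscr {F}(\gamma |\mu )\) in both forms; \(\gamma \) and \(\mu \) are assumed to share the same support, and all names are illustrative.

```python
import math


def golden_max(f, lo, hi, iters=200):
    """Maximum value of a concave function f on [lo, hi] by golden-section search."""
    g = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = f(c), f(d)
    for _ in range(iters):
        if fc < fd:
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = f(d)
        else:
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = f(c)
    return f((a + b) / 2)


def U1(s):
    """Logarithmic entropy U_1(s) = s log s - s + 1, with U_1(0) = 1."""
    return 1.0 if s == 0 else s * math.log(s) - s + 1


def dual_247(gamma, mu):
    """Pointwise sup of psi*mu - R^*(psi)*gamma, with R^*(psi) = -log(1 - psi)."""
    return sum(golden_max(lambda psi: psi * m + g * math.log(1 - psi), -50.0, 1 - 1e-12)
               for g, m in zip(gamma, mu))


def dual_248(gamma, mu):
    """Pointwise sup of F_circ(v)*mu - v*gamma, with F_circ(v) = 1 - exp(-v)."""
    return sum(golden_max(lambda v: m * (1 - math.exp(-v)) - g * v, -20.0, 20.0)
               for g, m in zip(gamma, mu))


gamma, mu = [0.5, 3.0, 0.5], [1.0, 2.0, 0.5]
primal = sum(m * U1(g / m) for g, m in zip(gamma, mu))
print(primal, dual_247(gamma, mu), dual_248(gamma, mu))
```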

The following result provides lower semicontinuity of the relative entropy or of an increasing sequence of relative entropies.

Corollary 2.9

The functional \(\mathscr {F}\) is jointly convex and lower semicontinuous in \(\mathcal {M}(X)\times \mathcal {M}(X)\). More generally, if \(F\in \Gamma (\mathbb {R}_+)\) is the pointwise limit of an increasing net \((F_\lambda )_{\lambda \in \mathbb {L}}\subset \Gamma (\mathbb {R}_+)\) indexed by a directed set \(\mathbb {L}\) and \((\gamma ,\mu )\in \mathcal {M}(X)\times \mathcal {M}(X)\) is the narrow limit of a net \((\gamma _\lambda ,\mu _\lambda )_{\lambda \in \mathbb {L}}\subset \mathcal {M}(X)\times \mathcal {M}(X)\), then the corresponding entropy functionals \(\mathscr {F}_\lambda ,\mathscr {F}\) satisfy

\[\mathscr {F}(\gamma |\mu )\le \liminf _{\lambda \in \mathbb {L}}\mathscr {F}_\lambda (\gamma _\lambda |\mu _\lambda ).\]

Proof

The lower semicontinuity of \(\mathscr {F}\) follows by (2.46), which provides a representation of \(\mathscr {F}\) as the supremum of a family of lower semicontinuous functionals for the narrow topology. Using \(F_\alpha \le F_\lambda \) for \(\alpha \le \lambda \) in \(\mathbb {L}\), \(\alpha \) fixed, we have

by the above lower semicontinuity. Hence, it suffices to check that

This formula follows by the monotonicity of the convex sets \(\mathfrak {F}_\lambda \) (associated to \(F_\lambda \) by (2.21)), i.e. \(\mathfrak {F}_{\alpha }\subset \mathfrak {F}_{\lambda }\) if \(\alpha \le \lambda \) in \(\mathbb {L}\), and by the fact that \(\mathring{\mathfrak {F}}\subset \cup _{\lambda \in \mathbb {L}} \mathring{\mathfrak {F}_\lambda }\); in order to show the latter property, we argue by contradiction and we suppose that there exists \((\phi ,\psi )\in \mathring{\mathfrak {F}}\) which does not belong to \(\mathfrak {F}':=\cup _{\lambda \in \mathbb {L}} \mathring{\mathfrak {F}_\lambda } \). Notice that every \(\mathfrak {F}_\lambda \) has nonempty interior, so that \(\mathfrak {F}'\) is a nonempty convex and open set. We also notice that \(\phi <{F'_\infty }\) and \(\lim _{\lambda \in \mathbb {L}}{(F_\lambda )'_\infty }={F'_\infty }\) so that there exists \(\alpha \in \mathbb {L}\) with \(F^*_\alpha (\phi )<\infty \); thus there exists \(-{\bar{\psi }}>\psi \) such that \((\phi ,{\bar{\psi }})\in \mathfrak {F}'\). Applying the geometric form of the Hahn-Banach theorem, we can find a non vertical line separating \((\phi ,\psi )\) from \(\mathfrak {F}'\), i.e. there exists \(\theta \in \mathbb {R}\) such that

Recalling that \(\overline{\mathring{\mathfrak {F}_\lambda }}=\mathfrak {F}_\lambda \) we deduce

taking the supremum w.r.t. \(\phi '\) we obtain

and passing to the limit w.r.t. \(\lambda \in \mathbb {L}\) we get

which contradicts the fact that \((\phi ,\psi )\in \mathop {\mathrm{int}}\nolimits \mathfrak {F}.\)

Thus for every pair of simple and lower semicontinuous functions \((\phi ,\psi )\) taking values in \(\mathop {\mathrm{int}}\nolimits \mathfrak {F}\) we have \((\phi (x),\psi (x))\in \mathop {\mathrm{int}}\nolimits \mathfrak {F}_\alpha \) for some \(\alpha \in \mathbb {L}\) and every \(x\in X\) (a single \(\alpha \) suffices, since \(\phi ,\psi \) take finitely many values and the sets \(\mathfrak {F}_\lambda \) increase), so that

Since \(\phi ,\psi \) are arbitrary we conclude applying the duality formula (2.46). \(\square \)

Next, we provide a compactness result for the sublevels of the relative entropy, which will be useful in Sect. 3.4 (see Theorem 3.3 and Lemma 3.9).

Proposition 2.10

(Boundedness and tightness) If  is bounded and \({F'_\infty } >0\), then for every \(C\ge 0\) the sublevels of \(\mathscr {F}\),

is bounded and \({F'_\infty } >0\), then for every \(C\ge 0\) the sublevels of \(\mathscr {F}\),

are bounded. If moreover  is equally tight and \({F'_\infty } =+\infty \), then the sets \(\Xi _C\) are equally tight.


Proof

Concerning the properties of \(\Xi _C\), we will use the inequality

This follows easily by considering the decomposition \( \gamma =\sigma \mu +\gamma ^\perp \) and by integrating over B, with respect to \(\mu \), the Young inequality \(\lambda \sigma \le F(\sigma )+F^*(\lambda )\), \(\lambda >0\); notice that

Choosing first \(B=X\) in (2.56) and an arbitrary \(\lambda \) in \((0,{F'_\infty } )\) (notice that \(F^*(\lambda )<\infty \) thanks to (2.18)), we immediately get a uniform bound on \(\gamma (X)\) for every \(\gamma \in \Xi _C\), since the total mass \(\mu (X)\) of the reference measures is uniformly bounded by assumption.

In order to prove the tightness when \({F'_\infty } =+\infty \), for a given \(\varepsilon >0\) we choose \(\lambda >0\) with \(\lambda \ge 2C/\varepsilon \) and \(\eta >0\) so small that \(\eta F^*(\lambda )/\lambda \le \varepsilon /2\), and then a compact set \(K\subset X\) such that \(\mu (X\setminus K)\le \eta \) for every  . Then (2.56) with \(B=X\setminus K\) shows that \(\gamma (X\setminus K)\le \varepsilon \) for every \(\gamma \in \Xi _C\). \(\square \)

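The inequality behind this proof can be checked numerically on a finite space. The sketch below is only an illustration under stated assumptions: X is a finite set, \(\gamma \ll \mu \), and \(F=U_1\), for which \(F^*(\lambda )=e^\lambda -1\) and \({F'_\infty }=+\infty \). Integrating the Young inequality over B and using \(F\ge 0\) gives \(\lambda \gamma (B)\le \mathscr {F}(\gamma |\mu )+F^*(\lambda )\mu (B)\), which is consistent with the way (2.56) is used above. The helper names (`young_bound`, `relative_entropy`) are hypothetical.

```python
import itertools

import numpy as np


def U1(s):
    """Entropy U_1(s) = s log s - s + 1, with the convention 0 log 0 = 0."""
    s = np.asarray(s, dtype=float)
    out = 1.0 - s
    pos = s > 0
    out[pos] += s[pos] * np.log(s[pos])
    return out


def U1_conjugate(lam):
    """Legendre transform of U_1: sup_s (lam * s - U_1(s)) = exp(lam) - 1."""
    return np.expm1(lam)


def relative_entropy(gamma, mu):
    """Discrete F(gamma|mu) = sum_x mu(x) U_1(gamma(x)/mu(x)); assumes mu > 0 everywhere."""
    return float(np.sum(mu * U1(gamma / mu)))


def young_bound(gamma, mu, B, lam):
    """Return (lam * gamma(B), F(gamma|mu) + F^*(lam) * mu(B)) for a boolean mask B."""
    lhs = lam * gamma[B].sum()
    rhs = relative_entropy(gamma, mu) + U1_conjugate(lam) * mu[B].sum()
    return lhs, rhs


# Usage: verify the bound on every subset B of an 8-point space.
rng = np.random.default_rng(0)
mu = rng.uniform(0.1, 1.0, size=8)
gamma = rng.uniform(0.0, 2.0, size=8)
for lam in (0.1, 0.5, 1.0, 3.0):
    for bits in itertools.product([False, True], repeat=8):
        lhs, rhs = young_bound(gamma, mu, np.array(bits), lam)
        assert lhs <= rhs + 1e-12
```

Since \(U_1\ge 0\), dropping the integral over \(X\setminus B\) only weakens the right-hand side, which is why the same bound holds for every subset B.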

We conclude this section with a useful representation of \(\mathscr {F}\) in terms of the reverse entropy \(R\) (2.28) and the corresponding functional \(\mathscr {R}\). We will use the result in Sect. 3.5 for the reverse formulation of the primal entropy-transport problem.

Lemma 2.11

For every  we define


where \(\mu =\varrho \gamma +\mu ^\perp \) is the reverse Lebesgue decomposition given by Lemma 2.3. Then

Proof

It is an immediate consequence of the dual characterization in (2.46) and the equivalence in (2.30). \(\square \)
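On finite spaces the identity of Lemma 2.11 reduces to the pointwise relation \(\gamma \,R(\mu /\gamma )=\mu \,F(\gamma /\mu )\) on the common support, together with a bookkeeping of the singular parts. The sketch below assumes the conventions \(R(r)=rF(1/r)\) for \(r>0\), \(R(0)={F'_\infty }\) and \({R'_\infty }=F(0)\) for the reverse entropy (2.28); the function names are illustrative only.

```python
import math


def entropy_F(gamma, mu, F, F_rec):
    """Discrete F(gamma|mu): sum of mu F(gamma/mu) on {mu > 0} plus F'_inf * gamma^perp(X)."""
    total = 0.0
    for g, m in zip(gamma, mu):
        if m > 0:
            total += m * F(g / m)
        elif g > 0:
            total += F_rec * g  # singular part of gamma w.r.t. mu
    return total


def reverse_entropy_R(mu, gamma, F, F_rec):
    """Discrete R(mu|gamma) with R(r) = r F(1/r), R(0) = F'_inf and R'_inf = F(0)."""
    def R(r):
        return F_rec if r == 0 else r * F(1.0 / r)

    total = 0.0
    for g, m in zip(gamma, mu):
        if g > 0:
            total += g * R(m / g)
        elif m > 0:
            total += F(0.0) * m  # singular part mu^perp of mu w.r.t. gamma
    return total


def F_abs(s):
    """Nonsmooth entropy |s - 1|, with F(0) = 1 and recession slope F'_inf = 1."""
    return abs(s - 1.0)


# Usage: both measures have a part that is singular w.r.t. the other one.
gamma = [0.5, 0.0, 2.0, 0.7]
mu = [1.0, 0.3, 0.5, 0.0]
lhs = entropy_F(gamma, mu, F_abs, 1.0)
rhs = reverse_entropy_R(mu, gamma, F_abs, 1.0)
print(lhs, rhs)  # the two sides should agree up to rounding
```

For a superlinear entropy one passes `F_rec=math.inf`; both sides then become infinite as soon as \(\gamma \) charges a point not seen by \(\mu \).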

3 Optimal Entropy-Transport problems

The major object of Part I is the Entropy-Transport functional, where two measures  and  are given, and one has to find a transport plan  that minimizes the functional.

3.1 The basic setting

Let us fix the basic set of data for Entropy-Transport problems. We are given

-

two Hausdorff topological spaces \((X_i,\tau _i)\), \(i=1,2\), which define the Cartesian product \({\varvec{X}}:=X_1\times X_2\) and the canonical projections \(\pi ^i:{\varvec{X}}\rightarrow X_i\);

-

two entropy functions \( F_i\in \Gamma (\mathbb {R}_+)\), thus satisfying (2.13);

-

a proper, lower semicontinuous cost function \(\mathsf{c}:{\varvec{X}}\rightarrow [0,\infty ]\);

-

a pair of nonnegative Radon measures  with finite mass \({m_{i}}:=\mu _i(X_i)\), satisfying the compatibility condition $$\begin{aligned} J:=\Big ({m_{1}}\, {\text {D}}(F_1)\Big )\cap \Big ({m_{2}}\, {\text {D}}(F_2)\Big )\ne \emptyset . \end{aligned}$$(3.1)

We will often assume that the above basic setting is also coercive: this means that at least one of the following two coercivity conditions holds:

For every transport plan  we define the marginals \(\gamma _i:=\pi ^i_\sharp {\varvec{\gamma }}\) and, as in (2.35), we define the relative entropies


With this, we introduce the Entropy-Transport functional as

possibly taking the value \(+\infty \). Our basic setting is feasible if the functional \(\mathscr {E}\) is not identically \(+\infty \), i.e. there exists at least one plan \({\varvec{\gamma }}\) with \(\mathscr {E}({\varvec{\gamma }}|\mu _1,\mu _2)<\infty \).
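For measures concentrated on finitely many points, the relative entropies and the functional \(\mathscr {E}\) can be evaluated directly, the recession slope \({(F_i)'_\infty }\) charging the part of \(\gamma _i\) that is singular with respect to \(\mu _i\). The sketch below assumes finite spaces \(X_1\), \(X_2\), plans given as nonnegative matrices, and the convention \(0\cdot \infty =0\) in the cost term; the function names are hypothetical. As a usage example it takes the choice \(F_i(s)=|s-1|\), \(\mathsf{c}=\mathsf{d}\) of Example E.9 on three collinear points.

```python
import numpy as np


def relative_entropy(gamma_i, mu_i, F, F_rec):
    """F(gamma_i|mu_i) = sum_{mu_i>0} mu_i F(gamma_i/mu_i) + F'_inf * gamma_i^perp(X_i)."""
    total = 0.0
    for g, m in zip(gamma_i, mu_i):
        if m > 0:
            total += m * F(g / m)
        elif g > 0:
            total += F_rec * g
    return total


def entropy_transport(plan, mu1, mu2, cost, F1, F1_rec, F2, F2_rec):
    """E(plan|mu1, mu2) = F1(gamma_1|mu1) + F2(gamma_2|mu2) + sum_ij c_ij plan_ij."""
    plan = np.asarray(plan, dtype=float)
    cost = np.asarray(cost, dtype=float)
    gamma1 = plan.sum(axis=1)  # first marginal pi^1_# plan
    gamma2 = plan.sum(axis=0)  # second marginal pi^2_# plan
    support = plan > 0         # convention 0 * inf = 0 off the support of the plan
    transport = float(np.sum(cost[support] * plan[support]))
    return (relative_entropy(gamma1, mu1, F1, F1_rec)
            + relative_entropy(gamma2, mu2, F2, F2_rec)
            + transport)


def V(s):
    """Entropy V(s) = |s - 1| of Example E.9; its recession slope is 1."""
    return abs(s - 1.0)


# Usage: three points 0, 1, 2 on the real line with the distance cost.
points = np.array([0.0, 1.0, 2.0])
cost = np.abs(points[:, None] - points[None, :])
mu1 = np.array([1.0, 0.0, 0.0])
mu2 = np.array([0.0, 0.0, 2.0])
null_plan = np.zeros((3, 3))
move_one = np.zeros((3, 3))
move_one[0, 2] = 1.0  # transport the unit mass of mu1 from 0 to 2
print(entropy_transport(null_plan, mu1, mu2, cost, V, 1.0, V, 1.0))
print(entropy_transport(move_one, mu1, mu2, cost, V, 1.0, V, 1.0))
```

Passing the recession slopes explicitly keeps the evaluator usable both for superlinear entropies (`F_rec=np.inf`) and for entropies with linear growth such as V.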

3.2 The primal formulation of the optimal entropy-transport problem

In the basic setting described in the previous Sect. 3.1, we want to investigate the following problem.

Problem 3.1

(Entropy-Transport minimization) Given  find  minimizing \(\mathscr {E}( {\varvec{\gamma }}|{\mu _1,\mu _2})\), i.e.

We denote by  the collection of all the minimizers of (3.5).


Remark 3.2

(Feasibility conditions) Problem 3.1 is feasible if there exists at least one plan \({\varvec{\gamma }}\) with \(\mathscr {E}({\varvec{\gamma }}|\mu _1,\mu _2)<\infty \). Notice that this is always the case when

since among the competitors one can choose the null plan \({\varvec{\eta }}_0\), so that

More generally, thanks to (3.1) a sufficient condition for feasibility in the nondegenerate case \({m_{1}}{m_{2}}\ne 0\) is that there exist functions \(B_1\) and \(B_2\) with

In fact, the plans

are Radon [44, Thm. 17, p. 63], have finite cost \(\mathscr {E}({\varvec{\gamma }}|\mu _1,\mu _2)<\infty \) and provide the estimate

Notice that (3.1) is also necessary for feasibility: in fact (2.44) yields

Thus, whenever \(\mathscr {E}({\varvec{\gamma }}|\mu _1,\mu _2)<\infty \), we have

and therefore

We will often strengthen (3.1) by assuming that at least one of the domains of the entropies \(F_i\) has nonempty interior, containing a point of the other domain:

This condition is surely satisfied if J has nonempty interior, i.e. \(\max (m_1 s_1^-, m_2s_2^-)< \min (m_1 s_1^+,m_2 s_2^+)\), where \(s_i^-:=\inf {\text {D}}(F_i)\) and \(s_i^+:=\sup {\text {D}}(F_i)\).
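The compatibility set J of (3.1) and the interior condition above only involve the endpoints \(s_i^\pm \) and the masses \(m_i\), so they can be checked mechanically. The sketch below makes a simplifying assumption: each domain \({\text {D}}(F_i)\) is treated as the closed interval \([s_i^-,s_i^+]\) (open endpoints are not tracked), and \(m_i{\text {D}}(F_i)=\{0\}\) when \(m_i=0\). The function names are hypothetical.

```python
import math


def _scale(m, s):
    """m * s with the convention 0 * (+inf) = 0, so that 0 * D(F) = {0}."""
    return 0.0 if m == 0 else m * s


def compatibility_interval(m1, dom1, m2, dom2):
    """Return J = (m1 D(F1)) intersected with (m2 D(F2)) as (lo, hi), or None if empty.

    Assumption: each domain D(F_i) is the closed interval [s_i^-, s_i^+],
    passed as a pair (lo, hi) with hi possibly equal to math.inf.
    """
    lo = max(_scale(m1, dom1[0]), _scale(m2, dom2[0]))
    hi = min(_scale(m1, dom1[1]), _scale(m2, dom2[1]))
    return (lo, hi) if lo <= hi else None


def has_nonempty_interior(J):
    """Check max(m1 s1^-, m2 s2^-) < min(m1 s1^+, m2 s2^+)."""
    return J is not None and J[0] < J[1]


# Pure transport: D(F_i) = {1}, so J is nonempty exactly when m1 == m2.
print(compatibility_interval(2.0, (1.0, 1.0), 2.0, (1.0, 1.0)))
print(compatibility_interval(2.0, (1.0, 1.0), 3.0, (1.0, 1.0)))
# Logarithmic entropy U_1 with D(U_1) = [0, inf): J has nonempty interior.
print(has_nonempty_interior(
    compatibility_interval(2.0, (0.0, math.inf), 3.0, (0.0, math.inf))))
```

In the pure transport case J reduces to the single point \(m_1=m_2\), so the interior condition fails, in line with the degenerate situation in which (3.12) does not hold.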

We also observe that whenever \(\mu _i(X_i)=0\), the null plan \({\varvec{\gamma }}:={\varvec{\eta }}_0\) provides the trivial solution to Problem 3.1. Another trivial case occurs when \(F_i(0)<\infty \) and the \(F_i\) are nondecreasing in \({\text {D}}(F_i)\) (in particular when \(F_i(0)=0\)): then the null plan is clearly a minimizer and  .


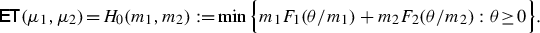

3.3 Examples

Let us consider a few particular cases:

-

E.1

Costless transport: Consider the case \(\mathsf{c}\equiv 0\). Since the \(F_i\) are convex, Jensen's inequality shows that in this case the minimum is attained when the marginals \(\gamma _i\) have constant densities \(\sigma _i\) with respect to \(\mu _i\). Setting \(\sigma _i\equiv \theta /m_i\) in order to have \(m_1\sigma _1=m_2\sigma _2\), we thus have

(3.13)

-

E.2

Entropy-potential problems: If \(\mu _2\equiv \eta _0\) is the null measure and, just to fix ideas, \(X_i\) are Polish spaces with \(X_2\) compact and \(\mathsf{c}\) is real valued, then setting \(V(x_1):=\min _{x_2\in X_2}\mathsf{c}(x_1,x_2)\) we get

(3.14)

In fact, for every

we have \(\mathscr {F}_2(\gamma _2|\eta _0)={(F_2)'_\infty }\gamma _2(X_2)= {(F_2)'_\infty }\gamma _1(X_1)\); moreover, by applying the von Neumann measurable selection theorem [44, Thm. 13, p. 127], it is not difficult to check that


-

E.3

Pure transport problems: We choose \(F_i(r)=\mathrm {I}_1(r)= {\left\{ \begin{array}{ll} 0&{}\text {if }r=1\\ +\infty &{}\text {otherwise}. \end{array}\right. }\)

In this case any feasible plan \({\varvec{\gamma }}\) should have \(\mu _1\) and \(\mu _2\) as marginals and the functional just reduces to the pure transport part

$$\begin{aligned} \mathsf {T}(\mu _1,\mu _2)=\min \Big \{\int _{X_1\times X_2}\mathsf{c}\,\mathrm {d}{\varvec{\gamma }}:\quad \pi ^i_\sharp {\varvec{\gamma }}=\mu _i\Big \}. \end{aligned}$$(3.15)

As a necessary condition for feasibility we get \(\mu _1(X_1)=\mu _2(X_2)\).

A situation equivalent to the optimal transport case occurs when (3.12) does not hold. In this case, the set J defined by (3.1) contains only one point \(\theta \) which separates \(m_1{\text {D}}(F_1)\) and \(m_2{\text {D}}(F_2)\):

$$\begin{aligned} \theta =m_1s_1^+=m_2s_2^-\quad \text {or }\quad \theta =m_1 s_1^-=m_2s_2^+. \end{aligned}$$(3.16)

It is not difficult to check that in this case

(3.17)

-

E.4

Optimal transport with density constraints: We realize density constraints by introducing characteristic functions of intervals \([a_i,b_i]\), viz. \(F_i(r):=\mathrm {I}_{[a_i,b_i]}(r)\), \(a_i\le 1\le b_i\). E.g. when \(a_i=1\), \(b_i=+\infty \) we have

(3.18)

For \([a_1,b_1]=[0,1]\) and \([a_2,b_2]=[1,\infty ]\) we get

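(3.19)

whose feasibility requires \(\mu _1(X_1)\ge \mu _2(X_2)\), since the constraints force \(\gamma _1\le \mu _1\) and \(\gamma _2\ge \mu _2\), while \(\gamma _1(X_1)=\gamma _2(X_2)\).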

-

E.5

Pure entropy problems: These problems arise if \(X_1=X_2=X\) and transport is forbidden, i.e. \({(F_i)'_\infty }=+\infty \), \(\mathsf{c}(x_1,x_2)= {\left\{ \begin{array}{ll} 0&{}\text {if }x_1=x_2\\ +\infty &{}\text {otherwise.} \end{array}\right. } \)

In this case the marginals of \({\varvec{\gamma }}\) coincide: we denote them by \(\gamma \). Since the \(F_i\) are superlinear, we have \(\gamma \ll \mu _i\); hence, for any measure \(\mu \) such that \(\mu _i\ll \mu \) (e.g. \(\mu =\mu _1+\mu _2\)), we can write \(\gamma =\vartheta \mu \) and \(\mu _i=\vartheta _i\mu \), and \(\vartheta (x)=0\) for \(\mu \)-a.e. x where \(\vartheta _1(x)\vartheta _2(x)=0\). Thus \(\sigma _i=\vartheta /\vartheta _i\) is well defined and we have

$$\begin{aligned} \mathscr {E}({\varvec{\gamma }}|\mu _1,\mu _2)=\int _{X} \Big (\vartheta _1 F_1(\vartheta /\vartheta _1) +\vartheta _2 F_2(\vartheta /\vartheta _2)\Big )\,\mathrm {d}\mu , \end{aligned}$$(3.20)

with the convention that \(\vartheta _i F_i(\vartheta /\vartheta _i)=0\) if \(\vartheta =\vartheta _i=0\). Since we expressed everything in terms of \(\mu \), by recalling the definition of the function \(H_0\) given in (3.13) we get

(3.21)

In the Hellinger case \(F_i(s)= U_1(s)=s\log s-s+1\) a simple calculation yields

(3.22)

In the Jensen–Shannon case, where \(F_i(s)=U_0(s)=s-1-\log s\), we obtain

$$\begin{aligned} H_0(\theta _1,\theta _2)=\theta _1\log \Big (\frac{2\theta _1}{\theta _1+\theta _2} \Big )+\theta _2 \log \Big (\frac{2\theta _2}{\theta _1+\theta _2}\Big ). \end{aligned}$$

Two other interesting examples are provided by the quadratic case \(F_i(s)=\frac{1}{2}(s-1)^2\) and by the nonsmooth “piecewise affine” case \(F_i(s)=|s-1|\), for which we obtain

$$\begin{aligned} H_0(\theta _1,\theta _2)&= \frac{1}{2(\theta _1+\theta _2)}(\theta _1-\theta _2)^2,\quad \text {and}\\ H_0(\theta _1,\theta _2)&= |\theta _1-\theta _2|,\quad \text {respectively}. \end{aligned}$$

-

E.6

Regular Entropy-Transport problems: These problems correspond to the choice of a pair of differentiable entropies \(F_i\) with \({\text {D}}(F_i)\supset (0,\infty )\), as in the case of the power-like entropies \(U_p\) defined in (2.26). When they vanish (and thus have a minimum) at \(s=1\), the Entropic Optimal Transportation can be considered as a smooth relaxation of the Optimal Transport case E.3.

-

E.7

Squared Hellinger–Kantorovich distances: For a metric space \((X,\mathsf{d})\), set \(X_1=X_2=X\) and let \(\tau \) be induced by \(\mathsf{d}\). Further, set \(F_1(s)=F_2(s):=U_1(s)=s\log s-s+1\) and

$$\begin{aligned} \mathsf{c}(x_1,x_2)&:= -\log \Big (\cos ^2\big (\mathsf{d}(x_1,x_2)\wedge \pi /2\big )\Big ) \quad \text {or simply}\\ \mathsf{c}(x_1,x_2)&:= \mathsf{d}^2(x_1,x_2). \end{aligned}$$

This case will be thoroughly studied in the second part of the present paper, see Sect. 6.

-

E.8

Marginal Entropy-Transport problems: In this case one of the two marginals of \({\varvec{\gamma }}\) is fixed, say \(\gamma _1\), by choosing \(F_1(r):=\mathrm {I}_1(r)\). Thus the functional minimizes the sum of the transport cost and the relative entropy of the second marginal \(\mathscr {F}_2(\gamma _2|\mu _2)\) with respect to a reference measure \(\mu _2\), namely

where \(\mathsf{T}\) has been defined by (3.15). This is the typical problem one has to solve at each iteration step of the Minimizing Movement scheme [2], when \(\mathsf{T}\) is a (power of a) transport distance induced by \(\mathsf{c}\), as in the Jordan–Kinderlehrer–Otto approach [24].

-

E.9

The Piccoli-Rossi “generalized Wasserstein distance” [38, 39]: For a metric space \((X,\mathsf{d})\), set \(X_1=X_2=X\), let \(\tau \) be induced by \(\mathsf{d}\), and consider \(F_1(s)=F_2(s):=V(s)=|s-1|\) with \(\mathsf{c}(x_1,x_2):=\mathsf{d}(x_1,x_2)\). This example can be considered as the natural extension of the \(L^1\)-Kantorovich–Wasserstein distance (corresponding to (3.15) with the distance cost) to measures with different masses, due to its dual representation in terms of the flat metric, see (7.47).

-

E.10

The discrete case. Let \(\mu _1=\sum _{i=1}^m\alpha _i\delta _{x_i}\), \(\mu _2=\sum _{j=1}^N \beta _j\delta _{y_j}\) with \(\alpha _i,\beta _j>0\), and let \(\mathsf{c}_{i,j}:=\mathsf{c}(x_i,y_j)\). In the case of superlinear entropy functions \(F_i\), the Entropy-Transport problem for this discrete model consists in finding coefficients \(\gamma _{i,j}\ge 0\) which minimize

$$\begin{aligned} \mathscr {E}(\gamma _{i,j}|\alpha _i,\beta _j):= \sum _{i}\alpha _i F_1\Big (\frac{\sum _j \gamma _{i,j}}{\alpha _i}\Big )+ \sum _{j}\beta _j F_2\Big (\frac{\sum _i \gamma _{i,j}}{\beta _j}\Big )+\sum _{i,j} \mathsf{c}_{i,j}\gamma _{i,j}. \end{aligned}$$(3.23)
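A small numerical sketch of the discrete problem (3.23), assuming superlinear, differentiable entropies and using SciPy's general-purpose bound-constrained L-BFGS-B minimizer (a convenient choice for illustration, not an algorithm proposed in the text; SciPy is an added dependency). With \(\mathsf{c}\equiv 0\) it should reproduce the costless value of E.1, i.e. \(\min _{\theta \ge 0}\big (m_1F_1(\theta /m_1)+m_2F_2(\theta /m_2)\big )\): for \(F_i=U_1\) a direct computation of this minimum gives \((\sqrt{m_1}-\sqrt{m_2})^2\), attained at \(\theta =\sqrt{m_1m_2}\), and for the quadratic entropy it gives \((m_1-m_2)^2/(2(m_1+m_2))\), in agreement with E.5. The function names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import xlogy


def U1(s):
    """Logarithmic entropy U_1(s) = s log s - s + 1 (xlogy gives 0 log 0 = 0)."""
    return xlogy(s, s) - s + 1.0


def dU1(s):
    """U_1'(s) = log s, clipped so that rows or columns with zero mass stay finite."""
    return np.log(np.maximum(s, 1e-300))


def Q(s):
    """Quadratic entropy (s - 1)^2 / 2."""
    return 0.5 * (s - 1.0) ** 2


def dQ(s):
    return s - 1.0


def discrete_entropy_transport(alpha, beta, cost, F1, dF1, F2, dF2):
    """Minimize (3.23) over plans gamma_ij >= 0; returns (value, plan)."""
    alpha = np.asarray(alpha, dtype=float)
    beta = np.asarray(beta, dtype=float)
    cost = np.asarray(cost, dtype=float)
    M, N = cost.shape

    def energy_and_grad(x):
        g = x.reshape(M, N)
        r = g.sum(axis=1) / alpha  # density of the first marginal w.r.t. mu_1
        c = g.sum(axis=0) / beta   # density of the second marginal w.r.t. mu_2
        value = alpha @ F1(r) + beta @ F2(c) + np.sum(cost * g)
        # d/d gamma_ij of alpha_i F1(r_i) is F1'(r_i); same for the columns
        grad = dF1(r)[:, None] + dF2(c)[None, :] + cost
        return value, grad.ravel()

    x0 = np.outer(alpha, beta).ravel() / max(alpha.sum(), beta.sum())
    res = minimize(energy_and_grad, x0, jac=True, method="L-BFGS-B",
                   bounds=[(0.0, None)] * (M * N),
                   options={"ftol": 1e-15, "gtol": 1e-10, "maxiter": 5000})
    return float(res.fun), res.x.reshape(M, N)


# Usage: costless case with m_1 = 3 and m_2 = 1.
alpha = [1.0, 2.0]
beta = [0.5, 0.25, 0.25]
value, plan = discrete_entropy_transport(alpha, beta, np.zeros((2, 3)),
                                         U1, dU1, U1, dU1)
print(value, (np.sqrt(3.0) - 1.0) ** 2)  # should agree to high accuracy
```

The optimal plan is not unique here (any plan with the optimal marginals works), which does not affect the computed value.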

3.4 Existence of solutions to the primal problem

The next theorem provides a first general existence result for Problem 3.1 in the basic coercive setting of Sect. 3.1.

Theorem 3.3

(Existence of minimizers) Let us assume that Problem 3.1 is feasible (see Remark 3.2) and coercive, namely that at least one of the following conditions holds:

-

(i)

the entropy functions \(F_1\) and \(F_2\) are superlinear, i.e. \({(F_1)'_\infty }={(F_2)'_\infty }=+\infty \);

-

(ii)

\(\mathsf{c}\) has compact sublevels in \({\varvec{X}}\) and \({(F_1)'_\infty }+{(F_2)'_\infty }+\inf \mathsf{c}>0\).

Then Problem 3.1 admits at least one optimal solution. In this case  is a compact convex set of  .