Abstract

Spectral computations of infinite-dimensional operators are notoriously difficult, yet ubiquitous in the sciences. Indeed, despite more than half a century of research, it is still unknown which classes of operators allow for the computation of spectra and eigenvectors with convergence rates and error control. Recent progress in classifying the difficulty of spectral problems into complexity hierarchies has revealed that the most difficult spectral problems are so hard that one needs three limits in the computation, and no convergence rates nor error control is possible. This begs the question: which classes of operators allow for computations with convergence rates and error control? In this paper, we address this basic question, and the algorithm used is an infinite-dimensional version of the QR algorithm. Indeed, we generalise the QR algorithm to infinite-dimensional operators. We prove that not only is the algorithm executable on a finite machine, but one can also recover the extremal parts of the spectrum and corresponding eigenvectors, with convergence rates and error control. This allows for new classification results in the hierarchy of computational problems that existing algorithms have not been able to capture. The algorithm and convergence theorems are demonstrated on a wealth of examples with comparisons to standard approaches (that are notorious for providing false solutions). We also find that in some cases the IQR algorithm performs better than predicted by theory and make conjectures for future study.

Similar content being viewed by others

1 Introduction

Spectral computations are ubiquitous in the sciences with applications in solutions to differential and integral equations, spline functions, orthogonal polynomials, quantum mechanics, quantum chemistry, statistical mechanics, Hermitian and non-Hermitian Hamiltonians, optics etc. [10, 26, 27, 34, 59, 67, 68, 70]. The computational problem is as follows. Letting T denote a bounded linear operator on the canonical separable Hilbert space \(l^2({\mathbb {N}})\), one wants to design algorithms to compute the spectrum of T, denoted by \(\sigma (T)\). Given the many applications, this problem has been investigated intensely since the 1950s [3, 4, 6, 7, 16, 17, 20,21,22, 25, 28, 29, 35,36,37,38, 43, 46, 47, 61, 63,64,66, 72], and we can only cite a small subset here.

In the paper “On the Solvability Complexity Index, the n-pseudospectrum and approximations of spectra of operators” [38] the Solvability Complexity Index (SCI) was introduced. The SCI provides a classification hierarchy [8, 9, 38] of spectral problems according to their computational difficulty. The SCI of a class of spectral problems is the least number of limits needed in order to compute the spectrum of operators in this class. From a classical numerical analysis point of view, such a concept may seem foreign. Indeed, the traditional sentiment is that one should have an algorithm, \(\Gamma _n\), such that for an operator \(T \in \mathcal {B}(l^2(\mathbb {N}))\),

preferably with some form of error control of the convergence. As this philosophy forms the basics of numerical analysis, it naturally permeates the classical literature on the computational spectral problem. However, as is shown in [8, 9, 38], an algorithm satisfying (1.1) is impossible even for the class of self-adjoint operators. Indeed, in the general case, the best possible alternative is an algorithm depending on three indices \(n_1, n_2, n_3\) such that

In fact, any algorithm with fewer than three limits will fail on the general class of operators. Moreover, no error control nor convergence rate on any of the limits are possible, since any such error control would reduce the number of limits needed. However, for the self-adjoint and normal cases, two limits suffice in order to recover the spectrum. This phenomenon implies that the only way to characterise the computational spectral problem is through a hierarchy classifying the difficulty of computing spectra of different subclasses of operators. This is the motivation behind the SCI hierarchy, which also covers general numerical analysis problems. Indeed, the SCI hierarchy is closely related to Smale’s question on the existence of purely iterative generally convergent algorithm for polynomial zero finding [69]. As demonstrated by McMullen [49, 50] and Doyle and McMullen [30], this is a case where several limits are needed in the computation, and their results become special cases of classification in the SCI hierarchy [8, 9].

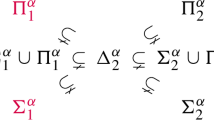

Informally, the SCI hierarchy is characterised as follows (see the “Appendix 1” for a more detailed summary describing the SCI hierarchy).

- \(\Delta _0\)::

-

The set of problems that can be computed in finite time, the SCI \(=0\).

- \(\Delta _1\)::

-

The set of problems that can be computed using one limit, the SCI \(=1\), however, one has error control and one knows an error bound that tends to zero as the algorithm progresses.

- \(\Delta _2\)::

-

The set of problems that can be computed using one limit, the SCI \(=1\), but error control may not be possible.

- \(\Delta _{m+1}\)::

-

For \(m \in \mathbb {N}\), the set of problems that can be computed by using m limits, the SCI \(\le m\).

The class \(\Delta _1\) is of course a highly desired class, however, most spectral problems are much higher in the hierarchy. For example, we have the following known classifications [8, 9, 38].

-

(i)

The general spectral problem is in \(\Delta _4{\setminus } \Delta _3\).

-

(ii)

The self-adjoint spectral problem is in \(\Delta _3{\setminus } \Delta _2\).

-

(iii)

The compact spectral problem is in \(\Delta _2{\setminus } \Delta _1\).

Here, the notation\({\setminus }\)indicates the standard “setminus”. Note that the SCI hierarchy can be refined. We will not consider the full generalisation in the higher part of the hierarchy in this paper, but recall the class \(\Sigma _1\) [24]. This class is defined as follows.

- \(\Sigma _1\)::

-

We have \(\Delta _1 \subset \Sigma _1 \subset \Delta _2 \) and \(\Sigma _1\) is the set of problems that can be computed by passing to one limit. Error control may not be possible, however, there exists an algorithm for these problems that converges and for which its output is included in the spectrum (up to an arbitrarily small accuracy parameter \(\epsilon \)).

In the context of computing \(\sigma (T)\), a \(\Sigma _1\) classification means the existence of an algorithm \(\Gamma _n\) such that

and \(\Gamma _n\) converges to \(\sigma (T)\) in the Hausdorff metric. The \(\Sigma _1\) class is very important as it allows for algorithms that never make a mistake. In particular, one is always sure that the output is sound but we do not know if we have everything yet. The simplest infinite-dimensional spectral problem is that of computing the spectrum of an infinite diagonal matrix and, as is easy to see, we have the following.

-

(iv)

The problem of computing spectra of infinite diagonal matrices is in \(\Sigma _1{\setminus } \Delta _1\).

Hence, the computational spectral problem becomes an infinite classification theory in order to characterise the above hierarchy. In order to do so, there will, necessarily, have to be many different types of algorithms. Indeed, characterising the hierarchy will yield a myriad of different approaches, as different structures on the various classes of operators will require specific algorithms. The key contribution of this paper is to investigate the convergence properties of the infinite-dimensional QR (IQR) algorithm, its implementation properties, and how this algorithm provides classification results in the SCI hierarchy.

1.1 Main contribution and novelty of the paper

The main contributions of the paper can be summarised as follows: New convergence results, algorithmic results (the IQR algorithm can be implemented), classification results in the SCI hierarchy and numerical examples.

-

(1)

Convergence results We provide new convergence theorems for the IQR algorithm with convergence rates and error control. The results include eigenvalues, eigenvectors and invariant subspaces.

-

(2)

Algorithmic implementation We prove that for infinite matrices with finitely many non-zero entries in each column, it is possible to implement the IQR algorithm exactly (on a finite machine) as if one had an infinite computer at one’s disposal. This can be extended to implementing the IQR algorithm with error control for general invertible operators.

-

(3)

SCI hierarchy classifications As a result of (1) and (2), we provide new classification results for the SCI hierarchy. In particular, the convergence properties of the IQR algorithm capture key structures that allow for sharp \(\Delta _1\) classification of the problem of computing extremal points in the spectrum. Moreover, we establish sharp \(\Sigma _1\) classification of the problem of computing spectra of subclasses of compact operators.

-

(4)

Numerical examples Finally, we demonstrate the IQR algorithm and the proven convergence results on a variety of difficult problems in practical computation, illustrating how the IQR algorithm is much more than a theoretical concept. Moreover, the examples demonstrate that the IQR algorithm performs much better than predicted by our theory, working on much larger classes of operators. Hence, we are left with many open problems on the theoretical understanding of the potential and limitations of this algorithm. The computational experiments include examples from

-

(i)

Toeplitz/Laurent operators and their perturbations,

-

(ii)

PT-symmetry in quantum mechanics,

-

(iii)

Hopping sign model in sparse neural networks,

-

(iv)

NSA Anderson model in superconductors.

-

(i)

1.2 Connection to previous work

Our results connect to many different approaches in the vast literature on spectral computation in infinite dimensions. The infinite-dimensional computational spectral problem is very different from the finite-dimensional computational eigenvalue problem, and even though the IQR algorithm is inspired by the finite-dimensional version, this paper solely focuses on the infinite-dimensional problem. Thus, the paper is aimed at the analysis and numerical analysis audience focusing on infinite-dimensional problems rather than the finite-dimensional numerical linear algebra discipline.

-

Finite sections The IQR algorithm provides an alternative to the standard finite section method in several cases where it fails. Whereas the finite section method would extract a finite section from the infinite matrix and then apply, for example, the finite-dimensional QR algorithm, the IQR algorithm first performs the infinite QR iterations and then extracts a finite section. In general, these two processes do not commute. The finite section method (or any derivative of it) cannot work in general because of the general classification results in the SCI hierarchy mentioned in Sect. 1. Typically, it may provide false solutions. However, in the cases where it converges, it provides invaluable \(\Delta _2\) classifications in the SCI hierarchy. The finite section method has often been viewed in connection with Toeplitz theory and the reader may want to consult the work by Böttcher [14, 15], Böttcher and Silberman [18], Böttcher et al. [16], Brunner et al. [22], Hagen et al. [35], Lindner [44], Marletta [46] and Marletta and Scheichl [47]. From the operator algebra point of view, the work of Arveson [5,6,7] has been influential as well as the work of Brown [21].

-

Infinite-dimensional Toda flow Deift et al. [28] provided the first results on the IQR algorithm in connection with Toda flows with infinitely many variables. Their results are purely functional analytic and do not take implementation and computability issues into account. However, these results provide the fundamentals of the IQR algorithm. In [36] these results were expanded with a convergence result for eigenvectors corresponding to eigenvalues outside the essential numerical range for normal operators. Yet, this paper did not consider convergence rates, actual numerical calculation nor any classification results.

-

Infinite-dimensional QL algorithm Olver, Townsend and Webb have provided a practical framework for infinite-dimensional linear algebra and foundational results on computations with infinite data structures [53,54,55,56, 73]. This includes efficient codes as well as theoretical results. The infinite-dimensional QL (IQL) algorithm is an important part of this program. The IQL algorithm is rather different from the IQR algorithm, although they are similar in spirit. In particular, both the implementation and the convergence results are somewhat contrasting.

-

Infinite-dimensional spectral computation: The results in this paper follow in the long tradition of infinite-dimensional spectral computations. This field contains a vast literature that spans more than half a century, and the references that we have cited in the first paragraph of Sect. 1 represent a small sample. However, we would like to highlight the recent work by Bögli et al. [13] who were able to computationally confirm, with absolute certainty, a conjecture on a certain oscillatory behaviour of higher auto-ionizing resonances of atoms. Note that problems that are classified as \(\Delta _1\) and \(\Sigma _1\) in the SCI hierarchy may allow for computer assisted proofs.

1.3 Background and notation

Here we briefly recall some definitions used in the paper. We will consider the canonical separable Hilbert space \(\mathcal {H} = l^2(\mathbb {N})\) (the set of square summable sequences). Moreover, we write \(\mathcal {B}(\mathcal {H})\) for the set of bounded operators on \(\mathcal {H}.\) For orthogonal projections E, F, we will write \(E\le F\) if the range of E is a subspace of the range of F. We denote the canonical orthonormal basis of \(\mathcal {H}\) by \(\{ e_j \}_{j \in \mathbb {N}}\), and if \(\xi \in \mathcal {H}\) we write \(\xi (j) = \langle \xi , e_j \rangle .\) Note that \(T \in \mathcal {B}(\mathcal {H})\) is uniquely determined by its matrix elements \(t_{ij} = \langle Te_j, e_i\rangle \). Hence we will use the words bounded operator and infinite matrix interchangeably. Given a sequence of operators \(\{T_n\}\), we will use the notation

to mean convergence in the strong and weak operator topology respectively. The spectrum of \(T \in \mathcal {B}(\mathcal {H})\) will be denoted by \(\sigma (T)\), and \(\sigma _d(T)\) denotes the set of isolated eigenvalues with finite multiplicity (the discrete spectrum).

In connection with the spectrum, we need to recall some definitions which will appear in the statement of our theorems. We recall that, for \(T \in \mathcal {B}(\mathcal {H})\), the essential spectrumFootnote 1 and the essential spectral radius are given by

Moreover, the numerical range and the essential numerical range of T are defined by

In addition, we need the Hausdorff metric as defined by the following. Let \(\mathcal {S}, \mathcal {T} \subset \mathbb {C}\), be compact. Then their Hausdorff distance is

where \(d(\lambda ,\mathcal {T}) = \inf _{\rho \in \mathcal {T}}|\rho -\lambda |.\) We also recall a generalisation of the spectrum, known as the pseudospectrum. Indeed, for \(\epsilon >0\) define the \(\epsilon \)-pseudospectrum as

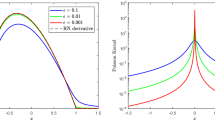

where we interpret \(\left\| S^{-1}\right\| \) as \(+\infty \) if S does not have a bounded inverse. This is easier to compute than the spectrum, converges in the Hausdorff metric to the spectrum as \(\epsilon \downarrow 0\) and gives an indication of the instability of the spectrum of T. We shall use it as a comparison for the IQR algorithm and as a means to detect spectral pollution for finite section methods.

Finally, we need a notion of convergence of subspaces. We follow the notation in [41]. Let \(M \subset \mathcal {B}\) and \(N \subset \mathcal {B}\) be two non-trivial closed subspaces of a Banach space \(\mathcal {B}.\) The distance between them is defined by

Given subspaces M and \(\{M_k\}\) such that \(\hat{\delta }(M_k,M) \rightarrow 0\) as \(k \rightarrow \infty ,\) we will use the notation \(M_k {\rightarrow } M\). If we replace \(\mathcal {B}\) with a Hilbert space \(\mathcal {H}\), we can express \(\delta \) and \(\hat{\delta }\) conveniently in terms of projections and operator norms. In particular, if E and F are the orthogonal projections onto subspaces \(M \subset \mathcal {H}\) and \(N \subset \mathcal {H}\) respectively, then

Since the operator \(E-F = F^{\perp }E - FE^{\perp }\) is essentially the direct sum of operators \(F^{\perp }E \oplus (- FE^{\perp }),\) its norm is \(\hat{\delta }(M,N),\) i.e.

This allows us to extend the definition to allow the trivial subspace \(\{0\}\) and gives rise to a metric on the set of all closed subspaces of \(\mathcal {H}\) (first introduced by Krein and Krasnoselski in [42]). We also define the (maximal) subspace angle, \(\phi (M,N)\in [0,\pi /2]\), between M and N by

Finally, we will use two further well-known properties in the Hilbert space setting. First, if M and N are both finite l-dimensional subspaces, then

which shows that to prove convergence of finite-dimensional subspaces, it is enough to prove \(\delta \)-convergence. Second, suppose we have

where the \(N_j^{(k)}\) need not be orthogonal. Then a simple application of Hölder’s inequality yields

which shows that if the dimensions of \(M_j\) and \(N_j^{(k)}\) are finite and equal, then to prove convergence \(N^{(k)}\rightarrow M\) we only need to prove that \(\delta (M_j,N_j^{(k)})\rightarrow 0\) as \(k\rightarrow \infty \). For further properties (including other notions of distances between subspaces) and a discussion on two projections theory, we refer the reader to the excellent article of Böttcher and Spitkovsky [19].

1.4 Organisation of the paper

The paper is organised as follows. In Sect. 2 we define the IQR algorithm (simple codes are also provided in the appendix). Section 3 contains and proves our main theorems including convergence rates. The outcome is more elaborate than the finite-dimensional case, as the infinite-dimensional setting includes more intricate instances. Our key practical result is that, despite being an algorithm dealing with infinite amount of information, it can be implemented on any standard computer and this is discussed in Sect. 4. The fact that the IQR algorithm can be computed allows for its use in order to provide new classification in the SCI hierarchy as discussed in Sect. 5. In particular, we demonstrate \(\Delta _1\) classification for the extremal part of the spectrum and dominant invariant subspaces, as well as \(\Sigma _1\) results for spectra of certain classes of compact operators. Note that the general spectral problem for compact operators is not in \(\Sigma _1\). The IQR algorithm and convergence theorems are demonstrated on a large collection of examples from the sciences on difficult computational spectral problems in Sect. 6, with comparisons to the finite section method. The IQR algorithm is also found to perform better than theory predicts and we conjecture conditions on the operator for this to be the case. Finally, we conclude with a discussion of the opportunities and limits of the IQR algorithm in Sect. 7.

2 The infinite-dimensional QR algorithm (IQR)

The IQR algorithm has existed as a pure mathematical concept for more than thirty years and it first appeared in the paper “Toda Flows with Infinitely Many Variables” [28] in 1985. However, the analysis in [28] covers only self-adjoint infinite matrices with real entries, and since the analysis is done from a pure mathematical perspective, the question regarding the actual numerical algorithm is left out. We will extend the analysis to more general operators and answer the crucial question: can one actually implement the IQR algorithm? The answer is affirmative, and we also prove convergence theorems, generalising the well-known finite-dimensional case.

2.1 The QR decomposition

The QR decomposition is the core of the QR algorithm. If \(T \in \mathbb {C}^{n\times n},\) one may apply the Gram-Schmidt procedure to the columns of T and store these columns in a matrix Q. This gives us the QR decomposition

where Q is a unitary matrix and R upper triangular. It is no surprise that a QR decomposition should exist in the infinite-dimensional case, however, we need more than just the existence. A key ingredient in the QR algorithm are Householder transformations, used for computational reasons (they are backwards stable). It is crucial that we can adopt these tools in the infinite-dimensional setting. Our goal is to extend the construction of the QR decomposition, via Householder transformations, to infinite matrices and to find a way so that one can implement the procedure on a finite machine. To do this, we need to introduce the concept of Householder reflections in the infinite-dimensional setting.

Definition 2.1

A Householder reflection is an operator \(S \in \mathcal {B}(\mathcal {H})\) of the form

where \(\bar{\xi }\) denotes the associated functional in \(\mathcal {H}^*\) given by \(x\rightarrow \langle x,\xi \rangle \). In the case where \(\mathcal {H} = \mathcal {H}_1 \oplus \mathcal {H}_2\) and \(I_i\) is the identity on \(\mathcal {H}_i\) then

will be called a Householder transformation.

A straightforward calculation shows that \(S^* = S^{-1} = S\) and thus also \(U^* = U^{-1} = U.\) An important property of the operator S is that if \(\{e_j\}\) is an orthonormal basis for \(\mathcal {H}\) and \(\eta \in \mathcal {H}\), then one can choose \(\xi \in \mathcal {H}\) such that

In other words, one can introduce zeros in the column below the diagonal entry. Indeed, if \(\eta _1 = \langle \eta , e_1\rangle \ne 0\) one may choose \(\xi = \eta \pm \Vert \eta \Vert \zeta ,\) where \(\zeta = \eta _1/|\eta _1|e_1\) and if \(\eta _1 = 0\) choose \(\xi = \eta \pm \Vert \eta \Vert e_1.\) The following theorem gives the existence of a QR decomposition, even in the case where the operator is not invertible.

Theorem 2.2

([36]) Let T be a bounded operator on a separable Hilbert space \(\mathcal {H}\) and let \(\{e_j\}_{j\in \mathbb {N}}\) be an orthonormal basis for \(\mathcal {H}\cong l^2(\mathbb {N}).\) Then there exists an isometry Q such that \(T=QR\), where R is upper triangular with respect to \(\{e_j\}\). Moreover,

where \(V_n = U_1 \cdots U_n\) are unitary and each \(U_j\) is a Householder transformation.

2.2 The IQR algorithm

Let \(T \in \mathcal {B}(\mathcal {H})\) be invertible and let \(\{e_j\}\) be an orthonormal basis for \(\mathcal {H}\). By Theorem 2.2 we have \(T = QR,\) where Q is an isometry and R is upper triangular with respect to \(\{e_j\}.\) Since T is invertible, Q is in fact unitary. Consider the following construction of unitary operators \(\{{\hat{Q}}_k\}\) and upper triangular (w.r.t. \(\{e_j\}\)) operators \(\{{\hat{R}}_k\}.\) Let \(T = Q_1R_1\) be a QR decomposition of T and define \(T_1 = R_1Q_1.\) Then QR factorize \(T_1 = Q_2R_2\) and define \(T_2 = R_2Q_2.\) The recursive procedure becomes

Now define

This is known as the QR algorithm and is completely analogous to the finite-dimensional case. Note also that we have \(T_n = \hat{Q}^*_n T \hat{Q}_n.\) In the finite-dimensional case and under favourable conditions, \(\hat{Q}_n^*T\hat{Q}_n\) converges to a diagonal operator and the columns of \(\hat{Q}_n\) converge to the corresponding eigenvectors as \(n\rightarrow \infty \) (see Theorem 3.1 below). We will see that the IQR algorithm behaves similarly for the extreme parts of the spectrum.

Definition 2.3

Let \(T \in \mathcal {B}(\mathcal {H})\) be invertible and let \(\{e_j\}\) be an orthonormal basis for \(\mathcal {H}\). The sequences \(\{\hat{Q}_j\}\) and \(\{{\hat{R}}_j\}\) constructed as in (2.3) and (2.4) will be called a Q-sequence and an R-sequence of T with respect to \(\{e_j\}.\)

Remark 2.4

Note that since the Householder transformations used in the proof of Theorem 2.2 are unique up to a ± sign, we will with some abuse of language refer to the QR decomposition constructed as the QR decomposition. In general for an invertible operator, the IQR algorithm is uniquely defined up to phase—see Sect. 4.2. This will not be a problem for our theorems or numerical examples.

The following observation will be useful in the later developments. From the construction in (2.3) and (2.4) we get

An easy induction gives us that

Note that \({\hat{R}}_m\) must be upper triangular with respect to \(\{e_j\}_{j\in \mathbb {N}}\) since \(R_j, \, j \le m\) is upper triangular with respect to \(\{e_j\}_{j\in \mathbb {N}}.\) Also, if T is invertible then \(\langle R e_i,e_i\rangle \ne 0.\) From this it follows immediately that

3 Convergence theorems

In finite dimensions we have the following well-known theorem:

Theorem 3.1

(Finite dimensions) Let \(T \in \mathbb {C}^{N \times N}\) be a normal matrix with eigenvalues satisfying \(|\lambda _1|> \cdots > |\lambda _N|\). Let \(\{Q_m\}\) be a Q-sequence of unitary operators. Then (up to re-ordering of the basis)

In this section we will address the convergence of the IQR algorithm for normal operators under similar assumptions and prove an analogue of Theorem 3.1 in infinite dimensions (Theorem 3.9). As well as this, and for more general operators T that are not necessarily normal, we address block convergence (Theorem 3.13), relevant when the eigenvalues do not have distinct moduli, and convergence to (dominant) invariant subspaces (Theorem 3.15).

3.1 Preliminary definitions and results

To state and prove our theorems we need some preliminary results. The reader only interested in the results themselves is referred to Sect. 3.2. If T is a normal operator, we will use \(\chi _S(T)\) to denote the indicator function of the set S defined via the functional calculus. Without loss of generality, we deal with the Hilbert space \(\mathcal {H}=l^2(\mathbb {N})\) and the canonical orthonormal basis \(\{e_j\}_{j\in \mathbb {N}}\). Our first set of results concerns the convergence of spanning sets under power iterations and is analogous to the finite-dimensional case. The following proposition can be found in [36] and together with Lemma 3.6 below, these are the only results we will use from [36].

Proposition 3.2

Suppose that \(T\in \mathcal {B}(\mathcal {H})\) is normal, is invertible and that \(\sigma (T)=\omega \cup \Psi \) is a disjoint union such that \(\omega =\{\lambda _i\}_{i=1}^N\) consists of finitely many isolated eigenvalues of T with \(\left| \lambda _1\right|>\left| \lambda _2\right|>\cdots >\left| \lambda _N\right| \). Suppose further that \(\mathrm {sup}\{\left| z\right| :z\in \Psi \}<\left| \lambda _N\right| \). Let \(l\in \mathbb {N}\) and suppose that \(\{\xi _i\}_{i=1}^l\) are linearly independent vectors in \(\mathcal {H}\) such that \(\{\chi _{\omega }(T)\xi _i\}_{i=1}^l\) are also linearly independent. Then

-

(i)

The vectors \(\{T^k\chi _{\omega }(T)\xi _i\}_{i=1}^l\) are linearly independent and there exists an l-dimensional subspace \(B\subset \mathrm {ran}\chi _{\omega }(T)\) such that

$$\begin{aligned} \mathrm {span}\{T^k\xi _i\}_{i=1}^l\rightarrow B,\quad \text {as }k\rightarrow \infty . \end{aligned}$$ -

(ii)

If

$$\begin{aligned} \mathrm {span}\{T^k\xi _i\}_{i=1}^{l-1}\rightarrow D\subset \mathcal {H},\quad \text {as }k\rightarrow \infty , \end{aligned}$$where D is an \((l-1)\)-dimensional subspace, then

$$\begin{aligned} \mathrm {span}\{T^k \xi _i\}_{i=1}^l \rightarrow D \oplus \mathrm {span}\{\xi \}, \quad \text {as }k\rightarrow \infty , \end{aligned}$$where \(\xi \in \mathrm {ran}\chi _{\omega }(T)\) is an eigenvector of T.

In order to extend this proposition to describe rates of convergence and prove our main theorems, we need to describe the space B in more detail. This is done inductively as follows. The first step is to choose \(\nu _{1,1}\in \{\lambda _i\}_{i=1}^N\) of maximum modulus such that

We then let \(\xi _{1,1}\) be a linear multiple of \(\xi _1\) such that \(\chi _{\nu _{1,1}}(T)\xi _{1,1}\) has norm one. Now suppose that at the m-th stage we have constructed vectors \(\{\xi _{m,i}\}_{i=1}^m\) with the same linear span as \(\{\xi _{i}\}_{i=1}^m\) and such that there exist \(\{\nu _{m,j}\}_{j=1}^{s_m}\subset \{\lambda _i\}_{i=1}^N\) with the following properties. After re-ordering the vectors \(\{\xi _{m,i}\}_{i=1}^m\) if necessary, there exist integers \(0=k_{m,0}<k_{m,1}<k_{m,2}<\cdots <k_{m,s_m}=m\) such that

-

(1)

\(\left| \nu _{m,s_m}\right|<\left| \nu _{m,s_m-1}\right|<\cdots <\left| \nu _{m,1}\right| .\)

-

(2)

\(\chi _{\lambda }(T)\xi _{m,i}=0\) if \(i>k_{m,j}\) and \(\lambda \in \{\lambda _i\}_{i=1}^N\) has \(\left| \lambda \right| >\left| \nu _{m,j+1}\right| \).

-

(3)

\(\{\chi _{\nu _{m,j}}(T)\xi _{m,i}\}_{i=k_{m,j-1}+1}^{k_{m,j}}\) are orthonormal.

We seek to add the space spanned by the vector \(\xi _{m+1}\) whilst preserving these properties.

First we deal with (2). Let \(\eta _{m+1}\in \{\lambda _i\}_{i=1}^N\) be of maximal modulus such that \(\chi _{\{\lambda _1,\ldots ,\eta _{m+1}\}}(T)\xi _{m+1}\notin \mathrm {span}\{\chi _{\{\lambda _1,\ldots ,\eta _{m+1}\}}(T)\xi _{j}\}_{j=1}^{m}\). If \(\left| \eta _{m+1}\right| <\left| \nu _{m,1}\right| \) then let \(t({m+1})\) be maximal such that \(\left| \eta _{m+1}\right| <\left| \nu _{m,t({m+1})}\right| \). We then choose complex numbers \(\{a_{m,j}\}_{j=1}^{k_{m,t({m+1})}}\) such that writing

we have that \(\chi _{\lambda }(T)\tilde{\xi }_{m+1,m+1}=0\) if \(\lambda \in \{\lambda _i\}_{i=1}^N\) has \(\left| \lambda \right| >\left| \eta _{m+1}\right| \). Note that by (2), (3) and the definition of \(\eta _{m+1}\), the coefficients \(a_{m,j}\) are determined uniquely in terms of \(\{\xi _{m,i}\}_{i=1}^{k_{m,t({m+1})}}\). If \(\left| \eta _{m+1}\right| \ge \left| \nu _{m,1}\right| \) then let \(t({m+1})=0\) and we set \(\tilde{\xi }_{m+1,m+1}=\xi _{m+1}\). In this case we still have that \(\chi _{\lambda }(T)\tilde{\xi }_{m+1,m+1}=0\) if \(\lambda \in \{\lambda _i\}_{i=1}^N\) has \(\left| \lambda \right| >\left| \eta _{m+1}\right| \).

We then define \(\xi _{m+1,j}=\xi _{m,j}\) for \(1\le j\le m\) and now deal with (3). If \(\eta _{m+1}\notin \{\nu _{m,j}\}_{j=1}^{s_m}\) then let \(\xi _{m+1,m+1}\) be a linear multiple of \(\tilde{\xi }_{m+1,m+1}\) such that \(\chi _{\eta _{m+1}}(T)\xi _{m+1,m+1}\) has norm 1 and we let \(\{\nu _{m+1,j}\}_{j=1}^{s_m+1}\) be a re-ordering of \(\{\nu _{m,j}\}_{j=1}^{s_m}\cup \{\eta _{m+1}\}\). Otherwise, we have \(\eta _{m+1}=\nu _{m,t({m+1})+1}\) and we apply Gram-Schmidt to

(without changing \(\{\xi _{m+1,i}\}_{i=k_{m,t({m+1})}+1}^{k_{m,t({m+1})+1}}\)). Note that by (2) and the definition of \(\eta _{m+1}\) these vectors are linearly independent. This gives \(\xi _{m+1,m+1}\) such that

are orthonormal and \(\chi _{\lambda }(T)\xi _{m+1,m+1}=0\) if \(\lambda \in \{\lambda _i\}_{i=1}^N\) has \(\left| \lambda \right| >\left| \nu _{m,t({m+1})+1}\right| .\) After re-ordering indices if necessary, we see that (1)-(3) now hold for \(m+1\).

After l steps the above process terminates giving a new basis \(\{\tilde{\xi }_i\}_{i=1}^l=\{\xi _{l,i}\}_{i=1}^l\) for \(\mathrm {span}\{\xi _{i}\}_{i=1}^l\) along with \(\{\nu _{j}\}_{j=1}^n=\{\nu _{l,j}\}_{j=1}^n\subset \{\lambda _i\}_{i=1}^N\) and \(0=k_0<k_1<k_2<\cdots <k_n=l\) such that

-

(i)

\(\left| \nu _{n}\right|<\left| \nu _{n-1}\right|<\cdots <\left| \nu _{1}\right| .\)

-

(ii)

\(\chi _{\lambda }(T)\tilde{\xi }_{i}=0\) if \(i>k_{j}\) and \(\lambda \in \{\lambda _i\}_{i=1}^N\) has \(\left| \lambda \right| >\left| \nu _{j+1}\right| \).

-

(iii)

\(\{\chi _{\nu _{j}}(T)\tilde{\xi }_{i}\}_{i=k_{j-1}+1}^{k_{j}}\) are orthonormal.

The subspace B can then be described as

Definition 3.3

With respect to the above construction we define the following:

Since the Gram-Schmidt process is defined uniquely up to phases we see that \(Z(T,\{\xi _j\}_{j=1}^l)\) is well-defined. The above construction also shows that if \(\{\chi _{\omega }(T)\xi _i\}_{i=1}^{l+1}\) are linearly independent then

We can now prove the following refinement of Proposition 3.2:

Proposition 3.4

Suppose the assumptions of Proposition 3.2 hold. Let \(J\le N\) be minimal such that \(\{\chi _{\{\lambda _1,\ldots ,\lambda _J\}}(T)\xi _i\}_{i=1}^l\) are linearly independent. Set

Then \(r<1\) and \({\delta }(B,\mathrm {span}\{T^k\xi _i\}_{i=1}^l)\le Z(T,\{\xi _j\}_{j=1}^l)r^k\). Since the spaces are l-dimensional, it follows from (1.5) that we have the convergence rate

Proof

Consider the subspaces

Let \(\zeta = \sum _{i=k_{j-1}+1}^{k_j} \alpha _i\chi _{\nu _j}(T)\tilde{\xi }_{i}\in E_j\) be a unit vector (hence \(\sum _{i=k_{j-1}+1}^{k_j}\left| \alpha _i\right| ^2=1\)) and consider

By construction, we have for any such \(\tilde{\xi }_{i}\) in the above sum that

This gives \(T^k\tilde{\xi }_{i} = \nu ^k_j\chi _{\nu _j}(T)\tilde{\xi }_{j,i} + T^k\chi _{\theta _j}(T)\tilde{\xi }_{i}.\) Now, by the assumption on \(\sigma (T),\) we have

Thus, since

we have

Here we have used Hölder’s inequality together with the fact that \(\Vert \chi _{\theta _j}(T)\tilde{\xi }_{i}\Vert ^2=\Vert \tilde{\xi }_{i}\Vert ^2-1\) by orthonormality of \(\{\chi _{\nu _j}(T)\tilde{\xi }_i\}_{i=k_{j-1}+1}^{k_j}\). The right-hand side gives an upper bound for \(\delta (E_j,E_j^k)\). Analogous rates of convergence hold for the other subspaces and from (1.6) we have

since the spaces \(E_j\) are orthogonal. \(\square \)

For the rest of this section we shall assume the following:

-

(A1)

\(T \in \mathcal {B}(\mathcal {H})\) is an invertible normal operator and \(\{e_j\}_{j\in \mathbb {N}}\) an orthonormal basis for \(\mathcal {H}\). \(\{Q_k\}\) and \(\{R_k\}\) are Q- and R-sequences of T with respect to the basis \(\{e_j\}_{j\in \mathbb {N}}.\)

-

(A2)

\(\sigma (T) = \omega \cup \Psi \) such that \(\omega \cap \Psi = \emptyset \) and \(\omega =\{\lambda _i\}_{i=1}^N,\) where the \(\lambda _i\)s are isolated eigenvalues with (possibly infinite) multiplicity \(m_i\). Let \(M=m_1+\cdots +m_N=\mathrm {dim}(\mathrm {ran}\chi _{\omega }(T))\) and suppose that \(|\lambda _1|> \ldots > |\lambda _N|.\) Suppose further that \(\sup \{|\theta |:\theta \in \Psi \} < |\lambda _N|.\)

To apply Propositions 3.2 and 3.4 to prove the main result Theorem 3.9, we need to take care of the case that some of the \(e_j\) may have \(\chi _{\omega }(T)e_j=0\).

Definition 3.5

Suppose that (A1) and (A2) hold and let \(K\in \mathbb {N}\cup \{\infty \}\) be minimal with the property that \(\dim (\mathrm {span}\{\chi _{\omega }(T)e_j\}_{j=1}^K) = M.\) Define

Define also the corresponding subset \(\{\hat{e}_j\}_{j=1}^M\subset \{e_j\}_{j=1}^K\) such that \(\{{\hat{e}}_j\}_{j=1}^M = \Lambda _{\omega }{\setminus } \tilde{\Lambda }_{\omega }\) and such that upon writing \(\hat{e}_j=e_{p_j}\), the \(p_j\) are increasing.

Note that we have the following decomposition of T into

where \(\{\xi _j\}_{j=1}^M\) is an orthonormal set of eigenvectors of T. The following simple lemma extends Lemma 39 in [36] to infinite M but the proof is verbatim so omitted.

Lemma 3.6

If \(e_m \in \Lambda _{\Psi } \cup \tilde{\Lambda }_{\omega },\) then

where s(m) is the largest integer such that \(\{\hat{e}_j\}_{j=1}^{s(m)} \subset \{e_j\}_{j=1}^m.\)

The following theorem is the key step of the proof of Theorem 3.9 and concerns convergence to the eigenvectors of T.

Theorem 3.7

Assume (A1) and (A2) and define

Then there exists a collection of orthonormal eigenvectors \(\{\hat{q}_j\}_{j=1}^M\subset \mathrm {ran}\chi _{\omega }(T)\) of \(\ T\) and collections of constants A(m), B(j) and \(C(\mu )\) such that

-

(a)

If \(e_m\in \Lambda _{\Psi }\cup \tilde{\Lambda }_{\omega }\) and \(\mu \) is maximal with \(p_\mu <m\) (recall that \({\hat{e}}_j=e_{p_j}\)), then we have

$$\begin{aligned} \left\| \chi _\omega (T)q_{k,m}\right\| \le A(m) Z(T,\{{\hat{e}}_j\}_{j=1}^\mu )r^k. \end{aligned}$$(3.3)In the case that \(m<p_1\), we interpret this as \(\left\| \chi _\omega (T)q_{k,m}\right\| =0\) which holds from Lemma 3.6.

-

(b)

For any \(j<M+1\),

$$\begin{aligned} {\hat{\delta }}(\mathrm {span}\{\hat{q}_j\},\mathrm {span}\{{\hat{q}}_{k,j}\})\le B(j)Z(T,\{\hat{e}_i\}_{i=1}^j) r^k.\end{aligned}$$(3.4) -

(c)

For any \(\mu <M+1\),

$$\begin{aligned} \delta (\mathrm {span}\{\hat{q}_{j,k}\}_{j=1}^{\mu },\mathrm {span}\{{\hat{q}}_{j}\}_{j=1}^{\mu })\le C(\mu )Z(T,\{{\hat{e}}_j\}_{j=1}^\mu ) r^k \end{aligned}$$(3.5)and hence

$$\begin{aligned} {\hat{\delta }}(\mathrm {span}\{\hat{q}_{j,k}\}_{j=1}^{\mu },\mathrm {span}\{{\hat{q}}_{j}\}_{j=1}^{\mu })\le \mu ^{\frac{1}{2}}C(\mu )Z(T,\{{\hat{e}}_j\}_{j=1}^\mu ) r^k. \end{aligned}$$(3.6)

Here, as in Lemma 3.6, \(q_{k,j} = Q_k e_j\) and \(\hat{q}_{k,j} = Q_k {\hat{e}}_j\). Finally, if M is finite then we must have \(\mathrm {span}\{{\hat{q}}_j\}_{j=1}^M = \mathrm {ran}\chi _{\omega }(T).\)

We will provide an inductive proof of Theorem 3.7 which requires the following for the inductive step of part (a).

Lemma 3.8

Assume the conditions in the statement of Theorem 3.7. Suppose also that (b) in Theorem 3.7 holds for \(j=1,\ldots ,\mu \) and that (c) holds for a given \(\mu <M\). Let \(e_{p_{\mu +1}} = \hat{e}_{\mu +1}\), then if \(e_m \in \Lambda _{\Psi } \cup \tilde{\Lambda }_{\omega },\) where \(m < p_{\mu +1}\), (3.3) also holds with

Proof

First note that from (2.6), invertibility of T and the fact that \(\{\chi _{\omega }(T){\hat{e}}_j\}_{j=1}^\mu \) are linearly independent, it must hold that \(\{\chi _{\omega }(T)\hat{q}_{k,j}\}_{j=1}^{\mu }\) are linearly independent also. Then by using the assumptions stated and the fact that \(\chi _\omega (T)\hat{q}_j={\hat{q}}_j\) we have

Also, we have that \(s(m) \le \mu \) and Lemma 3.6 implies

Using the fact that \(\left\| \chi _{\omega }(T)q_{k,m}\right\| \le 1\) and the definition of \(\delta \) (along with the fact that \(\mathrm {span}\{ {\hat{q}}_j\}_{j=1}^{\mu }\) is finite-dimensional), it follows that there exists some \(v_k=\sum _{j=1}^\mu \beta _{j,k}{\hat{q}_j}\in \mathrm {span}\{{\hat{q}}_j\}_{j=1}^{\mu }\) with \(\left\| v_k\right\| \le 1\) and

We also have from assumption (b) that

since \(q_{k,m}\) is orthogonal to \({\hat{q}}_{k,j}\). This together with (3.7) gives that \(\left| \beta _{j,k}\right| \le \big [C(\mu )+B(j)\big ]Z(T,\{\hat{e}_j\}_{j=1}^\mu )r^k\). Hence we must have

Using (3.7) again then gives the result. Note that we have used orthonormality of \(\{{\hat{q}}_j\}_{j=1}^{\mu }\) which will be proven as part of the induction. \(\square \)

Proof of Theorem 3.7

We begin with the initial step of the induction for (b) and (c). Note that (a) trivially holds by construction with \(A(m)=0\) for any \(m<p_1\) where \(e_{p_1}={\hat{e}}_{1}\) and this provides the initial step for (a).

By Propositions 3.2 and 3.4, there exists a unit eigenvector \({\hat{q}}_1\in \mathrm {ran}\chi _{\omega }(T)\) such that

Since \(\mathrm {span}\{T^k\hat{e}_1\}\subset \mathrm {span}\{T^ke_i\}_{i=1}^{p_1}\), this implies that

Thus, it follows that

from (2.6). Note that \(\{q_{k,i}\}_{i=1}^{p_1}\) are orthonormal (recall that \(Q_k\) is unitary) and hence by (3.9) there exists some coefficients \(\alpha _{k,i}\) with \(\sum _{i=1}^{p_1}|\alpha _{k,i}|^2 \le 1\) such that defining \(\tilde{\eta }_k = \sum _{i=1}^{p_1}\alpha _{k,i}q_{k,i}\) we have

If \(e_m \in \Lambda _{\Psi } \cup \tilde{\Lambda }_{\omega },\) where \(m < {p_1}\) then by Lemma 3.6\(\langle q_{k,m},\hat{q}_1\rangle =0\). It follows that we must have

Hence we can take \(B(1)=1\) and \(C(1)=1\) in (b) and (c) respectively which completes the initial step.

For the induction step we will argue simultaneously for (a), (b) and (c) using induction on \(\mu \). Suppose that (a) holds for \(m<p_{\mu }\) with \(e_{p_\mu }={\hat{e}}_\mu \) together with (b) and (c) for \(j\le \mu \) and some \(\mu <M\). Let \(e_{p_{\mu +1}}={\hat{e}}_{\mu +1}\) then we can use Lemma 3.8 to extend (a) to all \(m<p_{\mu +1}\) and this provides the step for (a). For (b), we note that Propositions 3.2 and 3.4 imply that

where \(\xi \) is a unit eigenvector of T. We may also assume without loss of generality that \(\xi \) is orthogonal to \({\hat{q}}_j\) for \(j=1,\ldots ,\mu \). As before, since \(\mathrm {span}\{T^k\hat{e}_i\}_{i=1}^{\mu +1} \subset \mathrm {span}\{T^ke_i\}_{i=1}^{p_{\mu +1}} \) we have

and hence by invertibility of T

Again, using that\(\{q_{k,i}\}_{i=1}^{p_{\mu +1}}\) are orthonormal, there exists some coefficients \(\alpha _{k,i}\) with \(\sum _{i=1}^{p_{\mu +1}}|\alpha _{k,i}|^2 \le 1\) such that defining \(\tilde{\eta }_k = \sum _{i=1}^{p_{\mu +1}}\alpha _{k,i}q_{k,i}\) we have

If \(e_m \in \Lambda _{\Psi } \cup \tilde{\Lambda }_{\omega },\) where \(m < {p_{\mu +1}}\) then as shown above we have

Taking the inner product of \(\xi -\tilde{\eta }_k\) with \(q_{k,m}\) and using (3.13) together with the orthonormality of the \(q_{k,j}\)s, it follows that \(\left| \alpha _{k,m}\right| \le \big (A(m)+1\big )Z(T,\{{\hat{e}}_j\}_{j=1}^{\mu +1})r^k\). Similarly, if \(j\le \mu \) then for any \(c\in \mathbb {C}\)

since \(\xi \) is orthogonal to \({\hat{q}}_j\). Minimising over c, we can bound this by \(B(j)Z(T,\{{\hat{e}}_j\}_{j=1}^{\mu }) r^k\). In the same way, it then follows that \(|\alpha _{k,p_{j}}|\le \big (B(j)+1\big )Z(T,\{\hat{e}_j\}_{j=1}^{\mu +1})r^k\) where \({\hat{e}}_j=e_{p_j}\). Together, these imply that

To finish the inductive step, we define \({\hat{q}}_{\mu +1}=\xi \). Recall that \(\xi \) is orthogonal to any \({\hat{q}}_{l}\) with \(l\le \mu \). Hence it follows that \(\{{\hat{q}}_i\}_{i=1}^{\mu +1}\) are orthonormal and we can take

in (b). For the induction step for (c), the fact that \(\{\hat{q}_{k,i}\}_{i=1}^{\mu +1}\) are orthonormal and (1.6) imply we can take

Finally, if M is finite we demonstrate that \(\mathrm {span}\{\hat{q}_j\}_{j = 1}^M = \mathrm {span}\{\xi _j\}_{j=1}^M.\) Since the \(\{\hat{q}_i\}_{i=1}^M\) are orthogonal and are eigenvectors of \(\sum _{j=1}^M \lambda _{c_j} \,\xi _j\otimes \bar{\xi }_j\), it follows that \(\mathrm {span}\{{\hat{q}}_j\}_{j = 1}^M = \mathrm {span}\{\xi _j\}_{j=1}^M = \mathrm {ran}\chi _{\omega }(T).\)\(\square \)

3.2 Main results

Our first result generalises Theorem 3.1 to infinite dimensions and relies on Theorem 3.7 (which concerns convergence to eigenvectors).

Theorem 3.9

(Convergence theorem for normal operators in infinite dimensions) Let \(T \in \mathcal {B}(l^2(\mathbb {N}))\) be an invertible normal operator with \(\sigma (T) = \omega \cup \Psi \) and \(\omega = \{\lambda _i\}_{i=1}^N,\) where the \(\lambda _i\)’s are isolated eigenvalues with (possibly infinite) multiplicity \(m_i\) satisfying \(|\lambda _1|> \cdots > |\lambda _N|.\) Suppose further that \(\sup \{|\theta |:\theta \in \Psi \} < |\lambda _N|,\) and let \(\{e_j\}_{j\in \mathbb {N}}\) be the canonical orthonormal basis. Let \(\{Q_n\}_{n\in \mathbb {N}}\) and \(\{R_n\}_{n\in \mathbb {N}}\) be Q- and R-sequences of T with respect to \(\{e_j\}_{j\in \mathbb {N}}.\) Let \(\{{\hat{e}}_j\}_{j=1}^M \subset \{e_j\}_{j\in \mathbb {N}}\), where \(M = m_1 + \cdots +m_N\), be the subset described in Definition 3.5 and Theorem 3.7, i.e. \(\mathrm {span}\{Q_k {\hat{e}}_j\} \rightarrow \mathrm {span}\{{\hat{q}}_j\}\) where \(\{{\hat{q}}_j\}_{j=1}^M\subset \mathrm {ran}\chi _{\omega }(T)\) is a collection of orthonormal eigenvectors of T and if \(e_j \notin \{{\hat{e}}_j\}_{j=1}^M,\) then \(\chi _{\omega }(T)Q_ke_j \rightarrow 0.\) Then:

-

(i)

Every subsequence of \(\{Q_n^*TQ_n\}_{n\in \mathbb {N}}\) has a convergent subsequence \(\{Q_{n_k}^*TQ_{n_k}\}_{k\in \mathbb {N}}\) such that

$$\begin{aligned} Q_{n_k}^*TQ_{n_k} {\mathop {\longrightarrow }\limits ^{\text {WOT}}} \left( \bigoplus _{j=1}^{M} \langle T{\hat{q}}_j,{\hat{q}}_j\rangle {\hat{e}}_j \otimes {\hat{e}}_j \right) \bigoplus \sum _{j \in \Theta } \xi _j \otimes e_j, \end{aligned}$$as \(k \rightarrow \infty ,\) where

$$\begin{aligned} \Theta = \{j: e_j \notin \{{\hat{e}}_l\}_{l=1}^M\}, \quad \xi _j \in \overline{\mathrm {span}\{e_i\}_{i\in \Theta }} \end{aligned}$$and only \(\sum _{j \in \Theta } \xi _j \otimes e_j\) depends on the choice of subsequence. Furthermore, if T has only finitely many non-zero entries in each column then we can replace WOT convergence by SOT convergence.

-

(ii)

We have the following convergence of sections:

$$\begin{aligned} {\widehat{P}}_{M} Q_n^*TQ_n{\widehat{P}}_{M} {\mathop {\longrightarrow }\limits ^{\text {SOT}}} \bigoplus _{j=1}^{M} \langle T{\hat{q}}_j,{\hat{q}}_j\rangle {\hat{e}}_j \otimes {\hat{e}}_j ,\qquad \text {as }n \rightarrow \infty , \end{aligned}$$where \({\widehat{P}}_M\) denotes the orthogonal projection onto \(\overline{\mathrm {span}\{{\hat{e}}_j\}_{j=1}^M}\). Furthermore, if we define

$$\begin{aligned} \rho =\mathrm {sup}\{\left| z\right| :z\in \Psi \},\quad r=\max \{\left| \lambda _2/\lambda _1\right| ,\ldots ,\left| \lambda _N/\lambda _{N-1}\right| ,\rho /\left| \lambda _N\right| \} \end{aligned}$$then \(r<1\) and for any fixed \(x\in \mathrm {span}\{\hat{e}_j\}_{j=1}^M\) we have the following rate of convergence

$$\begin{aligned} \left\| {\widehat{P}}_{M} Q_n^*TQ_n \widehat{P}_{M}x-\left( \bigoplus _{j=1}^{M} \langle T{\hat{q}}_j,\hat{q}_j\rangle {\hat{e}}_j \otimes {\hat{e}}_j \right) x\right\| = O(r^n),\quad \text {as }n\rightarrow \infty .\nonumber \\ \end{aligned}$$(3.14)

If M is finite then we can write (after possibly re-ordering)

and in part (ii) we have the rate of convergence

If \(\{\chi _{\omega }(T)e_l\}_{l=1}^M\) are linearly independent, then we can take \({\hat{e}}_j=e_j\).

Remark 3.10

What Theorem 3.9 essentially says is that if we take the n-th iteration of the IQR algorithm and truncate to an \(m\times m\) matrix (i.e. \(P_mQ_n^*TQ_nP_m\)) then, as n grows, the eigenvalues of this matrix will converge to the extremal parts of the spectrum of T. In particular, the theorem suggests that the IQR algorithm can locate the extremal parts of the spectrum.

Proof of Theorem 3.9

To prove (i), since a closed ball in \(\mathcal {B}(l^2(\mathbb {N}))\) is weakly sequentially compact, it follows that any subsequence of \(\{Q_n^*TQ_n\}_{n\in \mathbb {N}}\) must have a weakly convergent subsequence \(\{Q_{n_k}^*TQ_{n_k}\}_{k\in \mathbb {N}}\). In particular, there exists a \(W \in \mathcal {B}(l^2(\mathbb {N}))\) such that

Let \({\widehat{P}}_M\) denote the projection onto \(\overline{\mathrm {span}\{{\hat{e}}_j\}_{j=1}^M}\). Note that part (i) of the theorem will follow if we can show that

and

We will indeed show this, and we start by observing that, due to the weak convergence and the standard functional calculus, we have that

We then have the following

Thus, by (3.18), (3.20), (3.21) and Theorem 3.7 we get (3.17) and also that \(\widehat{P}_M^{\perp }W {\widehat{P}}_M = 0\). Also, by (3.19), (3.20), (3.21) and Theorem 3.7 we get that \({\widehat{P}}_MW {\widehat{P}}_M^{\perp } = 0\). Note that in all of these cases, Theorem 3.7 implies that the rate of convergence is such that the difference between \(\langle W {\hat{e}}_j, e_i \rangle \), \(\langle W e_i,{\hat{e}}_j \rangle \) and their limiting values is \(O(r^{n_k})\) (however, not necessarily uniformly over the indices). Now suppose that T has finitely many non-zero entries in each column. This can be described by a function \(f:\mathbb {N}\rightarrow \mathbb {N}\) non-decreasing with \(f(n)\ge n\) such that \(\langle Te_j,e_i\rangle = 0\) when \(i > f(j)\) as in Definition 4.1. Proposition 4.2 shows that this is preserved under the iteration in the IQR algorithm, i.e. \(Q_{n_k}^*TQ_{n_k}\) also has this property. So let \(x\in l^2(\mathbb {N})\) and \(\epsilon >0\). Choose y of finite support such that \(\Vert x-y\Vert \le \epsilon \). It is then clear that \(\Vert Q_{n_k}^*TQ_{n_k}y-Wy\Vert \rightarrow 0\) as \(n_k\rightarrow \infty \) (since we only require convergence in finitely many entries). Hence

Since \(\epsilon >0\) and x were arbitrary, we have \( Q_{n_k}^*TQ_{n_k} {\mathop {\longrightarrow }\limits ^{\text {SOT}}} W\).

To prove (ii), suppose that \(x\in \mathrm {span}\{{\hat{e}}_j\}_{j=1}^M\), then x can be written as

with at most finitely many \(x_j\) non-zero. We have that \({\hat{\delta }}(\mathrm {span}\{Q_n{\hat{e}}_j\},\mathrm {span}\{{\hat{q}}_j\})= O(r^n)\) and hence there exists some \(a_{n,j}\) of unit modulus such that \(\left\| Q_n{\hat{e}}_j-a_{n,j}{\hat{q}}_j\right\| = O(r^n)\). Since \(Q_n\) is unitary, we then have

where we have used the fact that T is bounded in the last line. We therefore have convergence on \(\mathrm {span}\{{\hat{e}}_j\}_{j=1}^M\), and, since the operators are uniformly bounded, we must have convergence on \(\overline{\mathrm {span}\{{\hat{e}}_j\}_{j=1}^M}\) which implies that

For the last parts, suppose that M is finite. Theorem 3.7 then implies (3.15) after a possible re-ordering. The rate of convergence in (3.14) also implies that

More generally, let \(K\in \mathbb {N}\cup \{\infty \}\) be minimal such that \(\dim (\mathrm {span}\{\chi _{\omega }(T)e_j\}_{j=1}^K) = M.\) Recall that we defined

Recall also from the proof of Theorem 3.7 that \(\{\hat{e}_j\}_{j=1}^{M} = \Lambda _{\omega } {\setminus } \tilde{\Lambda }_{\omega }.\) If \(\{\chi _{\omega }(T)e_j\}_{j=1}^M\) are linearly independent then \(\tilde{\Lambda }_{\omega } = \emptyset \), and therefore \(\{{\hat{e}}_j\}_{j=1}^{M} = \{ e_j\}_{j=1}^{M},\) which yields that the projection \({\widehat{P}}_M\) in (3.17) is the projection onto \(\overline{\mathrm {span}\{e_j\}_{j=1}^M}\). \(\square \)

Theorems 3.9 and 3.7 also give us convergence to the eigenvectors. With the use of (possibly countably many) shifts and rotations, the above theorem allows us to find all eigenvalues, their multiplicities and eigenspaces outside the convex hull of the essential spectrum, i.e. outside the essential numerical range.

Example 3.11

It is possible in the case of infinite M that the \({\hat{q}}_j\) do not form an orthonormal basis of \(\mathrm {ran}\chi _{\omega }(T)\) and we can even lose part of \(\omega \) in the convergence of \({\widehat{P}}_{M} Q_n^*TQ_n \widehat{P}_{M}\) to a diagonal operator. This is to be contrasted to the finite-dimensional case. For example, suppose that with respect to an initial orthonormal basis \(\{v_j\}_{j\in \mathbb {N}}\), T is given by the diagonal matrix \(\mathrm {Diag}(1/2,1,1,\ldots )\). Now define \(f_j=v_1+(1/j)v_{j+1}\) and apply Gram-Schmidt to the sequence \(\{f_j\}_{j\in \mathbb {N}}\) to generate orthonormal vectors \(\{e_j\}_{j\in \mathbb {N}}\). It is easy to see that any \(v_j\) can be approximated to arbitrary accuracy using finite linear combinations of \(e_j\) and hence \(\{e_j\}_{j\in \mathbb {N}}\) is an orthonormal basis of our Hilbert space. We also have that the \(\chi _{1}(T)(f_j)=(1/j)v_{j+1}\) are linearly independent and hence so are \(\chi _{1}(T)(e_j)\). It follows that the IQR iterates converge in the strong operator topology to the identity operator. However, we could equally take \(\omega =\{1,1/2\}\) in Theorem 3.9. Hence we have the curious case that \(\overline{\mathrm {span}\{\hat{q}_j\}_{j\in \mathbb {N}}}\subset \overline{\mathrm {span}\{\hat{v}_j\}_{j>1}}\) and we lose the eigenvalue 1 / 2.

The following corollary is entirely analogous to the finite-dimensional case.

Corollary 3.12

Suppose that the conditions of Theorem 3.9 hold with M finite. Suppose also that for \(j=1,\ldots ,N\) the vectors \(\{\chi _{\{\lambda _1,\ldots ,\lambda _j\}}(T)e_i\}_{i=1}^{\sum _{l\le j}m_l}\) are linearly independent. In the notation of Theorem 3.9, let \(\rho =\mathrm {sup}\{\left| z\right| :z\in \Psi \}\). For \(j<N\) define \(r_j=\max \{|\lambda _{k+1}/\lambda _k|:k\le j\}\) and for \(j=N\) define \(r_N=\max \{|\lambda _{k+1}/\lambda _k|,|\lambda _N/\rho |:k\le j\}\). We then have the following rates of convergence to the diagonal operator for \(i,j\le M\):

-

1.

\(\left| \langle Q_n^*TQ_n e_j, e_i\rangle \right| = O(r_k^n)\) as \(n\rightarrow \infty \) if \(i>j\) and k is minimal such that \(i\le \sum _{l\le k}m_l\),

-

2.

\(\left| \langle Q_n^*TQ_n e_i, e_i\rangle -\lambda _k\right| = O(r_k^n)\) as \(n\rightarrow \infty \) if k is minimal such that \(i\le \sum _{l\le k}m_l\).

Proof

The result follows from Theorem 3.9 applied successively to \(\omega _1,\omega _2,\ldots ,\omega _N\) where \(\omega _j=\{\lambda _k:k\le j\}\). In general, analogous results follows from Theorem 3.9 when M is infinite and with other linear independence conditions on \(\chi _{\omega '}(T)e_i\) with \(\omega '\subset \omega \) but the statements become less succinct. \(\square \)

In the finite-dimensional case and the case of distinct eigenvalues of the same magnitude, the QR algorithm applied to a normal matrix will ‘converge’ to a block diagonal matrix (without necessarily converging in each block). This can be extended to infinite dimensions by inductively using the following theorem which also extends to non-normal operators.

Theorem 3.13

(Block convergence theorem in infinite dimensions) Let \(T \in \mathcal {B}(l^2(\mathbb {N}))\) be an invertible operator (not necessarily normal) and suppose that there exists an orthogonal projection P of rank M (possibly infinite) such that both the ranges of P and of \(I-P\) are invariant under T. Suppose also that there exists \(\alpha>\beta >0\) such that

-

\(\Vert Tx\Vert \ge \alpha \Vert x\Vert \quad \forall x\in \mathrm {ran}(P)\),

-

\(\Vert Tx\Vert \le \beta \Vert x\Vert \quad \forall x\in \mathrm {ran}(I-P)\).

Let \(\{Q_n\}_{n\in \mathbb {N}}\) and \(\{R_n\}_{n\in \mathbb {N}}\) be Q- and R-sequences of T with respect to \(\{e_i\}.\) Then there exists a subset \(\{{\hat{e}}_j\}_{j=1}^M\subset \{e_i\}_{i\in \mathbb {N}}\) such that

-

(i)

For any finite \(\mu \le M\) we have \(\delta (\mathrm {span}\{Q_n{\hat{e}}_j\}_{j=1}^\mu ,\mathrm {ran}(P))= O(\beta ^n/\alpha ^n)\) as \(n\rightarrow \infty \). If M is finite this implies full convergence \({\hat{\delta }} (\mathrm {span}\{Q_n{\hat{e}}_j\}_{j=1}^M,\mathrm {ran}(P))= O(\beta ^n/\alpha ^n)\) as \(n\rightarrow \infty \).

-

(ii)

Every subsequence of \(\{Q_n^*TQ_n\}_{n\in \mathbb {N}}\) has a convergent subsequence \(\{Q_{n_k}^*TQ_{n_k}\}_{k\in \mathbb {N}}\) such that

$$\begin{aligned} Q_{n_k}^*TQ_{n_k} {\mathop {\longrightarrow }\limits ^{\text {WOT}}} \sum _{j=1}^M \xi _j \otimes {\hat{e}}_j \bigoplus \sum _{i \in \Theta } \zeta _i \otimes e_i, \end{aligned}$$as \(k \rightarrow \infty ,\) where

$$\begin{aligned} \Theta = \{j: e_j \notin \{{\hat{e}}_l\}_{l=1}^M\}, \quad \xi _j \in \overline{\mathrm {span}\{{\hat{e}}_l\}_{l=1}^M}, \quad \zeta _i \in \overline{\mathrm {span}\{e_l\}_{l\in \Theta }}. \end{aligned}$$

If \(\{Pe_l\}_{l=1}^M\) are linearly independent then we can take \({\hat{e}}_j=e_j\). Furthermore, if T has only finitely many non-zero entries in each column then we can replace WOT convergence by SOT convergence.

Remark 3.14

Theorem 3.13 essentially says that the IQR algorithm can compute the invariant subspace \(\mathrm {ran}(P)\) of such an operator if there is enough separation between T restricted to \(\mathrm {ran}(P)\) and \(\mathrm {ran}(I-P)\). In other words, provided the existence of a dominant invariant subspace.

Proof of Theorem 3.13

The main ideas of the proof of Theorem 3.13 have already been presented so we sketch the proof. We first define the vectors \(\{{\hat{e}}_j\}_{j=1}^M\) in a similar way to Definition 3.5 inductively by \({\hat{e}}_j=e_{p_j}\) where

Let \(r=\beta /\alpha <1\). We will prove inductively that

-

(a)

\({\hat{\delta }}(\mathrm {span}\{Q_n{\hat{e}}_j\}_{j=1}^\mu ,\mathrm {span}\{PQ_n{\hat{e}}_j\}_{j=1}^\mu )\le C_1(\mu )r^n\) for any finite \(\mu \le M\),

-

(b)

\(\Vert PQ_ne_j\Vert \le C_2(j)r^n\) for any \(j\in \Theta \),

for some constants \(C_1(\mu )\) and \(C_2(j)\). Suppose that this has been done. Part (i) of Theorem 3.13 now follows since \(\mathrm {span}\{PQ_n\hat{e}_j\}_{j=1}^\mu \subset \mathrm {ran}(P)\). We then argue as in the proof of Theorem 3.9 to gain

Then by studying the inner products \(\langle TQ_{n_k}e_j,Q_{n_k}e_i\rangle \) using the invariance of \(\mathrm {ran}(P)\), \(\mathrm {ran}(I-P)\) under T and from (b), part (ii) of Theorem 3.13 easily follows (note that (a) implies that \(\Vert (I-P)Q_n{\hat{e}}_j\Vert \le C_1(j)r^n\)). The final part of the theorem then follows from the same arguments in the proof of Theorem 3.9. Hence we only need to prove (a) and (b).

We first claim that

P commutes with T which is invertible and hence both of these spaces have dimension \(\mu \) by the construction of the \({\hat{e}}_j\). It follows that (3.22) implies

To show (3.22), let \(x_1^n,\ldots ,x_\mu ^n\) be an orthonormal basis for \(\mathrm {span}\{PT^n{\hat{e}}_j\}_{j=1}^\mu \) and let \(\xi =\sum _{j=1}^\mu \alpha _j x_j^n\) have norm at most 1. Now, we may choose coefficients \(\beta _{j,n}\) such that \( T^n\sum _{j=1}^\mu \beta _{j,n} x_j^n = \xi \) since \(T|_{\mathrm {ran}(P)}\) is invertible when viewed as an operator acting on \(\mathrm {ran}(P)\). By the assumptions on T we must have that

We may change basis from \(\{{\hat{e}}_j\}_{j=1}^\mu \) to \(\{\tilde{e}_j\}_{j=1}^\mu \) such that \(P{\tilde{e}}_j=x_j^n\). Form the vector

Then clearly by Hölder’s inequality

proving (3.22) and hence (3.23).

Note that the proof of Lemma 3.6 carries over (replacing the projection \(\chi _{\omega }(T)\) by P) to prove that

where s(m) is maximal with \(\{\hat{e}_j\}_{j=1}^{s(m)}\subset \{e_j\}_{j=1}^m\). It follows that

where we have used (2.6) to reach the second line and the fact that \(\mathrm {span}\{PT^n \hat{e}_j\}_{j=1}^{\mu }\subset \mathrm {span}\{PT^n e_j\}_{j=1}^{p_\mu }\) to reach the third line. Again, both spaces have dimension \(\mu \) so we have

With these arguments out of the way (these are the analogue of Proposition 3.4) we can now form our inductive argument, similar to the proof of Theorem 3.7. Suppose first that (a) holds for \(\mu \) (allowing \(\mu =0\) for the initial step) and let \(j\in \Theta \) have \(j<p_{\mu +1}\) (where \(p_{\mu +1}=\infty \) if \(\mu =M\)). From (a) for \(\mu \) and (3.24) we have that

for some \(v_{n}\) with \(\Vert v_{n}\Vert \le C_1(\mu )r^n\). Then we must have

Using (a) again, along with the fact that \(Q_n e_j\) is orthogonal to \(\{Q_n{\hat{e}}_i\}_{i=1}^\mu \), we must have \(\left| a_{n,i}\right| \le 2C_1(\mu )r^n\). It follows that we can take \(C_2(j)=(2\sqrt{\mu }+1)C_1(\mu )\) for \(j\in [p_{\mu }+1,\ldots ,p_{\mu +1})\) in (b). Now we use (3.25). Let \(\xi \in \mathrm {span}\{PQ_n{\hat{e}}_j\}_{j=1}^{\mu +1}\) have unit norm and assume that \(p_{\mu +1}<\infty \) (else there is nothing to prove since then \(\mu =M\)). Then there exists \(b_{n,j}\) and \(w_n\) such that

and \(\Vert w_n\Vert \le C_5(\mu +1)r^n\). Now let \(j\in \Theta \) with \(j<p_{\mu +1}\) then we must have

We have proven (b) for such j and hence we have \(\left| b_{n,j}\right| \le \big (C_2(j)+C_5(\mu +1)\big )r^n\). It follows that we can take

where the square root factor appears since the relevant spaces are \(\mu \)-dimensional. This completes the inductive step (the initial step is identical) and hence the proof of the theorem. \(\square \)

Theorem 3.13 can be made sharper (under a slightly stricter assumption on the linear independence of \(\{e_j\}_{j=1}^M\)) with the following theorem which includes the case that \(\mathrm {ran}(I-P)\) is not necessarily invariant.

Theorem 3.15

(Convergence to invariant subspace in infinite dimensions) Let \(T \in \mathcal {B}(l^2(\mathbb {N}))\) be an invertible operator (not necessarily normal) and suppose that there exists an orthogonal projection P of finite rank M such that the range of P is invariant under T. Suppose also that there exists \(\alpha>\beta >0\) such that

-

\(\Vert Tx\Vert \ge \alpha \Vert x\Vert \quad \forall x\in \mathrm {ran}(P)\),

-

\(\Vert (I-P)T(I-P)\Vert \le \beta \).

Under these conditions, there exists a canonical M-dimensional \(T^*-\)invariant subspace S and we let \({\tilde{P}}\) denote the orthogonal projection onto S (in the special case that \(\mathrm {ran}(I-P)\) is also T-invariant such as in Theorems 3.9 and 3.13, then \(S=\mathrm {ran}(P)\)). Suppose also that \(\{{\tilde{P}}e_j\}_{j=1}^M\) are linearly independent. Let \(\{Q_n\}_{n\in \mathbb {N}}\) and \(\{R_n\}_{n\in \mathbb {N}}\) be Q- and R-sequences of T with respect to \(\{e_i\}.\) Then

-

(i)

The subspace angle \(\phi (\mathrm {span}\{e_j\}_{j=1}^M,S)<\pi /2\) and we have

$$\begin{aligned}&{\hat{\delta }} (\mathrm {span}\{Q_n e_j\}_{j=1}^M,\mathrm {ran}(P))\nonumber \\&\quad \le \frac{\sin \big (\phi (\mathrm {span}\{e_j\}_{j=1}^M,\mathrm {ran}(P))\big )}{\cos \big (\phi (\mathrm {span}\{e_j\}_{j=1}^M,S)\big )}\frac{\beta ^n}{\alpha ^n}\Big (1+\frac{\Vert PT(I-P)\Vert }{\alpha -\beta }\Big ), \end{aligned}$$(3.26) -

(ii)

Every subsequence of \(\{Q_n^*TQ_n\}_{n\in \mathbb {N}}\) has a convergent subsequence \(\{Q_{n_k}^*TQ_{n_k}\}_{k\in \mathbb {N}}\) such that

$$\begin{aligned} Q_{n_k}^*TQ_{n_k} {\mathop {\longrightarrow }\limits ^{\text {WOT}}} \sum _{j=1}^M \xi _j \otimes e_j \bigoplus \sum _{i=M+1}^{\infty } \zeta _i \otimes e_i, \end{aligned}$$as \(k \rightarrow \infty ,\) where

$$\begin{aligned} \xi _j \in \overline{\mathrm {span}\{e_l\}_{l=1}^M}, \quad \zeta _i\in \mathcal {H}. \end{aligned}$$

Furthermore, if T has only finitely many non-zero entries in each column then we can replace WOT convergence by SOT convergence.

Remark 3.16

Theorem 3.15 says that the IQR algorithm can be used to approximate dominant invariant subspaces. In particular, we shall use the bound (3.26) to build a \(\Delta _1\) algorithm in Sect. 5. Note in the normal case that Theorem 3.9 is more precise, both in giving convergence of individual vectors to eigenvectors and in the less restrictive assumptions on spanning sets and M. In the normal case (and that of Theorem 3.13) we also have that the limit operator has a block diagonal form.

3.3 Proof of Theorem 3.15

In this section we will prove Theorem 3.15. The proof technique is different from those used above, and hence we have given it a separate section. Throughout, we will denote the ratio \(\beta /\alpha \) by r. Note that since M is finite, the bound \(\alpha \) implies that \(T|_{\mathrm {ran}(P)}:\mathrm {ran}(P)\rightarrow \mathrm {ran}(P)\) is invertible with \(\Vert T|_{\mathrm {ran}(P)}^{-1}\Vert \le 1/\alpha \). First, let Q denote a unitary change of basis matrix from \(\{e_j\}\) to \(\{{\tilde{e}}_j\}\) where \(\{{\tilde{e}}_j\}_{j=1}^M\) is a basis for \(\mathrm {ran}(P)\). Then as matrices with respect to the original basis we can write

where \(T_{11}\in \mathbb {C}^{M\times M}\) and \(T_{12}\) has M rows. Our assumptions imply that \(\Vert T_{11}^{-1}\Vert \le 1/\alpha \) and \(\Vert T_{22}\Vert \le \beta \). The next lemma shows that we can change the basis further to eliminate the sub-block \(T_{12}\). This is needed to apply a power iteration type argument.

Lemma 3.17

Define the linear function \(F:\mathcal {B}(l^2(\mathbb {N}),\mathbb {C}^M)\rightarrow \mathcal {B}(l^2(\mathbb {N}),\mathbb {C}^M)\) by

where we identify elements of \(\mathcal {B}(l^2(\mathbb {N}),\mathbb {C}^M)\) as matrices. Then we can define \(A\in \mathcal {B}(l^2(\mathbb {N}),\mathbb {C}^M)\) by \(A-F(A)=-T_{11}^{-1}T_{12}\). Furthermore, if we define

then B(A) has inverse \(B(-A)\) and

Proof

Our assumptions on T ensure that F is a contraction with \(\left\| F\right\| \le r<1\). Hence we can define A via the series

It is then straightforward to check \(A-F(A)=-T_{11}^{-1}T_{12}\), \(B(A)B(-A)=B(-A)B(A)=I\) and the identity (3.27). \(\square \)

Let

then we have the matrix identity

The canonical \(T^*-\)invariant subspace alluded to in Theorem 3.15 is then simply \(S=\mathrm {span}\{Ye_j\}_{j=1}^M\). The space is canonical since it is easily seen that it is unchanged if we use a different basis for \(\mathrm {ran}(P_1)\) and \(\mathrm {ran}(P_2)\) in the definition of Q.

Now let \(P_0=\left( \begin{matrix} e_1&e_2&\ldots&e_M \end{matrix} \right) \in \mathcal {B}(\mathbb {C}^M,l^2(\mathbb {N}))\) denote the matrix whose columns are the first M basis elements \(\{e_j\}_{j=1}^M\). Since the \(\{R_i\}\) are upper triangular, it is easy to see that

We will denote the (invertible) matrix \(P_0^*R_nP_0\in \mathbb {C}^{M\times M}\) by \(Z_n\). Now define

then we have the relation

But by Lemma 3.17 we have

Unwinding the definitions, this implies the matrix identities

Lemma 3.18

The following identity holds

Proof

Note that \(\mathrm {span}\{Q_n e_j\}_{j=1}^M=\mathrm {ran}(Q_nP_0)\) and \(\mathrm {ran}(P)=\mathrm {ran}(P_1)\). Since \(P_1P_1^*\) and \(Q_nP_0P_0^*Q_n^*\) are orthogonal projections, it follows that

But we have that \(\Vert P_0^*Q_n^*P_2\Vert =\Vert V_n^2\Vert \) and hence we are done if we can show \(\Vert P_0^*Q_n^*P_2\Vert =\Vert (I-P_0)^*Q_n^*P_1\Vert \). Consider the unitary matrix

Now let \(x\in \mathbb {C}^{M}\) be of unit norm, then \(\Vert U_{11}x\Vert ^2+\Vert U_{21}x\Vert ^2=1\). It follows that \(\Vert U_{21}\Vert ^2=1-\sigma _0(U_{11})^2\), where \(\sigma _0\) denotes the smallest singular value. Applying the same argument to \(U^*\) we see that \(\Vert U_{12}\Vert ^2=1-\sigma _0(U_{11})^2=\Vert U_{21}\Vert ^2\), completing the proof. \(\square \)

Lemma 3.19

The matrix \((V_0^1-AV_0^2)\) is invertible with

Proof

First note that since \(\{{\tilde{P}}e_j\}_{j=1}^M\) are linearly independent, we must have \(\phi (\mathrm {span}\{e_j\}_{j=1}^M,S)<\pi /2\) and hence the bound in (3.31) is finite. Let \(W=(P_1-P_2A^*)(I+AA^*)^{-1/2}\in \mathcal {B}(\mathbb {C}^M,l^2(\mathbb {N}))\). By considering \(W^*W=I\in \mathbb {C}^{M\times M}\), we see that the columns of W are orthonormal. In fact, expanding Y we have

and hence the columns of W are a basis for the subspace S. Arguing as in the proof of Lemma 3.18, we have that

This implies that \(W^*P_0\) is invertible with

We also have the identity

Since \((I+AA^*)^{-1/2}\) has norm at most 1, we see that \((V_0^1-AV_0^2)\) is invertible and (3.31) holds. \(\square \)

Proof of Theorem 3.15

Using Lemma 3.19 and the matrix identities (3.28) and (3.29), we can write

Using (3.30) and (3.31), this implies

It is clear by summing a geometric series that

It follows that \(\Vert V_n^1-AV_n^2\Vert \le 1+\Vert PT(I-P)\Vert /(\alpha -\beta )\). Substituting this into (3.32) proves part (i) of the theorem.

Next we argue that if \(i>M\) then \(\Vert PQ_ne_i\Vert \rightarrow 0\) as \(n\rightarrow \infty \). We have that

with \(\Vert v_n\Vert \rightarrow 0\) by part (i). Note that we then have

with \(\{\epsilon _{j,n}\}\) null. But again by (i) we have that \(PQ_ne_j\) approaches \(\mathrm {span}\{Q_ne_k\}_{k=1}^M\) which is orthogonal to \(Q_ne_i\) and hence \(\{\alpha _{j,n}\}\) is null. The proof of part (ii) now follows the same argument as in the proof of part (i) of Theorem 3.9 and of the final part of Theorem 3.13. The key property being that if \(j\le M\) and \(i>M\) then \(\langle Q_n^*TQ_ne_j,e_i\rangle \rightarrow 0\) due to the invariance of \(\mathrm {ran}(P)\) under T. Note that it does not necessarily follow (as is easily seen by considering upper triangular T) that \(\langle Q_n^*TQ_ne_i,e_j\rangle \rightarrow 0\) for such i, j. \(\square \)

4 The IQR algorithm can be computed

The previous section gives a theoretical justification for why the IQR algorithm may work, but we are faced with the possibly unpleasant problem of how to compute with infinite data structures on a computer. Fortunately, there is a way to overcome such a problem. The key is to impose some structural requirements on the infinite matrix.

4.1 Quasi-banded subdiagonals

Definition 4.1

Let T be an infinite matrix acting as a bounded operator on \(l^2(\mathbb {N})\) with basis \(\{e_j\}_{j\in \mathbb {N}}\). For \(f:\mathbb {N}\rightarrow \mathbb {N}\) non-decreasing with \(f(n)\ge n\) we say that T has quasi-banded subdiagonals with respect to f if \(\langle Te_j,e_i\rangle = 0\) when \(i > f(j).\)

This is the class of infinite matrices with a finite number of non-zero elements in each column (and not necessarily in each row) which is captured by the function f. It is for this class that the computation of the IQR algorithm is feasible on a finite machine. For this class of operators one can actually compute (without any approximation or any extra discretisation) the matrix elements of the n-th iteration of the IQR algorithm as if it was done on an infinite computer (meaning the computation collapses to a finite one). The following result of independent interest is needed in the proof and generalises the well-known fact in finite dimensions that the QR algorithm preserves bandwidth (see [57] for a good discussion of the tridiagonal case).

Proposition 4.2

Let \(T \in \mathcal {B}(l^2(\mathbb {N}))\) and let \(T_n\) be the n-th element in the IQR iteration, such that \( T_n = Q^*_n \cdots Q^*_1 T Q_1 \cdots Q_n, \) where

and \(U^j_l\) is a Householder transformation. If T has quasi-banded subdiagonals with respect to f then so does \(T_n\).

Proof

By induction, it is enough to prove the result for \(n=1\). From the construction of the Householder reflections \(U_m^1=P_{m-1}\oplus S_m\), the chosen \(\eta _m\) (see Theorem 2.2) have

Using the fact that f is increasing, it follows that each \(U^1_m\) has quasi-banded subdiagonals with respect to f, as does the product \(U^1_1\cdots U^1_m\). It follows that \(Q_1\) must have quasi-banded subdiagonals with respect to f and hence so does \(T_1=R_1Q_1\) since \(R_1\) is upper triangular. \(\square \)

Theorem 4.3

Let \(T \in \mathcal {B}(l^2(\mathbb {N}))\) have quasi-banded subdiagonals with respect to f and let \(T_n\) be the n-th element in the IQR iteration, i.e. \( T_n = Q^*_n \cdots Q^*_1 T Q_1 \cdots Q_n, \) where

and \(U^j_l\) is a Householder transformation (the superscript is not a power, but an index). Let \(P_m\) be the usual projection onto \(\mathrm {span}\{e_j\}_{j=1}^m\) and denote the a-fold iteration of f by \(\underbrace{f\circ {}f\circ {}\ldots \circ {}f}_{a\text { times}}=f_a\). Then

Remark 4.4

What Theorem 4.3 says is that to compute the finite section of size m of the n-th iteration of the IQR algorithm (i.e. \(P_mT_nP_m\)), one only needs information from the finite section of size \(f_{n}(m)\) (i.e. \(P_{f_{n}(m)} T P_{f_{n}(m)}\)) since the relevant Householder reflections can be computed from this information. In other words, the IQR algorithm can be computed.

Proof of Theorem 4.3

By induction it is enough to prove that

To see why this is true, note that by the assumption that T has quasi-banded subdiagonals with respect to f, Proposition 4.2 shows that \(T_n\) has quasi-banded subdiagonals with respect to f for all \(n \in \mathbb {N}.\) Thus, it follows from the construction in the proof of Theorem 2.2 that each \(U^j_l\) is of the form

where \(I_{l,j,1}\) denotes the identity on \(P_{l-1}\mathcal {H}\), \(I_{l,j,2}\) denotes the identity on \(\mathrm {span}\{e_k:l\le k\le f(l)\}\), \(I_{l,j,3}\) denotes the identity on \(P_{f(l)}^{\perp }\mathcal {H}\) and \(\xi _{l,j}\in \mathrm {span}\{e_k:l\le k\le f(l)\}\). Since \(P_m\) is compact, it then follows that

\(\square \)

Remark 4.5

This result allows us to implement the IQR algorithm because each \(U^j_l\) only affects finitely many columns or rows of A if multiplied either on the left or the right. In computer science, it is often referred to as “Lazy evaluation” when one computes with infinite data structures, but defers the use of the information until needed. A simple implementation is shown in the appendix for the case that the matrix has k subdiagonals (i.e. we have \(f(n)=n+k\)).

The next question is how restrictive is the assumption in Definition 4.1? In particular, suppose that \(T \in \mathcal {B}(\mathcal {H})\) and that \(\xi \in \mathcal {H}\) is a cyclic vector for T (i.e. \(\mathrm {span}\{\xi , T\xi , T^2\xi , \ldots \}\) is dense in \(\mathcal {H}\)). Then by applying the Gram-Schmidt procedure to \(\{\xi , T\xi , T^2\xi , \ldots \}\) we obtain an orthonormal basis \(\{\eta _1, \eta _2, \eta _3, \ldots \}\) for \(\mathcal {H}\) such that the matrix representation of T with respect to \(\{\eta _1, \eta _2, \eta _3, \ldots \}\) is upper Hessenberg, and thus the matrix representation has only one subdiagonal. The question is therefore about the existence of a cyclic vector. Note that if T does not have invariant subspaces then every vector \(\xi \in \mathcal {B}(\mathcal {H})\) is a cyclic vector. Now what happens if \(\xi \) is not cyclic for T? We may still form \(\{\eta _1, \eta _2, \eta _3, \ldots \}\) as above, however, \(\mathcal {H}_1 = \overline{\mathrm {span}\{\eta _1, \eta _2, \eta _3, \ldots \}}\) is now an invariant subspace for T and \(\mathcal {H}_1 \ne \mathcal {H}.\) We may still form a matrix representation of T with respect to \(\{\eta _1, \eta _2, \eta _3, \ldots \}\), but this will now be a matrix representation of \(T|_{\mathcal {H}_1}.\) Obviously, we can have that \(\sigma (T|_{\mathcal {H}_1}) \subsetneq \sigma (T)\).

However, the following example shows that the class of matrices for which we can compute the IQR algorithm covers a wide number of applications. In particular, it includes all finite interaction Hamiltonians on graphs. Such operators play a prominent role in solid state physics [48, 51] describing propagation of waves and spin waves as well as encompassing Jacobi operators studied in many physical models and integrable lattices [71].

Example 4.6

Consider a connected, undirected graph G, such that each vertex degree is finite and the set of vertices V(G) is countably infinite. Consider the set of all bounded operators A on \(l^2(V(G))\cong l^2(\mathbb {N})\) such that the set \(S(v):=\{w\in V:\left\langle w,Av\right\rangle \ne 0\}\) is finite for any \(v\in V\). Suppose our enumeration of the vertices obeys the following pattern. \(e_1\)’s neighbours (including itself) are \(S_1=\{e_1,e_2,\ldots ,e_{q_1}\}\) for some finite \(q_1\). The set of neighbours of these vertices is \(S_2=\{e_1,\ldots ,e_{q_2}\}\) for some finite \(q_2\) where we continue the enumeration of \(S_1\) and this process continues inductively enumerating \(S_m\). If we know S(v) for all \(v\in V\) then we can find an \(f:\mathbb {N}\rightarrow \mathbb {N}\) such that \(A_{j,m}=0\) if \(|j|>f(m)\). We simply choose \(f(n)=q_{r_n}\) where \(r_n\) is minimal such that \(\cup _{j\le n}S(e_j)\subset S_{r_n}\).

4.2 Invertible operators

More generally, given an invertible operator T with information on how its columns decay at infinity, we can compute finite sections of the IQR iterates with error control. For computing spectral properties, we can assume, by shifting \(T\rightarrow T+\lambda I\) then translating by \(-\lambda \) back, that the operator we are interested in is invertible, hence the invertibility criterion is not that restrictive. Throughout, we will use the following lemma which says that for invertible operators, the QR decomposition is essentially unique.

Lemma 4.7

Let T be an invertible operator (viewed as a matrix acting on \(l^2(\mathbb {N})\)), then there exists a unique decomposition \(T=QR\) with Q unitary and R invertible, upper triangular such that \(R_{ii}\in \mathbb {R}_{>0}\). Furthermore, any other “QR” decomposition \(T=Q'R'\) has a diagonal matrix \(D=\mathrm {Diag}(t_1,t_2,\ldots )\) such that \(\left| t_i\right| =1\) and \(Q=Q'D\). In other words, the QR decomposition is unique up to phase choices.

Proof

Consider the QR decomposition already discussed in this paper, \(T=Q''R''\). T is invertible, and hence \(Q''\) is a surjective isometry so is unitary. Hence \(R''=Q''^*T\) is invertible. Being upper triangular, it follows that \(R''_{ii}\ne 0\) for all i. Choose \(t_i\in \mathbb {T}\) such that \(t_iR''_{ii}\in \mathbb {R}_{>0}\) and set \(D=\mathrm {Diag}(t_1,t_2,\ldots )\). Letting \(Q=Q''D^*\) and \(R=DR''\) we clearly have the decomposition as claimed.

Now suppose that \(T=Q'R'\) then we can write \(Q=Q'R'R^{-1}\). It follows that \(R'R^{-1}\) is a unitary upper triangular matrix and hence must be of the form \(D=\mathrm {Diag}(t_1,t_2,\ldots )\) with \(\left| t_i\right| =1\). \(\square \)

Another way to see this result is to note that the columns of Q are obtained by applying the Gram–Schmidt procedure to the columns of T. The restriction that \(R_{ii}\in \mathbb {R}_{>0}\) can also be incorporated into Theorem 4.3. Theorem 4.3 (in this subcase of invertibility) is then a consequence of the fact that if T has quasi-banded subdiagonals with respect to f then

and the relations (2.5)—we can apply Gram-Schmidt (or a more stable modified version) to the columns of \(P_{f_n(m)}TP_{f_n(m)}\) and truncate the resulting matrix.

Assume that given \(T\in \mathcal {B}(l^2(\mathbb {N}))\) invertible (not necessarily with quasi-banded subdiagonals), we can evaluate an increasing family of increasing functions \(g^j:\mathbb {N}\rightarrow \mathbb {N}\) such that defining the matrix \(T_{(j)}\) with columns \(\{P_{g^j(n)}Te_n\}\) we have that \(T_{(j)}\) is invertible and