Abstract

We study nested variational inequalities, which are variational inequalities whose feasible set is the solution set of another variational inequality. We present a projected averaging Tikhonov algorithm requiring the weakest conditions in the literature to guarantee the convergence to solutions of the nested variational inequality. Specifically, we only need monotonicity of the upper- and the lower-level variational inequalities. Also, we provide the first complexity analysis for nested variational inequalities considering optimality of both the upper- and lower-level.

1 Introduction

We focus on solving (upper-level) variational inequalities whose feasible set is given by the solution set of another (lower-level) variational inequality. These problems are commonly referred to as nested variational inequalities and they represent a flexible modelling tool when it comes to solving, for instance, problems arising in finance (see, e.g., the most recent Lampariello et al. (2021) for an application in the multi-portfolio selection context) as well as several other fields (see, e.g., Facchinei et al. 2014; Scutari et al. 2012 for resource allocation problems in communications and networking).

The literature on nested VIs is still in its infancy compared with that on the bilevel instance of hierarchical optimization or, more generally, on bilevel structures as in Dempe (2002), Lampariello and Sagratella (2017, 2020) and Lampariello et al. (2019). Two solution methods are most widely adopted in the field-related literature: hybrid-like techniques (see, e.g., Lu et al. 2009; Marino and Xu 2011; Yamada 2001) and Tikhonov-type schemes (see, e.g., Lampariello et al. (2020) for the latest developments and Kalashnikov and Kalashinikova (1996) for some earlier developments, as well as Facchinei et al. (2014) and the references therein). It should be pointed out that hybrid-like procedures very often require particularly strong assumptions (e.g., co-coercivity of the lower-level map, as in Lu et al. (2009), Marino and Xu (2011) and Yamada (2001)) in order to ensure convergence or to work properly at all. Hence, we rely on the Tikhonov paradigm, drawing from the general schemes proposed in Facchinei et al. (2014) and Lampariello et al. (2020), but asking for less stringent assumptions and combining the Tikhonov approach with a new averaging procedure.

We widen the scope and expand the applicability of nested variational inequalities by showing for the first time in related literature that solutions can be provably computed in the more general framework of simply monotone upper- and lower-level variational inequalities. Specifically, in Facchinei et al. (2014) and Lampariello et al. (2020), where as far as we are aware the most advanced results are obtained, the upper-level map is required to be monotone plus. Relying on a combination of a Tikhonov approach with an averaging procedure, the algorithm we propose is shown to converge provably to a solution of a nested variational inequality where the upper-level map is required to be just monotone.

We also obtain complexity results for our method. Together with Lampariello et al. (2020), this analysis is one of only two complexity studies in the literature on nested variational inequalities, and it is the first one in the field dealing with upper-level optimality.

2 Problem definition and motivation

Let us consider the nested variational inequality \(\text {VI}\big (G, \text {SOL}(F,Y)\big )\), where \(G: {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}^n\) is the upper-level map, and \(\text {SOL}(F,Y)\) is the solution set of the lower-level VI(F, Y). We recall that, given a subset \(Y \subseteq {\mathbb {R}}^n\) and a mapping \(F: {\mathbb {R}}^{n} \rightarrow \mathbb R^n\), the variational inequality VI(F, Y) is the problem of computing a vector \(x\in {\mathbb {R}}^n\) such that

$$\begin{aligned} x \in Y, \qquad F(x)^\top (y-x) \ge 0 \quad \forall \, y \in Y. \end{aligned} \qquad (1)$$

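In other words, \(\text {VI}\big (G, \text {SOL}(F,Y)\big )\) is the problem of finding \(x \in {\mathbb {R}}^n\) that solves

$$\begin{aligned} x \in \text {SOL}(F,Y), \qquad G(x)^\top (y-x) \ge 0 \quad \forall \, y \in \text {SOL}(F,Y). \end{aligned} \qquad (2)$$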

As is clear from (2), the feasible set of \(\text {VI}\big (G, \text {SOL}(F,Y)\big )\) is implicitly defined as the solution set of the lower-level VI(F, Y). The nested variational inequality (2) we consider has a purely hierarchical structure in that the lower-level problem (1) is nonparametric with respect to the upper-level variables, unlike the more general bilevel structures presented in Lampariello and Sagratella (2020). Under mild conditions, VIs equivalently reformulate Nash equilibrium problems (NEPs) so that in turn, by means of structure (2), we are able to model well-known instances of bilevel optimization and address multi-follower games.

We introduce the following blanket assumptions which are widely adopted in the literature of solution methods for variational inequalities:

- (A1) the upper-level map G is Lipschitz continuous with constant \(L_{G}\) and monotone on Y;

- (A2) the lower-level map F is Lipschitz continuous with constant \(L_F\) and monotone on Y;

- (A3) the lower-level feasible set Y is nonempty, convex and compact.

Due to (A2) and (A3), SOL(F, Y) is a nonempty, convex and compact set that is not necessarily a singleton, see e.g. Facchinei and Pang (2003, Section 2.3). As a consequence, the feasible set of the nested variational inequality (2) may contain more than one point. Moreover, since (A1) only requires G to be monotone, the solution set of the nested variational inequality (2) can also include multiple points.

Notice that assumption (A1) on the upper-level map G is much less demanding than the one required in Facchinei et al. (2014) and Lampariello et al. (2020). Specifically, here we assume G to be only monotone, while in Facchinei et al. (2014) and Lampariello et al. (2020) it must be monotone plus.

For the sake of completeness, we recall that a mapping \(G: Y \subseteq {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}^n\) is said to be monotone plus on Y if both the following conditions hold:

- 1. G is monotone on Y, i.e. \((G(x)-G(y))^{\scriptscriptstyle T}(x-y) \ge 0 \quad \forall \; x,y \in Y\);

- 2. \((x-y)^{\scriptscriptstyle T}(G(x)-G(y))=0 \Rightarrow G(x)=G(y) \quad \forall \; x,y \in Y\).

Consequently, we can now dispense with the monotonicity plus assumption on the operator G, which is a more stringent condition than plain monotonicity, and ask for G to be simply monotone. Indeed, whenever the upper-level map G is nonsymmetric, requiring G to be monotone plus is “slightly less” than assuming G to be strongly monotone (see, e.g. Bigi et al. 2021). In fact, the main objective of this work is to define, for the first time in the field-related literature, an algorithm (Algorithm 1) which is able to compute solutions of the monotone nested variational inequality (2) under the weaker assumptions (A1)-(A3), see the forthcoming Theorem 1.
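To make the gap between monotonicity and monotonicity plus concrete, the following minimal sketch checks both conditions on a pair of points for a skew-symmetric linear map. The map `G` and the points `x`, `y` are hypothetical choices made here for illustration only; they are not taken from the paper.

```python
# Illustrative check: a skew-symmetric linear map is monotone but not monotone plus.

def G(x):
    # Hypothetical map G(x) = M x with M = [[0, 1], [-1, 0]] (a 90-degree rotation).
    return (x[1], -x[0])

def sub(u, v):
    return (u[0] - v[0], u[1] - v[1])

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

x, y = (1.0, 0.0), (0.0, 0.5)
d = sub(x, y)            # x - y
gd = sub(G(x), G(y))     # G(x) - G(y)

inner = dot(gd, d)                 # (G(x) - G(y))^T (x - y)
monotone_on_pair = inner >= 0      # condition 1 holds, here with equality
same_image = gd == (0.0, 0.0)      # condition 2 would require this to be True

print(inner, monotone_on_pair, same_image)
```

For this map the inner product vanishes for every pair of points, while the images differ whenever the points differ, so condition 2 fails although condition 1 holds.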

In addition, we study complexity properties of Algorithm 1 in detail, see Theorems 2 and 3. We highlight that all steps in Algorithm 1 can be readily implemented and no nontrivial computations are required, see e.g. the numerical illustration in Sect. 5.

Summarizing,

- we show that the algorithm we propose is globally (subsequentially) convergent to solutions of monotone nested variational inequalities under the weakest conditions in the literature,

- we provide the first complexity analysis for nested variational inequalities considering optimality of both the upper- and lower-level [in Lampariello et al. (2020), instead, only lower-level optimality is contemplated].

3 A projected averaging Tikhonov algorithm

For the sake of notation, let us introduce the following operator:

$$\begin{aligned} \Phi _\tau (x) \triangleq F(x) + \frac{1}{\tau } G(x), \end{aligned}$$

which is the classical operator used to define subproblems in Tikhonov-like methods. For any \(\tau \in {\mathbb {R}}_{++}\), by assumptions (A1) and (A2), \(\Phi _\tau \) is monotone and Lipschitz continuous with constant \(L_\Phi \triangleq L_{F} + L_{G}\) on Y. Moreover, the following finite quantities are useful in the forthcoming analysis:

For the sake of clarity, let us recall that, by definition, the Euclidean projection \(P_Y(x)\) of a vector \(x \in {\mathbb {R}}^n\) onto a closed convex subset \(Y \subseteq {\mathbb {R}}^n\) is the unique solution of the strongly convex (in y) problem

$$\begin{aligned} \min _{y \in Y} \; \frac{1}{2} \Vert y - x\Vert _2^2. \end{aligned}$$

The solution of the latter problem is a unique vector \({\bar{y}} \in Y\) that is closest to x in the Euclidean norm [see, e.g. Facchinei and Pang (2003, Th.1.5.5) for an exhaustive overview on the Euclidean projector and its properties].

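In our analysis we rely on approximate solutions of VIs. Specifically, we say that \(x \in K\) approximately solves VI\((\Psi ,K)\) (with \(\Psi \) continuous and K convex and compact) if

$$\begin{aligned} \Psi (x)^\top (y-x) \ge - \varepsilon \quad \forall \, y \in K, \end{aligned} \qquad (3)$$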

where \(\varepsilon \ge 0\). For example, when VI\((\Psi ,K)\) defines the first-order optimality conditions of a convex problem, relation (3) guarantees that the problem is solved up to accuracy \(\varepsilon \). Relation (3), in view of the compactness of K, is equivalent to

$$\begin{aligned} \min _{y \in K} \; \Psi (x)^\top (y-x) \ge - \varepsilon . \end{aligned} \qquad (4)$$

We remark that \(\Psi (x)^\top (y-x)\) is linear in y; moreover, if K is polyhedral (as, e.g., in the multi-portfolio selection context) computing \(\min _{y \in K} \; \Psi (x)^\top (y-x)\) amounts to solving a linear optimization problem. In any event, we assume this computation to be easy to do in practice.
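As a small illustration of how cheap this computation can be, the sketch below evaluates \(\min _{y \in K} \Psi (x)^\top (y-x)\) when K is a box, a special polyhedral set for which the linear problem is solved componentwise. The function name `gap_on_box`, the map `Psi` and all numerical data are hypothetical and serve only as an example.

```python
def gap_on_box(psi_x, x, lower, upper):
    """Return min over the box [lower, upper] of psi_x^T (y - x)."""
    value = 0.0
    for p, xi, lo, hi in zip(psi_x, x, lower, upper):
        # A linear objective over a box is minimized at a vertex:
        # take the lower bound where the coefficient is positive, the upper otherwise.
        y_i = lo if p > 0 else hi
        value += p * (y_i - xi)
    return value

# Hypothetical data: Psi(x) = x - target is the gradient of 0.5 * ||x - target||^2.
target = [0.2, 0.9]
lower, upper = [0.0, 0.0], [1.0, 1.0]

def Psi(x):
    return [xi - ti for xi, ti in zip(x, target)]

x_exact = [0.2, 0.9]   # minimizer of the convex problem, hence a solution of the VI
x_rough = [0.3, 0.8]   # a nearby point

gap_exact = gap_on_box(Psi(x_exact), x_exact, lower, upper)
gap_rough = gap_on_box(Psi(x_rough), x_rough, lower, upper)
print(gap_exact, gap_rough)
```

The first value is zero, while the second is negative; its absolute value is the smallest \(\varepsilon \) for which the rough point satisfies the approximate condition.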

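With the following result we relate approximate solutions of the VI subproblem

$$\begin{aligned} \Phi _\tau (x)^\top (y-x) \ge - \varepsilon _{\text {sub}} \quad \forall \, y \in Y, \end{aligned} \qquad (5)$$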

where \(\varepsilon _{\text {sub}} \ge 0\), with approximate solutions of problem (2).

Proposition 1

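Assume conditions (A1)-(A3) to hold, and let \(x \in Y\) be a solution of the VI subproblem (5) with \(\tau > 0\) and \(\varepsilon _{\text {sub}} \ge 0\). It holds that

$$\begin{aligned} G(x)^\top (y-x) \ge - \varepsilon _{\text {up}} \quad \forall \, y \in \text {SOL}(F,Y), \end{aligned} \qquad (6)$$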

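with \(\varepsilon _{\text {up}} \ge \varepsilon _{\text {sub}} \tau \), and

$$\begin{aligned} F(x)^\top (y-x) \ge - \varepsilon _{\text {low}} \quad \forall \, y \in Y, \end{aligned} \qquad (7)$$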

with \(\varepsilon _{\text {low}} \ge \varepsilon _{\text {sub}} + \frac{1}{\tau } H D\).

Proof

We have for all \(y \in \text {SOL}(F,Y)\):

where the first inequality is due to (5), the second one comes from the monotonicity of F, and the last one is true because \(y \in \text {SOL}(F,Y)\) and \(x \in Y\), so that \(F(y)^\top (x-y) \ge 0\). That is, (6) holds.

Moreover, we have for all \(y \in Y\):

where the inequality is due to (5). Therefore we get (7). \(\square \)
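The two chains of inequalities used in this proof can be sketched as follows. This is a reconstruction under stated assumptions rather than a verbatim formula: it assumes \(\Phi _\tau = F + \frac{1}{\tau } G\), that H is an upper bound on \(\Vert G(x)\Vert _2\) over Y, and that D is an upper bound on \(\Vert y-x\Vert _2\) over Y. For every \(y \in \text {SOL}(F,Y)\),

$$\begin{aligned} G(x)^\top (y-x)&= \tau \, \Phi _\tau (x)^\top (y-x) - \tau \, F(x)^\top (y-x) \\&\ge - \tau \varepsilon _{\text {sub}} + \tau \, F(x)^\top (x-y) \\&\ge - \tau \varepsilon _{\text {sub}} + \tau \, F(y)^\top (x-y) \\&\ge - \tau \varepsilon _{\text {sub}}, \end{aligned}$$

and, for every \(y \in Y\),

$$\begin{aligned} F(x)^\top (y-x)&= \Phi _\tau (x)^\top (y-x) - \frac{1}{\tau } G(x)^\top (y-x) \\&\ge - \varepsilon _{\text {sub}} - \frac{1}{\tau } \Vert G(x)\Vert _2 \Vert y-x\Vert _2 \ge - \varepsilon _{\text {sub}} - \frac{1}{\tau } H D. \end{aligned}$$

The first chain makes the trade-off explicit: the upper-level error scales with \(\tau \varepsilon _{\text {sub}}\), while the lower-level error decreases like \(1/\tau \).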

Proposition 1 suggests a way to solve the hierarchical problem (2) with a good degree of accuracy: solve the VI subproblem (5) with a large value of \(\tau \) and a sufficiently small \(\varepsilon _{\text {sub}}\), so that \(\varepsilon _{\text {sub}} \tau \) is small enough. Following this path, we propose a Projected Averaging Tikhonov Algorithm (PATA), see Algorithm 1, to compute solutions of problem (2).

Some comments about PATA are in order. Index i denotes the outer iterations that occur when the condition in step (S.4) is verified, and they correspond to solutions \(w^{i+1}\) of the VI subproblems (5) with \(\varepsilon _{\text {sub}} = {\bar{\varepsilon }}^i\) and \(\tau = {\bar{\tau }}^i\). The sequence \(\{y^k\}\) is obtained by making classical projection steps with stepsizes \(\gamma ^k\), see step (S.2). The sequence \(\{z^k\}\) consists of the inner iterations needed to compute a solution of the VI subproblem (5), and it is obtained by performing a weighted average on the points \(y^j\), see step (S.3). Index l is included in order to let the sequence of the stepsizes \(\{\gamma ^k\}\) restart at every outer iteration and to consider only the points \(y^j\) belonging to the current subproblem to compute \(z^{k+1}\).

We remark that the condition in step (S.4) only requires the solution of an optimization problem with a linear objective function over the convex set Y (see the discussion about inexact solutions of VIs below relation (4)). In Sect. 5 we give a practical implementation of PATA.
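The comments above can be turned into a short executable sketch. The code below is not Algorithm 1 itself but an interpretation of the textual description of steps (S.2)-(S.4): the names `pata`, `tau_of`, `eps_of`, `step_of`, `project` and `lin_min` are hypothetical, the restart of the average from the current point y is an assumption, and the test maps F and G (two scaled rotations on the unit ball) are chosen here only because they are monotone but not monotone plus.

```python
import math

def pata(F, G, project, lin_min, y0, tau_of, eps_of, step_of, k_max):
    """Sketch of a projected averaging Tikhonov loop; returns outer iterates and last y."""
    y, z = list(y0), list(y0)
    i, l = 0, 0
    gamma_sum = step_of(0)              # weight already assigned to y^l
    outer = []
    for k in range(k_max):
        tau, eps = tau_of(i), eps_of(i)

        def phi(v):
            # Tikhonov operator F + (1/tau) G (assumed form)
            return [f + g / tau for f, g in zip(F(v), G(v))]

        # (S.2) projection step with the restarted step size gamma^{k-l}
        gamma = step_of(k - l)
        y = project([yi - gamma * p for yi, p in zip(y, phi(y))])
        # (S.3) weighted running average of y^l, ..., y^{k+1}
        w_next = step_of(k + 1 - l)
        z = [(zi * gamma_sum + w_next * yi) / (gamma_sum + w_next)
             for zi, yi in zip(z, y)]
        gamma_sum += w_next
        # (S.4) gap test: min over Y of phi(z)^T (u - z) >= -eps
        pz = phi(z)
        u = lin_min(pz)
        gap = sum(p * (ui - zi) for p, ui, zi in zip(pz, u, z))
        if gap >= -eps:
            outer.append(list(z))       # w^{i+1} = z^{k+1}
            i, l = i + 1, k + 1
            z, gamma_sum = list(y), step_of(0)   # restart the average (assumption)
    return outer, y

def ball_project(v):
    n = math.hypot(*v)
    return list(v) if n <= 1.0 else [vi / n for vi in v]

def ball_lin_min(c):
    n = math.hypot(*c)
    return [0.0] * len(c) if n == 0.0 else [-ci / n for ci in c]

# Hypothetical test problem on the unit ball of R^2 (not the paper's data).
F = lambda x: [x[1], -x[0]]
G = lambda x: [-0.5 * x[1], 0.5 * x[0]]

outer, y_last = pata(
    F, G, ball_project, ball_lin_min, y0=[1.0, 0.0],
    tau_of=lambda i: max(1, i),
    eps_of=lambda i: 1.0 / max(1, i) ** 2,
    step_of=lambda j: 1.0 if j == 0 else min(1.0, 0.5 / math.sqrt(j)),
    k_max=5000,
)
print(len(outer), math.hypot(*outer[-1]), math.hypot(*y_last))
```

On this toy instance the projected points y stay on the unit circle, whereas the accepted averaged points move towards the origin, which is the only solution of the toy problem.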

In the following section we show that Proposition 1 can be used to prove that PATA effectively computes solutions of problem (2).

4 Main convergence properties

First of all we deal with convergence properties of PATA.

Theorem 1

Assume conditions (A1)-(A3) to hold, and let conditions

hold with \(\beta > 1\) and \(c > 0\). Every limit point of the sequence \(\{w^i\}\) generated by PATA is a solution of problem (2).

Proof

First of all we show that \(i \rightarrow \infty \). Assume by contradiction that this is not true, therefore there exists an index \({\bar{k}}\) such that the condition in step (S.4) is violated for every \(k \ge {\bar{k}}\), and either \({\bar{k}} = 0\) or the condition in step (S.4) is satisfied at the iteration \({\bar{k}} - 1\). We denote \({\bar{\tau }} = \tau ^{{\bar{k}}}\), and observe that \(\tau ^k = {\bar{\tau }}\) for every \(k \ge {\bar{k}}\).

For every \(j \in [{\bar{k}}, k]\), and for any \(v \in Y\), we have

and, in turn,

Summing, we get

which implies

where the last inequality is due to the monotonicity of \(\Phi _{{\bar{\tau }}}\). Denoting by \(z \in Y\) any limit point of the sequence \(\{z^k\}\) and passing to the limit in the latter relation along a suitable subsequence, the following inequality holds:

because \(\sum _{j={\bar{k}}}^{\infty } \gamma ^{j-{\bar{k}}} = +\infty \) and \(\left( \sum _{j={\bar{k}}}^{\infty } (\gamma ^{j-{\bar{k}}})^2\right) /\left( \sum _{j={\bar{k}}}^{\infty } \gamma ^{j-{\bar{k}}}\right) = 0\), and then z is a solution of the dual problem

Hence, the sequence \(\{z^k\}\) converges to a solution of VI\((\Phi _{{\bar{\tau }}}, Y)\), see e.g. Facchinei and Pang (2003, Theorem 2.3.5), in contradiction to \(\min _{y \in Y} \Phi _{{\bar{\tau }}}(z^{k+1})^\top (y - z^{k+1}) < - \varepsilon ^k = - \varepsilon ^{{\bar{k}}}\) for every \(k \ge {\bar{k}}\).

Therefore the algorithm produces an infinite sequence \(\{w^i\}\) such that \(w^{i+1} \in Y\) and

that is (5) holds at \(w^{i+1}\) with \(\varepsilon _{\text {sub}} = \frac{c}{({\bar{\tau }}^i)^\beta }\). By Proposition 1, specifically from (6) and (7), we obtain

and

Taking the limit \(i \rightarrow \infty \), and recalling that G and F are continuous and \(\beta > 1\), we get the desired convergence property. \(\square \)

Conditions (8) for the sequence of stepsizes \(\{\gamma ^k\}\) are satisfied, e.g., if we choose

with \(a > 0\) and \(\alpha \in (0,1]\), see Proposition 4 in the Appendix. Another possible choice of step-size rule satisfying conditions (8), as shown in Facchinei et al. (2015), is

where \(\theta \in (0,1)\) is a given constant, provided that \(\gamma ^0 \in (0,1]\). Both the above step-size rules satisfy conditions (8), needed for Theorem 1 to be valid.
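The behaviour required of the step sizes can be checked numerically. The sketch below assumes that the first rule has the form \(\min \{1, a/k^\alpha \}\) (as in the choice made in Theorem 2) and that the second rule is the recursion \(\gamma ^k = \gamma ^{k-1}(1 - \theta \gamma ^{k-1})\); both forms, as well as the function names and parameter values, are assumptions made for illustration. It reports the ratio between the sum of the squared step sizes and the sum of the step sizes, which the proof of Theorem 1 needs to vanish.

```python
def gamma_power(k, a=0.5, alpha=0.5):
    # Assumed form of the first rule: min{1, a / k^alpha}, with gamma^0 = 1.
    return 1.0 if k == 0 else min(1.0, a / k ** alpha)

def gamma_recursive(n, gamma0=1.0, theta=0.5):
    # Assumed form of the second rule: gamma^k = gamma^{k-1} * (1 - theta * gamma^{k-1}).
    gammas = [gamma0]
    for _ in range(n - 1):
        g = gammas[-1]
        gammas.append(g * (1.0 - theta * g))
    return gammas

def ratio(gammas):
    # (sum of squared step sizes) / (sum of step sizes)
    return sum(g * g for g in gammas) / sum(gammas)

horizons = (10, 1000, 100000)
power = [ratio([gamma_power(k) for k in range(n)]) for n in horizons]
recursive = [ratio(gamma_recursive(n)) for n in horizons]

for n, p, r in zip(horizons, power, recursive):
    print(n, round(p, 4), round(r, 4))
```

In both cases the ratio decreases as the horizon grows, more slowly for the recursive rule, whose step sizes decay roughly like 1/k.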

We remark that, even if we require assumptions that are less stringent with respect to related literature, we still obtain the same type of convergence as in related literature, namely subsequential convergence to a solution of problem (2). Note that, thanks to assumption (A3), at least one limit point of the sequence \(\{w^i\}\) generated by PATA exists. As is common practice when using an iterative algorithm like PATA, referring to (11) and (12), \(w^{i+1}\) can be considered an approximate solution of problem (2) as soon as \(\frac{c}{({\bar{\tau }}^i)^{\beta -1}}\) and \(\left( \frac{c}{({\bar{\tau }}^i)^\beta } + \frac{1}{{\bar{\tau }}^i} H D \right) \) are small enough. Clearly, if the upper-level map G is strongly monotone on Y, the whole sequence \(\{w^i\}\) converges to the unique solution of problem (2).

We consider the so-called natural residual map for VI\((\Psi , K)\) (with \(\Psi \) continuous and K convex and compact)

$$\begin{aligned} U(x) \triangleq \Vert P_K(x - \Psi (x)) - x\Vert _2. \end{aligned} \qquad (13)$$

As recalled in Lampariello et al. (2020), the function U is continuous and nonnegative. Moreover, \(U(x) = 0\) if and only if \(x \in \text {SOL}(\Psi , K)\). Specifically, classes of problems exist for which the value U(x) also gives an actual upper-bound to the distance between x and \(\text {SOL}(\Psi , K)\), see Lampariello et al. (2020) and the references therein. Therefore, the following condition

$$\begin{aligned} U(x) \le {\widehat{\varepsilon }}, \end{aligned} \qquad (14)$$

with \({\widehat{\varepsilon }} \ge 0\), is alternative to (3). However, in view of the compactness of K, relations (3) and (14) turn out to be related to each other: we show in Appendix (Proposition 3) that if x satisfies (3), then (14) holds with \({\widehat{\varepsilon }} \ge \sqrt{\varepsilon }\). Vice versa, condition (14) implies (3) with \(\varepsilon \ge (\Omega + \Xi ) {\widehat{\varepsilon }}\), where \(\Omega \triangleq \max _{v, y \in K}\Vert v - y\Vert _2\) and \(\Xi \triangleq \max _{y \in K} \Vert \Psi (y)\Vert _2\).

We remark that, whenever Y is not compact, e.g. if \(Y = {\mathbb {R}}^n\), the condition in step (S.4) of Algorithm 1 involving the classical gap function for VIs (see, e.g., Facchinei and Pang 2003, page 88) cannot be relied upon and could be replaced by the natural map-related relation \(\Vert P_Y(z^{k+1} - \Phi _{\tau ^k}(z^{k+1})) - z^{k+1}\Vert \le \varepsilon ^k\), at the price of a slightly modified convergence analysis.

In order to deal with the convergence rate analysis of our method, we consider the natural residual map for the lower-level VI(F, Y)

Clearly, the following condition

with \({\widehat{\varepsilon }}_{\text {low}} \ge 0\), is alternative to (7) to take care of the feasibility of problem (2).

In this context, we underline that the convergence rate we establish is intended to give an upper bound to the number of iterations needed to drive both the upper-level error \(\varepsilon _{\text {up}}\), given in (6), and the lower-level error \({\widehat{\varepsilon }}_{\text {low}}\), given in (16), below some prescribed tolerances \(\delta _{\text {up}}\) and \({\widehat{\delta }}_{\text {low}}\), respectively.

Theorem 2

Assume conditions (A1)-(A3) to hold and, without loss of generality, assume \(L_\Phi < 1\). Consider PATA. Given some precisions \(\delta _{\text {up}}, {\widehat{\delta }}_{\text {low}} \in (0,1)\), set \(\gamma ^k = \min \left\{ 1,\frac{1}{2 k^\frac{1}{2}}\right\} \), \({\bar{\tau }}^i = \max \{1,i\}\), and \({\bar{\varepsilon }}^i = \frac{1}{({\bar{\tau }}^{i})^2}\). Let us define the quantity

Then, the upper-level approximate problem (6) is solved for \(x = z^{k+1}\) with \(\varepsilon _{\text {up}} \le \delta _{\text {up}}\) and the lower-level approximate problem (16) is solved for \(x = z^{k+1}\) with \({\widehat{\varepsilon }}_{\text {low}} \le {\widehat{\delta }}_{\text {low}}\) and the condition in step (S.4) is satisfied in at most

iterations k, where \(\eta > 0\) is a small number, and

Proof

First of all we show that if \(i \ge I_{\max }\), we reach the desired result. Specifically, about the upper-level problem (6), we obtain

where the first equality is due to Proposition 1, and the last inequality follows from \(i \ge I_{\max } \ge (\delta _{\text {up}})^{-1}\).

About the lower-level problem (16), preliminarily we observe that

because \(w^{i+1}\) satisfies the condition in step (S.4) with \({\bar{\varepsilon }}^i\), see Proposition 3. Moreover, we get

where the first inequality is due to (26) and (18), and the last inequality follows from \(i \ge I_{\max } \ge ({\widehat{\delta }}_{\text {low}})^{-1} (H+1)\).

Now we consider the number of inner iterations needed to satisfy the condition in step (S.4) with the smallest error \({\bar{\varepsilon }}^{I_{\max }} = I_{\max }^{-2}\) and for a \(\tau > 0\). Without loss of generality, in the following developments we will assume \({\bar{k}}=0\), meaning that we are simply computing the number of inner iterations. By (10), the dual subproblem

is solved for

. From Lemma 1, we obtain

because

Therefore

Now we show that

In fact, taking \(y = v^k = P_Y(z^k - \Phi _\tau (z^k)) \in Y\) in (19), we have

where the last inequality follows from the Lipschitz continuity of \(\Phi _\tau \) and the characteristic property of the projection.

From Proposition 3 and inequality (21), we obtain the following error for the subproblem

and then, by (20), the desired accuracy for the subproblem is obtained when

that is

The thesis follows by multiplying the number of outer iterations (\(i \ge I_{\max }\)) by the number of inner ones. \(\square \)

In order to provide other complexity results for our method, we consider the following proposition, which is the dual counterpart of Proposition 1, and provides a theoretical basis for Theorem 3.

Proposition 2

Assume conditions (A1)-(A3) to hold, and let \(x \in Y\) be an approximate solution of the dual VI subproblem:

with \(\tau > 0\) and  . It holds that x turns out to be an approximate solution for the dual formulation of problem (2), that is

with  , and

with  .

Proof

We have for all \(y \in \text {SOL}(F,Y)\):

where the first inequality is due to (23) and the last one is true because \(x \in Y\) and then \(F(y)^\top (x-y) \ge 0\). That is (24) is true.

Moreover, we have for all \(y \in Y\):

where the inequality is due to (23). Therefore we get (25). \(\square \)

The following theorem considers a simplified version of PATA. Specifically, the parameter \(\tau \) is right away initialized to a value sufficiently large to get the prescribed optimality accuracy. Moreover, approximate optimality for problem (2) is considered only in its dual version. That said, the complexity bound obtained is better than the one given by Theorem 2.

Theorem 3

Assume conditions (A1)-(A3) to hold. Consider PATA. Given some precision \(\delta \in (0,1)\), set \(\gamma ^k = \min \left\{ 1,\frac{1}{2 k^\frac{1}{2}}\right\} \), \({\bar{\tau }}^0 = {\bar{I}}_{\max }\), and \({\bar{\varepsilon }}^0 = 0\) where

Then, the upper-level approximate dual problem (24) is solved for \(x = z^{k+1}\) with  and the lower-level approximate dual problem (25) is solved for \(x = z^{k+1}\) with

in at most

iterations k, where \(\eta > 0\) is a small number, and \(C_1\) and \(C_{2,\eta }\) are given in (17).

Proof

First of all we denote with  the error with which the current iteration solves the dual subproblem (23). Notice that as soon as  , the desired accuracy for both the upper- and the lower-level dual problems is reached. In fact, as done in the proof of Theorem 2, and considering Proposition 2, we have:

where the first equality is due to Proposition 1, and the last inequality follows from \(i \ge {\bar{I}}_{\max } \ge \delta ^{-1}\), and

where the first inequality is due to (26) and (18), and the last inequality follows from \(i \ge {\bar{I}}_{\max } \ge \delta ^{-1} (H+1)\).

By (20) we have

Therefore,  is implied by

that is

and the thesis follows. \(\square \)

5 Numerical experiments

We now tackle a practical example which is representative of the fact that, under assumptions (A1)-(A3), PATA produces the sequence of points \(\{z^k\}\) that is (subsequentially) convergent to a solution of the hierarchical problem, while the sequence \(\{y^k\}\) never approaches the solution set. Notice that \(\{y^k\}\) coincides with the sequence produced by the Tikhonov methods proposed in Lampariello et al. (2020) when no proximal term is considered.

Let us examine the selection problem (2), where:

where \({\mathbb {B}}(0,1)\) denotes the unit ball. The unique feasible point and, thus, the unique solution of the problem is \(z^* = (0,0)^{\scriptscriptstyle T}\). The assumptions (A1)–(A3) are satisfied, but notice that G does not satisfy convergence conditions of the Tikhonov-like methods proposed in Facchinei et al. (2014) and Lampariello et al. (2020) because it is not monotone plus.

The generic kth iteration of PATA, in this case, reads as follows:

where we take, for example, but without loss of generality, \(\tau ^k = \tau \ge 1\) and \(\gamma ^k = \gamma > 0\). We remark that the unique exact solution of the VI subproblem (5) is the origin, and then every inexact solution, with a reasonably small error, cannot be far from it. For every k it holds that

hence \(\Vert y^{k+1}\Vert _2 = \min \left\{ 1, \sqrt{1+\gamma ^2(\frac{1}{2\tau }-1)^2}\ \Vert y^k\Vert _2 \right\} \). Therefore we consider \(\Vert y^0\Vert _2 = 1\) and get \(\Vert y^{k}\Vert _2 = 1\) for every k, because \(\sqrt{1+\gamma ^2(\frac{1}{2\tau }-1)^2}\ > 1\). Therefore, neither does the sequence \(\{y^k\}\) produced by PATA lead to the unique solution \(z^*\) of problem (2), nor does it approach the inexact solution set of the VI subproblem.
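The norm formula above can be traced back to a one-line computation. The following sketch assumes, for illustration only, that \(\Phi _\tau \) acts on this example as a scaled rotation, \(\Phi _\tau (y) = c \, J y\) with \(c \triangleq 1 - \frac{1}{2\tau }\) and J a skew-symmetric orthogonal matrix (so that \(y^\top J y = 0\) and \(\Vert J y\Vert _2 = \Vert y\Vert _2\)); this form is consistent with the factor appearing in the formula but is an assumption about the data of the example.

$$\begin{aligned} \Vert y^k - \gamma \, \Phi _\tau (y^k)\Vert _2^2&= \Vert y^k\Vert _2^2 - 2 \gamma c \, (y^k)^\top J y^k + \gamma ^2 c^2 \Vert J y^k\Vert _2^2 \\&= \left( 1 + \gamma ^2 \left( \tfrac{1}{2\tau } - 1\right) ^2 \right) \Vert y^k\Vert _2^2. \end{aligned}$$

Under this assumption every unprojected step strictly increases the norm, and the projection onto the unit ball can only cap it at 1, which is why the points \(y^k\) never leave the boundary once they reach it.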

We now consider the sequence \(\{z^k\}\) produced by PATA. In order to show that this sequence leads us to the solution of the hierarchical problem, we analyze a numerical implementation of the algorithm. Some further considerations are in order before showing the actual implemented scheme.

- A general rule for the update of the variable \(z^{k}\) is given by the following relation:

$$\begin{aligned} z^{k+1}= \frac{z^k \gamma ^{\text {sum},l,k}+ \gamma ^{k+1-l}y^{k+1}}{\gamma ^{\text {sum},l,k} + \gamma ^{k+1-l}}, \end{aligned}$$

where

$$\begin{aligned} \gamma ^{\text {sum},l,k} \triangleq \sum _{j=l}^k \gamma ^{j-l}, \end{aligned}$$

which gives us the expression of \(z^{k+1}\) reported in Step (S.3) in PATA. This is done in order to avoid keeping track of all \(y^{j}\), \(j=l,\ldots ,k\), which would be computationally heavy. Instead, we only need to know the current value of \(z^{k}\), the sum \(\gamma ^{\text {sum},l,k}\), the step size \(\gamma ^{k+1-l}\) and the current point \(y^{k+1}\). This allows us to store only four quantities, which is far more convenient.

- Because the feasible set \(Y={\mathbb {B}}(0,1)\) is the unit ball, the computation of the projection steps (see Step (S.3)) becomes straightforward, since it is sufficient to divide the argument by its Euclidean norm whenever that norm is at least 1:

$$\begin{aligned} P_{{\mathbb {B}}(0,1)}(w) = \frac{w}{\Vert w\Vert _2} \ \forall w:\Vert w\Vert _2 \ge 1. \end{aligned}$$

Moreover, a closed-form expression is available for the unique solution u of the minimum problem at Step (S.4):

$$\begin{aligned} u = \arg \min _{y \in {\mathbb {B}}(0,1) }\ [F(z^{k+1}) + \frac{1}{\tau ^{k}} G(z^{k+1})]^\top (y - z^{k+1}). \end{aligned}$$

Since the constraint defining \(Y={\mathbb {B}}(0,1)\) is active at the optimal solution u (provided that the vector in square brackets is nonzero), the KKT multiplier associated with this constraint is strictly positive. We do not know the value of the multiplier itself, but we can impose that the optimal point has Euclidean norm 1, so that it belongs to the boundary of \({\mathbb {B}}(0,1)\):

$$\begin{aligned} u = -\frac{F(z^{k+1}) + \frac{1}{\tau ^{k}} G(z^{k+1})}{\Vert F(z^{k+1}) + \frac{1}{\tau ^{k}} G(z^{k+1})\Vert _2}. \end{aligned}$$
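The two considerations above translate into a few lines of code. The sketch below is illustrative only: the helper names `project_unit_ball`, `linear_minimizer_unit_ball`, `running_average` and `batch_average`, as well as the sample points and weights, are hypothetical. It checks that the recursive update of the average reproduces the weighted average computed from all stored points, and it implements the projection and the closed-form minimizer on the unit ball.

```python
import math

def project_unit_ball(w):
    # Points inside the ball are left unchanged; the others are rescaled to norm 1.
    norm = math.hypot(*w)
    return list(w) if norm <= 1.0 else [wi / norm for wi in w]

def linear_minimizer_unit_ball(c):
    # argmin over the unit ball of c^T y, assuming c is nonzero: the point -c / ||c||.
    norm = math.hypot(*c)
    return [-ci / norm for ci in c]

def running_average(points, weights):
    # Recursive update: only the current average and the sum of weights are stored.
    z, wsum = list(points[0]), weights[0]
    for y, w in zip(points[1:], weights[1:]):
        z = [(zi * wsum + w * yi) / (wsum + w) for zi, yi in zip(z, y)]
        wsum += w
    return z

def batch_average(points, weights):
    # Reference computation that needs all points at once.
    total = sum(weights)
    dim = len(points[0])
    return [sum(w * p[d] for p, w in zip(points, weights)) / total for d in range(dim)]

points = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
weights = [1.0, 0.5, 0.25]

z_run = running_average(points, weights)
z_all = batch_average(points, weights)
p = project_unit_ball([3.0, 4.0])
u = linear_minimizer_unit_ball([3.0, 4.0])
print(z_run, z_all, p, u)
```

The recursive form trades a list of stored points for two running quantities, which is the saving described in the first item.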

We now show the implemented scheme in Algorithm 2.

As far as the steps of Algorithm 2 are concerned, (S.2) and (S.3) perform step (S.2) of PATA, while (S.5) and (S.6) fulfil step (S.4) of PATA.

We set the parameters \(k^{\max } = 10^6\), \(\text {tol} = 10^{-3}\), \(a = \alpha = \frac{1}{2}\), \(\beta = 2\). Table 1 summarizes the results obtained by running Algorithm 2. It is clear that \(\Vert z^{k+1}\Vert _2\) tends to 0 as the number of iterations k grows, which is what we expected, since \(z^*=(0, 0)^{\scriptscriptstyle T}\) is the unique solution of the problem.

To further highlight the elements of novelty that PATA displays, we present some numerical experiments in which PATA performs better than Algorithm 1 presented in Lampariello et al. (2020). We do not intend to present a thorough numerical comparison between these solution methods; we just want to show that PATA is a fundamental solution tool when the classical Tikhonov gradient method presented in Lampariello et al. (2020) struggles to converge.

Again, for the sake of simplicity we consider \(Y={\mathbb {B}}(0,1)\). This time, we extend the problem to encompass \(n=100\) variables and consider \(G(x) = M_{G} x + b_G\) and \(F(x) = M_F x\), with

\(b_G = \zeta v^{b_G}\), and \(v^{M_G}\), \(u^{M_G}\), \(w^{M_G}\), \(v^{M_F}\), \(u^{M_F}\), \(w^{M_F}\), and \(v^{b_G}\) are randomly generated between 0 and 1, \(\zeta > 0\). We remark that when \(\zeta =0\) the problem is a generalization of that in the simple example described at the beginning of this section. In our experiments, we consider the cases \(\zeta = 0.1\) and 0.01.

As far as PATA parameters are concerned, for the purpose of the implementation we set \(k^{\max } = 5 \cdot 10^4\), \(a = 1\), \(\alpha = \frac{1}{4}\) and \(\beta = 2\). As for the Tikhonov scheme proposed in Lampariello et al. (2020), we set \(\lambda = 0.1\).

Following Proposition 1, a merit function for the nested variational inequality (2) can be given by

We generated 3 different instances of the problem and considered 2 values for \(\zeta \), for a total of 6 different test problems.

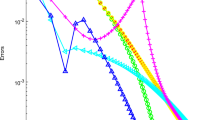

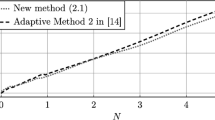

Figure 1 shows the evolution of the optimality measure as the number of inner iterations k grows towards \(k^{\max }\), when both PATA and the classical Tikhonov algorithm described in Lampariello et al. (2020) are applied to the 6 test problems.

It is clear that the practical implementation of PATA always outperforms the classical Tikhonov scheme in Lampariello et al. (2020), as it needs a significantly smaller number of inner iterations k to reach small values of the optimality measure. However, we remark that computing averages, such as in step (S.3) of PATA, can be computationally expensive. Hence, PATA becomes an essential alternative tool when other Tikhonov-like methods, not including averaging steps, either fail to reach small values of the optimality measure, as shown in our practical implementation (see Fig. 1), or do not converge at all.

A similar scenario can be observed also when dealing with nonlinear problems. In Table 2, we report the numerical results that are obtained by running Algorithm 2 to address a nonlinear modification of the problem defined at the beginning of this section: in particular, inspired by some of the test problems in Solodov and Svaiter (1999), we take

The unique solution of this problem is again the origin. As for the previous case, PATA seems to work well: it reaches a good approximation level of the exact solution in a fair amount of inner iterations. Note that the nonlinear operator F is monotone on Y but also strongly monotone on \(Y \setminus \{(0, 0)\}\): the strong monotonicity modulus vanishes when approaching the origin. This issue is clearly reflected in the behavior of a standard gradient-like version of the algorithm, which is readily obtained by neglecting the averaging step in Algorithm 2, thus taking there \(z^{k+1} = y^{k+1}\) (see Table 3). Comparing Tables 2 and 3, one can observe that, for the initial outer iterations i, PATA and the non-averaged counterpart perform similarly, while, when approaching the origin, the non-averaged gradient-like procedure struggles to converge (see the number of inner iterations that are performed for each outer iterate in Table 3).

6 Conclusions

We have shown that PATA is (subsequentially) convergent to solutions of monotone nested variational inequalities under the weakest conditions in the literature so far, see Theorem 1. Specifically, besides the standard convexity and monotonicity assumptions, G is required to be just monotone, while all other papers demand the monotonicity plus of G, see Facchinei et al. (2014) and Lampariello et al. (2020).

In addition, PATA enjoys interesting complexity properties, see Theorems 2 and 3. Notice that we have provided the first complexity analysis for nested variational inequalities considering optimality of both the upper- and lower-level. In contrast, the authors of Lampariello et al. (2020) only handled lower-level optimality.

Although the convergence and complexity properties of the method are guaranteed by the results in Sect. 4, we plan to test PATA numerically more extensively (also on nonlinear problems) in order to evaluate its practical performance more accurately.

Possible future research may focus on generalizing the problem to consider quasi-variational inequalities as well as generalized variational inequalities. The first step would be to extend Proposition 1 to encompass these more complex variational problems. We leave this investigation to future work.

Change history

17 November 2022

Missing Open Access funding information has been added in the Funding Note

References

Bigi G, Lampariello L, Sagratella S (2021) Combining approximation and exact penalty in hierarchical programming. Optimization 71:1–17

Dempe S (2002) Foundations of bilevel programming. Springer, New York

Facchinei F, Pang JS (2003) Finite-dimensional variational inequalities and complementarity problems. Springer

Facchinei F, Pang JS, Scutari G, Lampariello L (2014) VI-constrained hemivariational inequalities: distributed algorithms and power control in ad-hoc networks. Math Program 145(1–2):59–96

Facchinei F, Scutari G, Sagratella S (2015) Parallel selective algorithms for nonconvex big data optimization. IEEE Trans Signal Process 63(7):1874–1889

Kalashnikov VV, Kalashinikova NI (1996) Solving two-level variational inequality. J Global Optim 8(3):289–294

Lampariello L, Sagratella S (2017) A bridge between bilevel programs and Nash games. J Optim Theory Appl 174(2):613–635

Lampariello L, Sagratella S (2020) Numerically tractable optimistic bilevel problems. Comput Optim Appl 76(2):277–303

Lampariello L, Sagratella S, Stein O (2019) The standard pessimistic bilevel problem. SIAM J Optim 29(2):1634–1656

Lampariello L, Neumann C, Ricci JM, Sagratella S, Stein O (2020) An explicit Tikhonov algorithm for nested variational inequalities. Comput Optim Appl 77(2):335–350

Lampariello L, Neumann C, Ricci JM, Sagratella S, Stein O (2021) Equilibrium selection for multi-portfolio optimization. Eur J Oper Res 295:363–373

Lu X, Xu HK, Yin X (2009) Hybrid methods for a class of monotone variational inequalities. Nonlinear Anal Theory Methods Appl 71(3–4):1032–1041

Marino G, Xu HK (2011) Explicit hierarchical fixed point approach to variational inequalities. J Optim Theory Appl 149(1):61–78

Scutari G, Facchinei F, Pang JS, Lampariello L (2012) Equilibrium selection in power control games on the interference channel. In: 2012 Proceedings IEEE INFOCOM. IEEE, pp 675–683

Solodov M, Svaiter B (1999) A new projection method for variational inequality problems. SIAM J Control Optim 37(3):765–776

Yamada I (2001) The hybrid steepest descent method for the variational inequality problem over the intersection of fixed point sets of nonexpansive mappings. Inherently Parallel Algorithms Feasibility Optim Appl 8:473–504

Acknowledgements

Lorenzo Lampariello was partially supported by the MIUR PRIN 2017 (grant 20177WC4KE).

Funding

Open access funding provided by Universitá degli Studi di Roma La Sapienza within the CRUI-CARE Agreement

Appendix

The following proposition is instrumental for the discussion regarding the natural map V in Sect. 4.

Proposition 3

If \(x \in K\) satisfies the primal VI approximate optimality condition (3), then the natural map approximate optimality condition (14) holds with \({\widehat{\varepsilon }} \ge \sqrt{\varepsilon }\). Vice versa, if \(x \in K\) satisfies condition (14), then (3) holds with \(\varepsilon \ge (\Omega + \Xi ) {\widehat{\varepsilon }}\), where \(\Omega \triangleq \max _{v, y \in K}\Vert v - y\Vert _2\) and \(\Xi \triangleq \max _{y \in K} \Vert \Psi (y)\Vert _2\).

Proof

Let \(z = P_K(x-\Psi (x))\). If (3) holds, then

where the last inequality is due to the characteristic property of the projection. Therefore, (14) holds with \({\widehat{\varepsilon }} \ge \sqrt{\varepsilon }\).

Now we consider the case in which (14) holds. Thanks again to the characteristic property of the projection, we have

and, thus, for all \(y \in K\),

Therefore, (3) holds with \(\varepsilon \ge (\Omega + \Xi ) {\widehat{\varepsilon }}\). \(\square \)
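The chains of relations used in this proof can be sketched as follows. This is a reconstruction under two assumptions that cannot be checked from the appendix alone: that condition (3) reads \(\Psi (x)^\top (y - x) \ge -\varepsilon \) for all \(y \in K\), and that condition (14) reads \(\Vert P_K(x-\Psi (x)) - x\Vert _2 \le {\widehat{\varepsilon }}\). With \(z = P_K(x-\Psi (x))\), taking \(y = z\) in (3) and then using the projection property \((x - \Psi (x) - z)^\top (y - z) \le 0\) for all \(y \in K\) at \(y = x\) would give

\[
\varepsilon \ge \Psi (x)^\top (x - z) = \Vert x - z\Vert _2^2 - (x - \Psi (x) - z)^\top (x - z) \ge \Vert x - z\Vert _2^2 .
\]

For the converse, the same projection property yields \(\Psi (x)^\top (y - z) \ge (x - z)^\top (y - z)\) for all \(y \in K\), so that

\[
\Psi (x)^\top (y - x) = \Psi (x)^\top (y - z) + \Psi (x)^\top (z - x) \ge -\Vert x - z\Vert _2 \Vert y - z\Vert _2 - \Vert \Psi (x)\Vert _2 \Vert z - x\Vert _2 \ge -(\Omega + \Xi )\, {\widehat{\varepsilon }} .
\]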

The following lemma is helpful to prove Theorems 2 and 3.

Lemma 1

Let \(\{\gamma ^k\ : \gamma ^k=\min \{1,\frac{a}{k^\alpha }\}\}\), with \(\alpha \in (0,1]\) and \(a>0\). Setting \(K \in {\mathbb {N}}\), the following upper and lower bounds hold true:

(i) \(\alpha \ne 1\): \(\sum _{k=0}^K \gamma ^k \ge \lceil a^\frac{1}{\alpha } \rceil + \frac{a}{1-\alpha }[(K+1)^{1-\alpha } - \lceil a^\frac{1}{\alpha } \rceil ^{1-\alpha }]\);

(ii) \(\alpha = 1\): \(\sum _{k=0}^K \gamma ^k \ge \lceil a \rceil + \ln \left( (\frac{K+1}{\lceil a \rceil })^a\right) \);

(iii) \(\alpha \ne \frac{1}{2}\): \(\sum _{k=0}^K (\gamma ^k)^2 \le \lceil a^\frac{1}{\alpha } \rceil + \frac{a^2}{\lceil a^\frac{1}{\alpha } \rceil ^{2\alpha }} + \frac{a^2}{1-2\alpha }[K^{1-2\alpha } - \lceil a^\frac{1}{\alpha } \rceil ^{1-2\alpha }]\);

(iv) \(\alpha = \frac{1}{2}\): \(\sum _{k=0}^K (\gamma ^k)^2 \le \lceil a^2 \rceil + \frac{a^2}{\lceil a^2 \rceil }+ a^2\ln (\frac{K}{\lceil a^2 \rceil })\).
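As a quick numerical sanity check of the four bounds, the following Python sketch compares the partial sums with the right-hand sides of (i) to (iv). The function names `gamma` and `lemma1_bounds`, the convention \(\gamma ^0 = 1\), and the requirement \(K \ge \lceil a^\frac{1}{\alpha } \rceil \) are assumptions made for this illustration and are not taken from the paper.

```python
import math


def gamma(k, a, alpha):
    """Stepsize min{1, a / k^alpha}; gamma^0 is taken to be 1 (assumption)."""
    return 1.0 if k == 0 else min(1.0, a / k**alpha)


def lemma1_bounds(K, a, alpha):
    """Return (sum of gamma, lower bound, sum of gamma^2, upper bound).

    Assumes K >= ceil(a^(1/alpha)), so that the tail sums are nonempty.
    """
    m = math.ceil(a ** (1.0 / alpha))  # ceil(a^{1/alpha})
    s1 = sum(gamma(k, a, alpha) for k in range(K + 1))
    s2 = sum(gamma(k, a, alpha) ** 2 for k in range(K + 1))

    # Lower bounds (i) and (ii) on the sum of the stepsizes.
    if alpha != 1.0:
        lower = m + a / (1 - alpha) * ((K + 1) ** (1 - alpha) - m ** (1 - alpha))
    else:
        lower = m + a * math.log((K + 1) / m)

    # Upper bounds (iii) and (iv) on the sum of the squared stepsizes.
    if alpha != 0.5:
        upper = (m + a**2 / m ** (2 * alpha)
                 + a**2 / (1 - 2 * alpha) * (K ** (1 - 2 * alpha) - m ** (1 - 2 * alpha)))
    else:
        upper = m + a**2 / m + a**2 * math.log(K / m)
    return s1, lower, s2, upper


if __name__ == "__main__":
    for a in (0.5, 1.0, 2.5):
        for alpha in (0.25, 0.5, 0.75, 1.0):
            s1, lower, s2, upper = lemma1_bounds(1000, a, alpha)
            print(f"a={a}, alpha={alpha}: {s1:.3f} >= {lower:.3f}, {s2:.3f} <= {upper:.3f}")
```

The check only probes a handful of parameter values, so it illustrates the bounds rather than proving them.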

Proof

In cases (i) and (ii):

where the inequality is due to the integral test for the generalized harmonic series. When \(\alpha \ne 1\) it follows that:

whilst, if \(\alpha = 1\), it follows that:

In cases (iii) and (iv):

where, in the third equality, the first term of the series is taken out, whilst the inequality is once again due to the integral test for the generalized harmonic series.

When \(\alpha \ne \frac{1}{2}\) it follows that:

while, if \(\alpha = \frac{1}{2}\), it follows that:

\(\square \)
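The relations behind this proof can be sketched as follows. The shorthand \(m \triangleq \lceil a^\frac{1}{\alpha } \rceil \) and the assumption \(K \ge m\) are introduced here only for readability, and the chains are a reconstruction consistent with the statement of Lemma 1 rather than the authors' exact displays. Since \(\gamma ^k = 1\) for \(k < m\) and \(\gamma ^k = \frac{a}{k^\alpha }\) for \(k \ge m\), comparing the tail sums with integrals of the decreasing functions \(x^{-\alpha }\) and \(x^{-2\alpha }\) gives

\[
\sum _{k=0}^K \gamma ^k = m + \sum _{k=m}^{K} \frac{a}{k^\alpha } \ge m + a \int _m^{K+1} x^{-\alpha }\, dx ,
\qquad
\sum _{k=0}^K (\gamma ^k)^2 = m + \frac{a^2}{m^{2\alpha }} + \sum _{k=m+1}^{K} \frac{a^2}{k^{2\alpha }} \le m + \frac{a^2}{m^{2\alpha }} + a^2 \int _m^{K} x^{-2\alpha }\, dx .
\]

Evaluating the integrals then yields the four cases:

\[
\int _m^{K+1} x^{-\alpha }\, dx =
\begin{cases}
\frac{(K+1)^{1-\alpha } - m^{1-\alpha }}{1-\alpha } & \alpha \ne 1,\\
\ln \frac{K+1}{m} & \alpha = 1,
\end{cases}
\qquad
\int _m^{K} x^{-2\alpha }\, dx =
\begin{cases}
\frac{K^{1-2\alpha } - m^{1-2\alpha }}{1-2\alpha } & \alpha \ne \frac{1}{2},\\
\ln \frac{K}{m} & \alpha = \frac{1}{2}.
\end{cases}
\]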

The following proposition enables us to use \(\gamma ^k=\min \{1,\frac{a}{k^\alpha }\}\), which is shown to satisfy conditions (8) in Theorem 1.

Proposition 4

The sequence of stepsizes \(\{\gamma ^k\ : \gamma ^k=\min \{1,\frac{a}{k^\alpha }\}\}\), with \(\alpha \in (0,1]\) and \(a>0\), satisfies both sets of hypotheses:

(i) \(\sum _{k=0}^\infty \gamma ^k = \infty \)

(ii) \(\frac{\sum _{k=0}^\infty (\gamma ^k)^2}{\sum _{k=0}^\infty \gamma ^k} = 0\)

needed for Theorem 1 to be valid, see conditions (8).

Proof

(i) We examine two separate cases, \(\alpha =1\) and \(\alpha <1\), as K goes to \(+\infty \).

When \(\alpha =1\):

When, instead, \(\alpha <1\):

Notice that, if \(\alpha >1\), the limit would be \(\lceil a^\frac{1}{\alpha } \rceil - \frac{a}{1-\alpha } \lceil a^\frac{1}{\alpha } \rceil ^{1-\alpha } \ne +\infty \), which is why \(\alpha \le 1\) is required.

(ii) We first show that, if \(\alpha \in \left( \frac{1}{2},1 \right] \), the following relation holds:

which, in turn, implies that:

When \(\alpha =\frac{1}{2}\), it is easy to see that:

where the first equality follows from L'Hôpital's rule.

Finally, if \(\alpha \in \left( 0,\frac{1}{2}\right) \), it is once again easy to see that:

where the first equality follows again from L'Hôpital's rule, and the last equality is true because \(\alpha > 0\). \(\square \)
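The behavior established in Proposition 4 can also be observed numerically. The Python sketch below evaluates the ratio of partial sums \(\sum _{k=0}^K (\gamma ^k)^2 / \sum _{k=0}^K \gamma ^k\) for growing K and several values of \(\alpha \). The names `stepsize` and `ratio`, the choice \(a = 1\), the convention \(\gamma ^0 = 1\), and the reading of hypothesis (ii) as a limit of partial sums are assumptions made for this illustration.

```python
def stepsize(k, a=1.0, alpha=0.5):
    """Stepsize min{1, a / k^alpha}; the value at k = 0 is taken to be 1 (assumption)."""
    return 1.0 if k == 0 else min(1.0, a / k**alpha)


def ratio(K, a=1.0, alpha=0.5):
    """Ratio of the partial sums of squared stepsizes and of stepsizes up to index K."""
    num = 0.0
    den = 0.0
    for k in range(K + 1):
        g = stepsize(k, a, alpha)
        num += g * g
        den += g
    return num / den


if __name__ == "__main__":
    horizons = (10**2, 10**3, 10**4, 10**5)
    for alpha in (0.25, 0.5, 0.75, 1.0):
        values = [ratio(K, 1.0, alpha) for K in horizons]
        print(alpha, [round(v, 4) for v in values])
```

For \(\alpha = 1\) the decay is only logarithmic in K, so the ratio shrinks much more slowly than for smaller exponents.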

This last lemma is again used in the proof of Theorem 2.

Lemma 2

The following upper bound holds for the lower-level merit function V (see the definition (15)) at z for every positive \(\tau \):

Proof

The claim is a consequence of the following chain of relations:

where the last inequality follows from the nonexpansive property of the projection mapping. \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lampariello, L., Priori, G. & Sagratella, S. On the solution of monotone nested variational inequalities. Math Meth Oper Res 96, 421–446 (2022). https://doi.org/10.1007/s00186-022-00799-5
