Abstract

We consider the problem of minimizing a polynomial function over the integer lattice. Though this is impossible in general, we use a known sufficient condition for the existence of continuous minimizers to guarantee the existence of integer minimizers as well. In case this condition holds, we use sos programming to compute the radius of a p-norm ball which contains all integer minimizers. We prove that this radius is smaller than the radius known from the literature. Our numerical results show that the number of potentially optimal solutions is reduced by several orders of magnitude. Furthermore, we derive a new class of underestimators of the polynomial function. Using a Stellensatz from real algebraic geometry and again sos programming, we optimize over this class to get a strong lower bound on the integer minimum. Our lower bounds are also evaluated experimentally. They show a good performance, in particular within a branch and bound framework.

Notes

It is well-known that the gradient vanishes necessarily at an optimal continuous solution of the unconstrained problem.

More precisely, finite convergence holds if the gradient ideal \(\langle \partial _{x_1} f, \ldots , \partial _{x_n} f \rangle \) is radical and the corresponding complex gradient variety consists of finitely many points. These properties are generic in the sense of algebraic geometry. See Nie et al. (2006) for details.

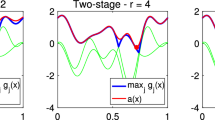

For this example we solved GLOB for \(h = 0.3\) and \(\deg g = 6\), using SOSTOOLS 3.00 and CSDP 6.1.0.

Note that every sos polynomial \(\sigma \ne 0\) has even degree.

We use MATLAB 2014b 64-bit (MATLAB is a registered trademark of The MathWorks Inc., Natick, Massachusetts), SOSTOOLS 3.00 (Papachristodoulou et al. 2013) to translate the sos programs into semidefinite programs, and CSDP 6.1.0 (Borchers 1999)/SDPT3 (Toh et al. 1999) to solve the latter. The experiments were conducted on a GNU/Linux machine running on 2 Intel® Xeon® X5650 CPUs, 6 cores each, with a total of 96 GB RAM.

We briefly discussed the extraction of x after Corollary 3; in our setup, this corresponds to a non-empty third return argument of SOSTOOLS’s findbound.m routine.

This could be improved by fixing some variables at each node and then computing new underestimators, at the cost of additional runtime for computing them.

References

Anjos M, Lasserre JB (2012) Handbook on semidefinite, conic and polynomial optimization. Springer, Berlin

Behrends S (2013) Lower bounds for polynomial integer optimization, Master thesis

Blekherman G, Parrilo PA, Thomas RR (2013) Semidefinite optimization and convex algebraic geometry, vol 13. SIAM, Philadelphia

Borchers B (1999) CSDP, a C library for semidefinite programming. Optim Methods Softw 11(1–4):613–623

Boros E, Hammer PL (2002) Pseudo-boolean optimization. Discrete Appl Math 123(1):155–225

Buchheim C, D’Ambrosio C (2014) Box-constrained mixed-integer polynomial optimization using separable underestimators. In: Lee J, Vygen J (eds) Integer programming and combinatorial optimization. Springer, Berlin, pp 198–209

Buchheim C, Rinaldi G (2007) Efficient reduction of polynomial zero-one optimization to the quadratic case. SIAM J Optim 18(4):1398–1413

Buchheim C, Hübner R, Schöbel A (2015) Ellipsoid bounds for convex quadratic integer programming. SIAM J Optim 25(2):741–769

Cox DA, Little J, O’Shea D (2007) Ideals, varieties, and algorithms: an introduction to computational algebraic geometry and commutative algebra. Springer, Berlin

De Loera JA, Hemmecke R, Köppe M, Weismantel R (2006) Integer polynomial optimization in fixed dimension. Math Oper Res 31(1):147–153

Fortet R (1960) L’algebre de boole et ses applications en recherche opérationnelle. Trab Estad Invest Oper 11(2):111–118

Gupta OK, Ravindran A (1985) Branch and bound experiments in convex nonlinear integer programming. Manag Sci 31(12):1533–1546

Henrion D, Lasserre JB (2005) Detecting global optimality and extracting solutions in GloptiPoly. In: Henrion D, Garulli A (eds) Positive polynomials in control. Springer, Berlin, pp 293–310

Hemmecke R, Köppe M, Lee J, Weismantel R (2010) Nonlinear integer programming. In: Jünger M, Liebling T, Naddef D, Nemhauser GL, Pulleyblank WR, Reinelt G, Rinaldi G, Wolsey LA (eds) 50 Years of integer programming 1958–2008. Springer, Berlin, pp 561–618

Hildebrand R, Köppe M (2013) A new Lenstra-type algorithm for quasiconvex polynomial integer minimization with complexity \(2^{O(n \log n)}\). Discrete Optim 10(1):69–84

Jeroslow RC (1973) There cannot be any algorithm for integer programming with quadratic constraints. Oper Res 21(1):221–224

Jünger M, Liebling T, Naddef D, Nemhauser GL, Pulleyblank WR, Reinelt G, Rinaldi G, Wolsey LA (2010) 50 Years of integer programming 1958–2008: from the early years to the state-of-the-art. Springer, Berlin

Khachiyan LG (1983) Convexity and complexity in polynomial programming. In: Proceedings of the international congress of mathematicians, pp 1569–1577

Khachiyan L, Porkolab L (2000) Integer optimization on convex semialgebraic sets. Discrete Comput Geom 23(2):207–224

Laurent M (2009) Sums of squares, moment matrices and optimization over polynomials. In: Putinar M, Sullivant S (eds) Emerging applications of algebraic geometry. Springer, Berlin, pp 157–270

Lasserre JB (2001) Global optimization with polynomials and the problem of moments. SIAM J Optim 11(3):796–817

Lasserre JB, Thanh TP (2011) Convex underestimators of polynomials. In: 2011 50th IEEE conference on decision and control and European control conference (CDC-ECC). IEEE, pp 7194–7199

Lee J, Leyffer S (2012) Mixed integer nonlinear programming. Springer, Berlin

Marshall M (2003) Optimization of polynomial functions. Can Math Bull 46(4):575–587

Marshall M (2008) Positive polynomials and sums of squares. Mathematical Surveys and Monographs 146. American Mathematical Society (AMS), Providence

Marshall M (2009) Representation of non-negative polynomials, degree bounds and applications to optimization. Can J Math 61(1):205–221

Matiyasevich YV (1970) Enumerable sets are diophantine. Dokl Akad Nauk SSSR 191(2):279–282

Nesterov Y (2000) Squared functional systems and optimization problems. In: Frenk H, Roos K, Terlaky T, Zhang S (eds) High performance optimization. Springer, Berlin, pp 405–440

Nie J (2012) Sum of squares methods for minimizing polynomial forms over spheres and hypersurfaces. Front Math China 7(2):321–346

Nie J, Schweighofer M (2007) On the complexity of Putinar’s positivstellensatz. J Complex 23(1):135–150

Nie J, Demmel J, Sturmfels B (2006) Minimizing polynomials via sum of squares over the gradient ideal. Math Program 106(3):587–606

Papachristodoulou A, Anderson J, Valmorbida G, Prajna S, Seiler P, Parrilo PA (2013) SOSTOOLS: sum of squares optimization toolbox for MATLAB. arXiv:1310.4716, http://www.cds.caltech.edu/sostools

Parrilo PA (2000) Structured semidefinite programs and semialgebraic geometry methods in robustness and optimization, Ph.D. thesis. California Institute of Technology

Parrilo PA, Sturmfels B (2003) Minimizing polynomial functions. In: Algorithmic and quantitative real algebraic geometry, DIMACS Series in Discrete Mathematics and Theoretical Computer Science, vol 60, pp 83–99

Rosenberg IG (1975) Reduction of bivalent maximization to the quadratic case. Cahiers du Centre d’etudes de Recherche Operationnelle 17:71–74

Schweighofer M (2005) Optimization of polynomials on compact semialgebraic sets. SIAM J Optim 15(3):805–825

Shor NZ (1987) Class of global minimum bounds of polynomial functions. Cybern Syst Anal 23(6):731–734

Shor NZ, Stetsyuk PI (1997) Modified \(r\)-algorithm to find the global minimum of polynomial functions. Cybern Syst Anal 33(4):482–497

Toh K-C, Todd MJ, Tütüncü RH (1999) SDPT3–a MATLAB software package for semidefinite programming, version 1.3. Optim Methods Softw 11(1–4):545–581

Watters LJ (1967) Reduction of integer polynomial programming problems to zero-one linear programming problems. Oper Res 15(6):1171–1174

Wolkowicz H, Saigal R, Vandenberghe L (2000) Handbook of semidefinite programming: theory, algorithms, and applications, vol 27. Springer, Berlin

Computing the norm bounds

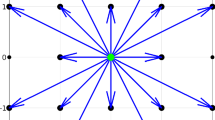

In Remark 7 we saw that the norm bound R on the minimizers becomes tighter the closer the \(c_j\) get to their optimal values \(c_j^*\). In the following, we present two ways to improve on Approach 1 of Sect. 4.1 that do not rely on sos programming. The second method is a refinement of the first. In both, we improve the norm bound R by replacing the estimate \(|x^\alpha | \le 1\) on \(\mathbb {S}^{n-1}_p\) with \(|x^\alpha | \le \hat{x}^\alpha \), where \(\hat{x}\) is a continuous maximizer of the function \(\mathbb {S}^{n-1}_p \rightarrow \mathbb {R}\), \(x \mapsto x^\alpha \).

1.1 A direct improvement

One has the following closed form for the continuous maximizer \(\hat{x}\) with nonnegative coordinates:

Lemma 20

Let \(0 \ne \alpha \in \mathbb {N}_0^n\) and \(p \in [1, \infty )\). Then, the monomial \(X^\alpha \) attains its maximum on \(\mathbb {S}^{n-1}_p\) at \(\hat{x}\) with coordinates

Proof

By a simple analysis, the proof can be reduced to \(\alpha _i \ge 1\) for \(i=1, \ldots , n\) and then to maximization of \(X^\alpha \) on \(\{ x \in \mathbb {S}^{n-1}_p \ | \ x_1> 0, \ldots , x_n > 0 \}\). Using the method of Lagrange multipliers, the claim follows from a short calculation. \(\square \)
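The display (17) with the coordinates of \(\hat{x}\) is not reproduced in this section. As a hedged sketch of the "short calculation" mentioned in the proof, one can maximize \(\log x^\alpha \) instead of \(x^\alpha \) on the positive part of the sphere; the multiplier \(\mu \) and the resulting closed form are a reconstruction under that assumption and should be checked against (17).

```latex
\begin{align*}
&\text{maximize } \sum_{i=1}^n \alpha_i \log x_i
  \quad \text{subject to } \sum_{i=1}^n x_i^p = 1, \ x_i > 0, \\
&\frac{\alpha_i}{x_i} = \mu \, p \, x_i^{p-1}
  \ \Longrightarrow \ x_i^p = \frac{\alpha_i}{\mu p}, \\
&1 = \sum_{i=1}^n x_i^p = \frac{|\alpha|}{\mu p}
  \ \Longrightarrow \ \hat{x}_i = \left( \frac{\alpha_i}{|\alpha|} \right)^{1/p},
  \quad i = 1, \ldots, n,
\end{align*}
```

where \(|\alpha | = \alpha _1 + \cdots + \alpha _n\). This agrees with the special case \(X^\alpha \in \mathbb {R}[X_i]\), where the formula yields the ith unit vector.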

Observation 21

Denote by \(\hat{x}_{(\alpha )}\) the maximizer of \(X^\alpha \) on \(\mathbb {S}^{n-1}_p\) as in (17). Hence for \(x \in \mathbb {S}^{n-1}_p\) we have

This \(c_j\) is at least as large as the \(c_j\) from Proposition 8 since, for \(0 \ne \alpha \), \((\hat{x}_{(\alpha )})^\alpha < 1\) unless \(X^\alpha \in \mathbb {R}[X_i]\) for some i, in which case \(\hat{x}_{(\alpha )} = e_i\), the ith unit vector, and thus \((\hat{x}_{(\alpha )})^\alpha = 1\).
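The effect of the sharper estimate can be illustrated numerically. The following is a minimal sketch, not the authors' implementation: it assumes the maximizer has coordinates \((\alpha _i / |\alpha |)^{1/p}\) and that the bounds take the form \(c_j = -\sum _{|\alpha | = j} |a_\alpha | (\hat{x}_{(\alpha )})^\alpha \) for \(j < d\), with weight 1 in place of \((\hat{x}_{(\alpha )})^\alpha \) for the crude bound. The helper names and the example polynomial are hypothetical.

```python
import numpy as np


def monomial_max(alpha, p):
    """Maximum of x^alpha on the unit p-norm sphere.

    Assumption: the maximizer has coordinates (alpha_i / |alpha|)^(1/p).
    """
    alpha = np.asarray(alpha, dtype=float)
    x_hat = (alpha / alpha.sum()) ** (1.0 / p)
    # NumPy evaluates 0.0 ** 0.0 as 1.0, so absent variables contribute a factor 1.
    return float(np.prod(x_hat ** alpha))


def degree_bounds(terms, p, refined=True):
    """Lower bounds c_j on the degree-j parts of f on the sphere, for 0 < j < d.

    terms maps exponent tuples to coefficients. With refined=False the crude
    estimate |x^alpha| <= 1 is used instead of |x^alpha| <= x_hat^alpha.
    """
    d = max(sum(alpha) for alpha in terms)
    bounds = {}
    for alpha, a in terms.items():
        j = sum(alpha)
        if j == 0 or j == d:  # constant term and leading form are handled elsewhere
            continue
        weight = monomial_max(alpha, p) if refined else 1.0
        bounds[j] = bounds.get(j, 0.0) - abs(a) * weight
    return bounds


# Hypothetical example: f = x1^4 + x2^4 - 3 x1 x2 + 2 x1^2 x2 - x2
f = {(4, 0): 1.0, (0, 4): 1.0, (1, 1): -3.0, (2, 1): 2.0, (0, 1): -1.0}
crude = degree_bounds(f, p=2, refined=False)
sharp = degree_bounds(f, p=2, refined=True)
for j in sorted(sharp):
    print(j, crude[j], sharp[j])
```

The bound for the univariate term \(-x_2\) is unchanged, while the bounds for the mixed terms improve, as described in the observation.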

1.2 A different approach

This last approach to computing bounds \(c_j\) differs from the previous ones in that we actually compute \(2^n\) norm bounds: we restrict f to each of the \(2^n\) orthants

and compute a norm bound on integer minimizers of every \(f|_{H_\tau }\). This has the advantage that, roughly speaking, we may neglect half of the terms of \(f = \sum a_\alpha X^\alpha \). Also, minimization on \(H_\tau \) can be reduced to minimization on \(H_{(1, \ldots , 1)}\), i.e., the set of those \(x \in \mathbb {R}^n\) with \(x \ge 0\), as we shall see in a moment.

Introducing the notation \(|a|^- = |\min (a, 0)|\) for \(a \in \mathbb {R}\) and with \(\hat{x}\) from (17), we have for every term \(a_\alpha x^\alpha \ge - |a_\alpha |^- x^\alpha \ge -| a_\alpha |^- \hat{x}^\alpha \) as \(x \ge 0\), thus

which means about half of the coefficients are neglected in comparison to (18), if signs are distributed equally among the \(a_\alpha \). Now let \(R^{(1, \ldots , 1)}\) be the largest real root of

The argument of Theorem 6 applies verbatim and shows that \(f(x) > f(0)\) for \(\Vert x \Vert _p > R^{(1, \ldots , 1)}\) and \(x \ge 0\). This bounds integer and continuous minimizers on \(H_{(1, \ldots , 1)}\). Bounding the norm of minimizers of f on \(H_\tau \), \(\tau \in \{-1,1\}^{n}\), can be reduced to bounding the norm of minimizers on \(H_{(1, \ldots , 1)}\) by a simple change of coordinates. To this end, let \(\tau (x) = (\tau _1 x_1, \ldots , \tau _n x_n)\), \(x \in \mathbb {R}^n\), and \(f^\tau \) be the polynomial

As \(\tau ^\alpha \in \{-1, 1\}\), f and \(f^\tau \) merely differ in the sign of their coefficients, and \(f^\tau _d(x) \ge c_d\) still holds for \(x \in \mathbb {S}^{n-1}_p\) as the sphere is \(\tau \)-invariant, that is \(\tau (\mathbb {S}^{n-1}_p) = \mathbb {S}^{n-1}_p\). Similarly to before, denote by \(R^{\tau }\) the largest real root of

with \(c_j^\tau = -|a_\alpha \tau ^{\alpha }|^- \hat{x}^\alpha \). It is now clear that \(f^{\tau }(x) > f(0)\) for \(\Vert x\Vert _p > R^\tau \) and \(x \ge 0\), equivalently, \(f(x) > f(0)\) for \(\Vert x\Vert _p > R^\tau \) and \(x \in H_\tau \).

This requires more preprocessing effort, since \(2^n\) norm bounds are computed, but reduces the number of feasible solutions.
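The orthant-wise computation can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the displays defining \(R^\tau \) are not reproduced in this section, so the sketch assumes \(R^\tau \) is the largest real root of \(c_d \lambda ^d + \sum _{j=1}^{d-1} c_j^\tau \lambda ^j\) with \(c_j^\tau \) summed over all \(\alpha \) with \(|\alpha | = j\), and it takes the bound \(c_d\) on the leading form as given. The function names and the example polynomial are hypothetical.

```python
import itertools
import numpy as np


def monomial_max(alpha, p):
    """Maximum of x^alpha on the unit p-norm sphere (assumed closed form)."""
    alpha = np.asarray(alpha, dtype=float)
    x_hat = (alpha / alpha.sum()) ** (1.0 / p)
    return float(np.prod(x_hat ** alpha))


def orthant_radius(terms, tau, c_d, p):
    """Norm bound R^tau for minimizers of f on the orthant H_tau.

    Assumption: R^tau is the largest real root of
    c_d * lam^d + sum_{0 < j < d} c_j^tau * lam^j.
    """
    d = max(sum(alpha) for alpha in terms)
    coeffs = np.zeros(d + 1)  # coeffs[j] multiplies lam^j
    coeffs[d] = c_d
    for alpha, a in terms.items():
        j = sum(alpha)
        if j == 0 or j == d:
            continue
        sign = 1
        for t, e in zip(tau, alpha):
            sign *= t ** e  # tau^alpha, either +1 or -1
        neg_part = abs(min(a * sign, 0.0))  # |a_alpha tau^alpha|^-
        coeffs[j] -= neg_part * monomial_max(alpha, p)
    roots = np.roots(coeffs[::-1])  # np.roots expects the highest degree first
    real = roots[np.abs(roots.imag) < 1e-6].real
    return float(max(real.max(), 0.0))


# Hypothetical example: f = x1^4 + x2^4 - 3 x1 x2 + 2 x1^2 x2 - x2.
# On the Euclidean unit circle the leading form x1^4 + x2^4 is at least 1/2.
f = {(4, 0): 1.0, (0, 4): 1.0, (1, 1): -3.0, (2, 1): 2.0, (0, 1): -1.0}
radii = {
    tau: orthant_radius(f, tau, c_d=0.5, p=2)
    for tau in itertools.product((1, -1), repeat=2)
}
for tau, radius in radii.items():
    print(tau, radius)
```

In each orthant only the terms whose sign-adjusted coefficient \(a_\alpha \tau ^\alpha \) is negative enter the polynomial, so the resulting radii differ from orthant to orthant.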

Cite this article

Behrends, S., Hübner, R. & Schöbel, A. Norm bounds and underestimators for unconstrained polynomial integer minimization. Math Meth Oper Res 87, 73–107 (2018). https://doi.org/10.1007/s00186-017-0608-y

DOI: https://doi.org/10.1007/s00186-017-0608-y