Abstract

Two agents with a common prior on the possible states of the world participate in a process of information transmission, consisting of sharing posterior probabilities of an event of interest. Aumann’s Agreement Theorem implies that such a process must end with both agents having the same posterior probability. We show that the \(\ell _1\)-variation of the sequence of posteriors of each agent, obtained along this process, must be finite, and provide an upper bound for its value.
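To make the process concrete, here is a toy simulation on a finite state space. It is a sketch under stated assumptions, not the paper's construction. It assumes a uniform common prior on nine states, two hypothetical information partitions `P1` and `P2`, a hypothetical event of interest `EVENT`, and simultaneous public announcements of current posteriors at each stage. After each stage, every state whose announced pair of posteriors differs from the one announced at the true state is publicly ruled out. On a finite space the process stabilizes after finitely many stages, so the example only illustrates the mechanics and the quantity of interest, the \(\ell _1\)-variation of each agent's sequence of posteriors.

```python
from fractions import Fraction


def posterior(prior, event, info):
    """P(event | info) for a finite set of states `info` with positive prior mass."""
    mass = sum(prior[w] for w in info)
    return sum(prior[w] for w in info if w in event) / mass


def dialogue(prior, partitions, event, max_stages=100):
    """Simultaneous posterior announcements (hypothetical helper).

    Returns, for every state w, the list of announced posterior tuples
    (one entry per agent) at stages 1, 2, ... when w is the true state.
    """
    states = list(prior)
    # cell[i][w]: the element of agent i's private partition that contains w
    cell = [{w: block for block in part for w in block} for part in partitions]
    # public[w]: states not yet ruled out by the announcements made at w
    public = {w: frozenset(states) for w in states}
    history = {w: [] for w in states}
    for _ in range(max_stages):
        ann = {
            w: tuple(posterior(prior, event, cell[i][w] & public[w])
                     for i in range(len(partitions)))
            for w in states
        }
        for w in states:
            history[w].append(ann[w])
        refined = {w: frozenset(v for v in public[w] if ann[v] == ann[w])
                   for w in states}
        if refined == public:  # nothing new learned: announcements repeat forever
            break
        public = refined
    return history


def l1_variation(seq):
    """Sum of |q_{n+1} - q_n| over a finite sequence of posteriors."""
    return sum(abs(b - a) for a, b in zip(seq, seq[1:]))


# Hypothetical example: uniform prior on nine states.
PRIOR = {w: Fraction(1, 9) for w in range(1, 10)}
P1 = [frozenset({1, 2, 3}), frozenset({4, 5, 6}), frozenset({7, 8, 9})]
P2 = [frozenset({1, 2, 3, 4}), frozenset({5, 6, 7, 8}), frozenset({9})]
EVENT = {3, 4}

history = dialogue(PRIOR, [P1, P2], EVENT)
agent2_at_1 = [q[1] for q in history[1]]
```

At state 1, agent 1 announces 1/3 throughout, while agent 2 moves from 1/2 to 1/3, so their \(\ell _1\)-variations are 0 and 1/6, and the final announcements agree.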


Notes

The state of the world is not distributed according to \(\mathbb {P}\); rather, it is one of the elements of \(\Omega \). The probability measure \(\mathbb {P}\) reflects the uncertainty of the agents regarding the state of the world.

We assume that the public announcements of current posteriors are conducted simultaneously at each stage of the information transmission.

We denote by |B| the cardinality of a set B.

Such a setup does not guarantee agreement, as each agent i can announce \(B^i_n(\omega ) = [0,1]\) for all \(n \in \mathbb {N}\).

A positive probability event A is an atom of a probability space \((\Omega ,{\mathcal {G}},\mathbb {P})\) if for every event \(B \subseteq A\), either \(\mathbb {P}(B)=\mathbb {P}(A)\) or \(\mathbb {P}(B)=0\).

References

Aumann RJ (1976) Agreeing to disagree. Ann Stat 4(6):1236–1239

Burkholder DL (1966) Martingale transforms. Ann Math Stat 37(6):1494–1504

Di Tillio A, Lehrer E, Samet D (2021) Monologues, dialogues and common priors. To appear in Theoretical Economics.

Doob JL (1953) Stochastic processes, vol 101. Wiley, New York

Geanakoplos JD, Polemarchakis HM (1982) We can’t disagree forever. J Econ Theory 28(1):192–200

Maschler M, Solan E, Zamir S (2013) Game theory. Cambridge University Press, Cambridge

Williams D (1991) Probability with martingales. Cambridge University Press, Cambridge

Acknowledgements

The author would like to thank his Ph.D. advisor Prof. Ehud Lehrer for introducing him to the topic. The author is also grateful to Prof. Eilon Solan, Prof. David Gilat, and Andrei Iacob for their suggestions, remarks and overall contribution to this work. The author would also like to thank two anonymous reviewers for their thoughtful comments which led to the improvement of the paper.


Appendices

Appendix A: \(\ell _1\)-Variation of the conditional means of a martingale

Let \((\Omega , {\mathcal {B}}, \mathbb {P})\) be a probability space. A filtration \(\mathcal {F}= (\mathcal {F}_n)_{n=1}^{\infty }\) is an increasing sequence of sub-\(\sigma \)-fields of \({\mathcal {B}}\). A sequence \((X_n)_{n=1}^{\infty }\) of random variables is said to be a discrete-time martingale with respect to the filtration \((\mathcal {F}_n)_{n=1}^{\infty }\) if

- (i) \(\mathbb {E}|X_n| < \infty \) for all \(n \in \mathbb {N}\),

- (ii) \((X_n)_n\) is adapted to \(\mathcal {F}\), i.e., \(X_n\) is measurable with respect to \(\mathcal {F}_n\) for all \(n \in \mathbb {N}\),

- (iii) \(\mathbb {E}(X_{n+1}\,|\,\mathcal {F}_n)=X_n\) for all \(n \in \mathbb {N}\).

An important example of a discrete-time martingale that we will consider in the paper is a Doob martingale. Such a martingale arises when we consider the sequence of conditional expectations \(X_n = {\mathbb {E}}(Y\,|\,\mathcal {F}_n)\) of a random variable Y satisfying \({\mathbb {E}}|Y|<\infty \), with respect to a filtration \(\mathcal {F}= (\mathcal {F}_n)_{n=1}^{\infty }\). In case \(Y = \mathbb {1}_C\) for some event \(C \in {\mathcal {B}}\), we use the standard notation \(X_n = \mathbb {P}(C\,|\,\mathcal {F}_n)\).

We say that the discrete-time martingale sequence \(X = (X_n)_{n=1}^{\infty }\) is an \({\mathcal {L}}_2\) (\({\mathcal {L}}_1\)) bounded martingale if \(\sup _n {\mathbb {E}}(|X_n|^2)<\infty \) (\(\sup _n {\mathbb {E}}|X_n|<\infty \)). Let us now introduce two distinct types of variation for discrete-time martingales. The \(\ell _1\)-variation of the discrete-time martingale sequence \(X = (X_n)_{n=1}^{\infty }\) (with respect to \(\mathcal {F}\)) is the random variable

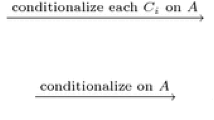

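Next, for every event A with \(\mathbb {P}(A)>0\), we define the \(\ell _1\)-variation of the conditional means of X on A by

\[ V(X,A) = \sum _{n=1}^{\infty } \big |\mathbb {E}(X_{n+1}\,|\,A) - \mathbb {E}(X_n\,|\,A)\big |, \]

where

\[ \mathbb {E}(X_n\,|\,A) = \frac{\mathbb {E}(X_n \mathbb {1}_A)}{\mathbb {P}(A)}, \quad n \in \mathbb {N}. \]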

We say that X has finite \(\ell _1\)-variation of the conditional means if \(V(X,A)<\infty \) for all events A with \(\mathbb {P}(A)>0\).
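A minimal sketch, assuming a finite space of three fair coin tosses with \(\mathcal {F}_n\) generated by the first n tosses, computes both notions for the Doob martingale \(X_n = \mathbb {P}(C\,|\,\mathcal {F}_n)\). The event C ("at least two heads"), the event A ("first toss is heads") and all function names are hypothetical choices. Because the filtration stops refining at n = 3, the martingale is constant afterwards and the infinite sums reduce to finite ones. Since \(A \in \mathcal {F}_1\), all conditional means \(\mathbb {E}(X_n\,|\,A)\) coincide, so \(V(X,A)=0\), whereas the conditional mean of the pathwise variation on A equals 1/2.

```python
from fractions import Fraction
from itertools import product

N = 3  # number of fair coin tosses (illustrative choice)
OMEGA = list(product((0, 1), repeat=N))
P = {w: Fraction(1, 2**N) for w in OMEGA}


def cond_exp(f, n):
    """E(f | F_n), where F_n is generated by the first n tosses."""
    out = {}
    for w in OMEGA:
        block = [v for v in OMEGA if v[:n] == w[:n]]
        out[w] = sum(P[v] * f[v] for v in block) / sum(P[v] for v in block)
    return out


def mean_on(f, A):
    """E(f | A) = E(f 1_A) / P(A) for an event A with P(A) > 0."""
    return sum(P[w] * f[w] for w in A) / sum(P[w] for w in A)


def pathwise_variation(X, w):
    """V(X)(w) = sum_n |X_{n+1}(w) - X_n(w)| (X is constant after n = N)."""
    return sum(abs(X[k + 1][w] - X[k][w]) for k in range(len(X) - 1))


def variation_of_means(X, A):
    """V(X, A) = sum_n |E(X_{n+1} | A) - E(X_n | A)|."""
    means = [mean_on(Xn, A) for Xn in X]
    return sum(abs(b - a) for a, b in zip(means, means[1:]))


# Doob martingale X_n = P(C | F_n); X[0] is X_1 in the text's indexing.
C = {w: Fraction(int(sum(w) >= 2)) for w in OMEGA}
X = [cond_exp(C, n) for n in range(1, N + 1)]
A = [w for w in OMEGA if w[0] == 1]

v_means = variation_of_means(X, A)
v_mean_of_variation = mean_on({w: pathwise_variation(X, w) for w in OMEGA}, A)
```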

Our main technical result regarding martingales bounds the \(\ell _1\)-variation of the conditional means.

Theorem A1

If X is an \({\mathcal {L}}_2\)-bounded martingale, then

\[ V(X,A) \le \frac{1}{2\,\mathbb {P}(A)}\Big ( \mathbb {E}\big (X_{\infty }^2\big ) - \mathbb {E}\big (X_1^2\big ) + \mathbb {E}\big (Y_{\infty }^2\big ) - \mathbb {E}\big (Y_1^2\big ) \Big ) \]

for all events A with \(\mathbb {P}(A)>0\), where \(X_{\infty }\) is the \({{\mathcal {L}}}_2\)-limit of X, and \(Y_{\infty }\) is the \({{\mathcal {L}}}_2\)-limit of the \({\mathcal {L}}_2\)-bounded martingale Y defined by \(Y_n = \mathbb {P}(A \,|\,\mathcal {F}_n)\) for all \(n \in \mathbb {N}\). In particular, X has finite \(\ell _1\)-variation of the conditional means.

Remark A1

The conditional mean of the \(\ell _1\)-variation of an \({\mathcal {L}}_2\)-bounded martingale can be infinite on every event of positive probability. For instance, let \((d_n)_{n=1}^{\infty }\) be a sequence of independent random variables distributed according to the law \(\mathbb {P}\left( d_n = \frac{1}{n}\right) = \mathbb {P}\left( d_n = -\frac{1}{n}\right) = \frac{1}{2}\). Define the martingale \(M = (M_n)_{n=1}^{\infty }\) by \(M_n = d_1 + \cdots + d_n\) for all \(n \in {\mathbb {N}}\). Then M is \({\mathcal {L}}_2\)-bounded, since \(\mathbb {E}(M_n^2) = \sum _{k=1}^{n} k^{-2} < \pi ^2/6\) for all \(n \in {\mathbb {N}}\); yet \(|M_{n+1}-M_n| = \frac{1}{n+1}\) everywhere, so that \(V(M) = \sum _{n=1}^{\infty } \frac{1}{n+1} = \infty \). Consequently,

\[ \mathbb {E}\big (V(M)\,|\,A\big ) = \infty \]

for every event A with \(\mathbb {P}(A)>0\).
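The mechanism behind Remark A1 can be checked numerically. The sketch below (function names hypothetical) simulates one path of M with random signs and confirms that the partial sums of the \(\ell _1\)-variation equal \(\frac{1}{2} + \cdots + \frac{1}{K}\) regardless of the signs, so they grow without bound, while \(\mathbb {E}(M_n^2) = \sum _{k=1}^{n} k^{-2}\) stays below \(\pi ^2/6\).

```python
import math
import random

L2_BOUND = math.pi ** 2 / 6  # sup_n E(M_n^2)


def path(signs):
    """M_1, ..., M_K for increments d_n = signs[n-1] / n, with signs in {+1, -1}."""
    m, total = [], 0.0
    for n, s in enumerate(signs, start=1):
        total += s / n
        m.append(total)
    return m


def variation_partial_sum(m):
    """sum_{n=1}^{K-1} |M_{n+1} - M_n| along one path."""
    return sum(abs(b - a) for a, b in zip(m, m[1:]))


def second_moment(n):
    """E(M_n^2) = sum_{k=1}^n 1/k^2 (independent, mean-zero increments)."""
    return sum(1.0 / k**2 for k in range(1, n + 1))


K = 10_000
rng = random.Random(0)
signs = [rng.choice((1, -1)) for _ in range(K)]
v = variation_partial_sum(path(signs))
target = sum(1.0 / n for n in range(2, K + 1))  # 1/2 + ... + 1/K on every path
```

The partial sums behave like \(\log K\), which is why the conditional mean of the variation is infinite on every event of positive probability.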

As a corollary of Theorem A1 we obtain the following result.

Corollary A1

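Suppose that X is a discrete-time \({\mathcal {L}}_2\)-bounded martingale, and that the probability space \((\Omega , {\mathcal {B}}, \mathbb {P})\) contains an atom A. Let \((a_n)_{n=1}^{\infty }\) be the (a.s. constant) values of \((X_n)_{n=1}^{\infty }\) on A. Note that \(a_n = {\mathbb {E}}(X_n\,|\,A)\) for all \(n \in {\mathbb {N}}\), which implies that \(V(X) = V(X,A)\) a.s. on A. With the help of Theorem A1 we may deduce that

\[ V(X) = \sum _{n=1}^{\infty } |a_{n+1}-a_n| \le \frac{1}{2\,\mathbb {P}(A)}\Big ( \mathbb {E}\big (X_{\infty }^2\big ) - \mathbb {E}\big (X_1^2\big ) + \mathbb {E}\big (Y_{\infty }^2\big ) - \mathbb {E}\big (Y_1^2\big ) \Big ) \]

a.s. on A, where \(X_{\infty }\) and \(Y_{\infty }\) are as in Theorem A1.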

This result is strongly related to the following theorem of Burkholder (1966), which holds for a wider class of martingales.

Theorem A2

(Burkholder, 1966) Suppose that X is an \({\mathcal {L}}_1\)-bounded martingale. If A is an atom of the probability space, then

\[ V(X) = V(X,A) < \infty \]

almost everywhere on A.

Let us now proceed to the proof of Theorem A1.

Proof of Theorem A1

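By conditioning on \({\mathcal {F}}_{n+1}\), with respect to which \(X_{n+1}-X_n\) is measurable, one has

\[ \mathbb {E}(X_{n+1}\mathbb {1}_A) - \mathbb {E}(X_n\mathbb {1}_A) = \mathbb {E}\big ((X_{n+1}-X_n)\,Y_{n+1}\big ) \quad \text{for all } n \in \mathbb {N}. \tag{18} \]

Similarly, by conditioning on \({\mathcal {F}}_{n}\) we have \({\mathbb {E}}\left( X_{n+1}Y_{n}\right) = {\mathbb {E}}\left( X_{n}Y_{n}\right) \) for all \(n \in {\mathbb {N}}\). Therefore, with the use of Eq. (18) we obtain

\[ \mathbb {E}(X_{n+1}\mathbb {1}_A) - \mathbb {E}(X_n\mathbb {1}_A) = \mathbb {E}\big ((X_{n+1}-X_n)(Y_{n+1}-Y_n)\big ) \quad \text{for all } n \in \mathbb {N}. \tag{19} \]

Hence by combining Eqs. (18) and (19) we have

\[ \begin{aligned} \sum _{n=1}^{\infty }\big |\mathbb {E}(X_{n+1}\mathbb {1}_A) - \mathbb {E}(X_n\mathbb {1}_A)\big | &\le \sum _{n=1}^{\infty }\mathbb {E}\big |(X_{n+1}-X_n)(Y_{n+1}-Y_n)\big | \\ &\le \frac{1}{2}\sum _{n=1}^{\infty }\Big ( \mathbb {E}\big [(X_{n+1}-X_n)^2\big ] + \mathbb {E}\big [(Y_{n+1}-Y_n)^2\big ] \Big ) \\ &= \frac{1}{2}\Big ( \mathbb {E}\big (X_{\infty }^2\big ) - \mathbb {E}\big (X_1^2\big ) + \mathbb {E}\big (Y_{\infty }^2\big ) - \mathbb {E}\big (Y_1^2\big ) \Big ), \end{aligned} \tag{20} \]

where the second inequality holds since \(|ab| \le \frac{a^2+b^2}{2}\) for every \(a,b \in \mathbb {R}\), and the last equality follows from Theorem 12.1 in Williams (1991). Thus, combining Eq. (20) together with Eqs. (14) and (15), we deduce that

\[ V(X,A) = \frac{1}{\mathbb {P}(A)}\sum _{n=1}^{\infty }\big |\mathbb {E}(X_{n+1}\mathbb {1}_A) - \mathbb {E}(X_n\mathbb {1}_A)\big | \le \frac{1}{2\,\mathbb {P}(A)}\Big ( \mathbb {E}\big (X_{\infty }^2\big ) - \mathbb {E}\big (X_1^2\big ) + \mathbb {E}\big (Y_{\infty }^2\big ) - \mathbb {E}\big (Y_1^2\big ) \Big ), \]

as desired. \(\square \)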
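The identities used in the proof, and the resulting bound, can be checked exactly on a small finite space. The sketch below assumes three fair coin tosses with \(\mathcal {F}_n\) generated by the first n tosses, and takes \(X_n = \mathbb {E}(Z\,|\,\mathcal {F}_n)\) for the hypothetical choice Z = number of heads, so that \(X_{\infty } = X_3\) and \(Y_{\infty } = Y_3\) here. For an event A it verifies that \(\mathbb {E}((X_{n+1}-X_n)\mathbb {1}_A)\) equals both \(\mathbb {E}((X_{n+1}-X_n)Y_{n+1})\) and \(\mathbb {E}((X_{n+1}-X_n)(Y_{n+1}-Y_n))\), and returns \(V(X,A)\) together with \(\frac{1}{2\mathbb {P}(A)}(\mathbb {E}(X_3^2)-\mathbb {E}(X_1^2)+\mathbb {E}(Y_3^2)-\mathbb {E}(Y_1^2))\). The helper names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product

N = 3  # three fair coin tosses (illustrative choice)
OMEGA = list(product((0, 1), repeat=N))
P = {w: Fraction(1, 2**N) for w in OMEGA}


def cond_exp(f, n):
    """E(f | F_n), where F_n is generated by the first n tosses."""
    out = {}
    for w in OMEGA:
        block = [v for v in OMEGA if v[:n] == w[:n]]
        out[w] = sum(P[v] * f[v] for v in block) / sum(P[v] for v in block)
    return out


def expect(f):
    return sum(P[w] * f[w] for w in OMEGA)


def check_proof_steps(Z, A):
    """Check the proof's identities for X_n = E(Z|F_n), Y_n = P(A|F_n);
    return (V(X, A), bound), using X_N and Y_N as the limits."""
    ind_A = {w: Fraction(int(w in A)) for w in OMEGA}
    X = [cond_exp(Z, n) for n in range(1, N + 1)]  # X[k] is X_{k+1}
    Y = [cond_exp(ind_A, n) for n in range(1, N + 1)]
    p_A = expect(ind_A)
    total = Fraction(0)
    for k in range(N - 1):
        dX = {w: X[k + 1][w] - X[k][w] for w in OMEGA}
        dY = {w: Y[k + 1][w] - Y[k][w] for w in OMEGA}
        lhs = expect({w: dX[w] * ind_A[w] for w in OMEGA})
        # Conditioning on F_{n+1}: 1_A may be replaced by Y_{n+1}.
        assert lhs == expect({w: dX[w] * Y[k + 1][w] for w in OMEGA})
        # E((X_{n+1} - X_n) Y_n) = 0, so Y_{n+1} may be replaced by Y_{n+1} - Y_n.
        assert lhs == expect({w: dX[w] * dY[w] for w in OMEGA})
        total += abs(lhs)

    def second_moment(f):
        return expect({w: f[w] ** 2 for w in OMEGA})

    bound = (second_moment(X[-1]) - second_moment(X[0])
             + second_moment(Y[-1]) - second_moment(Y[0])) / (2 * p_A)
    return total / p_A, bound


def all_events():
    """Every nonempty event of the finite space."""
    for r in range(1, len(OMEGA) + 1):
        for A in combinations(OMEGA, r):
            yield set(A)


HEADS = {w: Fraction(sum(w)) for w in OMEGA}  # Z = number of heads
```

For A = "third toss is heads" this gives \(V(X,A) = 1/2\) against a bound of 3/4; iterating over `all_events()` checks the inequality for every event of this space.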

The following example shows that Theorem A1 cannot be extended to \({{\mathcal {L}}}_1\)-bounded martingales, by providing an \({{\mathcal {L}}}_1\)-bounded martingale M and an event A for which \(V(M,A) = \infty \).

Example A1

Consider the i.i.d. random variables \((d_n)_{n=1}^{\infty }\) distributed according to the law

Let \({\mathcal {D}} \subseteq {\mathcal {G}}\) be the smallest \(\sigma \)-field with respect to which the random variables \((d_n)_{n=1}^{\infty }\) are measurable. Define the martingale \(M = (M_n)_{n=1}^{\infty }\) on \((\Omega ,{\mathcal {D}},\mathbb {P})\), with respect to the natural filtration of \((d_n)_{n=1}^{\infty }\), by \( M_n = \prod _{k=1}^{n} d_k\) for all \(n \in \mathbb {N}\). Since \(M_n\ge 0\) for every \(n \in \mathbb {N}\), we have \(\mathbb {E}|M_n| = \mathbb {E}M_n = 1\), implying that M is bounded in \({{\mathcal {L}}}_1\). For each \(n \in \mathbb {N}\) define the event \( A_n = \big \lbrace M_1 =2 , M_2 =4,\ldots ,M_n=2^n,M_{n+1}=0\big \rbrace , \) and let \(A = \bigcup _{n=1}^{\infty }A_n\). We have

and so \(V(M,A) = \infty \).

Remark A2

The events \((A_n)_{n=1}^{\infty }\) in Example A1 are disjoint atoms of the probability space \((\Omega ,{\mathcal {D}},\mathbb {P})\); thus the result of Burkholder (Theorem A2) on \({{\mathcal {L}}}_1\)-bounded martingales cannot be extended to an infinite union of atoms.

Appendix B: Complements to Aumann’s Bayesian dialogue

Proof of Proposition 1

We begin by proving (i). It is easily verified that \(\mathbb {P}(A) = Q^1_1\). Since the partition element of agent i is determined by the pair \((n,x) \in \mathbb {N}\times \{0,\ldots ,n\}\), where n is the number of tosses he was allotted and x is the number of H outcomes he observed, following the notation of Theorem 2 we have

Moreover,

Combining the latter with Eqs. (7) and (21), we obtain Eq. (11), thus showing (i). Next, assume that Q is the uniform distribution on [0, 1]. For each \(n \in \mathbb {N}\) and \(x \in \{0,\ldots ,n\}\) we have

where \(\mathbf{B }\) denotes the beta function and we used the identity \(\mathbf{B }(\alpha ,\beta ) = \frac{\Gamma (\alpha )\Gamma (\beta )}{\Gamma (\alpha +\beta )}\). In turn, Eq. (23) implies that
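Assuming, as the proof suggests, that each toss lands H with an unknown probability p whose distribution is Q, and taking Q uniform on [0, 1], the Beta-function identity evaluates integrals of the form \(\int _0^1 p^x(1-p)^{n-x}\,dp = \mathbf{B }(x+1,n-x+1)\). The sketch below (function names hypothetical) checks two standard consequences numerically: the probability of observing x heads in n tosses is \(1/(n+1)\) for every x, and the posterior mean of p is \((x+1)/(n+2)\). It does not reproduce Eq. (23) itself.

```python
import math


def beta(a, b):
    """B(a, b) = Gamma(a) Gamma(b) / Gamma(a + b)."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)


def prob_heads_count(n, x):
    """P(x heads in n tosses) when p is uniform on [0, 1]:
    the integral of C(n, x) p^x (1 - p)^(n - x) dp equals C(n, x) B(x + 1, n - x + 1)."""
    return math.comb(n, x) * beta(x + 1, n - x + 1)


def posterior_mean(n, x):
    """E(p | x heads in n tosses) = B(x + 2, n - x + 1) / B(x + 1, n - x + 1)."""
    return beta(x + 2, n - x + 1) / beta(x + 1, n - x + 1)
```

For example, after 3 heads in 10 tosses the posterior mean is 4/12 = 1/3.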

Item (i) in Proposition 1 yields the bound

proving the second item of Proposition 1. \(\square \)

Remark B1

In the proof of Proposition 1 we did not fully utilize the bound presented in Theorem 2: we did not subtract the quantity \(\mathbb {P}(C(\omega ))/(2\mathbb {P}(F_i(\omega )))\). The reason is that \(\mathbb {P}(C(\omega ))/(2\mathbb {P}(F_i(\omega ))) \le \frac{1}{2}\) for all \(\omega \in \Omega \), so this quantity is negligible compared to the right-hand side of Eq. (11), which need not be bounded as \(\omega \) ranges over \(\Omega \).


About this article

Cite this article

Shaiderman, D. An upper bound for the \(\ell _1\)-variation along the road to agreement. Int J Game Theory 50, 1053–1067 (2021). https://doi.org/10.1007/s00182-021-00781-1


Keywords

- Aumann’s Agreement Theorem

- Bayesian dialogues

- \(\ell _1\)-Variation

- Martingales with discrete parameter