Abstract

The high dimensionality of genetic data poses many challenges for subgroup identification, both computationally and theoretically. This paper proposes a double-penalized regression model for subgroup analysis and variable selection for heterogeneous high-dimensional data. The proposed approach can automatically identify the underlying subgroups, recover the sparsity, and simultaneously estimate all regression coefficients without prior knowledge of grouping structure or sparsity construction within variables. We optimize the objective function using the alternating direction method of multipliers with a proximal gradient algorithm and demonstrate the convergence of the proposed procedure. We show that the proposed estimator enjoys the oracle property. Simulation studies demonstrate the effectiveness of the novel method with finite samples, and a real data example is provided for illustration.

Similar content being viewed by others

References

Boyd S, Parikh N, Chu E, Peleato B, Eckstein J et al (2011) Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends Mach Learn 3(1):1–122

Brito PQ, Soares C, Almeida S, Monte A, Byvoet M (2015) Customer segmentation in a large database of an online customized fashion business. Robot Comput Integr Manuf 36:93–100

Chen J, Tran-Dinh Q, Kosorok MR, Liu Y (2021) Identifying heterogeneous effect using latent supervised clustering with adaptive fusion. J Comput Graph Stat 30(1):43–54

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc 96(456):1348–1360

Fan J, Lv J (2008) Sure independence screening for ultrahigh dimensional feature space. J R Stat Soc Ser B (Stat Methodol) 70(5):849–911

Gramfort A, Kowalski M, Hämäläinen M (2012) Mixed-norm estimates for the M/EEG inverse problem using accelerated gradient methods. Phys Med Biol 57(7):1937

Hofree M, Shen JP, Carter H, Gross A, Ideker T (2013) Network-based stratification of tumor mutations. Nat Methods 10(11):1108–1115

Kim Y, Hao J, Mallavarapu T, Park J, Kang M (2019) Hi-lasso: high-dimensional lasso. IEEE Access 7:44562–44573

Li J, Li Y, Jin B, Kosorok MR (2021) Multithreshold change plane model: estimation theory and applications in subgroup identification. Stat Med 40(15):3440–3459

Lipkovich I, Dmitrienko A, D’Agostino R Sr (2017) Tutorial in biostatistics: data-driven subgroup identification and analysis in clinical trials. Stat Med 36(1):136–196

Luo Z, Yao X, Sun Y, Fan X (2022) Regression-based heterogeneity analysis to identify overlapping subgroup structure in high-dimensional data. Biom J 64(6):1109–1141

Ma S, Huang J (2017) A concave pairwise fusion approach to subgroup analysis. J Am Stat Assoc 112(517):410–423

Ma S, Huang J, Zhang Z, Liu M (2020) Exploration of heterogeneous treatment effects via concave fusion. Int J Biostat 16(1):20180026

Rand WM (1971) Objective criteria for the evaluation of clustering methods. J Am Stat Assoc 66(336):846–850

Schwalbe E, Lindsey J, Nakjang S et al (2017) Novel molecular subgroups for clinical classification and outcome prediction in childhood medulloblastoma: a cohort study. Lancet Oncol 18:958–971

Shen J, He X (2015) Inference for subgroup analysis with a structured logistic-normal mixture model. J Am Stat Assoc 110(509):303–312

Shen X, Pan W, Zhu Y (2012) Likelihood-based selection and sharp parameter estimation. J Am Stat Assoc 107(497):223–232

Shen X, Huang H-C, Pan W (2012) Simultaneous supervised clustering and feature selection over a graph. Biometrika 99(4):899–914

Tavallali P, Tavallali P, Singhal M (2021) K-means tree: an optimal clustering tree for unsupervised learning. J Supercomput 77(5):5239–5266

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc Ser B (Stat Methodol) 58(1):267–288

Wang H, Li B, Leng C (2009) Shrinkage tuning parameter selection with a diverging number of parameters. J R Stat Soc Ser B (Stat Methodol) 71(3):671–683

Wang S, Nan B, Rosset S, Zhu J (2011) Random lasso. Ann Appl Stat 5(1):468

Wang W, Zhu Z (2022) Homogeneity and sparsity analysis for high-dimensional panel data models. J Bus Econ Stat 1–10

Yuan M, Lin Y (2006) Model selection and estimation in regression with grouped variables. J R Stat Soc Ser B (Stat Methodol) 68(1):49–67

Yue M, Huang L (2020) A new approach of subgroup identification for high-dimensional longitudinal data. J Stat Comput Simul 90(11):2098–2116

Yue M, Li J, Cheng M-Y (2019) Two-step sparse boosting for high-dimensional longitudinal data with varying coefficients. Comput Stat Data Anal 131:222–234

Zhang C-H (2010) Nearly unbiased variable selection under minimax concave penalty. Ann Stat 38(2):894–942

Zhang P, Ma J, Chen X, Yue S (2020) A nonparametric method for value function guided subgroup identification via gradient tree boosting for censored survival data. Stat Med 39(28):4133–4146

Zou H (2006) The adaptive lasso and its oracle properties. J Am Stat Assoc 101(476):1418–1429

Zou H, Hastie T (2005) Regularization and variable selection via the elastic net. J R Stat Soc Ser B (Stat Methodol) 67(2):301–320

Acknowledgements

The authors sincerely appreciate the insightful feedback provided by the Editor, Associate Editor, and two anonymous reviewers, which has greatly improved the quality of this manuscript. This research is supported by the NSF of China under Grant Numbers 12171450 and 71921001.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Proofs of theorems

Appendix: Proofs of theorems

Proof of Theorem 1

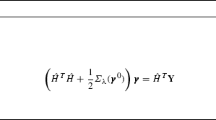

Since the objective function is convex, we only need to find the global minimizer in the local of the truth value. Let us first prove asymptotic normality part. We first constrain \(Q(\varvec{\psi })\) on the S-dimensional subspace \(\left\{ \mathbf {\psi } \in {\mathbb {R}}^{Kp}:\mathbf {\psi }_{{\mathcal {K}}2} = {\textbf{0}}\right\} \) of \({\mathbb {R}}^{Kp}\). This constrained penalized least square objective function is given by

where \(\varvec{\varphi } = \left( \varphi _1, \ldots , \varphi _S \right) ^T\), S is the number of parameter of \(\varvec{\varphi }_{{\mathcal {K}}1}\). We then show that there exists a local minimizer \(\tilde{\varvec{\varphi }}_{{\mathcal {K}}1}\) of \({\bar{Q}}(\varvec{\varphi })\) such that \(\left\| \tilde{\varvec{\varphi }}_{{\mathcal {K}}1} - \varvec{\psi }_{0{\mathcal {K}}1} \right\| = O_p(\sqrt{S/\left| {\mathcal {G}}_{\min }\right| })\).

We can set \(\tilde{\varvec{\varphi }} = \varvec{\psi }_{0{\mathcal {K}}1} + \varvec{\mu }/\sqrt{\left| {\mathcal {G}}_{\min }\right| }\) for \(\varvec{\mu } = \left( \mu _1,\ldots ,\mu _{S}\right) ^T \in {\mathbb {R}}^{S}\), so we can denote \({\bar{Q}}(\varvec{\varphi })\) as:

Consider \(V_n(\varvec{\mu }) = {\bar{Q}}(\varvec{\mu }) - {\bar{Q}}({\textbf{0}})\) as follows.

A routine decomposition yields the following decomposition:

For \(\varvec{\psi }_{s} = 0\), then by condition (C3) (ii):

For \(\varvec{\psi }_{s} \ne 0\), we have

By condition (C3) (i):

Therefore

So we have:

condition (C4) tell us \(\check{{\textbf{X}}}_1^T \check{{\textbf{X}}}_1 /\left| {\mathcal {G}}_{\min }\right| \rightarrow \varvec{\Omega }\) as \(n \rightarrow \infty \). It follows from the Lindeberg central limit theorem, that \(\check{{\textbf{X}}}_1^T \varvec{\epsilon } /\sqrt{\left| {\mathcal {G}}_{\min }\right| }\) converges in distribution to a normal \({\mathcal {N}}({\textbf{0}},\sigma ^2 \varvec{\Omega })\).

We obtained that

where \(W \sim {\mathcal {N}}({\textbf{0}},\sigma ^2 \varvec{\Omega })\). So we prove the asymptotic normality part (ii). And we have \(\left\| \tilde{\varvec{\psi }}_{{\mathcal {K}}1} - \varvec{\psi }_{0 {\mathcal {K}}1} \right\| = O_p(\sqrt{S/\left| {\mathcal {G}}_{\min }\right| })\).

Next, we will prove the consistency part (i). Suppose that, for a sufficient large n, there exists some \(j \in J_0^c\) such that \(\varvec{\psi }_{j} \ne 0\) with a positive probability. Then from the sub-gradient equation (12), we have

On one hand, according to Condition (C3) (ii), the first item of the right-hand side of converges to infinite with a positive probability, namely,

On the other hand, the left-hand side of is bounded in probability since

We get a contradiction that two sides of have different orders as n tends to infinity. So we show that

\(\square \)

Proof of Theorem 2

First, for a matrix \({\textbf{A}}\), let \(\left\| {\textbf{A}} \right\| _{\infty }\) denote the maximum absolute value of the elements of \({\textbf{A}}\). Let \(T: {\mathcal {M}}_{{\mathcal {G}}} \rightarrow {\mathbb {R}}^{K \times p}\) be the mapping such that \(T(\varvec{\beta })\) is the matrix whose s’th row coordinate equals the common value of \(\varvec{\beta }_{i \cdot }\) for \(i \in {\mathcal {G}}_k, k \in \{1,\ldots ,K\}\). Let \(T^{*}: {\mathbb {R}}^{n \times p} \rightarrow {\mathbb {R}}^{K \times p}\) be the mapping such that \(T^{*}(\varvec{\beta }) = \left\{ |{\mathcal {G}}_k|^{-1} \sum _{i \in {\mathcal {G}}_k} \varvec{\beta }_{i \cdot },k \in \{1,\ldots ,K\} \right\} \). Then through ranking the nonzero part of parameters ahead of zeros, the vector form of \(\varvec{\beta }\) can be written as \(\left( \varvec{\beta }_{{\mathcal {K}}1}^T,\varvec{\beta }_{{\mathcal {K}}2}^T\right) ^T\), and the true value is \(\left( \varvec{\beta }_{0{\mathcal {K}}1}^T,{\textbf{0}}\right) ^T\).

Consider the neighborhood of \(\varvec{\beta }_0\):

where \(\tau \) is a constant and \(\tau > 0\). For any \(\varvec{\beta } \in {\mathbb {R}}^{n \times p}\), let \(\varvec{\beta }^{*} = T^{-1} (T^{*}(\varvec{\beta }))\) and by the result in Theorem 4.1, there is an event \(E_1\) such that in the event \(E_1\),

So \(\tilde{\varvec{\beta }} \in \Theta \) in \(E_1\), where \(\tilde{\varvec{\beta }}\) is the parameter estimation corresponding to \(\tilde{\varvec{\psi }}\). There is an event \(E_2\) such that \(P(E_2^c) \le 2/n\). In \(E_1 \cap E_2\), there is a neighborhood of \(\tilde{\varvec{\beta }}\), denoted by \(\Theta _n\), and \(P(E_1 \cap E_2) \ge 1 - 2/n\). We show that \(\tilde{\varvec{\beta }}\) is a local minimizer of the objective function with probability approaching 1 such that (1) \(\ell _p(\varvec{\beta }^{*}) > \ell _p(\tilde{\varvec{\beta }})\) for any \(\varvec{\beta }^{*} \in \Theta \) and \(\varvec{\beta }^{*} \ne \tilde{\varvec{\beta }}\). (2) \(\ell _p(\varvec{\beta }) > \ell _p(\varvec{\beta }^{*})\) for any \(\varvec{\beta } \in \Theta _n \cap \Theta \) for sufficiently large n and \(\left| {\mathcal {G}}_{\min }\right| \).

Step 1 We just need to consider the term: \(\sum _{i<j} w_{ij} \left\| \tilde{\varvec{\beta }}_{i \cdot } - \tilde{\varvec{\beta }}_{j \cdot } \right\| _2\): When \(i,j \in {\mathcal {G}}_k, k \in \{1,\ldots ,K\}\), we have \(w_{ij} \left\| \tilde{\varvec{\beta }}_{i \cdot } - \tilde{\varvec{\beta }}_{j \cdot } \right\| _2 = 0\); when \(i \in {\mathcal {G}}_k, j \in {\mathcal {G}}_{k^{\prime }}\) and \(k \ne k^{\prime }\), we have

By condition (C5) (ii):

By the result of Theorem 1, (1) is proved.

Step 2 For a positive sequence \(t_n\), let \(\Theta _n = \left\{ \varvec{\beta }: \left\| \varvec{\beta } - \tilde{\varvec{\beta }}\right\| _{\infty } \le t_n \right\} \). For \(\varvec{\beta } \in \Theta _n \cap \Theta \), by Taylor’s expansion we have

where

in which \(\varvec{\beta }^m = \eta \varvec{\beta } + (1-\eta )\varvec{\beta }^{*}\) for some \(\eta \in (0,1)\). Moreover,

where \(\varvec{\zeta }_{i \cdot } = \left( \zeta _{i1}, \ldots , \zeta _{ip} \right) ^T\). And we have:

Furthermore,

and we have \(\varvec{\beta }_{i \cdot }^m - \varvec{\beta }_{i^{\prime } \cdot }^m = \eta \varvec{\beta }_{i \cdot } + (1-\eta )\varvec{\beta }_{i \cdot }^{*} - \eta \varvec{\beta }_{i^{\prime } \cdot } - (1-\eta )\varvec{\beta }_{i^{\prime } \cdot }^{*} = \eta (\varvec{\beta }_{i \cdot } - \varvec{\beta }_{i^{\prime } \cdot })\).

For \(k \ne k^{\prime },i \in {\mathcal {G}}_k,i^{\prime } \in {\mathcal {G}}_{k^{\prime }}\), according to condition (C5)

As a result,

We have

Moreover,

Since \(\varvec{\beta }^m\) is between \(\varvec{\beta }\) and \(\varvec{\beta }^{*}\),

Then

where \({\textbf{Q}}_i = {\textbf{x}}_{i\cdot }( y_i - {\textbf{x}}_{i\cdot }^T \varvec{\beta }_{i \cdot }^m)\) and

then

By condition (C2) (iii) that \(\sup _i \left\| {\textbf{x}}_{i\cdot }\right\| \le c_2 \sqrt{q_{\max }}\) and \(\sup _i \left\| \varvec{\beta }_{0i \cdot }-\varvec{\beta }_{i \cdot }^m\right\| \le \tau \sqrt{q_{\max }/\left| {\mathcal {G}}_{\min }\right| }\), we have

By Condition (C1), for \(i \in \{1,\ldots ,n\}\), and \(\{j: \varvec{\beta }_{0ij} \ne 0\}\),

Thus there is an event \(E_2\) such that \(P(E_2^c) \le 2/n\), and over the event \(E_2\),

Then

Therefore,

Let \(t_n = o(1)\), since \(\lambda _2 \left\| \left( \varvec{\zeta }_{i1 \cdot }I(\beta _{0i1} \ne 0), \ldots , \varvec{\zeta }_{ip \cdot }I(\beta _{0ip} \ne 0) \right) \right\| = o\left( \sqrt{\frac{q_{\max }}{\left| {\mathcal {G}}_{\min }\right| }}\right) \), and \(\sqrt{\frac{\ln {n}}{n}} \rightarrow 0, \frac{q_{\max }^{3/2}}{\sqrt{n\left| {\mathcal {G}}_{\min }\right| }} \rightarrow 0\) as \(n \rightarrow \infty \). Therefore, by condition (C5) (i) we have \(\ell _{p}(\varvec{\beta })-\ell _{p}\left( \varvec{\beta }^{*}\right) \ge 0\) for sufficiently large n. This completes the proof. \(\square \)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yu, H., Wu, J. & Zhang, W. Simultaneous subgroup identification and variable selection for high dimensional data. Comput Stat (2023). https://doi.org/10.1007/s00180-023-01436-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00180-023-01436-3