Abstract

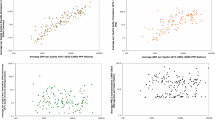

The analysis of processes that vary continuously in space usually considers two sources of variation, namely the large-scale variation captured by the trend of the process and the small-scale variation. Parametric trend models on latitude and longitude are easy to fit and interpret. However, using a parametric model to characterize a spatially varying process may lead to misspecification problems if the model is not appropriate. Recently, Meilán-Vila et al. (TEST 29:728–749, 2020) proposed a goodness-of-fit test for assessing a parametric trend model with correlated errors under random design, based on an \(L_2\)-distance between parametric and nonparametric trend estimates. The present work aims to provide a detailed computational analysis of this approach under a fixed-design geostatistical framework, using different bootstrap algorithms for calibration; one of them includes a procedure that corrects the bias introduced by the direct use of the residuals in variogram estimation. Asymptotic results for the test are provided, and an extensive simulation study, covering complexities that usually arise in geostatistics, illustrates the performance of the proposal. Specifically, we analyze the impact of the sample size, the spatial dependence range and the nugget effect on the empirical calibration and power of the test.
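The spatial dependence range and the nugget effect are two of the factors whose impact on calibration and power is studied. As a rough illustration of how these two parameters enter a simulation, the Python sketch below builds an isotropic exponential covariance with a nugget on a regular grid (a fixed design) and draws correlated Gaussian errors through a Cholesky factor. The exponential model, the parameter names `sill`, `a` and `nugget`, and the helpers `exp_nugget_cov` and `simulate_errors` are hypothetical choices made for illustration; they are not taken from the paper.

```python
import numpy as np

def exp_nugget_cov(coords, sill, a, nugget):
    """Isotropic exponential covariance with a nugget effect (assumed model):
    C(0) = sill and C(h) = (sill - nugget) * exp(-h / a) for h > 0."""
    # Pairwise Euclidean distances between the n locations, shape (n, n)
    d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    cov = (sill - nugget) * np.exp(-d / a)
    # The nugget only adds variance at lag zero, so the diagonal equals the sill
    np.fill_diagonal(cov, sill)
    return cov

def simulate_errors(cov, rng):
    """Draw one zero-mean Gaussian vector with covariance `cov` via Cholesky."""
    L = np.linalg.cholesky(cov)
    return L @ rng.standard_normal(cov.shape[0])

# Regular 8 x 8 grid on the unit square (fixed design)
g = np.linspace(0.0, 1.0, 8)
coords = np.array([(u, v) for u in g for v in g])
rng = np.random.default_rng(0)

# Same sill and range parameter, increasing nugget
for nugget in (0.0, 0.2, 0.5):
    cov = exp_nugget_cov(coords, sill=1.0, a=0.3, nugget=nugget)
    eps = simulate_errors(cov, rng)
    # Correlation between two nearest neighbours (distance 1/7)
    print(nugget, round(cov[0, 1] / cov[0, 0], 3))
```

Under this model, increasing `nugget` at a fixed `sill` scales every off-diagonal covariance by the same factor `sill - nugget`, weakening the small-scale dependence, while increasing `a` slows the decay of the covariance with distance.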

References

Alcalá J, Cristóbal J, González-Manteiga W (1999) Goodness-of-fit test for linear models based on local polynomials. Stat Probabil Lett 42:39–46

Castillo-Páez S, Fernández-Casal R, García-Soidán P (2019) A nonparametric bootstrap method for spatial data. Comput Stat Data Anal 137:1–15

Cressie N (1985) Fitting variogram models by weighted least squares. J Int Ass Math Geol 17(5):563–586

Cressie NA (1993) Statistics for spatial data. Wiley, New York

Crujeiras RM, Van Keilegom I (2010) Least squares estimation of nonlinear spatial trends. Comput Stat Data Anal 54(2):452–465

Davison AC, Hinkley DV (1997) Bootstrap methods and their application, vol 1. Cambridge University Press, Cambridge

Diggle P, Ribeiro PJ (2007) Model-based geostatistics. Springer, New York

Diggle P, Menezes R, Su TL (2010) Geostatistical inference under preferential sampling. J R Stat Soc Ser C Appl Stat 59(2):191–232

Fan J, Gijbels I (1996) Local polynomial modelling and its applications. Chapman and Hall, London

Fernández-Casal R (2019) npsp: Nonparametric spatial (geo)statistics. http://cran.r-project.org/package=npsp, R package version 0.7-5

Fernández-Casal R, Francisco-Fernández M (2014) Nonparametric bias-corrected variogram estimation under non-constant trend. Stoch Env Res Risk A 28(5):1247–1259

Fernández-Casal R, González-Manteiga W, Febrero-Bande M (2003a) Flexible spatio-temporal stationary variogram models. Stat Comput 13(2):127–136

Fernández-Casal R, González-Manteiga W, Febrero-Bande M (2003b) Space-time dependency modeling using general classes of flexible stationary variogram models. J Geophys Res-Atmos 108(D24):1–12

Francisco-Fernández M, Opsomer JD (2005) Smoothing parameter selection methods for nonparametric regression with spatially correlated errors. Can J Stat Rev Can Stat 33(2):279–295

Gasser T, Müller HG (1979) Kernel estimation of regression functions. In: Gasser T, Rosenblatt M (eds) Smoothing techniques for curve estimation. Springer, New York, pp 23–68

González-Manteiga W, Cao R (1993) Testing the hypothesis of a general linear model using nonparametric regression estimation. Test 2(1–2):161–188

González-Manteiga W, Crujeiras RM (2013) An updated review of goodness-of-fit tests for regression models. Test 22(3):361–411

Hall P, Patil P (1994) Properties of nonparametric estimators of autocovariance for stationary random fields. Probab Theory Rel 99(3):399–424

Härdle W, Mammen E (1993) Comparing nonparametric versus parametric regression fits. Ann Stat 21(4):1926–1947

Härdle W, Müller M (2012) Multivariate and semiparametric kernel regression. In: Schimek MG (ed) Smoothing and regression: approaches, computation, and application. Wiley, New Jersey

Kim TY, Ha J, Hwang SY, Park C, Luo ZM (2013) Central limit theorems for reduced U-statistics under dependence and their usefulness. Aust N Z J Stat 55(4):387–399

Lahiri SN (2013) Resampling methods for dependent data. Springer, New York

Li CS (2005) Using local linear kernel smoothers to test the lack of fit of nonlinear regression models. Stat Methodol 2(4):267–284

Liu XH (2001) Kernel smoothing for spatially correlated data. PhD thesis, Department of Statistics, Iowa State University

Meilán-Vila A, Opsomer JD, Francisco-Fernández M, Crujeiras RM (2020) A goodness-of-fit test for regression models with spatially correlated errors. TEST 29:728–749

Olea RA, Pardo-Iguzquiza E (2011) Generalized bootstrap method for assessment of uncertainty in semivariogram inference. Math Geosci 43(2):203–228

Opsomer J, Francisco-Fernández M (2010) Finding local departures from a parametric model using nonparametric regression. Stat Pap 51:69–84

Opsomer J, Wang Y, Yang Y (2001) Nonparametric regression with correlated errors. Stat Sci 16:134–153

Priestley MB, Chao M (1972) Non-parametric function fitting. J R Stat Soc Ser B Stat Methodol 34(3):385–392

R Development Core Team (2020) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. http://www.R-project.org. Accessed 18 Mar 2021

Ribeiro PJ, Diggle PJ (2020) geoR: analysis of geostatistical data. https://cran.r-project.org/package=geoR, R package version 1.7-5.2.2

Shapiro A, Botha JD (1991) Variogram fitting with a general class of conditionally nonnegative definite functions. Comput Stat Data Anal 11(1):87–96

Solow AR (1985) Bootstrapping correlated data. Math Geol 17(7):769–775

Vilar-Fernández J, González-Manteiga W (1996) Bootstrap test of goodness of fit to a linear model when errors are correlated. Commun Stat Theory Methods 25(12):2925–2953

Wand MP, Jones MC (1994) Kernel smoothing. Chapman and Hall/CRC, London

Acknowledgements

The authors acknowledge the support from the Xunta de Galicia Grant ED481A-2017/361 and the European Union (European Social Fund - ESF). This research has been partially supported by MINECO Grants MTM2016-76969-P and MTM2017-82724-R, and by the Xunta de Galicia (Grupo de Referencia Competitiva ED431C-2016-015, ED431C-2017-38 and ED431C-2020-14, and Centro de Investigación del SUG ED431G 2019/01), all of them through the ERDF. The authors also thank two anonymous referees and the Associate Editor for their comments that significantly improved this article.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix. Proof of the main theorem

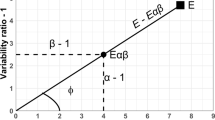

In this appendix, Theorem 1 is proved under assumptions (A1)–(A8) by deriving the asymptotic distribution of the test statistic given in (7). This statistic compares the nonparametric estimator (6) with the smoothed parametric estimator (8) using an \(L_2\)-distance.
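A compact sketch of the statistic and of a parametric bootstrap calibration may help fix ideas. Since (7) is not reproduced here, the sketch assumes the usual form \(T_n = n|\varvec{H}|^{1/2}\int (\hat{m}_{\varvec{H}}(\varvec{s}) - \hat{m}_{\varvec{H},\hat{\varvec{\beta}}}(\varvec{s}))^2 w(\varvec{s})\,d\varvec{s}\), where the second estimator is the local linear smooth of the fitted parametric trend. Further assumptions, all illustrative: a regular grid on \([0,1]^2\), \(w \equiv 1\) with the integral approximated by the average over the design points, a Gaussian product kernel with \(\varvec{H} = \mathrm{diag}(h_1^2, h_2^2)\) so that \(|\varvec{H}|^{1/2} = h_1 h_2\), a linear trend fitted by ordinary least squares, and an error covariance treated as known in the bootstrap (in practice it would come from a variogram estimated from the residuals). The function names are hypothetical.

```python
import numpy as np

def local_linear_matrix(coords, h):
    """Smoother matrix S of a bivariate local linear estimator evaluated at the
    design points, Gaussian product kernel, diagonal bandwidths h = (h1, h2)."""
    h = np.asarray(h, dtype=float)
    n = coords.shape[0]
    S = np.empty((n, n))
    for k in range(n):
        diff = coords - coords[k]                           # s_i - s_k
        w = np.exp(-0.5 * np.sum((diff / h) ** 2, axis=1))  # kernel weights (constants cancel)
        Xk = np.column_stack([np.ones(n), diff])            # local linear design
        XtW = Xk.T * w                                      # X_k' W_k, shape (3, n)
        # First row of (X_k' W_k X_k)^{-1} X_k' W_k: weights of the local intercept
        S[k] = np.linalg.solve(XtW @ Xk, XtW)[0]
    return S

def l2_statistic(y, X, S, h):
    """n |H|^{1/2} times the grid average of (S y - S X beta_hat)^2 (w = 1 on [0,1]^2)."""
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)  # OLS fit of the parametric trend
    diff = S @ y - S @ (X @ beta_hat)                  # nonparametric minus smoothed parametric
    return len(y) * np.prod(h) * np.mean(diff ** 2)

def bootstrap_pvalue(y, X, S, h, cov, n_boot=199, seed=0):
    """Parametric bootstrap under H0: y* = X beta_hat + L eps*, with L L' = cov."""
    rng = np.random.default_rng(seed)
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    L = np.linalg.cholesky(cov)
    t_obs = l2_statistic(y, X, S, h)
    t_boot = np.array([
        l2_statistic(X @ beta_hat + L @ rng.standard_normal(len(y)), X, S, h)
        for _ in range(n_boot)
    ])
    return t_obs, (1 + np.sum(t_boot >= t_obs)) / (n_boot + 1)

# Usage: 10 x 10 grid, linear trend, i.i.d. errors (illustrative only)
g = np.linspace(0.0, 1.0, 10)
coords = np.array([(u, v) for u in g for v in g])
X = np.column_stack([np.ones(len(coords)), coords])
h = np.array([0.2, 0.2])
S = local_linear_matrix(coords, h)
rng = np.random.default_rng(1)
y = X @ np.array([1.0, 2.0, -1.0]) + 0.3 * rng.standard_normal(len(coords))
t, p = bootstrap_pvalue(y, X, S, h, cov=0.09 * np.eye(len(coords)))
```

Because a local linear smoother reproduces linear functions exactly, smoothing a fitted linear trend returns it unchanged, so in this special case the statistic reduces to a scaled squared norm of the smoothed residuals.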

Proof

The test statistic (7) can be decomposed as

Now, since the trends considered are local alternatives of the form \(m=m_{{{\varvec{\beta }}}_0}+ n^{-1/2}|\varvec{H}|^{-1/4}g\), one gets:

where

Under assumptions (A1), (A2) and (A6), and given that the difference \(m_{\hat{{{\varvec{\beta }}}}}(\varvec{s})-m_{{{{\varvec{\beta }}}}_0}(\varvec{s})=O_p(n^{-1/2})\), one obtains

For the term \(I_2(\varvec{s})\), using assumption (A2), it follows that

which corresponds to \(b_{1\varvec{H}}\) in Theorem 1. Finally, \(I_3(\varvec{s})\) (associated with the error component) can be decomposed as:

Explicit expressions for \(I_{31}\) and \(I_{32}\) can be obtained by computing the expectation and the variance of each term. Under assumption (A6), it can be proved that

Similarly, using assumptions (A3), (A6) and (A7), it can be obtained that

Let

Notice that, using assumption (A3),

where \(K_M = \max _{\varvec{s}}[K(\varvec{s})]\) and \(\rho _M = \max _{\varvec{s}}[\rho _n(\varvec{s})]\), and using assumptions (A2), (A3), (A6) and (A8), one gets that

From (13) and (14) it follows that

Now, consider the term

Let

Thus,

and this can be viewed as a U-statistic with a degenerate kernel. To establish the asymptotic normality of \(I_{32}\), we apply the central limit theorem for reduced U-statistics under dependence of Kim et al. (2013).
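The explicit split of \(I_3\) into \(I_{31}\) and \(I_{32}\) is not reproduced above. A common construction, assumed here rather than taken from the paper, writes the error part of the squared distance as a quadratic form \(\varvec{\varepsilon}^\top A\varvec{\varepsilon}\) with \(A_{ij}=\int W_i(\varvec{s})W_j(\varvec{s})w(\varvec{s})\,d\varvec{s}\), where \(W_i\) are the smoother weights; the diagonal terms then play the role of \(I_{31}\) and the off-diagonal sum, a U-statistic-type term, the role of \(I_{32}\). The Python sketch below computes both parts and their exact means for Gaussian errors, using \(E[\varvec{\varepsilon}^\top A\varvec{\varepsilon}]=\mathrm{tr}(A\Sigma)\). It shows why correlation matters: with independent errors the off-diagonal part has mean zero, whereas spatially correlated errors shift it by \(\sum_{i\neq j}A_{ij}\,\mathrm{Cov}(\varepsilon_i,\varepsilon_j)\). For brevity a Nadaraya–Watson (local constant) smoother stands in for the local linear one; the exponential covariance, bandwidth and helper names are hypothetical.

```python
import numpy as np

def split_quadratic_form(eps, A):
    """Split eps' A eps into sum_i A_ii eps_i^2 (diagonal part) and
    sum_{i != j} A_ij eps_i eps_j (off-diagonal, U-statistic-type part)."""
    diag_part = np.sum(np.diag(A) * eps ** 2)
    off_part = eps @ A @ eps - diag_part
    return diag_part, off_part

def expected_parts(A, cov):
    """Exact means of both parts for eps ~ N(0, cov), using E[eps' A eps] = tr(A cov)."""
    diag_mean = np.sum(np.diag(A) * np.diag(cov))
    off_mean = np.trace(A @ cov) - diag_mean  # = sum_{i != j} A_ij cov_ij (cov symmetric)
    return diag_mean, off_mean

# Regular 8 x 8 grid on the unit square
g = np.linspace(0.0, 1.0, 8)
coords = np.array([(u, v) for u in g for v in g])
n = len(coords)
d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)

# Nadaraya-Watson weights W_i(s_k) with a Gaussian kernel, bandwidth 0.2
K = np.exp(-0.5 * (d / 0.2) ** 2)
S = K / K.sum(axis=1, keepdims=True)  # row k holds the weights at location s_k
A = S.T @ S / n                        # grid approximation of the integral of W_i W_j

_, off_indep = expected_parts(A, 0.5 * np.eye(n))        # independent errors
_, off_corr = expected_parts(A, 0.5 * np.exp(-d / 0.3))  # exponentially correlated errors
```

Here `off_corr` is positive because both `A` and the covariance have nonnegative entries, so positive spatial correlation inflates the mean of the off-diagonal part.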

For this term \(I_{32}\) we have

Under assumptions (A4)–(A8), as shown by Liu (2001),

It follows that

Similarly, it can be obtained that the asymptotic variance of \(I_{32}\) is

Hence, \(I_{32}\) converges in distribution to a normal random variable whose mean is the second term of \(b_{0\varvec{H}}\) and whose variance is \(V\).

By the Cauchy–Bunyakovsky–Schwarz inequality, the cross terms in \(T_n\) arising from the products of \(I_1\), \(I_2\) and \(I_3\) are all of smaller order. Therefore, combining the results given in Eqs. (12) and (15) with the asymptotic normality of \(I_{32}\) (with its bias (16) and its variance (17)), it follows that

where

\(\square \)
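Two steps of the proof reduce to short computations. Since (7) and the decomposition of \(T_n\) are not reproduced above, the following assumes that \(T_n = n|\varvec{H}|^{1/2}\int (I_1(\varvec{s})+I_2(\varvec{s})+I_3(\varvec{s}))^2 w(\varvec{s})\,d\varvec{s}\), that the smoother acts linearly through an operator written \(L_{\varvec{H}}\) (notation introduced here), that \(g\) is bounded and \(w\) integrable, that the \(O_p(n^{-1/2})\) order of \(m_{\hat{\varvec{\beta}}}-m_{\varvec{\beta}_0}\) holds uniformly, and that \(|\varvec{H}|\to 0\). The first line shows why the local alternatives are scaled by \(n^{-1/2}|\varvec{H}|^{-1/4}\): the contribution of \(g\) is then of order one. The second shows that a uniformly \(O_p(n^{-1/2})\) term is negligible after scaling. The third is the Cauchy–Bunyakovsky–Schwarz bound applied to a cross term.

```latex
\begin{align*}
n|\varvec{H}|^{1/2}\int \Big(n^{-1/2}|\varvec{H}|^{-1/4}(L_{\varvec{H}}g)(\varvec{s})\Big)^2 w(\varvec{s})\,d\varvec{s}
  &= \int \big((L_{\varvec{H}}g)(\varvec{s})\big)^2 w(\varvec{s})\,d\varvec{s} = O(1),\\
n|\varvec{H}|^{1/2}\,\big(O_p(n^{-1/2})\big)^2 \int w(\varvec{s})\,d\varvec{s}
  &= O_p\big(|\varvec{H}|^{1/2}\big) = o_p(1),\\
\Big|\, n|\varvec{H}|^{1/2}\int I_j(\varvec{s})\,I_k(\varvec{s})\,w(\varvec{s})\,d\varvec{s} \Big|
  &\le \Big(n|\varvec{H}|^{1/2}\int I_j^2(\varvec{s})\,w(\varvec{s})\,d\varvec{s}\Big)^{1/2}
       \Big(n|\varvec{H}|^{1/2}\int I_k^2(\varvec{s})\,w(\varvec{s})\,d\varvec{s}\Big)^{1/2}.
\end{align*}
```

Consequently, a cross term pairing a factor that is \(o_p(1)\) after scaling with one that is \(O_p(1)\) is itself \(o_p(1)\); this covers, for instance, the cross terms involving \(I_1\).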

About this article

Cite this article

Meilán-Vila, A., Fernández-Casal, R., Crujeiras, R.M. et al. A computational validation for nonparametric assessment of spatial trends. Comput Stat 36, 2939–2965 (2021). https://doi.org/10.1007/s00180-021-01108-0

DOI: https://doi.org/10.1007/s00180-021-01108-0