Abstract

Recent targeted attacks have increased significantly in sophistication, undermining the fundamental assumptions on which most cryptographic primitives rely for security. For instance, attackers launching an Advanced Persistent Threat (APT) can steal full cryptographic keys, violating the very secrecy of “secret” keys that cryptographers assume in designing secure protocols. In this article, we introduce a game-theoretic framework for modeling various computer security scenarios prevalent today, including targeted attacks. We are particularly interested in situations in which an attacker periodically compromises a system or critical resource completely, learns all its secret information and is not immediately detected by the system owner or defender. We propose a two-player game between an attacker and defender called FlipIt or The Game of “Stealthy Takeover.” In FlipIt, players compete to control a shared resource. Unlike most existing games, FlipIt allows players to move at any given time, taking control of the resource. The identity of the player controlling the resource, however, is not revealed until a player actually moves. To move, a player pays a certain move cost. The objective of each player is to control the resource a large fraction of time, while minimizing his total move cost. FlipIt provides a simple and elegant framework in which we can formally reason about the interaction between attackers and defenders in practical scenarios. In this article, we restrict ourselves to games in which one of the players (the defender) plays with a renewal strategy, one in which the intervals between consecutive moves are chosen independently and uniformly at random from a fixed probability distribution. We consider attacker strategies ranging in increasing sophistication from simple periodic strategies (with moves spaced at equal time intervals) to more complex adaptive strategies, in which moves are determined based on feedback received during the game. For different classes of strategies employed by the attacker, we determine strongly dominant strategies for both players (when they exist), strategies that achieve higher benefit than all other strategies in a particular class. When strongly dominant strategies do not exist, our goal is to characterize the residual game consisting of strategies that are not strongly dominated by other strategies. We also prove equivalence or strict inclusion of certain classes of strategies under different conditions. Our analysis of different FlipIt variants teaches cryptographers, system designers, and the community at large some valuable lessons:

-

1.

Systems should be designed under the assumption of repeated total compromise, including theft of cryptographic keys. FlipIt provides guidance on how to implement a cost-effective defensive strategy.

-

2.

Aggressive play by one player can motivate the opponent to drop out of the game (essentially not to play at all). Therefore, moving fast is a good defensive strategy, but it can only be implemented if move costs are low. We believe that virtualization has a huge potential in this respect.

-

3.

Close monitoring of one’s resources is beneficial in detecting potential attacks faster, gaining insight into attacker’s strategies, and scheduling defensive moves more effectively.

Interestingly, FlipIt finds applications in other security realms besides modeling of targeted attacks. Examples include cryptographic key rotation, password changing policies, refreshing virtual machines, and cloud auditing.

Similar content being viewed by others

1 Introduction

The cyber-security landscape has changed tremendously in recent years. Major corporations and organizations, including the United Nations, Lockheed Martin, Google and RSA, have experienced increasingly sophisticated targeted attacks (e.g., Aurora, Stuxnet). These attacks, often referred in industry circles as Advanced Persistent Threats (APTs), exhibit several distinctive characteristics compared to more traditional malware. APTs are extremely well funded and organized, have very specific objectives, and have their actions controlled by human operators. They are in general persistent for long periods of time in a system, advancing stealthily and slowly to maintain a small footprint and reduce detection risks. Typically, APTs exploit infrastructure vulnerabilities through an arsenal of zero-day exploits and human vulnerabilities through advanced social-engineering attacks.

When designing security protocols, cryptographers and computer security researchers model adversarial behavior by making simplifying assumptions. Traditional cryptography relies for security on the secrecy of cryptographic keys (or of system state). Modern cryptography considers the possibility of partial compromise of system secrets. Leakage resilient cryptography, for instance, constructs secure primitives under the assumption that a system is subject to continuous, partial compromise. But in an adversarial situation, assumptions may fail completely. For instance, attackers launching an APT can steal full cryptographic keys, crossing the line of inviolate key secrecy that cryptographers assume in designing primitives. Assumptions may also fail repeatedly, as attackers find new ways to penetrate systems.

In this paper, motivated by recent APT attacks, we take a new perspective and develop a model in which an attacker periodically compromises a system completely, in the sense of learning its entire state, including its secret keys. We are especially interested in those scenarios where theft is stealthy or covert and not immediately noticed by the victim. We model these scenarios by means of a two-player game we call FlipIt, a.k.a. The Game of “Stealthy Takeovers”.

FlipIt is a two-player game with a shared resource that both players (called herein attacker and defender) wish to control. The resource of interest could be, for instance, a secret key, a password, or an entire infrastructure, depending on the situation being modeled. Players take control of the resource by moving, and paying a certain move cost, but unlike most existing games, in FlipIt players can move at any given time. Most importantly, a player does not immediately know when the other player moves! A player only finds out about the state of the system when she moves herself. This stealthy aspect of the game is a unique feature of FlipIt, which to the best of our knowledge has not been explored in the game theory literature. The goal of each player is to maximize a metric we call benefit, defined as the fraction of time the player controls the resource minus the average move cost. A good strategy for a given player, therefore, is one that gives the player control of the resource a large fraction of the time with few moves.

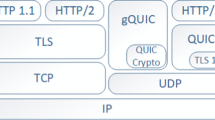

Our main goal in this paper is to find best-choice (ideally dominant or strongly dominant) playing strategies for different variants of the FlipIt game that achieve maximum benefit for each player. An instance of FlipIt is determined by the information players receive before the game, the feedback players obtain while playing the game, and a space of strategies or actions for each player. We have created a hierarchical graphical representation of different classes of strategies for one player in Fig. 1. The strategies are ordered (from top to bottom) by increasing amount of feedback received by a player during the game. As we move down the hierarchy, we encompass broader classes of strategies. In the paper, we prove results about the equivalence of different classes of strategies, (strongly) dominant and dominated strategies within a particular class, and strict inclusion of a class in a broader class. We also characterize, for several instances of FlipIt, the residual game consisting of strategies that are not strongly dominated. We detail below several FlipIt instances that we define and analyze, and then describe the theoretical results proven in the paper, as well as the results based on experimental evidence. We also discuss some of our conjectures regarding the relationship among different classes in the hierarchy, leading to some challenging open problems.

FlipIt Instances

Broadly, as graphically shown in Fig. 1, we define two main classes of strategies for a player: non-adaptive strategies (NA) in which the player does not receive any feedback during the game; and adaptive strategies (AD) in which the player receives certain types of feedback when moving.

In the class of non-adaptive strategies, one subclass of particular interest is that of renewal strategies. For a player employing a renewal strategy, the intervals between the player’s consecutive moves are independent and identically distributed random variables generated by a renewal process. Examples of renewal strategies include: the periodic strategy in which the interval between two consecutive moves is fixed at a constant value and the exponential strategy in which the intervals between consecutive moves are exponentially distributed. The moves of a player employing a non-adaptive (but possibly randomized) strategy can be determined before the game starts, as there is no feedback received during the game.

In the class of adaptive strategies, we distinguish a subclass called last move or LM, in which the player finds out upon moving the exact time when his opponent moved last. In a general adaptive strategy, a player receives upon moving complete information about his opponent’s moves (this is called full history or FH). Classes LM and FH collapse in some cases (for instance when the opponent plays with a renewal strategy).

A further dimension to a player’s strategy is the amount of information the player receives before the game starts. Besides the case in which the player receives no information about his opponent before the game, other interesting cases are: (1) rate-of-play (or RP), in which the player finds out the exact rate of play of his opponent; (2) knowledge-of-strategy (KS), in which the player finds out full information about the strategy of the opponent (but not the opponent’s randomness).

Contributions and Results

Our first contribution in this paper is the definition of the FlipIt game, and its application to various computer security scenarios, including APTs. We view FlipIt as a theoretical framework that helps provide a foundation for the science of cyber-security.

We then analyze several instances of the game of increasing sophistication and complexity. Our main theoretical and experimental results, as well as conjectures, and remaining open questions are summarized in Table 1. To simplify the exposition, we present results for the case when the attacker is more powerful than the defender and organize these results according to the strength of the attacker. Since the game is symmetric, though, analogous results apply when the defender is more powerful than the attacker. In this article, we restrict ourselves to games in which the defender plays with a renewal strategy.

To elaborate, we start by analyzing the simple game in which both players employ a periodic strategy with a random phase. (In such a strategy, moves are spaced at equal intervals, with the exception of the first randomly selected move called the phase.) We compute the Nash equilibrium point for the periodic game and show that the benefit of the player with higher move cost is always 0 in the Nash equilibrium. When move costs of both players are equal, players control the resource evenly and both achieve benefit 0.

We next consider a variant of FlipIt in which both players play with either a renewal or periodic strategy with random phase. We call such games renewal games. Our main result for the renewal game (Theorem 4 in Sect. 4.3) shows that the periodic strategy with a random phase strongly dominates all renewal strategies of fixed rate. Therefore, we can completely characterize the residual FlipIt game when players employ either renewal or periodic strategies with random phases. It consists of all periodic strategies with random phases.

We also analyze renewal games in which the attacker receives additional information about the defender at the beginning of the game, in particular the rate of play of the defender. For this version of the game, we prove that Theorem 4 still holds, and periodic strategies are strongly dominant. Additionally, we determine in Theorem 5 in Sect. 4.4 parameter choices for the rates of play of both attacker and defender that achieve maximum benefit.

Moving towards increased sophistication in the attacker’s strategy, we next analyze several FlipIt instances for the attacker in the broad Last-Move (LM) class. The attacker in this case adaptively determines his next move based on the feedback learned during the game, in particular the exact time of the defender’s last move. In this setting, periodic play for the defender is not very effective, as the attacker learning the defender’s period and last-move time can move right after the defender and achieve control of the resource most of the time. We demonstrate in Theorem 6 in Sect. 5.3 that by playing with an exponential strategy instead, the defender forces an adaptive attacker into playing periodically. (That is, the periodic strategy is the strongly dominant attacker strategy in the class of all LM strategies against an exponential defender strategy.) Paradoxically, therefore, the attacker’s strongly dominant strategy does not make use of the full knowledge the attacker gains from the feedback received during the game. (The full hierarchy in Fig. 1 for the attacker strategy collapses for exponential defender play.) Additionally, we determine optimal parameters (i.e., parameters that optimize both players’ benefits) for both the exponential defender and periodic attacker distributions in Theorems 7 and 8 in Sect. 5.3.

For both non-adaptive and fully adaptive attacker strategies, we show that by playing periodically at sufficiently high rate, the defender can force the attacker to drop out of the game. The move cost of the defender needs to be significantly lower than that of the attacker, however, for such a strategy to bring benefit to the defender. This demonstrates that the player radically lowering his move cost relative to his opponent obtains a high advantage in the game and can effectively control the resource at all times.

We propose an enhancement to the exponential distribution employed by the defender, a strategy that we call delayed exponential. In this strategy, the defender waits after each move for some fixed interval of time and then waits for an exponentially distributed time interval until making her next move. We show experimentally that with this strategy the benefit of the defender is higher than that for exponential play. This result provides evidence that the exponential strategy is not the strongly dominant renewal defender strategy against an LM attacker. There are still interesting questions for which we cannot yet provide a definitive answer: What other renewal strategies for the defender could further increase her benefit?; Is there a strongly dominant strategy for the defender in the class of all renewal strategies against an LM attacker?

Lastly, we explore a Greedy algorithm in which the attacker’s moves are chosen to maximize the local benefit achieved in an interval between two consecutive attacker moves. We analyze the Greedy strategy for several renewal defender strategies and demonstrate that it results in the strongly dominant strategy for an exponential defender strategy. We also show one example for which the Greedy strategy does not result in a dominant strategy for the attacker. We leave open the challenging problem of which strategies are (strongly) dominant for an LM attacker against particular classes of defender strategies (e.g., renewal strategies).

Lessons Derived from FlipIt

Our analysis of FlipIt teaches cryptographers, system designers, and the community at large some valuable lessons:

-

1.

Systems should be designed under the assumption of repeated total compromise, including theft of cryptographic keys. Many times, attackers cross the line of secrey cryptographers assume in their protocol designs. This view has already been expressed by the head of the NSA’s Information Assurance Directorate [28]: “No computer network can be considered completely and utterly impenetrable—not even that of the NSA. NSA works under the assumption that various parts of their systems have already been compromised, and is adjusting its actions accordingly.”

FlipIt provides guidance on how and when to implement a cost-effective defense. For instance, our analysis can help defenders determine when they should change cryptographic keys or user credentials, and how often should they clean their machines or refresh virtual machine instances. FlipIt analysis, conversely, also guides attackers in how to schedule their attacks to have maximal effect, while minimizing attack cost.

-

2.

Aggressive play by the defender can motivate the attacker to drop out of the game (and essentially not to play at all). The best defensive strategy, therefore, is to play fast (for instance by changing passwords frequently, rebooting servers often, or refreshing virtual machines at short intervals) and make the opponent drop out of the game. To be able to move fast, the defender should arrange the game so that her moves cost much less than the attacker’s moves.

An interesting research challenge for system designers is how to design an infrastructure in which refresh/clean costs are very low. We believe that virtualization has huge potential in this respect.

-

3.

As we have shown in our theoretical analysis, any amount of feedback (even limited) received during the game about the opponent benefits a player in FlipIt. Defenders, therefore, should monitor their systems frequently to gain information about the attacker’s strategy and detect potential attacks quickly after take over. Both monitoring and fast detection help a defender more effectively schedule moves, which results in more control of the resource and less budget spent on moves, increasing the defender’s benefit.

Conjectures and Open Problems

In this paper, we introduce a new framework, reflected in the FlipIt game, for modeling different cyber-security situations. The FlipIt game is unique in the game theory literature mostly for its stealthy aspect and continuous-time play. We prove some interesting results about different variants of the game, and the relationships among several classes of strategies. Nevertheless, many interesting questions remain to be answered in order to understand fully all facets of this simple (but challenging-to-analyze) game. We elaborate here on some of the more interesting open problems we have identified, and also state some conjectures for whose truth our results provide strong evidence.

-

1.

For FlipIt with non-adaptive strategies for both defender and attacker, we have fully analyzed the subclass of renewal games. An analysis of the game in which one or both players employ general non-adaptive strategies is deferred to future work. It would be interesting to determine if periodic strategies are still dominant in the general non-adaptive FlipIt game.

-

2.

In the case in which the attacker receives additional information about the defender at the beginning of the game (e.g., the rate of play of the defender or extra information about the defender’s strategy) and both players employ non-adaptive strategies, which strategies are best choices for both players? From this class of games, we analyzed the renewal game in which one player receives RP information and the other receives no information, RP, or KS. We leave open the analysis of other instances of FlipIt in this class.

-

3.

We believe that the most interesting open questions arise in the case in which one player (e.g., the attacker) is adaptive. Here we enumerate several of them:

-

When the attacker is LM, which renewal strategies for the defender are (strongly) dominant?

-

Which LM strategies are (strongly) dominant against a renewal defender?

-

For which class of defender strategies is the Greedy algorithm (strongly) dominant for an LM attacker?

-

When the attacker is LM, the relationship between the non-adaptive and adaptive classes for the defender is not completely understood. We have though strong evidence (also deferred to follow-up work) to state the following conjecture:

-

Conjecture 1

Consider an instance of FlipIt with an LM attacker. Then the defender receiving feedback during the game and playing with an adaptive strategy can strictly improve her benefit relative to non-adaptive play.

-

4.

The most challenging instance of FlipIt is when both players utilize adaptive strategies. In this setting, it would be interesting to determine (strongly) dominant strategies (if any), and to what extent they involve cooperation among players.

2 Game Motivation

We start by defining FlipIt in an informal way. In the second part of this section, we describe several practical applications that motivated the game definition. In Sect. 3, we give a formal definition of FlipIt with careful specification of players’ action spaces, strategies, and benefits.

2.1 Game Definition by Example

Consider a resource that can be controlled (or “owned”) by either of two players (attacker or defender). Ownership will change back and forth following a move of either player, with the goal of each player being to maximize the fraction of time that he or she controls the resource. A distinctive feature of FlipIt is its stealthy aspect, that is, the players do not know when the other player has taken over. Nor do they know the current ownership of the resource unless they perform a move. Also important is the fact that to move, a player must pay a move cost; players thus have a disincentive against moving too frequently.

In an example implementation of a basic version of FlipIt, each player has a control panel with a single button and a light. The player may push his/her button at any time (in the most general form of FlipIt, we consider time to be continuous, but we support discrete variants as well). Pushing the button always causes the button-pusher to take ownership of the resource. We assume that players do not push their buttons at exactly the same time (or, if they do, then ownership does not change hands).

If pushing the button causes ownership to change hands, then the light flashes when the button is pushed. We call this a “takeover.” If the button-pusher already had ownership of the resource, then the light does not flash and the button-push was wasted.

The players cannot see each other’s control panels, and thus the defender does not know when the attacker takes control, and vice versa. The only way a player can determine the state of the game is to push his/her button. Thus a move by either player has two consequences: it acquires the control of the resource (if not already controlled by the mover), but at the same time, it reveals the pre-move state of the resource to the player taking control.

There is always a cost to pushing the button. In this example, pushing the button costs the equivalent of one second of ownership. Thus, at any time t, each player’s net score is the number of seconds he has had ownership of the resource, minus the number of times he has pushed his button.

We show a graphical representation of the game in Fig. 2. The control of the resource is graphically depicted through shaded rectangles, a blue rectangle (dark gray in grayscale) representing a period of defender control, a red rectangle (light gray in grayscale) one of attacker control. Players’ moves are graphically depicted with shaded circles. A vertical arrow denotes a takeover, when a player (re)takes control of the resource upon moving.

The FlipIt game. Blue and red circles represent defender and attacker moves, respectively. Takeovers are represented by arrows. Shaded rectangles show the control of the resource—blue (dark gray in grayscale) for the defender and red (light gray in grayscale) for the attacker. We assume that upon initialization at time 0, the defender has control.

The main focus of the paper is in analyzing “optimal play” for both attacker and defender in this simple game. We wish to explore this question under various assumptions about the details of the rules, or about the strategies the players employ. In what follows, we motivate the choice of this game through several practical applications, and describe various extensions of the basic game needed to model these applications.

2.2 Motivating Applications

A prime motivation for FlipIt is the rise of Advanced Persistent Threats (APTs), which often play out as a protracted, stealthy contest for control of computing resources. FlipIt, however, finds many other natural applications in computer security, and even in other realms of trust assurance. Most of these applications correspond to slightly modified versions of the basic FlipIt game. To motivate exploration of FlipIt, we describe some of these other applications here, along with the extensions or modifications of the basic game required to model them.

2.2.1 Advanced Persistent Threats (APTs)—A Macro-level Game

An APT is a concerted, stealthy, and long-lived attack by a highly resourced entity against a critical digital resource. Publicly acknowledged APTs include disablement of an Iranian uranium enrichment facility by means of the Stuxnet worm [7] and the breach of security vendor RSA, an attack whose ultimate objective was reportedly the theft of military secrets or intellectual property from organizations relying on RSA’s authentication tokens [25].

Stealth is a key characteristic: attackers rely on extended reconnaissance and human oversight to minimize the risk of detection by the defender, which can quickly set back or thwart an APT. Similarly, a defender looks to conceal its knowledge of a detected APT, to avoid alerting the attacker and allowing it to achieve renewed stealth by changing its strategy.

In the case where the attacker looks to exercise persistent control over a set of target resources (as opposed to achieving a one-time mission), FlipIt can serve as a global model for an APT. The defender’s sensitive assets may include computers, internal networks, document repositories, and so forth. In such a macro-level view, we treat all of these assets as an aggregate FlipIt resource that the defender wishes to keep “clean.” The goal of the attacker is to compromise the resource and control it for a substantial fraction of time.

In this macro-level model, a move by the attacker is a campaign that results in control over essential target resources, that is, a thorough breach of the system. A move by the defender is a system-wide remediation campaign, e.g., patching of all machines in a network, global password refresh, reinstallation of all critical servers, etc.

The macro-level view of a defender’s critical resources as a single FlipIt resource is attractively simple. A more realistic and refined model, however, might model an APT as a series of stages along a path to system takeover, each stage individually treated as a micro-level FlipIt game. The attacker’s global level of control at a given time in this case is a function of its success in each of the component games. We now give examples of some micro-level games.

2.2.2 Micro-level Games

Host Takeover

In this version of the game, the target resource is a computing device. The goal of the attacker is to compromise the device by exploiting a software vulnerability or credential compromise. The goal of the defender is to keep the device clean through software reinstallation, patching, or other defensive steps.

An action by either side carries a cost. For the attacker, the cost of host compromise may be that of, e.g., mounting a social-engineering attack that causes a user to open an infected attachment. For the defender, cleaning a host may carry labor and lost-productivity costs.

FlipIt provides guidance to both sides on how to implement a cost-effective schedule. It helps the defender answer the question, “How regularly should I clean machines?” and the attacker, “When should I launch my next attack?”

A Variant/Extension

There are many ways to compromise or clean a host, with varying costs and criteria for success. In a refinement of the game, players might choose among a set of actions with varying costs and effectiveness.

For example, the attacker might choose between two types of move: (1) Use of a published exploit or (2) Use of a zero-day exploit, while the defender chooses either to: (1) patch a machine or (2) reinstall its software. For both players, (1) is the less expensive, but (2) the more effective. Action (2) results in takeover for either player, while action (1) will only work if the opponent’s most recent move was action (1). (For instance, patching a machine will not recover control from a zero-day exploit, but software reinstallation will.)

Refreshing Virtual Machines (VMs)

Virtualization is seeing heavy use today in the deployment of servers in data centers. As individual servers may experience periods of idleness, consolidating multiple servers as VMs on a single physical host often results in greater hardware utilization. Similarly, Virtual Desktop Infrastructure (VDI) is an emerging workplace technology that provisions users with VMs (desktops) maintained in centrally managed servers. In this model, users are not bound to particular physical machines. They can access their virtual desktops from any endpoint device available to them, even smart phones.

While virtualization exhibits many usability challenges, one key advantage is a security feature: VMs can be periodically refreshed (or built from scratch) from “clean” images, and data easily restored from backups maintained at the central server.

Takeover of a VM results in a game very similar to that for a physical host. Virtualization is of particular interest in the context of FlipIt, though, because FlipIt offers a means of measuring (or at least qualitatively illustrating) its security benefits. Refreshing a VM is much less cumbersome than rebuilding the software stack in a physical host. In other words, virtualization lowers the move cost for the defender. Optimal defender play will therefore result in resource control for a higher proportion of the time than play against a comparable attacker on a physical host.

An Extension

Virtualization also brings about an interesting question at the macro-level. How can refreshes of individual VMs be best scheduled while maintaining service levels for a data center as a whole? In other words, how can refresh schedules best be crafted to meet the dual goals of security and avoidance of simultaneous outage of many servers/VDI instances?

Password Reset

When an account or other resource is password-protected, control by its owner relies on the password’s secrecy. Password compromise may be modeled as a FlipIt game in which a move by the attacker results in its learning the password. (The attacker may run a password cracker or purchase a password in an underground forum.) The defender regains control by resetting the password, and thus restoring its secrecy.

This game differs somewhat from basic FlipIt. An attacker can detect the reset of a password it has compromised simply by trying to log into the corresponding account. A defender, though, does not learn on resetting a password whether it has been compromised by an attacker. Thus, the defender can only play non-adaptively, while the attacker has a second move option available, a probe move that reveals the state of control of the resource.

2.2.3 Other Applications

Key Rotation

A common hedge against the compromise of cryptographic keys is key rotation, the periodic generation and distribution of fresh keys by a trusted key-management service. Less common in practice, but well explored in the research literature is key-evolving cryptography, a related approach in which new keys are generated by their owner, either in isolation or jointly with a second party. In all of these schemes, the aim is for a defender to change keys so that compromise by an attacker in a given epoch (interval of time) does not impact the secrecy of keys in other epochs.

Forward-secure protocols [12] protect the keys of past epochs, but not those of future epochs. Key-insulated [6] and intrusion-resilient [6] cryptography protect the keys of both past and future epochs, at the cost of involving a second party in key updates. Cryptographic tamper evidence [11] provides a mechanism for detecting compromise when both the valid owner and the attacker make use of a compromised secret key.

Key updates in all of these schemes occur at regular time intervals, i.e., epochs are of fixed length. FlipIt provides a useful insight here, namely the potential benefit of variable-length epochs.

The mapping of this scenario onto FlipIt depends upon the nature of the target key. An attacker can make use of a decryption key in a strongly stealthy way, i.e., can eavesdrop on communications without ready detection, and can also easily detect a change in key. In this case, the two players knowledge is asymmetric. The defender must play non-adaptively, while the attacker has the option of a probe move at any time, i.e., can determine the state of the system at low cost.

Use of a signing key, on the other hand, can betray compromise by an attacker, as an invalidly signed message may appear anomalous due to its content or repudiation by the key owner.

Variant/Extension

To reflect the information disclosed by use of a signing key, we might consider a variant of FlipIt in which system state is revealed probabilistically. Compromise of a signing key by an attacker only comes to light if the defender actually intercepts a message signed by the attacker and determines from the nature of the signed message or other records that the signature is counterfeit. Similarly, the attacker only learns of a key update by the defender when the attacker discovers a signature by the defender under a new key. (In a further refinement, we might distinguish between moves that update/compromise a key and those involving its actual use for signing.)

Cloud Service Auditing

Cloud computing is a recent swing of the pendulum away from endpoint-heavy computing toward centralization of computing resources [16]. Its benefits are many, including, as noted above, certain forms of enhanced security offered by virtualization, a common element of cloud infrastructure. Cloud computing has a notable drawback, though: it requires users (often called “tenants”) to rely on the trustworthiness of service providers for both reliability and security.

To return visibility to tenants, a number of audit protocols have been proposed that enable verification of service-level agreement (SLA) compliance by a cloud service provider [1–4, 13, 26]. The strongest of these schemes are challenge-response protocols. In a Proof of Retrievability (PoR), for instance, a tenant challenges a cloud service provider to demonstrate that a stored file is remotely retrievable, i.e., is fully intact and accessible via a standard application interface. Other protocols demonstrate properties such as quality-of-service (QoS) levels, e.g., retention of a file in high-tier storage, and storage redundancy [4], i.e., distribution of a file across multiple hard drives.

Execution of an audit protocol carries a cost: A PoR, for instance, requires the retrieval of some file elements by the cloud and their verification by a tenant. It is natural then to ask: What is the best way for a tenant to schedule challenges in an audit scheme?. (Conversely, what is the best way for a cloud service provider to cheat an auditor?) This question goes unaddressed in the literature on cloud audit schemes. (The same question arises in other forms of audit, and does see some treatment [5, 20].)

Auditing for verification of SLA compliance is particularly amenable to modeling in FlipIt. A move by the defender (tenant) is a challenge/audit, one that forces the provider into compliance (e.g., placement of a file in high-tier storage) if it has lapsed. A move by the attacker (cloud) is a downgrading of its service level in violation of an SLA (e.g., relegation of a file to a low storage tier). The metric of interest is the fraction of time the provider meets the SLA.

3 Formal Definition and Notation

This section gives a formal definition of the stealthy takeover game, and introduces various pieces of useful notation.

Players

There are two players: the defender is the “good” player, identified with 0 (or Alice). The attacker is the “bad” player, identified with 1 (or Bob). It is convenient in our development to treat the game as symmetric between the two players.

Time

The game begins at time t=0 and continues indefinitely as t→∞. In the general form of the game, time is viewed as being continuous, but we also support a version of the game with discrete time.

Game State

The time-dependent variable C=C(t) denotes the current player controlling the resource at time t; C(t) is either 0 or 1 at any time t. We say that the game is in a “good state” if C(t)=0, and in a “bad state” if C(t)=1.

For i=0,1 we also let

denote whether the game is in a good state for player i at time t. Here I is an “indicator function”: I(⋅)=1 if its argument is true, and 0 otherwise. Thus, C 1(t)=C(t) and C 0(t)=1−C 1(t). The use of C 0 and C 1 allows us to present the game in a symmetric manner.

The game begins in a good state: C(0)=0.

Moves

A player may “move” (push his/her button) at any time, but is only allowed to push the button a finite number of times in any finite time interval. The player may not, for example, push the button at times 1/2,2/3,3/4,…, as this means pushing the button an infinite number of times in the time interval [0,1]. (One could even impose an explicit lower bound on the time allowed between two button-pushes by the same player.)

A player cannot move more than once at a given time. We allow different players to play at the same time, although with typical strategies this happens with probability 0. If it does happen, then the moves “cancel” and no change of state happens. (This tie-breaking rule makes the game fully symmetric, which we prefer to alternative approaches such as giving a preference to one of the players when breaking a tie.) It is convenient to have a framework that handles ties smoothly, since discrete versions of the game, wherein all moves happen at integer times, might also be of interest. In such variants ties may be relatively common.

We denote the sequence of move times, for moves by both players, as an infinite non-decreasing sequence:

The sequence might be non-decreasing, rather than strictly increasing, since we allow the two players move at the same time.

We let p k denote the player who made the kth move, so that p k ∈{0,1}. We let p denote the sequence of player identities:

We assume that t 1=0 and p 1=0; the good player (the defender) moves first at time t=0 to start the game.

For i=0,1 we let

denote the infinite increasing sequence of times when player i moves.

The sequences t 0 and t 1 are disjoint subsequences of the sequence t. Every element t k of t is either an element t 0,j of t 0 or an element t 1,l of t 1.

The game’s state variable C(t) denotes the player who has moved most recently (not including the current instant t), so that

When C(t)=i then player i has moved most recently and is “in control of the game”, or “in possession of the resource.” We assume, again, that C(0)=0.

Note that C(t k )=p k−1; this is convenient for our development, since if a player moves at time t k then C(t k ) denotes the player who was previously in control of the game (which could be either player).

For compatibility with our tie-breaking rule, we assume that if t k =t k+1 (a tie has occurred), then the two moves at times t k and t k+1 have subscripts ordered so that no net change of state occurs. That is, we assume that p k =1−C(t k ) and p k+1=1−p k . Thus, each button-push causes a change of state, but no net change of state occurs.

We let n i (t) denote the number of moves made by player i up to and including time t, and let

denote the total number of moves made by both players up to and including time t.

For t>0 and i=0,1, we let

denote the average move rate by player i up to time t.

We let r i (t) denote the time of the most recent move by player i; this is the largest value of t i,k that is less than t (if player i has not moved since the beginning of the game, then we define r i (t)=−1). Player 0 always moves at time 0, and therefore r 0(t)≥0. We let r(t)=max(r 0(t),r 1(t))≥0 denote the time of the most recent move by either player.

Feedback During the Game

We distinguish various types of feedback that a player may obtain during the game (specifically upon moving).

It is one of the most interesting aspects of this game that the players do not automatically find out when the other player has last moved; moves are stealthy. A player must move himself to find out (and reassert control).

We let ϕ i (t k ) denote the feedback player i obtains when the player moves at time t k . This feedback may depend on which variant of the game is being played.

-

Non-adaptive [NA]. In this case, a player does not receive any useful feedback whatsoever when he moves; the feedback function is constant.

$$\phi_i(t_k) = 0. $$ -

Last move [LM]. The player moving at time t k >0 finds out the exact time when the opponent played last before time t k . That is, player i learns the value:

$$\phi_i(t_k)=r_{1-i}(t_k). $$ -

Full history [FH]. The mover finds out the complete history of moves made by both players so far:

$$\phi_i(t_k) = \bigl((t_1,t_2, \ldots,t_k),(p_1,p_2,\ldots,p_k) \bigr). $$

We abbreviate these forms of feedback as NA, LM, and FH, and we define other types of feedback in Sect. 7. We consider “non-adaptive” (NA) feedback to be the default (standard) version of the game. When there is feedback and the game is adaptive, then players interact in a meaningful way and therefore cooperative (e.g.,“tit-for-tat”) strategies may become relevant.

Views and History

A view is the history of the game from one player’s viewpoint, from the beginning of the game up to time t. It lists every time that player moved, and the feedback received for that move.

For example, the view for player i at time t is the list:

where t i,j is the time of player i’s jth move, her last move up to time t, and ϕ(t i,j ) is the feedback player i obtains when making her jth move.

A history of a game is the pair of the players’ views.

Strategies

A strategy for playing this game is a (possibly randomized) mapping S from views to positive real numbers. If S is a strategy and v a view of length j, then S(v) denotes the time for the player to wait before making move j+1, so that t i,j+1=t i,j +S(v).

The next view for the player following strategy S will thus be

We define now several classes of strategies, and we refer the reader to Fig. 1 for a hierarchy of these classes.

Non-adaptive Strategies

We say that a strategy is non-adaptive if it does not require feedback received during the game, and we denote by \(\mathcal{N}\) the class of all non-adaptive strategies. A player with a non-adaptive strategy plays in the same manner against every opponent. A player with a non-adaptive strategy can in principle generate the time sequence for all of his moves in advance, since they do not depend on what the other player does. They may, however, depend on some independent source of randomness; non-adaptive strategies may be randomized.

Renewal Strategies

Renewal strategies are non-adaptive strategies for which the intervals between consecutive moves are generated by a renewal process (as defined, for instance, by Feller [8]). Therefore, the inter-arrival times between moves are independent and identical distributed random variables chosen from a probability density function f. As the name suggests, these strategies are “renewed” after each move: the interval until the next move only depends on the current move time and not on previous history.

Periodic Strategies

An example of a simple renewal strategy is a periodic strategy. We call a strategy periodic if there is a δ such that the player always presses his button again once exactly δ seconds have elapsed since his last button-push. We assume that the periodic strategy has a random phase, i.e., the first move is selected uniformly at random from interval [0,δ] (if the strategy is completely deterministic an adaptive opponent can find out the exact move times and schedule his moves accordingly).

Exponential Strategies

We call a strategy exponential or Poisson if the player pushes his button in a Poisson manner: there is some rate λ such that in any short time increment Δ the probability of a button-push is approximately λ⋅Δ. In this case, S(v) has an exponential distribution and this results in a particular instance of a renewal strategy.

Adaptive Strategies

The class of adaptive strategies encompasses strategies in which players receive feedback during the game. In the LM class of strategies, denoted \(\mathcal {A}_{ \mathsf{LM}}\), a player receives last-move feedback, while in the FH-adaptive class, denoted \(\mathcal{A}_{ \mathsf{FH}}\), a player receives full-history feedback, as previously defined.

No-play Strategies

We denote by Φ the strategy of not playing at all (effectively dropping out of the game). We will show that this strategy is sometimes the best response for a player against an opponent playing extremely fast.

Information Received Before the Game Starts

Besides receiving feedback during the game, sometimes players receive additional information about the opponent before the game starts. We capture this with ϕ i (0), which denotes the information received by player i before the game starts. There are several cases we consider:

-

Rate of Play [RP]. In this version of the game, player i finds out the limit of the rate of play α 1−i (t) of its opponent at the beginning of the game (assuming that the rate of play converges to a finite value):

$$\phi_i(0) = \lim_{t \rightarrow\infty} \alpha_{1-i}(t). $$No additional information, however, is revealed to the player about his opponent’s moves during the game.

-

Knowledge of Strategy [KS]. Player i might find additional information about the opponent’s strategy. For instance, if the opponent (player 1−i) employs a renewal strategy generated by probability density function f, then KS information for player i is the exact distribution f:

$$\phi_{i}(0) = f. $$Knowledge of the renewal distribution in this case does not uniquely determine the moves of player 1−i, as the randomness used by player 1−i is not divulged to player i. In this paper, we only use KS in conjunction with renewal strategies, but the concept can be generalized to other classes of strategies.

RP and KS are meaningfully applicable only to a non-adaptive attacker. An adaptive attacker from class LM or FH can estimate the rate of play of the defender, as well as the defender’s strategy during the game from the information received when moving. But a non-adaptive attacker receiving RP or KS information before the game starts can adapt his strategy and base moves on pre-game knowledge about the opponent’s strategy.

Pre-game information received at the beginning of the game, hence, could be used, in conjunction with the feedback received while moving, to determine the strategy of playing the game. We can more formally extend the definition of views to encompass this additional amount of information as

Gains and Benefits

Players receive benefit equal to the number of time units for which they are the most recent mover, minus the cost of making their moves. We denote the cost of a move for player i by k i ; it is important for our modeling goals that these costs could be quite different.

Player i’s total gain G i in a given game (before subtracting off the cost of moves) is just the integral of C i :

Thus G i (t) denotes the total amount of time that player i has owned the resource (controlled the game) from the start of the game up to time t, and

The average gain rate for player i is defined as

so that γ i (t) is the fraction of time that player i has been in control of the game up to time t. Thus, for all t>0:

We let B i (t) denote player i’s net benefit up to time t; this is the gain (total possession time) minus the cost of player i’s moves so far:

We also call B i (t) the score of player i at time t. The maximum benefit or score B 0(t)=t−k 0 for player 0 would be obtained if neither player moved again after player 0 took control at time t=0.

We let β i (t) denote player i’s average benefit rate up to time t:

this is equal to the fraction of time the resource has been owned by player i, minus the cost rate for moving.

In a given game, we define player i’s asymptotic benefit rate or simply benefit as

We use lim inf since β i (t) may not have limiting values as t increases to infinity.

An alternative standard and reasonable approach for summarizing an infinite sequence of gains and losses would be to use a discount rate λ<1 so that a unit gain at future time t is worth only λ t. Our current approach is simpler when at least one of the players is non-adaptive, so we shall omit consideration of the alternative approach here.

While we have defined the benefit for a given game instance, it is useful to extend the notion over strategies. Let S i be the strategy of player i, for i∈{0,1}. Then the benefit of player i for the FlipIt game given by strategies (S 0,S 1) (denoted β i (S 0,S 1)) is defined as the expectation of the benefit achieved in a game instance given by strategies (S 0,S 1) (the expectation is taken over the coin flips of S 0 and S 1).

Game-Theoretic Definitions

We denote by \(\mathsf{FlipIt}( \mathcal {C}_{0}, \mathcal{C}_{1})\) the FlipIt game in which player i chooses a strategy from class \(\mathcal{C}_{i}\), for i∈{0,1}. For a particular choice of strategies \(S_{0} \in \mathcal{C}_{0}\) and \(S_{1} \in \mathcal{C}_{1}\), the benefit of player i is defined as above. In our game, benefits are equivalent to the notion of utility used in game theory.

Using terminology from the game theory literature:

-

A strategy \(S_{0} \in \mathcal{C}_{0}\) is strongly dominated for player 0 in game \(\mathsf{FlipIt}( \mathcal {C}_{0}, \mathcal{C}_{1})\) if there exists another strategy \(S_{0}' \in \mathcal {C}_{0}\) such that

$$\beta_0(S_0,S_1) < \beta_0 \bigl(S_0',S_1\bigr), \quad \forall S_1 \in \mathcal{C}_1. $$ -

A strategy \(S_{0} \in \mathcal{C}_{0}\) is weakly dominated for player 0 in game \(\mathsf{FlipIt}( \mathcal {C}_{0}, \mathcal{C}_{1})\) if there exists another strategy \(S_{0}' \in \mathcal{C}_{0}\) such that

$$\beta_0(S_0,S_1) \le\beta_0 \bigl(S_0',S_1\bigr), \quad \forall S_1 \in \mathcal{C}_1, $$with at least one S 1 for which the inequality is strict.

-

A strategy S 0 is strongly dominant for player 0 in game \(\mathsf{FlipIt}( \mathcal {C}_{0}, \mathcal{C}_{1})\) if

$$\beta_0(S_0,S_1) > \beta_0 \bigl(S_0',S_1\bigr), \quad \forall S_0' \in \mathcal{C}_0, \ \forall S_1 \in \mathcal{C}_1. $$ -

A strategy S 0 is weakly dominant for player 0 in game \(\mathsf{FlipIt}( \mathcal {C}_{0}, \mathcal{C}_{1})\) if

$$\beta_0(S_0,S_1) \ge\beta_0 \bigl(S_0',S_1\bigr), \quad \forall S_0' \in \mathcal{C}_0, \ \forall S_1 \in \mathcal{C}_1. $$

Similar definitions can be given for player 1 since the game is fully symmetric.

There is an implicit assumption in the game theory literature that a rational player does not choose to play a strategy that is strongly dominated by other strategies. Therefore, iterative elimination of strongly dominated strategies for both players is a standard technique used to reduce the space of strategies available to each player (see, for instance, the book by Myerson [18]). We denote by \(\mathsf{FlipIt}^{*}( \mathcal {C}_{0}, \mathcal{C}_{1})\) the residual FlipIt game consisting of surviving strategies after elimination of strongly dominated strategies from classes \(\mathcal{C}_{0}\) and \(\mathcal{C}_{1}\). A rational player will always choose a strategy from the residual game.

A Nash equilibrium for the game \(\mathsf {FlipIt}( \mathcal{C}_{0}, \mathcal{C}_{1})\) is a pair of strategies \((S_{0},S_{1}) \in \mathcal{C}_{0} \times \mathcal{C}_{1}\) such that

4 Renewal Games

In this section, we analyze FlipIt over a simple but interesting class of strategies, those in which a player “renews” play after every move, in the sense of selecting each interval between moves independently and uniformly at random from a fixed distribution. Such a player makes moves without regard to his history of previous moves, and also without feedback about his opponent’s moves. The player’s next move time depends only on the time he moved last.

In this class of strategies, called renewal strategies, the intervals between each player’s move times are generated by a renewal process, a well-studied type of stochastic process. (See, for example, the books of Feller [8], Ross [23] and Gallager [9] that offer a formal treatment of renewal theory.) We are interested in analyzing the FlipIt game played with either renewal or periodic strategies (with random phases as defined in Sect. 4.1), finding Nash equilibria for particular instances of the game, determining strongly dominated and dominant strategies (when they exist), and characterizing the residual FlipIt game.

These questions turn out to be challenging. While simple to characterize, renewal strategies lead to fairly complex mathematical analysis. Our main result in this section is that renewal strategies are strongly dominated by periodic strategies (against an opponent playing also a renewal or periodic strategy), and the surviving strategies in the residual FlipIt game are the periodic strategies. In addition, in the subclass of renewal strategies with a fixed rate of play, the periodic strategy is the strongly dominant one.

We also analyze in depth the FlipIt game in which both players employ a periodic strategy with a random phase. We compute the Nash equilibria for different conditions on move costs, and discuss the choice of the rate of play when the attacker receives feedback according to NA and RP definitions given in Sect. 3.

4.1 Playing Periodically

We start this section by analyzing a very simple instance of the FlipIt game. We consider a non-adaptive continuous game in which both players employ a periodic strategy with a random phase. If the strategy of one player is completely deterministic, then the opponent has full information about the deterministic player’s move times and therefore can schedule his moves to control the resource at all times. We introduce phase randomization into the periodic strategy for this reason, selecting the time of the first move uniformly at random from some interval.

More specifically, a periodic strategy with random phase is characterized by the fixed interval between consecutive moves, denoted δ. We assume that the first move called the phase move is chosen uniformly at random in interval [0,δ]. The average play rate (excluding the first move) is given by α=1/δ. We denote by P α the periodic strategy with random phase of rate α and \(\mathcal{P}\) the class of all periodic strategies with random phases:

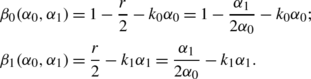

An interesting game to consider is one in which player i employs strategy \(P_{\alpha_{i}}\). Let δ i =1/α i be the period, and k i the move cost of player i, for i∈{0,1}. This game is graphically depicted in Fig. 3.

As an initial exercise, and to get acquainted to the model and definitions, we start by computing benefits for both players in the periodic game.

Computing Benefits

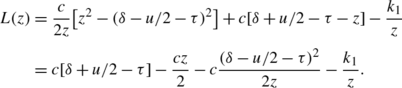

For the periodic game defined above, the benefits of both players depend on rates of play α 0 and α 1. We thus denote the benefit of player i by β i (α 0,α 1) (this is the expected benefit computed over the random phase selections of the two players as in the definition given in Sect. 3). To compute both players’ benefits, we consider two cases:

- Case 1::

-

α 0≥α 1 (The defender plays as least as fast as the attacker.)

Let r=δ 0/δ 1. The intervals between two consecutive defender’s moves have length δ 0. Consider a given defender move interval. The probability over the attacker’s phase selection that the attacker moves in this interval is r. Given that the attacker moves within the interval, he moves exactly once within the interval (since δ 0≤δ 1) and his move is distributed uniformly at random. Thus the expected period of attacker control within the interval is r/2. We can therefore express the players’ benefits as

- Case 2::

-

α 0≤α 1 (The defender plays no faster than the attacker.)

Similar analysis yields benefits

Nash Equilibria

As a second step, we are interested in finding Nash equilibria, points for which neither player will increase his benefit by changing his rate of play. More formally, a Nash equilibrium for the periodic game is a point \((\alpha_{0}^{*},\alpha_{1}^{*})\) such that the defender’s benefit \(\beta_{0}(\alpha_{0},\alpha_{1}^{*})\) is maximized at \(\alpha_{0} = \alpha _{0}^{*}\) and the attacker’s benefit \(\beta_{1}(\alpha_{0}^{*},\alpha_{1})\) is maximized at \(\alpha_{1} = \alpha_{1}^{*}\).

To begin with, some useful notation. We denote by opt 0(α 1) the set of values (rates of play α 0) that optimize the benefit of the defender for a fixed rate of play α 1 of the attacker. Similarly, we denote by opt 1(α 0) the set of values (rates of play α 1) that optimize the benefit of the attacker for a fixed rate of play α 0 of the defender. The following theorem specifies Nash equilibria for the periodic game and is proven in Appendix A.

Theorem 1

The FlipIt game \(\mathsf {FlipIt}( \mathcal{P}, \mathcal{P})\) in which both players employ periodic strategies with random phases has the following Nash equilibria:

We illustrate the case for k 0=1 and k 1=1.5 in Fig. 4. We depict in the figure the two curves opt 0 and opt 1 and the unique Nash equilibrium at their intersection. (See the proof of Theorem 1 for an explicit formula for opt 0 and opt 1.) The highlighted blue (dark gray in grayscale) and red (light gray in grayscale) regions correspond to cases for which it is optimal for the defender, and the attacker, respectively, not to move at all (when opt 0(α 1)=0 or opt 1(α 0)=0, respectively).

Parameter Choices

Finally, we would like to show the impact the parameter choice has on the benefits achieved by both players in the game. The periodic strategy with random phase for player i is uniquely determined by choosing rate of play α i . Again, in the non-adaptive version of the game neither player has any information about the opponent’s strategy (expect for the move costs, which we assume are known at the beginning of the game). Therefore, players need to decide upon a rate of play based only on knowledge of the move costs.

We show in Fig. 5 the benefits of both players as a function of play rates α 0 and α 1. We plot these graphs for different values of move costs k 0 and k 1. Darker shades correspond to higher values of the benefits, and white squares correspond to negative or zero benefit.

Defender’s (left, depicted in blue, dark gray in gray scale) and attacker’s (right, depicted in red, light gray in gray scale) benefit for various move costs. Benefits are represented as a function of rates of play α 0 and α 1. Darker shades correspond to higher values of the benefits, and white squares correspond to negative or zero benefit.

The top four graphs clearly demonstrate the advantage the player with a lower move cost has on its opponent. Therefore, an important lesson derived from FlipIt is that by lowering the move cost a player can obtain higher benefit, no matter how the opponent plays! The bottom four graphs compare the benefits of both players for equal move costs (set at 1 and 2, respectively). The graphs show that when move costs are equal, both players can achieve similar benefits, and neither has an advantage over the other. As expected, the benefits of both players are negatively affected by increasing the move costs. We also notice that in all cases playing too fast results eventually in negative benefit (depicted by white squares), as the cost of the moves exceeds that of the gain achieved from controlling the resource.

Achieve Good Benefits in Any Game Instance

We notice that if both players play periodically with random phase, the choice of the random phase could result in gain 0 for one of the players (in the worst case). This happens if the opponent always plays “right after” and essentially controls the resource at all times. The benefits computed above are averages over many game instances, each with a different random phase.

We can, nevertheless, guarantee that expected benefits are achieved in any game instance by “re-phasing” during the game. The idea is for a player to pick a new random phase at moves with indices a 1,a 2,…,a n ,…. It turns out that for a j =j 2, the expectation of β i (t) (the benefit up to time t) over all random phases converges to β i (α 0,α 1) as t→∞ and by the law of large numbers its standard deviation converges to 0 as t→∞. This can be used to show that each game instance achieves benefits β 0(α 0,α 1) and β 1(α 0,α 1) computed above.

Forcing the Attacker to Drop Out

We make the observation that if the defender plays extremely fast (periodic with rate α 0>1/2k 1), the attacker’s strongly dominant non-adaptive strategy is to drop out of the game. The reason is that in each interval between defender’s consecutive moves (of length δ 0=1/α 0<2k 1), the attacker can control the resource on average at most half of the time, resulting in gain strictly less than k 1. However, the attacker has to spend k 1 for each move, and therefore the benefit in each interval is negative.

We can characterize the residual FlipIt game in this case:

4.2 General Renewal Strategies

We now analyze the general case of non-adaptive renewal games. In this class of games both players’s strategies are non-adaptive and the inter-arrival times between each player’s moves are produced by a renewal process (characterized by a fixed probability distribution). We start by presenting some well-known results from renewal theory that will be useful in our analysis and then present a detailed analysis of the benefits achieved in the renewal game.

Renewal Theory Results

Let {X j } j≥0 be independent and identically distributed random variables chosen from a common probability density function f. Let F be the corresponding cumulative distribution function. {X j } j≥0 can be interpreted as inter-arrival times between events in a renewal process: the nth event arrives at time \(S_{n} = \sum_{j=0}^{n}X_{j}\). Let μ=E[X j ], for all j≥0.

A renewal process generated by probability density function f is called arithmetic if inter-arrival times are all integer multiples of a real number d. The span of an arithmetic distribution is the largest d for which this property holds. A renewal process with d=0 is called non-arithmetic.

For a random variable X given by a probability density function f, corresponding cumulative distribution F, and expected value μ=E[X], we define the size-bias density function as

and the size-bias cumulative distribution function as

The age function Z(t) of a renewal process is defined at time interval t as the time since the last arrival. Denote by f Z(t) and F Z(t) the age density and cumulative distribution functions, respectively. The following lemma [8] states that as t goes to ∞, the age density and cumulative distribution functions converge to the size-bias density and cumulative distribution functions, respectively:

Lemma 1

For a non-arithmetic renewal process given by probability density function f, the age density and cumulative distribution functions converge as

Playing FlipIt with Renewal Strategies

A renewal strategy, as explained above, is one in which a player’s moves are generated by a renewal process. In a non-arithmetic renewal strategy, the player’s moves are generated by a non-arithmetic renewal process. We denote by R f the renewal strategy generated by a non-arithmetic renewal process with probability density function f, and by \(\mathcal{R}\) the class of all non-arithmetic renewal strategies:

Here we consider the FlipIt game in which both players employ non-arithmetic renewal strategies: player i uses strategy \(R_{f_{i}}\). Let us denote the intervals between defender’s moves as {X j } j≥0 and the intervals between attacker’s moves as {Y j } j≥0. {X j } j≥0 are identically distributed random variables chosen independently from probability density function f 0 with average μ 0, while {Y j } j≥0 are independent identically distributed random variables chosen from probability density function f 1 with average μ 1. Let α i =1/μ i be the rate of play of player i.

We denote the corresponding cumulative distribution functions as F 0 and F 1. Since both renewal processes are non-arithmetic, we can apply Lemma 1 and infer that the age density and cumulative distribution functions of both processes converge. Denote by \(f_{0}^{*}, f^{*}_{1}\) and \(F_{0}^{*}, F^{*}_{1}\) the size-bias density and cumulative distribution functions for the two distributions.

A graphical representation of this game is given in Fig. 6.

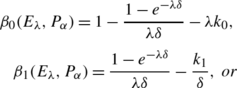

In this context, the following theorem (proven in Appendix A) gives a formula for the players’ benefits:

Theorem 2

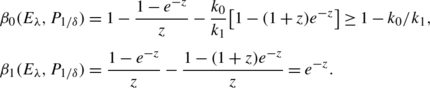

In the non-adaptive renewal FlipIt game \(\mathsf {FlipIt}( \mathcal{R}, \mathcal{R})\), the players’ benefits for strategies \((R_{f_{0}},R_{f_{1}}) \in \mathcal{R}\times \mathcal{R}\) are

Interestingly, Theorem 2 can be generalized to strategies from class \(\mathcal{R}\cup \mathcal{P}\). We observe that for strategy \(P_{\alpha} \in \mathcal{P}\), the density and cumulative distribution of the underlying periodic distribution are

The size-bias density and cumulative distribution functions for the periodic distribution are

Based on these observations, we obtain the following theorem, whose proof is given in Appendix A.

Theorem 3

In the non-adaptive FlipIt game \(\mathsf {FlipIt}( \mathcal{R}\cup \mathcal {P}, \mathcal{R}\cup \mathcal{P})\), the players’ benefits for strategies \((S_{0},S_{1}) \in ( \mathcal{R}\cup \mathcal{P}) \times ( \mathcal{R}\cup \mathcal{P})\) are

4.3 The Residual Renewal Game

We are now able to completely analyze the FlipIt game with strategies in class \(\mathcal{R}\cup \mathcal{P}\). We show first that for a fixed rate of play α i of player i the periodic strategy with random phase is the strongly dominant strategy for player i (or, alternatively, all non-arithmetic renewal strategies are strongly dominated by the periodic strategy with the same rate). As a consequence, after both players iteratively eliminate the strongly dominated strategies, the surviving strategies in the residual game \(\mathsf{FlipIt}^{*}( \mathcal{R}\cup \mathcal{P} , \mathcal{R}\cup \mathcal{P})\) are the periodic strategies.

For a fixed α>0, we denote by \(\mathcal {R}_{\alpha}\) the class of all non-arithmetic renewal strategies of fixed rate α, and by \(\mathcal{P}_{\alpha}\) the class of all periodic strategies of rate α. As there exists only one periodic strategy of rate α, \(\mathcal{P}_{\alpha} = \{P_{\alpha}\}\). The proof of the following theorem is given in Appendix A.

Theorem 4

-

1.

For a fixed rate of play α 0 of the defender, strategy \(P_{\alpha_{0}}\) is strongly dominant for the defender in game \(\mathsf{FlipIt}( \mathcal{R}_{\alpha_{0}} \cup \mathcal{P}_{\alpha_{0}}, \mathcal{R}\cup \mathcal{P})\). A similar result holds for the attacker.

-

2.

The surviving strategies in the residual FlipIt game \(\mathsf{FlipIt}( \mathcal{R}\cup \mathcal{P} , \mathcal{R}\cup \mathcal{P})\) are strategies from class \(\mathcal{P}\):

$$\mathsf{FlipIt}^*( \mathcal{R}\cup \mathcal{P}, \mathcal{R} \cup \mathcal{P}) = \mathsf {FlipIt}( \mathcal{P}, \mathcal{P}). $$

Remark

While we have proved Theorem 4 for the non-adaptive case, the result also holds if one or both players receive information at the beginning of the game according to the RP definition. This follows immediately from the proof of Theorem 4 given in Appendix A.

4.4 Renewal Games with an RP Attacker

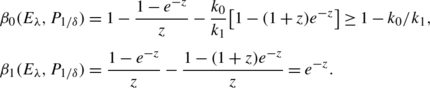

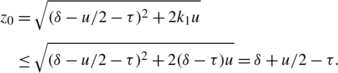

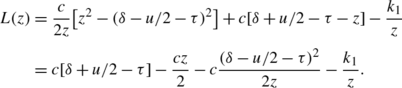

In the case in which one player (assume the attacker) receives feedback according to RP (i.e., knows the rate of play of the defender), we can provide more guidance to both players on choosing the rates of play that achieve maximal benefit. In particular, we consider a scenario in which the attacker finds out the rate of play of the defender before the game starts, and we assume that the attacker plays rationally in the sense that he tries to maximize his own benefit: for a fixed rate of play α 0 of the defender, the attacker chooses to play with rate \(\alpha_{1}^{*} = \mathsf{argmax} \mbox{ } \beta_{1}(\alpha_{0},\cdot )\). Under this circumstance, the defender can also determine for each fixed rate of play α 0, the rate of play \(\alpha_{1}^{*}\) of a rational attacker. In a pre-game strategy selection phase, then, a rational defender selects and announces the rate of play \(\alpha_{0}^{*}\) that maximizes her own benefit: \(\alpha_{0}^{*} = \mathsf{argmax} \mbox{ } \beta_{0}(\cdot,\alpha_{1}^{*})\). The following theorem provides exact values of \(\alpha_{0}^{*}\) and \(\alpha_{1}^{*}\), as well as maximum benefits achieved by playing at these rates.

Theorem 5

Consider the periodic FlipIt game with NA defender and RP attacker. Assume that the attacker always chooses his rate of play to optimize his benefit: \(\alpha_{1}^{*} = \operatorname{\mathsf{argmax}} \beta_{1}(\alpha _{0},\cdot)\) given a fixed rate of play of the defender α 0, and the defender chooses her rate of play \(\alpha_{0}^{*}\) that achieves optimal benefit: \(\alpha_{0}^{*} = \operatorname{\mathsf{argmax}} \beta _{0}(\cdot,\alpha_{1}^{*})\). Then:

-

1.

For \(k_{1} < (4-\sqrt{12})k_{0}\) the rates of play that optimize each player’s benefit are

$$\alpha_0^* = \frac{k_1}{8k_0^2}; \qquad \alpha_1^* = \frac {1}{4k_0}. $$The maximum benefits for the players are

$$\beta_0\bigl(\alpha_0^*,\alpha_1^*\bigr) = \frac{k_1}{8k_0}; \qquad \beta_1\bigl( \alpha_0^*,\alpha_1^*\bigr) = 1 - \frac{k_1}{2k_0}. $$ -

2.

For \(k_{1} \ge(4-\sqrt{12})k_{0}\) the rates of play that optimize each player’s benefit are (↓ denotes convergence from above):

$$\alpha_0^* \downarrow\frac{1}{2k_1}; \qquad \alpha_1^* = 0. $$The maximum benefits for the players are (↑ denotes convergence from below):

$$\beta_0\bigl(\alpha_0^*,\alpha_1^*\bigr) \uparrow1- \frac{k_0}{2k_1}; \qquad \beta_1\bigl( \alpha_0^*,\alpha_1^*\bigr) = 0. $$

5 LM Attacker

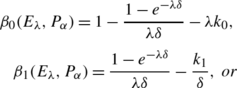

In the previous section, we analyzed a non-adaptive instance of FlipIt in which both players employ (non-arithmetic) renewal or periodic strategies with a random phase. We consider now a FlipIt game in which the defender is still playing with a renewal or periodic strategy, but the attacker is more powerful and receives feedback during the game. In particular, the attacker finds out the exact time of the defender’s last move every time he moves, and is LM according to our definition in Sect. 3. It is an immediate observation that for a defender playing with a renewal strategy, an LM attacker is just as powerful as an FH one.

We believe that this models a realistic scenario in which attackers have access to more information than defenders. For instance, attackers can determine with high confidence the time intervals when the defender changes her password by trying to use a compromised password at different time intervals and observe its expiration time. In this model, our goals are two-fold: first, we would like to understand which renewal strategies chosen by the defender achieve high benefit against an adaptive attacker; second, we are interested in finding good adaptive strategies for the attacker, given a fixed distribution for the defender.

We analyze this game for the defender playing with periodic and exponential distributions. The periodic defender strategy is a poor choice against an LM attacker, as the defender’s benefit is negative (unless the defender can afford to play extremely fast and force the attacker to drop out of the game). For the defender playing with an exponential distribution, we prove that the attacker’s strongly dominant strategy is periodic play (with a rate depending on the defender’s rate of play). We also define a new distribution called delayed exponential, in which the defender waits for a fixed interval of time before choosing an exponentially distributed interval until her next move. We show experimentally that for some parameter choices, the delayed-exponential distribution results in increased benefit for the defender compared to exponential play.

5.1 Game Definition

In this version of the game, the defender plays with either a non-arithmetic renewal strategy from class \(\mathcal{R}\) or with a periodic strategy with random phase from class \(\mathcal {P}\). Hence, the inter-arrival times between defender’s consecutive moves are given by independent and identically distributed random variables {X j } j≥0 from probability density function f 0. (In case the defender plays with a periodic strategy with a random phase, X 0 has a different distribution that {X j } j≥1.)

The attacker is LM and his inter-arrival move times are denoted by {Y j } j≥0. At its jth move, the attacker finds out the exact time since the defender’s last move, denoted τ j . The attacker determines the interval until his next move Y j+1 taking into consideration the history of his own previous moves (given by \(\{Y_{i}\} _{i=0}^{j}\)), as well as τ j . Without loss of generality, the attacker does not consider times {τ i } i<j when determining interval Y j+1 for the following reason. The defender “restarts” its strategy after each move, and thus knowledge of all the history {τ i } i<j does not help the attacker improve his strategy. This game is depicted graphically in Fig. 7.

Observation

We immediately observe that an LM attacker is as powerful as an FH attacker against a renewal or periodic defender:

The reason is that an FH attacker, while receiving more feedback during the game than an LM one, will only make use of the last defender’s move in determining his strategy. Information about previous defender’s moves does not provide any additional advantage, as the defender’s moves are independent of one another.

5.2 Defender Playing Periodically

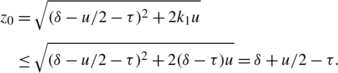

We start by analyzing the simple game in which the defender plays periodically with period δ 0 and a random phase. The attacker finds out after his first move after δ 0 the exact phase of the distribution (the time of the defender’s first move denoted X 0). As such, the attacker knows the exact time of all future moves by the defender: X 0+δ 0,X 0+2δ 0,…. The strongly dominant strategy for the attacker is then to play “immediately after” the defender, and control the resource all the time after the first move. However, when the defender plays sufficiently fast, it can force the attacker to drop out of the game. We distinguish three cases based on the defender’s rate of play.

- Case 1::

-

α 0<1/k 1

It is quite easy to see that in this case the strongly dominant attacker strategy is to play right after the defender. Therefore, the defender only controls the resource for a small time interval at the beginning of the game. We can prove that the gain of the defender is asymptotically 0 (γ 0=0) and that of the attacker converges to 1 (γ 1=1). The benefits of the players are

$$\beta_0 = - k_0/\delta_0; \qquad \beta_1 = 1-k_1/\delta_0. $$ - Case 2::

-

α 0>1/k 1

In this case, the attacker would have to spend at least as much budget on moving as the gain obtained from controlling the resource, resulting in negative or zero benefit. Therefore, the defender forces the attacker to drop out of the game. The following observation is immediate:

Forcing the Attacker to Drop Out If the defender plays periodically with rate α 0>1/k 1, an LM attacker’s strongly dominant strategy is not playing at all. Therefore all LM attacker strategies are strongly dominated by the no-play strategy against a defender playing with strategy \(P_{\alpha_{0}}\), with α 0>1/k 1, and we can characterize the residual FlipIt game in this case:

$$\mathsf{FlipIt}^*(P_{\alpha_0}, \mathcal {A}_{ \mathsf{FH}}) = \mathsf{FlipIt}(P_{\alpha_0},\varPhi), \quad \forall \alpha_0 > 1/k_1. $$The benefit achieved by the defender is β 0=1−k 0/k 1.

It is important to note that this holds for FH attackers, as well, since we have discussed that the residual games for LM and FH attackers against a \(\mathcal{R}\cup \mathcal{P}\) defender result in the same set of surviving strategies.

- Case 3::

-

α 0=1/k 1

Two attacker strategies are (weakly) dominant in this case: play right after the defender and no play at all. Both strategies achieve zero benefit for the attacker. The defender yields benefit either β 0=−k 0/k 1 (for the attacker playing after the defender) or β 0=1−k 0/k 1 (for the no-play attacker strategy).

This demonstrates that the periodic strategy with a random phase is a poor choice for the defender (unless the defender plays extremely fast), resulting always in negative benefit. This motivates us to search for other distributions that will achieve higher benefit for the defender against an LM attacker.

5.3 Defender Playing Exponentially