Abstract

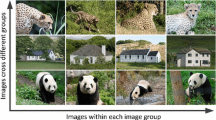

Co-salient object detection (Co-SOD) aims to discover the common salient objects in a group of images. The development of convolutional neural networks has significantly improved the performance of Co-SOD methods. However, some models do not construct collaborative relationships across images optimally, and they fail to retain collaborative features effectively during top-down decoding. In this paper, we propose a novel group attention retention network (GARNet) that captures collaborative features and retains them. First, a group attention module is designed to construct inter-image relationships. Second, an attention retention module is designed to retain these inter-image relationships so that they are not diluted during feature fusion, and a spatial attention module is designed to filter out cluttered context during feature fusion. Finally, considering the intra-group consistency and inter-group separability of images, an embedding loss is additionally designed to discriminate between real collaborative objects and distracting objects. Experiments on four datasets (iCoSeg, CoSal2015, CoSOD3k, and CoCA) show that GARNet outperforms previous state-of-the-art methods. The source code is available at https://github.com/TJUMMG/GARNet.
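
To make the idea of constructing inter-image relationships concrete, the following is a minimal sketch of generic attention computed across all spatial positions of all images in a group. It is not the GARNet group attention module, whose design is not given in the abstract: the function name `group_attention`, the scaled dot-product affinity, the residual addition, and the toy feature vectors are all assumptions made for illustration.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def group_attention(group):
    """Hypothetical cross-image attention (an assumption, not the paper's module).

    group: list of images; each image is a list of per-position feature vectors.
    Returns the fused features in the same layout, plus the attention weights.
    """
    # Pool the positions of every image so each one can attend to the whole group.
    tokens = [vec for image in group for vec in image]
    dim = len(tokens[0])
    scale = math.sqrt(dim)

    # Scaled dot-product affinity between every pair of positions in the group.
    weights = [
        softmax([sum(a * b for a, b in zip(q, k)) / scale for k in tokens])
        for q in tokens
    ]

    # Aggregate group-wide features, then add them back to the original ones.
    fused = []
    for tok, row in zip(tokens, weights):
        attended = [sum(w * k[d] for w, k in zip(row, tokens)) for d in range(dim)]
        fused.append([t + a for t, a in zip(tok, attended)])

    # Split the flat token list back into per-image lists.
    out, start = [], 0
    for image in group:
        out.append(fused[start:start + len(image)])
        start += len(image)
    return out, weights

# Toy group: two images, two positions each, two channels (made-up values).
group = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.1], [0.2, 0.9]],
]
out, weights = group_attention(group)
```

Because the softmax runs over positions from every image, each output vector mixes in features from the other images of the group, which is the kind of inter-image relationship the abstract refers to.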

Acknowledgements

This work was supported in part by the Shanghai Rising Star Project under Grant 23QA1408800, in part by the 166 Project under Grant 211-CXCY-M115-00-01-01, and in part by the National Science Foundation of China under Grant 62371333.

About this article

Cite this article

Liu, J., Wang, J., Fan, Z. et al. Group attention retention network for co-salient object detection. Machine Vision and Applications 34, 107 (2023). https://doi.org/10.1007/s00138-023-01462-7

DOI: https://doi.org/10.1007/s00138-023-01462-7