Abstract

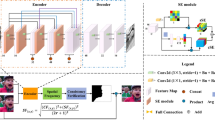

Multi-focus image fusion, which combines two or more images focused on different targets into a single clear image, is a worthwhile problem in digital image processing. Traditional methods usually operate in the frequency domain or the spatial domain, but they cannot guarantee accurate activity-level measurement for all image details, nor can they select fusion rules optimally. Deep learning methods, with their strong feature representation ability, have therefore become the mainstream approach to multi-focus image fusion. However, most existing deep learning frameworks have not balanced the relationships among the two input feature streams, the shallow features, and feature fusion. To address these shortcomings, we propose an end-to-end deep network consisting of an encoder and a decoder. The encoder is a pseudo-Siamese network: its two branches extract both shared and distinct feature sets from the two inputs, reuse the shallow features, and finally form the encoding. The decoder analyzes the encoding and reduces its dimensionality to generate a high-quality fused image. Extensive experiments confirm the effectiveness of the proposed network structure: compared with recent deep-learning-based and traditional multi-focus image fusion methods, our method performs slightly better in both objective metrics and subjective visual comparison.
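To make the encoder-decoder description concrete, the following is a minimal NumPy sketch of the data flow under stated assumptions; it is not the authors' implementation. The layer counts, the channel width `c`, the use of an element-wise sum and an absolute difference as the "shared" and "distinct" feature sets, and all function names (`conv3x3`, `encode`, `decode`, `fuse`, ...) are hypothetical choices for illustration, and the weights are random rather than trained. What the sketch does show is the pseudo-Siamese structure (two branches with identical architecture but unshared weights), the reuse of shallow features by concatenating them into the encoding, and a decoder that reduces the channel dimension down to a single fused image.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def conv3x3(x, w, b):
    """Naive zero-padded 3x3 cross-correlation (what DL frameworks call convolution).
    x: (C_in, H, W), w: (C_out, C_in, 3, 3), b: (C_out,) -> (C_out, H, W)."""
    c_in, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))  # zero padding keeps H and W unchanged
    out = np.empty((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            patch = xp[:, i:i + 3, j:j + 3]  # (C_in, 3, 3) window centred on (i, j)
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out + b[:, None, None]


def conv1x1(x, w, b):
    """1x1 convolution: a per-pixel linear map over channels. w: (C_out, C_in)."""
    return np.tensordot(w, x, axes=([1], [0])) + b[:, None, None]


def init_params(rng, c=8):
    """Hypothetical parameter layout; random weights stand in for trained ones."""
    def branch():
        return {"w1": rng.normal(0, 0.1, (c, 1, 3, 3)), "b1": np.zeros(c),
                "w2": rng.normal(0, 0.1, (c, c, 3, 3)), "b2": np.zeros(c)}
    return {
        "enc_a": branch(),  # same architecture as enc_b ...
        "enc_b": branch(),  # ... but separately drawn, unshared weights (pseudo-Siamese)
        "dec": {"w1": rng.normal(0, 0.1, (c, 4 * c)), "b1": np.zeros(c),
                "w2": rng.normal(0, 0.1, (1, c)), "b2": np.zeros(1)},
    }


def encode_branch(x, p):
    shallow = relu(conv3x3(x, p["w1"], p["b1"]))        # low-level (shallow) features
    deep = relu(conv3x3(shallow, p["w2"], p["b2"]))     # deeper features
    return shallow, deep


def encode(a, b, pa, pb):
    sa, da = encode_branch(a, pa)
    sb, db = encode_branch(b, pb)
    shared = da + db             # hypothetical "same" feature set
    distinct = np.abs(da - db)   # hypothetical "different" feature set
    # Shallow features of both inputs are reused by concatenating them into the code.
    return np.concatenate([shared, distinct, sa, sb], axis=0)  # (4c, H, W)


def decode(code, pd):
    h = relu(conv1x1(code, pd["w1"], pd["b1"]))  # reduce 4c channels to c
    y = conv1x1(h, pd["w2"], pd["b2"])           # reduce c channels to 1
    return 1.0 / (1.0 + np.exp(-y))              # fused intensities in [0, 1]


def fuse(a, b, params):
    """a, b: (H, W) grayscale source images focused on different regions."""
    code = encode(a[None], b[None], params["enc_a"], params["enc_b"])
    return decode(code, params["dec"])[0]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    params = init_params(rng, c=8)
    a, b = rng.random((16, 16)), rng.random((16, 16))
    print(fuse(a, b, params).shape)  # (16, 16)
```

Because the two branches do not share weights, each can adapt to its own input; a true Siamese encoder would reuse a single parameter set for both. A practical model would be built in a deep learning framework and trained end to end; the explicit convolution loop here is only meant to be readable.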

Acknowledgements

The authors acknowledge support from the National Natural Science Foundation of China (Grant Nos. 61772319, 62002200, 62202268, 62272281), the Shandong Provincial Science and Technology Support Program of Youth Innovation Team in Colleges (2021KJ069, 2019KJN042), and the Yantai Science and Technology Innovation Development Plan (2022JCYJ031).

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Jiang, L., Fan, H. & Li, J. Multi-level receptive field feature reuse for multi-focus image fusion. Machine Vision and Applications 33, 92 (2022). https://doi.org/10.1007/s00138-022-01345-3

DOI: https://doi.org/10.1007/s00138-022-01345-3