Abstract

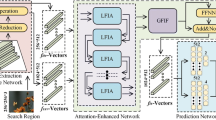

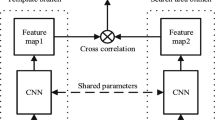

As a fundamental problem in computer vision, object tracking aims to accurately estimate the state of a given target throughout a video sequence, given its initial state in the first frame. Despite significant progress over the past decades, trackers still face various challenges, including occlusion, deformation, and fast motion. Inspired by recent progress in Siamese networks, this work presents a tracking algorithm based on a triple attention mechanism and a global reasoning model to achieve robust performance. First, to address insufficient feature extraction, a triple attention model is proposed, consisting of three parts: a squeeze-and-excitation (SE) block, a spatial SE (sSE) block, and a channel SE (cSE) block. Second, to address the lack of contextual information during tracking, a global reasoning model is added to the template branch and the search branch, producing two different score maps. During tracking, these two score maps are weighted by their respective coefficients and summed to construct a regression confidence map. Extensive experiments on existing benchmarks, including OTB50, OTB100, VOT2016, VOT2018, GOT-10k, LaSOT, NFS, and TC128, demonstrate that the proposed method achieves competitive results.
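The abstract does not give layer-level details, so the following is only a minimal NumPy sketch, under stated assumptions, of the two mechanisms it names: squeeze-and-excitation style gating and a weighted sum of two score maps. The channel gate follows the usual SE recipe (global average pooling, FC-ReLU-FC, sigmoid), and the spatial gate uses a single-output 1×1 convolution followed by a sigmoid, as in spatial SE. Treating SE and cSE as two channel gates with separate weights, combining the three branches by summation, and the fusion weights `weight_a`/`weight_b` are hypothetical choices for illustration, not the authors' configuration; all weights are random placeholders rather than trained parameters.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def channel_se(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Channel gate (SE / cSE style): squeeze space, excite channels.

    x:  feature map of shape (C, H, W)
    w1: (C // r, C) weights of the reduction FC layer
    w2: (C, C // r) weights of the expansion FC layer
    """
    z = x.mean(axis=(1, 2))                    # squeeze: global average pool -> (C,)
    s = sigmoid(w2 @ np.maximum(w1 @ z, 0.0))  # excite: FC -> ReLU -> FC -> sigmoid -> (C,)
    return x * s[:, None, None]                # rescale every channel by its gate


def spatial_se(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Spatial gate (sSE style): squeeze channels with a 1x1 conv, excite pixels.

    w: (C,) weights of a 1x1 convolution with one output channel
    """
    q = sigmoid(np.einsum("c,chw->hw", w, x))  # per-pixel gate of shape (H, W)
    return x * q[None, :, :]                   # rescale every spatial location


def triple_attention(x, se_params, cse_params, sse_w):
    """Hypothetical fusion of SE, cSE and sSE branches by summation (assumption)."""
    return channel_se(x, *se_params) + channel_se(x, *cse_params) + spatial_se(x, sse_w)


def fuse_score_maps(score_a, score_b, weight_a=0.5, weight_b=0.5):
    """Weighted sum of two score maps into one confidence map (weights are placeholders)."""
    return weight_a * score_a + weight_b * score_b


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C, H, W, r = 8, 6, 6, 4  # toy sizes, not taken from the paper
    x = rng.standard_normal((C, H, W))
    se = (rng.standard_normal((C // r, C)), rng.standard_normal((C, C // r)))
    cse = (rng.standard_normal((C // r, C)), rng.standard_normal((C, C // r)))
    y = triple_attention(x, se, cse, rng.standard_normal(C))
    print(y.shape)  # (8, 6, 6)

    # Two toy score maps, e.g. one per branch; the peak marks the predicted location.
    conf = fuse_score_maps(rng.random((17, 17)), rng.random((17, 17)), 0.6, 0.4)
    print(np.unravel_index(np.argmax(conf), conf.shape))
```

Both gates only rescale the input, so the output keeps the feature map's shape; in a real tracker the weights would be learned and the score maps would come from cross-correlating template and search features.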

Cite this article

Shu, P., Xu, K. & Bao, H. Triple attention and global reasoning Siamese networks for visual tracking. Machine Vision and Applications 33, 51 (2022). https://doi.org/10.1007/s00138-022-01301-1

DOI: https://doi.org/10.1007/s00138-022-01301-1