Abstract

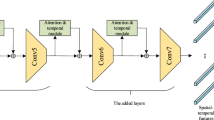

Visual context is fundamental to understanding human actions in videos. However, the discriminative temporal information in a video is usually sparse: most frames are redundant and mixed with a large amount of interfering information, which may lead to redundant computation and recognition failure. Hence, an important question is how to exploit temporal context efficiently. In this paper, we propose a learnable temporal attention mechanism that automatically selects important time points from action sequences. We design an unsupervised Recurrent Temporal Sparse Autoencoder (RTSAE) network, which learns to extract sparse keyframes that sharpen discriminative capability while retaining descriptive capability, and that shield the model from interfering information. By applying this technique to a dual-stream convolutional neural network, we significantly improve both accuracy and efficiency. Experiments demonstrate that, with the help of the RTSAE, our method achieves results competitive with the state of the art on the UCF101 and HMDB51 datasets.
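The abstract does not give the RTSAE equations, so the following is only a minimal sketch of the general idea of recurrent, sparsity-regularised temporal attention, not the authors' architecture. It assumes per-frame feature vectors (for example CNN features), a plain tanh RNN whose hidden state yields one sigmoid gate per frame, a gate-weighted average as the attended clip descriptor, and a toy unsupervised objective (reconstruction error plus an L1 penalty on the gates). All function names, shapes, weights, and the objective are hypothetical, and only the forward pass is shown; training is omitted.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def recurrent_gates(features, W_x, W_h, w_g):
    """Hypothetical recurrent attention: run a tanh RNN over the frames
    (features: T x D) and emit one gate in (0, 1) per frame."""
    T = features.shape[0]
    h = np.zeros(W_h.shape[0])
    gates = np.empty(T)
    for t in range(T):
        # The hidden state accumulates temporal context from earlier frames.
        h = np.tanh(W_x @ features[t] + W_h @ h)
        # Scalar importance of frame t given that context.
        gates[t] = sigmoid(w_g @ h)
    return gates

def gated_pool(features, gates, eps=1e-8):
    """Gate-weighted average of frame features (the attended descriptor)."""
    return (gates @ features) / (gates.sum() + eps)

def sparse_objective(features, gates, lam=0.1):
    """Toy objective (an assumption): the pooled descriptor should describe
    every frame (mean squared reconstruction error), while an L1 term on
    the gates keeps few frames switched on."""
    z = gated_pool(features, gates)
    recon = np.mean(np.sum((features - z) ** 2, axis=1))
    sparsity = lam * np.abs(gates).sum()
    return recon + sparsity

def select_keyframes(gates, k):
    """Indices of the k most strongly gated frames, in temporal order."""
    return np.sort(np.argsort(gates)[::-1][:k])

# Usage with random stand-in data: 16 frames, 8-d features, 4 hidden units.
rng = np.random.default_rng(0)
T, D, H = 16, 8, 4
X = rng.standard_normal((T, D))
W_x = 0.1 * rng.standard_normal((H, D))
W_h = 0.1 * rng.standard_normal((H, H))
w_g = rng.standard_normal(H)

g = recurrent_gates(X, W_x, W_h, w_g)
keys = select_keyframes(g, k=3)
loss = sparse_objective(X, g)
print("keyframes:", keys, "objective:", round(float(loss), 4))
```

Sigmoid gates are used here instead of a softmax because softmax weights always sum to one, so an L1 penalty on them would be constant and could not encourage sparsity; independent gates let the penalty actually switch frames off.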

Notes

Statistically, S-Net performs slightly better than T-Net in most cases; however, T-Net remains indispensable in certain special cases.

Acknowledgements

This work was supported by the National Key Research and Development Program of China under Grant No. 2016YFE0108100, and the National Natural Science Foundation of China under Grant No. 61571026.

Additional information

This work was done while Miao Xin was a visiting Ph.D. student at Harvard University.

About this article

Cite this article

Zhang, H., Xin, M., Wang, S. et al. End-to-end temporal attention extraction and human action recognition. Machine Vision and Applications 29, 1127–1142 (2018). https://doi.org/10.1007/s00138-018-0956-5

DOI: https://doi.org/10.1007/s00138-018-0956-5