Abstract

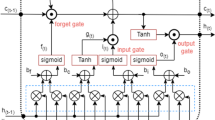

In recent years, long short-term memory (LSTM) networks have been used extensively in domains such as machine translation and language modeling. This paper proposes two architectures for accelerating matrix-vector multiplication (MVM) in the LSTM network. The first proposal introduces an MVM unit architecture built on the concept of Activity Span Reduction (ASR), in which the partial products for the inner product computations (IPC) are generated using ASR logic. This logic keeps the computational units active only for as long as they are needed, making effective use of time without compromising functionality, and thereby reduces both power consumption and area. Compared to the state of the art, the ASR technique achieves a 65.9% reduction in power for MVM and occupies 43.77% fewer logic cell units. The second proposal is a two-stage recursive MVM architecture for the LSTM network. A partial product generator unit based on a recursive arithmetic architecture is proposed for the IPC in MVM, with the aim of reducing power and area consumption. This MVM architecture provides a 6.8% improvement in the maximum clock frequency without an increase in power consumption. Using the proposed designs to build a complete LSTM architecture provides additional evidence of the effectiveness of the proposed MVMs.
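For orientation, an MVM reduces to one inner product per matrix row, and each product inside an inner product can be accumulated from shift-and-add partial products. The minimal Python sketch below illustrates only that generic decomposition. It is not the ASR logic or the recursive architecture proposed in the paper, whose details the abstract does not give. The function names, the unsigned input assumption, and the 8-bit default width are illustrative assumptions.

```python
def partial_products(weight: int, x: int, bits: int = 8) -> list[int]:
    """Shift-and-add partial products of weight * x.

    Assumes x is an unsigned integer that fits in `bits` bits
    (an assumption made for this sketch, not taken from the paper).
    """
    return [(weight << k) if (x >> k) & 1 else 0 for k in range(bits)]


def inner_product(weights: list[int], xs: list[int], bits: int = 8) -> int:
    """Inner product computed by summing the partial products of each term."""
    return sum(sum(partial_products(w, x, bits)) for w, x in zip(weights, xs))


def mvm(matrix: list[list[int]], vector: list[int], bits: int = 8) -> list[int]:
    """Matrix-vector multiplication as one inner product per matrix row."""
    return [inner_product(row, vector, bits) for row in matrix]


if __name__ == "__main__":
    W = [[1, 2, 3], [4, 5, 6]]
    x = [7, 8, 9]
    print(mvm(W, x))
```

In a hardware setting, each list returned by `partial_products` would correspond to the terms that an adder structure has to accumulate, which is the part of the datapath that the abstract says the two proposals target.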


Data Availability

This manuscript has no associated data.


Acknowledgements

The authors would like to thank the Department of Science & Technology, Government of India for supporting this work under the FIST scheme No. SR/FST/ET-I/2017/68.


Ethics declarations

Conflict of interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this manuscript.



About this article

Cite this article

Joseph, T., Bindiya, T.S. Power and Delay-Efficient Matrix Vector Multiplier Units for the LSTM Networks Using Activity Span Reduction Technique and Recursive Adders. Circuits Syst Signal Process 42, 7494–7528 (2023). https://doi.org/10.1007/s00034-023-02456-6


DOI: https://doi.org/10.1007/s00034-023-02456-6