Abstract

The authors present a novel design algorithm for 3-D orthogonal filters. Both separable and non-separable cases are discussed. In the separable case, the synthesis leads to a cascade connection of 1-D systems. In the latter case, one obtains 2-D systems followed by a 1-D one. Realization techniques for these systems are presented which utilize Givens rotations and delay elements. The results are illustrated by examples of separable and non-separable 3-D system designs, i.e., Gaussian and Laplacian filters.

1 Introduction

Since the first pulse-code modulation transmission of digitally quantized speech in World War II, digital signal processing (DSP) has proliferated to all areas of human life. Classical DSP is based on linear systems described by impulse response functions and transfer functions, implemented by structures built from adders, multipliers, and unit delays. Another approach, known as the state space approach, was initiated in [31]. It was extended to the 2-D case by Roesser [18] and to the three-dimensional (3-D) case in [9]. The steady increase in computational power encourages the application of DSP techniques to multidimensional processing. However, the development of n-dimensional (n-D) DSP has encountered difficulties caused by n-D polynomials [5]: there is no straightforward generalization of the fundamental theorem of algebra to higher dimensions. Classical digital systems are known to behave poorly under finite-precision arithmetic with respect to the sensitivity of the frequency response to changes in the structural parameters, noise, intrinsic oscillations, and limit cycles. These effects have led to the invention of wave filters [6] and orthogonal filters [2, 3]. The most common approaches to orthogonal filter synthesis are transfer function decomposition and the state space approach. When it comes to multidimensional DSP, the former technique is of limited use due to the n-D polynomials. In contrast, the latter provides an opportunity to extend 1-D state space techniques to higher dimensions, thanks to the 2-D, 3-D, and possibly n-D state space equations. The state space approach to lossless systems was initiated by the famous paper [30], where paraunitary matrix synthesis techniques were developed for the 2-D transfer function of a continuous system. The state space approach was also used to develop 2-D orthogonal filter synthesis [16] and simplified to cover a class of separable-denominator orthogonal filters [28], which were found to be useful in real-time processing [21].

Nowadays, processed data are increasingly n-D, e.g., video, multichannel audio, and machine vision, to name a few. 3-D processing is especially important in medicine [1] and image/video processing [5, 10], but it also finds applications in other areas such as material structures [12].

DSP synthesis is based on difference equations usually transformed by the \(\mathcal {Z}\) transform. For the 3-D function \(f(x_1, x_2 ,x_3)\), this is given by

where \(z_1\), \(z_2\), and \(z_3\) are complex numbers. Linear time invariant filters are usually classified into recursive and non-recursive. The latter, called finite impulse response (FIR) filters, are very popular due to their simplicity and natural stability. Typically, they are described by a transfer function which is the \(\mathcal {Z}\) transform of its impulse response. For the 1-D case, it is given by

where \(a_0,\ldots ,a_n\) are real constant coefficients. In the 3-D case, the transfer function of an FIR filter extends to

In this paper, we deal with a class of orthogonal filters. Introducing the energy of a 3-D real vector function \(f(x_1, x_2 ,x_3)\), in the form

we define an orthogonal filter to be a system which preserves the energy, i.e., the input energy equals the output energy. Technically, we seek a network of Givens rotations which realizes a given transfer function between two ports of that network. The Givens rotation rotates a vector \([u_s,u_t]^\mathrm{T}\) by \(\alpha \) radians, i.e., it implements the following set of equations

We will denote it by \(R_{s,t}(\alpha )\) and use the graphical symbol shown in (5). Givens rotations are usually implemented using an iterative algorithm called CORDIC [11]. To obtain high throughput, we also utilise permutations in our structures, given by

It is known that the following holds:

Theorem 1

[7] The transfer function \(H(z_1,z_2,z_3)\) of a 3-D orthogonal filter satisfies:

1. \(H(z_1,z_2,z_3)\) is rational in \(z_1,z_2,z_3\);

2. \(H(z_1,z_2,z_3)\) is holomorphic for all \(|z_1|\ge 1,|z_2|\ge 1,|z_3|\ge 1\);

3. \(H(z_1,z_2,z_3)\) is paraunitary, i.e.

$$\begin{aligned} H^\mathrm{T}(z_1^{-1},z_2^{-1},z_3^{-1})H(z_1,z_2,z_3)=I. \end{aligned}$$(7)
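Condition (7) can be checked numerically on the unit circle. The following is a minimal sketch for a 1-D analogue only, assuming a hypothetical single-input, two-output FIR system with branches \((1+z^{-1})/2\) and \((1-z^{-1})/2\) (these branches are not taken from the paper). For real coefficients and \(|z|=1\), the product \(H^\mathrm{T}(z^{-1})H(z)\) reduces to the sum of the squared branch magnitudes, which must equal one.

```python
import cmath
import math


def eval_fir(taps, z):
    """Evaluate sum_i taps[i] * z**(-i) at a complex point z."""
    return sum(a * z ** (-i) for i, a in enumerate(taps))


def paraunitary_defect(branches, n_points=64):
    """Largest deviation of sum_k |H_k(e^{jw})|^2 from 1 on a frequency grid."""
    worst = 0.0
    for i in range(n_points):
        z = cmath.exp(1j * 2.0 * math.pi * i / n_points)
        # For real taps and |z| = 1, H_k(1/z) is the conjugate of H_k(z).
        total = sum(abs(eval_fir(b, z)) ** 2 for b in branches)
        worst = max(worst, abs(total - 1.0))
    return worst


# Hypothetical branches (assumption, for illustration only).
H1 = [0.5, 0.5]    # (1 + z^-1) / 2
H2 = [0.5, -0.5]   # (1 - z^-1) / 2

defect_two_outputs = paraunitary_defect([H1, H2])
defect_one_output = paraunitary_defect([H1])
print(defect_two_outputs, defect_one_output)
```

With both branches the defect is at rounding level, while the single non-flat branch on its own violates the condition, which illustrates why a second output is needed.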

The paraunitary condition (7) forces a single-input single-output system to have a constant, unit-magnitude frequency response. Typically, orthogonal systems have more inputs and outputs (at least two outputs) so that practical non-flat frequency responses can be implemented. During the synthesis process, we use a state space representation [17]. For given

which are input, output, and state vector, respectively, it is defined by

\(\tau \) is a real constant partitioned matrix of the form

2-D state space equations, known as the Roesser model, are given by [18]

Similarly, u(h, v), y(h, v) and \(x_h(h,v)\), \(x_v(h,v)\) are the 2-D input, output, and two state vectors in h and v directions, respectively, and can be represented in the following form

It is easy to show that if \(\tau \) is an orthogonal matrix, i.e., \(\tau ^\mathrm{T}\tau =I\), then the systems described by (9) and (11) are orthogonal filters. During synthesis, this property allows us to focus on the orthogonality of the state space matrices instead of the energy of signals.
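The energy argument can be illustrated with a small 1-D simulation. This is a sketch under stated assumptions: the update convention \([x(n+1);\,y(n)]=\tau \,[x(n);\,u(n)]\), the sign convention of the plane rotation, and the three angles are all hypothetical choices, since equations (5) and (9) are not reproduced here. Because \(\tau \) is a product of rotations, each step preserves the squared norm, so the input energy equals the output energy plus the energy left in the state.

```python
import math


def rotation(n, s, t, alpha):
    """n x n identity with a plane rotation by alpha in coordinates (s, t)."""
    g = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    c, sn = math.cos(alpha), math.sin(alpha)
    g[s][s], g[s][t] = c, -sn
    g[t][s], g[t][t] = sn, c
    return g


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def matvec(a, v):
    return [sum(row[k] * v[k] for k in range(len(v))) for row in a]


# Orthogonal tau built from three rotations (angles are arbitrary assumptions).
tau = matmul(rotation(3, 0, 1, 0.3),
             matmul(rotation(3, 1, 2, -1.1), rotation(3, 0, 2, 0.7)))


def simulate(tau, u_seq, n_states=2):
    """Run [x(n+1); y(n)] = tau [x(n); u(n)] from a zero initial state."""
    x = [0.0] * n_states
    y_seq = []
    for u in u_seq:
        out = matvec(tau, x + [u])
        x = out[:n_states]
        y_seq.append(out[n_states])
    return y_seq, x


u_seq = [1.0, -0.5, 0.25, 2.0]
y_seq, x_final = simulate(tau, u_seq)
energy_in = sum(u * u for u in u_seq)
energy_out = sum(y * y for y in y_seq) + sum(v * v for v in x_final)
print(energy_in, energy_out)
```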

We say that a system described by the impulse response r(h, v, d) is separable if it can be represented in the form

Otherwise, we call it non-separable.

Nowadays, most n-D DSP designs are based on intuitive manipulation of a given computational algorithm, such as reordering multipliers and adders to speed up calculations. Most of the time, no parameters other than speed and chip area are taken into account. Unfortunately, when one improves one parameter, another gets worse. This can readily be seen when comparing, for high orders, direct form structures of infinite impulse response 1-D digital filters (fast and inaccurate) with cascade ones (more accurate, but the output is delayed). Similar problems are observed in active electronic filters compared to passive ones made of inductors, capacitors, and resistors, as well as in classic 1-D DSP direct form structures versus wave and orthogonal filters. Several other parameters influence the design performance, such as the sensitivity of the frequency response to changes in the structural parameters, noise, intrinsic oscillations, and limit cycles. The idea which motivated the authors is therefore that one can design better n-D systems by incorporating techniques which helped in the 1-D domain, such as the orthogonal filter technique. A novelty of our approach is that we can design orthogonal 3-D filters consisting of Givens rotations. To the best of the authors’ knowledge, no other techniques to synthesize 3-D rotation structures have yet been published. The scope of the paper is to present details of the synthesis algorithms for separable and non-separable systems. The main idea of our approach is to decompose a 3-D system into a connection of lower-dimensional blocks and then apply the previously elaborated 1-D and 2-D orthogonal synthesis procedures [24, 26].

The paper is organized as follows. In Sect. 2.1, we present details of the synthesis algorithm of separable orthogonal 3-D filters. Next, an example which illustrates that method is presented in Sect. 2.2 (3-D Gaussian filter). In Sect. 3.1, an expanded version of the technique for the non-separable case is proposed followed by its application in Sect. 3.2 (3-D Laplace filter). In the examples included in this paper, we utilize the standard sample by sample ordering which converts the 3-D signal into 2-D images processed image by image.

2 Separable Orthogonal 3-D FIR Filters

2.1 Synthesis Algorithm

For a separable 3-D system, we represent its transfer function (3) in the form

Applying the 1-D synthesis technique, presented in [25], to \(T_h(z_h)\), \(T_v(z_v)\), and \(T_d(z_d)\) separately, we have paraunitary systems \(H_h(z_h)\), \(H_v(z_v)\), and \(H_d(z_d)\) for which we obtain three 1-D state space realizations spanned on a 3-D domain:

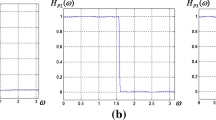

We also get scaling factors \(k_h\), \(k_v\) and \(k_d\), which are the maxima of the frequency response squared for \(H_h(z_h)\), \(H_v(z_v)\), and \(H_d(z_d)\), respectively. Then, each state space realization in (15) is implemented using pipeline structures \(H_h\), \(H_v\), and \(H_d\), respectively. Constructing a cascade connection of these three implementations, we obtain the separable 3-D orthogonal pipeline structure shown in Fig. 1.
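The role of a scaling factor can be sketched numerically. In the Gaussian example of Sect. 2.2, k is stated to be the maximum of \(|T(e^{j\omega })|^2\); the snippet below estimates such a maximum on a frequency grid and checks that \(1-|T(e^{j\omega })|^2/k\) stays non-negative, which is what a complementary branch requires. The three-tap filter and the grid size are hypothetical choices for illustration, not the filter designed in the paper.

```python
import cmath
import math


def freq_response_sq(taps, omega):
    """|T(e^{j omega})|^2 for T(z) = sum_i taps[i] * z**(-i)."""
    z = cmath.exp(1j * omega)
    return abs(sum(a * z ** (-i) for i, a in enumerate(taps))) ** 2


def scaling_factor(taps, n_points=1024):
    """Grid estimate of max over omega of |T(e^{j omega})|^2."""
    return max(freq_response_sq(taps, 2.0 * math.pi * i / n_points)
               for i in range(n_points))


taps = [0.25, 0.5, 0.25]  # hypothetical low-pass T(z), assumption
k = scaling_factor(taps)

# Smallest value of 1 - |T|^2 / k on the same grid; it must not be negative.
margin = min(1.0 - freq_response_sq(taps, 2.0 * math.pi * i / 1024) / k
             for i in range(1024))
print(k, margin)
```

A grid estimate can miss a peak lying between grid points, so a practical design would refine the maximum or add a small safety margin to k.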

2.2 Realization Example of a Gaussian Filter

Let us give a design example of a separable 3-D FIR filter. We have chosen a Gaussian filter whose impulse response is given by

where

Substituting (16) into (13), we have

where

By (14), we obtain

where

The constant \(n_0\) is chosen to shift the origin of (19). The parameters for the filter have been taken from [1], i.e., \(\sigma =0.7\), \(n_0=2\), and \(h,v,d=-2,-1,0,1,2\). Substituting them into (21), we obtain the kernel coefficients of the Gaussian filter, which are presented in Table 1. From (20) we see that it suffices to design a state space system by the technique presented in [25] applied to T(z), which is given by

The synthesis algorithm is as follows. We construct a paraunitary transfer vector:

where \(G(z^{-1})G(z)=1-\frac{1}{k}T^\mathrm{T}(z^{-1})T(z)\) and k is a constant scalar chosen to make the factorization possible. We have chosen \(k=k_h=k_v=k_d=1.4547750\), which is the maximum of \(|T(e^{j\omega })|^2\) over \(\omega \). Next, we represent (23) in the form:

where:

By applying QR to \(\begin{bmatrix} H_1&H_2&H_3&H_4 \\ H_2&H_3&H_4&0 \\ H_3&H_4&0&0 \\ H_4&0&0&0 \end{bmatrix} = \hbox {QR} = Q\begin{bmatrix} M \\ 0 \end{bmatrix}\), we find the full rank \(M=\begin{bmatrix}M_0&M_1&M_2&M_3\end{bmatrix}\), where

For (23), we determine the state space representation (9), where \(A=\begin{bmatrix}M_1&M_2&M_3&0\end{bmatrix}M^{-1}\), \(B=M_0\), \(C=\begin{bmatrix} H_1&H_2&H_3&H_4 \end{bmatrix}M^{-1}\), and \(\hat{D}=H_0\):

We apply a similarity transformation to (27), starting from the Schur decomposition of A (to obtain an upper triangular representation). This also changes B and C, leading to new matrices \(A_U\), \(\hat{B}_U\), and \(C_U\)

Next, we extend \(\begin{bmatrix} A_U&\hat{B}_U \\ C_U&\hat{D} \end{bmatrix}\) to be a square matrix [27, 29], which leads to the final state space realization, given by

Applying the Givens decomposition and permutations to (29), we obtain the pipeline rotation structure shown in Fig. 2 whose parameters are presented in Table 2 (detailed algorithm of the decomposition is presented in [26]).
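The idea behind a Givens decomposition can be sketched on a small orthogonal matrix. The snippet below is the textbook elimination by plane rotations, not the pipelined algorithm with permutations of [26]; the sign convention of the rotation and the test matrix are assumptions. Each rotation zeroes one sub-diagonal entry, and for an orthogonal input the residual is a diagonal matrix of \(\pm 1\) entries.

```python
import math


def rotation(n, s, t, alpha):
    """n x n identity with a plane rotation by alpha acting on rows s and t."""
    g = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    c, sn = math.cos(alpha), math.sin(alpha)
    g[s][s], g[s][t] = c, -sn
    g[t][s], g[t][t] = sn, c
    return g


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def givens_decompose(q):
    """Return rotations (s, t, alpha) that reduce q to its residual matrix."""
    n = len(q)
    work = [row[:] for row in q]
    steps = []
    for col in range(n - 1):
        for row in range(col + 1, n):
            # Angle chosen so that the rotated entry work[row][col] becomes zero.
            alpha = math.atan2(-work[row][col], work[col][col])
            work = matmul(rotation(n, col, row, alpha), work)
            steps.append((col, row, alpha))
    return steps, work


def rebuild(steps, residual):
    """Undo the rotations in reverse order to recover the original matrix."""
    out = [row[:] for row in residual]
    for s, t, alpha in reversed(steps):
        out = matmul(rotation(len(out), s, t, -alpha), out)
    return out


# Hypothetical orthogonal test matrix (assumption, not taken from (29)).
q = matmul(rotation(3, 0, 1, 0.4),
           matmul(rotation(3, 1, 2, -0.9), rotation(3, 0, 2, 1.3)))
steps, residual = givens_decompose(q)
q_again = rebuild(steps, residual)
print(steps)
```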

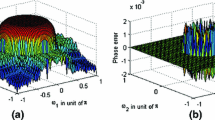

To apply the obtained 3-D Gaussian filter to image processing, we need to link each independent variable h, v, and d with the dimensions of an image. To keep things simple, we assign h, v, and d to be indexes of the 3-D image in the horizontal (rows), vertical (columns), and depth (frames) dimensions, respectively. Suppose that an image frame is of size \(H\times V\). We assume that it is processed sample by sample using row by row and frame by frame ordering. To do this, the unit delays D in each state space system \(H_h\), \(H_v\), and \(H_d\), shown in Fig. 2, need to be replaced with the following 1-D delays: \(z^{-1}\), \(z^{-H}\), and \(z^{-HV}\), respectively. The 3-D Gaussian filter has been modelled in the Scilab environment [22]. The impulse response of the filter has been simulated for the \(8\times 8\times 8\) 3-D Kronecker delta matrix input. The results agree with those shown in Table 1, with a mean-squared error of \(6.346\cdot 10^{-23}\). The filter has been tested with a real 3-D medical DICOM image taken from [4]. For this task, the authors have implemented Scilab procedures to read and write DICOM files.
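The delay substitution can be illustrated with direct-form FIR sections instead of rotation structures. This is a minimal sketch assuming the raster index \(n=h+Hv+HVd\) and unnormalised Gaussian samples \(e^{-(n-n_0)^2/(2\sigma ^2)}\); the normalisation constant of (21) is deliberately omitted, so the taps are illustrative rather than those of Table 1. Three 1-D filters with delays 1, H, and HV are cascaded and driven by a Kronecker delta in an \(8\times 8\times 8\) volume.

```python
import math

H, V, D = 8, 8, 8  # volume size used for the impulse response simulation


def fir_strided(x, taps, stride):
    """y[n] = sum_i taps[i] * x[n - i*stride], with zero initial conditions."""
    y = [0.0] * len(x)
    for n in range(len(x)):
        acc = 0.0
        for i, a in enumerate(taps):
            k = n - i * stride
            if k >= 0:
                acc += a * x[k]
        y[n] = acc
    return y


sigma, n0 = 0.7, 2
# Unnormalised Gaussian taps (assumption: normalisation constant omitted).
taps = [math.exp(-((n - n0) ** 2) / (2.0 * sigma ** 2)) for n in range(5)]

x = [0.0] * (H * V * D)
x[0] = 1.0  # Kronecker delta at h = v = d = 0

y = fir_strided(x, taps, 1)        # horizontal direction, delay z^-1
y = fir_strided(y, taps, H)        # vertical direction, delay z^-H
y = fir_strided(y, taps, H * V)    # depth direction, delay z^-(HV)


def at(h, v, d):
    """Read the output sample at volume position (h, v, d)."""
    return y[h + H * v + H * V * d]


print(at(2, 2, 2))  # centre of the 5 x 5 x 5 response
```

The response at \((h,v,d)\) equals the product of the three 1-D taps, as expected for a separable system. The sketch ignores the spill of samples across row and frame boundaries that plain raster ordering introduces near the image edges.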

3 Non-separable Orthogonal 3-D FIR Filters

3.1 Synthesis Algorithm

Suppose we are given a non-separable transfer function (3). To obtain a rotation structure in this case, we rearrange (3) into one of the following forms:

From (30), we have three ways to decompose the system into a cascade connection of 2-D and 1-D blocks. We have chosen (30a); the other two structures can be obtained in a similar way. The coefficients \(H_{j}(z_{h},z_{d})\) can be represented in the so-called Gram form

where

and \(Z_{d}=\begin{bmatrix}1&z_{d}^{-1}&\cdots&z_{d}^{-l}\end{bmatrix}\), \(Z_{h}=\begin{bmatrix}1&z_{h}^{-1}&\cdots&z_{h}^{-n}\end{bmatrix}^\mathrm{T}\). Introducing the partitioned matrix

we can represent (30a) in the form

where

Applying a full rank factorization [8] to (33), we obtain

where \(C_{d}\) and \(C_{e}\) are real full rank \((l+1)\times r\) and \(r \times (n+1)\) matrices, respectively. Substituting (36) into (34), we obtain

where

and

Each \(H_{e_i}(z_h,z_v)\) is a single-input single-output system, so we can apply the algorithm presented in [27, 28] to synthesize 2-D orthogonal state space equations, given by (11), spanned on 3-D. The factors \(k_{e_i}\) obtained from the 2-D synthesis are applied to the corresponding elements of \(\hat{H}_d(z_d)\). The resulting vector will be denoted by

In this way, we remove r scaling factors from the rotation structure. As (40) is a horizontal vector, it cannot satisfy (7). So, to obtain an orthogonal structure, we consider a transposed version of (40) [17, p. 546]. We can then apply the algorithm illustrated in Sect. 2.2 to \(H^\mathrm{T}_d(z_d)\), obtaining (10), which is a square matrix [27]. Then, the result is transposed back to the orthogonal realization of (40), which is the 1-D state space equations (9) spanned on 3-D (dimensions h and v are not processed), given by

where

In (37), we have a dot product of vectors (38) and (39). From a system point of view, (39) can be represented as r one-input one-output parallel blocks. Equation (38) can be treated as a single system consisting of r inputs and one output. So, the transfer function (37) can be realized as a cascade connection of r \(H_{e_i}(z_h,z_v)\) blocks whose outputs are connected to r inputs of \(H_d(z_d)\) and one multiplier, as shown in Fig. 3. Symbols \(H_{e_i}\) and \(H_d\) denote realizations of \(H_{e_i}(z_h,z_v)\) and \(H_d(z_d)\), respectively. Systems \(H_{e_i}\) and \(H_d\) can be implemented using Givens rotations (5) and permutations (6) by the technique presented in [26].
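The full rank factorization that produces the r parallel branches can be sketched as follows. The paper relies on [8] for this step; the snippet swaps in an exact-arithmetic factorization via the reduced row echelon form (pivot columns times non-zero reduced rows) purely to stay self-contained, and the coefficient matrix is a hypothetical rank-2 example, not (33) or (45).

```python
from fractions import Fraction


def rref(matrix):
    """Reduced row echelon form and the list of pivot column indices."""
    m = [[Fraction(v) for v in row] for row in matrix]
    rows, cols = len(m), len(m[0])
    pivots = []
    r = 0
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        pv = m[r][c]
        m[r] = [v / pv for v in m[r]]
        for i in range(rows):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == rows:
            break
    return m, pivots


def full_rank_factorization(c):
    """Return (left, right) with c = left * right and inner dimension rank(c)."""
    reduced, pivots = rref(c)
    left = [[Fraction(row[p]) for p in pivots] for row in c]
    right = reduced[:len(pivots)]
    return left, right


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


# Hypothetical coefficient matrix of rank 2 (assumption).
C = [[1, 2, 1, 0],
     [2, 4, 2, 0],
     [1, 2, 3, 4]]
C_left, C_right = full_rank_factorization(C)
r = len(C_right)
print(r, C_left, C_right)
```

Here r is the number of parallel blocks that a structure like Fig. 3 would need. In floating-point practice a QR- or SVD-based factorization is usually preferred because it tolerates rounding errors in the coefficients.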

3.2 Realization Example of a 3-D Laplace Filter

Let us design the 3-D orthogonal Laplace filter whose mask is given by [12]

The transfer function for the system described by (43) is

where

and

Applying a full rank factorization to (45), we obtain (37), where \(r=2\),

and

Let us focus on \(H_{e_1}(z_h,z_v)\) realization, given by (48), for a moment. We represent \(H_{e_1}(z_h,z_v)\) in the form:

where

Applying the full rank decomposition to (50), we obtain:

where

For (52), we construct the paraunitary systems:

We obtain 1-D state space realizations of (53) [in a similar way to (24)–(28) in Sect. 2.2]:

We add new columns to \(\hat{B}_t\) and \(\hat{D}_t\), obtaining the square matrix \(\begin{bmatrix}A_t&B_t\\C_t&D_t\end{bmatrix}\) [29]:

Based on (56), we construct a transpose system which is the realization of \(U_h^{e_1}\), given by

We apply Schur upper triangularization to matrix A, obtaining a minimized state space model for \(U_h^{e_1}\):

Then, we use 1-D state space systems (58) and (55) to construct 2-D state space model:

We extend the number of columns in (60):

Finally, we apply Givens decomposition and permutations to (59) and (61), obtaining the pipeline rotation structure.

Repeating steps (49)–(61) for \(H_{e_2}(z_h,z_v)\) in (48), we design a 2-D state space model:

for which we can also get the pipeline rotation structure. In a similar way as in Sect. 2.2, (47) can be realized by (41), where

The state space equations (59), (61), (62), and (63) are implementations of blocks given in Fig. 3. Replacing \(H_{e_1}\), \(H_{e_2}\), and \(H_d\) with their orthogonal counterparts, we obtain the pipeline structure shown in Fig. 4. Each of \(H_{e_1}\), \(H_{e_2}\), and \(H_d\) is implemented using Givens rotations and permutations whose parameters are presented in Tables 4, 5, and 6. As they are multi-input multi-output orthogonal systems, there are extra inputs, which are set to zero, and additional outputs, which are not used. Hence, the first three rotations \(R_1\), \(R_2\), and \(R_3\) and the \(-1\) multiplier in the implementation of \(H_{e_1}\), as well as rotations \(R_{19}\), \(R_{20}\), and \(R_{21}\) and the \(-1\) multiplier in the implementation of \(H_{e_2}\), have inputs and outputs constantly equal to zero, so they can be removed from the structure. The final structure for the 3-D orthogonal Laplace filter is shown in Fig. 5. If the \(H_{e_i}\) systems have unequal numbers of rotations, we apply extra delay elements to equalize the processing times of all the \(H_{e_i}\) blocks.

The impulse response of the filter has been simulated for the \(8\times 8\times 8\) 3-D Kronecker delta matrix input. The results are presented in Table 3.

4 Conclusions

The main contribution of the paper is the extension of 1-D and 2-D FIR orthogonal filters to the 3-D case. By doing so, we open up new possibilities to take into account parameters which are usually omitted in 3-D designs, such as the sensitivity of the frequency response to changes in structural parameters, noise, intrinsic oscillations, and limit cycles. This is the main difference from the 3-D FIR techniques known in the literature, which usually focus on speed and chip area only. However, the presented results come at the cost of a much higher mathematical burden during synthesis, which calls for polynomial factorization, matrix pseudoinverse, QR decomposition, full rank matrix factorization, real Schur decomposition, and orthonormal basis extension. Nonetheless, these are standard numerical methods which can be implemented using popular mathematical software. The authors have applied Scilab [22] for these tasks.

The frequency response of a separable system is limited to a superposition of, possibly different, 1-D functions applied to each direction independently. Due to that, a separable system cannot fully exploit the neighbourhood of a processed sample. On the other hand, synthesis techniques for separable systems in n-D are as simple as 1-D approaches applied n times. In the non-separable case, we are allowed to design systems which approximate any frequency response at the cost of much more complicated synthesis algorithms. The originality of our non-separable technique, presented in Sect. 3.1, is that we represent a 3-D system in the Gram form with coefficients collected in a matrix which is subjected to a full rank factorization. In this way, we separate one variable from the system at the expense of an increase in the subsystem’s inputs and outputs. As a result, we get a cascade connection of 2-D systems and a 1-D one. However, the obtained structures are genuinely 3-D, which means that their input and output are 3-D functions. So, to use them in real applications, we need to apply a concurrent technique or sample by sample ordering to the signals and systems.

The pipeline structures obtained in Sects. 2.1 and 3.1 have high throughput at the expense of a latency which is not larger than \((m_1(l+1)+m_2(n+1)(m + 1))\tau _R\) and \((m_1(l+1)+m_2(n+1)(m + 1))\tau _R\) for separable and non-separable cases, respectively, where \(\tau _R\) denotes the processing time of a Givens rotation and \(m_{1}\le \min \{l+1,(n+1)\cdot (m+1) \} \), \(m_{2}\le \min \{n+1,m+1\}\), for m, n, and l given by (30). So, they are well suited for hardware real-time 3-D image processing, but may also find applications in other areas where 3-D data are employed, such as economic modelling, seismic data, and weather forecast models. It is also possible to utilise the presented results as a digital replacement for analog controllers in control systems [13,14,15, 19, 20]. Due to the robustness of orthogonal systems, they will be especially applicable when high processing precision is required even for low bit quantization, as in medical imaging. One can give upper bounds for the number of Givens rotations and single delay elements. In the separable case, they are given by \(n+m+l+3\) and \(n+mH+lHV\), respectively, for \(H\times V\) image frames, where m, n, and l are defined in (14). For the same image in the non-separable case, a structure has no more than \(m_{1}(l+1)+m_{1}m_{2}(n+1)(m+1)\) Givens rotations and \(m_{1}(n+mH)+lHV\) single delay elements, for m, n, and l given by (30).
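The resource bounds above are easy to evaluate for a concrete case. The helper functions below are hypothetical names introduced for illustration; they assume that n, m, and l are the filter orders in the h, v, and d directions and, for the non-separable case, take \(m_1\) and \(m_2\) at their largest admissible values, so the results are worst-case figures.

```python
def separable_bounds(n, m, l, H, V):
    """Upper bounds (rotations, single delays) for the separable structure."""
    rotations = n + m + l + 3
    delays = n + m * H + l * H * V
    return rotations, delays


def nonseparable_bounds(n, m, l, H, V):
    """Worst-case bounds for the non-separable structure (m1, m2 at maximum)."""
    m1 = min(l + 1, (n + 1) * (m + 1))
    m2 = min(n + 1, m + 1)
    rotations = m1 * (l + 1) + m1 * m2 * (n + 1) * (m + 1)
    delays = m1 * (n + m * H) + l * H * V
    return rotations, delays


# Fourth-order filter in each direction (5 x 5 x 5 kernel) on 640 x 480 frames.
sep = separable_bounds(4, 4, 4, 640, 480)
# Second-order filter in each direction (3 x 3 x 3 kernel) on the same frames.
nonsep = nonseparable_bounds(2, 2, 2, 640, 480)
print(sep, nonsep)
```

In both cases the delay count is dominated by the \(lHV\) term, i.e., by the frame buffers needed for the depth direction.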

The \(5\times 5\times 5\) 3-D Gaussian filter presented in Sect. 2.2 has been implemented on a DE2 development board with a Cyclone II chip (EP2C35F672C6) running at a 125 MHz clock rate [23]. In [10], a similar filter of order \(3\times 3\times 3\) has been presented using the same DE2 board, with a 100 MHz rate for RAM communication and 25 MHz for the filter module. The reported overall performance was 30 frames/s for \(640\times 480\) images. In [1], a 3-D anisotropic diffusion filter has been proposed which is a \(5\times 5\times 5\) 3-D Gaussian filter. It was implemented in a Stratix II chip (EP2S180F1508C4) using 7524 ALUTs and 20 DSP multipliers. Their design achieves a voxel processing rate of 192–194 MHz for the system clocked at 200 MHz. The precision obtained, measured as a mean-squared error, is 0.030 for an 8-bit fixed-point number representation.

The authors’ filter, presented in [23], occupies 12020 LUTs (no DSP blocks are utilized) and achieves a maximum voxel processing rate of 125 MHz, which leads to 409 frames/s for \(640\times 480\) images. The mean-squared error for an 8-bit fixed-point number representation is 0.002. Comparing the results, one can see that the voxel processing rate of the proposed filter equals the clock rate. This is a natural property of fully pipelined structures. The number of ALUTs occupied by the proposed filter is greater than that in [1], which probably follows from the CORDIC implementation; however, we do not utilize extra DSP blocks. The proposed filter possesses good precision, due to Givens rotations, manifested by the low mean-squared error and other good properties. For more details the reader is referred to [23].

References

O. Dandekar, C.R. Castro-Pareja, R. Shekhar, FPGA-based real-time 3D image preprocessing for image-guided medical interventions. J. Real-Time Image Process. 1(4), 285–301 (2007)

E. Depretere, P. Dewilde, Orthogonal cascade realization of real multiport digital filters. Int. J. Circuits Theory Appl. 8, 245–272 (1980)

U.B. Desai, A state-space approach to orthogonal digital filters. IEEE Trans. Circuits Syst. 38(2), 160–169 (1991)

DICOM sample image sets. http://www.osirix-viewer.com/datasets/ (2017)

D.E. Dudgeon, R.M. Mersereau, Multidimensional Signal Processing (Prentice-Hall, Englewood Cliffs, 1984)

A. Fettweis, Digital filter structures related to classical filter networks. AEÜ 25(2), 79–89 (1971)

A. Fettweis, On the scattering matrix and the scattering transfer matrix of multidimensional lossless two-ports. AEÜ 36, 374–381 (1982)

G.H. Golub, C.F. Van Loan, Matrix Computations, 3rd edn. (The Johns Hopkins Univ. Press, Baltimore, MD, 1996)

T. Kaczorek, Two-Dimensional Systems (Springer, Berlin, 1985)

C. Liu, H.W. Mu, D.M. Liu, The realization of real-time motion tracking algorithm based on FPGA, in Progress in Applied Sciences, Engineering and Technology, Advanced Materials Research, vol. 926 (Trans Tech Publications, Zurich, 2014), pp. 3302–3305. doi:10.4028/www.scientific.net/AMR.926-930.3302

P.K. Meher, J. Valls, T.B. Juang, K. Sridharan, K. Maharatna, 50 years of CORDIC: algorithms, architectures, and applications. IEEE Trans. Circuits Syst. I. Regul. Pap. 56–I(9), 1893–1907 (2009)

J. Ohser, K. Schladitz, 3D Images of Materials Structures: Processing and Analysis (Wiley-VCH, Weinheim, 2009)

H. Pan, X. Jing, W. Sun, Robust finite-time tracking control for nonlinear suspension systems via disturbance compensation. Mech. Syst. Signal Process. 88, 49–61 (2017)

H. Pan, W. Sun, H. Gao, X. Jing, Disturbance observer-based adaptive tracking control with actuator saturation and its application. IEEE Trans. Autom. Sci. Eng. 13(2), 868–875 (2016). doi:10.1109/TASE.2015.2414652

H. Pan, W. Sun, H. Gao, J. Yu, Finite-time stabilization for vehicle active suspension systems with hard constraints. IEEE Trans. Intell. Transp. Syst. 16(5), 2663–2672 (2015). doi:10.1109/TITS.2015.2414657

M.S. Piekarski, Synthesis algorithm of two-dimensional orthogonal digital system: a state space approach, in Proceedings of the XXVIIIth URSI General Assembly, New Delhi, India (2005). www.ursi.org/Proceedings/ProcGA05/pdf/CP4.8(01080).pdf

J.G. Proakis, D.G. Manolakis, Digital Signal Processing: Principles, Algorithms, and Applications, 3rd edn. (Prentice-Hall, Upper Saddle River, 1996)

R.P. Roesser, A discrete state-space model for linear image processing. IEEE Trans. Autom. Control 20(1), 1–10 (1975)

W. Sun, H. Pan, H. Gao, Filter-based adaptive vibration control for active vehicle suspensions with electrohydraulic actuators. IEEE Trans. Veh. Technol. 65(6), 4619–4626 (2016). doi:10.1109/TVT.2015.2437455

W. Sun, S. Tang, H. Gao, J. Zhao, Two time-scale tracking control of nonholonomic wheeled mobile robots. IEEE Trans. Control Syst. Technol. 24(6), 2059–2069 (2016). doi:10.1109/TCST.2016.2519282

R. Suszynski, K. Wawryn, R. Wirski, 2D image processing for auto-guiding system, in 2011 IEEE 54th International Midwest Symposium on Circuits and Systems (MWSCAS) (2011), pp. 1–4. doi:10.1109/MWSCAS.2011.6026368

The SCILAB homepage. http://www.scilab.org (2017)

K. Wawryn, P. Poczekajlo, R. Wirski, FPGA implementation of 3-D separable Gauss filter using pipeline rotation structures, in 2015 22nd International Conference on Mixed Design of Integrated Circuits Systems (MIXDES) (2015), pp. 589–594. doi:10.1109/MIXDES.2015.7208592

R. Wirski, K. Wawryn, State space synthesis of two-dimensional FIR lossless filters, in International Conference on Signals and Electronic Systems, 2008. ICSES ’08 (2008), pp. 367–370. doi:10.1109/ICSES.2008.4673438

R. Wirski, K. Wawryn, Stanowa synteza systemów bezstratnych o skończonej odpowiedzi impulsowej [State-space synthesis of finite impulse response loss-less systems]. Przeglad Elektrotechniczny 86(11a), 218–221 (2010). In Polish

R. Wirski, K. Wawryn, B. Strzeszewski, State-space approach to implementation of FIR systems using pipeline rotation structures, in International Conference on Signals and Electronic Systems, 2012. ICSES ’12 (2012), pp. 1–5. doi:10.1109/ICSES.2012.6382223

R.T. Wirski, On the realization of 2-D orthogonal state-space systems. Signal Process. 88, 2747–2753 (2008). doi:10.1016/j.sigpro.2008.05.018

R.T. Wirski, Synthesis of 2-D state-space equations for orthogonal separable denominator systems, in International Conference on Signals and Electronic Systems (ICSES’10), Gliwice, Poland (2010)

R.T. Wirski, Synthesis of orthogonal Roesser model for two-dimensional FIR filters, in International Symposium on Information Theory and its Applications (ISITA2010), Taichung—Taiwan (2010). doi:10.1109/ISITA.2010.5649701

D.C. Youla, The synthesis of networks containing lumped and distributed elements, in Proceedings of Symposium on Generalized Networks (Polytechnic Institute of Brooklyn Press, New York, 1966), pp. 289–343

L.A. Zadeh, C.A. Desoer, Linear System Theory: The State Space Approach (McGraw-Hill, New York, 1963)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Poczekajło, P., Wirski, R.T. Synthesis and Realization of 3-D Orthogonal FIR Filters Using Pipeline Structures. Circuits Syst Signal Process 37, 1669–1691 (2018). https://doi.org/10.1007/s00034-017-0618-2

DOI: https://doi.org/10.1007/s00034-017-0618-2