Abstract

According to the historical earthquake catalog of Italy, the country experienced a pulse of seismicity between the 17th century, when the rate of destructive events increased by more than 100%, and the 20th century, characterized by a symmetric decrease. In the present work, I performed a statistical analysis to verify the reliability of this transient, considering different sources of bias and uncertainty, such as the completeness and declustering of the catalog, as well as errors in magnitude estimation. I also searched for confirmation outside the catalog by analyzing the correlation with volcanic activity. The similarity is high for the eruptive history of Vesuvius, which agrees with both the main rate changes of the 17th and 20th centuries and the minor variations in the intermediate period. Of general interest, beyond the specific case of Italy, the observed rate changes suggest the existence of large-scale crustal processes that develop within decades and last for centuries, responsible for the synchronous activation/deactivation of remote, loosely connected faults in different tectonic domains. Although their origin is still unexplained (I discuss a possible link with climate change and the consequent variations of sea level), their existence and persistence are critical for seismic hazard computation. In fact, they introduce a time variability that is hard to predict and that undermines any hypothesis of regularity of the earthquake cycle on individual faults and systems of interconnected faults.


1 Introduction

The seismic history of Italy is described in detail by a highly reliable seismic catalog spanning the last millennium [catalog CPTI11, (Rovida et al. 2011)]. Its quality has been confirmed by statistical and historical studies (Stucchi et al. 2011) and derives from at least three factors: the historical and cultural heritage of the country; the long tradition of systematic collection and evaluation of macroseismic data (I recall the pioneering work by Giuseppe Mercalli and other Italian seismologists at the end of the 19th century); and the fact that earthquakes occur mainly inland, along or near the Alpine and Apennine chains, with direct and clear effects on towns and population, which facilitated their reporting in documents and chronicles.

Previous studies recognize two sudden rate changes in the last few centuries. The first one, discussed by Stucchi et al. (2011), is a strong increase that occurred after the end of the Middle Ages. The authors computed the rate of M w ≥ 6.15 earthquakes in the period of completeness of the catalog (since 1530) using two alternative methods. Projected back over the last 1000 years, the two rates predict either 102 or 149 M w ≥ 6.15 earthquakes, compared to the 64 actually included in the catalog (59 and 133% more earthquakes, respectively, for the two estimates). The authors regard this discrepancy as large: based on historical considerations, they do not believe that the catalog could miss so many destructive events in the Middle Ages, and they suggest a possible acceleration of seismicity in the following period. The second strong variation corresponds to the decreasing trend of seismicity in the 20th century found by Rovida et al. (2014), who hypothesize a tectonic origin. Other studies confirm the recent trend: for M w ≥ 4.7 earthquakes in northern Italy (Bragato 2014) and for M w ≥ 5.0 earthquakes in the entire country (Bragato and Sugan 2014). Both works estimate a rate reduction larger than 50% in a little more than a century. Furthermore, Bragato and Sugan (2014) relate the decreasing trend in Italy to analogous trends estimated for other areas (e.g., California) and, more generally, for the northern hemisphere.

The observed behavior could have a strong impact on the evaluation of seismic hazard in Italy and requires careful verification. The purpose of this work is to assess its reliability and robustness with respect to different sources of uncertainty. I took into consideration those related to declustering (i.e., the way in which aftershocks are recognized and removed), magnitude estimation, and the selection of the minimum magnitude threshold. I started by analyzing the earthquakes that occurred in the time period 1900–2015, when the availability and reliability of the data are higher and the results are more robust. Subsequently, I extended the study to past centuries, over a period that, depending on the magnitude threshold, reaches back at most to the 15th century. Furthermore, I compared the seismic activity with that of the Italian volcanoes, extending the period of observation back to 1100. All the analyses indicate that the pulse that occurred between the 17th and 20th centuries is not an artifact of the catalog but a real feature of seismicity. This result requires an adequate physical explanation, which is beyond the scope of this paper. In the final discussion, I simply address the question with reference to the available literature. In particular, I observe a strict anticorrelation with the global surface temperature and, following authors such as Hampel et al. (2010) and Luttrell and Sandwell (2010), hypothesize a possible role of sea-level changes.

The present work continues and completes the analyses carried out in two previous studies. The decreasing rate of seismicity in Italy through the 20th century was assessed in Bragato and Sugan (2014) using linear regression on the number of earthquakes in each year. Here, the estimate is improved using Poisson regression, which is more appropriate for count data. The robustness and stability of the result are also checked in various ways: considering the uncertainty associated with the magnitude of each earthquake (i.e., introducing random perturbations of the magnitude); using increasing values of the minimum magnitude; and partitioning the national territory into sub-areas with different seismic characteristics. The second study (Bragato 2015) starts from the same seismic catalog as the present work, provides an estimate of the overall time density of events since 1100, and concentrates on its oscillatory component since 1600, characterized by a period of about 55 years. The present work neglects the oscillatory component and focuses on the long-term behavior, with special attention to the pre-1600 trend, with the aim of highlighting and confirming the strong acceleration of the 17th century. The comparison with volcanic activity serves the same purpose: in particular, the synchronization between Vesuvius’ eruptions and earthquakes is now assessed more formally, using a test based on Ripley’s K-function (Ripley 1977). For the seismicity, as for the post-1900 data, I added a check of the robustness of the results with respect to various factors (uncertain magnitude, level of completeness, and regionalization). Bragato (2015) also discusses the possible relationship between rates of seismicity and climate-related surface processes, performing a comparison with the changes of the global sea level available since 1700 (Jevrejeva et al. 2008).
Here, the comparison is extended back in time to 1100, considering a reconstruction of the global surface temperature (Mann et al. 2008). Furthermore, the correlation is formally tested by performing binomial logistic regression: in this way, the time series of temperature (a continuous function of time) is compared directly with the origin times of the earthquakes (point data). In the previous paper, the time series of the global sea level was compared with the smoothed time density of earthquakes (a continuous function of time), which involved an arbitrary selection of the degree of smoothing.

2 Data Selection

For the analysis of the seismicity in Italy, I referred to the historical seismic catalog CPTI11 (Rovida et al. 2011). It reports 2984 earthquakes that occurred in the time period 1000–2006, including the mainshocks and a number of aftershocks, which are less frequent in the earliest period. For each earthquake, the catalog reports different types of source parameters. I adopted those classified as “default parameters”. They include the origin time, the epicentral coordinates, the epicentral and maximum observed intensity (I o and I max, respectively), and the moment magnitude (Mw). All the parameters are accompanied by an estimate of the associated error. For the earthquakes that occurred before 1903, the data are derived from macroseismic observations. In particular, when possible, Mw is computed with the Boxer method (Gasperini et al. 1999), which considers the entire intensity field, and not just I o or I max. Instrumental data were introduced gradually during the last century and have been almost standard since 1980. I extended the catalog to the time period 2007–2015 with data from ISIDe, the Italian Seismological Instrumental and Parametric DataBase (ISIDe Working Group 2010). For homogeneity with CPTI11, I replaced the original local magnitude ML with the moment magnitude M w drawn from the European-Mediterranean Regional Centroid Moment Tensor (RCMT) Catalog (Pondrelli et al. 2011).

A key point of the analysis is the accurate assessment of the magnitude of completeness M c, to guarantee homogeneity between the different parts of the catalog. The estimation of M c for historical seismic data poses peculiar problems, mainly related to abrupt changes of the observation network, which consists of the set of reliable and, as far as possible, continuous historical sources reporting news of earthquakes (Stucchi et al. 2011). The completeness of the Italian seismic catalog has been analyzed in various works (Slejko et al. 1998; Albarello et al. 2001; Stucchi et al. 2004), using alternative methods and obtaining different results. The discordance indicates that completeness cannot be assessed in absolute terms, but only at some degree of reliability, which increases with magnitude. For the present work, I referred to the estimation of M c by Stucchi et al. (2011), based on the continuity of the historical sources. In their Table 2, the authors report magnitude thresholds that are a function of the time period and seismic zone. Note that these values refer to the previous version of the catalog, CPTI04 (Gruppo di Lavoro CPTI 2004). For M w ≥ 5.5, CPTI04 has values of M w that are, in general, slightly lower than those reported in CPTI11 (average difference 0.08 ± 0.23, which increases to 0.18 ± 0.28 for M w ≥ 6.0 earthquakes). The differences are mainly due to the improvement of the database of macroseismic observations and the adoption of the Boxer method (Gasperini et al. 1999) instead of intensity/magnitude regression. To mitigate the problems related to completeness, I performed the analysis using the thresholds by Stucchi et al. (2011) and confirmed the results for increasing values of the minimum magnitude.

Another critical point concerns the declustering of the catalog, which is generally performed to remove aftershock sequences and highlight the characteristics of the background seismicity. For historical data, declustering has the further effect of making the catalog more homogeneous, because aftershocks are more likely to be reported in the recent period of instrumental recording than in the past. The discussion about the methods and results of declustering, whose difficulties mainly arise from the lack of a quantitative physical definition of mainshock (Console et al. 2010), is still open. In my study, I was not interested in performing a highly refined declustering, but rather in checking whether different choices could significantly change the results. I used the well-known and simple method by Gardner and Knopoff (1974) (GK declustering hereafter) for the main analysis (to search for aftershocks, I used a linear interpolation of the original magnitude-dependent space/time windows of the 1974 paper, reported here in Table 1), and subsequently compared the results with those obtained in two extreme cases: no declustering and overdeclustering (i.e., GK declustering with the magnitude-dependent space/time windows multiplied by a factor of 1.5).
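
The window-based declustering described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation used in this work: events are visited in decreasing order of magnitude, and later, not larger events falling within the magnitude-dependent distance and time windows of a retained event are flagged as aftershocks (foreshocks are ignored). Window sizes are linearly interpolated in magnitude, and the `scale` argument reproduces the factor 1.5 of the overdeclustering case. The window table in the example (`WIN_MAG`, `WIN_KM`, `WIN_DAYS`) is an illustrative placeholder, not Table 1, and the function names are hypothetical.

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0

# Illustrative placeholder windows (NOT Table 1 of this paper):
# magnitude, radius in km, duration in days.
WIN_MAG = [4.0, 5.0, 6.0, 7.0]
WIN_KM = [30.0, 40.0, 54.0, 70.0]
WIN_DAYS = [42.0, 155.0, 510.0, 915.0]


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km; angles in degrees, NumPy-broadcastable."""
    phi1, phi2 = np.radians(lat1), np.radians(lat2)
    dphi = phi2 - phi1
    dlmb = np.radians(np.asarray(lon2) - lon1)
    a = np.sin(dphi / 2) ** 2 + np.cos(phi1) * np.cos(phi2) * np.sin(dlmb / 2) ** 2
    return 2.0 * EARTH_RADIUS_KM * np.arcsin(np.sqrt(a))


def window_decluster(t_days, lat, lon, mag, win_mag, win_km, win_days, scale=1.0):
    """Return a boolean mask that is True for events kept as mainshocks.

    Hypothetical helper: window sizes are linearly interpolated in magnitude
    and multiplied by `scale` (1.5 mimics overdeclustering).
    """
    t_days, lat, lon, mag = (np.asarray(v, dtype=float) for v in (t_days, lat, lon, mag))
    keep = np.ones(mag.size, dtype=bool)
    for i in np.argsort(-mag, kind="stable"):  # largest magnitude first
        if not keep[i]:
            continue  # already flagged as an aftershock of a larger event
        r_km = scale * np.interp(mag[i], win_mag, win_km)
        dt_max = scale * np.interp(mag[i], win_mag, win_days)
        dt = t_days - t_days[i]
        dist = haversine_km(lat[i], lon[i], lat, lon)
        # Later, not larger events inside the space-time window are aftershocks.
        in_window = (dt > 0) & (dt <= dt_max) & (dist <= r_km) & (mag <= mag[i])
        keep[in_window] = False
    return keep


# Toy catalog (times in days): an M 6.0 event, an M 5.0 event 10 days later
# about 5 km away, an M 5.0 event about 500 km away, an M 5.0 event 5000 days later.
mask = window_decluster(
    [0.0, 10.0, 20.0, 5000.0],
    [42.0, 42.045, 38.0, 42.0],
    [13.0, 13.0, 16.0, 13.0],
    [6.0, 5.0, 5.0, 5.0],
    WIN_MAG, WIN_KM, WIN_DAYS,
)
print(mask.tolist())  # [True, False, True, True]
```

Only the nearby event inside the time window of the M 6.0 event is removed; enlarging the windows with `scale=1.5` can only remove more events, which is why overdeclustering bounds the effect of the window choice from one side.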

For the volcanic activity, I considered the eruptions that occurred in Italy in the last millennium and are reported in Smithsonian’s Global Volcanism Program (GVP) database (Siebert et al. 2010, http://www.volcano.si.edu). The GVP database describes each eruption with its start and end dates, as well as with its Volcanic Explosivity Index (VEI) (Newhall and Self 1982). An eruption can last from days to decades, and the VEI is attributed on the basis of its strongest, often final, episode. I selected the eruptions with VEI ≥ 2, assuming that the catalog is complete at this level. This working hypothesis seems reasonable for the two main volcanoes, Etna and Vesuvius, because their eruptions have direct effects on important cities or their immediate surroundings (Catania and Naples, respectively). It is more questionable for the volcanic islands, where it depends on the reports of sailors and a few inhabitants (if any), although the strongest eruptions could be visible from the mainland. In any case, the problem is attenuated by the fact that I am searching for abrupt shifts of activity (from no activity to intense activity and vice versa) rather than minor changes.

3 Seismic Rates Since 1900

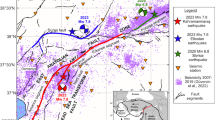

I analyzed the earthquakes that occurred in Italy between 1900 and 2015, filtered by GK declustering. I cut the catalog at the minimum magnitude M w = 4.8, the magnitude of completeness that, according to Table 2 of Stucchi et al. (2011), is valid for the entire country, with the exception of a small zone in the Ionian Sea whose contribution is negligible. The data set comprises 387 earthquakes (epicenters in Fig. 1), with a maximum magnitude M w = 7.1 reached by the 1908 Messina earthquake. On visual inspection, their yearly distribution (Fig. 2a) shows more intense activity before 1970, with an average rate of about four earthquakes per year (3.9 ± 2.1) and a peak of ten earthquakes in 1930. After 1970, the average rate falls to about 2.5 earthquakes per year (2.5 ± 1.5), with a peak of six earthquakes reached only once, in 1980 (this number was reached or exceeded 15 times before 1970). To assess the statistical significance of the decreasing trend, I performed Poisson regression on the data of the histogram. Poisson regression is a form of generalized linear regression (Zeileis et al. 2008) that is more appropriate for count data. Given the set of observations \(\{(x_{i}, y_{i}),\ i = 1, \ldots, 116\}\) (y i is the number of events during the year x i, and 116 is the number of years of the analysis), the values y i are assumed to be drawn from a set of independent Poisson random variables Y i with rate parameter λ i (equal to both the mean and the variance), which depends on x i through the log-linear relation \(\ln (\lambda_{i} ) = a + bx_{i}\). I performed the regression using the function “glm” of the R software system (R Core Team 2012), as described in Venables and Ripley (2002). The function provides the estimates of the parameters a and b with the corresponding standard errors and p values (Table 2).
In particular, I obtained b = −0.0073 ± 0.0016, a negative value that, according to the p value, is significantly different from 0 at the 99% confidence level. The estimated regression curve \(\lambda = e^{a + bx}\) and its 95% confidence interval are shown in Fig. 2a (continuous and dashed lines, respectively): it predicts a decreasing rate, from 4.9 events per year in 1900 to 2.1 events per year in 2015 (a reduction of 57% in 116 years). To assess the robustness of the result, I considered alternative choices for the minimum magnitude threshold and declustering, as well as the uncertainty associated with the magnitude. Furthermore, to assess the homogeneity of the decrease throughout the country, I compared the trends estimated for different sub-areas. In Fig. 2b and c, I analyzed the impact of more conservative hypotheses on the completeness of the catalog, estimating the trend for the earthquakes with M w ≥ 5.0 and M w ≥ 5.2, respectively. In both cases, Poisson regression (Table 2) gives a negative value for b that is significant at the 99% confidence level, with rate reductions of 68 and 62% in 116 years, respectively. Poisson regression, especially for the case M w ≥ 5.2, could be biased by the large number of years with zero earthquakes. More complex regression models could address this problem (for example, the zero-inflated model). More simply, in Fig. 3, I enlarged the histogram bin from 1 to 5.8 years, to guarantee the presence of at least one earthquake per class. Even in this case, the existence of a significant negative trend is confirmed (Fig. 3; Table 2), with a reduction of the seismic rate in 116 years that is larger than 57% for any choice of the minimum threshold. I assessed the sensitivity to magnitude uncertainty by Monte Carlo simulation. For each value of magnitude, CPTI11 reports an estimate of the associated error.
For the selected earthquakes, this error ranges between 0.06 and 1.02, depending on the type of estimation and the number of observation points. Starting from the original catalog (with no magnitude restriction), I generated 1000 alternative catalogs by perturbing each magnitude value with additive noise drawn from the normal distribution N(0, σ M), where σ M is the reported magnitude error. For each catalog, I performed GK declustering, selected the events with M w ≥ 5.0 (slightly larger than the previous value 4.8, to further guarantee completeness), and estimated the Poisson regression model. All the regression curves are shown in Fig. 4a (gray band, with the mean values in black), confirming the decreasing trend. The same figure illustrates the sensitivity to different levels of declustering: GK declustering in its original configuration (Fig. 4a), overdeclustering (GK space/time windows multiplied by 1.5, Fig. 4b), and no declustering (Fig. 4c). The three families of curves have very similar decreasing trends, although they differ in the overall number of earthquakes and, consequently, in their vertical scaling.
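
The Poisson regression and its robustness checks can be sketched in Python with NumPy alone. This is an illustrative sketch, not the R `glm` computation used in this work: `poisson_glm` fits \(\ln(\lambda_i) = a + bx_i\) by iteratively reweighted least squares and returns Wald standard errors and p values; `bin_counts` aggregates event times into bins of arbitrary width (1 or 5.8 years); `reduction` converts a slope into the fractional rate decrease over a time span; `mc_slopes` perturbs each magnitude with N(0, σ M) noise, as in the Monte Carlo test. All function names are hypothetical. The covariate is centred on the first year, which changes a but not b, and, for brevity, the Monte Carlo loop skips the re-declustering step that the text applies to each perturbed catalog.

```python
import math
import numpy as np


def poisson_glm(x, y, n_iter=50, tol=1e-10):
    """Fit ln(lambda_i) = a + b*x_i to counts y_i by iteratively reweighted
    least squares (Newton's method for the canonical log link).
    Returns coef = [a, b], Wald standard errors, two-sided Wald p values."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones_like(x), x])
    beta = np.array([math.log(y.mean()), 0.0])  # start from a constant rate
    for _ in range(n_iter):
        eta = X @ beta
        mu = np.exp(eta)
        z = eta + (y - mu) / mu  # working response
        XtW = X.T * mu  # X^T W, with IRLS weights W = diag(mu)
        beta_new = np.linalg.solve(XtW @ X, XtW @ z)
        converged = np.max(np.abs(beta_new - beta)) < tol
        beta = beta_new
        if converged:
            break
    mu = np.exp(X @ beta)
    se = np.sqrt(np.diag(np.linalg.inv((X.T * mu) @ X)))  # inverse Fisher information
    pval = np.array([math.erfc(abs(c / s) / math.sqrt(2.0)) for c, s in zip(beta, se)])
    return beta, se, pval


def bin_counts(event_years, start, stop, width):
    """Count events in consecutive bins of `width` years between start and stop."""
    n_bins = int(round((stop - start) / width))
    edges = start + width * np.arange(n_bins + 1)
    counts, _ = np.histogram(event_years, bins=edges)
    centres = 0.5 * (edges[:-1] + edges[1:])
    return centres, counts


def reduction(b, span_years):
    """Fractional rate decrease implied by slope b over span_years."""
    return 1.0 - math.exp(b * span_years)


def mc_slopes(years, mags, sigmas, m_min, start, stop, width=1.0, n_sim=1000, seed=0):
    """Slopes b for catalogs with magnitudes perturbed by N(0, sigma_M) noise.
    Simplification: no re-declustering; assumes each selection is non-empty."""
    rng = np.random.default_rng(seed)
    years, mags, sigmas = (np.asarray(v, dtype=float) for v in (years, mags, sigmas))
    slopes = np.empty(n_sim)
    for k in range(n_sim):
        perturbed = mags + rng.normal(0.0, sigmas)
        centres, counts = bin_counts(years[perturbed >= m_min], start, stop, width)
        coef, _, _ = poisson_glm(centres - start, counts)  # centred covariate
        slopes[k] = coef[1]
    return slopes
```

With the slope reported above, `reduction(-0.0073, 115)` gives about 0.57, that is, the 57% decrease between 1900 and 2015. Because the bin width is constant, counting in 5.8-year bins rescales the intercept but leaves the slope b, expressed per year, directly comparable with the yearly fit.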

Yearly distribution of earthquakes that occurred between 1900 and 2015, selected according to different thresholds of magnitude and maximum observed intensity (declustered catalog). The lines represent the fit to the data by Poisson regression (continuous) and the corresponding 95% confidence interval (dashed)

Time distribution of earthquakes that occurred between 1900 and 2015, selected according to different thresholds of magnitude and maximum observed intensity (declustered catalog) and binned in time intervals of 5.8 years. The lines represent the fit to the data by Poisson regression (continuous) and the corresponding 95% confidence interval (dashed)

The 20th century was characterized by the development of instrumental seismology, so that the CPTI11 catalog contains a mix of macroseismic-derived and instrumental magnitudes, with the former prevailing at the beginning of the century. I explored the possibility that this heterogeneity was responsible for the observed decrease. To this end, I performed Poisson regression for a subset of earthquakes selected solely on the basis of their maximum macroseismic intensity. I considered the values of I max reported in the catalog CPTI11, integrated for the time period 2007–2015 with the estimates by QUEST, the QUick Earthquake Survey Team (http://quest.ingv.it). Figure 2d reports the yearly distribution of the earthquakes with I max ≥ VII (declustered catalog with 141 mainshocks). Figure 3d shows the same data grouped in bins of 5.8 years. In both cases, Poisson regression gives a negative value for b that is significant at the 99% confidence level (Table 2), confirming the result obtained in terms of magnitude. It is important to note that the decrease of earthquakes selected by I max can hardly be attributed to a reduction of damage resulting from improved building techniques, for two reasons: first, the assignment of I max takes into account the effects on different types of buildings; second, even for recent earthquakes, the damage involved a large number of historical residential buildings, which helped to keep the estimation of I max homogeneous throughout the entire time period.

Finally, I assessed the homogeneity of the seismic decrease throughout the Italian territory. I considered the zones of the seismic source model ZS9 (Meletti et al. 2008) adopted for the most recent hazard map of Italy. To get enough data for a robust estimation, I merged the zones into three macro-areas (Fig. 5): NORTH, including the Alpine and pre-Alpine belts, as well as the Alpine–Dinaric junction in the north-east; CENTER, corresponding to the central and northern Apennines; and SOUTH, comprising the southern Apennines, the Calabrian Arc, and Sicily. The time distributions of earthquakes in the three macro-areas (GK declustered catalog for M w ≥ 4.8) are compared in Fig. 6, together with the corresponding Poisson regression curves (their parameters are reported in Table 2). With some differences, the decrease appears consistently in all three macro-areas. The rate reduction is larger for NORTH and CENTER (83 and 70% in 116 years, respectively) than for SOUTH (59% in 116 years). Furthermore, in the latter area, the b parameter is slightly less significant (p value 0.02 instead of 0.00, Table 2) and the decrease is mainly concentrated in a sudden step after 1980.

Epicenters of M w ≥ 4.8 earthquakes that occurred in the time period 1900–2015 within the seismic zonation ZS9 by Meletti et al. (2008) (polygons). The different patterns indicate the three macro-areas used for the separate analysis (NORTH, CENTER, and SOUTH)

Time distribution (bin width 5.8 years) of M w ≥ 4.8 earthquakes occurred between 1900 and 2015 in each of the three macro-areas of Fig. 5. The lines represent the fit by Poisson regression (continuous lines) and the corresponding 95% confidence interval (dashed lines)

4 A Historical Perspective

At the cost of increasing the magnitude of completeness, the analysis can be extended several centuries into the past. Considering the historical sources, Stucchi et al. (2011) estimate that the entire catalog is complete for M w ≥ 6.14 since 1530. To take into account the uncertainty of this evaluation (including that implied by the change of catalog from CPTI04 to CPTI11 discussed in Sect. 2), I analyzed the earthquakes with M w ≥ 6.1 and compared the results with those obtained for increasing values of the minimum magnitude. I focused on the declustered catalog (GK declustering, 60 mainshocks with M w ≥ 6.1, epicenters in Fig. 7) but, as shown in the following, I obtained similar or identical results for no declustering and overdeclustering, respectively.

The time distribution of the historical seismicity alternates periods of increasing and decreasing activity and cannot be fitted satisfactorily with a monotonic regression function, as was done for the post-1900 period. Instead, I computed the smoothed time density of earthquakes by Gaussian kernel estimation (Bowman and Azzalini 1997):

\[\hat{f}(t) = \frac{1}{N}\sum_{i=1}^{N} \varphi (t - t_{i}; h) \qquad (1)\]

where t 1,…, t N are the times of occurrence of the events, \(\varphi (z;h)\) is the kernel function (zero-mean normal density function in z with standard deviation h), and h is the smoothing parameter. The choice of h is critical and can influence the interpretation of the results. If h is small (low degree of smoothing), the estimated density curve reduces to a series of spikes located at the times of occurrence of the events, giving no information about the overall distribution of the time points t i. At the other extreme, if h is very large, the density curve becomes unimodal, with its maximum near the center of mass of the time points. To analyze the trends at different time scales, I produced plots for h = 10, 20, and 40 years. I chose the minimum value h = 10 years, slightly larger than the average inter-arrival time of M w ≥ 6.1 earthquakes (about 8 years), to reduce the spike effect. To follow the long-term trend, I chose h = 40 years, which is much shorter than the overall study period but still adequate to highlight rate changes occurring at the time scale of one century. For the smoothed curves, it is also possible to estimate an approximate confidence interval \(\hat{f}(t) \pm \sigma\) (σ the standard error), called the “variability band” (Bowman and Azzalini 1997), defined by

where \(\hat{f}(t)\) is the estimated density, N is the number of events, h is the smoothing parameter, and α is the constant value \(\smallint \varphi^{2} (z;h){\text{d}}z.\)
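
The kernel estimate \(\hat{f}(t) = N^{-1}\sum_{i} \varphi(t - t_{i}; h)\) and its variability band can be sketched as follows. The sketch assumes the standard approximation of Bowman and Azzalini (1997), \(\mathrm{var}\,\hat{f}(t) \approx \hat{f}(t)\,\alpha / N\) with \(\alpha = \int \varphi^{2}(z;h)\,\mathrm{d}z = 1/(2h\sqrt{\pi})\) for a Gaussian kernel; the exact form of Eq. 2 is not reproduced in this text, so the constant should be treated as an assumption. The function names and the event years in the example are hypothetical.

```python
import math
import numpy as np


def kernel_density(t_grid, t_events, h):
    """Gaussian kernel estimate f_hat(t) = (1/N) * sum_i phi(t - t_i; h)."""
    t = np.asarray(t_grid, dtype=float)[:, None]
    ti = np.asarray(t_events, dtype=float)[None, :]
    phi = np.exp(-0.5 * ((t - ti) / h) ** 2) / (h * math.sqrt(2.0 * math.pi))
    return phi.mean(axis=1)


def variability_band(f_hat, n_events, h):
    """Approximate standard error of f_hat (assumed form):
    var ~ f_hat * alpha / N, alpha = integral of phi(z; h)**2 dz = 1 / (2 h sqrt(pi))."""
    alpha = 1.0 / (2.0 * h * math.sqrt(math.pi))
    return np.sqrt(np.asarray(f_hat, dtype=float) * alpha / n_events)


# Hypothetical event years, smoothed with h = 10 years
events = [1600.0, 1650.0, 1700.0, 1705.0, 1900.0]
grid = np.arange(1500.0, 2000.0, 1.0)
f = kernel_density(grid, events, h=10.0)
sigma = variability_band(f, len(events), h=10.0)
rate_per_year = len(events) * f  # density converted to events per year
```

Plotting `f - sigma` and `f + sigma` around `f` gives a gray strip analogous to those in the figures; since σ scales as the square root of \(\hat{f}(t)/(Nh)\), the band narrows as h grows, which is the trade-off discussed above for the choice of h.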

The time distribution of M w ≥ 6.1 earthquakes since 1530 (declustered catalog) is shown in Fig. 8. All levels of smoothing (h = 10, 20, and 40 years) show a strong increase of activity starting around 1630, peaking around 1700. Thereafter, the seismic rate remains high for about 200 years and decreases in the last century, in agreement with the findings for lower magnitudes in the previous section. For reference, in Fig. 8a, I traced the average annual seismic rates in the three periods 1530–1630, 1630–1950, and 1950–2015 (dashed lines): between 1630 and 1950, the rate of M w ≥ 6.1 earthquakes is three times the value of the initial period and about 30% higher than that observed since 1950. I checked the significance of the two rate changes around 1630 and 1950 by comparison with a uniform random distribution (i.e., I checked whether they could be attributed to random fluctuations in a uniform distribution of events). I performed the one-sample Kolmogorov–Smirnov (KS) test for uniformity on the earthquakes that occurred in the two time intervals 1530–1730 (centered on 1630) and 1885–2015 (centered on 1950). For the first time interval, the KS test gives p = 0.003, so that the hypothesis of uniformity is rejected at the 99% confidence level. For the second time interval, the p value is 0.44, and the hypothesis of uniformity cannot be rejected: unlike the case of M w ≥ 4.8 earthquakes discussed in the previous section, the post-1900 decrease of M w ≥ 6.1 earthquakes is not strong enough to be distinguishable from a random variation. I performed a similar analysis to assess the significance of the rate changes within the time period of highest activity, between 1630 and 1950 (oscillations in Fig. 8). The KS test gives p = 0.82, so that the local maxima and minima in Fig. 8 (in particular, the peaks around 1700 and 1900) are not distinguishable from random fluctuations.
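
The uniformity check can be sketched as follows. The text does not state which implementation of the KS test was used; this sketch rescales the event times of an interval to [0, 1], computes the one-sample KS statistic D against the uniform distribution, and, as a swapped-in alternative to the exact or asymptotic null distribution, estimates the p value by Monte Carlo simulation of uniform samples of the same size. The function names are hypothetical. (With SciPy available, `scipy.stats.kstest(u, "uniform")` applied to the rescaled times gives the standard p value.)

```python
import numpy as np


def ks_uniform_stat(times, t0, t1):
    """One-sample KS statistic D of event times against the uniform law on [t0, t1]."""
    u = np.sort((np.asarray(times, dtype=float) - t0) / (t1 - t0))
    n = u.size
    i = np.arange(1, n + 1)
    d_plus = np.max(i / n - u)  # empirical CDF above the uniform CDF
    d_minus = np.max(u - (i - 1) / n)  # empirical CDF below the uniform CDF
    return float(max(d_plus, d_minus))


def ks_uniform_pvalue_mc(times, t0, t1, n_sim=20000, seed=0):
    """Monte Carlo p value: share of uniform samples of the same size whose
    statistic is at least as large as the observed one."""
    rng = np.random.default_rng(seed)
    d_obs = ks_uniform_stat(times, t0, t1)
    n = len(times)
    d_sim = np.array([ks_uniform_stat(rng.uniform(t0, t1, n), t0, t1) for _ in range(n_sim)])
    return d_obs, (1 + np.sum(d_sim >= d_obs)) / (1 + n_sim)
```

For the first test described above, `times` would hold the origin years of the selected earthquakes with `t0 = 1530` and `t1 = 1730`; a p value below 0.01 corresponds to rejecting uniformity at the 99% confidence level.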

Kernel-smoothed density of M w ≥ 6.1 earthquakes in Italy since 1530 (declustered catalog) computed for h = 10, 20, and 40 years, with the indication of the variability band computed according to Eq. 2 (gray strip). The dashed segments in panel a indicate the average rate of events in the three periods 1530–1630, 1630–1950, and 1950–2015. The vertical ticks on the top indicate the occurrence of the 60 earthquakes

To further guarantee the completeness of the catalog, I repeated the analysis for increasing values of the minimum magnitude. In Fig. 9, I reported the results for the extreme case with M w ≥ 6.6: the number of earthquakes is greatly reduced (from 60 to 25), but their time distribution is very similar to that for M w ≥ 6.1 (Fig. 8). They appear more peaked near 1700 and 1900, but, even in this case, the hypothesis of uniformity in the time period 1630–1950 (20 earthquakes) cannot be rejected (KS test with p = 0.86). Thanks to the improved completeness, the estimation for M w ≥ 6.6 can be extended back to 1400 (Stucchi et al. 2011): the time distribution of earthquakes indicates prolonged stable conditions preceding the abrupt acceleration of the 17th century.

Kernel-smoothed density of M w ≥ 6.6 earthquakes in Italy since 1400 (declustered catalog) computed for h = 10, 20, and 40 years, with the indication of the variability band computed according to Eq. 2 (gray strip). The dashed segments in panel a indicate the average rate of events in the three periods 1400–1630, 1630–1950, and 1950–2015. The vertical ticks on the top indicate the occurrence of the 25 earthquakes

I checked if different choices of declustering could affect the observed behavior. Figure 10 compares the time distribution of M w ≥ 6.1 earthquakes before and after GK declustering (65 and 60 earthquakes, respectively). The two curves are very similar: the only noticeable effect of declustering is the smoothing of the two peaks centered on 1700 and 1783. After GK declustering, the remaining earthquakes are extremely sparse in space and time, so that overdeclustering is unable to find further aftershocks and leaves the catalog unchanged.

In Fig. 11, I assessed the spatial stability of the seismic behavior for M w ≥ 6.1 earthquakes since 1530. With reference to the partition of the territory of Fig. 5, I performed separate analyses for SOUTH (31 earthquakes) and CENTER–NORTH (I merged the two areas to get enough data, 23 earthquakes in total). The two smoothed time densities computed for h = 40 years preserve the characteristics of the parent distribution (Fig. 8c): bimodality, acceleration in the 17th century, and decrease in the last century.

Time density of M w ≥ 6.1 earthquakes in the two macro-areas NORTH–CENTER and SOUTH of Fig. 5 (declustered catalog, kernel estimation with smoothing parameter h = 40 years)

5 Comparison with the Volcanic Activity

For the Italian volcanoes (triangles in Fig. 7), the GVP database reports 170 VEI ≥ 2 eruptions since 1100 (I adopted the 12th century as the starting point, following the availability of M w ≥ 6.1 earthquakes in CPTI11). Figure 12 traces the eruptive history of the volcanoes with at least two eruptions. The most active volcanoes are Etna, Vesuvius, and Vulcano (115, 30, and 13 eruptions, respectively). Stromboli has nearly continuous activity, which makes recognizing single eruptions more difficult and less reliable. Campi Flegrei del Mar di Sicilia is a system of submarine volcanoes located offshore south-west of Sicily, known for their temporary emergence after major eruptions. In the time period covered by the seismic transient (1630–1950, marked by dotted vertical bars in Fig. 12), three volcanoes show increased activity: Vesuvius, Vulcano, and Campi Flegrei del Mar di Sicilia. Vesuvius presents the best match with seismic activity: it reactivated in 1631, after a pause of 131 years (the previous VEI ≥ 2 eruption is dated 1500, although a minor, VEI = 1, eruption is reported in 1570), maintained an elevated eruption rate for about 300 years, and its last eruption ended in 1944. The ongoing quiet period is the longest since 1631. The relationship between seismicity and the eruptive activity of Etna is more controversial. On the one hand, the volcano has a peak of activity towards the end of the 20th century, which could indicate a weak correlation with seismicity. On the other hand, the peak occurs at the end of a long period of continuous increase that starts around the 17th century (Fig. 13). This trend could be interpreted as a delayed and smoothed response to the same impulse that affected the other volcanoes.

Eruptive history of the Italian volcanoes with at least two VEI ≥ 2 eruptions since 1100 [data from the Smithsonian’s Global Volcanism Program database, (Siebert et al. 2010)]. The vertical ticks correspond to the beginning of the eruptions, while the horizontal segments indicate their duration

Given its coincidence with Italian seismicity at the two change points of the 17th and 20th centuries, the eruptive activity of Vesuvius is particularly interesting. Its reconstruction is extremely reliable compared to those of other volcanoes. It derives from the combination and cross-checking of historical documentation (particularly rich, given the proximity to Naples, one of the largest cities in Europe since the Middle Ages) and the results of modern geophysical investigations (Scandone et al. 1993; Principe et al. 2004; Guidoboni and Boschi 2006). It is very likely that the reactivation of 1631 was indeed preceded by a long period of scarce activity lasting at least 500 years. In the period following 1631, characterized by intense activity, the eruptions appear synchronized with seismicity even in minor rate changes. Qualitatively, the close time correlation emerges from the comparison of the smoothed time densities of events (Fig. 14): the two curves roughly correspond in their six local maxima between 1600 and 1950, with a strong coincidence of their absolute maxima around 1700. For a quantitative and formal assessment, I adopted a technique based on the bivariate Ripley’s K function (Ripley 1977) simplified for one dimension (Doss 1989), using the implementation by Gavin et al. (2006), to which I refer for details (see in particular their Appendix B). In this approach, synchronization is checked directly on the two sets of event dates, avoiding any transformation, smoothing, arbitrary choice of parameters (e.g., the bandwidth parameter h in Fig. 14), and consequent loss of information. In its basic form, the K function is expressed as
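
\[ K_{VE}(t) = \frac{T}{n_V\, n_E} \sum_{i=1}^{n_V} \sum_{j=1}^{n_E} I\left(|V_i - E_j| < t\right) \]
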
where \(V = \{V_1, \ldots, V_{n_V}\}\) and \(E = \{E_1, \ldots, E_{n_E}\}\) are the sequences of eruption and earthquake dates, respectively; \(I(\cdot)\) is the indicator function (equal to 1 when its argument is true and 0 otherwise); T is the total period of observation in years. The K function is transformed to obtain the L function:

Comparison between the kernel-smoothed density of Mw ≥ 6.1 earthquakes in Italy and that of VEI ≥ 2 eruptions of Vesuvius (smoothing parameter h = 10 years). The corresponding variability bands (Eq. 2) are also indicated in gray. According to Stucchi et al. (2011), the earthquake catalog is complete from 1530 onward
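
The smoothed time densities compared in Fig. 14 can be illustrated with a minimal kernel estimator. This is a sketch under assumptions: the text does not state the kernel shape, so a Gaussian kernel with bandwidth h (in years) is assumed, and each curve is normalized to unit area so that series with different numbers of events can be compared; the variability bands of Eq. 2 are not reproduced. The function name `kernel_density` and the toy dates in the usage example are hypothetical and are not catalog values.

```python
import math
from typing import List, Sequence


def kernel_density(dates: Sequence[float], grid: Sequence[float],
                   h: float = 10.0) -> List[float]:
    """Gaussian kernel density of event dates, evaluated at each grid year.

    Assumption: Gaussian kernel with bandwidth h (years); the sum is divided
    by the number of events so that the curve integrates to 1 over time.
    """
    if not dates:
        raise ValueError("at least one event date is required")
    norm = 1.0 / (len(dates) * h * math.sqrt(2.0 * math.pi))
    density = []
    for t in grid:
        # Sum of Gaussian bumps centred on each event date.
        s = sum(math.exp(-0.5 * ((t - d) / h) ** 2) for d in dates)
        density.append(norm * s)
    return density


if __name__ == "__main__":
    # Toy dates for illustration only (not taken from CPTI11 or the GVP database).
    toy_events = [1600.0, 1612.0, 1700.0, 1705.0, 1790.0]
    years = [1550.0 + k for k in range(301)]  # yearly grid, 1550..1850
    dens = kernel_density(toy_events, years, h=10.0)
    peak_year = years[dens.index(max(dens))]
    print(f"highest smoothed density near year {peak_year:.0f}")
```

Normalizing each curve to unit area removes the difference in event counts between earthquakes and eruptions, so only the shape of the time distribution is compared; a larger h would merge nearby maxima, which is the arbitrariness the K-function approach avoids.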

Furthermore, the 95% confidence envelope for \(L_{VE}(t)\) is computed using N randomizations of V and E (N = 1000 in the present case). Values of t for which \(L_{VE}(t)\) lies above the confidence envelope indicate that the number of pairs for which \(|V_i - E_j| < t\) is significantly larger than expected for a random distribution: this is an indication of synchrony within a time lag t (similarly, values within the confidence envelope indicate independence, while values below the confidence envelope indicate asynchrony or repulsion between the two types of events). The results for the eruptions of Vesuvius and Mw ≥ 6.1 earthquakes in Italy are shown in Fig. 15. In the entire period of completeness of the earthquake catalog (1530–2015, Fig. 15a), the synchronization is significant at the 95% confidence level for time lags between 0 and 26 years. If the analysis is restricted to the period of more intense seismic activity (1630–1950, Fig. 15b), thus skipping the initial and final periods of total inactivity of Vesuvius, the synchronization is confirmed and concentrated at time lags of 1 and 3 years.
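
The one-dimensional bivariate K function and its randomization envelope can be sketched as follows. This is not the K1D program used in the analysis but a simplified illustration under stated assumptions: no edge correction is applied (the basic form, with the strict inequality |V_i − E_j| < t used in the text); the L transform is taken as L(t) = K(t)/2 − t, a normalization assumed here because under independence and away from the edges K(t) ≈ 2t, so that L fluctuates around zero; and the randomization redraws both sequences uniformly over the observation period, which may differ from the scheme of Gavin et al. (2006). The function names and the paired dates in the usage example are hypothetical.

```python
import bisect
import random
from typing import List, Sequence, Tuple


def pair_lags(v: Sequence[float], e: Sequence[float]) -> List[float]:
    """Sorted absolute time differences |V_i - E_j| over all pairs."""
    return sorted(abs(vi - ej) for vi in v for ej in e)


def l_function(v: Sequence[float], e: Sequence[float],
               lags: Sequence[float], T: float) -> List[float]:
    """L(t) = K(t)/2 - t, with K(t) = T/(nV*nE) * #{(i, j): |V_i - E_j| < t}.

    Basic form without edge correction; the L normalization is an assumption.
    """
    d = pair_lags(v, e)
    scale = T / (len(v) * len(e))
    # bisect_left returns how many differences are strictly smaller than t.
    return [scale * bisect.bisect_left(d, t) / 2.0 - t for t in lags]


def l_envelope(n_v: int, n_e: int, lags: Sequence[float], start: float,
               end: float, n_sim: int = 1000, level: float = 0.95,
               seed: int = 0) -> Tuple[List[float], List[float]]:
    """Pointwise envelope of L(t) for independent, uniformly random dates."""
    rng = random.Random(seed)
    T = end - start
    sims: List[List[float]] = [[] for _ in lags]
    for _ in range(n_sim):
        v = [rng.uniform(start, end) for _ in range(n_v)]
        e = [rng.uniform(start, end) for _ in range(n_e)]
        for k, value in enumerate(l_function(v, e, lags, T)):
            sims[k].append(value)
    q_lo = (1.0 - level) / 2.0
    lower, upper = [], []
    for values in sims:
        values.sort()
        lower.append(values[int(q_lo * (n_sim - 1))])
        upper.append(values[int((1.0 - q_lo) * (n_sim - 1))])
    return lower, upper


if __name__ == "__main__":
    # Hypothetical, perfectly paired dates (not real eruptions or earthquakes).
    eruptions = [1600.0 + 20.0 * k for k in range(15)]
    quakes = [yr + 0.5 for yr in eruptions]
    lags = [1.0, 3.0, 10.0, 26.0]
    obs = l_function(eruptions, quakes, lags, T=2015.0 - 1530.0)
    lo, hi = l_envelope(len(eruptions), len(quakes), lags, 1530.0, 2015.0, n_sim=500)
    for t, l, a, b in zip(lags, obs, lo, hi):
        flag = "synchrony" if l > b else ("repulsion" if l < a else "independent")
        print(f"t={t:5.1f}  L={l:7.2f}  envelope=[{a:6.2f}, {b:6.2f}]  {flag}")
```

Sorting the pair differences once per sample lets every lag be evaluated with a binary search, which keeps 1000 randomizations cheap even in pure Python.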

Test for synchronization between Mw ≥ 6.1 earthquakes in Italy and VEI ≥ 2 eruptions of Vesuvius. The curves indicate the L function computed according to Eq. 4 (continuous lines) and its 95% confidence envelope (dashed lines). The analysis for the time period 1530–2015 (a) indicates significant synchronization for time lags between 0 and 26 years. The analysis for the period of more intense seismic activity, between 1630 and 1950 (b), indicates significant synchronization for time lags of 1 and 3 years

Concerning the physical explanation of the synchronization, Marzocchi et al. (1993) and Nostro et al. (1998) suggest that earthquakes in southern Italy could stimulate eruptions through stress transfer. In my previous paper (Bragato 2015), analyzing data since 1600, I found that the time correlation extends to larger distances, involving even earthquakes in northern Italy. On that basis, I hypothesized the existence of an external source of stress acting simultaneously on both earthquakes and eruptions. The present paper, by showing the correspondence at the two major rate changes of the 17th and 20th centuries, strengthens confidence in such a hypothetical large-scale relationship.

Independently of its physical origin, the close time correlation after 1600 suggests the use of the well-documented activity of Vesuvius as a proxy for the seismicity of Italy. The hypothesis is questionable and certainly deserves further investigation. If it is accepted and assumed valid even for the past, the scarcity of Vesuvius’ eruptions in the Middle Ages could be an indication of truly reduced seismic activity between 1100 and 1600 (Fig. 14).

6 Discussion and Conclusions

The previous analysis supports the existence of a real seismic transient that occurred in Italy between the 17th and 20th centuries. In particular, the relative abundance and homogeneity of data since 1900 offer a clear indication of a significant decrease of the earthquake rate in the last century. The decrease is common to the entire country, but more gradual and continuous in the center and north. Extending the analysis to the past is problematic because of the incompleteness of the catalog. Nevertheless, the acceleration of the 17th century appears evident and robust. It is also reinforced by the analogy with the eruptive activity of Vesuvius, although the physical nature of the relationship is not yet fully clarified.

Synchronous behavior of large areas, or of areas very distant from each other, has been observed for earthquakes in Italy (Viti et al. 2003; Mantovani et al. 2010; Bragato 2014) and elsewhere (Bath 1984a, b; Tsapanos and Liritzis 1992; Liritzis et al. 1995; Bragato and Sugan 2014), as well as for volcanoes (Marzocchi et al. 2004). The theories proposed to explain the phenomenon are of different natures. Some models rely on earthquake-to-earthquake interaction through different mechanisms of stress transfer (Freed 2005). Among them, those based on post-seismic viscoelastic relaxation explain triggering at distances of the order of hundreds of kilometers with delays of a few years (Viti et al. 2003). Another class of models involves transient crustal deformation at the scale of entire plates or portions of plates (Kasahara 1979). Finally, other authors invoke mechanisms that are external to the lithosphere. Among these, I find particularly interesting those involving surface processes related to climate change (McGuire 2013). It is known that variations of the global temperature cause the movement of large masses, through atmospheric and oceanic circulation, sea-level rise, ice and snow melting, and inland water accumulation due to intense rainfall. Geophysical models, such as those by Hampel et al. (2010) and Luttrell and Sandwell (2010), show that the consequent change of the surface load could control faulting, either promoting or inhibiting earthquakes. Furthermore, similar processes seem to act on different time scales, ranging from seasonal to millennial [see Bragato (2015) for a short review on the subject]. For the case of Italy, I noted that the current seismic decrease runs in parallel with global warming, while the peak of seismicity around 1700 coincides with one of the coldest periods of the last millennium (Parker 2013).
On this basis, I investigated the statistical relationship between the occurrence of earthquakes and the time series of the global surface temperature (GST) reconstructed by Mann et al. (2008). I started with a graphical comparison and concluded with a quantitative assessment of the correlation using logistic regression. In Fig. 16, I traced the smoothed time density of Mw ≥ 6.1 earthquakes (declustered catalog) and the global temperature anomaly ∆T (the difference from the mean temperature in the reference period 1961–1990). The temperature (note the reversed y scale in the figure) has the well-known trend, with a warm phase (the Medieval Warm Period) lasting until about 1400, followed by a cold period (the Little Ice Age) that ends around 1800 and gives way to the global warming that we are still observing today. The bulk of seismicity follows the onset of the cold period with a delay of more than 150 years. I formally assessed the degree of anticorrelation using binomial logistic regression (Venables and Ripley 2002) on the complete part of the catalog (post 1530). I estimated a regression model that gives the probability of occurrence of an earthquake in year i based on the temperature observed di years before. I repeated the estimation for different values of di and chose the best-fitting one by minimizing the Akaike Information Criterion (AIC). For a given di, I considered the set of observations \(\{(x_{i-\mathrm{d}i}, y_i),\ i = 1530, \ldots, 2015\}\), where \(x_{i-\mathrm{d}i}\) is the temperature anomaly of year i − di, and \(y_i\) takes the value 1 if an earthquake occurred in year i and 0 otherwise. Binomial logistic regression assumes that the observations \(y_i\) are drawn from a set of independent Bernoulli random variables \(Y_i\), each taking the value 1 with probability \(p_i\).
Furthermore, the values of \(p_i\) depend on \(x_{i-\mathrm{d}i}\) through the logit transformation \(\mathrm{logit}(p_i) = a + b\,x_{i-\mathrm{d}i}\), where \(\mathrm{logit}(p_i) = \ln[p_i/(1 - p_i)]\). The estimated value of b and its significance indicate the degree of correlation between the two variables. I obtained the best fit for a time lag of 174 years, with a = −3.58 ± 0.57 and b = −3.06 ± 1.02. The parameter b is negative and significantly different from 0 at the 99% confidence level, which strongly supports the anticorrelation. I tested the robustness of the relationship by Monte Carlo simulation, perturbing magnitude and temperature according to the associated estimation errors [up to 0.15 °C in the reconstruction of temperature by Mann et al. (2008)]. I performed logistic regression on 1000 alternative samples, and in all cases b takes a negative value that is significant at the 99% confidence level. Logistic regression indicates that the hypothesis of anticorrelation is sufficiently well founded to deserve further investigation by geophysical modeling. As discussed previously, sea-level changes could play an important role in the relationship between climate change and the occurrence of earthquakes. In particular, the global warming of the last two centuries has been accompanied by (and has likely caused) a rise of the global sea level of 0.3 m (Jevrejeva et al. 2008), with possible influence on the stress state of the crust.

Comparison between the occurrence of Mw ≥ 6.1 earthquakes in Italy (kernel-smoothed density computed for h = 10 years, thin line with the variability band in gray) and the global surface temperature anomaly ∆T reconstructed by Mann et al. (2008) (dashed line). The temperature axis is reversed (increasing values downward) and the curve is shifted by 174 years to highlight the estimated anticorrelation with the earthquake density (see the text for details)

From the point of view of seismic hazard in Italy, the present analysis confirms that over the last century the country has been going through a period of decreasing earthquake activity. If the dependence on climate holds, this trend may continue in the future. On the other hand, the case of Italy demonstrates that significant shifts of the seismic regime can occur suddenly, within a few decades, and persist for centuries. Such changes, which act at a large spatial scale, are hardly predictable and, until now, have not been considered in hazard studies, either in Italy or elsewhere. It is also shown that wide and complex geological systems can behave as a whole at time scales of 10–100 years, which stresses the importance of regional and global studies even for short-term local analyses.

References

Albarello, D., Camassi, R., & Rebez, A. (2001). Detection of space and time heterogeneity in the completeness level of a seismic catalogue by a “robust” statistical approach: An application to the Italian area. Bulletin of the Seismological Society of America, 91, 1694–1703. doi:10.1785/0120000058.

Bath, M. (1984a). Correlation between regional and global seismic activity. Tectonophysics, 104, 187–194.

Bath, M. (1984b). Correlation between Greek and global seismic activity. Tectonophysics, 109, 345–351.

Bowman, A. W., & Azzalini, A. (1997). Applied smoothing techniques for data analysis. Oxford: Oxford University Press.

Bragato, P. L. (2014). Rate changes, premonitory quiescence, and synchronization of earthquakes in northern Italy and its surroundings. Seismological Research Letters, 85, 639–648. doi:10.1785/0220130139.

Bragato, P. L. (2015). Italian seismicity and Vesuvius’ eruptions synchronize on a quasi 60-year oscillation. Earth and Space Science. doi:10.1002/2014EA000030.

Bragato, P. L., & Sugan, M. (2014). Decreasing rate of M ≥ 7 earthquakes in the northern hemisphere since 1900. Seismological Research Letters, 85, 1234–1242. doi:10.1785/0220140111.

Console, R., Jackson, D. D., & Kagan, Y. Y. (2010). Using the ETAS model for catalog declustering and seismic background assessment. Pure and Applied Geophysics, 167, 819–830. doi:10.1007/s00024-010-0065-5.

Doss, H. (1989). On estimating the dependence between two point processes. Annals of Statistics, 17, 749–763.

Gardner, J. K., & Knopoff, L. (1974). Is the sequence of earthquakes in Southern California, with aftershocks removed, Poissonian? Bulletin of the Seismological Society of America, 64, 1363–1367.

Gasperini, P., Bernardini, F., Valensise, G., & Boschi, E. (1999). Defining seismogenic sources from historical earthquake felt reports. Bulletin of the Seismological Society of America, 89, 94–110.

Gavin, D. G., Sheng Hu, F., Lertzman, K., & Corbett, P. (2006). Weak climatic control of stand-scale fire history during the late Holocene in southeastern British Columbia. Ecology, 87, 1722–1732.

Gruppo di Lavoro CPTI. (2004). Catalogo Parametrico dei Terremoti Italiani, versione 2004 (CPTI04). Bologna, Italy: INGV. http://emidius.mi.ingv.it/CPTI/. Accessed July 2016.

Guidoboni, E., & Boschi, E. (2006). Vesuvius before the 1631 eruption. EOS, 87, 417–432.

Hampel, A., Hetzel, R., & Maniatis, G. (2010). Response of faults to climate-driven changes in ice and water volumes on Earth’s surface. Philosophical Transactions of the Royal Society A, 368, 2501–2517. doi:10.1098/rsta.2010.0031.

Italian Seismological Instrumental and Parametric DataBase (ISIDe) Working Group, Istituto Nazionale di Geofisica e Vulcanologia (INGV) (2010). Italian seismological instrumental and parametric database. http://iside.rm.ingv.it. Accessed July 2016.

Jevrejeva, S., Moore, J. C., Grinsted, A., & Woodworth, P. L. (2008). Recent global sea level acceleration started over 200 years ago? Geophysical Research Letters, 35, L08715. doi:10.1029/2008GL033611.

Kasahara, K. (1979). Migration of crustal deformation. Tectonophysics, 52, 329–341.

Liritzis, I., Diagourtas, D., & Makropoulos, C. (1995). A statistical reappraisal in the relationship between global and Greek seismic activity. Earth, Moon and Planets, 69, 69–86.

Luttrell, K., & Sandwell, D. (2010). Ocean loading effects on stress at near shore plate boundary fault systems. Journal of Geophysical Research, 115, B08411. doi:10.1029/2009JB006541.

Mann, M. E., Zhang, Z., Hughes, M. K., Bradley, R. S., Miller, S. K., Rutherford, S., et al. (2008). Proxy-based reconstructions of hemispheric and global surface temperature variations over the past two millennia. Proceedings of the National Academy of Sciences of the United States of America, 105, 13252–13257. doi:10.1073/pnas.0805721105.

Mantovani, E., Viti, M., Babbucci, D., Albarello, D., Cenni, N., & Vannucchi, A. (2010). Long-term earthquake triggering in the Southern and Northern Apennines. Journal of Seismology, 14, 53–65. doi:10.1007/s10950-008-9141-z.

Marzocchi, W., Scandone, R., & Mulargia, F. (1993). The tectonic setting of Mount Vesuvius and the correlation between its eruptions and the earthquakes of the Southern Apennines. Journal of Volcanology and Geothermal Research, 58, 27–41.

Marzocchi, W., Zaccarelli, L., & Boschi, E. (2004). Phenomenological evidence in favor of a remote seismic coupling for large volcanic eruptions. Geophysical Research Letters, 31, L04601. doi:10.1029/2003GL018709.

McGuire, B. (2013). Waking the giant: How a changing climate triggers earthquakes, tsunamis, and volcanoes. Oxford: Oxford University Press.

Meletti, C., Galadini, F., Valensise, G., Stucchi, M., Basili, R., Barba, S., et al. (2008). A seismic source zone model for the seismic hazard assessment of the Italian territory. Tectonophysics, 450, 85–108. doi:10.1016/j.tecto.2008.01.003.

Newhall, C. G., & Self, S. (1982). The Volcanic Explosivity Index (VEI): An estimate of explosive magnitude for historical volcanism. Journal of Geophysical Research, 87, 1231–1238. doi:10.1029/JC087iC02p01231.

Nostro, C., Stein, R., Cocco, M., Belardinelli, M. E., & Marzocchi, W. (1998). Two-way coupling between Vesuvius eruptions and southern Apennine earthquakes, Italy, by elastic stress transfer. Journal of Geophysical Research, 103, 24487–24504. doi:10.1029/98JB00902.

Parker, G. (2013). Global crisis: War, climate change and catastrophe in the seventeenth century. New Haven and London: Yale University Press.

Pondrelli, S., Salimbeni, S., Morelli, A., Ekström, G., Postpischl, L., Vannucci, G., et al. (2011). European–Mediterranean regional centroid moment tensor catalog: Solutions for 2005–2008. Physics of the Earth and Planetary Interiors, 185, 74–81. doi:10.1016/j.pepi.2011.01.007.

Principe, C., Tanguy, J. C., Arrighi, S., Paiotti, A., Le Goff, M., & Zoppi, U. (2004). Chronology of Vesuvius’ activity from A.D. 79 to 1631 based on archeomagnetism of lavas and historical sources. Bulletin of Volcanology, 66, 703–724. doi:10.1007/s00445-004-0348-8.

R Core Team (2012). R: a language and environment for statistical computing (R Foundation for Statistical Computing, Vienna, Austria). http://www.r-project.org. Accessed July 2016.

Ripley, B. D. (1977). Modeling spatial patterns. Journal of the Royal Statistical Society, Series B, 39, 172–212.

Rovida, A., Camassi, R., Gasperini, P., & Stucchi, M. (Eds.) (2011). CPTI11, the 2011 version of the parametric catalogue of Italian earthquakes (INGV, Milano, Bologna). doi:10.6092/INGV.IT-CPTI11.

Rovida, A., Camassi, R., & Stucchi, M. (2014). Temporal variations in the Italian seismicity of the 20th century, Second European conference on earthquake engineering and seismology, Istanbul, 25–29 August 2014. Session “Earthquakes of the past: present knowledge and future perspectives”, abstract #3278.

Scandone, R., Giacomelli, L., & Gasparini, P. (1993). Mount Vesuvius: 2000 years of volcanological observations. Journal of Volcanology and Geothermal Research, 58, 5–25.

Siebert, L., Simkin, T., & Kimberly, P. (2010). Volcanoes of the World (3rd ed.). Berkeley: University of California Press.

Slejko, D., Peruzza, L., & Rebez, A. (1998). Seismic hazard maps of Italy. Annales Geophysicae, 41, 183–214.

Stucchi, M., Albini, P., Mirto, C., & Rebez, A. (2004). Assessing the completeness of the Italian historical earthquake data. Annales Geophysicae, 47, 659–673.

Stucchi, M., Meletti, C., Montaldo, V., Crowley, H., Calvi, G. M., & Boschi, E. (2011). Seismic hazard assessment (2003–2009) for the Italian building code. Bulletin of the Seismological Society of America, 101, 1885–1911. doi:10.1785/0120100130.

Tsapanos, T. M., & Liritzis, I. (1992). Time-lag of the earthquake energy release between three seismic regions. Pure and Applied Geophysics, 139, 293–308.

Venables, W. N., & Ripley, B. D. (2002). Modern applied statistics with S (4th ed.). New York: Springer. ISBN 0-387-95457-0.

Viti, M., D’Onza, F., Mantovani, E., Albarello, D., & Cenni, N. (2003). Post-seismic relaxation and earthquake triggering in the southern Adriatic region. Geophysical Journal International, 153, 645–657.

Wessel, P., Smith, W. H. F., Scharroo, R., Luis, J. F., & Wobbe, F. (2013). Generic mapping tools: Improved version released. EOS Transactions AGU, 94, 409–410. doi:10.1002/2013EO450001.

Zeileis, A., Kleiber, C., & Jackman, S. (2008). Regression models for count data in R. Journal of Statistical Software, 27(8). doi:10.18637/jss.v027.i08.

Acknowledgements

This research was supported by Regione Friuli Venezia Giulia and Regione Veneto. I thank Monica Sugan for help in processing the seismic catalog. I also thank the Editor, Adrien Oth, and two anonymous reviewers for reading the paper and suggesting useful improvements. I had the opportunity to discuss the early version of this paper with my colleagues at OGS: Carla Barnaba, Paolo Fabris, Giorgio Durì, Umberta Tinivella, Laura Ursella, and David Zuliani. The analysis of synchronization between the activity of Vesuvius and the earthquakes in Italy was performed using the program K1D, version 1.2, by D. G. Gavin (http://geog.uoregon.edu/envchange/software.html, last accessed October 2016). All the figures were produced using the Generic Mapping Tools, version 5.1.1 (Wessel et al. 2013), available at http://www.soest.hawaii.edu/gmt (last accessed July 2016).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bragato, P.L. A Statistical Investigation on a Seismic Transient Occurred in Italy Between the 17th and 20th Centuries. Pure Appl. Geophys. 174, 907–923 (2017). https://doi.org/10.1007/s00024-016-1429-2

DOI: https://doi.org/10.1007/s00024-016-1429-2