Abstract

We present estimates of future earthquake rate density (probability per unit area, time, and magnitude) on a 0.1-degree grid for a region including California and Nevada, based only on data from past earthquakes. Our long-term forecast is not explicitly time-dependent, but it can be updated at any time to incorporate information from recent earthquakes. The present version, founded on several decades' worth of data, is suitable for testing without updating over a five-year period as part of the experiment conducted by the Collaboratory for Study of Earthquake Predictability (CSEP). The short-term forecast is meant to be updated daily and tested by CSEP against similar models. It includes a fraction of our long-term forecast plus time-dependent contributions from all previous earthquakes. Those contributions decrease with time according to the Omori law, that is, in proportion to the reciprocal of the elapsed time. Both forecasts estimate rate density using a radially symmetric spatial smoothing kernel that decreases approximately as the reciprocal of the square of epicentral distance, weighted according to the magnitude of each past earthquake. We made two versions of both the long- and short-term forecasts, one based on the Advanced National Seismic System (ANSS) catalog and one based on the Preliminary Determinations of Epicenters (PDE) catalog. The two versions are quite consistent, but for testing purposes we prefer those based on the ANSS catalog, since it covers a longer time interval, is complete to a lower magnitude threshold, and has more precise locations. Both forecasts apply to shallow earthquakes only (depth 25 km or less) and assume a tapered Gutenberg-Richter magnitude distribution extending down to a lower threshold of 4.0.


1 Introduction

Earthquake forecasts play an important role in hazard and risk estimation, in informed risk-management decisions, and in emergency response. California has a large vulnerable population, so forecasting has been researched intensively in that state. As a means of improving the forecasts, and perhaps more importantly of vetting the ideas behind them, a collaborative effort known as RELM, for “Regional Earthquake Likelihood Models,” was initiated in 2006. Published ideas used to gauge earthquake potential in California vary dramatically. They include assumptions that earthquakes occur primarily on known faults, near recent earthquakes, or where geodetic strain rate is highest; that fault length does or does not limit earthquake size; that large earthquakes behave similarly to, or differently from, smaller ones; and that large earthquakes deter, or make more likely, similar ones in the future. The importance of these assumptions has been highlighted in a series of earthquake probability reports (Working Group on Southern California Earthquake Probabilities, 1995; Working Group on California Earthquake Probabilities, 1990, 2003, 2009). These reports were intended to produce consensus, which could be reached only by including conflicting models with various weights. To help resolve the conflicts, the RELM project invited researchers to express their ideas as comprehensive forecasts of earthquake rate density on a pre-assigned grid for prospective testing. About a dozen such models were described in a special issue of Seismological Research Letters (SRL), with an introduction by Field (2007). A set of likelihood tests for assessing the models after one and then five years was described by Schorlemmer et al. (2007) and Schorlemmer and Gerstenberger (2007). While these tests are still underway, the project has been subsumed under a larger international program, the Collaboratory for Study of Earthquake Predictability (CSEP), which adapts the RELM philosophy and methods to other parts of the world and also tests short-term models that are updated daily. One short-term model (Gerstenberger et al., 2005), nicknamed STEP for “Short Term Earthquake Probability,” has been operating for a few years, and its forecasts are available on the USGS web site (http://earthquake.usgs.gov/eqcenter/step//).

Here we present long-term (5-year) and short-term (1-day) forecast models in the CSEP format with the intention that they will be tested against others in the CSEP project.

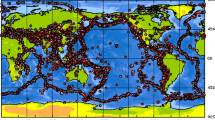

Our techniques have been described extensively in several of our publications (Kagan and Jackson, 1994, 2000, 2010; Jackson and Kagan, 1999). In those papers we used the Centroid Moment Tensor (CMT) earthquake catalog, described by Ekström et al. (2005), as our source of information on past earthquakes. That catalog has several advantages: uniform definitions and stable methodology since 1977, a seismic moment tensor for each event, and quite precise estimates of moment magnitude. Its completeness threshold has improved from about magnitude 5.8 at the beginning to about 5.4 now. In Figure 1 we display the CMT earthquakes in the California-Nevada region.

Our forecasts for two west Pacific regions (Kagan and Jackson, 1994, 2000, 2010; Jackson and Kagan, 1999) are subdivided into two programs: a long-term and a short-term forecast. In the former, we use the first part of a catalog to smooth the seismicity level and the second part to validate and optimize the prediction. In the short-term forecast, an Omori-law type of time dependence is used to extrapolate the earthquake rate into the future.
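The split-catalog idea in the long-term program can be illustrated with a toy calculation. The sketch below is a minimal illustration, not the procedure of the publications cited above: it smooths the epicenters of a first period onto a small Cartesian grid, scores the epicenters of a second period by the sum of the log probabilities of the cells they fall in, and keeps the smoothing scale $r_s$ that scores best. The kernel form 1/(r² + r_s²), the 100 km square grid, and the function names are assumptions made for illustration.

```python
import math


def cell_probabilities(train, r_s, size_km=100.0, n=20):
    """Smooth first-period epicenters (x, y in km) onto an n-by-n grid, normalized to sum 1.

    The kernel 1 / (r**2 + r_s**2) is an assumed form, evaluated at cell centers.
    """
    h = size_km / n
    grid = []
    for i in range(n):
        row = []
        for j in range(n):
            xc, yc = (i + 0.5) * h, (j + 0.5) * h
            row.append(sum(1.0 / ((xc - x) ** 2 + (yc - y) ** 2 + r_s ** 2) for x, y in train))
        grid.append(row)
    total = sum(sum(row) for row in grid)
    return [[v / total for v in row] for row in grid], h


def validation_log_likelihood(train, later, r_s, size_km=100.0, n=20):
    """Sum of log cell probabilities of the second-period epicenters."""
    probs, h = cell_probabilities(train, r_s, size_km, n)
    ll = 0.0
    for x, y in later:
        i = min(int(x // h), n - 1)
        j = min(int(y // h), n - 1)
        ll += math.log(probs[i][j])
    return ll


def best_smoothing_scale(train, later, candidates):
    """Candidate r_s whose smoothed first-period map best forecasts the second period."""
    return max(candidates, key=lambda r_s: validation_log_likelihood(train, later, r_s))
```

Small values of r_s concentrate probability near past epicenters, while large values penalize events far from earlier activity less; the split-catalog score balances the two.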

In those studies we also used the focal mechanisms to estimate the orientation of the fault systems controlling seismic behavior, which indicates a preferred direction for future earthquakes. However, the assumptions we made in that process hold reliably only in subduction zones, so focal mechanism information is less useful in regions such as California and Nevada, which are not subduction zones. Thus, for this work we have modified our procedures to allow use of the PDE (Preliminary Determinations of Epicenters, 2008) and ANSS (ANSS Catalog Search, 2008) catalogs, which have lower completeness thresholds but no focal mechanism reports. We then compared the resulting forecasts.

2 Catalogs

The ANSS composite is a worldwide earthquake catalog that merges the master earthquake catalogs from contributing ANSS institutions and removes duplicate or non-unique solutions for the same event (ANSS Catalog Search, 2008). The ANSS catalog for the California and Nevada region extends back to about 1800; however, reliable reporting of magnitude 4.0 earthquakes starts in 1932 in southern California (Hileman et al., 1973) and in 1942 in northern California and parts of Nevada (Bolt and Miller, 1975, p. 24). Preliminary results are usually available within a few minutes of each earthquake, and revisions are made as needed, sometimes months later.

The PDE worldwide catalog is published by the USGS (Preliminary Determinations of Epicenters, 2008). Locations and magnitudes are determined by analysis of data from a single global network. Despite its name, the PDE catalog is published in final form with a latency of a few months; at the time of writing, it was in final form through the end of 2007. A temporary version of the catalog, however, is usually available within a few minutes of each event.

The PDE catalog measures earthquake size using several magnitude scales, including the body-wave ($m_b$) and surface-wave ($M_S$) magnitudes, reported for most moderate and large events since 1965 and 1968, respectively. The moment magnitude ($m_w$) estimate has been added recently. For our purposes the PDE catalog is useful from 1969 through 2008/08/01, with a completeness magnitude of about 4.7. Forecasting and testing would be improved if there were one standard magnitude, but no single magnitude is reported consistently for all relevant events in the PDE catalog. Kagan (1991) experimented with a weighted average of several magnitudes to estimate one standard magnitude, but the results were inconclusive. In this work we decided to use the maximum magnitude among those reported for each earthquake. For moderate earthquakes this is usually $m_b$ or $M_S$, while for larger recent earthquakes it is most likely $m_w$.
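The maximum-magnitude rule can be written in a few lines. This is a minimal sketch that assumes each event's reported magnitudes arrive as a dictionary keyed by scale labels such as 'mb', 'MS' and 'mw'; the labels and function names are hypothetical and do not reflect the PDE file format.

```python
from typing import Optional, Tuple


def preferred_magnitude(reported: dict) -> Optional[Tuple[str, float]]:
    """Return (scale, value) for the largest reported magnitude, or None if none is reported.

    reported maps a hypothetical scale label (e.g. 'mb', 'MS', 'mw') to its value.
    """
    if not reported:
        return None
    scale = max(reported, key=reported.get)
    return scale, reported[scale]


def above_threshold(reported: dict, m_min: float = 4.7) -> bool:
    """Keep an event if its preferred (maximum) magnitude reaches the completeness level."""
    best = preferred_magnitude(reported)
    return best is not None and best[1] >= m_min
```

For example, an event reported with mb 4.9 and MS 4.6 is assigned ('mb', 4.9) and passes the 4.7 threshold.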

The ANSS catalog has some advantages over the PDE. It covers a longer time interval and has a lower magnitude threshold, so it contains more events in any particular region. ANSS locations are generally more accurate because they are based on local seismic networks, while PDE locations are based on a sparser global network. However, ANSS has the disadvantage that its magnitudes are not defined consistently, because the catalog is a composite of data from several networks; for this reason, its magnitude estimates are less accurate. The inconsistencies in magnitude determination are less severe in California and Nevada than elsewhere, because the three relevant networks supplying data to ANSS have been standardized to a certain extent. The advantages of the PDE catalog for our purposes are that its magnitudes are more consistent, as they are determined from a single global network, and that the catalog is not altered after publication in final form. Because both catalogs have advantages, we made separate forecasts from each before settling on a choice for use in the CSEP tests.

We computed rate density within a “test area” with latitude limits from 31.5°N to 43.0°N and longitude limits from 113.0°W to 125.0°W. This window encloses the California polygon proposed by Schorlemmer and Gerstenberger (2007, Table 2) for the RELM forecast testing. Our calculations show that earthquakes as far as 500 km outside the test window can influence the seismicity inside it. We therefore compiled and analyzed earthquakes within a larger “data region,” whose latitude and longitude bounds lie 5 degrees outside those of the test region. We checked the resulting subcatalogs for duplicate solutions and missing earthquakes. Within the test region there are 569 m ≥ 4.7 events in the PDE subcatalog and 4497 m ≥ 4.0 events in the ANSS subcatalog. The subcatalogs begin on 1932/01/01 (ANSS) and 1969/01/01 (PDE); both end on 2008/08/01.
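The region selection described above can be sketched as follows. The event-tuple layout (time in seconds, latitude, longitude, depth, magnitude), the use of negative longitudes for degrees west, the function names, and the duplicate-detection tolerances (10 s, 0.1°) are hypothetical choices for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Latitude/longitude rectangle; longitudes in degrees east (west is negative)."""
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float

    def contains(self, lat: float, lon: float) -> bool:
        return self.lat_min <= lat <= self.lat_max and self.lon_min <= lon <= self.lon_max

    def expanded(self, margin_deg: float) -> "Box":
        return Box(self.lat_min - margin_deg, self.lat_max + margin_deg,
                   self.lon_min - margin_deg, self.lon_max + margin_deg)


TEST_REGION = Box(31.5, 43.0, -125.0, -113.0)
DATA_REGION = TEST_REGION.expanded(5.0)  # 5-degree margin on every side


def select(events, region, m_min, max_depth_km=25.0):
    """Keep shallow events inside region at or above m_min.

    events: (time_s, lat, lon, depth_km, magnitude) tuples, a hypothetical layout.
    """
    return [e for e in events
            if region.contains(e[1], e[2]) and e[3] <= max_depth_km and e[4] >= m_min]


def possible_duplicates(events, max_dt_s=10.0, max_ddeg=0.1):
    """Flag time-adjacent pairs that are also close in space (hypothetical tolerances)."""
    ordered = sorted(events, key=lambda e: e[0])
    return [(a, b) for a, b in zip(ordered, ordered[1:])
            if b[0] - a[0] <= max_dt_s
            and abs(b[1] - a[1]) <= max_ddeg and abs(b[2] - a[2]) <= max_ddeg]
```

An event 2° south of the test area is kept in the data region but not in the test region, and any event deeper than 25 km is dropped from both.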

3 Forecast

We have developed a long-term forecast procedure based on earthquake data alone (Kagan and Jackson, 1994, 2000, 2010). The procedure smooths the locations of past earthquakes. The degree of spatial smoothing is controlled by a radially symmetric kernel function that decreases approximately as the reciprocal of the squared distance,

$$f(r) \propto \frac{1}{r^2 + r_s^2},$$

where $r$ is the epicentroid distance and $r_s$ is the scale parameter.

In our previous investigations we optimized $r_s$ so that smoothing the first part of a catalog best predicted the second part (Kagan and Jackson, 2010). Here we apply the same value, $r_s = 5$ km, to both catalogs, using an isotropic kernel function. The rate density is calculated on a 0.1° × 0.1° grid for shallow earthquakes (depth 25 km or less); the forecast is thus estimated on a 125 × 117 grid.
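Magnitude-weighted kernel smoothing on a latitude-longitude grid can be sketched as follows. The sketch assumes the kernel form 1/(r² + r_s²), consistent with the approximately inverse-square decay described in the abstract, measures r as great-circle distance, and uses a hypothetical linear magnitude weight 1 + (m − m_t); the weighting actually used in the forecasts is not reproduced here. The map is left unnormalized, so it gives relative rates only.

```python
import math

EARTH_RADIUS_KM = 6371.0


def epicentral_distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two epicenters (haversine formula, spherical Earth)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def smoothed_rate_map(events, lats, lons, r_s_km=5.0, m_t=4.0):
    """Relative (unnormalized) long-term rate at each grid node.

    events: list of (lat, lon, magnitude) for past shallow earthquakes.
    Kernel 1 / (r**2 + r_s**2) is an assumed form; the weight 1 + (m - m_t)
    is a hypothetical placeholder, not the weighting used in the forecasts.
    """
    grid = []
    for lat in lats:
        row = []
        for lon in lons:
            total = 0.0
            for e_lat, e_lon, m in events:
                r = epicentral_distance_km(lat, lon, e_lat, e_lon)
                total += (1.0 + (m - m_t)) / (r * r + r_s_km * r_s_km)
            row.append(total)
        grid.append(row)
    return grid
```

For a single m 5.4 event at a grid node, the map peaks at that node, where r = 0 and the value is (1 + 1.4)/5² = 0.096. A practical implementation on the full grid might limit the kernel to some maximum distance to save time; that cutoff would be an additional assumption.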

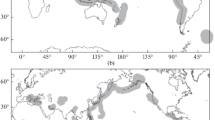

Figures 2 and 3 show the time-independent forecasts for California and Nevada, based on the PDE catalog and on the ANSS catalog, respectively. The maps are similar in appearance; any differences are due to the lower magnitude threshold and longer duration of the ANSS catalog.

Figure 2. Long-term seismicity forecast for California, Nevada and surrounding areas. Colors show the rates of shallow earthquake occurrence calculated from the PDE catalog, 1969 to 2008/08/01. The forecast window is the same as in Figure 1.

Figure 3. Long-term seismicity forecast for California, Nevada and surrounding areas. Colors show the rates of shallow earthquake occurrence calculated from the ANSS catalog, 1932 to 2008/08/01. The forecast window is the same as in Figure 1.

The short-term forecast in this work follows the technique described by Kagan and Jackson (2000, Section 3.1.2; see also Kagan and Knopoff, 1987). Using the branching model (ibid.), we obtained a likelihood function for the earthquake process and compared it with the likelihood function of a spatially inhomogeneous Poisson model of seismicity. We estimated the model parameters (Kagan et al., 2010) by maximizing the likelihood ratio of the two models with a Newton-Raphson technique.

For the PDE forecast we used the parameter values obtained from the likelihood function search (Kagan et al., 2010, Table 5, m ≥ 4.7, obtained for ‘Active Continent’ zones): the branching coefficient μ = 0.152, the parent productivity exponent a = 0.64, the time decay exponent θ = 0.18, and the horizontal location error $\varepsilon_\rho$ = 11.9 km.

For the forecast based on the ANSS catalog we used the parameter values obtained from the likelihood function search (Kagan et al., 2010, Table 7, m ≥ 4.0): μ = 0.172, a = 0.64, θ = 0.22, $\varepsilon_\rho$ = 6.08 km. The earthquake size distribution is assumed to follow the tapered Gutenberg-Richter law with corner moment $M_c = 1.16 \times 10^{21}$ N m ($m_w \approx 8.05$) and power-law exponent β = 0.65 for the seismic moment distribution (Bird and Kagan, 2004, Table 5).
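The tapered Gutenberg-Richter law is commonly written for scalar seismic moment $M$ as the survival function $\Phi(M) = (M_t/M)^{\beta} \exp[(M_t - M)/M_c]$ for $M \ge M_t$, where $M_t$ is the threshold moment: a power law with exponent β that is tapered exponentially near the corner moment $M_c$. The sketch below evaluates that form with β = 0.65 and corner magnitude 8.05; the magnitude-to-moment conversion $m = \tfrac{2}{3}\log_{10} M - 6.0$ (M in N m) and the function names are assumptions.

```python
import math


def magnitude_to_moment(m):
    """Scalar seismic moment in N m, assuming m = (2/3) * log10(M) - 6.0."""
    return 10.0 ** (1.5 * (m + 6.0))


def tapered_gr_survival(m, m_t=4.0, beta=0.65, m_corner=8.05):
    """Fraction of events at or above threshold m_t that also reach magnitude m (m >= m_t)."""
    M = magnitude_to_moment(m)
    M_t = magnitude_to_moment(m_t)
    M_c = magnitude_to_moment(m_corner)
    # Power-law decay in moment, multiplied by an exponential taper near the corner moment.
    return (M_t / M) ** beta * math.exp((M_t - M) / M_c)
```

With this conversion the corner magnitude 8.05 corresponds to a moment of about 1.2 × 10²¹ N m, and tapered_gr_survival(4.7) is about 0.208, so roughly one in five m ≥ 4.0 events is expected to reach m 4.7.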

In our forecasts we use combined final and temporary (preliminary) ANSS and PDE catalogs, updated every few days or weeks. Figures 4 and 5 display the short-term forecast for California and Nevada, based on the PDE and ANSS catalogs, respectively. The map for the PDE forecast is smoother because PDE location errors are larger than ANSS ones. These location errors ($\varepsilon_\rho$) describe the spread of triggered events in the forecasting procedure.
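The structure of the short-term rate, a fraction of the long-term rate plus decaying contributions from past events, can be sketched as below. This is a generic branching-type illustration under stated assumptions, not the exact likelihood model of Kagan and Jackson (2000) or Kagan et al. (2010): the Omori-type decay θ t₀^θ / t^(1+θ), the minimum time t₀ = 0.01 day, the Gaussian spatial spread with standard deviation $\varepsilon_\rho$, the productivity form k·10^(a(m − m_t)) and the constant k are all assumptions; only a, θ and $\varepsilon_\rho$ take the ANSS values quoted above.

```python
import math


def omori_density(dt_days, theta=0.22, t0_days=0.01):
    """Normalized Omori-type decay theta * t0**theta / dt**(1 + theta), zero before t0 (assumed form)."""
    if dt_days < t0_days:
        return 0.0
    return theta * t0_days ** theta / dt_days ** (1.0 + theta)


def spread_density(r_km, eps_km=6.08):
    """Isotropic 2-D Gaussian density per km^2 with standard deviation eps_km (assumed shape)."""
    return math.exp(-0.5 * (r_km / eps_km) ** 2) / (2.0 * math.pi * eps_km ** 2)


def short_term_rate(t_days, background, past, k=0.1, a=0.64, m_t=4.0,
                    theta=0.22, eps_km=6.08):
    """Rate density (events per day per km^2) at one grid site.

    background: the fraction of the long-term rate density used at this site.
    past: list of (t_i in days, distance in km from event i to the site, magnitude).
    k: hypothetical productivity constant, not a value from this paper.
    """
    rate = background
    for t_i, r_km, m in past:
        productivity = k * 10 ** (a * (m - m_t))  # triggered rate grows with parent magnitude
        rate += productivity * omori_density(t_days - t_i, theta) * spread_density(r_km, eps_km)
    return rate
```

With θ = 0.22, the contribution of an event one day after it occurred is 10^1.22, about 16.6 times its contribution after ten days. Substituting ε_ρ = 11.9 km for 6.08 km spreads each contribution more widely, in line with the smoother PDE map; a heavier-tailed spatial kernel would be an equally plausible assumption.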

Figure 4. Short-term seismicity forecast for California, Nevada and surrounding areas. Colors show the rates of shallow earthquake occurrence calculated from the PDE catalog, 1969 to 2008/08/01. The forecast window is the same as in Figure 1.

Figure 5. Short-term seismicity forecast for California, Nevada and surrounding areas. Colors show the rates of shallow earthquake occurrence calculated from the ANSS catalog, 1932 to 2008/08/01. The forecast window is the same as in Figure 1.

In Table 1 we display the forecast for the area surrounding the recent Chino Hills, CA earthquake (2008/07/29 m 5.4, Hauksson et al., 2008). According to the PDE temporary catalog, its coordinates were 33.734°N, 117.906°W, depth 13 km. The ANSS coordinates are 33.953°N, 117.7613°W, depth 14.7 km. We expect the ANSS location to be more accurate because it is determined from a denser, local network.
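The size of the discrepancy between the two Chino Hills locations can be checked with a great-circle (haversine) distance, assuming a spherical Earth of radius 6371 km; the function name is illustrative.

```python
import math


def epicentral_distance_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle distance by the haversine formula on a spherical Earth."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


pde = (33.734, -117.906)     # PDE temporary-catalog epicenter (west longitude negative)
anss = (33.953, -117.7613)   # ANSS epicenter
offset_km = epicentral_distance_km(*pde, *anss)
print(f"PDE-ANSS epicenter offset: {offset_km:.1f} km")
```

The offset is roughly 28 km, more than twice the PDE location-error parameter ε_ρ = 11.9 km.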

In the short-term forecast maps (Figs. 4 and 5), red spots in the eastern Los Angeles area correspond to the increase in earthquake rate caused by the Chino Hills event. The patch on the PDE map is more extended because earthquake locations in that catalog are less accurate.

The long-term forecasts (Table 1, columns 3 and 6) are similar once the different magnitude thresholds ($m_t$ = 4.7 for the PDE and $m_t$ = 4.0 for the ANSS catalog) and the slightly different epicentral coordinates (see above) are taken into account. The sum of the long-term earthquake rates is $78.7 \times 10^{-9}$ eq day$^{-1}$ km$^{-2}$ for the PDE catalog and $339 \times 10^{-9}$ eq day$^{-1}$ km$^{-2}$ for the ANSS catalog. The ratio of the two sums is 4.3; to compare them, however, we must account for the different magnitude thresholds of the two forecasts.

Although the b values for magnitudes in the ANSS and PDE catalogs may differ from the parameters obtained from seismic moment tensors (Bird and Kagan, 2004), if we use $b = 1.5\beta = 1.5 \times 0.65 = 0.975$, the ratio above approximately equals that expected from the Gutenberg-Richter relation: $10^{b \times 0.7} = 10^{0.68} \approx 4.81$. Similarly, the short-term rates (columns 4 and 7) differ by a factor of 4.51.
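The threshold correction can be reproduced with a few lines of arithmetic, using only the numbers quoted above; the variable names are illustrative.

```python
beta = 0.65                      # moment-distribution exponent (Bird and Kagan, 2004)
b = 1.5 * beta                   # corresponding magnitude b value
delta_m = 4.7 - 4.0              # PDE threshold minus ANSS threshold
expected_ratio = 10 ** (b * delta_m)
observed_long_term = 339 / 78.7  # ANSS / PDE long-term rate sums (Table 1)
observed_short_term = 4.51       # ratio quoted in the text for the short-term rates

print(f"b = {b:.3f}")
print(f"expected {expected_ratio:.2f}, long-term {observed_long_term:.2f}, "
      f"short-term {observed_short_term:.2f}")
```

The long-term ratio (about 4.31) and the short-term ratio (4.51) both fall somewhat below the 4.81 expected from the Gutenberg-Richter relation with b = 0.975.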

The experimental short- and long-term forecasts for California and Nevada based on the ANSS catalog are implemented at our Web site http://scec.ess.ucla.edu/~ykagan/cnpred_index.html. We update the forecasts every day around midnight Los Angeles time. To make our forecast compatible with other forecasts tested by CSEP, we have slightly modified the forecast window and the magnitude threshold: the latitude limits are 31.45°N to 43.05°N, the longitude limits are 113.05°W to 125.45°W, and the magnitude cutoff is 3.95.
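Assigning an epicenter to a cell of the CSEP window can be sketched as follows. The sketch assumes 0.1° cells with edges at the window limits, longitudes in degrees east (negative to the west), and half-open cells that include their southern and western edges; these conventions and the function names are assumptions, not the CSEP specification. The 3.95 cutoff is treated simply as a lower bound on catalog magnitude, which admits events reported as m 4.0 even with floating-point rounding.

```python
import math

LAT_MIN, LAT_MAX = 31.45, 43.05
LON_MIN, LON_MAX = -125.45, -113.05   # degrees east; western longitudes are negative
CELL_DEG = 0.1
M_CUTOFF = 3.95

N_LAT = round((LAT_MAX - LAT_MIN) / CELL_DEG)   # number of latitude cells
N_LON = round((LON_MAX - LON_MIN) / CELL_DEG)   # number of longitude cells


def cell_index(lat, lon):
    """(row, col) of the 0.1-degree cell containing an epicenter, or None outside the window."""
    if not (LAT_MIN <= lat < LAT_MAX and LON_MIN <= lon < LON_MAX):
        return None
    # min() guards against floating-point error pushing an index past the last cell.
    row = min(math.floor((lat - LAT_MIN) / CELL_DEG), N_LAT - 1)
    col = min(math.floor((lon - LON_MIN) / CELL_DEG), N_LON - 1)
    return row, col


def counts_toward_forecast(magnitude):
    """Apply the 3.95 magnitude cutoff."""
    return magnitude >= M_CUTOFF
```

Under these conventions the window holds 116 × 124 cells, which corresponds to 117 × 125 nodes at 0.1° spacing, and the ANSS Chino Hills epicenter falls in cell (25, 76).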

4 Discussion

Several long-term earthquake forecasts for California and southern California have been published recently (Bird and Liu, 2007; Shen et al., 2007; Helmstetter et al., 2007; Kagan et al., 2007). The Bird and Liu (2007) and Shen et al. (2007) forecasts are based on geodetic, geologic and tectonic measurements. They translated tectonic deformation into earthquake rates using the earthquake size distribution for continental transform boundaries obtained by Bird and Kagan (2004). Helmstetter et al. (2007) and Kagan et al. (2007) estimated earthquake rates using essentially the same technique as in this paper: smoothing past seismicity. Helmstetter et al. (2007) used the locations of small (m ≥ 2.0) earthquakes from the ANSS catalog for the period 1981/01/01–2005/08/23. In contrast, Kagan et al. (2007) smoothed the locations of large and moderate (m ≥ 5.0) historical and instrumental events starting in 1800 to obtain a forecast map for southern California. Moreover, they replaced each earthquake of magnitude 6.5 and larger with an extended source representation: the whole rupture area is used in the forecast calculation.

How can we compare these and the other forecasts collected in the special issue of SRL (Field, 2007) with the present forecast? A forecast based on tectonic deformation would most likely perform best over very long time intervals, whereas shorter-term earthquake clustering effects, such as aftershocks, would not be forecast effectively. Similarly, the forecast based on historical data (Kagan et al., 2007) can be expected to perform less skillfully over shorter time intervals. We would expect the forecast by Helmstetter et al. (2007), which has high spatial resolution and an extensive data set of small earthquakes, to perform best in the short term. In this respect, the present forecast would fill the gap between these two approaches. Kagan and Jackson (1994) conjectured that a forecast based on past earthquakes would be effective at time scales comparable to the length of the catalog used in smoothing. However, the time intervals over which each of these forecasts has its greatest predictive skill are not known. The CSEP testing project (Field, 2007) should help us evaluate these bounds quantitatively.

5 Conclusions

Forecasts based on the ANSS and PDE catalogs give very similar results for earthquake rate density. We prefer the ANSS forecast because the ANSS catalog has a longer history, a lower magnitude threshold and greater location accuracy than the PDE. Magnitude inconsistencies still present problems in both catalogs; for example, local and body-wave magnitudes cannot be converted accurately to moment magnitudes. However, these problems do not seem to influence the forecasts severely. Differences between our smoothed-seismicity forecasts and fault-based ones, for instance, will dwarf any variations caused by magnitude inconsistencies.

The forecasts presented here are ready for testing by CSEP. The long-term forecast is based on earthquake data since 1932, and the rate density estimates vary little from year to year. Thus, the rate densities can be treated as constant in time for the duration of a five-year test. The short-term forecast is implemented by an open-source computer program with standard earthquake catalog input. It can be automatically updated on a daily basis in response to evolving aftershock sequences or other rapid changes in seismicity.

References

Advanced National Seismic System (ANSS) Catalog Search (2008), Northern California Earthquake Data Center (NCEDC), http://www.ncedc.org/anss/catalog-search.html.

Bird, P. and Kagan, Y. Y. (2004), Plate-tectonic analysis of shallow seismicity: Apparent boundary width, beta, corner magnitude, coupled lithosphere thickness, and coupling in seven tectonic settings, Bull. Seism. Soc. Am. 94(6), 2380–2399 (plus electronic supplement).

Bird, P. and Liu, Z. (2007), Seismic hazard inferred from tectonics: California, Seism. Res. Lett. 78(1), 37–48.

Bolt, B. A., and Miller, R. D. (1975), Catalogue of Earthquakes in Northern California and Adjoining Areas; 1 January 1910 – 31 December 1972, Seismographic Stations, Berkeley, Calif., 567 pp.

Ekström, G., Dziewonski, A. M., Maternovskaya, N. N., and Nettles, M. (2005), Global seismicity of 2003: Centroid-moment-tensor solutions for 1087 earthquakes, Phys. Earth Planet. Inter. 148(2–4), 327–351.

Field, E. H. (2007), Overview of the Working Group for the Development of Regional Earthquake Likelihood Models (RELM), Seism. Res. Lett. 78(1), 7–16.

Gerstenberger, M. C., Wiemer, S., Jones, L. M., and Reasenberg, P. A. (2005), Real-time forecasts of tomorrow’s earthquakes in California, Nature 435, 328–331, doi:10.1038/nature03622.

Hauksson, E., Felzer, K., Given, D., Giveon, M., Hough, S., Hutton, K., Kanamori, H., Sevilgen, V., Wei, S., and Yong, A. (2008), Preliminary Report on the 29 July 2008 Mw 5.4 Chino Hills, Eastern Los Angeles Basin, California, Earthquake Sequence, Seism. Res. Lett. 79(6), 855–866.

Helmstetter, A., Kagan, Y. Y., and Jackson, D. D. (2007), High-resolution time-independent grid-based forecast for M ≥ 5 earthquakes in California, Seism. Res. Lett. 78(1), 78–86.

Hileman, J. A., Allen, C. R., and Nordquist, J. M. (1973), Seismicity of the Southern California Region, 1 January 1932 to 31 December 1972, Cal. Inst. Technology, Pasadena.

Jackson, D. D. and Kagan, Y. Y. (1999), Testable earthquake forecasts for 1999, Seism. Res. Lett. 70(4), 393–403.

Kagan, Y. Y. (1991), Likelihood analysis of earthquake catalogues, Geophys. J. Int. 106(1), 135–148.

Kagan, Y. Y., Bird, P., and Jackson, D. D. (2010), Earthquake patterns in diverse tectonic zones of the Globe, Pure Appl. Geophys., this issue.

Kagan, Y. Y. and Jackson, D. D. (1994), Long-term probabilistic forecasting of earthquakes, J. Geophys. Res. 99(B7), 13,685–13,700.

Kagan, Y. Y. and Jackson, D. D. (2000), Probabilistic forecasting of earthquakes, Geophys. J. Int. 143(2), 438–453.

Kagan, Y. Y. and Jackson, D. D. (2010), Earthquake forecasting in diverse tectonic zones of the Globe, Pure Appl. Geophys., this issue.

Kagan, Y. Y., Jackson, D. D., and Rong, Y. F. (2007), A testable five-year forecast of moderate and large earthquakes in southern California based on smoothed seismicity, Seism. Res. Lett. 78(1), 94–98.

Kagan, Y. Y. and Knopoff, L. (1987), Statistical short-term earthquake prediction, Science 236(4808), 1563–1567.

Preliminary Determinations of Epicenters (PDE) (2008), U.S. Geological Survey, U.S. Dep. of Inter., Natl. Earthquake Inf. Cent., http://neic.usgs.gov/neis/epic/epic.html and http://neic.usgs.gov/neis/epic/code_catalog.html.

Schorlemmer, D. and Gerstenberger, M. C. (2007), RELM Testing Center, Seism. Res. Lett. 78(1), 30–35.

Schorlemmer, D., Gerstenberger, M. C., Wiemer, S., Jackson, D. D., and Rhoades, D. A. (2007), Earthquake likelihood model testing, Seism. Res. Lett. 78(1), 17–29.

Shen, Z.-K., Jackson, D. D., and Kagan, Y. Y. (2007), Implications of geodetic strain rate for future earthquakes, with a five-year forecast of M 5 earthquakes in southern California, Seism. Res. Lett. 78(1), 116–120.

Working Group on California Earthquake Probabilities (WGCEP) (1990), Probabilities of large earthquakes in the San Francisco Bay Region, California, USGS Circular 1053, 51 pp.

Working Group on Southern California Earthquake Probabilities (WGCEP) (1995), Jackson, D. D., Aki, K., Cornell, C. A., Dieterich, J. H., Henyey, T. L., Mahdyiar, M., Schwartz, D., and Ward, S. N., Seismic hazards in southern California: Probable earthquakes, 1994-2024, Bull. Seism. Soc. Am. 85, 379–439.

Working Group on California Earthquake Probabilities (WGCEP) (2003), Earthquake probabilities in the San Francisco Bay region: 2002 to 2031, USGS, Open-file Rept. 03-214.

Working Group on California Earthquake Probabilities (WGCEP) (2009), Field, E. H., Dawson, T.E., Felzer, K. R., Frankel, A. D., Gupta, V., Jordan, T. H., Parsons, T., Petersen, M. D., Stein, R. S., Weldon II, R. J., and Wills, C. J., Uniform California earthquake rupture forecast, Version 2 (UCERF 2), Bull. Seism. Soc. Am. 99, 2053–2107.

Acknowledgments

The authors appreciate support from the National Science Foundation through grants EAR 04-09890 and EAR-0711515, as well as from the Southern California Earthquake Center (SCEC). SCEC is funded by NSF Cooperative Agreement EAR-0529922 and U.S. Geological Survey (USGS) Cooperative Agreement 07HQAG0008. We thank Kathleen Jackson for significant improvements in the text. Comments by M. Murru, an anonymous reviewer and the Associate Editor D. Rhoades have been helpful in the revision of the manuscript. Publication 1246, SCEC.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.


Cite this article

Kagan, Y.Y., Jackson, D.D. Short- and Long-Term Earthquake Forecasts for California and Nevada. Pure Appl. Geophys. 167, 685–692 (2010). https://doi.org/10.1007/s00024-010-0073-5


DOI: https://doi.org/10.1007/s00024-010-0073-5