Abstract

Background

High-resolution Digital Image Correlation (DIC) measurements have previously been produced by stitching of neighboring images, which often requires short working distances. Separately, the image processing community has developed super resolution (SR) imaging techniques, which improve resolution by combining multiple overlapping images.

Objective

This work investigates the novel pairing of super resolution with digital image correlation, as an alternative method to produce high-resolution full-field strain measurements.

Methods

First, an image reconstruction test is performed, comparing the ability of three previously published SR algorithms to replicate a high-resolution image. Second, an applied translation is compared against DIC measurement using both low- and super-resolution images. Third, a ring sample is mechanically deformed and DIC strain measurements from low- and super-resolution images are compared.

Results

SR measurements show improvements compared to low-resolution images, although they do not perfectly replicate the high-resolution image. SR-DIC demonstrates reduced error and improved confidence in measuring rigid body translation when compared to low resolution alternatives, and it also shows improvement in spatial resolution for strain measurements of ring deformation.

Conclusions

Super resolution imaging can be effectively paired with Digital Image Correlation, offering improved spatial resolution, reduced error, and increased measurement confidence.

Introduction

Digital Image Correlation (DIC) is a non-contacting technique used to examine localized strain across a material’s surface [1]. By comparing images of a sample before, during, and after load application, DIC can calculate surface deformation and strain at any point which is visible to cameras. This method allows for measurements to be taken at a variety of length scales without a loss of quality in the measurement for different physical scales [2].

Although many physical length scales can be used, the resolution of the images used to compute deformation remains a limiting factor for DIC measurements. DIC can detect sub-pixel magnitudes of displacement [3], but the measurements are computed using subsets of pixels, thus averaging each ‘pointwise’ displacement measurement over the area of each subset. In addition, strains are computed from multiple subset displacements, averaging the strain measurement over an even larger area [4] and causing undesirable spatial ‘smoothing’ of measurements [5]. Due to the number of pixels necessary for a single strain data point, the physical size of the subsets used has a direct impact on the spatial smoothing of the measurement. To maximize the benefits of the full-field measurements from DIC compared to single averaged measurements from strain gauges, higher resolution images allow for smaller physical subset sizes which produce each “pointwise” measurement. This is especially true when it is necessary to perform DIC over small regions of interest.

To address the need for improved spatial resolution, some researchers have improved image resolution by removing a sample from an experiment’s controlled environment to take DIC images under an optical microscope before and after deformation [6]. Similarly, even higher-resolution DIC images have been captured with a scanning electron microscope (SEM) [7, 8]. Such microscopy techniques enable measurements at the nanometer length scale, improving the spatial resolution. However, this added resolution in a single image often comes with a reduced field of view. To overcome the issue of small fields of view, images with adjacent fields of view can be stitched together to create one single image. At the optical scale, researchers have stitched together multiple images from optical microscopes in order to compare DIC results with grain microstructure [9, 10]. Stitching has also been demonstrated at the SEM scale [11, 12], allowing even higher resolutions. The result is higher spatial resolution, with DIC subsets covering smaller physical areas, while capturing large fields of view.

However, such high-resolution, large field-of-view methods face two key limitations. First, microscopy-based experiments must either be (a) conducted under operating conditions that are survivable by the imaging equipment or (b) performed ex-situ, removing the sample from the experiment’s environment. At room temperature, specialized SEM setups have been developed that allow in-situ images [13, 14, 15], whereas high-temperature applications have traditionally utilized ex-situ measurements [16]. The second limitation stems from the working distance. Previous experiments have been performed using microscopes that have very short working distances, making stitching ill-suited for environmental chambers and long-range optics. High-magnification imaging with low-resolution cameras has facilitated in-situ DIC of small fields of view at elevated temperatures, but requires a specially fitted optical microscope heating stage [17]. Similarly, long-range optics have allowed for high-resolution DIC measurements in environmental chambers, but without accommodation for large fields of view [18]. The challenge therefore remains that high-magnification in-situ measurements with full fields of view are limited by the working distance of the optics.

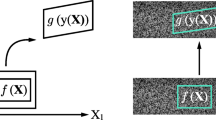

As an alternate approach, Super Resolution (SR) imaging techniques may produce images of sufficiently high resolution while maintaining larger fields of view and long working distances. Super resolution is a post-processing technique to combine multiple overlapping low resolution (LR) images to produce a high resolution (HR) image in a region common to all images [19]. Image stitching and SR are compared schematically in Fig. 1. While both processes are shown to yield similarly increased resolution, image stitching is often done ex situ (or occasionally under an environmental microscope), whereas SR could theoretically improve the process by allowing the LR images to be taken in situ and at longer working distances (although the HR images still require post-processing).

This super resolution post processing of images has been a growing field over the last several decades. The basic principles were initially studied as early as 1974 as a mathematical method to improve images beyond resolutions otherwise limited by the diffraction limit of light, and reduce effects of blurring in images [20]. In 1984, Tsai and Huang first applied some of these principles for creating higher-resolution images from multiple frames [21]. Early applications ranged from improving resolution of emission spectra images in biochemistry [22] to overcoming the quality-reducing effect of atmospheric turbulence in telescopes [23]. As potential applications for super resolution imaging surfaced, computing ability also improved, leading to several advances in SR taking place in recent years. Emerging applications in other fields include retinal imaging [24], other telepathology [25], and improved smartphone cameras [26]. In the case of smartphones, SR techniques have allowed camera resolution to approach that of traditional cameras such as DSLRs by overcoming current sensor, pixel, aperture, and other hardware limitations. Some variations of super-resolution processes use the techniques to improve a single low-resolution or blurry image [27], compared to traditional applications which use multiple images with overlapping fields of view to improve accuracy [28]. Among the newest advances in super-resolution are the utilization of machine learning, comparing known low- and high-resolution image pairs to train super-resolution algorithms [29], demonstrating the expanding set of potential applications.

While principles of super resolution have been solving a variety of problems for years, SR has yet to be applied in the field of experimental mechanics. This paper demonstrates the potential application of super resolution imaging to improve high-magnification DIC measurements using open-access SR software. The three techniques featured in this software and examined in this research are Robust Super Resolution [30], the Papoulis Gerchberg method [19, 31], and Structure Adaptive Normalized Convolution [32], the merits of which are discussed in the theory section below. The algorithms are evaluated for their effectiveness to perform DIC: first qualitatively, by reconstructing a sample image and comparing the quality of each visually; then quantitatively, by rigid body displacement and deformation measurements. The quantitative SR tests are then compared with unprocessed LR results to demonstrate the improvements in high-magnification DIC due to the SR resolution.

Theory

There are two common processes which are essential to all super resolution (SR) algorithms: 1) identifying the position of each low resolution (LR) image with respect to one common high resolution (HR) reference grid and 2) projecting LR pixels onto the grid [19]. Figure 2(a) shows schematically a set of 4 LR pixels on a small section of the HR grid. The LR pixels are numbered 1–4, while the HR pixels are lettered A–D. Because each LR image has some displacement Δx and Δy with respect to the HR grid, the SR algorithm must determine which LR pixels will influence a given HR pixel. In Fig. 2(a), an HR pixel is shown to be influenced by up to four LR pixels from just a single image. For example, HR pixel A is entirely contained within LR pixel 1; HR pixel B lies partially within LR pixels 1 and 2; while HR pixel D lies partially within all four LR pixels 1–4. Because there are often several LR images input into an SR algorithm, many LR pixels are used to create a single HR pixel. Figure 2(b) shows schematically the same 4 HR pixels projected onto a second LR grid with pixels numbered 5–8. For example, pixel A is entirely contained within pixels 1 and 5 and would thus weight both pixels evenly; while pixel B has larger proportions within pixels 2 and 5 than within pixels 1 and 6, and would thus weight pixels 2 and 5 more heavily. The specific processes of these two steps, as well as a description of the algorithms used to accomplish these steps, are described below.
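The area-based weighting described above can be illustrated with a minimal sketch. It assumes square pixels, an interpolation factor of 2, and a pure translation of the LR grid expressed in HR-pixel units; the function names are hypothetical and are not taken from the SR software used in this work.

```python
import math

def overlap_weights(hr_index, shift, factor=2):
    """1D overlap of HR pixel [hr_index, hr_index + 1) with each LR pixel.

    LR pixel k is assumed to cover [shift + k*factor, shift + (k+1)*factor)
    in HR-pixel units. Returns {k: overlapping length}.
    """
    lo, hi = hr_index, hr_index + 1
    k_first = math.floor((lo - shift) / factor)
    k_last = math.floor((hi - shift) / factor)
    weights = {}
    for k in range(k_first, k_last + 1):
        left = shift + k * factor
        right = left + factor
        w = min(hi, right) - max(lo, left)
        if w > 1e-12:  # skip LR pixels that only touch the HR pixel edge
            weights[k] = w
    return weights

def overlap_weights_2d(row, col, shift_y, shift_x, factor=2):
    """2D area fractions: product of the row and column overlaps."""
    wy = overlap_weights(row, shift_y, factor)
    wx = overlap_weights(col, shift_x, factor)
    return {(ky, kx): a * b for ky, a in wy.items() for kx, b in wx.items()}

# An HR pixel fully inside one LR pixel (analogous to pixel A)
print(overlap_weights_2d(1, 1, 0.5, 0.5))
# An HR pixel split equally among four LR pixels (analogous to pixel D)
print(overlap_weights_2d(0, 0, 0.5, 0.5))
```

The fractions for one HR pixel always sum to 1, so they can be used directly as averaging weights. Repeating the call with the shift of a second LR image gives the additional contributions sketched in Fig. 2(b).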

Step 1: Position Registration Between LR and HR

The first challenge in super-resolution computing is placing multiple LR images onto a common grid. To take advantage of information from multiple images, the images must necessarily be unique from each other. This uniqueness often arises from a small translational displacement of the camera between image captures [33], but it can also be caused by lens distortion or other deformations introduced by the camera and lens system [34]. To reconcile all images, these camera displacements between a chosen first image and subsequent images must be estimated with sub-pixel accuracy. Those relative displacements then allow the position of each image to be registered on the common grid or framework for the SR image.

There are several algorithms to accomplish this step of position registration. Early efforts used a transformation to the frequency domain, where translations in the horizontal and vertical direction can be estimated by frequency phase shifts [21, 35]. Such methods assume global motion occurs for the entire field of view, which is a potential disadvantage for images whose subjects undergo non-uniform deformation [36]. Several additional techniques have been implemented to improve the performance of such methods. These include extracting rotation information from the phase shifts [35], as well as avoiding aliasing by using low-frequency parts of the image [37]. A more recent algorithm, proposed by Vandewalle et al. [38], uses the Fourier transform of each image to identify the translation of subsequent images when compared with an initial image. This algorithm combines the robustness against aliasing from frequency filtering with the ability to capture rotations as well as linear displacements from the phase shifts and amplitudes.
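The principle behind frequency-domain registration, that a translation appears as a linear phase shift in the Fourier transform, can be sketched with a basic phase correlation. This is a generic textbook illustration and not the algorithm of Vandewalle et al.: it assumes a global, circular translation, recovers only whole-pixel shifts, and ignores rotation and aliasing. The function name is hypothetical.

```python
import numpy as np

def phase_correlation_shift(reference, moved):
    """Estimate the integer (row, col) shift that maps `reference` to `moved`.

    Assumes `moved` is a circularly translated copy of `reference`.
    """
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moved)
    # Keep only the phase difference between the two spectra
    cross_power = f_mov * np.conj(f_ref)
    cross_power /= np.maximum(np.abs(cross_power), 1e-12)
    correlation = np.fft.ifft2(cross_power).real
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Peaks past the midpoint correspond to negative shifts
    return tuple(int(p) if p <= n // 2 else int(p) - n
                 for p, n in zip(peak, correlation.shape))

rng = np.random.default_rng(0)
image = rng.random((64, 64))
shifted = np.roll(image, (3, -5), axis=(0, 1))
print(phase_correlation_shift(image, shifted))
```

Sub-pixel accuracy, as required for SR, needs an additional step such as fitting the slope of the phase difference over the low-frequency part of the spectrum, which is not shown here.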

Other methods remain in the image spatial domain, rather than the frequency domain [39]. One of the foundational spatial domain algorithms was developed by Keren [40]. It utilizes Taylor expansions to estimate planar motion between images, based on the parameters of rotation and vertical and horizontal shifts. The algorithm then seeks to minimize the error of the approximation, solving a set of linear equations to find the shift and rotation parameters. This is an iterative method, adding the parameter solutions to the system of linear equations and resolving until it converges sufficiently. In order to cut down on computation time, the algorithm uses a ‘Gaussian pyramid’ scheme, which focuses first on a coarse down-sampled image, and then a progressively finer down-sampled image until the full image is used. Other spatial domain methods have been developed which can account for other motion models such as segmented and temporal motion [41]. Algorithms have also been developed which estimate the rotation first, then correct the rotation before estimating spatial shifts [42].
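The core of a Taylor-expansion registration can be sketched as a single linear least-squares solve. The version below is a deliberate simplification of the approach described above: it estimates translation only (no rotation), performs one pass rather than iterating, and omits the Gaussian pyramid. The function name and the sign convention g(x, y) ≈ f(x + a, y + b) are assumptions made for illustration.

```python
import numpy as np

def estimate_shift_taylor(f, g):
    """Estimate a small shift (a, b) such that g(x, y) ~ f(x + a, y + b).

    Uses the first-order expansion g - f ~ a * df/dx + b * df/dy and solves
    for (a, b) in the least-squares sense.
    """
    f = np.asarray(f, dtype=float)
    g = np.asarray(g, dtype=float)
    fy, fx = np.gradient(f)          # derivatives along rows (y) and columns (x)
    inner = (slice(2, -2), slice(2, -2))  # drop borders, where gradients are one-sided
    design = np.stack([fx[inner].ravel(), fy[inner].ravel()], axis=1)
    target = (g - f)[inner].ravel()
    (a, b), *_ = np.linalg.lstsq(design, target, rcond=None)
    return a, b

# Smooth synthetic scene sampled at two slightly different positions
yy, xx = np.mgrid[0:64, 0:64].astype(float)
scene = lambda x, y: np.sin(0.1 * x) + np.cos(0.07 * y)
a, b = estimate_shift_taylor(scene(xx, yy), scene(xx + 0.3, yy - 0.2))
print(round(a, 3), round(b, 3))
```

Because the expansion is only first order, the estimate is accurate for shifts well below one pixel on smooth images. Larger motions are the reason the full method iterates and works from coarse to fine image scales.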

Both the spatial and frequency-based registration algorithms return rotation and shift parameters. These shifts, with sub-pixel accuracy, allow all LR images to be placed on a common reference grid. Once these shifts have been estimated, the pixel information from the LR images can then be used to construct the SR image [38]. Of the methods discussed, Keren’s is used through the rest of this paper.

Step 2: Combining Multiple LR Images into a Single SR Image

After positioning all LR images on common coordinates, the overlapping information from the LR pixel sets must be processed and combined into a single SR pixel set. Several algorithms have been developed to accomplish this reconstruction. They have some common features, yet they also vary in complexity. A comparison of the features of four algorithms is included in Table 1. They are fairly representative of SR capabilities and provide a framework with which to analyze the application of SR imaging for DIC measurements.

One of the earliest SR techniques to create a high-resolution grid from projected low-resolution pixels is Iterated Back Projection (IBP). The goal of IBP is to construct a SR image that, when deconstructed into LR images, best reproduces the original LR set [43]. The SR image is obtained iteratively from an initial guess featuring a grid of SR pixels with the same resolution and placement as the desired SR outcome. After each iteration, the SR image is deconstructed by averaging groups of SR pixels together based on (1) the size of SR pixels with respect to a LR pixel, (2) the diffraction pattern of a single point, transmitted to the image plane on the sensor (the point spread function)[44], and (3) the distance between each SR pixel and the LR pixel being influenced. A simple example, without consideration of the point spread function, is demonstrated in Fig. 2(b): HR pixel A lies entirely within LR pixel 1 and thus is weighted entirely in the average; whereas HR pixel D is only ¼ within LR pixel 1 and is thus weighted by ¼. Once deconstruction of a HR estimate is complete, the original and deconstructed LR images are compared to update the HR result as informed by considerations (1)-(3). This process is iterated until the simulated LR images converge with the original LR images within an acceptable error.
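The construct, deconstruct, and compare loop of IBP can be sketched in one dimension. The example assumes the simplest possible imaging model: each LR sample is the plain average of two neighbouring HR samples, the LR signals are offset by whole HR samples with circular boundaries, and the point spread function is ignored. All names are hypothetical.

```python
import numpy as np

def downsample(hr, offset, factor=2):
    """Simulate an LR signal: average `factor` HR samples, starting at `offset`."""
    return np.roll(hr, -offset).reshape(-1, factor).mean(axis=1)

def back_project(lr_error, offset, factor=2):
    """Spread each LR error onto the HR samples that produced it."""
    return np.roll(np.repeat(lr_error, factor), offset)

def iterated_back_projection(lr_signals, offsets, n_hr, factor=2, n_iter=200):
    hr = np.zeros(n_hr)  # initial guess on the HR grid
    for _ in range(n_iter):
        correction = np.zeros(n_hr)
        for lr, offset in zip(lr_signals, offsets):
            # Compare the measured LR signal with the one simulated from hr
            error = lr - downsample(hr, offset, factor)
            correction += back_project(error, offset, factor)
        hr += correction / len(lr_signals)  # average of the per-image corrections
    return hr

rng = np.random.default_rng(1)
truth = rng.random(16)
offsets = [0, 1]
lr_signals = [downsample(truth, off) for off in offsets]
estimate = iterated_back_projection(lr_signals, offsets, n_hr=16)
residual = max(np.abs(downsample(estimate, off) - lr).max()
               for off, lr in zip(offsets, lr_signals))
print(residual)
```

The loop drives the simulated LR signals toward the measured ones, which is the stated goal of IBP. It does not guarantee that the HR estimate equals the true signal, since components that average to zero in every LR signal cannot be observed.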

One of the shortcomings of the IBP method is oversensitivity to noise. In the algorithm, when the normalized average of the LR pixels is taken, there is no significant mechanism to address issues of noise. To respond to this, the Robust Super-Resolution (RSR) algorithm uses a median estimator, rather than an average [30]. This makes the algorithm more robust against noise outliers. The result is an algorithm that builds on IBP by addressing the significant drawback of high sensitivity to motion blur or high noise. Because RSR is itself a direct improvement upon IBP, only RSR is considered through the rest of this paper.
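The effect of replacing an average with a median can be seen in a small numerical illustration. The values below are invented: they stand for the contributions of nine registered LR images to one HR pixel, where one image carries a noise outlier. This shows only the estimator swap, not the full RSR algorithm.

```python
import numpy as np

# Hypothetical gray levels contributed by nine LR images to one HR pixel;
# the last value is a noise outlier.
contributions = np.array([100, 101, 99, 100, 102, 98, 100, 101, 255], dtype=float)

mean_estimate = contributions.mean()        # pulled toward the outlier
median_estimate = np.median(contributions)  # unaffected by a single outlier

print(round(mean_estimate, 2), median_estimate)
```

With nine inputs, the median stays at the consensus value until at least five of them are corrupted, whereas a single outlier is enough to bias the mean.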

Similar to IBP and RSR, the Papoulis-Gerchberg (PG) algorithm works through iteration [19]. For its initial guess, any SR pixel which lies entirely within one LR pixel is given the same value as the LR pixel. Any SR pixels which span multiple LR pixels are initially set equal to zero [31]. After known values are assigned, extrapolation between known pixel values is performed using signal processing techniques developed by Papoulis and Gerchberg [45]. This extrapolation is an iterative process of alternate projections and begins by transforming the image signal from the spatial to the frequency domain. The spectral signal goes through a low-pass filter, and the signal is then transformed back to the spatial domain. This new, extrapolated signal is then added to the original known signal, and the transformation and filtering is iterated. Each iteration reduces the mean square error of the extrapolation, and eventually the iterations will converge. The result is a noise-reduced SR image.
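The alternating projections of the PG algorithm can be sketched for a one-dimensional signal. The example assumes that every second sample is known, that the true signal is band-limited below a known cutoff, and that unknown samples start at zero. The function name and the cutoff parameter are hypothetical.

```python
import numpy as np

def papoulis_gerchberg_1d(samples, known_mask, cutoff, n_iter=40):
    """Fill in unknown samples of a band-limited signal by alternating projections.

    samples    : array holding the known values (unknown entries are ignored)
    known_mask : boolean array, True where a sample is known
    cutoff     : highest frequency index kept by the low-pass filter
    """
    n = samples.size
    freq_index = np.fft.fftfreq(n, d=1.0 / n)   # integer frequency indices
    passband = np.abs(freq_index) <= cutoff
    estimate = np.where(known_mask, samples, 0.0)
    for _ in range(n_iter):
        spectrum = np.fft.fft(estimate)
        spectrum[~passband] = 0.0                # low-pass filter in the frequency domain
        filtered = np.fft.ifft(spectrum).real    # back to the spatial domain
        # Keep the extrapolated values, but restore the known samples
        estimate = np.where(known_mask, samples, filtered)
    return estimate

n = np.arange(64)
truth = 1.0 + np.sin(2 * np.pi * 2 * n / 64) + 0.5 * np.cos(2 * np.pi * 3 * n / 64)
mask = n % 2 == 0
recovered = papoulis_gerchberg_1d(np.where(mask, truth, 0.0), mask, cutoff=3)
print(np.abs(recovered - truth).max())
```

In this idealized setting the error in the unknown samples halves with every iteration. Real images are not strictly band-limited, so convergence in practice is slower and the result depends on the chosen filter.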

Finally, Structure Adaptive Normalized Convolution (SANC) is a response to the need to pick up underlying directional textures in the image, such as lines and curves. SANC works by assuming that LR images are blurred by a Gaussian convolution [32], meaning that a pixel is assumed to be influenced by pixels which lie close to it. Many SR algorithms (including IBP) assume Gaussian blur, which they refer to as a point spread function [4]. SANC is unique, however, because it considers image structure when assuming a Gaussian blur and it accounts for signal certainty. Image structure is considered for every pixel in the use of a gradient structure tensor. The gradient structure tensor determines if a pixel lies along a line in the image. Normalized averaging similar to IBP is performed, but it is improved by using information from the gradient structure tensor and accounting for signal certainty in a similar way to RSR. SANC uses both methods to limit the effect of noise and accurately predict shape structure when performing SR.
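The normalized averaging with signal certainty that underlies SANC can be sketched in one dimension. This is plain normalized convolution with a fixed Gaussian kernel: the structure-adaptive part, in which the kernel is reshaped by the gradient structure tensor, is not implemented. The function name and default parameters are hypothetical.

```python
import numpy as np

def normalized_convolution_1d(values, certainty, sigma=1.5, radius=4):
    """Gaussian-weighted average that ignores samples with zero certainty.

    values    : signal on the HR grid (content at uncertain samples is irrelevant)
    certainty : weights in [0, 1]; 0 marks a missing or untrusted sample
    """
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    weighted_sum = np.convolve(values * certainty, kernel, mode="same")
    total_weight = np.convolve(certainty, kernel, mode="same")
    return weighted_sum / np.maximum(total_weight, 1e-12)

certainty = (np.arange(20) % 2 == 0).astype(float)  # every second sample is known
values = np.where(certainty > 0, 5.0, 0.0)          # unknown samples hold placeholders
filled = normalized_convolution_1d(values, certainty)
print(filled.min(), filled.max())
```

Dividing by the convolved certainty is what keeps missing samples from darkening the result, as would happen with an ordinary Gaussian blur. Setting the certainty of an outlier to a low value reduces its influence in a similar way.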

Methods

To assess the usefulness of SR computing in improving spatial resolution in DIC, three of the algorithms are used in several separate tests: RSR, PG, and SANC. In the first test, a qualitative comparison is performed in which an image is downsampled and then reconstructed using the SR algorithms, to demonstrate the advantages and disadvantages of each algorithm. The second test evaluates the pairing of SR and DIC in comparing applied and DIC-measured rigid body translation of a patterned specimen. The third test consists of a mechanical test which produces a non-uniform strain field, to compare LR and HR images and their effectiveness in DIC strain measurements.

SR Algorithm Initial Comparison

An initial test determined the accuracy with which each SR algorithm could recreate an existing HR image. A test image, shown in Fig. 3, was chosen which contains 3 important features: a) familiar shapes, b) straight edges, and c) areas of repetitive texture. This highlights the ability of each SR algorithm to reproduce those features. These abilities help to inform the practicability of using these algorithms for DIC-based strain measurements.

Fig. 2 (a) LR pixels (1, 2, 3, 4) have a displacement of Δx and Δy with respect to the HR grid pixels (A, B, C, D), which allows registration on the common HR grid, and (b) Pixels from an LR image (1, 2, 3, 4), overlapping with pixels from another LR image (5, 6, 7, 8) are both used to inform the same HR pixels (A, B, C, D)

Using super resolution imaging software developed and made publicly available by Vandewalle et al. [38], the HR test image is deconstructed into nine overlapping LR images. First, nine copies of the HR image are created, shifting all but the first by random x and y displacements, ranging from -4 to 4 HR pixels in sub-pixel increments of 0.125 HR pixels. Next, each of the 9 HR images is converted to an LR image, downsampling by averaging each 2 × 2 group of HR pixels into a single larger LR pixel. In pixels which shift beyond the edge of the initial image, pixels outside the edge of the shifted region of interest retain their original values. Since each LR pixel summarizes data from 4 HR pixels, the overall size of the LR image is reduced to a quarter of the HR image. The 9 LR images are then run through each of the chosen SR algorithms, using the software from Vandewalle, with an interpolation factor of 2, meaning that each dimension of the image is increased by a factor of 2. Thus, each LR pixel covers the same physical area as a 2 × 2 set of SR pixels. The result of the test is one SR image for each algorithm which could be compared to the HR image of the same resolution and field of view. This process is depicted in Fig. 4. Visual inspection rather than numerical interpretation is used for comparison, as is widely done in comparing SR algorithms [46].
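Two parts of this deconstruction, the random shift draw and the 2 × 2 block averaging, can be sketched as follows. The sketch does not reproduce the software used in this work: function names and the random seed are hypothetical, and the interpolation step needed to apply a sub-pixel shift to the HR image is not shown.

```python
import numpy as np

def random_shifts(n_images=9, max_shift=4.0, increment=0.125, seed=0):
    """Draw (dy, dx) shifts in HR pixels on a grid of `increment`; first image unshifted."""
    rng = np.random.default_rng(seed)
    n_steps = int(round(2 * max_shift / increment))
    steps = rng.integers(0, n_steps + 1, size=(n_images, 2))
    shifts = -max_shift + steps * increment
    shifts[0] = 0.0
    return shifts

def block_average(hr, factor=2):
    """Downsample by averaging each factor x factor group of HR pixels."""
    rows = hr.shape[0] - hr.shape[0] % factor   # trim so the shape divides evenly
    cols = hr.shape[1] - hr.shape[1] % factor
    blocks = hr[:rows, :cols].reshape(rows // factor, factor, cols // factor, factor)
    return blocks.mean(axis=(1, 3))

hr = np.arange(16, dtype=float).reshape(4, 4)
print(block_average(hr))
print(random_shifts()[:3])
```

With a factor of 2, each LR image holds one quarter as many pixels as the HR image, consistent with the description above.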

Rigid Body Translation Test

As a first introduction of SR imaging in DIC measurements, SR images were used as input images to measure a known translation. First, a micrometer-driven translation stage was positioned vertically as shown in Fig. 5, holding a small T-316 stainless steel ring sample, with outer diameter of 12.7 mm and wall thickness of 1.2 mm. A speckle pattern was applied to the ring with black paint on a white background. A Basler 15 MP camera was attached to a second vertically positioned translation stage, allowing controlled offsets of the image to produce overlapping LR fields of view. These stages were separated to allow a 290 mm working distance between the end of a 25 mm lens and the ring sample, representative of distance requirements for viewing through an environmental chamber. The specimen was illuminated by Cole-Parmer fiber optic lights. The camera’s field of view, including the speckled ring, is shown in the right of the figure.

A series of images was then taken of the specimen as summarized in Table 2. After focusing the lens on the ring sample, 9 reference images were taken at differing camera positions, followed by 9 noise images. The camera position was varied by ±0.0254 mm in both the vertical and horizontal directions, centered about zero. The ring sample was then translated 0.127 mm in the vertical direction, and 9 images were taken in the same manner. This process was repeated up to a final ring sample translation of 0.762 mm. Prior to super resolution post-processing, each LR image was cropped to the same size (1500 × 1644 pixels) to still capture the ring along with applied translation, while reducing computation time. The result was a set of images for each algorithm which included a single image at every translation of the ring.

For each set of 9 LR images, the same SR software used in the initial comparison test was used to produce 3 SR images. For all 3 SR images, the step 1 image registration was again performed using the Keren registration algorithm. Step 2 was then performed using the Robust Super Resolution (RSR), Papoulis-Gerchberg (PG), and Structure Adaptive Normalized Convolution (SANC) algorithms, respectively, with an interpolation factor of 2. The SR images produced by each of the RSR, PG, and SANC algorithms were then imported into VIC-2D [47], a commercial DIC software package which is widely used in the experimental mechanics community. Correlation was performed using a subset size of 49 pixels and a step size of 5 pixels to obtain full field displacements. For comparison, a set of images consisting of one LR image from each displacement was also imported into VIC-2D. A subset size of 25 pixels and step size of 3 pixels was used for the LR measurement, such that a comparable physical area in mm would be represented by each LR and SR subset. The displacements were then plotted against the known applied displacements to assess how closely each of the SR methods can reproduce a known translation, and to compare SR-DIC results to traditional LR-DIC measurements.

As a comparison tool, two additional image sets were produced: one LR image set made by averaging the nine LR images to combat noise (referred to as LR Average), and one HR image set which expands the single LR Average image by a factor of 2 through bicubic interpolation (referred to as HR Interpolation). To produce the LR Average image set, the LR images were first shifted by the x and y offsets found with the Step 1 Keren algorithm in order to register on a common grid, then averaged together. Expanding each LR Average image by a factor of 2 through bicubic interpolation then provides a benchmark against which to compare the RSR, PG, and SANC algorithms. Both the LR Average and HR Interpolation image sets were prepared and imported into VIC-2D for DIC measurement.

After preparing displacement data, further analysis on the accuracy of the measurement was performed, investigating the effect of subset size on the spatial standard deviation of the displacement measurement, as subset size has a significant impact on the correlation accuracy [48]. For each algorithm, the analysis was first performed at the subset sizes described above (49 for SR, 25 for LR). Smaller subset sizes were investigated, moving down in increments of 4 pixels for SR (45, 41…) and of 2 pixels for LR (23, 21…) to maintain similar physical subset sizes. This reduction in subset size continued until the images no longer correlated, thus exploring the lower limit of subset size for each algorithm. Similarly, subset sizes larger than the sizes of 49 and 25 were investigated in increments of 8 pixels for SR (57, 65…) and 4 pixels for LR (29, 33…) up to a size of 97 or 49 pixels. For every subset size, the same step size was maintained (5 for SR, 3 for LR) to preserve a similar number of total subsets.
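The pairing of SR and LR subset sizes follows from the interpolation factor of 2: one LR pixel spans two SR pixels, so an SR subset of 49 pixels covers 24.5 LR pixels, which is matched by the nearest odd LR subset of 25. A short sketch of the sweep is given below; the function name is hypothetical, and the counts passed to it are chosen only to reproduce the sizes quoted above.

```python
def paired_subset_sizes(sr_start, lr_start, sr_step, lr_step, count, interpolation=2):
    """List (SR subset, LR subset, SR subset expressed in LR pixels) for a sweep."""
    pairs = []
    for k in range(1, count + 1):
        sr = sr_start + k * sr_step
        lr = lr_start + k * lr_step
        pairs.append((sr, lr, sr / interpolation))
    return pairs

# Larger subsets: +8 px for SR and +4 px for LR, up to 97 and 49 pixels
for sr, lr, sr_in_lr_px in paired_subset_sizes(49, 25, 8, 4, 6):
    print(sr, lr, sr_in_lr_px)

# Smaller subsets: -4 px for SR and -2 px for LR (first three steps shown)
print(paired_subset_sizes(49, 25, -4, -2, 3))
```

Because the SR step is always twice the LR step, the mismatch in physical subset size stays at half an LR pixel throughout the sweep.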

Mechanical Deformation Test

To study the full implementation of SR imaging into DIC strain measurements, SR techniques were then used in a mechanical deformation test. The same ring specimen from the rigid body translation test was placed in a Gleeble 1500D load frame with an environmental chamber. The same camera and lens as before were aimed at the specimen through the chamber viewing window from a working distance of 330 mm. In addition, a Qioptiq Optem Fusion zoom lens was used with a second Basler 15 MP camera and focused on a portion of the ring. The variable magnification of the zoom lens was adjusted to produce a field of view roughly 15 times smaller than the LR lens in order to provide a more accurate ‘high resolution’ image with which to compare the super resolution images. Custom grips were designed to apply a tensile load on the inner surface of the ring, as shown in Fig. 6.

Fig. 5 Rigid Body Translation test setup. Translation stage with the camera and lens (left) was moved in both vertical and horizontal directions to capture 9 images, while speckled ring translation stage (right) was translated in the vertical direction to produce rigid body motion. Camera field of view, focused on the ring, is shown at the right

The grips were slowly moved apart until a small increase of force was registered by the load cell, indicating that both grips had come into contact with the inside face of the ring. From this zero-displacement location, a set of 9 images was then captured in succession, to be combined later to produce a single SR reference image. Several seconds passed between each of the 9 images to allow for small random offsets of the field of view caused by vibration of the load frame. Another set of 9 images was then captured to provide a noise measurement. The grips were then moved apart under displacement control in increments of 0.1 mm, causing a non-uniform strain distribution in the ring. At each displacement increment, another set of 9 images was captured. This process of grip displacement followed by image capture continued until a final net grip displacement of 1.4 mm was achieved.

Upon completion of the mechanical deformation, each set of 9 LR images was combined using the three SR algorithms. Then, the sets of SR images were imported in VIC-2D and correlated with a subset size of 49 and step size of 5. Similarly, one of the 9 LR images at each displacement was imported and correlated with a subset size of 25 and a step size of 3, allowing similar physical areas to be represented by each subset. The zoom lens images were also imported and correlated with a subset size of 151 and step size of 5. Strain maps were generated and compared for each of the SR methods and for the LR image set. A subset size-match confidence analysis was performed for the mechanical deformation test, following the same process used for the rigid body translation test.

Results

The comparison of the three chosen SR algorithms to LR imaging techniques is supported by the results of the three tests: The SR algorithm initial comparison, the rigid body translation test, and the mechanical deformation test. These results are summarized below.

SR Algorithm Initial Comparison

Figure 7 shows the results of the deconstruction and reconstruction of an image, meant to highlight similarities and differences between the SR algorithms. The original HR image depicting a “one-way” traffic sign in front of a background of trees and sky is shown in part (a); an enlarged section is shown in part (b). The same section of the LR image is shown in part (c), and the three SR versions of the section in parts (d), (e), and (f). A comparison of the HR and LR images shows that the words on the sign are blurred but still readable in the LR image. The straight edges of the sign have also become jagged due to pixel averaging, along with some loss of definition in the leaves in the background.

The results of the SR image reconstruction are given in Fig. 7(d), (e), and (f). The RSR algorithm slightly improves upon the LR image quality in regions of texture, but it also makes some features worse. For example, the leaves recapture some of their sharper colors, but the straight edges of the sign are visibly noisy and grainy. In the PG image, the shapes of the letters are clearer than in the RSR image and comparable to the LR image, with sharper corners. However, the lines still have a more jagged appearance, with the diagonal lines appearing instead as long ‘steps’. The opposite is true in the SANC image, where several features are more smoothed over. This improves the quality of some features, such as the straight edges losing some of the jagged ‘steps’. However, it also worsens other features, as the corners of letters are less sharp than in PG. Overall, the SR images are generally clearer than the LR image, but they fall short of perfectly replicating the HR image.

The visual similarity between the original HR image and the reconstructed SR images can be compared using a metric developed by Wang et al. called structural similarity [49]. The structural similarity (SSIM) index is a quantification of the perceived similarity between two images of the same resolution, scaled between 0 (no similarity) and 1 (perfectly similar). Using a publicly available MATLAB implementation of the SSIM algorithm [49], the grayscale versions of the images were compared against each other. The indices for the super resolution images, compared against the original HR image, were 0.5678 for RSR, 0.6047 for PG, and 0.7663 for SANC. These results show the best score for SANC, with lower scores for PG and RSR.
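For orientation, the form of the SSIM index can be sketched with a single-window (global) variant. This is a simplification: the published index averages the same expression over local windows, so the sketch will not reproduce the values reported above. The constants follow the commonly used defaults K1 = 0.01 and K2 = 0.03, and the function name is hypothetical.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0):
    """Single-window SSIM between two grayscale images of equal shape."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (0.01 * data_range) ** 2   # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2   # stabilizes the contrast/structure term
    mean_x, mean_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    covariance = ((x - mean_x) * (y - mean_y)).mean()
    numerator = (2 * mean_x * mean_y + c1) * (2 * covariance + c2)
    denominator = (mean_x ** 2 + mean_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(32, 32)).astype(float)
print(global_ssim(image, image))          # identical images
print(global_ssim(image, 255.0 - image))  # inverted image
```

The expression combines a comparison of mean intensity with a comparison of variance and covariance, which is why it tracks perceived structure more closely than a pixelwise error.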

Rigid Body Translation Test

Figure 8(a-d) shows the average vertical translation measured across all subsets of the ring sample for each LR and SR method as a function of the applied translation. Uncertainty bands of three standard deviations of the displacement of all subsets at each translation are also plotted. Thus, at the zero displacement, this 3σ uncertainty band represents the noise floor of the measurement, demonstrating the temporal variation when no displacement is applied. At the subsequent displacements, the uncertainty bands represent three times the spatial standard deviation. For many of the data points, the standard deviations are so small that the uncertainty bands overlap with the data markers for the mean displacements. Each plot also features a solid black line indicating the true applied translation of the sample, accompanied by dashed gray lines above and below, indicating the uncertainty of applied translation due to the resolution of the translation stage, defined as half a tick mark (tick marks are every 0.001 in or 0.0254 mm). The LR measurements were computed using the center image of each 9-image set, while the SANC, RSR, and PG measurements were computed using the constructed SR images. In all cases, the measured translation is within the applied translation uncertainty associated with the stage resolution, indicating that all 4 measurement methods show sufficient agreement with applied translation. Of note is the fact that the PG images at translation increments of 0.127 mm, 0.254 mm, and 0.381 mm failed to correlate in VIC-2D, and so no measurement data is seen for those measurements.
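The quantities plotted in these figures can be computed as sketched below. The displacement values are invented for illustration, the function name is hypothetical, and the use of the sample standard deviation (ddof=1) is an assumption, since the text does not state which estimator was used.

```python
import numpy as np

TICK_MM = 0.0254                     # one tick mark on the stage (0.001 in)
STAGE_UNCERTAINTY_MM = TICK_MM / 2   # half a tick mark

def summarize_translation(subset_displacements_mm, applied_mm):
    """Mean, 3-sigma spatial band, and error for one translation increment."""
    d = np.asarray(subset_displacements_mm, dtype=float)
    mean = d.mean()
    band = 3.0 * d.std(ddof=1)       # three spatial standard deviations
    error = mean - applied_mm        # measured minus applied
    return {
        "mean_mm": mean,
        "band_3sigma_mm": band,
        "error_mm": error,
        "within_stage_uncertainty": bool(abs(error) <= STAGE_UNCERTAINTY_MM),
    }

# Hypothetical subset displacements at the largest applied translation
print(summarize_translation([0.760, 0.762, 0.764, 0.763, 0.761], applied_mm=0.762))
```

The half-tick uncertainty evaluates to 0.0127 mm, which is one tenth of the 0.127 mm translation increment used in the test.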

In Fig. 9, the mean measurement error from all subsets (defined as the applied translation subtracted from the measured translation) of the data displayed in Fig. 8 is plotted at each translation increment. The uncertainty bands again indicate three standard deviations of the measurement variation. As in Fig. 8, the solid black line marks zero error, or perfect agreement between applied and measured translation, and the dashed gray bounding lines show uncertainty of the applied translation due to the resolution of tick mark increments on the translation stage. Compared to Fig. 8, in which the uncertainty bands were too small to easily see, Fig. 9 allows a clearer side-by-side comparison of the error in each SR method. Again, note that due to select images failing to correlate, there is no PG data for the translation increments of 0.127 mm, 0.254 mm, and 0.381 mm. Also included in this figure are the LR Average and HR Interpolation benchmarks described in the methods. The LR Average set is expected to show a reduction in noise from combining all 9 images while maintaining the original resolution, and the HR Interpolation set expands the LR Average by a factor of two to match the size of the SR images without using the SR algorithms.
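The quantities plotted in Figs. 8 and 9 can be sketched in a few lines of Python. This is an illustration only, not the processing used in this work. The function name, the sample subset displacements, and the choice of a population standard deviation are assumptions. The tick spacing of 0.0254 mm, the half-tick stage uncertainty, and the definition of error as measured minus applied translation come from the text above.

```python
from statistics import fmean, pstdev

TICK_MM = 0.0254                      # one tick mark on the stage (0.001 in)
STAGE_UNCERTAINTY_MM = TICK_MM / 2    # half a tick mark


def summarize_translation(subset_displacements_mm, applied_mm):
    """Mean, 3-sigma band, and error for one translation increment."""
    mean = fmean(subset_displacements_mm)
    band = 3 * pstdev(subset_displacements_mm)   # spatial variation across subsets
    error = mean - applied_mm                    # measured minus applied
    return {
        "mean": mean,
        "band_3sigma": band,
        "error": error,
        "within_stage_uncertainty": abs(error) <= STAGE_UNCERTAINTY_MM,
    }


# Hypothetical subset displacements (mm) for an applied translation of 0.127 mm.
subsets = [0.125, 0.128, 0.127, 0.129, 0.126]
summary = summarize_translation(subsets, applied_mm=0.127)
```

A mean error inside the half-tick limit corresponds to a marker lying between the dashed gray lines, while the 3σ band is the quantity compared between methods.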

Fig. 8 Plots showing average measured translation vs. applied translation for (a) LR images, (b) RSR images, (c) PG images, and (d) SANC images. Uncertainty bands on markers represent three standard deviations of the spatial variation in measured translation across all subsets. The solid black line shows perfect agreement between applied and measured translation, and bounding gray lines show uncertainty of applied translation due to stage resolution

Figure 10 shows further comparison between the SR and LR measurements for the largest applied translation (0.762 mm) in terms of how the spatial standard deviation is affected by subset size. The mean translation measurement for each subset size is shown in the first subplot, along with uncertainty bands (the same 3σ quantities shown in the uncertainty bands of Fig. 9). The subset size of the LR images is shown (in pixels) on the horizontal axis above the plot, while the subset size of SR images is shown (in pixels) on the horizontal axis below the plot. The second subplot shows the size of the uncertainty bands from the first plot, again as a function of subset size. The common physical subset size is shown on the very bottom horizontal axis. As expected, the uncertainty band size decreases as subset size increases. It should be noted that this trend holds for all methods, although this decrease is small enough in some cases (such as the LR data) that it is hard to see when all 6 methods are plotted on the same axes.

Fig. 9 The error (measured minus applied ring translation) averaged across subsets for DIC results based on LR, LR Average, HR Interpolation, RSR, PG, and SANC images, given at each applied ring translation. Note that markers have been slightly offset for readability, and are grouped around the corresponding applied translation. Uncertainty bands show three standard deviations of spatial variation, and gray bounding lines represent resolution uncertainty of the translation stage

Mechanical Deformation Test

The contours of displacements in the direction of loading for the LR, RSR, SANC and zoom lens DIC results are shown in Fig. 11. Each contour is taken at the same deformation increment and each is plotted on the same scale for the same field of view. The images produced with the PG algorithm failed to correlate, so there are no PG contours in Fig. 11. Also included in the figure are representative subsets of the speckle pattern on the specimen surface. All are taken from the same location on the surface, and the LR, RSR, and SANC subsets cover the same physical area. The zoom lens subset covers a smaller area, located in the upper left corner of the other subsets.

Fig. 10 Upper subplot: final measured translation plotted with respect to physical subset size, in mm; uncertainty bands indicate the spatial variation across all subsets, represented as three standard deviations. The solid black line shows the applied translation, bounded by dashed gray lines representing uncertainty of applied translation due to stage resolution. Note that SR data are slightly offset for readability (only sizes indicated by tick marks were used). Lower subplot: size of the uncertainty bands from the upper subplot

Discussion

Based on the results of the three tests just shown, several observations can be made on the strengths and weaknesses of the SR algorithms investigated. In addition, benefits and disadvantages of using SR imaging instead of traditional LR techniques are also demonstrated. These characteristics shown by the SR initial comparison, rigid body translation, and mechanical deformation tests are discussed below.

SR Initial Comparison Test

The initial comparison effectively shows some of the qualitative differences between the SR algorithm results. Comparing the images in Fig. 7(b)-(f), all 3 SR algorithms show slight improvements over the LR, but the image quality of each SR image is poor compared to the HR.

In the case of RSR, some features show slight improvement over the LR image, such as recapturing some of the brighter colors on the lighted portions of the leaves. But many of the features become more pixelated than even the LR image, causing a loss of definition. This is evident in the screw at the top of the sign; the circular boundary between screw and sign is more distorted in the RSR image than in the LR image. Although some aspects are visually clearer, the algorithm still adds graininess that is not present in the LR.

In the case of PG, the outline of the blue gaps of sky between leaves is slightly more defined in the PG image. Additionally, the lettering has slightly heavier weight than the LR, making it more comparable to the HR. However, ‘trailing steps’ can be seen leading away to the left from the black-white diagonal border of the sign. This can also be seen in the LR image, but not in the HR image. Rather than smoothing out this boundary to approach the target HR image, the PG amplifies the trailing steps and emphasizes the artificial feature.

In the case of SANC, the appearance seems to fit most closely to the HR image. The lines and boundaries are smooth, and objects are easier to recognize than in either the RSR or the PG images. However, this strength of the SANC image is also its greatest shortcoming. Some details found in the HR image are lost to the smoothing effect, such as the blurring of letter corners.

This comparison test demonstrates that the most recent innovation of the three SR algorithms (SANC) performs best in visually obvious elements of reconstruction, but also highlights some of the potential downfalls in the new application to DIC. Because the SANC algorithm accounts for shape features, it follows that the features in the SANC-reproduced image more closely resemble those found in the HR image. The algorithm distorts the shape of the convolution kernel to match image geometries based on gradient fields. Thus, the SANC image best replicates the features and objects in an HR image in a qualitative ‘eye’ test. But this smoothing of feature boundaries could lead to decreased performance in reconstructing speckle patterns. This could be especially true when individual speckles are relatively small compared to the features that the SANC algorithm is built to search for. And while RSR and PG produce more pixelated shapes, they may still work for speckled patterns used in DIC.

Rigid Body Translation Test

In the rigid body translation test, images from all three of the SR algorithms measure an applied translation as well as or better than LR images. In Fig. 8, the average of all subsets in each image that successfully correlated was within the resolution uncertainty of the translation stage. However, some of the images produced by the PG algorithm failed to correlate, meaning that data could not be extracted at every translation increment. Inspection of the images that failed to correlate revealed a checkerboard pattern of black pixels interspersed in the image. This was also evident in the PG images from the mechanical deformation test, as seen in Fig. 12. Three different types of this defect were found: a checkerboard of mostly black pixels, as seen on the far left of the figure; a series of vertical stripes of black pixels; or a checkerboard of mostly unaltered pixels. This conversion of pixels to black did not appear in the LR original, shown on the right of the figure, or in the other SR images, as seen in the subsets of Fig. 11. This checkerboard pattern also did not appear in the PG images from the initial comparison test with the one-way sign. It may be caused by the PG algorithm, a bug in its implementation in this software [38], or a combination of both, but it can clearly degrade the DIC results or make correlation impossible.

Fig. 11 DIC contours for displacements in the direction of loading for LR, RSR, SANC, and Zoom lens. Corresponding subsets of the speckle pattern for each method are shown in the bottom row. For legibility in this paper, the contrast was artificially improved using MATLAB’s histogram equalization function, but only the un-altered versions were used in DIC calculations. The contrast of the un-altered images is also reported, defined as the span of the median 90% of pixel gray levels [50]

Figure 9 affords a clearer look at both the accuracy and shortcomings of the different methods, by focusing on the error between the measurement and applied translation. All six image sets produced averages that lie close to the line of perfect agreement and within the bounding lines marking the uncertainty of applied translation due to stage increment resolution. At each translation increment, the ring translation was the same for all methods, and all images are centered on the same point. Thus, the unknown error in applied translation due to stage resolution uncertainty is the same for each of the three SR methods and the LR method. Therefore, the spread of bias errors shown in the figure between the six methods at each increment reflects on the precision of the methods. Although all six show some variability in these bias errors from increment to increment, they all show acceptable precision well within the stage resolution uncertainty.

Because of this unknown error in the applied measurement, it is difficult to assess the three SR algorithms based solely on the average displacements in Fig. 9. To provide further clarity, the uncertainty bands can highlight the consistency within each image. Because there is no strain or deformation in this rigid body translation test, the applied motion is nominally uniform and ideal measurement would yield zero variation. At zero translation, the uncertainty bands show the noise floor. As expected, the LR image gives the largest spatial variation, followed by the LR Average and HR Interpolation benchmarks. The three SR algorithms show slight improvement over the benchmarks in their noise floors, with RSR showing the greatest difference.

A significant contribution to this measurement error is the temporal variation from image to image. In particular, the LR image sets are the most prone to temporal variation, or noise. This is because all other methods benefit from combining information from multiple images. To better understand the temporal variation of these inherently noisier LR images, a series of untranslated LR images was correlated, and a specific subset (seen in the left of Fig. 13) was followed through time. The fluctuation of the measured translation of that subset is shown at the right of Fig. 13. This temporal variation helps to quantify the error of the LR measurement associated with the random noise, which is mitigated through averaging in producing the images with the other methods.

At nearly every subsequent translation increment in Fig. 9, the LR also has the largest bands, demonstrating the greatest spatial variation amongst the subsets. The improvement in spatial variation each method offers over LR is given in Table 3. Generally, the size of the uncertainty bands remains relatively uniform from increment to increment for the LR Average and HR Interpolation benchmarks and SR images. However, the RSR varies greatly from increment to increment, showing the best and worst improvement over LR at different increments, as seen in the table. Because PG data are missing at several increments due to the loss of pixels shown in Fig. 12, PG also has consistency issues. The SANC and the benchmarks demonstrate consistency and seem to show the greatest reliability in the accuracy of the measurement, with the SANC generally having uncertainty bands of a size equal to or smaller than the benchmarks.

The differences in spatial variation are further investigated as a function of subset size in Fig. 10. These data are taken from the measurements at the final ring translation increment of 0.762 mm, showing uncertainty band size plotted against the common physical subset size for the LR, benchmark, and SR images. At each comparable physical subset size, the SR algorithms all have smaller spatial variation, giving greater confidence in the measurement. Across all subset sizes, the LR Average benchmark showed a reduction in the band size by a factor of about 3, which aligns with the expectation that averaging N images can reduce noise by a factor of √N [51] (here N = 9, so √N = 3). Interestingly, the HR Interpolation benchmark data showed nearly the same band size as the LR Average at the same physical subset size, despite having 4 times as many pixels per subset. This suggests that the added resolution through single image interpolation provides ‘empty magnification’ without adding useful information to the LR Average image. In contrast, the SR uncertainty bands are smaller than those of the benchmark data at the same subset sizes. This difference becomes more pronounced as subset size increases, with PG and RSR showing improvement by a factor of roughly 2 at the largest several subset sizes. This reduction of spatial variation seems to be due to more than just the averaging effect on image noise. As one of the main contributors to spatial variation errors is pattern-induced bias error [52], this may indicate that SR algorithms can improve the quality of the pattern captured.
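The √N expectation for the LR Average benchmark can be checked with a small simulation. The sketch below is not the procedure used in this work: it assumes independent, zero-mean Gaussian noise on each pixel, and the function name, noise level, pixel count, and random seed are arbitrary choices. It models gray-level noise in the averaged image rather than the DIC uncertainty bands themselves.

```python
import random
from statistics import pstdev


def noise_reduction_factor(n_images=9, n_pixels=20000, sigma=2.0, seed=0):
    """Ratio of single-image noise to the noise left after averaging n_images."""
    rng = random.Random(seed)
    # Noise in one image: one Gaussian sample per pixel.
    single = [rng.gauss(0.0, sigma) for _ in range(n_pixels)]
    # Noise in the averaged image: mean of n_images independent samples per pixel.
    averaged = [
        sum(rng.gauss(0.0, sigma) for _ in range(n_images)) / n_images
        for _ in range(n_pixels)
    ]
    return pstdev(single) / pstdev(averaged)


factor = noise_reduction_factor()   # close to sqrt(9) = 3 under these assumptions
```

Under these assumptions, averaging the 9 images of a set lowers the noise standard deviation by a factor close to 3, consistent with the reduction seen for the LR Average benchmark.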

Also of note in Fig. 10 is the lower limit to subset size; data are left off the plot once the image no longer correlates. The RSR algorithm has a subset size lower limit of 21 pixels, the same as that of the LR images. However, this 21-pixel RSR subset covers a quarter of the area that the 21-pixel LR subset does, allowing much finer strain resolution. Although the LR Average offers a reduction in noise over the original LR images, its lower limit is only slightly improved, from 21 to 19 pixels. When considering the actual physical subset size, the lower limit of the HR Interpolation is better than the PG and SANC algorithms but worse than the RSR. This superior range of subset size, combined with superior spatial variation error, clearly demonstrates the advantages the RSR algorithm holds over the other methods.
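The relation between pixel subset size and physical subset size can be sketched as follows. The function name and the LR pixel size are hypothetical, chosen only for illustration. The 21-pixel subset and the resolution increase by a factor of two follow the text.

```python
def physical_subset_size_mm(subset_px, lr_pixel_size_mm, sr_factor=1):
    """Side length of a square subset in mm.

    sr_factor is the linear resolution increase relative to the LR image
    (1 for LR images, 2 for the SR images described here).
    """
    return subset_px * lr_pixel_size_mm / sr_factor


LR_PIXEL_SIZE_MM = 0.05   # hypothetical physical size of one LR pixel

lr_side = physical_subset_size_mm(21, LR_PIXEL_SIZE_MM)
rsr_side = physical_subset_size_mm(21, LR_PIXEL_SIZE_MM, sr_factor=2)
area_ratio = (rsr_side / lr_side) ** 2   # 0.25: a quarter of the LR subset area
```

Because each SR pixel spans half the physical length of an LR pixel, a subset with the same pixel count covers half the side length and a quarter of the area, regardless of the assumed pixel size.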

Mechanical Deformation Test

The mechanical deformation of the ring in this test demonstrated the capabilities of the LR and SR methods to measure heterogeneous strain fields. The results show similar displacement contours between the LR and SR images, as seen in Fig. 11. In each case, the ring exhibits a greater displacement gradient on the inside edge of the ring compared with the outer edge. This is consistent with higher strains at the inner edge as the curved ring is stretched and straightened, which is the expected behavior in this loading case. This distribution seems to match up well between the LR and SR. There are slight differences between the contours, however, as both the RSR and SANC appear to show slightly greater displacements on the top of the inner edge of the ring than the LR. The ‘higher resolution’ zoom lens image shows slightly higher displacements than the SR at the same location on the inner edge. However, the fact that the SR contours are slightly closer to the more accurate Zoom contour should not overshadow the reality that all methods produced very similar results.

The subsets shown in the bottom of Fig. 11 do give some insight into the differences between the various methods. The LR subset shows a typically pixelated speckle pattern. The RSR subset also looks pixelated, although there is a difference in the gray levels of the speckle borders. This seems to indicate a more gradual transition from the dark interior of the speckle to the lighter background. A similar effect is seen in the SANC subset, except that these transition zones are much smoother, leaving speckles that are more ‘bloblike’ than blocky. Neither of the SR methods approached the clarity of speckle offered by the Zoom lens with roughly 15 times the resolution of the LR images, as seen in the Zoom subset.

Pairing of Zoom Lens with Super Resolution

The use of the zoom lens in the mechanical deformation test served as a standard of comparison for the SR algorithms, demonstrating that SR is equally capable in deformation measurements, if not offering slight improvement. It follows that pairing the two methods of higher resolution, a zoom lens and SR techniques, could produce further improvement. That possibility was evaluated by combining multiple zoom lens images at each grip displacement increment using the RSR and SANC algorithms, and then using Vic-2D to produce DIC strain contours. The RSR contour failed to correlate, but the SANC contour is shown in Fig. 14.

Fig. 14 DIC displacement field in the direction of loading at final grip displacement increment for the combination of the zoom lens and SR. The SANC algorithm with an interpolation factor of 1.5 was used. A representative subset is overlaid on the portion of the speckle pattern shown below the contour. As with Fig. 11, the contrast was improved through MATLAB’s histogram equalization for visualization of speckles, but only the un-altered versions were used in DIC calculations

The contour shows good agreement with the LR zoom lens results of Fig. 11, with similar distributions of displacements at comparable locations. However, it should be noted that the same speckle pattern is on the ring for the original LR images and these SR zoom images, meaning that the speckle size and length scale of the pattern is ill-suited for such an increase in resolution, as can be seen in the subset of Fig. 14. Because the zoom lens’ field of view is roughly 15 times smaller than the lower magnification lens, and because an interpolation factor of 1.5 was used for the SR zoom images, the pixel size of speckles in Fig. 14 is approximately 23 times larger than in the LR contours of Fig. 11. As such, the results of the SR zoom images are limited by the speckle pattern. This is expected to be the reason that the RSR zoom images failed to correlate in the DIC software. However, this does highlight that SR can be paired with lenses of varying magnification, as long as the length scale of the speckle pattern is appropriate for the image resolution.
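The factor of approximately 23 follows from multiplying the two magnification steps. Written out, with s denoting the speckle size in pixels (a symbol introduced here only for illustration):

```latex
\[
\frac{s_{\text{SR zoom}}}{s_{\text{LR}}}
  \approx \underbrace{15}_{\text{zoom lens}} \times \underbrace{1.5}_{\text{SR interpolation}}
  = 22.5 \approx 23
\]
```

A speckle sized for the LR images therefore spans roughly 23 times as many pixels in the SR zoom images.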

Summary of Algorithm Performance

Through the three tests, differences in performance between traditional LR images and the 3 distinct SR alternatives became apparent. The initial comparison with the one-way sign showed clear improvement from the LR images for SANC, and to a lesser degree the PG and RSR also showed some improvement. However, none perfectly replicated the original HR image. Rigid body translation began to show problems with pairing PG with a DIC speckle pattern, dropping some of the translations. The minimal error in both RSR and SANC measurements showed good accuracy, although the uncertainty bands of SANC showed better consistency than was offered by the RSR algorithm. Conversely, RSR showed the smallest physical subset size, offering the best spatial resolution of the displacement measurement and the lowest spatial variation error. In the mechanical deformation, the issues with PG and DIC became very clear, causing all images to fail to correlate. In pairing SR with the zoom lens, the SANC image set was the only one of the three which was able to successfully correlate to the final deformation (Table 4).

Conclusion

In summary, the application of super resolution (SR) algorithms was investigated as a method of increasing the resolution of DIC strain measurements for samples at long working distances. Comparisons between Robust Super Resolution (RSR), Papoulis-Gerchberg (PG), and Structure Adaptive Normalized Convolution (SANC) were evaluated through three tests: visual inspection, rigid body translation, and mechanical deformation experiments. The first test demonstrated that all three algorithms produce images which have some improvement visually over the LR images they are constructed from, with SANC performing best. The second test showed significant improvement offered by all three algorithms in measurement accuracy, precision, and spatial variation error, with RSR performing the best and PG failing to correlate in some cases. The final test again showed improvement in spatial resolution when using SR methods, and RSR and SANC performed equally well. With all three tests considered, SANC seems the algorithm best suited for SR-DIC among those investigated in this work, although RSR performs nearly as well and computes much more quickly.

When increasing resolution through SR methods, SR-DIC measurements show improvement over the original LR images, although they do not show the same improvement that would be expected from increasing to the same resolution through improved optics. There is some increase in computational time when taking these measurements, and the time required to capture multiple images makes it better suited for quasi-static in-situ experiments. Tests which most benefit from the use of SR techniques are those in which long working distances and small sample size prevent DIC from providing high resolution strain information across an entire region of interest.

References

Sutton MA, Orteu JJ, Schreier H (2009) Image Correlation for Shape, Motion and Deformation Measurements: Basic Concepts, Theory and Applications. Springer Science & Business Media

Hild F, Roux S (2006) Digital Image Correlation: from Displacement Measurement to Identification of Elastic Properties – a Review. Strain 42(2):69–80. https://doi.org/10.1111/j.1475-1305.2006.00258.x

Lin Y, Lan Z (2010) “Sub-pixel displacement measurement in Digital Image Correlation using Particle Swarm Optimization,” in 2010 International Conference on Information, Networking and Automation (ICINA) 2: V2–497-V2–501. https://doi.org/10.1109/ICINA.2010.5636461

Reu P (2015) Virtual strain gage size study. Exp Tech 39(5):1–3. https://doi.org/10.1111/ext.12172

LePage WS, Daly SH, Shaw JA (2016) Cross Polarization for Improved Digital Image Correlation. Exp Mech 56(6):969–985. https://doi.org/10.1007/s11340-016-0129-2

Berfield TA, Patel JK, Shimmin RG, Braun PV, Lambros J, Sottos NR (2007) Micro- and Nanoscale Deformation Measurement of Surface and Internal Planes via Digital Image Correlation. Exp Mech 47(1):51–62. https://doi.org/10.1007/s11340-006-0531-2

Kammers AD, Daly S (2013) Self-Assembled Nanoparticle Surface Patterning for Improved Digital Image Correlation in a Scanning Electron Microscope. Exp Mech 53(8):1333–1341. https://doi.org/10.1007/s11340-013-9734-5

Maraghechi S, Hoefnagels JPM, Peerlings RHJ, Rokoš O, Geers MGD (2019) Correction of Scanning Electron Microscope Imaging Artifacts in a Novel Digital Image Correlation Framework. Exp Mech 59(4):489–516. https://doi.org/10.1007/s11340-018-00469-w

Carroll J, Abuzaid W, Lambros J, Sehitoglu H (2010) An experimental methodology to relate local strain to microstructural texture. Rev Sci Instrum 81(8):083703. https://doi.org/10.1063/1.3474902

Abuzaid W, Patriarca L (2020) A study on slip activation for a coarse-grained and single crystalline CoCrNi medium entropy alloy. Intermetallics 117:106682. https://doi.org/10.1016/j.intermet.2019.106682

Chen Z, Lenthe W, Stinville JC, Echlin M, Pollock TM, Daly S (2018) High-Resolution Deformation Mapping Across Large Fields of View Using Scanning Electron Microscopy and Digital Image Correlation. Exp Mech 58(9):1407–1421. https://doi.org/10.1007/s11340-018-0419-y

Stinville JC, Echlin MP, Texier D, Bridier F, Bocher P, Pollock TM (2016) Sub-Grain Scale Digital Image Correlation by Electron Microscopy for Polycrystalline Materials during Elastic and Plastic Deformation. Exp Mech 56(2):197–216. https://doi.org/10.1007/s11340-015-0083-4

Tasan CC, Hoefnagels JPM, Diehl M, Yan D, Roters F, Raabe D (2014) Strain localization and damage in dual phase steels investigated by coupled in-situ deformation experiments and crystal plasticity simulations. Int J Plast 63:198–210. https://doi.org/10.1016/j.ijplas.2014.06.004

Zhao Z, Ramesh M, Raabe D, Cuitiño AM, Radovitzky R (2008) Investigation of three-dimensional aspects of grain-scale plastic surface deformation of an aluminum oligocrystal. Int J Plast 24(12):2278–2297. https://doi.org/10.1016/j.ijplas.2008.01.002

Merzouki T, Collard C, Bourgeois N, Ben Zineb T, Meraghni F (2010) “Coupling between measured kinematic fields and multicrystal SMA finite element calculations,” Mech Mater 42(1):72–95. https://doi.org/10.1016/j.mechmat.2009.09.003

Pataky GJ, Sehitoglu H (2015) Experimental Methodology for Studying Strain Heterogeneity with Microstructural Data from High Temperature Deformation. Exp Mech 55(1):53–63. https://doi.org/10.1007/s11340-014-9926-7

Bumgardner C, Croom B, Li X (2017) High-temperature delamination mechanisms of thermal barrier coatings: In-situ digital image correlation and finite element analyses. Acta Mater 128:54–63. https://doi.org/10.1016/j.actamat.2017.01.061

Hansen RS, Bird TJ, Voie R, Burn KZ, Berke RB (2019) A high magnification UV lens for high temperature optical strain measurements. Rev Sci Instrum 90(4):045117. https://doi.org/10.1063/1.5081899

Jain D (2005) “Superresolution using Papoulis-Gerchberg algorithm”, EE392J-Digital Video Processing. Stanford University, CA

Gerchberg RW (1974) Super-resolution through Error Energy Reduction. Opt Acta Int J Opt 21(9):709–720. https://doi.org/10.1080/713818946

Tsai RY, Huang TS (1984) Advances in Computer Vision and Image Processing. Proc Inst Elect Eng 1:317–339

Agard DA, Steinberg RA, Stroud RM (1981) Quantitative analysis of electrophoretograms: A mathematical approach to super-resolution. Anal Biochem 111(2):257–268. https://doi.org/10.1016/0003-2697(81)90562-5

Fienup JR (1980) Iterative Method Applied To Image Reconstruction And To Computer-Generated Holograms. Opt Eng 19(3):193297. https://doi.org/10.1117/12.7972513

Mahapatra D, Bozorgtabar B, Hewavitharanage S, Garnavi R (2017) “Image Super Resolution Using Generative Adversarial Networks and Local Saliency Maps for Retinal Image Analysis”, in Medical Image Computing and Computer Assisted Intervention − MICCAI. Cham 2017:382–390. https://doi.org/10.1007/978-3-319-66179-7_44

Zhang Y, Wu Y, Zhang Y, Ozcan A (2016) Color calibration and fusion of lens-free and mobile-phone microscopy images for high-resolution and accurate color reproduction. Sci Rep 6(1):1–14. https://doi.org/10.1038/srep27811

Wronski B et al. (2019) “Handheld multi-frame super-resolution,” ACM Trans. Graph. TOG 38(4):28:1–28:18. https://doi.org/10.1145/3306346.3323024

Ledig C et al. (2017) “Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR)105–114. https://doi.org/10.1109/CVPR.2017.19

Zhang L, Zhang H, Shen H, Li P (2010) A super-resolution reconstruction algorithm for surveillance images. Signal Process 90(3):848–859. https://doi.org/10.1016/j.sigpro.2009.09.002

Kawulok M et al. (2019) “Deep learning for fast super-resolution reconstruction from multiple images,” in Real-Time Image Processing and Deep Learning 2019, 10996: 109960B. https://doi.org/10.1117/12.2519579

Zomet A, Rav-Acha A, Peleg S (2001) “Robust super-resolution,” in Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001, 1: I–I. https://doi.org/10.1109/CVPR.2001.990535

Papoulis A (1975) A new algorithm in spectral analysis and band-limited extrapolation. IEEE Trans Circuits Syst 22(9):735–742. https://doi.org/10.1109/TCS.1975.1084118

Pham TQ, van Vliet LJ, Schutte K (2006) Robust Fusion of Irregularly Sampled Data Using Adaptive Normalized Convolution. EURASIP J Adv Signal Process 2006(1):083268. https://doi.org/10.1155/ASP/2006/83268

Schultz RR, Meng L, Stevenson RL (1998) Subpixel Motion Estimation for Super-Resolution Image Sequence Enhancement. J Vis Commun Image Represent 9(1):38–50. https://doi.org/10.1006/jvci.1997.0370

Capel D, Zisserman A (2003) Computer vision applied to super resolution. IEEE Signal Process Mag 20(3):75–86. https://doi.org/10.1109/MSP.2003.1203211

Reddy BS, Chatterji BN (1996) An FFT-based technique for translation, rotation, and scale-invariant image registration. IEEE Trans Image Process 5(8):1266–1271. https://doi.org/10.1109/83.506761

Vandewalle P, Susstrunk SE, Vetterli M (2003) “Superresolution images reconstructed from aliased images,” in Visual Communications and Image Processing 2003, 5150: 1398–1405. https://doi.org/10.1117/12.506874

Foroosh H, Zerubia JB, Berthod M (2002) Extension of phase correlation to subpixel registration. IEEE Trans Image Process 11(3):188–200. https://doi.org/10.1109/83.988953

Vandewalle P, Süsstrunk S, Vetterli M (2006) A Frequency Domain Approach to Registration of Aliased Images with Application to Super-resolution. EURASIP J Adv Signal Process 2006(1):071459. https://doi.org/10.1155/ASP/2006/71459

Fischler MA, Bolles RC (1981) Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun ACM 24(6):381–395. https://doi.org/10.1145/358669.358692

Keren D, Peleg S, Brada R (1988) “Image sequence enhancement using sub-pixel displacements,” Proc CVPR 88 Comput Soc Conf Comput Vis Pattern Recognit 742–746. https://doi.org/10.1109/CVPR.1988.196317

Irani M, Rousso B, Peleg S (1994) Computing occluding and transparent motions. Int J Comput Vis 12(1):5–16. https://doi.org/10.1007/BF01420982

Gluckman J (2003) “Gradient field distributions for the registration of images,” in Proceedings 2003 International Conference on Image Processing (Cat. No.03CH37429) 2: II–691. https://doi.org/10.1109/ICIP.2003.1246774

Irani M, Peleg S (1990)“Super resolution from image sequences,” in 10th International Conference on Pattern Recognition [1990] Proceedings 2(2)115–120. https://doi.org/10.1109/ICPR.1990.119340

Shechtman Y, Weiss LE, Backer AS, Lee MY, Moerner WE (2016) “Multicolour localization microscopy by point-spread-function engineering,” Nat Photonics 10(9):9. https://doi.org/10.1038/nphoton.2016.137

Chatterjee P, Mukherjee S, Seetharaman G “Application of Papoulis-Gerchberg Method in Image Super-resolution and Inpainting.”

Giansiracusa M, Ezekiel S, Raquepas J, Blasch E, Thomas M (2016) “A comparative study of multi-scale image super-resolution techniques,” in 2016 IEEE Applied Imagery Pattern Recognition Workshop (AIPR) 1–7. https://doi.org/10.1109/AIPR.2016.8010598

Correlated Solutions (2009) Vic-2D User Manual. Irmo, South Carolina

Pan B, Xie H, Wang Z, Qian K, Wang Z (2008) Study on subset size selection in digital image correlation for speckle patterns. Opt Express 16(10):7037–7048. https://doi.org/10.1364/OE.16.007037

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612. https://doi.org/10.1109/TIP.2003.819861

Thai TQ, Hansen RS, Smith AJ, Lambros J, Berke RB (2019) Importance of Exposure Time on DIC Measurement Uncertainty at Extreme Temperatures. Exp Tech 43(3):261–271. https://doi.org/10.1007/s40799-019-00313-3

Gao Z, Xu X, Su Y, Zhang Q (2016) Experimental analysis of image noise and interpolation bias in digital image correlation. Opt Lasers Eng 81:46–53. https://doi.org/10.1016/j.optlaseng.2016.01.002

Fayad SS, Seidl DT, Reu PL (2020) Spatial DIC Errors due to Pattern-Induced Bias and Grey Level Discretization. Exp Mech 60(2):249–263. https://doi.org/10.1007/s11340-019-00553-9

Acknowledgements

This material is based upon work supported under an Integrated University Program Graduate Fellowship. Any opinions, findings, conclusions or recommendations expressed in this publication are those of the author and do not necessarily reflect the views of the Department of Energy Office of Nuclear Energy.

Ethics declarations

Conflict of Interests

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hansen, R.S., Waldram, D.W., Thai, T.Q. et al. Super Resolution Digital Image Correlation (SR-DIC): an Alternative to Image Stitching at High Magnifications. Exp Mech 61, 1351–1368 (2021). https://doi.org/10.1007/s11340-021-00729-2

DOI: https://doi.org/10.1007/s11340-021-00729-2