Abstract

Principles of typical motion planning algorithms are investigated and analyzed in this paper. These algorithms include traditional planning algorithms, classical machine learning algorithms, optimal value reinforcement learning, and policy gradient reinforcement learning. Traditional planning algorithms investigated include graph search algorithms, sampling-based algorithms, interpolating curve algorithms, and reaction-based algorithms. Classical machine learning algorithms include multiclass support vector machine, long short-term memory, Monte-Carlo tree search and convolutional neural network. Optimal value reinforcement learning algorithms include Q learning, deep Q-learning network, double deep Q-learning network, dueling deep Q-learning network. Policy gradient algorithms include policy gradient method, actor-critic algorithm, asynchronous advantage actor-critic, advantage actor-critic, deterministic policy gradient, deep deterministic policy gradient, trust region policy optimization and proximal policy optimization. New general criteria are also introduced to evaluate the performance and application of motion planning algorithms by analytical comparisons. The convergence speed and stability of optimal value and policy gradient algorithms are specially analyzed. Future directions are presented analytically according to principles and analytical comparisons of motion planning algorithms. This paper provides researchers with a clear and comprehensive understanding about advantages, disadvantages, relationships, and future of motion planning algorithms in robots, and paves ways for better motion planning algorithms in academia, engineering, and manufacturing.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Intelligent robot, nowadays, is serving people from different backgrounds in dense and dynamic shopping malls, train stations and airports (Bai et al., 2015) like Daxin in Beijing and Changi in Singapore. Intelligent robots guide pedestrians to find coffee house, departure gates and exits via accurate motion planning, and assist pedestrians in luggage delivery. Another example of intelligent robot is the parcel delivery robot from e-commercial tech giants like JD in China and Amazon in US. Researchers in tech giants make it possible for robots to autonomously navigate themselves and avoid dynamic and uncertain obstacles via applying motion planning algorithms to accomplish parcel delivery tasks. In short, intelligent robot gradually plays a significant role in service industry, agricultural production, manufacture industry and dangerous scenarios like nuclear radiation environment to replace human manipulation, therefore the risk of injury is reduced, and efficiency is improved.

Research of motion planning is going through a flourishing period, due to development and popularity of deep learning (DL) and reinforcement learning (RL) that have better performance in coping with non-linear problems with complexity. The complexity of these problems generally refers to the uncertainty, ambiguity, and incompleteness (Cai et al., 2017), especially the uncertainty that is the most challenging issue in robotic motion planning. Many universities, tech giants, and research groups all over the world therefore attach much importance, time, and energy on developing new motion planning techniques by applying DL algorithms or integrating traditional motion planning algorithms with advanced machine learning (ML) algorithms. Autonomous vehicle is an example. Among tech giants, Google initiated their self-driving project named Waymo in 2016 (Samuel., 2017). In 2017, Tesla pledged a fully self-driving capable vehicle (Bilbeisi & Kesse, 2017). Autonomous car from Baidu had been tested successfully in highways of Beijing in 2017 (Fan et al., 2018), and man-manipulated buses had already been replaced by autonomous buses from Huawei in some specific areas of Shenzhen. Other companies in traditional vehicle manufacturing, like Audi and Toyota, also have their own experimental autonomous vehicles. Among research institutes and universities, Navlab (navigation lab) of Carnegie Mellon, Oxford University and MIT are leading research institutes. Up to 2020, European countries like Belgium, France, Italy, and UK are planning to operate transport systems for autonomous vehicles. Twenty-nine US states had passed laws in permitting autonomous vehicles. Autonomous vehicle is therefore expected to widely spread in near future with improvement of traffic laws.

Motion planning and robotic platform Robots use motion planning algorithms to plan their trajectories both at global and local level. Human-like and dog-like robots from Boston Dynamic and autonomous robotic car from MIT (Everett et al., 2018) are good examples. All of them leverage motion planning algorithms to enable robots to freely walk in dense and dynamic scenarios both indoor and outdoor. Chassis of robots has two types of wheels, including Ackerman-type wheel and differential wheel (Fig. 1).

Three types of robotic platform. The first and second figures represent wheel-based chassis (Minguez et al., 2008). The first figure represents an Ackerman-type (car-like) chassis, while the second figure represents a differential-wheel chassis. The third and fourth figures represent four-leg dog “SpotMini” from Boston Dynamic and the robotic arm (Meyes et al., 2017)

In Ackerman-type robots, two front wheels steer the robot, while two rear wheels drive the robot. The Ackerman-type chassis has two servos. Two front wheels share a same servo, and it means these two wheels can steer with a same steering angle or range φ (Fig. 1). Two rear wheels share another servo to control the speed of robots. The robot using differential wheel, however, is completely different with Ackerman-type robot in functions of servo. The chassis with differential wheels generally has two servos, and each wheel is controlled by one servo for forwarding. Steering is realized by giving different speeds to each wheel. Steering range in Ackerman-type robots is limited because two front wheels steer with a same angle φ. The Ackerman-type wheel is therefore suitable to be used in high-speed outdoor scenarios because of stability. Robots with differential wheels, however, can steer in an \(angle\in (\mathrm{0,2}\pi ]\), and it means robots can change their yaw solely without changing their position (x, y). Robots with differential wheels are also sensitive to the speed difference of two front wheels. The sensitivity depends on the rate of the gearing steer mechanism that yields the speed reduction and angular moment rotation. It means it is flexible to move in low-speed indoor scenarios but very dangerous to move in high-speed situations if something wrong in the speed control of two front wheels, because little speed changes of two front wheels in differential chassis can be exaggerated and accident follows.

It is popular to use legs in the chassis of robots in recent years. Typical examples are human-like and animal-like (dog-like, Fig. 1) robots from Boston Dynamic. The robotic arm (Fig. 1) is also a popular platform to deploy motion planning algorithms. In summary, wheels, arms, and legs are choices of chassis to implement motion planning algorithms which are widely used in academic and industrial scenarios including commercial autonomous driving, service robot, surgery robot and industrial arms.

Architecture of robots Classical hierarchical robotic architecture (Meystel, 1990) in Fig. 2a is composed by three stages: sense, plan and act (Murphy, 2000). Robots with this architecture can be successfully used in simple applications. It can generate long-term action plans, however, researchers are unsatisfied with the slow speed of this architecture in the update of world model and navigation plan, when coping with the environment with uncertainty. Reactive architecture (Brooks, 1986) in Fig. 2b, therefore, is introduced to cope with uncertain scenarios. Reactive architecture is designed to output instant response by the sense-act structure (Murphy, 2000). Reactive strategies or algorithms (e.g., potential fields) originate from the intuitive response of animals, and they are computationally inexpensive. However, the robot based on reactive architecture is short-sighted. It cannot generate long-term plans to fulfill challenging tasks. Hybrid deliberative/reactive architecture in Fig. 2c fuses advantages of hierarchical and reactive architectures, and it is also successfully used in autonomous robots (Arkin et al., 1987; Murphy, 2000). Hybrid deliberative/reactive architecture uses the deliberative layer to realize high-level long-term planning, while the reactive layer is used to realize local reactive planning. Hybrid deliberative/reactive architecture is still widely used in robots nowadays. A typical example is that: (1) in deliberative layer, world maps of the environment are constructed using information from sensors like the light detection and ranging (liDAR). Planning algorithms (e.g., A*) then plan high-level paths; (2) in reactive layer, reactive strategies, like dynamic window approach (DWA) for local planning and PID for speed control, are used to make local planning or instant reactions to cope with dynamic and uncertain scenarios. Finally, high-level planning, local planning or instant reactions are evaluated in the behavior manager to generate a better combined planning.

However, autonomous robotic architecture is evolving towards a simple architecture in Fig. 2d with the development of DL and RL algorithms in recent years. For example, in recent works (Chen et al., 2016, 2019, 2020; Everett et al., 2018; Long et al., 2018): (1) The goal’s information (e.g., position of goals), sensor’s information (e.g. distances to other robots) and attributes of robots (e.g., radius) are combined to form the features of robots; (2) More features of robots are obtained by interacting with the environment, and feedbacks (rewards) are obtained accordingly; (3) These features are recorded by the networks as the world model that will be updated according to feedbacks, and it is followed by obtaining a converged model; (4) Previous procedures are defined as the trainer to obtain the world model, and then time-sequential actions are generated in the navigator by performing the trained world model to navigate robots to destinations; (5) However, these time-sequential actions cannot be recognized by the actuators of robots (e.g., motor), therefore it is necessary to use the parser to parse them to proper formats that can be executed by actuators. This architecture of current autonomous robots can be simply described as five functional modules: feature extraction, environment perception, environment understanding, time-sequential navigation, and decision execution. It can be also simply divided into three stages: sense and train, plan, and act.

The advantages of recent autonomous robotic architecture are the simplicity, safety, and efficiency. Unlike Fig. 2c, there is no clear boundary between deliberative and reactive layers in Fig. 2d. Time-sequential planning can realize long-term planning goals, local planning goals or quick response with safety (e.g., safe distances to other objects) and efficiency (e.g., shortest path, shortest time) at the same time. Disadvantages of recent autonomous robotic architecture are expensive computation cost and poor network convergence especially when networks are trained with data from large outdoor scenarios.

Motion planning and path planning Motion planning is the extension of path planning. They are almost the same term, but few differences exist. For example, path planning aims at finding the path between the origin and destination in workspace by strategies like shortest distance or shortest time (Fig. 3), therefore path is planned from the global metric or topological level. Motion planning, however, aims at generating interactive trajectories in workspace when robots interact with dynamic environment, therefore motion planning needs to consider kinetics features, velocities and poses of robots and dynamic objects nearby (Fig. 3) when robots move towards the goal. Note that workspace here is an area where an algorithm works, or the task exists.

Path planning and motion planning. The left figure denotes a planned path based on shortest distance and time, and path is generated from high or global level. The right figure denotes famous piano mover’s problem that not only consider planning a path from global level, but also consider kinetics features, speeds and poses of the piano

To conclude, on one hand, motion planning must consider short-term optimal or suboptimal reactive strategies to make instant or reactive response. This is achieved by rotary or linear control in hardware (e.g., motor, servo) from the perspective of robotic and control engineering. On the other hand, motion planning should achieve long-term optimal planning goals as path planning when robots interact with the environment.

Classification of planning algorithms Robotic planning algorithms can be divided into two categories: traditional algorithms and ML-based algorithms according to their principles and the era they were invented. Traditional algorithms are composed by four groups including graph search algorithms (e.g., A*), sampling-based algorithms like rapidly-exploring random tree (RRT), interpolating curve algorithms (e.g., line and circle), and reaction-based algorithms (e.g., DWA). ML based planning algorithms include classical ML algorithms like support vector machine (SVM), optimal value RL like deep Q-learning network (DQN) and policy gradient RL (e.g., actor-critic algorithm). Categories of planning algorithms are summarized in Fig. 4.

Development of ML-based algorithms Classical ML, like SVM, are used to implement simple motion planning at an earlier stage, but its performance is poor because SVM is short-sighted for its one-step prediction. It requires well-prepared vector as inputs that cannot fully represent features of image-based dataset. Significant improvement to extract high-level features from images were made after the invention of convolutional neural network (CNN) (Lecun et al., 1998). CNN is widely used in many image-related tasks including motion planning, but it cannot cope with complex time-sequential motion planning problems. These better suit Markov chain (Chan et al., 2012) and long short-term memory (LSTM) (Inoue et al., 2019). Neural networks are then combined with LSTM or algorithms that are based on Markov chain (e.g., Q learning (Smart & Kaelbling, 2002)) to implement time-sequential motion planning. However, the efficiency is limited (e.g., poor performance in network convergence). A breakthrough was made when Google DeepMind introduced nature DQN (Mnih et al., 2013, 2015), in which reply buffer is to reuse old data to improve the efficiency. Performance in robustness, however, is limited because of noise that impacts the estimation of state-action value (Q value). Double DQN (Hasselt et al., 2016; Sui et al., 2018) and dueling DQN (Wang et al., 2015) are therefore invented to cope with problems caused by noise. Double DQN utilizes another network to evaluate the estimation of Q value in DQN to reduce noise, while advantage value (A value) is utilized in dueling DQN to obtain better Q value, and noise is mostly reduced. The Q learning, DQN, double DQN and dueling DQN are all based on optimal values (Q value and A value) to select optimal time-sequential actions. These algorithms are therefore called optimal value algorithms. Implementation of optimal value algorithms, however, is computationally expensive.

Optimal value algorithms are latter replaced by policy gradient method (Sutton et al., 1999), in which gradient approach (Zhang, 2019) is directly utilized to upgrade policy that is used to generate optimal actions. Policy gradient method is more stable in network convergence, but it lacks efficiency in speed of network convergence. Actor-critic algorithm ((Cormen et al., 2009; Konda & Tsitsiklis, 2001)) improves the speed of convergence by the actor-critic architecture. However, improvement in convergence speed is achieved by sacrificing the stability of convergence, therefore the network of actor-critic algorithm is hard to converge in earlier-stage training. Asynchronous advantage actor-critic (A3C) (Gilhyun, 2018; Mnih et al., 2016), advantage actor-critic (A2C)Footnote 1 (Babaeizadeh et al., 2016), trust region policy optimization (TRPO) (Schulman et al., 2017a) and proximal policy optimization (PPO) (Schulman et al., 2017b) algorithms are then invented to cope with this shortcoming. Multi-thread technique (Mnih et al., 2016) is utilized in A3C and A2C to accelerate the speed of convergence, while TRPO and PPO improve the policy of actor-critic algorithm by introducing trust region constraint in TRPO, and “surrogate” and adaptive penalty in PPO to improve the speed and stability of convergence. Data, however, is dropped after training, and new data must therefore be collected to train the network until convergence of network.

Off-policy gradient algorithms including deterministic policy gradient (DPG) (Silver et al., 2014) and deep DPG (DDPG) ((Lillicrap et al., 2019; Munos et al., 2016)) are invented to reuse data by replay buffer. DDPG fuses the actor-critic architecture and deterministic policy to enhance the convergence speed. In summary, classical ML, optimal value RL, and policy gradient RL are typical ML algorithms in robotic motion planning, and the development of these ML-based motion planning algorithms is shown in Fig. 5.

Development of ML-based robotic motion planning algorithms. These algorithms evolve from classical ML to optimal value RL and policy gradient RL. Classical ML cannot address time-sequential planning problem but RL copes with it well. Optimal value RL suffers slow and unstable convergence speed but policy gradient RL performs better in network convergence

In this paper, state-of-art ML-based algorithms are investigated and analyzed to provide researchers with a comprehensive and clear understanding about functions, structures, advantages, and disadvantages of planning algorithms. We also summarize new criteria to evaluate the performance of planning algorithms. Potential directions for making practical optimization in motion planning algorithms are discussed simultaneously. Contributions of this paper include: (1) Survey of traditional planning algorithms. (2) Detailed investigations of classical ML, optimal value RL and policy gradient RL for robotic motion planning. (3) Analytical comparisons of these algorithms according to new evaluation criteria; (4) Analysis of future directions.

This paper is organized as follows: Sects. “Traditional planning algorithms”, “Classical ML”, “Optimal value RL” and “Policy gradient RL” present principles and applications of traditional planning algorithms, classical ML, optimal value RL and policy gradient RL in robotic motion planning; section VI presents analytical comparisons of these algorithms, and criteria for performance evaluation; section VII analyzes future directions of robotic motion planning.

Traditional planning algorithms

Traditional planning algorithms can be divided into four groups: graph-search, sampling-based, interpolating curve, and reaction-based algorithms. They will be described in detail in the following sections.

Graph-search algorithms

Graph-search algorithms can be divided into depth-first search, breadth-first search, and best-first search (Dijkstra, 1959). The depth-first search algorithm builds a search tree as deep and fast as possible from the origin to destination until a proper path is found. The breadth-first search algorithm shares similarities with the depth-first search algorithm by building a search tree. The search tree in the breadth-first search algorithm, however, is accomplished by extending the tree as broad and quick as possible until a proper path is found. The best-first search algorithm adds a numerical criterion (value or cost) to each node and edge in the search tree. According to that, the search process is guided by calculation of values in the search tree to decide: (1) whether the search tree should be expanded; (2) which branch in the search tree should be extended. The process of building search trees repeats until a proper path is found. Graph search algorithms are composed by many algorithms. The most popular are Dijkstra’s algorithm (Dijkstra, 1959) and A* algorithm (Hart et al., 1968).

Dijkstra’s algorithm is one of earliest optimal algorithms based on best-first search technique to find the shortest paths among nodes in a graph. Finding the shortest paths in a road network is a typical example. Steps of the Dijkstra algorithm (Fig. 6) include: (1) converting the road network to a graph, and distances between nodes in the graph are expected to be found by exploration; (2) picking the unvisited node with the lowest distance from the source node; (3) calculating the distance from the picked node to each unvisited neighbor and update the distance of all neighbor nodes if the distance to the picked node is smaller than the previous distance; (4) marking the visited node when the calculation of distance to all neighbors is done. Previous steps repeat until the shortest distance between origin and destination is found. Dijkstra’s algorithm can be divided into two versions: forward version and backward version. Calculation of overall cost in the backward version, called cost-to-come, is accomplished by estimating the minimum distance from selected node to destination, while estimation of overall cost in the forward version, called cost-to-go, is realized by estimating the minimum distance from selected node to the initial node. In most cases, nodes are expanded according to the cost-to-go.

Steps of the Dijkstra algorithm (a) and road networks in web maps (b) (Indrajaya et al., 2015; Mariescu & Franti., 2018). Web maps are based on GPS data. Road network is mapped into the graph that is composed by nodes and edges, therefore graph search algorithms like A* and Dijkstra’s algorithms can be used in these graphs

A* algorithm is based on the best-first search, and it utilizes heuristic function to find the shortest path by estimating the overall cost. The algorithm is different from the Dijkstra’s algorithm in the estimation of the path cost. The cost estimation of a node i in a graph by A* is as follows: (1) estimate the distance between the initial node and node i; (2) find the nearest neighbor j of the node I; and estimate the distance of nodes j and i; (3) estimate the distance between the node j and the goal node. The overall estimated cost is the sum of these three factors:

where \({C}_{i}\) represents overall estimated cost of node i, \({c}_{start,i}\) the estimated cost from the origin to the node i, \({d}_{i,j}\) the estimated distance from the node i to its nearest node j, and \({d}_{j,goal}\) the estimated distance from the node j to the node of goal. A* algorithm has a long history in path planning in robots. A common application of the A* algorithm is mobile rovers planning via an occupancy grid map (Fig. 7) using the Euclidean distance (Wang, 2005). There are many variants of A* algorithm, like dynamic A* and dynamic D* (Stentz, 1994), Field D* (Ferguson & Stentz, 2006), Theta* (Daniel et al., 2014), Anytime Repairing A* (ARA*) and Anytime D* (Likhachev et al., 2008), hybrid A* (Montemerlo et al., 2008), and AD* (Ferguson et al., 2008). Other graph search algorithms have a difference with common robotic grid map. For example, the state lattice algorithm (Ziegler & Stiller, 2009) uses one type of grid map with a specific shape (Fig. 7), while the grid in normal robotic map is in a square-grid shape (Fig. 7).

The left figure represents a specific grid map in the State Lattice algorithm (Ziegler & Stiller, 2009), while the right figure represents a normal square-grid (occupancy grid) map in the robot operating system (ROS)

Sampling-based algorithms

Sampling-based algorithms randomly sample a fixed workspace to generate sub-optimal paths. The RRT and the probabilistic roadmap method (PRM) are two algorithms that are commonly utilized in motion planning. The RRT algorithm is more popular and widely used for commercial and industrial purposes. It constructs a tree that attempts to explore the workspace rapidly and uniformly via a random search (LaValle & Kuffner, 1999). The RRT algorithm can consider non-holonomic constraints, such as the maximum turning radius and momentum of the vehicle (Bautista et al., 2015). The example of trajectories generated by RRT is shown in Fig. 8. The PRM algorithm (Kavraki et al., 2002) is normally used in a static scenario. It is divided into two phases: learning phase and query phase. In the learning phase, a collision-free probabilistic roadmap is constructed and stored as a graph. In query phase, a path that connects original and targeted nodes is searched from the probabilistic roadmap. An example of trajectory generated by PRM is shown in Fig. 8

.

Interpolating curve algorithms

Interpolating curve algorithm is defined as a process that constructs or inserts a set of mathematical rules to draw trajectories. The interpolating curve algorithm is based on techniques, e.g., computer aided geometric design (CAGD), to draw a smooth path. Mathematical rules are used for path smoothing and curve generation. Typical path smoothing and curve generation rules include line and circle (Reeds & Shepp, 1990), clothoid curves (Funke et al., 2012), polynomial curves (Xu et al., 2012), Bezier curves (Bautista et al., 2014) and spline curves (Farouki & Sakkalis, 1994). Examples of trajectories are shown in Fig. 9

.

Reaction-based algorithms

Unlike graph-search algorithms that cost longer time to plan high-level or global-level paths, reaction-based algorithms are about making reactions or doing local path planning quickly and intuitively, as the description of algorithms in reactive architecture (Murphy, 2000). Here three reaction-based algorithms that are widely used in engineering and manufacturing are presented, and they are potential field method (PFM), velocity obstacle method (VOM), and DWA.

PFM (Khatib, 1986) is about using vectors to represent behaviors and using vector summation to combine vectors from different behaviors to produce an emergent behavior (Murphy, 2000). Potential field is a differentiable real-valued function \(U\) whose value can be seen as energy, and its gradient can be seen as a force. If potential field function \(U\) is defined artificially, it is called artificial potential field (APF). Its gradient \(\nabla U(x)\), where x denotes a robot configuration (e.g., positions of robots), is a vector which points at a local direction that maximally increases \(U\) (Tobaruela, 2012). Hence, robots in potential field or combined potential field (Fig. 10) will be forced to move along the gradient of potential field to maximize \(U\).

Different types of potential filed. (a–e) denote five primitive potential fields: uniform, perpendicular, attraction, repulsion, and tangential. (f) denotes a potential field combined by attraction (goal) and repulsion (obstacle) (Murphy, 2000)

Shortcomings of PFM include: (1) local minima if potential field converges to a minimum that is not global minimum. (2) oscillation of motion when robots navigate among very close obstacles at high speed. (3) impossibility to go through small openings. These shortcomings can be solved or partially solved by potential field variants (e.g., generalized potential fields method (GPFM) (Krogh, 1984), virtual force field (VFF) (Borenstein & Koren, 1989), vector field histogram (VFH) (Borenstein & Koren, 1991) and harmonic potential field (HPF) (Masoud, 2007)) in real-world engineering and manufacturing.

VOM (Fiorini & Shiller, 1998) relies on current positions and velocities of robots and obstacles to compute a reachable avoidance velocity space (RAV), and then a proper avoidance maneuver (velocity) is selected from RAV to avoid static and moving obstacles (Fiorini & Shiller, 1998). To compute a RAV (Fig. 11): (1) Velocity obstacle (VO) must be obtained. VO is a velocity set or space, and the selection of velocity from VO will lead to collision. (2) A set of reachable velocities (RV) should be obtained. This is achieved by mapping the actuator constraints to acceleration constraints (Fiorini & Shiller, 1998). (3) RAV is obtained by computing the difference between RV and VO.

The principle of VOM. (a)–(c) denote the VO, RV, and RAV. (d) denotes the exhaustive search in the search tree. (e)–(g) denote heuristic search method to select the highest avoidance velocity towards goals, the maximum avoidance velocity, and velocities that ensure desired trajectory structures (Fiorini & Shiller, 1998)

To select a proper avoidance maneuver (Fig. 11), exhaustive global search method and heuristic search method in RAV are suitable for off-line and on-line cases, respectively: (1) A search tree can be obtained by expanding the tree on RAV. A proper avoidance maneuver can be selected from the search tree according to assigned cost on the branch of search tree. Cost is relevant with some objective functions (e.g., distance traveled, motion time and energy). The search tree is expanded off-line, therefore near-optimal trajectories that lead to shortest time or distance can be obtained (Fiorini & Shiller, 1998). (2) The heuristic search costs less time on search process, and it is designed to select specific velocities that can realize special goals (e.g., the highest avoidance velocity towards goals, the maximum avoidance velocity, and velocities that ensure desired trajectory structures).

However, collisions with obstacles still exist when using velocity obstacle method in complex scenarios like dense and dynamic cases. Hence, some optimized velocity obstacle methods, like reciprocal velocity obstacle (RVO) (Berg et al., 2008, 2011; Guy et al., 2009), are introduced to better avoid collisions.

DWA (Fox et al., 1997) is about choosing a proper translational and rotational velocity \(({\varvec{v}},{\varvec{w}})\) that will maximize an objective function within dynamic window. Objective function includes a measure of progress towards a goal location, the forward velocity of the robot, and the distance to the next obstacle on the trajectory. Proper velocity \(({\varvec{v}},{\varvec{w}})\) is selected within the dynamic window (a search space of velocity) which consists of the velocities reachable within a short time interval. This is achieved by: (1) computing a two-dimensional possible velocity search space \({V}_{s}\) that is related to circular trajectories (curvatures) uniquely determined by \(({\varvec{v}},{\varvec{w}})\). (2) computing admissible velocities \({V}_{a}\) that ensures the stop before the robot reaches the closest obstacle on the corresponding curvature. (3) computing dynamic window velocity \({V}_{d}\). All curvatures outside the dynamic window cannot be reached within the next time interval. (4) selecting a proper velocity from resulting search space which consists of resulting velocity \({V}_{r}\) defined by \({V}_{r}={V}_{s}\cap {V}_{a}\cap {V}_{d}\) (Fox et al., 1997). The relationship of these velocity search spaces is shown in Fig. 12 where the resulting search space is the white area in the dynamic window.

The relationship of possible velocity search space \({V}_{s}\), admissible velocities \({V}_{a}\), dynamic window velocity \({V}_{d}\), and resulting velocity \({V}_{r}\) (Stentz, 1994)

Classical ML

Here basic principles of four classical but pervasive ML algorithms for motion planning are presented. These algorithms include three supervised learning algorithms (SVM, LSTM and CNN) and one RL that is the Monte-Carlo tree search (MCTS).

SVM (Evgeniou & Pontil, 1999) is a well-known supervised learning algorithm for classification. The basic principle of SVM is about drawing an optimal separating hyperplane between inputted data by training a maximum margin classifier (Evgeniou & Pontil, 1999). Inputted data is in the form of vector that is mapped into high-dimensional space where classified vectors are obtained by performing trained classifier. SVM is used in 2-class classification that cannot suit real-world task, but its variant multiclass SVM (MSVM) (Weston & Watkins, 1998) works.

LSTM ((Hochreiter & Schmidhuber, 1997; Inoue et al., 2019)) is a variant of recurrent neural network (RNN). LSTM can remember inputted data (vectors) in its cells. Because of limited capacity of cell in storage, a part of data will be dropped when cells are updated with past and new data, and then a part of data will be remembered and transferred to next time step. These functions in cells are achieved by neural network as the description in Fig. 13. In robotic motion planning, robots’ features and labels in each time step are fed into neural networks in cells for training, therefore decisions for motion planning are made by performing trained network.

Cells of LSTM that are implemented using neural network (N. Arbel. How LSTM networks solve the problem of vanishing gradients. Web. Dec 21, 2018. https://medium.com/datadriveninvestor/how-do-lstm-networks-solve-the-problem-of-vanishing-gradients). \({c}_{t}\) denotes cell’s state in time step t. \({h}_{t}\) denotes the output that will be transferred to the next state as its input, therefore format of input is the vector \({[h}_{t-1},{x}_{t}]\). Cell states are controlled and updated by three gates (forget gate, input gate and output gate) that are implemented using neural networks with weights \({W}_{f}\), \({W}_{c}+{W}_{i}\), and \({W}_{o}\) respectively

MCTS is a classical RL algorithm, and it is the combination of Monte-carlo method (Kalos & Whitlock, 2008) and the search tree (Coulom, 2006). MCTS is widely used in games (e.g., Go and chess) for motion prediction ((Paxton et al., 2017; Silver et al., 2016)). Mechanism of MCTS is composed by four processes that include selection, expansion, simulation, and backpropagation as Fig. 14. In robotic motion planning, node of MCTS represents possible state of robot, and stores state value of robot in each step. First, selection is made to choose some possible nodes in the tree based on known state value. Second, tree expands to unknown state by tree policy (e.g., random search). Third, simulation of expansion is made on new-expanded node by default policy (e.g., random search) until terminal state of robot and reward R is obtained. Finally, backpropagation is made from new-expanded node to root node, and state values in these nodes are updated according to received reward. These four processes are repeated until the convergence of state values in the tree. The robot can therefore plan its motion according to state values in the tree. MCTS fits discrete-action tasks (e.g., AlphaGo (Silver et al., 2016)), and it also fits time-sequential tasks like autonomous driving.

CNN (Lecun et al., 1998) has become a research focus of ML after LeNet5 (Lecun et al., 1998) was introduced and successfully applied into handwritten digits recognition. CNN is one of the essential types of neural network because it is good at extracting high-level features from high-dimensional high-resolution images by convolutional layers. CNN makes the robot avoid obstacles and plans motions of robot according to human experience by models trained in forward propagation and back propagation processes, especially the back propagation. In the back propagation, a model with a weight matrix/vector θ is updated to record features of obstacles. Note that \(\theta ={\left\{{w}_{i},{b}_{i}\right\}}_{i}^{L}\) where w and b represent weight and bias, and i represents the serial number of w-b pairs. L represents the length of weight.

Training steps of CNN are shown as Fig. 15. Images of objects (obstacles) are used as inputs of CNN. Outputs are probability distributions obtained by softmax function (Bridle, 1990). Loss value \({Loss}_{CE}\) is cross-entropy (CE) and that is obtained by

where p denotes probability distributions of output (observed real value), q represents probability distributions of expectation \(\left( {p,q \epsilon \left( {0,1} \right)} \right)\), and i represents the serial number of each batch of images in training. The loss function measures the difference (distance) of observed real value p and expected value q. Mean-square error (MSE) is an alternative of CE and MSE is defined by \(Loss_{MSE} = \mathop \sum \limits_{i} \left( {p_{i} - q_{i} } \right)^{2}\) where \(p_{i}\) represents observed values while \(q_{i}\) represents predicted values or expectation. The weight is updated in optimizer by minimizing the loss value using gradient descent approach (Zhang, 2019) therefore new weight \(w_{i}^{new}\) is obtained by

where w represents the weight, η represents a learning rate (\(\eta \epsilon \left( {0,1} \right)\)) and i represents the serial number of each batch of images in training. Improved variants of CNN are also widely used in motion planning, e.g., residue networks (Gao et al., 2017; He et al., 2016).

Training steps of CNN. The trajectory is planned by human in data collection in which steering angles of robots are recorded as labels of data. Robots learn behavior strategies in training and move along the planned trajectory in the test. The softmax function maps values of feature to probabilities \(p\in (\mathrm{0,1})\). The optimizer represents gradient descent approach, e.g., stochastic gradient descent (SGD) (Zhang, 2019)

Optimal value RL

Here basic concepts of RL are recalled firstly, and then the principles of Q learning, nature DQN, double DQN and dueling DQN are given.

Classical ML algorithm like CNN is competent only in static obstacle avoidance by one-step prediction, therefore it cannot cope with time-sequential obstacle avoidance. RL algorithms, e.g., optimal value RL, fit time-sequential tasks. Typical examples of these algorithms include Q learning, nature DQN, double DQN and dueling DQN. Motion planning is realized by attaching destination and safe paths with big reward (numerical value), while obstacles are attached with penalties (negative reward). Optimal path is found according to total rewards from initial place to destination. To better understand optimal value RL, it is necessary to recall several fundamental concepts: Markov chain, Markov decision process (MDP), model-based dynamic programming, model-free RL, Monte-Carlo method (MC), temporal difference method (TD), and State-action-reward-state-action (SARSA). MDP is based on Markov chain (Chan et al., 2012), and it can be divided into two categories: model-based dynamic programming and model-free RL. Mode-free RL can be divided into MC and TD that includes SARSA and Q learning algorithms. Relationship of these concepts is shown in Fig. 16.

Markov chain Variable set \({\varvec{X}}=\left\{{X}_{n}n>0\right\}\) is called Markov chain (Chan et al., 2012) if X meets

This means the occurrence of event \({X}_{t+1}\) depends only on event \({X}_{t}\) and has no correlation to any earlier events.

Markov decision process MDP (Chan et al., 2012) is a sequential decision process based on Markov Chain. This means the state and action of the next step depend only on the state and action of the current step. MDP is described as a tuple \(<S,A,P,R>\). S represents the state and here it refers to the state of robot and obstacles. A represents an action taken by robot. State S transits into another state under a state-transition probability P and a reward R from the environment is obtained. Principle of MDP is shown in Fig. 16. First, the robot in state s interacts with the environment and generate an action based on policy \(\pi \left(\mathrm{s}\right)s\to a\). Robot then obtains the reward r from the environment, and state transits into the next state s’. The reach of next state s’ marks the end of one loop and the start of the next loop.

Model-free RL and model-based dynamic programming Problems in MDP can be solved using model-based dynamic programming and model-free RL methods. The model-based dynamic programming is used in a known environment, while the model-free RL is utilized to solve problems in an unknown environment.

Temporal difference and Monte Carlo methods The model-free RL includes MC and TD. A sequence of actions is called an episode. Given an episode < S1, A1, R2, S2, A2, R3, …, St, At, Rt+1, …, ST > , the state value V(s) in the time step t is defined as the expectation of accumulative rewards \({G}_{t}\) by

where \(\gamma\) represent a discount factor (\(\gamma \epsilon \left[ {0,1} \right]\)). MC uses \(G_{t} - V\left( s \right)\) to update its state value VMC(s) by

where “\(\leftarrow\)” represents the update process in which new value will replace previous value. \(\alpha\) is a discount factor. TD uses \(R_{t + 1} + \gamma V\left( {s_{t + 1} } \right) - V\left( s \right)\) to update its state value \({ }V_{TD} \left( s \right)\) by

where \(\alpha\) is a learning rate, \(R_{t + 1} + \gamma V\left( {s_{t + 1} } \right)\) is the TD target in which the estimated state value \(V\left( {s_{t + 1} } \right)\) is obtained by bootstrapping method (Tsitsiklis, 2003). This means MC updates its state value after the termination of an episode, while TD update its state value in every steps. TD method is therefore efficient than MC in state value update.

Q learning

TD includes SARSA (Rummery & Niranjan, 1994) and Q learning ((Smart & Kaelbling, 2002; Sutton & Barto, 1998)). Given an episode < S1, A1, R2, S2, A2, R3, …, St, At, Rt+1, …, ST > , SARSA and Q learning use the ε-greedy method (Santos Mignon., 2017) to select an action \({A}_{t}\) at time step t. There are two differences between SARSA and Q learning: (1) SARSA uses ε-greedy again to select an estimated action value \(Q\left({S}_{t+1},{A}_{t+1}\right)\) at time step t + 1 to update its action value by

while Q learning directly uses maximum estimated action value \({\text{max}}_{Q} Q\left( {S_{t + 1} ,A_{t + 1} } \right)\) at time step t + 1 to update its action value by

(2) SARSA adopts selected action \(A_{t + 1}\) directly to update its next action value, but Q learning algorithm use ε-greedy to select a new action to update its next action value.

SARSA uses ε-greedy method to sample all potential action values of next step and selects a “safe” action eventually, while Q learning pays attention to the maximum estimated action value of the next step and selects optimal actions eventually. Steps of SARSA is shown in Algorithm 1 (Sutton & Barto, 1998), while Q learning algorithm as Algorithm 2 (Sutton & Barto, 1998) and Fig. 17. Implementations of robotic motion planning by Q learning are as (Panov et al., 2018; Qureshi et al., 2018; Smart & Kaelbling, 2002).

Nature deep Q-learning network

DQN (Mnih et al., 2013) is a combination of Q leaning and deep neural network (e.g., CNN). DQN uses CNN to approximate Q values by its weight θ. Hence, Q table in Q learning changes to Q value network that can be converged in a faster speed in complex motion planning. DQN became a research focus when it was invented by Google DeepMind (Mnih et al., 2013, 2015), and performance of DQN approximates or even surpasses the performance of human being in Atari games (e.g., Pac-man and Enduro in Fig. 18) and real-world motion planning tasks (Bae et al., 2019; Isele et al., 2017). DQN utilizes CNN to approximate Q values (Fig. 19) by

In contrast with the Q learning, DQN features three components: CNN, replay buffer (Schaul et al., 2016) and targeted network. CNN extracts feature from images that are inputs. Outputs can be Q value of current state Q(s,a) and Q value of next state Q(s’,a’), therefore experiences < s,a,r,s’ > are obtained and temporarily stored in replay buffer. It is followed by training DQN using experiences in the replay buffer. In this process, a targeted network \(\theta^{\prime}\) is leveraged to minimize the loss value by

Loss value measures the distance between expected value and real value. In DQN, expected value is (r + γmaxQ(s’,a’;θ’)) that is similar to labels in supervised learning, while Q(s,a;θ) is the observed real value. weights of targeted network and Q value network share a same weight θ. The difference is that weight of Q value network θ is updated in each step, while weight of targeted network θ’ is updated in a long period of time. Hence, θ is updated frequently while θ’ is more stable. It is necessary to keep targeted network stable, otherwise Q value network will be hard to converge. Detailed steps of DQN are shown as Algorithm 3 (Mnih et al., 2013) and Fig. 20.

Double deep Q-learning network

Noise in DQN leads to bias and false selection of next action \(a^{\prime}\) follows, therefore leading to over-estimation of next action value \(Q\left( {s^{\prime},a^{\prime};\theta ^{\prime}} \right)\). To reduce the over-estimation caused by noise, researchers invented the double DQN (Hasselt et al., 2016) in which another independent targeted network with weight \(\theta^{ - }\) is introduced to evaluate the selected action \(a^{\prime}\). Hence, equation of targeted network therefore changes from \(y^{DQN} = r + \gamma \max Q\left( {s^{\prime},a^{\prime};\theta ^{\prime}} \right)\) to

Steps of double DQN are the same with DQN. Examples of application are (Chao et al., 2020; Lei et al., 2018; Sui et al., 2018) in which double DQN is used in games and physical robots based on ROS.

Dueling deep Q-learning network

The state value \(V^{\pi } \left( s \right)\) measures “how good the robot is” in the state s where π denotes policy \(\pi :s \to a\), while the action value \(Q^{\pi } \left( {s,a} \right)\) denotes “how good the robot is” after robot takes action a in state s using policy π. Advantage value (A value) denotes the difference of \(Q^{\pi } \left( {s,a} \right)\) and \(V^{\pi } \left( s \right)\) by

therefore A value measures “how good the action a is” in state s if robot takes the action a. In neural network case (Fig. 21), weights α, β, θ are added, therefore

where θ is the weight of neural network and it is the shared weight of Q, V and A values. Here α denotes the weight of A value, and β the weight of V value. \(V\left( {s;\theta ,\beta } \right)\) is a scalar, and \(A\left( {s,a;\theta ,\alpha } \right)\) is a vector. There are however too many V-A value pairs if Q value is simply divided into two components without constraints, and only one V-A pairs are qualified. Thus, it is necessary to constrain the V value or A value to obtain a fixed V-A pair. According to relationship of \(Q^{\pi } \left( {s,a} \right)\) and \(V^{\pi } \left( s \right)\) where \(V^{\pi } \left( s \right) = {\mathbb{E}}_{a\sim \pi \left( s \right)} \left[ {Q^{\pi } \left( {s,a} \right)} \right]\), the expectation of A value is

Equation 15 can be used as a rule to constrain A value for obtaining a stable V-A pair. Expectation of A value is obtained by using \(A\left( {s_{t} ,a_{t} } \right)\) to subtract mean A value that is obtained from all possible actions, therefore

where \({\mathcal{A}}\) represents action space in time step t, \(\left| {\mathcal{A}} \right|\) the number of actions, and \(a_{t}^{ - }\) one of possible actions in \({\mathcal{A}}\) at time step t. Expectation of A value keeps zero for \(t \epsilon \left[ {0,T} \right]\), although the fluctuation of \(A\left( {s_{t} ,a_{t} } \right)\) in different action choices. Researchers use the expectation of A value to replace the current A value by

Thus, a stable V-A pair is obtained although original semantic definition of A value (Eq. 13) is changed (Wang et al., 2015). In other words: (1) advantage constraint \(\left\{ {A\left( {s,a;\theta ,\alpha } \right) - \frac{1}{{\left| {\mathcal{A}} \right|}}\mathop \sum \limits_{{a_{t}^{ - } \epsilon {\mathcal{A}}}} A\left( {s_{t} ,a_{t}^{ - } ;\theta ,\alpha } \right)} \right\} = 0\) is used to constrain the update of A value network \(\alpha\); (2) Q value network \(\theta\) is therefore obtained by \(Q\left( {s,a;\theta ,\alpha ,\beta } \right) = V\left( {s;\theta ,\beta } \right)\). Q value network \(\theta\) is updated according to V value that is more accurate and it is easy to obtain via accumulative rewards defined by Eq. 5. Hence, a better estimation of action value is obtained by performing a better Q value network \(\theta\).

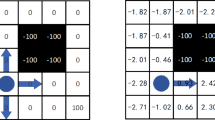

DQN obtained action value \(Q\left( {s,a} \right)\) directly by using network to approximate action value. This process introduces over-estimation of action value. Dueling DQN obtains better action value \(Q\left( {s,a} \right)\) by constraining advantage value \(A\left( {s,a} \right)\). Finally, three weights (\(\theta ,\alpha ,\beta\)) are obtained after training, and Q value network \(\theta\) is with less bias but A value is better than action value to represent “how good the action is” (Fig. 22).

Q(s,a) and A(s,a) saliency maps (red-tinted overlay) on the Atari game (Enduro). Q(s,a) learns to pay attention to the road, but pay less attention to obstacles in the front. A(s,a) learns to pay much attention to dynamic obstacles in the front (Wang et al., 2015)

Further optimizations are distributional DQN (Bellemare et al., 2017), noise network (Fortunato et al., 2017), dueling double DQNFootnote 2 and rainbow model (Hessel et al., 2017). Distributional DQN is like the dueling DQN, as noise is reduced by optimizing the architecture of DQN. Noise network is about improving the ability in exploration by a more exquisite and smooth approach. Dueling double DQN and rainbow model are hybrid algorithms. Rainbow model fuses several suitable components: double networks, replay buffer, dueling network, multi-step learning, distributional network, and noise network.

Policy gradient RL

Here the principles of policy gradient method and actor-critic algorithm are given firstly. It is followed by recalling the principles of their optimized variants: (1) A3C and A2C; (2) DPG and DDPG; (3) TROP and PPO.

Optimal value RL uses neural network to approximate optimal values to indirectly select actions. This process is simplified as \(a \leftarrow argmax_{a} R\left( {s,a} \right) + Q\left( {s,a;\theta { }} \right)\). Noise leads to over-estimation of \(Q\left( {s,a;\theta { }} \right)\), therefore the selected actions are suboptimal, and network \(\theta\) is hard to converge. Policy gradient algorithm uses neural network \(\theta\) as policy \(\pi_{\theta } :s \to a\) to directly select actions to avoid this problem. Brief steps of policy gradient algorithm are shown in Fig. 23.

Training and test steps of policy gradient algorithms. In the training, time-sequential actions are generated by the behavior policy. Note that policy is divided to behavior policy and target policy. Behavior policy is about selecting actions for training and behavior policy will not be updated, while target policy is also used to select actions but it will be updated in training. Policy refers to target policy normally. Robots learn trajectories via target policy (neural network as approximator) and trained policy is obtained. In the test, optimal time-sequential actions are generated directly by trained policy \({\pi }_{\theta }:s\to a\) until destination is reached

Policy gradient method

Policy is a probability distribution P{a|s,θ} = πθ(a|s) = π(a|s;θ) that is used to select action a in state s, where weight θ is the parameter matrix that is used as an approximation of policy π(a|s). Policy gradient method (PG) (Sutton et al., 1999) seeks an optimal policy and uses it to find optimal actions. how to find this optimal policy? Given a episode τ = (s1,a1,…,sT,aT), the probability to output actions in τ is \(\pi_{\theta } \left( \tau \right) = p\left( {s_{1} } \right)\mathop \prod \limits_{t = 2}^{T} \pi_{\theta } (a_{t} |s_{t} )p(s_{t} |s_{t - 1} ,a_{t - 1} )\). The aim of the PG is to find optimal parameter \(\theta^{*} = \arg max_{\theta } {\mathbb{E}}_{{\tau \sim \pi_{\theta } \left( \tau \right)}} \left[ {R\left( \tau \right)} \right]\) where episode reward \(R\left( \tau \right) = \mathop \sum \limits_{t = 1}^{T} r\left( {s_{t} ,a_{t} } \right)\)) is the accumulative rewards in episode \(\tau\). Objective of PG is defined as the expectation of rewards in episode \(\tau\) by

To find higher expectation of rewards, gradient operation is used on objective to find the increment of network that may lead to a better policy. Increment of network is the gradient value of objective, and that is

An example of PG is Monte-carlo reinforce (Williams, 1992). Data \(\tau\) for training are generated from simulation by stochastic policy. Previous objective and its gradient (Eq. 18–19) are replaced by

where N is the number of episodes, T the length of trajectory. A target policy \(\pi_{\theta }\) is used to generate episodes for training. For example, Gaussian distribution function is used as behavior policy to select actions by \(a\sim {\mathcal{N}}\left( {\mu \left( s \right),\sigma^{2} } \right)\). Network \(f\left( {s;\theta } \right)\) is then used to approximate expectation of Gaussian distribution by \(\mu \left( s \right) = f\left( {s;\theta } \right)\). It means \(a\sim {\mathcal{N}}\left( {mean = f\left[ {s;\theta = \left\{ {w_{i} ,b_{i} } \right\}_{i}^{L} )} \right],stdev = \sigma^{2} } \right)\) and \(\mu \left( {s;\theta } \right) = \left[ {mean,stdev} \right]\) where w and b represent weight and bias of network, L the number of w-b pairs. Its objective is defined as \(J\left( \theta \right) = f\left( {s_{t} ;w} \right) - a_{{t{\Sigma }}}^{2}\), therefore the objective gradient is

where \(\frac{df}{d\theta }\) is obtained by backward-propagation. According to Eq. 21–22, its objective gradient is

Once objective gradient is obtained, network is updated by gradient ascent method. That is

Actor-critic algorithm

The update of policy in PG is based on expectation of accumulative rewards in episode \(\tau \) \({\mathbb{E}}_{\tau \sim {\pi }_{\theta }\left(\tau \right)}\left[R\left(\tau \right)\right]\). This leads to high variance that causes low speed in network convergence, but convergence stability is improved. Actor-critic algorithm (AC) (Cormen et al., 2009; Kim et al., 2019; Konda & Tsitsiklis, 2001) reduces the variance by one-step reward in TD-error e for network update. TD-error is defined by

To enhance convergence speed, AC uses actor-critic architecture that includes actor network (policy network) and critic network. Critic network is used in TD-error therefore TD-error changes into

Objective of critic network is defined by

Objective gradient is therefore obtained by minimizing the mean-square error

Critic network is updated by gradient ascent method (Zhang, 2019). That is

where β represents learning rate. Objective of policy network is defined by

Hence, objective gradient of policy network is obtained by

and policy network is updated by

where α is a learning rate of actor network. Detailed steps of the AC are as Fig. 24: (1) action \(a_{t}\) at time step t is selected by policy network \(\theta \); (2) selected action is executed and reward is obtained. State transits into the next state \({s}_{t}\); (3) state value is obtained by critic network and TD error is obtained; (4) policy network is updated by minimizing objective of critic network; (5) critic network is updated according to objective gradient of critic network. This process repeats until the convergence of policy and critic networks.

A3C and A2C

A3C In contrast to AC, the A3C (Everett et al., 2018) has three features (1) multi-thread computing; (2) multi-step rewards; (3) policy entropy. Multi-thread computing means multiple interactions with the environment to collect data and update networks. Multi-step rewards are used in critic network, therefore the TD-error e of A3C is obtained by

Hence, the speed of convergence is improved. Here γ is a discount factor, and n is the number of steps. Data collection by policy \(\pi \left( {s_{t} ;\theta } \right)\) will cause over-concentration, because initial policy is with poor performance therefore actions are selected from small area of workspace. This causes poor quality of the input, therefore convergence speed of network is poor. Policy entropy increases the ability of policy in action exploitation to reduce over-concentration. Objective gradient of A3C therefore changes to

where β is a discount factor and \(H(\pi \left( {s_{t} ;\theta } \right)\) is the policy entropy.

A2C A2C (Everett et al., 2018) is the alternative of A3C algorithm. Each thread in A3C algorithm can be utilized to collect data, train critic and policy networks, and send updated weights to global model. Each thread in A2C however can only be used to collect data. Weights in A2C are updated synchronously compared with the asynchronous update of A3C, and experiments demonstrate that synchronous update of weights is better than asynchronous way in weights update ((Babaeizadeh et al., 2016; Mnih et al., 2016)). Their mechanisms in weight update are shown in Fig. 25.

DPG and DDPG

Here some prerequisites are recalled on-policy algorithm, off-policy algorithm, important sampling ratio, stochastic policy gradient algorithm, and then the principles of DPG and DDPG are given.

Prerequisites In data generation and training processes, if behavior policy and target policy of an algorithm are the same policy \({\pi }_{\theta }\), this algorithm is called on-policy algorithm. On-policy algorithm however may lead to low-quality data in data generation and a slow speed in network convergence. This problem can be solved by using one policy (behavior policy) βθ for data generation and another policy (target policy) \({\pi }_{\theta }\) for learning and making decision. Algorithms using different policies on data generation and learning are therefore called off-policy algorithms. Although policies in off-policy algorithm are different, their relationship can still be measured by transition probability ρβ(s) that is the importance-sampling ratio and defined by

Importance-sampling ratio measures the similarity of two policies. These policies must be with large similarity in the definition of important sampling. Particularly, behavior policy βθ is the same as policy \(\pi_{\theta }\) in on-policy algorithms. This means \(\pi_{\theta } = \beta_{\theta }\) and ρβ(s) = ρπ(s) = 1.

In on-policy policy gradient algorithm (e.g., PG), its objective is defined as

where \(\rho^{\pi }\) is the distribution of state transition. The objective gradient of PG \(\nabla_{\theta } J\left( \theta \right) = {\mathbb{E}}_{{\tau \sim \pi_{\theta } \left( \tau \right)}} \left[ {\nabla_{\theta } \log \pi_{\theta } \left( \tau \right)R\left( \tau \right)} \right]\) includes a vector \(C = \nabla_{\theta } \log \pi_{\theta } \left( \tau \right)\) and a scalar \(R = R\left( \tau \right)\). Vector C is the trend of policy update, while scalar R is the range of this trend. Hence, the scalar R acts as a critic that decides how policy is updated. Action value \({Q}^{\pi }\left(s,a\right)\) is defined as the expectation of discounted rewards by

\(Q^{\pi } \left( {s,a} \right)\) is an alternative of scalar R, and it is better than R as the critic. Hence, objective gradient of PG changes to

and policy is updated using objective gradient with action value \(Q^{\pi } \left( {s,a} \right)\). Hence, algorithms are called stochastic policy gradient algorithm if action value \(Q^{\pi } \left( {s,a} \right)\) is used as critic.

DPG DPG are algorithms in which a deterministic policy \(\mu_{\theta } \left( s \right)\) is trained to select actions, instead of policy \(\pi_{\theta } (a|s)\) in AC. A policy is deterministic policy \(\mu_{\theta } \left( s \right)\) if it directly maps the state to the action \(\leftarrow \mu_{\theta } \left( s \right)\), while stochastic policy \(\pi_{\theta } \left( {a{|}s} \right)\) maps state and action to a probability \(P\left( {a{|}s} \right)\) (Silver et al., 2014). The update of deterministic policy is defined as

If network θ is used as approximator of deterministic policy, update of network changes to

There are small changes in state distribution \(\rho^{\mu }\) of deterministic policy during the update of network \(\theta\), but this change will not impact the update of network. Hence, network of deterministic policy is updated by

because

Once \(Q^{\mu } \left( {s,a} \right)\) is obtained, \(\theta\) can be updated after obtaining objective gradient.

How to find \(Q^{\mu } \left( {s,a} \right)\)? Note that discounted reward \(Q^{\mu } \left( {s,a} \right)\) is a critic in stochastic policy gradient mentioned before. If network w is used as approximator, \(Q^{\mu } \left( {s,a} \right)\) is obtained by

stochastic policy gradient algorithm includes two networks, in which w is the critic that approximates Q value and \(\theta\) is used as actor to select actions in test (actions are selected by behavior policy β in training). Stochastic policy gradient in this case is called off-policy deterministic actor-critic (OPDAC) or OPDAC-Q. Objective gradient of OPDAC therefore changes from the Eq. 42 to

where β represents the behavior policy. Two networks are updated by

However, no constrains is used on network w in the approximation of Q value and this will lead to a large bias.

How to obtain a \(Q^{w} \left( {s,a} \right)\) without bias? Compatible function approximation (CFA) can eliminate the bias by adding two constraints on w (proof is given in Silver et al. (2014)): (1) \(\left. {\nabla_{a} Q^{w} \left( {s,a} \right)} \right|_{{a = \mu_{\theta } \left( s \right)}} = \nabla_{\theta } \mu_{\theta } \left( s \right)^{{ \intercal }} w\); (2) \(MSE\left( {\theta ,w} \right) = {\mathbb{E}}\left[ { \epsilon \left( {s;\theta ,w} \right)} \right]\left[ { \epsilon \left( {s;\theta ,w} \right)^{{ \intercal }} \epsilon \left( {s;\theta ,w} \right)} \right] \to 0\) where \(\in \left( {s;\theta ,w} \right) = \nabla_{a} Q^{w} \left( {s,a} \right)|_{{a = \mu_{\theta } \left( s \right)}} - \nabla_{a} Q^{\mu } \left( {s,a} \right)|_{{a = \mu_{\theta } \left( s \right)}}\). In other words, \(Q^{w} \left( {s,a} \right)\) should meet

where state value \({V}^{v}(s)\) may be any differentiable baseline function (Silver et al., 2014). Here v and \(\varnothing \) are feature and parameter of state value (\({V}^{v}\left(s\right)={v}^{\intercal }\varnothing (s)\)). Parameter \(\varnothing \) is also the feature of advantage function (\({A}^{w}\left(s,a\right)={\varnothing (s,a)}^{\intercal }w\)), and \(\varnothing \left(s,a\right)\) is defined as \(\mathrm{\varnothing }(\mathrm{s},\mathrm{a})\stackrel{\scriptscriptstyle\mathrm{def}}{=}{\nabla }_{\theta }{\mu }_{\theta }(s)(a-{\mu }_{\theta }\left(s\right))\). Hence, a low-bias Qw(s,a) is obtained using OPDAC-Q and CFA. This new algorithm with less bias is called Compatible OPDAC-Q (COPDAC-Q) (Silver et al., 2014), in which weights are updated as Eq. 49–51

where \(\delta_{t}\) is the same as the Eq. 45. Here \(a_{v}\), \(\alpha_{w}\) and \(\alpha_{\theta }\) are learning rates. Note that linear function approximation method (Silver et al., 2014) is used to obtain advantage function \(A^{w} \left( {s,a} \right)\) that is used to replace the value function \(Q^{w} \left( {s,a} \right)\) because \(A^{w} \left( {s,a} \right)\) is efficient than \(Q^{w} \left( {s,a} \right)\) in weight update. Linear function approximation however may lead to divergence of \(Q^{w} \left( {s,a} \right)\) in critic δ. Critic δ can be replaced by the gradient Q-learning critic (Sutton et al., 2009) to reduce the divergence. Algorithm that combines COPDAC-Q and gradient Q-learning critic is called COPDAC Gradient Q-learning (COPDAC-GQ). Details of gradient Q-learning critic and COPDAC-GQ algorithm can be found in ((Silver et al., 2014; Sutton et al., 2009)).

By analytical illustration above, 2 examples (COPDAC-Q and COPDAC-GQ) of DPG algorithm are obtained. In short, key points of DPG are to: (1) find a no-biased \(Q^{w} \left( {s,a} \right)\) as critic; (2) train a deterministic policy \(\mu_{\theta } \left( s \right)\) to select actions. networks of DPG are updated as AC via the policy ascent approach. Brief steps of DPG are shown in Fig. 26.

DDPG (Lillicrap et al., 2019) is the combination of replay buffer, deterministic policy μ(s) and actor-critic architecture. \(\theta^{Q}\) is used as critic network to approximate action value \(Q\left( {s_{i} ,a_{i} ;\theta^{Q} } \right)\). \(\theta^{\mu }\) is used as policy network to approximate deterministic policy \(\mu \left( {s;\theta^{\mu } } \right)\). TD target y of DDPG is defined by

where \(\theta^{{Q^{\prime}}}\) and \(\theta^{{\mu^{\prime}}}\) are copies of \(\theta^{Q}\) and \(\theta^{\mu }\) as target networks that are updated with low frequency. The objective of critic network is defined by

Critic network \(\theta^{Q}\) is updated by minimizing the loss value (MSE loss)

where N is the number of tuples < s,a,r,s’ > sampled from replay buffer. Target function of policy network is defined by

and objective gradient is obtained by

Hence, policy network \(\theta^{\mu }\) is updated according to gradient ascent method by

where α is a learning rate. New target networks

where τ is a learning rate, are obtained by “soft” update method that improves the stability of network convergence. Detailed steps of DDPG are shown in Algorithm 4 (Lillicrap et al., 2019) and Fig. 27. Examples can be found in ((Jorgensen & Tamar, 2019; Munos et al., 2016)) in which DDPG is used in robotic arms.

Steps of DDPG. DDPG combines the replay buffer, actor-critic architecture, and deterministic policy. First, action is selected by policy network and reward is obtained. State transits to next state. Second, experience tuple is saved in replay buffer. Third, experiences are sampled from replay buffer for training. Fourth, critic network is updated. Finally, policy network is updated

TRPO and PPO

PPO (Long et al., 2018; Schulman et al., 2017b) is the optimized version of TRPO (Schulman et al., 2017a). Hence, here the principle of TRPO is given before recalling that of PPO.

TRPO Previous policy gradient algorithms update their policies by \(\theta \leftarrow \theta +{\nabla }_{\theta }J(\theta )\). However, new policy is improved unstably with fluctuation. The goal of TRPO is to improve its policy monotonously, therefore stability of convergence is improved by finding a new policy with the objective that is defined by

where \(L_{{\theta_{old} }} \left( \theta \right)\) is the approximation of new policy’s expectation, \(D_{KL}^{max} \left( {\theta_{old} ,\theta } \right)\) the KL divergence between old policy \(\theta_{old}\) and new policy \(\theta\), and \(\delta\) a trust region constraint of KL divergence. The objective gradient \(\nabla_{\theta } J\left( \theta \right)\) is obtained by maximizing the objective \(J\left( \theta \right)\).

\(\eta \left( \theta \right)\) and \(\eta \left( {\theta_{old} } \right)\) denote expectations of new and old policies, respectively. Their relationship is defined by \(\eta \left( \theta \right) = \eta \left( {\theta_{old} } \right) + {\mathbb{E}}_{{s_{0} ,a_{0} ,s_{1} ,a_{1} \ldots }} \left[ {\mathop \sum \limits_{t = 0}^{\infty } \gamma^{t} A_{{\theta_{old} }} \left( {s_{t} ,a_{t} } \right)} \right]\) where \(\gamma\) is a discount factor, and \(A_{{\theta_{old} }} \left( {s_{t} ,a_{t} } \right)\) is the advantage value that is defined by \(A_{\theta } \left( {s,a} \right) = Q_{\theta } \left( {s,a} \right) - V_{\theta } \left( s \right)\). Thus, \(\eta \left( \theta \right) = \eta \left( {\theta_{old} } \right) + \mathop \sum \limits_{s} \rho_{\theta } \left( s \right)\mathop \sum \limits_{a} \theta (a|s)A_{{\theta_{old} }} \left( {s,a} \right)\) where \(\rho_{\theta } \left( s \right)\) is the probability distribution of new policy, but \(\rho_{\theta } \left( s \right)\) is unknown therefore it is impossible to obtain new policy \(\eta \left( \theta \right)\). Approximation of new policy’s expectation \(L_{{\theta_{old} }} \left( \theta \right)\) is defined by

where \(\rho_{{\theta_{old} }} \left( s \right)\) is known. The relationship of \(L_{{\theta_{old} }} \left( \theta \right)\) and \(\eta \left( \theta \right)\) (Kakade & Langford, 2002) is proved to be

where penalty coefficient \(C = \frac{2 \epsilon \gamma }{{\left( {1 - \gamma } \right)^{2} }}\), \(\gamma \epsilon \left[ {0,1} \right]\) and \(\in\) the maximum advantage. Hence, it is possible to obtain \(\eta \left( \theta \right)\) by \(maximize_{\theta } \left[ {L_{{\theta_{old} }} \left( \theta \right) - C \cdot D_{KL}^{max} \left( {\theta_{old} ,\theta } \right)} \right]\) or

However, penalty coefficient \(C\) (constrain of KL divergence) will lead to small step size in policy update. Hence, a trust region constraint \(\delta\) is used to constrain KL divergence by

therefore step size in policy update is enlarged robustly. New improved policy is obtained in trust region by maximizing objective \(L_{{\theta_{old} }} \left( \theta \right)\), s.t. \(D_{KL}^{max} \left( {\theta_{old} ,\theta } \right) \le \delta\). This objective can be simplified further (Schulman et al., 2017a) and new policy \(\theta \) is obtained by

PPO The objective of TRPO is \(maximize_{\theta } {\mathbb{E}}\left[ {\frac{{\theta \left( {a{|}s} \right)}}{{\theta_{old} (a|s)}}A_{{\theta_{old} }} \left( {s,a} \right)} \right], s.t. D_{KL}^{max} \left( {\theta_{old} ,\theta } \right) \le \delta\), in which a fixed trust region constraint \(\delta \) is used to constrain KL divergence instead of penalty coefficient\(C\). Fixed trust region constraint \(\delta \) leads to a reasonable step size in policy update therefore stability in convergence is improved and convergence speed is acceptable. However, objective of TRPO is obtained in implementation by conjugate gradient method (Schulman et al., 2017b) that is computationally expensive.

PPO optimizes objective \(maximize_{\theta } {\mathbb{E}}\left[ {\frac{{\theta \left( {a{|}s} \right)}}{{\theta_{old} (a|s)}}A_{{\theta_{old} }} \left( {s,a} \right) - C \cdot D_{KL}^{max} \left( {\theta_{old} ,\theta } \right)} \right]\) from two aspects: (1) probability ratio \(r\left( \theta \right) = \frac{{\theta \left( {a{|}s} \right)}}{{\theta_{old} \left( {a{|}s} \right)}}\) in objective is constrained in interval \(\left[ {1 - \epsilon ,1 + \epsilon } \right]\) by introducing “surrogate” objective

where \(\in\) is a hyperparameter, to penalize changes of policy that move \(r\left( \theta \right)\) away from 1 (Schulman et al., 2017b); (2) penalty coefficient \(C\) is replaced by adaptive penalty coefficient \(\beta\) that increases or decreases according to the expectation of KL divergence in new update. To be exact,

where \(d = {\mathbb{E}}[D_{KL}^{max} \left( {\theta_{old} ,\theta } \right)]\) and \(d_{targ}\) denotes target value of KL divergence in each policy update, therefore KL-penalized objective is obtained by

In the implementation with neural network, loss function is required to combine the policy surrogate and value function error (Schulman et al., 2017b), and entropy are also used in objective to encourage exploration. Hence, combined surrogate objective is obtained by

where \(c_{1}\), \(c_{2}\), S and \(L^{VF} \left( \theta \right)\) denote two coefficients, entropy bonus and square-error loss respectively. Objectives of PPO (\(L^{CLIP + VF + S} \left( \theta \right)\) and \(L^{KLPEN} \left( \theta \right)\)) is optimized by SGD that costs less computing resource than conjugate gradient method. PPO is implemented with actor-critic architecture, therefore it converges faster than TRPO.

Analytical comparisons

To provide a clear understanding about advantages and disadvantages of different motion planning algorithms, we divide these algorithms into four groups: traditional algorithms, classical ML algorithms, optimal value RL and policy gradient RL. Comparisons are made according to principles of the algorithm mentioned in section II, III, IV and V. First, direct comparisons of the algorithm in each group are made to provide a clear understanding about the input, output, and key features of these algorithms. Second, analytical comparisons of all motion planning algorithms are made to provide a comprehensive understanding about performances and applications of the algorithm, according to criteria summarized. Third, analytical comparisons about the convergence of RL-based motion planning algorithms are specially made, because RL-based algorithms are the research focus recently.

Direct comparisons of motion planning algorithm

Traditional algorithms This group includes graph search algorithms, sampling-based algorithms, interpolating curve algorithms and reaction-based algorithms. Table 1 lists their input, output, and key features. According to Table 1: (1) Graph search algorithms, sampling-based algorithms, and interpolating curve algorithms use graph or map of workspace as the input and global trajectories are generated directly, while reaction-based algorithms use different types of information as the input. (2) Graph search algorithms find the shortest and collision-free trajectories by the search methods (e.g., best-first search). For example, Dijkstra’s algorithm is based on best-first search. However, the search process is computationally expensive because search space is large, therefore heuristic function is used to reduce the search space and the shortest path is found by estimating the overall cost (e.g., A*). (3) Sampling-based algorithms randomly sample collision-free trajectories in search space (e.g., PRM), and constraints (e.g., non-holonomic constraint) are needed for some algorithms (e.g., RRT) in the sampling process. (4) Interpolating curve algorithms plan their path by mathematical rules, and then planned path is smoothed by CAGD. (5) In reaction-based algorithms, the moving directions of robots in PFM (APF) are the gradients of the converged and combined potential field function \(U\). The velocity of robots in VOM is selected from RAV that is related to VO and RV. Exhaustive search and heuristic search can be used in the velocity selection process of VOM, and selected velocity must maximize the objective function \(U\) (e.g., distance traveled and motion time). Before velocity selection process of DWA, it is necessary to reduce the search space of velocity to obtain the resulting search space \({V}_{r}={V}_{s}\cap {V}_{a}\cap {V}_{d}\). Proper velocity of robots in DWA is selected from \({V}_{r}\), and selected velocity must maximize the objective function \(U\). Note that \(U\) includes a measure of progress towards a goal location, the forward velocity of the robot, and the distance to the next obstacle on the trajectory. (6) Outputs of graph search algorithms, sampling-based algorithms, and interpolating curve algorithms are trajectories. The outputs of PFM (APF) are directions of robots according to the gradient of the converged and combined potential field function \(U\), while outputs of VOM and DWA are selected velocity among possible velocities

.

Classical ML algorithms This group includes MSVM, LSTM, MCTS and CNN. These algorithms are listed in Table 2. According to that: (1) MSVM, LSTM and MCTS use well-prepared vector as the input, while CNN can directly use image as its input. (2) LSTM and MCTS can output time-sequential actions, because of their structures (e.g., tree) that can store and learn time-sequential features. MSVM and CNN cannot output time-sequential actions because they output one-step prediction by performing trained classifier. (3) MSVM plans the motion of the robot by training a maximum margin classifier. LSTM stores and processes inputs in its cell that is a stack structure, and then actions are outputted by performing trained LSTM model. MCTS is the combination of Monte-Carlo method and search tree. Environmental states and values are stored and updated in its node of tree, therefore actions are outputted by performing trained MCTS model. CNN converts high-dimensional images to low-dimensional features by convolutional layers. These low-dimensional features are used to train a CNN model, therefore actions are outputted by performing trained CNN model.

Optimal value RL This group here includes Q learning, DQN, double DQN, and dueling DQN. Features of algorithms here include the replay buffer, objectives of algorithm, and method of weight update. Comparisons of these algorithms are listed in Table 3. According to that: (1) Q learning normally uses well-prepared vector as the input, while DQN, double DQN and dueling DQN use images as their input because these algorithms use convolutional layer to process high-dimensional images. (2) Outputs of these algorithms are time-sequential actions by performing trained model. (3) DQN, double DQN and dueling DQN use replay buffer to reuse the experience, while Q learning collects experiences and learns from then in an online way. (4) DQN, double DQN and dueling DQN use MSE \({e}^{2}\) as their objectives. Their differences are: first, DQN obtains action values by neural network \(Q(s,a;\theta )\), while Q learning obtains action values by querying the Q-table; second, double DQN uses another neural network \({\theta }^{-}\) to evaluate selected actions to obtain better action values by \(Q\left({s}^{^{\prime}},\mathrm{arg}{\mathit{max}}_{a}Q\left({s}^{^{\prime}},{a}^{^{\prime}};{\theta }^{^{\prime}}\right);{\theta }^{-}\right)\); third, dueling DQN obtains action values by dividing them to advantage values and state values. The constraint \({\mathbb{E}}_{a\sim \pi (s)}\left[A(s,a)\right]=0\) is used on the advantage value, therefore a better action value is obtained by \(Q\left(s,a;\theta ,\alpha ,\beta \right)=V\left(s;\theta ,\beta \right)+\left\{A\left(s,a;\theta ,\alpha \right)-\frac{1}{|\mathcal{A}|}\sum_{{a}_{t}^{-}\in \mathcal{A}}A({s}_{t},{a}_{t}^{-};\theta ,\alpha )\right\}\). Networks that approximate action value in these algorithms are updated by minimizing MSE with gradient descent approach.