Abstract

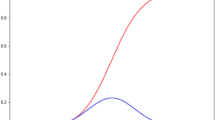

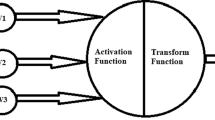

This paper presents a new neuron model that has a trainable activation function (TAF) in addition to the trainable weights of the conventional McCulloch-Pitts (M-P) model. The final activation function of each neuron is derived from a primitive activation function by training. A BP-like learning algorithm is presented for multilayer feedforward neural networks (MFNN) constructed from TAF neurons. Several simulation examples show the network capacity and performance advantages of the new MFNN compared with the conventional sigmoid MFNN.
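To make the idea of a trainable activation function concrete, the following is a minimal sketch of a single neuron whose activation shape is learned together with its weights. It is an illustration under stated assumptions, not the model defined in the paper: the abstract does not specify how the activation is parameterized, so the sketch assumes the activation is a weighted sum of a few fixed primitive functions with trainable coefficients `a`, initialized so that the neuron starts as an ordinary sigmoid unit. The names `TAFNeuron`, `BASIS`, and `train_step`, and the choice of sigmoid, tanh, and sine as primitives, are hypothetical.

```python
import math


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


# Assumed primitive functions and their derivatives (not taken from the paper).
BASIS = [sigmoid, math.tanh, math.sin]
BASIS_DERIV = [
    lambda s: sigmoid(s) * (1.0 - sigmoid(s)),
    lambda s: 1.0 - math.tanh(s) ** 2,
    math.cos,
]


class TAFNeuron:
    """Hypothetical neuron: y = sum_k a[k] * BASIS[k](w . x + b)."""

    def __init__(self, n_inputs):
        self.w = [0.1] * n_inputs
        self.b = 0.0
        # Coefficients of the activation; [1, 0, 0] means "plain sigmoid".
        self.a = [1.0, 0.0, 0.0]

    def net(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def forward(self, x):
        s = self.net(x)
        return sum(ak * g(s) for ak, g in zip(self.a, BASIS))

    def train_step(self, x, target, lr=0.05):
        """One gradient-descent step on the loss 0.5 * (y - target)**2."""
        s = self.net(x)
        y = sum(ak * g(s) for ak, g in zip(self.a, BASIS))
        err = y - target
        # Derivative of the output with respect to the net input s.
        dy_ds = sum(ak * dg(s) for ak, dg in zip(self.a, BASIS_DERIV))
        # Gradients for the activation coefficients, weights, and bias.
        grad_a = [err * g(s) for g in BASIS]
        grad_w = [err * dy_ds * xi for xi in x]
        grad_b = err * dy_ds
        self.a = [ak - lr * ga for ak, ga in zip(self.a, grad_a)]
        self.w = [wi - lr * gw for wi, gw in zip(self.w, grad_w)]
        self.b -= lr * grad_b
        return 0.5 * err * err
```

In this sketch the update for `a` follows the same chain-rule pattern as the weight update, which is one plausible reading of a "BP-like" rule; the paper's actual algorithm may differ in both the parameterization and the update.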

Cite this article

Wu, Y., Zhao, M. A neuron model with trainable activation function (TAF) and its MFNN supervised learning. Sci China Ser F 44, 366–375 (2001). https://doi.org/10.1007/BF02714739

DOI: https://doi.org/10.1007/BF02714739