Abstract

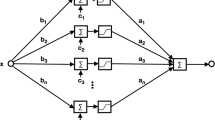

In this paper we demonstrate that finite linear combinations of compositions of a fixed, univariate function and a set of affine functionals can uniformly approximate any continuous function of n real variables with support in the unit hypercube; only mild conditions are imposed on the univariate function. Our results settle an open question about representability in the class of single hidden layer neural networks. In particular, we show that arbitrary decision regions can be arbitrarily well approximated by continuous feedforward neural networks with only a single internal, hidden layer and any continuous sigmoidal nonlinearity. The paper also discusses approximation properties of other types of nonlinearities that might be implemented by artificial neural networks.
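The approximants described above have the form G(x) = Σ_{j=1}^{N} α_j σ(y_jᵀx + θ_j), where σ is sigmoidal (σ(t) → 1 as t → +∞ and σ(t) → 0 as t → −∞), and the claim is that for any continuous f and ε > 0 some such G satisfies |G(x) − f(x)| < ε on the unit hypercube. That is an existence statement. The sketch below is only an illustration under stated assumptions: it uses the logistic function for σ, restricts to n = 1 on [0, 1], and builds one explicit G by a hypothetical "smoothed staircase" scheme (one steep sigmoid per jump between grid values of f). This construction, and the helper names `staircase_units`, `max_error`, and `interval_indicator`, are assumptions for illustration, not the paper's method. The last helper shows a one-dimensional decision region (an interval) approximated by the difference of two sigmoids.

```python
import math
from typing import Callable, List, Tuple

def sigmoid(t: float) -> float:
    """Logistic function, one continuous sigmoidal choice for sigma."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)  # t < 0, so math.exp cannot overflow here
    return z / (1.0 + z)

# One hidden unit (alpha, w, theta) contributes alpha * sigma(w * x + theta).
Unit = Tuple[float, float, float]

def evaluate(units: List[Unit], x: float) -> float:
    """G(x) = sum_j alpha_j * sigma(w_j * x + theta_j) for a scalar input x."""
    return sum(a * sigmoid(w * x + th) for a, w, th in units)

def staircase_units(f: Callable[[float], float], n: int, steepness: float) -> List[Unit]:
    """Hypothetical constructive scheme (not the paper's proof): approximate a
    continuous f on [0, 1] by a smoothed staircase through f at x_j = j / n."""
    # With w = 0 and theta = 0 a unit outputs alpha * 0.5, so alpha = 2 f(0) yields f(0).
    units: List[Unit] = [(2.0 * f(0.0), 0.0, 0.0)]
    for j in range(1, n + 1):
        height = f(j / n) - f((j - 1) / n)  # jump between neighbouring grid values
        center = (j - 0.5) / n              # smoothed step placed midway between grid points
        units.append((height, steepness, -steepness * center))
    return units

def max_error(f: Callable[[float], float], units: List[Unit], samples: int = 1001) -> float:
    """Sup-norm error of G against f, estimated on a uniform sample of [0, 1]."""
    xs = (i / (samples - 1) for i in range(samples))
    return max(abs(evaluate(units, x) - f(x)) for x in xs)

def interval_indicator(a: float, b: float, steepness: float) -> List[Unit]:
    """Two units whose difference approximates the indicator of [a, b],
    a one-dimensional decision region."""
    return [(1.0, steepness, -steepness * a), (-1.0, steepness, -steepness * b)]

def target(x: float) -> float:
    return math.sin(2.0 * math.pi * x)

if __name__ == "__main__":
    for n in (10, 50, 200):
        g = staircase_units(target, n, steepness=20.0 * n)
        print(len(g), "hidden units, max error", round(max_error(target, g), 4))
    region = interval_indicator(0.3, 0.6, steepness=100.0)
    print([evaluate(region, x) > 0.5 for x in (0.1, 0.45, 0.9)])  # prints [False, True, False]
```

The steepness is scaled with the number of grid points so that each sigmoid is sharp relative to the grid spacing; for this smooth target the estimated sup-norm error then shrinks as more hidden units are added, which is the behaviour the density result guarantees is always achievable for some choice of parameters.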




Additional information

This research was supported in part by NSF Grant DCR-8619103, ONR Contract N000-86-G-0202 and DOE Grant DE-FG02-85ER25001.


About this article

Cite this article

Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control Signal Systems 2, 303–314 (1989). https://doi.org/10.1007/BF02551274


DOI: https://doi.org/10.1007/BF02551274