Abstract

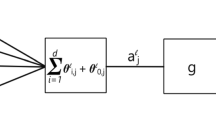

When solving large, complex optimization problems, the user faces three major difficulties: (i) the cost in human time of obtaining accurate expressions for the derivatives involved; (ii) the need to store second-derivative information; and (iii), of lessening importance, the time taken to solve the problem on the computer. For many problems, a significant part of that computing time can be attributed to solving Newton-like equations. In the algorithm described, these equations are solved using a conjugate direction method that needs the Hessian at the current point only in the form of its product with a trial vector. In this paper, we present a method that finds this product using automatic differentiation while requiring only vector storage. The method takes advantage of any sparsity in the Hessian matrix and computes exact derivatives. It avoids the complexity of symbolic differentiation, the inaccuracy of numerical differentiation, the labor of finding analytic derivatives, and the need for matrix storage. When far from a minimum, an accurate solution to the Newton equations is not justified, so an approximate solution is obtained by using a version of Dembo and Steihaug's truncated Newton algorithm (Ref. 1).
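To make the idea of a matrix-free Newton step concrete, the following is a minimal sketch in pure Python, not the authors' implementation. It assumes a hyper-dual number type to obtain exact gradient components and Hessian-vector products; the names `HyperDual`, `grad_and_hvp`, and `truncated_newton_step` are hypothetical, the forcing term `eta` is held fixed rather than adapted, and the sketch makes one function evaluation per variable without exploiting sparsity. Only vectors of length n are stored.

```python
class HyperDual:
    """Number a + b*e1 + c*e2 + d*e1*e2 with e1**2 == e2**2 == 0 (illustrative)."""

    def __init__(self, a, b=0.0, c=0.0, d=0.0):
        self.a, self.b, self.c, self.d = a, b, c, d

    @staticmethod
    def lift(x):
        return x if isinstance(x, HyperDual) else HyperDual(x)

    def __add__(self, other):
        o = HyperDual.lift(other)
        return HyperDual(self.a + o.a, self.b + o.b, self.c + o.c, self.d + o.d)

    __radd__ = __add__

    def __neg__(self):
        return HyperDual(-self.a, -self.b, -self.c, -self.d)

    def __sub__(self, other):
        return self + (-HyperDual.lift(other))

    def __rsub__(self, other):
        return HyperDual.lift(other) + (-self)

    def __mul__(self, other):
        o = HyperDual.lift(other)
        return HyperDual(
            self.a * o.a,
            self.a * o.b + self.b * o.a,
            self.a * o.c + self.c * o.a,
            self.a * o.d + self.b * o.c + self.c * o.b + self.d * o.a,
        )

    __rmul__ = __mul__


def dot(u, w):
    return sum(ui * wi for ui, wi in zip(u, w))


def grad_and_hvp(f, x, v):
    """Return (gradient, Hessian @ v) at x using only vectors of length n."""
    n = len(x)
    g, hv = [0.0] * n, [0.0] * n
    for i in range(n):
        # Perturb x along v in the e1 part and along unit vector i in the e2 part.
        args = [HyperDual(x[j], v[j], 1.0 if j == i else 0.0) for j in range(n)]
        out = f(args)
        g[i], hv[i] = out.c, out.d  # e2 part: df/dx_i; e1*e2 part: (H v)_i
    return g, hv


def truncated_newton_step(f, x, eta=0.1, max_iter=50):
    """Approximately solve H p = -g by conjugate gradients, stopping early."""
    n = len(x)
    g, _ = grad_and_hvp(f, x, [0.0] * n)
    p = [0.0] * n
    r = [-gi for gi in g]  # residual of H p = -g at p = 0
    d = r[:]
    rr = dot(r, r)
    tol2 = eta * eta * rr  # truncate once ||r|| <= eta * ||g||
    for _ in range(max_iter):
        if rr <= tol2:
            break
        _, hd = grad_and_hvp(f, x, d)  # the Hessian appears only in this product
        curv = dot(d, hd)
        if curv <= 0.0:
            # Non-positive curvature: keep the current p, or fall back to -g.
            if all(pi == 0.0 for pi in p):
                p = d[:]
            break
        alpha = rr / curv
        p = [pi + alpha * di for pi, di in zip(p, d)]
        r = [ri - alpha * hi for ri, hi in zip(r, hd)]
        rr_new = dot(r, r)
        d = [ri + (rr_new / rr) * di for ri, di in zip(r, d)]
        rr = rr_new
    return p
```

The objective passed as `f` must be written with `+`, `-`, and `*` only, since those are the operations this sketch overloads. A loose `eta` yields the cheap, approximate step appropriate far from a minimum; tightening `eta` drives the inner iteration toward the full Newton step.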

References

Dembo, R., and Steihaug, T., Truncated Newton Methods for Large Scale Optimization, Mathematical Programming, Vol. 26, pp. 190–212, 1982.

Rall, L. B., Automatic Differentiation: Techniques and Applications, Springer-Verlag, Berlin, Germany, 1981.

Dixon, L. C. W., and Price, R. C., Numerical Experience with the Truncated Newton Method, Numerical Optimisation Centre, Hatfield Polytechnic, Technical Report No. 169, 1986.

Dixon, L. C. W., Dolan, P. D., and Price, R. C., Finite Element Optimization, Proceedings of the Meeting on Simulation and Optimization of Large Systems, Reading, England, 1986, edited by A. J. Osiadacz, Oxford Science Publications, Oxford, England, 1988.

Pope, J., and Shepherd, J., On the Integrated Analysis of Catch-at-Age and Groundfish Survey or CPUE Data, Ministry of Fisheries and Food, Lowestoft, England, 1984.

Dembo, R., Eisenstat, S. C., and Steihaug, T., Inexact Newton Methods, SIAM Journal on Numerical Analysis, Vol. 19, pp. 400–408, 1982.

Steihaug, T., The Conjugate Gradient Method and Trust Regions in Large Scale Optimization, SIAM Journal on Numerical Analysis, Vol. 20, pp. 626–637, 1983.

Anonymous, Optima Manual, Numerical Optimisation Centre, Hatfield Polytechnic, Hatfield, Hertfordshire, England, 1984.

Additional information

This paper was presented at the SIAM National Meeting, Boston, Massachusetts, 1986.

Cite this article

Dixon, L.C.W., Price, R.C. Truncated Newton method for sparse unconstrained optimization using automatic differentiation. J Optim Theory Appl 60, 261–275 (1989). https://doi.org/10.1007/BF00940007

DOI: https://doi.org/10.1007/BF00940007