Abstract

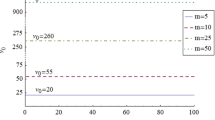

In this paper, the performance of three quadratically convergent algorithms coupled with four one-dimensional search schemes is studied on several nonquadratic examples. The algorithms are the rank-one algorithm (Algorithm I), the projection algorithm (Algorithm II), and the Fletcher-Reeves algorithm (Algorithm III). The search schemes are the exact quadratic search (EQS), the exact cubic search (ECS), the approximate quadratic search (AQS), and the approximate cubic search (ACS). Performance is measured by the number of iterations and the number of equivalent function evaluations required for convergence. The numerical experiments lead to the following conclusions: (a) relaxing the search stopping condition generally increases the number of iterations, but it decreases the number of equivalent function evaluations; therefore, approximate searches should be preferred to exact searches; (b) ACS, ECS, and EQS require about the same number of iterations; therefore, more sophisticated, higher-order search schemes are not called for; the present ACS scheme, modified so that only the function, instead of the gradient, is used in bracketing the minimal point, could prove to be the most desirable in terms of the number of equivalent function evaluations; (c) for Algorithm I, ACS and AQS yield almost identical results; it is believed that further improvements in efficiency are possible with a fixed-stepsize approach, which bypasses the one-dimensional search completely; (d) the combination of Algorithm II and ACS is highly efficient for functions whose order is higher than two and whose Hessian at the minimal point is singular; and (e) Algorithm III, even with the best search scheme, is inefficient for functions with flat bottoms; it is doubtful that the simplicity of its update compensates for this inefficiency in such pathological cases.
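The interplay between the outer algorithm and the search stopping condition in conclusions (a) and (b) can be illustrated with a short Python sketch. It is not the authors' code: it pairs a Fletcher-Reeves iteration (the update behind Algorithm III) with a cubic-interpolation line search. Everything else is an assumption made for illustration, including the hypothetical function names `cubic_step`, `cubic_line_search`, and `fletcher_reeves`, the doubling bracket that uses the gradient, the restart every n iterations, and the stopping test |φ'(α)| ≤ σ|φ'(0)|, where a very small σ plays the role of an exact search and a larger σ that of an approximate one. Rosenbrock's function is used only as a familiar nonquadratic test case. Because the abstract does not say how gradient calls are weighted into equivalent function evaluations, the sketch counts function and gradient calls separately.

```python
import math

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))

def cubic_step(a, fa, ga, b, fb, gb):
    """Minimizer of the cubic matching (f, f') at a < b; assumes ga < 0 < gb."""
    d1 = ga + gb + 3.0 * (fa - fb) / (b - a)
    d2 = math.sqrt(max(d1 * d1 - ga * gb, 0.0))
    t = b - (b - a) * (gb + d2 - d1) / (gb - ga + 2.0 * d2)
    # Safeguard: fall back to bisection if round-off leaves the bracket.
    return t if a < t < b else 0.5 * (a + b)

def cubic_line_search(phi, f0, slope0, sigma, step=1.0, max_iter=50):
    """Cubic-interpolation search along a descent direction (slope0 < 0).

    phi(alpha) returns (value, slope). ASSUMED stopping test:
    |slope| <= sigma * |slope0|; tiny sigma ~ exact, larger sigma ~ approximate.
    """
    a, fa, ga = 0.0, f0, slope0
    b = step
    fb, gb = phi(b)
    # Bracket by doubling until the slope is nonnegative (uses the gradient).
    while gb < 0.0:
        if b > 1e8:
            return b  # no bracket found; accept the long step
        a, fa, ga = b, fb, gb
        b *= 2.0
        fb, gb = phi(b)
    t, gt = b, gb
    for _ in range(max_iter):
        if abs(gt) <= sigma * abs(slope0):
            break
        t = cubic_step(a, fa, ga, b, fb, gb)
        ft, gt = phi(t)
        if gt < 0.0:
            a, fa, ga = t, ft, gt  # minimum lies to the right of t
        else:
            b, fb, gb = t, ft, gt  # minimum lies to the left of t
    return t  # always the most recent point passed to phi

def fletcher_reeves(f, grad, x0, sigma=0.1, tol=1e-6, max_iter=10000):
    """Fletcher-Reeves conjugate gradients, restarted every n iterations (assumed).

    Returns (x, iterations, counts); counts tallies raw f and gradient calls.
    """
    n = len(x0)
    x = list(x0)
    fx, g = f(x), grad(x)
    counts = {"f": 1, "g": 1}
    d = [-gi for gi in g]
    for k in range(max_iter):
        if math.sqrt(dot(g, g)) < tol:
            return x, k, counts
        slope0 = dot(g, d)
        if slope0 >= 0.0:  # inexact searches can spoil descent: restart
            d = [-gi for gi in g]
            slope0 = dot(g, d)
        last = {}

        def phi(alpha):
            y = [xi + alpha * di for xi, di in zip(x, d)]
            fy, gy = f(y), grad(y)
            counts["f"] += 1
            counts["g"] += 1
            last.update(x=y, f=fy, g=gy)  # cache the latest trial point
            return fy, dot(gy, d)

        cubic_line_search(phi, fx, slope0, sigma)
        x, fx, g_new = last["x"], last["f"], last["g"]
        beta = dot(g_new, g_new) / dot(g, g)  # Fletcher-Reeves ratio
        g = g_new
        if (k + 1) % n == 0:
            d = [-gi for gi in g]  # periodic restart along -g
        else:
            d = [-gi + beta * di for gi, di in zip(g, d)]
    return x, max_iter, counts

def rosenbrock(x):
    return 100.0 * (x[1] - x[0] ** 2) ** 2 + (1.0 - x[0]) ** 2

def rosenbrock_grad(x):
    return [-400.0 * x[0] * (x[1] - x[0] ** 2) - 2.0 * (1.0 - x[0]),
            200.0 * (x[1] - x[0] ** 2)]

if __name__ == "__main__":
    for sigma in (1e-6, 0.5):  # tight ("exact-like") vs relaxed search
        x, iters, counts = fletcher_reeves(
            rosenbrock, rosenbrock_grad, [-1.2, 1.0], sigma=sigma)
        print(f"sigma={sigma}: {iters} iterations, {counts}, x={x}")
```

Running the script prints, for a tight and a relaxed σ, the iteration count and the raw evaluation counts. The numbers depend entirely on the assumptions above and are not the paper's results; on a quadratic, the cubic step recovers the exact line minimizer, which is why the tight setting behaves like an exact search there.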




Additional information

Communicated by A. Miele

This research was supported by the National Science Foundation, Grant No. 32453.


About this article

Cite this article

Huang, H.Y., Chambliss, J.P. Quadratically convergent algorithms and one-dimensional search schemes. J Optim Theory Appl 11, 175–188 (1973). https://doi.org/10.1007/BF00935882
