Abstract

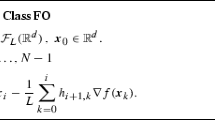

This note discusses conditions for the convergence of algorithms that find the minimum of a function of several variables by solving a sequence of one-variable minimization problems. Theorems are given that contrast the weakest conditions for convergence of gradient-related algorithms with those for more general algorithms, including those that minimize in turn along a sequence of uniformly linearly independent search directions.
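As a rough illustration of the class of methods described, the Python sketch below minimizes a function of several variables by repeatedly solving one-variable problems along a fixed, linearly independent set of search directions. It is not taken from the paper: the names `golden_section`, `sequential_minimize`, and `quadratic`, the golden-section line search, the fixed search bracket, and the test function are all assumptions chosen for illustration.

```python
import math


def golden_section(phi, lo, hi, tol=1e-10):
    """Minimize a unimodal one-variable function phi on [lo, hi]."""
    ratio = (math.sqrt(5.0) - 1.0) / 2.0  # about 0.618
    a, b = lo, hi
    c = b - ratio * (b - a)
    d = a + ratio * (b - a)
    fc, fd = phi(c), phi(d)
    while b - a > tol:
        if fc < fd:
            # Minimum lies in [a, d]; reuse c as the new right probe.
            b, d, fd = d, c, fc
            c = b - ratio * (b - a)
            fc = phi(c)
        else:
            # Minimum lies in [c, b]; reuse d as the new left probe.
            a, c, fc = c, d, fd
            d = a + ratio * (b - a)
            fd = phi(d)
    return 0.5 * (a + b)


def sequential_minimize(f, x0, directions, sweeps=50, bracket=10.0):
    """Minimize f by line searches along each direction in turn.

    Assumption for this sketch: each line minimum lies within
    [-bracket, bracket] of the current point, and f is unimodal there.
    """
    x = list(x0)
    for _ in range(sweeps):
        for d in directions:
            # One-variable problem: t -> f(x + t * d).
            def phi(t, d=d):
                return f([xi + t * di for xi, di in zip(x, d)])

            t = golden_section(phi, -bracket, bracket)
            x = [xi + t * di for xi, di in zip(x, d)]
    return x


def quadratic(v):
    """Hypothetical strictly convex test function of two variables."""
    x, y = v
    return (x - 1.0) ** 2 + 2.0 * (y + 2.0) ** 2 + x * y
```

Calling `sequential_minimize(quadratic, [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])` searches along the coordinate axes; for this particular quadratic the unique stationary point is at x = 16/7, y = -18/7, and the iterates approach it. A strictly convex quadratic is an easy case, so the sketch says nothing about the weaker conditions the theorems address.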

Additional information

Communicated by M. R. Hestenes

The senior author benefited greatly from discussion of this problem with various participants at the NATO Summer School on Mathematical Programming in Theory and Practice held at Figueira da Foz, Portugal, June 1972, and in particular with M. J. D. Powell, who, in continuing correspondence, has produced a series of examples and counterexamples for various versions of the theorems.

About this article

Cite this article

Sargent, R.W.H., Sebastian, D.J. On the convergence of sequential minimization algorithms. J Optim Theory Appl 12, 567–575 (1973). https://doi.org/10.1007/BF00934779

DOI: https://doi.org/10.1007/BF00934779