Abstract

Recently, various decisions in security-related processes have come to be assisted by so-called algorithmic decision making (ADM) systems, e.g., for predicting the recidivism rates of criminals, for assessing the risk that a person is a terrorist, or for predicting future criminal acts (predictive policing). However, the quality of such risk assessments depends on many modeling decisions. Given the requirements of proper democratic processes, security-related ADM systems in particular might thus require societal oversight. We argue that democratic processes also need to discuss and decide how the quality of such a system should be assessed: for example, neither the proper measure of racial bias nor the proper measure of overall prediction accuracy has been agreed upon so far. Finally, even if an ADM system were as objective and perfect as it can be, its embedding in an important societal process might have severe side effects and needs to be controlled. In this article, we analyze the situation from a political science perspective. We then point to some crucial decisions that need to be made in the planning stage, questions that need to be asked when purchasing a system, and measures that need to be implemented to assess the overall quality of the societal process in which the system is embedded.

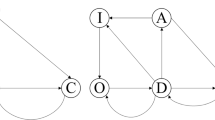

Figure by Algorithm Accountability Lab [Prof. Dr. K. A. Zweig]/CC BY

Source: Democracy Barometer (Bühlmann et al. 2012) (with minor changes by the authors)

Notes

The assumption that an ADM system is in and of itself objective and transparent is not correct. It is, however, possible to construct such systems so that their decision process is transparent, though sometimes only at the cost of simplifying their decision structure. They are objective only in the sense that people with the same properties will be judged the same, independent of the time of day or other sources of typically human bias.

Admittedly, the criminal justice system is not the only field in which ADM systems are increasingly used by public actors. Other fields are, for instance, decision-making processes in social policies (Niklas et al. 2015) or selection processes in higher education (Frouillou 2016). Nevertheless, security-related decisions seem to be the most researched area in this context, which is not surprising given the importance of these decisions for a person's life chances.

It needs to be noted that in computer science, most systems are evaluated by a single measure rather than by an array of different and possibly conflicting measures. This is an intrinsic feature of all processes that use computers to find an optimal ADM system.

See below for a more thorough discussion of the “quality of democracy”.

Such a classification can be based on a scoring algorithm where each person is assigned a score or probability to be a terrorist. In most cases, institutions will then define a threshold which defines the two classes: people with a score higher than the threshold and those with a score of at most the threshold.
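As a minimal sketch of the thresholding step described in this note, the following Python snippet splits scored persons into the two classes. The names `ScoredPerson` and `classify`, the score range, and the example values are assumptions made purely for illustration; they are not taken from any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredPerson:
    person_id: str
    score: float  # hypothetical risk score, assumed here to lie in [0, 1]


def classify(people, threshold):
    """Split people into the two classes induced by the threshold.

    Returns (above, at_most): persons with a score strictly higher than
    the threshold, and persons with a score of at most the threshold.
    """
    above = [p for p in people if p.score > threshold]
    at_most = [p for p in people if p.score <= threshold]
    return above, at_most


# Invented example values, for illustration only.
people = [
    ScoredPerson("A", 0.91),
    ScoredPerson("B", 0.50),
    ScoredPerson("C", 0.12),
]

above, at_most = classify(people, threshold=0.5)
print([p.person_id for p in above])    # ['A']
print([p.person_id for p in at_most])  # ['B', 'C']
```

Note that person B, whose score equals the threshold, falls into the lower class. Where exactly the threshold is placed is one of the modeling decisions made by the institution, not by the scoring algorithm.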

The formular can be viewed here: https://drive.google.com/file/d/0B8KbLffq9fg5cS0zbzF2VkY1dEpzZW4tZUttT3hVY29LUkhv/view (downloaded last on 28th of January, 2018).

Modern software is designed in a modular way, in which each subroutine encapsulates a small, well-defined functionality.

A data set in which the class assignment which should be predicted (e.g., terroristic courier or not) is already indicated.
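To make the role of such a labeled data set concrete, the sketch below compares the classes predicted by thresholding a score with the classes already indicated in the data and counts the four possible outcomes. The records, the threshold, and the function name `confusion_counts` are hypothetical and chosen only for illustration.

```python
from collections import Counter

# Hypothetical labeled records: (score assigned by some system, known true class).
# The values are invented; True means the person belongs to the class of interest.
labeled_data = [
    (0.91, True),
    (0.75, False),
    (0.40, True),
    (0.12, False),
    (0.05, False),
]


def confusion_counts(data, threshold):
    """Count how predictions (score > threshold) agree with the known labels."""
    counts = Counter()
    for score, is_positive in data:
        predicted_positive = score > threshold
        if predicted_positive and is_positive:
            counts["true_positive"] += 1
        elif predicted_positive and not is_positive:
            counts["false_positive"] += 1
        elif not predicted_positive and is_positive:
            counts["false_negative"] += 1
        else:
            counts["true_negative"] += 1
    return counts


counts = confusion_counts(labeled_data, threshold=0.5)
print(dict(counts))
```

Quality measures such as overall accuracy are computed from these four counts, which is why the choice of measure matters: different measures weigh false positives and false negatives differently.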

We do not discuss the case of “fairness” in algorithm-informed decision-making here, although it clearly is relevant in the context of judicial decisions. However, as we start from a broader framework of democratic theory, the issue of fairness will not be central here. Moreover, it has been widely discussed in the scientific debate around the use of algorithms (for a state-of-the-art report, see Berk et al. 2017). It is important to note that there are also discussions on the question of fairness from the computer science perspective (Kleinberg et al. 2017; Angwin et al. 2016; Brennan et al. 2009). Both fields agree that the question of algorithmic or societal fairness is not yet fully solved.

For a more critical view on this call for transparency, see the recent contribution by Ananny and Crawford (2017). In fact, the further discussion (see Sect. 5.0.2) touches on several of the limitations that the authors have put forward (e.g., the need for explanation in order to be held accountable and the need to have enforcement rules if a transparent process proves to be problematic).

Clearly, transparency also matters for due process (see above). However, whereas the possibility to oppose decisions (e.g., via the creation of independent bodies) is the core of the argument on due process, accountability is unthinkable without transparency of rules and procedures.

Although it is probable that ADM systems will be used increasingly in the upcoming years, it must remain conceivable, from the perspective of democratic accountability, that a certain system in a specific decision-making context will be stopped.

References

Achen CH, Bartels LM (2016) Democracy for realists. Princeton University Press, Princeton

Ananny M, Crawford K (2017) Seeing without knowing: limitations of the transparency ideal and its application to algorithmic accountability. New Media Soc 33:1–17

Angwin J, Larson J, Mattu S, Kirchner L (2016) Machine bias: there’s software used across the country to predict future criminals. And it’s biased against blacks. ProPublica. Accessed 27 Oct 2017 (Online)

Barber B (2003) Strong democracy: participatory politics for a new age. University of California Press, Berkeley

Beetham D (1999) Democracy and human rights. Polity Press, Cambridge

Berk R, Heidari H, Jabbari S, Kearns M, Roth A (2017) Fairness in criminal justice risk assessments: the state of the art. arXiv:1703.09207

Brennan T, Dieterich W, Ehret B (2009) Evaluating the predictive validity of the COMPAS risk and needs assessment system. Crim Justice Behav 36(1):21–40

Bühlmann M, Merkel W, Müller L, Weßels B (2012) The democracy barometer: a new instrument to measure the quality of democracy and its potential for comparative research. Eur Polit Sci 11(4):519–536

Burrell J (2016) How the machine thinks: understanding opacity in machine learning algorithms. Big Data Soc 3(1):2053951715622512

Buzan B, Wæver O, De Wilde J (1998) Security: a new framework for analysis. Lynne Rienner, Boulder

Citron DK, Pasquale FA (2014) The scored society: due process for automated predictions. Wash Law Rev 89:1–33

Dahl RA (1971) Polyarchy. Participation and opposition. Yale University Press, New Haven

Danaher et al (2017) Algorithmic governance: developing a research agenda through the power of collective intelligence. Big Data Soc. https://doi.org/10.1177/2053951717726554

Desmarais SL, Singh JP (2013) Risk assessment instruments validated and implemented in correctional settings in the United States—guide 028352. National Institute of Corrections, Washington

Diakopoulos N (2015) Algorithmic accountability: journalistic investigation of computational power structures. Digit Journal 3(3):398–415

Diakopoulos N (2016) We need to know the algorithms the government uses to make important decisions about us. The Conversation. https://theconversation.com/we-need-to-know-the-algorithms-the-government-uses-to-make-important-decisions-about-us-57869. Accessed 29 Dec 2017 (Online)

Duwe G, Kim K (2017) Out with the old and in with the new? An empirical comparison of supervised learning algorithms to predict recidivism. Crim Justice Policy Rev 28(6):570–600

Egbert S (2018) About discursive storylines and techno-fixes: the political framing of the implementation of predictive policing in Germany. Eur J Secur Res. https://doi.org/10.1007/s41125-017-0027-3

Empter S, Novy L (2009) Sustainable governance indicators 2009: policy performance and executive capacity in the OECD. Verlag Bertelsmann Stiftung, Gütersloh

EPIC (2017) Algorithms in the criminal justice system. https://epic.org/algorithmic-transparency/crim-justice/. Accessed 27 Oct 2017 (Online)

Epifanio M (2011) Legislative response to international terrorism. J Peace Res 48(3):399–411

Epifanio M (2014) The politics of targeted and untargeted counterterrorist regulations. Terror Polit Violence 28(4):1–22

Flaxman S, Goel S, Rao JM (2016) Filter bubbles, echo chambers, and online news consumption. Public Opin Q 80(S1):298–320

Freeman K (2016) Algorithmic injustice: how the Wisconsin supreme court failed to protect due process rights in State v. Loomis. N Carol J Law Technol 18:75–233

Frouillou L (2016) Post-bac admission: an algorithmically constrained “free choice”. In: Justice spatiale|spatial justice, no. 10. https://www.jssj.org/wp-content/uploads/2016/07/JSSJ10_3_VA.pdf. Accessed June 2016

Habermas J (1992) Faktizität und Geltung. Beiträge zur Diskurstheorie des Rechts und des Demokratischen Rechtsstaats. Suhrkamp, Frankfurt am Main

Helbing D (2015) The automation of society is next. Createspace, North Charleston

Helbing D, Pournaras E (2015) Build digital democracy. Nature 527(7576):33–34

Helbing D, Frey BS, Gigerenzer G, Hafen E, Hagner M, Hofstetter Y, van den Hoven J, Zicari RV, Zwitter A (2017) Will democracy survive big data and artificial intelligence? Sci Am. https://www.scientificamerican.com/article/will-democracy-survive-big-data-and-artificial-intelligence. Accessed 25 Feb 2017

Hildebrandt M (2016) Law as information in the era of data-driven agency. Mod Law Rev 79(1):1–30

Hollyer JR, Rosendorff BP, Vreeland JR (2011) Democracy and transparency. J Polit 73(4):1191–1205

Huber GA, Gordon SC (2004) Accountability and coercion: is justice blind when it runs for office? Am J Polit Sci 48(2):247–263

Hutchby I (2001) Technology, texts and affordances. Sociology 35(2):441–456

Jäckle S, Wagschal U, Bauschke R (2012) Das Demokratiebarometer: basically theory driven? Z Vgl Polit 6(1):99–125

Jäckle S, Wagschal U, Bauschke R (2013) Allein die Masse machts nicht—Antwort auf die Replik von Merkel et al. zu unserer Kritik am Demokratiebarometer. Z Vgl Polit 7(2):143–153

Just N, Latzer M (2017) Governance by algorithms: reality construction by algorithmic selection on the internet. Media Cult Soc 39(2):238–258

Kahneman D, Tversky A (1979) Prospect theory: an analysis of decision under risk. Econometrica 47(2):263–292

Khanna P (2017) Technocracy in America: rise of the info-state. CreateSpace Independent Publishing Platform

Kitchin R (2017) Thinking critically about and researching algorithms. Inf Commun Soc 20(1):14–29

Kleinberg J, Mullainathan S, Raghavan M (2017) Inherent trade-offs in the fair determination of risk scores. https://arxiv.org/abs/1609.05807

Kneip S (2011) Constitutional courts as democratic actors and promoters of the rule of law: institutional prerequisites and normative foundations. Z Vgl Polit 5(1):131–155

Krafft TD (2017) Qualitätsmaße binärer Klassifikatoren im Bereich kriminalprognostischer Instrumente der vierten Generation. Master’s thesis, TU Kaiserslautern. arxiv.org/abs/1804.01557v1

Kroll JA, Huey J, Barocas S, Felten EW, Reidenberg JR, Robinson DG, Yu H (2017) Accountable algorithms. Univ Pa Law Rev 165:633

Lacey N (2008) The prisoners’ dilemma. CUP, Cambridge

Lee S, Jensen C, Arndt C, Wenzelburger G (2017) Risky business? Welfare state reforms and government support in Britain and Denmark. Br J Polit Sci. https://doi.org/10.1017/S0007123417000382

Lepri B, Oliver N, Letouzé E, Pentland A, Vinck P (2017) Fair, transparent, and accountable algorithmic decision-making processes. Philos Technol. https://doi.org/10.1007/s13347-017-0279-x

Lischka K, Klingel A (2017) Wenn Maschinen Menschen bewerten. Studie der Bertelsmann Stiftung, Gütersloh

Mair P (2008) Democracies. Oxford University Press, Oxford

Merkel W (2010) Systemtransformation. Springer, Wiesbaden

Merkel W, Tanneberg D, Bühlmann M (2013) Den Daumen senken: Hochmut und Kritik. Z Vgl Polit 7(1):75–84

Michie D, Spiegelhalter DJ, Taylor CC (1994) Machine learning, neural and statistical classification. Ellis Horwood Ltd. ISBN-13: 978-0131063600

Niklas J, Sztandar-Sztanderska K, Szymielewicz K (2015) Profiling the unemployed in Poland: social and political implications of algorithmic decision making. Fundacja Panoptykon, Warsaw

Northpointe (2012) Practitioners guide to COMPAS core. http://www.northpointeinc.com/downloads/compas/Practitioners-Guide-COMPAS-Core-_031915.pdf. Accessed 27 Oct 2017 (Online)

O’Neil C (2016) Weapons of math destruction. How big data increases inequality and threatens democracy, 1st edn. Crown, New York

Parchoma G (2014) The contested ontology of affordances: implications for researching technological affordances for collaborative knowledge production. Comput Hum Behav 37:360–368

Pasquale F (2016) The black box society. The secret algorithms that control money and information. Harvard University Press, Cambridge (first Harvard University Press paperback edition)

Pelzer R (2018) Policing of terrorism using data from social media. Eur J Secur Res. https://doi.org/10.1007/s41125-018-0029-9

Press SJ, Wilson S (1978) Choosing between logistic regression and discriminant analysis. J Am Stat Assoc 73(364):699–705

Przeworski A, Stokes SC, Manin B (1999) Democracy, accountability, and representation, vol 2. Cambridge University Press, Cambridge

Richey S, Taylor JB (2017) Google and democracy: politics and the power of the internet. Routledge, Abingdon

Samuelson W, Zeckhauser R (1988) Status quo bias in decision making. J Risk Uncertain 1(1):7–59

Schedler A (1999) Conceptualizing accountability. Lynne Rienner, Boulder, pp 13–28

Schumpeter JA (1942) Capitalism, socialism and democracy. Harper & Brothers, New York

Soroka SN, Wlezien C (2010) Degrees of democracy: politics, public opinion, and policy. Cambridge University Press, Cambridge

Steinbock DJ (2005) Data matching, data mining, and due process. Ga Law Rev 40(1):1–84

The Intercept (2015) US government designated prominent Al Jazeera journalist as member of Al Qaeda. https://theintercept.com/document/2015/05/08/skynet-courier/. Accessed 27 Oct 2017 (Online)

Waldron J (2003) Security and liberty. J Polit Philos 11(2):191–210

Waldron J (2006) Safety and security. Neb Law Rev 85(2):454–507

Wenzelburger G (2018) Political economy or political systems? How welfare capitalism and political systems affect law and order policies in twenty western industrialised nations. Soc Policy Soc 17(2):209–226

Wenzelburger G, Staff H (2017) The third way and the politics of law and order: explaining differences in law and order policies between Blair’s new labour and Schröder’s SPD. Eur J Polit Res 56:553–577

Wormith JS (2017) Automated offender risk assessment: next generation or black hole. Criminol Public Policy 16(1):281–303

Yeung K (2017) Algorithmic regulation: a critical interrogation. Regul Gov. https://doi.org/10.1111/rego.12158

Ziewitz M (2016) Governing algorithms: myth, mess and methods. Sci Technol Human Val 41(1):3–16

Zweig KA (2016a) 2. Arbeitspapier: Überprüfbarkeit von Algorithmen. https://algorithmwatch.org/de/zweites-arbeitspapier-ueberpruefbarkeit-algorithmen/. Accessed 27 Oct 2016 (Online)

Zweig KA (2016b) Network analysis literacy: a practical approach to the analysis of networks. Springer, Berlin

Cite this article

Zweig, K.A., Wenzelburger, G. & Krafft, T.D. On Chances and Risks of Security Related Algorithmic Decision Making Systems. Eur J Secur Res 3, 181–203 (2018). https://doi.org/10.1007/s41125-018-0031-2

DOI: https://doi.org/10.1007/s41125-018-0031-2