Abstract

Euclid is a European Space Agency medium-class mission selected for launch in 2020 within the Cosmic Vision 2015–2025 program. The main goal of Euclid is to understand the origin of the accelerated expansion of the universe. Euclid will explore the expansion history of the universe and the evolution of cosmic structures by measuring shapes and redshifts of galaxies as well as the distribution of clusters of galaxies over a large fraction of the sky. Although the main driver for Euclid is the nature of dark energy, Euclid science covers a vast range of topics, from cosmology to galaxy evolution to planetary research. In this review we focus on cosmology and fundamental physics, with a strong emphasis on science beyond the current standard models. We discuss five broad topics: dark energy and modified gravity, dark matter, initial conditions, basic assumptions and questions of methodology in the data analysis. This review has been planned and carried out within Euclid’s Theory Working Group and is meant to provide a guide to the scientific themes that will underlie the activity of the group during the preparation of the Euclid mission.


1 Introduction

Euclid (Laureijs et al. 2011; Refregier 2009; Cimatti et al. 2009) is an ESA medium-class mission selected for the second launch slot (expected for 2020) of the Cosmic Vision 2015–2025 program. The main goal of Euclid is to understand the physical origin of the accelerated expansion of the universe. Euclid is a satellite equipped with a 1.2 m telescope and three imaging and spectroscopic instruments working in the visible and near-infrared wavelength domains. These instruments will explore the expansion history of the universe and the evolution of cosmic structures by measuring shapes and redshifts of galaxies over a large fraction of the sky. The satellite will be launched by a Soyuz ST-2.1B rocket and transferred to the L2 Lagrange point for a 6-year mission that will cover at least 15,000 square degrees of sky. Euclid plans to image a billion galaxies and measure nearly 100 million galaxy redshifts.

These impressive numbers will allow Euclid to realize a detailed reconstruction of the clustering of galaxies out to redshift 2 and of the pattern of light distortion from weak lensing out to redshift 3. The two main probes, redshift clustering and weak lensing, are complemented by a number of additional cosmological probes: the cross correlation between the cosmic microwave background and the large-scale structure; the abundance and properties of galaxy clusters; strong lensing; and possibly luminosity distances from Type Ia supernovae. To extract the maximum information, including from the nonlinear regime of perturbations, these probes will require accurate high-resolution numerical simulations. Besides cosmology, Euclid will provide an exceptional dataset for galaxy evolution, galaxy structure, and planetary searches. All Euclid data will be publicly released after a relatively short proprietary period and will constitute for many years the ultimate survey database for astrophysics.

A huge enterprise like Euclid requires careful planning, not only of the technology but also of the scientific exploitation of the future data. Many ideas and models that today seem to be abstract exercises for theorists will in fact finally become testable with the Euclid surveys. The main science driver of Euclid is clearly the nature of dark energy, the enigmatic substance that is driving the accelerated expansion of the universe. As we discuss in detail in Part I, under the label “dark energy” we include a wide variety of hypotheses, from extradimensional physics to higher-order gravity, from new fields and new forces to large violations of homogeneity and isotropy. The simplest explanation, Einstein’s famous cosmological constant, is still currently acceptable from the observational point of view, but is not the only one, nor necessarily the most satisfying, as we will argue. Therefore, it is important to identify the main observables that will help distinguish the cosmological constant from the alternatives and to forecast Euclid’s performance in testing the various models.

Since clustering and weak lensing also depend on the properties of dark matter, Euclid is a dark matter probe as well. In Part II we focus on the models of dark matter that can be tested with Euclid data, from massive neutrinos to ultra-light scalar fields. We show that Euclid can measure the neutrino mass to a very high precision, making it one of the most sensitive neutrino experiments of its time, and it can help identify new light fields in the cosmic fluid.

The evolution of perturbations depends not only on the fields and forces active during the cosmic eras, but also on the initial conditions. By reconstructing the initial conditions we open a window on the inflationary physics that created the perturbations, and allow ourselves the chance of determining whether a single inflaton drove the expansion or a mixture of fields. In Part III we review the choices of initial conditions and their impact on Euclid science. In particular we discuss deviations from simple scale invariance, mixed isocurvature-adiabatic initial conditions, non-Gaussianity, and the combined forecasts of Euclid and CMB experiments.

Practically all of cosmology is built on the Copernican principle, a very fruitful idea postulating a homogeneous and isotropic background. Although this assumption has been confirmed time and again since the beginning of modern cosmology, Euclid’s capabilities can push the test to new levels. In Part IV we challenge some of the basic cosmological assumptions and predict how well Euclid can constrain them. We explore the basic relation between luminosity and angular diameter distance that holds in any metric theory of gravity if the universe is transparent to light, and the existence of large violations of homogeneity and isotropy, either due to local voids or to the cumulative stochastic effects of perturbations, or to intrinsically anisotropic vector fields or spacetime geometry.

Finally, in Part V we review some of the statistical methods that are used to forecast the performance of probes like Euclid, and we discuss some possible future developments.

This review has been planned and carried out within Euclid’s Theory Working Group and is meant to provide a guide to the scientific themes that will underlie the activity of the group during the preparation of the mission. At the same time, this review will help us and the community at large to identify the areas that deserve closer attention, to improve the development of Euclid science and to offer new scientific challenges and opportunities.

2 Part I Dark energy

2.1 Introduction

With the discovery of cosmic acceleration at the end of the 1990s, and its possible explanation in terms of a cosmological constant, cosmology has returned to its roots in Einstein’s famous 1917 paper that simultaneously inaugurated modern cosmology and the history of the constant \(\varLambda \). Perhaps cosmology is approaching a robust and all-encompassing standard model, like its cousin, the very successful standard model of particle physics. In this scenario, the cosmological standard model could essentially close the search for a broad picture of cosmic evolution, leaving to future generations only the task of filling in a number of important, but not crucial, details.

The cosmological constant is still in remarkably good agreement with almost all cosmological data more than 10 years after the observational discovery of the accelerated expansion rate of the universe. However, our knowledge of the universe’s evolution is so incomplete that it would be premature to claim that we are close to understanding the ingredients of the cosmological standard model. If we ask ourselves what we know for certain about the expansion rate at redshifts larger than unity, or the growth rate of matter fluctuations, or about the properties of gravity on large scales and at early times, or about the influence of extra dimensions (or their absence) on our four dimensional world, the answer would be surprisingly disappointing.

Our present knowledge can be succinctly summarized as follows: we live in a universe that is consistent with the presence of a cosmological constant in the field equations of general relativity, and as of 2016, the value of this constant corresponds to a fractional energy density today of \(\varOmega _{\varLambda }\approx 0.7\). However, far from being disheartening, this current lack of knowledge points to an exciting future. A decade of research on dark energy has taught many cosmologists that this ignorance can be overcome by the same tools that revealed it, together with many more that have been developed in recent years.

Why then is the cosmological constant not the end of the story as far as cosmic acceleration is concerned? There are at least three reasons. The first is that we have no simple way to explain its small but non-zero value. In fact, its value is unexpectedly small with respect to any physically meaningful scale, except the current horizon scale. The second reason is that this value is not only small, but also surprisingly close to another unrelated quantity, the present matter-energy density. That this happens just by coincidence is hard to accept, as the matter density is diluted rapidly with the expansion of space. Why is it that we happen to live at the precise, fleeting epoch when the energy densities of matter and the cosmological constant are of comparable magnitude? Finally, observations of coherent acoustic oscillations in the cosmic microwave background (CMB) have turned the notion of accelerated expansion in the very early universe (inflation) into an integral part of the cosmological standard model. Yet the simple truth that we exist as observers demonstrates that this early accelerated expansion was of a finite duration, and hence cannot be ascribed to a true, constant \(\varLambda \); this casts doubt on the nature of the current accelerated expansion. The very fact that we know so little about the past dynamics of the universe forces us to enlarge the theoretical parameter space and to consider phenomenology that a simple cosmological constant cannot accommodate.

These motivations have led many scientists to challenge one of the most basic tenets of physics: Einstein’s law of gravity. Einstein’s theory of general relativity (GR) is a supremely successful theory on scales ranging from the size of our solar system down to micrometers, the shortest distances at which GR has been probed in the laboratory so far. Although specific predictions about such diverse phenomena as the gravitational redshift of light, energy loss from binary pulsars, the rate of precession of the perihelia of bound orbits, and light deflection by the sun are not unique to GR, it must be regarded as highly significant that GR is consistent with each of these tests and more. We can securely state that GR has been tested to high accuracy at these distance scales.

The success of GR on larger scales is less clear. On astrophysical and cosmological scales, tests of GR are complicated by the existence of invisible components like dark matter and by the effects of spacetime geometry. We do not know whether the physics underlying the apparent cosmological constant originates from modifications to GR (i.e., an extended theory of gravity), or from a new fluid or field in our universe that we have not yet detected directly. The latter phenomena are generally referred to as ‘dark energy’ models.

If we only consider observations of the expansion rate of the universe we cannot discriminate between a theory of modified gravity and a dark-energy model. However, it is likely that these two alternatives will cause perturbations around the ‘background’ universe to behave differently. Only by improving our knowledge of the growth of structure in the universe can we hope to progress towards breaking the degeneracy between dark energy and modified gravity. Part I of this review is dedicated to this effort. We begin with a review of the background and linear perturbation equations in a general setting, defining quantities that will be employed throughout. We then explore the nonlinear effects of dark energy, making use of analytical tools such as the spherical collapse model, perturbation theory and numerical N-body simulations. We discuss a number of competing models proposed in the literature and demonstrate what the Euclid survey will be able to tell us about them. For an updated review of present cosmological constraints on a variety of dark energy and modified gravity models, we refer to the Planck 2015 analysis (Planck Collaboration 2016c).

2.2 Background evolution

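Most of the calculations in this review are performed in the Friedmann–Lemaître–Robertson–Walker (FLRW) metric

\[ \mathrm {d}s^2 = -\mathrm {d}t^2 + a^2(t)\left[ \frac{\mathrm {d}r^2}{1-kr^2} + r^2\left( \mathrm {d}\vartheta ^2 + \sin ^2\vartheta \,\mathrm {d}\varphi ^2\right) \right] , \tag{I.2.1} \]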

where a(t) is the scale factor (normalized to \(a=1\) today) and k the spatial curvature. The usual symbols for the Hubble function \(H=\dot{a}/a\) and the density fractions \(\varOmega _x\), where x stands for the component, are employed. We characterize the components with the subscript M or m for matter, \(\gamma \) or r for radiation, b for baryons, k or K for curvature and \(\varLambda \) for the cosmological constant. Whenever necessary for clarity, we append a subscript 0 to denote the present epoch, e.g., \(\varOmega _{M,0}\). Sometimes the conformal time \(\eta =\int \mathrm {d}t/a\) and the conformal Hubble function \(\mathcal {H}=aH= \mathrm {d}a/(a\mathrm {d}\eta )\) are employed. Unless otherwise stated, we denote with a dot derivatives w.r.t. cosmic time t (and sometimes we employ the dot for derivatives w.r.t. conformal time \(\eta \)) while we use a prime for derivatives with respect to \(\ln a\).

The energy density due to a cosmological constant with \(p=-\,\rho \) is obviously constant over time. This can easily be seen from the covariant conservation equation \(T_{\mu ;\nu }^\nu =0\) for the homogeneous and isotropic FLRW metric,

\[ \dot{\rho } + 3H(\rho + p) = 0 . \tag{I.2.2} \]

However, since we also observe radiation with \(p=\rho /3\) and non-relativistic matter for which \(p\approx 0\), it is natural to assume that the dark energy is not necessarily limited to a constant energy density, but that it could be dynamical instead.
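As a quick numerical check of the argument above: for a constant equation of state \(w=p/\rho \), Eq. (I.2.2) can be rewritten with \(\ln a\) as time variable, \(\mathrm {d}\rho /\mathrm {d}\ln a = -3(1+w)\rho \), whose solution \(\rho \propto a^{-3(1+w)}\) is constant only for \(w=-1\). The sketch below (plain Python with a hand-written RK4 step; the function names are illustrative and not taken from any Euclid software) integrates this equation back to \(a=0.5\) for a cosmological constant, matter and radiation, and compares with the closed form.

```python
import math


def rho_numeric(w, a_end, n_steps=10_000, rho_today=1.0):
    """Integrate d(rho)/d(ln a) = -3 (1 + w) rho from a = 1 to a = a_end with RK4."""

    def rhs(rho):
        return -3.0 * (1.0 + w) * rho

    h = math.log(a_end) / n_steps  # step in ln a (negative when integrating into the past)
    rho = rho_today
    for _ in range(n_steps):
        k1 = rhs(rho)
        k2 = rhs(rho + 0.5 * h * k1)
        k3 = rhs(rho + 0.5 * h * k2)
        k4 = rhs(rho + h * k3)
        rho += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return rho


def rho_exact(w, a, rho_today=1.0):
    """Closed-form solution rho(a) = rho_0 * a^(-3(1+w)) for constant w."""
    return rho_today * a ** (-3.0 * (1.0 + w))


for label, w in [("cosmological constant", -1.0), ("matter", 0.0), ("radiation", 1.0 / 3.0)]:
    print(f"{label:22s} rho(a=0.5)/rho_0 = {rho_numeric(w, 0.5):9.5f}  (exact {rho_exact(w, 0.5):9.5f})")
```

Going back to half the present scale factor, the vacuum energy is unchanged while matter and radiation densities grow by factors of 8 and 16, which is the dilution hierarchy the text relies on.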

One of the simplest models that explicitly realizes such a dynamical dark energy scenario is described by a minimally-coupled canonical scalar field evolving in a given potential. For this reason, the very concept of dynamical dark energy is often associated with this scenario, and in this context it is called ‘quintessence’ (Wetterich 1988; Ratra and Peebles 1988). In the following, the scalar field will be denoted by \(\phi \). Although in this simplest framework the dark energy does not interact with other species and influences spacetime only through its energy density and pressure, this is not the only possibility and we will encounter more general models later on. The homogeneous energy density and pressure of the scalar field \(\phi \) are defined as

\[ \rho _\phi \equiv \frac{1}{2}\dot{\phi }^2 + V(\phi ), \qquad p_\phi \equiv \frac{1}{2}\dot{\phi }^2 - V(\phi ), \qquad w_\phi \equiv \frac{p_\phi }{\rho _\phi } , \tag{I.2.3} \]

and \(w_\phi \) is called the equation-of-state parameter. Minimally-coupled dark-energy models can allow for attractor solutions (Copeland et al. 1998; Liddle and Scherrer 1999; Steinhardt et al. 1999): if an attractor exists, which depends on the potential \(V(\phi )\) in which dark energy rolls, the trajectory of the scalar field in the present regime converges to the path given by the attractor, starting from a wide set of different initial conditions for \(\phi \) and for its first derivative \({\dot{\phi }}\). Inverse power law and exponential potentials are typical examples of potentials that can lead to attractor solutions. As constraints on \(w_\phi \) become tighter (e.g., Komatsu et al. 2011), the allowed range of initial conditions that lead onto the attractor solution shrinks, so that minimally-coupled quintessence is actually constrained to have very flat potentials. The flatter the potential, the more closely minimally-coupled quintessence mimics a cosmological constant, and the more it suffers from the same fine-tuning and coincidence problems that affect a \(\varLambda \)CDM scenario (Matarrese et al. 2004).
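The attractor behavior can be illustrated with a short sketch. For illustration only, we assume an exponential potential \(V\propto \exp (-\lambda \kappa \phi )\) with \(\kappa ^2=8\pi G\), a background of pressureless matter, and the dimensionless variables of Copeland et al. (1998), \(x=\kappa {\dot{\phi }}/(\sqrt{6}H)\) and \(y=\kappa \sqrt{V}/(\sqrt{3}H)\), in which the background equations become an autonomous system in \(N=\ln a\). For \(\lambda ^2<3\) the late-time attractor is the scalar-field-dominated solution with \(w_\phi =\lambda ^2/3-1\). The code (illustrative function names) integrates two very different starting points and checks that both end on that solution.

```python
import math


def clw_rhs(x, y, lam, gamma_b):
    """d(x, y)/dN for quintessence with V ∝ exp(-lam*kappa*phi) plus a background fluid.

    gamma_b = 1 + w_b of the background fluid (1 for pressureless matter).
    """
    s = 1.5 * (2.0 * x * x + gamma_b * (1.0 - x * x - y * y))
    dx = -3.0 * x + lam * math.sqrt(1.5) * y * y + x * s
    dy = -lam * math.sqrt(1.5) * x * y + y * s
    return dx, dy


def evolve(x, y, lam=1.0, gamma_b=1.0, n_efolds=40.0, h=0.01):
    """Fourth-order Runge-Kutta integration of the autonomous system over n_efolds e-folds."""
    for _ in range(int(round(n_efolds / h))):
        k1 = clw_rhs(x, y, lam, gamma_b)
        k2 = clw_rhs(x + 0.5 * h * k1[0], y + 0.5 * h * k1[1], lam, gamma_b)
        k3 = clw_rhs(x + 0.5 * h * k2[0], y + 0.5 * h * k2[1], lam, gamma_b)
        k4 = clw_rhs(x + h * k3[0], y + h * k3[1], lam, gamma_b)
        x += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6.0
        y += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6.0
    return x, y


def w_phi(x, y):
    """Scalar-field equation of state, w_phi = (x^2 - y^2) / (x^2 + y^2)."""
    return (x * x - y * y) / (x * x + y * y)


lam = 1.0
# negligible field energy vs. kinetic-dominated start
for x0, y0 in [(1e-3, 1e-3), (0.9, 0.1)]:
    x, y = evolve(x0, y0, lam=lam)
    print(f"start (x, y) = ({x0}, {y0}) -> w_phi = {w_phi(x, y):.6f}, Omega_phi = {x * x + y * y:.6f}")
print("attractor value lam^2/3 - 1 =", lam ** 2 / 3.0 - 1.0)
```

Both trajectories forget their initial conditions and approach \(w_\phi =-2/3\), \(\varOmega _\phi =1\); pushing \(w_\phi \) closer to \(-1\) within this family requires smaller \(\lambda \), i.e., a flatter potential, as stated above.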

However, when GR is modified or when an interaction with other species is active, dark energy may very well have a non-negligible contribution at early times. Therefore, it is important, already at the background level, to understand the best way to characterize the main features of the evolution of quintessence and dark energy in general, pointing out which parameterizations are more suitable and which ranges of parameters are of interest to disentangle quintessence or modified gravity from a cosmological constant scenario.

In the following we briefly discuss how to describe the cosmic expansion rate in terms of a small number of parameters. This will set the stage for the more detailed cases discussed in the subsequent sections. Even within specific physical models it is often convenient to reduce the information to a few phenomenological parameters.

Two important points are left for later: from Eq. (I.2.3) we can easily see that \(w_\phi \ge -1\) as long as \(\rho _\phi >0\), i.e., uncoupled canonical scalar field dark energy never crosses \(w_\phi =-\,1\). However, this is not necessarily the case for non-canonical scalar fields or for cases where GR is modified. We postpone to Sect. I.3.3 the discussion of how to parametrize this ‘phantom crossing’ to avoid singularities, as it also requires the study of perturbations.
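The bound follows from \(1+w_\phi =\dot{\phi }^2/\rho _\phi \), obtained with \(\rho _\phi =\dot{\phi }^2/2+V\) and \(p_\phi =\dot{\phi }^2/2-V\). A tiny numerical spot check (not a proof; the helper name and the sampling ranges are illustrative) also allows negative \(V\), as long as \(\rho _\phi >0\):

```python
import random


def eos_canonical(phi_dot, V):
    """w_phi = p_phi / rho_phi for a canonical, minimally coupled scalar field."""
    rho = 0.5 * phi_dot ** 2 + V
    p = 0.5 * phi_dot ** 2 - V
    if rho <= 0.0:
        # the bound w_phi >= -1 is only claimed for positive energy density
        raise ValueError("the bound w_phi >= -1 assumes rho_phi > 0")
    return p / rho


random.seed(1)
samples = []
while len(samples) < 10_000:
    phi_dot, V = random.uniform(-2.0, 2.0), random.uniform(-1.0, 2.0)
    if 0.5 * phi_dot ** 2 + V > 0.0:  # keep only configurations with rho_phi > 0
        samples.append(eos_canonical(phi_dot, V))

# 1 + w_phi = phi_dot^2 / rho_phi >= 0: the minimum can approach -1 but not cross it
print(min(samples))
```

The limit \(w_\phi =-1\) is reached only for \(\dot{\phi }=0\); crossing it needs a negative kinetic term, non-canonical dynamics, or modified gravity.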

The second deferred part on the background expansion concerns a basic statistical question: what is a sensible precision target for a measurement of dark energy, e.g., of its equation of state? In other words, how close to \(w_\phi =-\,1\) should we go before we can be satisfied and declare that dark energy is the cosmological constant? We will address this question in Sect. I.4.

2.2.1 Parametrization of the background evolution

If one wants to parametrize the equation of state of dark energy, two general approaches are possible. The first is to start from a set of dark-energy models given by the theory and to find parameters describing their \(w_\phi \) as accurately as possible. Only later can one try to include as many theoretical models as possible in a single parametrization. In the context of scalar-field dark-energy models (to be discussed in Sect. I.5.1), Crittenden et al. (2007) parametrize the case of slow-rolling fields, Scherrer and Sen (2008) study thawing quintessence, Hrycyna and Szydlowski (2007) and Chiba et al. (2010) include non-minimally coupled fields, Setare and Saridakis (2009) consider quintom quintessence, Dutta and Scherrer (2008) parametrize hilltop quintessence, Chiba et al. (2009) extend the quintessence parametrization to a class of k-essence models, and Huang et al. (2011) study a common parametrization for quintessence and phantom fields. Another convenient way to parametrize the presence of a non-negligible homogeneous dark energy component at early times (usually labeled as EDE) was presented in Wetterich (2004). We recall it here because we will refer to this example in Sect. I.6.1.1. In this case the equation of state is parametrized as:

where b is a constant related to the amount of dark energy at early times, i.e.,

Here the subscripts ‘0’ and ‘e’ refer to quantities calculated today or early times, respectively. With regard to the latter parametrization, we note that concrete theoretical and realistic models involving a non-negligible energy component at early times are often accompanied by further important modifications (as in the case of interacting dark energy), not always included in a parametrization of the sole equation of state such as (I.2.4) (for further details see Sect. I.6 on nonlinear aspects of dark energy and modified gravity).

The second approach is to start from a simple expression of w without assuming any specific dark-energy model (but still checking afterwards whether known theoretical dark-energy models can be represented). This is what has been done by Huterer and Turner (2001), Maor et al. (2001), Weller and Albrecht (2001) (linear and logarithmic parametrization in z), Chevallier and Polarski (2001), Linder (2003) (linear and power law parametrization in a), Douspis et al. (2006), Bassett et al. (2004) (rapidly varying equation of state).

The most common parametrization, widely employed in this review, is the linear equation of state (Chevallier and Polarski 2001; Linder 2003)

\[ w_X(a) = w_0 + w_a(1-a) = w_0 + w_a\,\frac{z}{1+z} , \tag{I.2.6} \]

where the subscript X refers to the generic dark-energy constituent. While this parametrization is useful as a toy model in comparing the forecasts for different dark-energy projects, it should not be taken as all-encompassing. In general, a dark-energy model can introduce further significant terms in the effective \(w_X(z)\) that cannot be mapped onto the simple form of Eq. (I.2.6).
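For the linear parametrization the conservation equation integrates in closed form, \(\rho _X(a)/\rho _X(1)=a^{-3(1+w_0+w_a)}\exp [-3w_a(1-a)]\), which is part of what makes it convenient for forecasts. The sketch below assumes, for illustration, a spatially flat universe containing only matter and dark energy (radiation neglected); the function names and the fiducial values \(w_0=-0.9\), \(w_a=0.2\), \(\varOmega _m=0.3\) are hypothetical.

```python
import math


def w_cpl(a, w0, wa):
    """Linear equation of state w_X(a) = w0 + wa (1 - a)."""
    return w0 + wa * (1.0 - a)


def rho_de_ratio_cpl(a, w0, wa):
    """rho_X(a) / rho_X(a=1): closed-form integral of the conservation equation for w0-wa."""
    return a ** (-3.0 * (1.0 + w0 + wa)) * math.exp(-3.0 * wa * (1.0 - a))


def hubble_ratio(z, omega_m, w0, wa):
    """E(z) = H(z)/H0 for a flat matter + w0-wa dark-energy universe (radiation neglected)."""
    a = 1.0 / (1.0 + z)
    return math.sqrt(omega_m * a ** -3 + (1.0 - omega_m) * rho_de_ratio_cpl(a, w0, wa))


for z in (0.0, 0.5, 1.0, 2.0):
    a = 1.0 / (1.0 + z)
    print(f"z={z:.1f}  w={w_cpl(a, -0.9, 0.2):+.3f}  "
          f"rho_X/rho_X0={rho_de_ratio_cpl(a, -0.9, 0.2):.4f}  E={hubble_ratio(z, 0.3, -0.9, 0.2):.4f}")
```

Setting \(w_a=0\) recovers a constant equation of state, and \(w_0=-1\), \(w_a=0\) recovers the cosmological constant.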

An alternative route to model-independent constraints is to measure the dark-energy density \(\rho _X(z)\) (or the expansion history H(z)) as a free function of cosmic time (Wang and Garnavich 2001; Tegmark 2002; Daly and Djorgovski 2003). Measuring \(\rho _X(z)\) has advantages over measuring the dark-energy equation of state \(w_X(z)\) as a free function: \(\rho _X(z)\) is more closely related to observables, and hence is more tightly constrained for the same number of redshift bins (Wang and Garnavich 2001; Wang and Freese 2006). Note that \(\rho _X(z)\) is related to \(w_X(z)\) as follows (Wang and Garnavich 2001):

\[ \frac{\rho _X(z)}{\rho _X(0)} = \exp \left\{ 3\int _0^z \frac{1+w_X(z')}{1+z'}\,\mathrm {d}z' \right\} . \tag{I.2.7} \]

Hence, parametrizing dark energy with \(w_X(z)\) implicitly assumes that \(\rho _X(z)\) does not change sign in cosmic time. This precludes whole classes of dark-energy models in which \(\rho _X(z)\) becomes negative in the future, such as “Big Crunch” models (Wang and Tegmark 2004; see Wang et al. 2004 for an example).
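The positivity argument can be seen directly by evaluating the \(\rho _X\)–\(w_X\) relation numerically: whatever real function \(w_X(z)\) is supplied, \(\rho _X(z)/\rho _X(0)\) is an exponential and therefore positive. The sketch below uses composite Simpson integration (our choice; any quadrature would do), checks it against the closed form available for \(w_X=w_0+w_a z/(1+z)\), and then feeds in a strongly oscillating \(w(z)\) that is purely hypothetical.

```python
import math


def rho_de_ratio(z, w_of_z, n=2000):
    """rho_X(z)/rho_X(0) = exp(3 * int_0^z [1 + w(z')]/(1 + z') dz'), Simpson rule with n intervals."""
    if n % 2:
        raise ValueError("Simpson's rule needs an even number of intervals")
    h = z / n

    def integrand(zp):
        return (1.0 + w_of_z(zp)) / (1.0 + zp)

    s = integrand(0.0) + integrand(z)
    for i in range(1, n):
        s += (4.0 if i % 2 else 2.0) * integrand(i * h)
    integral = s * h / 3.0
    return math.exp(3.0 * integral)  # an exponential: positive for any real w(z)


w0, wa = -0.9, 0.3


def cpl(zp):
    return w0 + wa * zp / (1.0 + zp)


def wild(zp):
    return -1.0 + 3.0 * math.sin(5.0 * zp)  # hypothetical, strongly oscillating w(z)


z = 2.0
closed_form = (1.0 + z) ** (3.0 * (1.0 + w0 + wa)) * math.exp(-3.0 * wa * z / (1.0 + z))
print(rho_de_ratio(z, cpl), closed_form)
print(min(rho_de_ratio(zz, wild) for zz in (0.5, 1.0, 1.5, 2.0)) > 0.0)  # True
```

A model in which \(\rho _X\) changes sign therefore has to be parametrized through \(\rho _X(z)\) (or H(z)) directly.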

Note that the measurement of \(\rho _X(z)\) is straightforward once H(z) is measured from baryon acoustic oscillations, and \(\varOmega _m\) is constrained tightly by the combined data from galaxy clustering, weak lensing, and cosmic microwave background data—although strictly speaking this requires a choice of perturbation evolution for the dark energy as well, and in addition one that is not degenerate with the evolution of dark matter perturbations; see Kunz (2009).
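In a flat universe containing only matter and dark energy (radiation neglected, and setting aside the perturbation subtleties just mentioned), the inversion reduces to \(\rho _X(z)/\rho _X(0)=[E^2(z)-\varOmega _m(1+z)^3]/(1-\varOmega _m)\) with \(E=H/H_0\). The sketch below (hypothetical helper name and fiducial values) applies it to a mock \(\varLambda \)CDM expansion history and shows why a tight \(\varOmega _m\) constraint matters.

```python
import math


def rho_de_from_hubble(z, E, omega_m):
    """rho_X(z)/rho_X(0) from E(z) = H(z)/H0; flat universe, radiation neglected."""
    return (E ** 2 - omega_m * (1.0 + z) ** 3) / (1.0 - omega_m)


omega_m_true = 0.30
z = 1.5
# mock LCDM expansion history: rho_X is constant by construction
E_mock = math.sqrt(omega_m_true * (1.0 + z) ** 3 + (1.0 - omega_m_true))

print(rho_de_from_hubble(z, E_mock, omega_m_true))  # ~1.0: the cosmological constant is recovered
print(rho_de_from_hubble(z, E_mock, 0.31))          # ~0.788 with Omega_m off by 0.01
```

With \(\varOmega _m\) misestimated by only 0.01, the reconstructed dark-energy density at \(z=1.5\) comes out about 21% low, because the matter term dominates \(E^2\) at that redshift.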

Another useful possibility is to adopt the principal component approach (Huterer and Starkman 2003), which avoids assuming a functional form for w: w is taken to be constant (or linear) within redshift bins, and one then derives which combinations of the bin values are best constrained by each experiment.
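A minimal sketch of the principal component idea, assuming a deliberately simple toy setup that is not Euclid’s forecasting pipeline: w is piecewise constant in eight bins up to \(z=2\), and a hypothetical survey measures \(\ln \rho _X(z)\) at 20 redshifts with a common Gaussian error of 0.05. From Eq. (I.2.7), \(\partial \ln \rho _X(z)/\partial w_i = 3\ln [(1+\min (z,z_{i+1}))/(1+z_i)]\) for \(z>z_i\), which gives the Fisher matrix; its eigenvectors are the principal components and \(1/\sqrt{\lambda _k}\) their uncertainties.

```python
import numpy as np


def fisher_binned_w(z_edges, z_obs, sigma):
    """Toy Fisher matrix for piecewise-constant w_i in redshift bins.

    Assumes (hypothetically) that each redshift in z_obs yields an independent Gaussian
    measurement of ln rho_X(z) with the same error sigma.
    """
    n_bins = len(z_edges) - 1
    jac = np.zeros((len(z_obs), n_bins))
    for m, z in enumerate(z_obs):
        for i in range(n_bins):
            lo, hi = z_edges[i], z_edges[i + 1]
            if z > lo:
                # d ln rho_X(z) / d w_i = 3 ln[(1 + min(z, hi)) / (1 + lo)]
                jac[m, i] = 3.0 * np.log((1.0 + min(z, hi)) / (1.0 + lo))
    return jac.T @ jac / sigma ** 2


z_edges = np.linspace(0.0, 2.0, 9)  # 8 bins of width 0.25 (illustrative)
z_obs = np.linspace(0.1, 2.0, 20)   # hypothetical measurement redshifts
F = fisher_binned_w(z_edges, z_obs, sigma=0.05)

eigvals, eigvecs = np.linalg.eigh(F)                # eigh returns ascending eigenvalues
eigvals, eigvecs = eigvals[::-1], eigvecs[:, ::-1]  # best-constrained modes first
for k in range(3):
    print(f"PC{k + 1}: sigma = {1.0 / np.sqrt(eigvals[k]):.3f}, "
          f"bin weights = {np.round(eigvecs[:, k], 2)}")
```

Each component is a weighted combination of the bin values; in practice only the first few, best-measured modes are kept, since the remaining ones are dominated by noise.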

For a cross-check of the results using more complicated parameterizations, one can use simple polynomial parameterizations of w and \(\rho _{\mathrm {DE}}(z)/\rho _{\mathrm {DE}}(0)\) (Wang 2008b).

2.3 Perturbations

This section is devoted to a discussion of linear perturbation theory in dark-energy models. Since we will discuss a number of non-standard models in later sections, we present here the main equations in a general form that can be adapted to various contexts. This section will identify which perturbation functions the Euclid survey (Laureijs et al. 2011) will try to measure and how they can help us to characterize the nature of dark energy and the properties of gravity.

2.3.1 Cosmological perturbation theory

Here we provide the perturbation equations in a dark-energy dominated universe for a general fluid, focusing on scalar perturbations.

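For simplicity, we consider a flat universe containing only (cold dark) matter and dark energy, so that the Hubble parameter is given by

\[ H^2(a) = H_0^2\left[ \varOmega _{M,0}\,a^{-3} + \left( 1-\varOmega _{M,0}\right) \exp \left\{ -3\int _1^a \frac{1+w(a')}{a'}\,\mathrm {d}a'\right\} \right] . \tag{I.3.1} \]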

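We will consider linear perturbations on a spatially-flat background model, defined by the line element

\[ \mathrm {d}s^2 = a^2(\eta )\left[ -(1+2A)\,\mathrm {d}\eta ^2 - 2B_i\,\mathrm {d}\eta \,\mathrm {d}x^i + \left( (1+2H_L)\,\delta _{ij} + 2H_{T\,ij}\right) \mathrm {d}x^i\mathrm {d}x^j\right] , \tag{I.3.2} \]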

where A is the scalar potential; \(B_{i}\) a vector shift; \(H_{L}\) is the scalar perturbation to the spatial curvature; \(H_{T}^{ij}\) is the trace-free distortion to the spatial metric; \(\mathrm {d}\eta = \mathrm {d}t/a\) is the conformal time.

We will assume that the universe is filled with fluids that are perfect at the background level, so that the energy–momentum tensor takes the simple form

\[ T^{\mu \nu } = (\rho + p)\,u^{\mu }u^{\nu } + p\,g^{\mu \nu } + \varPi ^{\mu \nu } , \tag{I.3.3} \]

where \(\rho \) and p are the density and the pressure of the fluid respectively, \(u^{\mu }\) is the four-velocity and \(\varPi ^{\mu \nu }\) is the anisotropic-stress perturbation tensor that represents the traceless component of \(T_{j}^{i}\).

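The components of the perturbed energy–momentum tensor can be written as:

\[ T^0_{\ 0} = -(\bar{\rho } + \delta \rho ), \qquad T^i_{\ 0} = -(\bar{\rho } + \bar{p})\,v^i , \qquad T^i_{\ j} = (\bar{p} + \delta p)\,\delta ^i_{\ j} + \varPi ^i_{\ j} . \]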

Here \({\bar{\rho }}\) and \({\bar{p}}\) are the energy density and pressure of the homogeneous and isotropic background universe, \(\delta \rho \) is the density perturbation, \(\delta p\) is the pressure perturbation, and \(v^{i}\) is the velocity vector. Here we want to investigate only the scalar modes of the perturbation equations. So far the treatment of the matter and metric is fully general and applies to any form of matter and metric. We now choose the Newtonian gauge (also known as the longitudinal or Poisson gauge), characterized by zero non-diagonal metric terms (the shift vector \(B_{i}=0\) and \(H_{T}^{ij}=0\)) and by two scalar potentials \(\varPsi \) and \(\varPhi \); the metric Eq. (I.3.2) then becomes

\[ \mathrm {d}s^2 = a^2(\eta )\left[ -(1+2\varPsi )\,\mathrm {d}\eta ^2 + (1-2\varPhi )\,\delta _{ij}\,\mathrm {d}x^i\mathrm {d}x^j\right] . \tag{I.3.8} \]

In the Newtonian gauge the metric tensor \(g_{\mu \nu }\) is diagonal, which simplifies the calculations. This choice is also the most intuitive one, as the observers are attached to the points in the unperturbed frame; as a consequence, they will detect a velocity field of particles falling into the clumps of matter and will measure their gravitational potential, represented directly by \(\varPsi \), while \(\varPhi \) corresponds to the perturbation to the spatial curvature. Moreover, as we will see later, the Newtonian gauge is the best choice for observational tests (i.e., for perturbations smaller than the horizon).

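In the conformal Newtonian gauge, and in Fourier space, the first-order perturbed Einstein equations give (see Ma and Bertschinger 1995, for more details):

\[ \begin{aligned} k^2\varPhi + 3\frac{\dot{a}}{a}\left( \dot{\varPhi } + \frac{\dot{a}}{a}\varPsi \right) &= -4\pi G a^2\sum _\alpha \bar{\rho }_\alpha \delta _\alpha , \\ k^2\left( \dot{\varPhi } + \frac{\dot{a}}{a}\varPsi \right) &= 4\pi G a^2\sum _\alpha (\bar{\rho }_\alpha + \bar{p}_\alpha )\,\theta _\alpha , \\ \ddot{\varPhi } + \frac{\dot{a}}{a}\left( \dot{\varPsi } + 2\dot{\varPhi }\right) + \left( 2\frac{\ddot{a}}{a} - \frac{\dot{a}^2}{a^2}\right) \varPsi + \frac{k^2}{3}\left( \varPhi - \varPsi \right) &= 4\pi G a^2\sum _\alpha \delta p_\alpha , \\ k^2\left( \varPhi - \varPsi \right) &= 12\pi G a^2\sum _\alpha (\bar{\rho }_\alpha + \bar{p}_\alpha )\,\pi _\alpha , \end{aligned} \]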

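where a dot denotes \(d/d\eta \), \(\delta _\alpha =\delta \rho _\alpha /\bar{\rho }_\alpha \), the index \(\alpha \) runs over all matter components in the universe, and \(\pi \) is related to \(\varPi _{j}^{i}\) through:

\[ (\bar{\rho } + \bar{p})\,\pi \equiv -\left( \hat{k}_i\hat{k}_j - \frac{1}{3}\delta _{ij}\right) \varPi ^i_{\ j} , \qquad \hat{k}_i \equiv k_i/k . \]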

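The energy–momentum tensor components in the Newtonian gauge become:

\[ T^0_{\ 0} = -\bar{\rho }(1+\delta ), \qquad i k^j\,\delta T^0_{\ j} = (\bar{\rho } + \bar{p})\,\theta , \qquad \delta T^i_{\ i} = 3\,\delta p , \]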

where we have defined the variable \(\theta =ik_j v^j\) that represents the divergence of the velocity field.

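Perturbation equations for a single fluid are obtained by taking the covariant divergence of the perturbed energy–momentum tensor, i.e., \(T_{\nu ;\mu }^{\mu }=0\). We have

\[ \dot{\delta } = -(1+w)\left( \theta - 3\dot{\varPhi }\right) - 3\frac{\dot{a}}{a}\left( \frac{\delta p}{\bar{\rho }} - w\,\delta \right) , \tag{I.3.17} \]

\[ \dot{\theta } = -\frac{\dot{a}}{a}(1-3w)\,\theta - \frac{\dot{w}}{1+w}\,\theta + \frac{k^2}{1+w}\,\frac{\delta p}{\bar{\rho }} - k^2\pi + k^2\varPsi . \tag{I.3.18} \]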

The equations above are valid for any fluid. The evolution of the perturbations depends on the characteristics of the fluids considered, i.e., we need to specify the equation of state parameter w, the pressure perturbation \(\delta p\) and the anisotropic stress \(\pi \). For instance, if we want to study how matter perturbations evolve, we simply substitute \(w=\delta p = \pi = 0\) (matter is pressureless) in the above equations. However, Eqs. (I.3.17) and (I.3.18) depend on the gravitational potentials \(\varPsi \) and \(\varPhi \), which in turn depend on the evolution of the perturbations of the other fluids. For instance, if we assume that the universe is filled by dark matter and dark energy then we need to specify \(\delta p\) and \(\pi \) for the dark energy.
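As an illustration of the substitution \(w=\delta p=\pi =0\), the sketch below closes the matter equations with assumptions that are ours and not part of the general treatment: an Einstein–de Sitter background (\(a\propto \eta ^2\), so \(\dot{a}/a=2/\eta \)), no anisotropic stress (\(\varPsi =\varPhi \)), and sub-horizon scales, where the Poisson equation reads \(k^2\varPsi =-(3/2)(\dot{a}/a)^2\delta \) and \(\varPhi \) is constant. Starting from \(\delta =1\) at rest (\(\theta =0\)) at \(\eta =1\), the analytic solution is \(\delta =(3/5)\eta ^2+(2/5)\eta ^{-3}\): three fifths of the initial perturbation ends up in the growing mode \(\delta \propto a\).

```python
def matter_rhs(eta, delta, theta):
    """Pressureless matter (w = delta_p = pi = 0), EdS background, sub-horizon, conformal time eta."""
    calH = 2.0 / eta                   # conformal Hubble rate for a ∝ eta^2
    k2_psi = -1.5 * calH ** 2 * delta  # sub-horizon Poisson equation with Psi = Phi
    d_delta = -theta                   # delta-dot = -(theta - 3 Phi-dot), Phi-dot = 0 in EdS
    d_theta = -calH * theta + k2_psi   # theta-dot = -calH theta + k^2 Psi
    return d_delta, d_theta


def evolve_matter(delta, theta, eta0, eta1, n_steps=20_000):
    """RK4 integration of (delta, theta) from conformal time eta0 to eta1."""
    h = (eta1 - eta0) / n_steps
    eta = eta0
    for _ in range(n_steps):
        k1 = matter_rhs(eta, delta, theta)
        k2 = matter_rhs(eta + h / 2, delta + h / 2 * k1[0], theta + h / 2 * k1[1])
        k3 = matter_rhs(eta + h / 2, delta + h / 2 * k2[0], theta + h / 2 * k2[1])
        k4 = matter_rhs(eta + h, delta + h * k3[0], theta + h * k3[1])
        delta += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        theta += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
        eta += h
    return delta, theta


delta, theta = evolve_matter(1.0, 0.0, eta0=1.0, eta1=10.0)
print(delta, 0.6 * 10.0 ** 2 + 0.4 * 10.0 ** -3)  # numerical vs analytic solution
```

In a model with dynamical dark energy the same matter equations hold, but the potentials feeding them must be computed together with the dark-energy \(\delta p\) and \(\pi \), which is exactly the model dependence discussed next.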

The problem here is not only to parameterize the pressure perturbation and the anisotropic stress for the dark energy (there is no unique way to do it; see below, especially Sect. I.3.3 for what to do when w crosses \(-\,1\)) but rather that we need to run the perturbation equations for each model we assume, make predictions and compare the results with observations. Clearly, this approach takes too much time. In Sect. I.3.2 we show a more general approach to understanding the observed late-time accelerated expansion of the universe through the evolution of the matter density contrast.

In the following, whenever there is no risk of confusion, we remove the overbars from the background quantities.

2.3.2 Modified growth parameters

Even if the expansion history, H(z), of the FLRW background has been measured (at least up to redshifts \(\sim \, 1\) by supernova data, i.e., via the luminosity distance), it is not yet possible to identify the physics causing the recent acceleration of the expansion of the universe. Information on the growth of structure at different scales and different redshifts is needed to discriminate between models of dark energy (DE) and modified gravity (MG). A definition of what we mean by DE and MG will be postponed to Sect. I.5.

An alternative to testing predictions of specific theories is to parameterize the possible departures from a fiducial model. Two conceptually-different approaches are widely discussed in the literature:

- Model parameters capture the degrees of freedom of DE/MG and modify the evolution equations of the energy–momentum content of the fiducial model. They can be associated with physical meanings and have uniquely-predicted behavior in specific theories of DE and MG.

- Trigger relations are derived directly from observations and only hold in the fiducial model. They are constructed to break down if the fiducial model does not describe the growth of structure correctly.

As the current observations favor concordance cosmology, the fiducial model is typically taken to be spatially flat FLRW in GR with cold dark matter and a cosmological constant, hereafter referred to as \(\varLambda \)CDM.

For a large-scale structure and weak lensing survey the crucial quantities are the matter-density contrast and the gravitational potentials and we therefore focus on scalar perturbations in the Newtonian gauge with the metric (I.3.8).

We describe the matter perturbations using the gauge-invariant comoving density contrast \(\varDelta _M\equiv \delta _M+3aH \theta _M/k^2\) where \(\delta _M\) and \(\theta _M\) are the matter density contrast and the divergence of the fluid velocity for matter, respectively. The discussion can be generalized to include multiple fluids.

In \(\varLambda \)CDM, after radiation–matter equality there is no anisotropic stress present and the Einstein constraint equations become

\[ -k^2\varPhi = 4\pi G a^2\rho _M\varDelta _M , \qquad \varPhi = \varPsi . \tag{I.3.19} \]

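These can be used to reduce the energy–momentum conservation of matter simply to the second-order growth equation

\[ \varDelta _M'' + \left( 2 + \frac{H'}{H}\right) \varDelta _M' = \frac{3}{2}\varOmega _M(a)\,\varDelta _M . \tag{I.3.20} \]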

Primes denote derivatives with respect to \(\ln a\) and we define the time-dependent fractional matter density as \(\varOmega _M(a)\equiv 8\pi G\rho _M(a)/(3H^2)\). Notice that the evolution of \(\varDelta _M\) is driven by \(\varOmega _M(a)\) and is scale-independent throughout (valid on sub- and super-Hubble scales after radiation–matter equality). We define the growth factor G(a) as \(\varDelta =\varDelta _0G(a)\). This is very well approximated by the expression

\[ G(a) \simeq \exp \left\{ \int _1^a \varOmega _M(a')^{\gamma }\,\frac{\mathrm {d}a'}{a'}\right\} , \tag{I.3.21} \]

and

\[ f(a) \equiv \frac{\mathrm {d}\ln G}{\mathrm {d}\ln a} \simeq \varOmega _M(a)^{\gamma } \tag{I.3.22} \]

defines the growth rate and the growth index \(\gamma \) that is found to be \(\gamma _{\varLambda }\simeq 0.545\) for the \(\varLambda \)CDM solution (see Wang and Steinhardt 1998; Linder 2005; Huterer and Linder 2007; Ferreira and Skordis 2010).
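The growth-index approximation can be checked by integrating the \(\varLambda \)CDM growth equation directly. The sketch below assumes a flat \(\varLambda \)CDM background with \(\varOmega _{M,0}=0.3\) (an illustrative value), neglects radiation so that \(H'/H=-(3/2)\varOmega _M(a)\), starts on the matter-era growing mode \(\varDelta _M\propto a\), and returns \(f=\mathrm {d}\ln \varDelta _M/\mathrm {d}\ln a\) at \(a=1\), from which an effective \(\gamma =\ln f/\ln \varOmega _M\) follows. The function name is hypothetical.

```python
import math


def growth_rate_today(om0, a_init=1e-3, n_steps=20_000):
    """Integrate the LCDM growth equation in N = ln a (RK4); return f = dlnDelta/dlna at a = 1."""

    def rhs(n_efold, d, dp):
        x = om0 * math.exp(-3.0 * n_efold)
        om = x / (x + 1.0 - om0)                     # Omega_M(a) in flat LCDM
        dlnh = -1.5 * om                             # H'/H (radiation neglected)
        return dp, 1.5 * om * d - (2.0 + dlnh) * dp  # Delta'' = 1.5 Omega_M Delta - (2 + H'/H) Delta'

    n_efold = math.log(a_init)
    h = -n_efold / n_steps
    d, dp = a_init, a_init                           # growing mode Delta ∝ a in matter domination
    for _ in range(n_steps):
        k1 = rhs(n_efold, d, dp)
        k2 = rhs(n_efold + h / 2, d + h / 2 * k1[0], dp + h / 2 * k1[1])
        k3 = rhs(n_efold + h / 2, d + h / 2 * k2[0], dp + h / 2 * k2[1])
        k4 = rhs(n_efold + h, d + h * k3[0], dp + h * k3[1])
        d += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        dp += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
        n_efold += h
    return dp / d


om0 = 0.3
f0 = growth_rate_today(om0)
gamma_eff = math.log(f0) / math.log(om0)  # Omega_M(a = 1) = om0
print(f"f(a=1) = {f0:.4f}, Omega_M^0.545 = {om0 ** 0.545:.4f}, effective gamma = {gamma_eff:.4f}")
```

In this sketch the effective index at \(a=1\) comes out close to, and slightly above, \(6/11\simeq 0.545\), consistent with \(\gamma \) being only mildly time dependent in \(\varLambda \)CDM.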

Clearly, if the actual theory of structure growth is not the \(\varLambda \)CDM scenario, the constraints (I.3.19) will be modified, the growth Eq. (I.3.20) will be different, and finally the growth factor (I.3.21) is changed, i.e., the growth index is different from \(\gamma _\varLambda \) and may become time and scale dependent. Therefore, an inconsistency among these three relations can be used to test the \(\varLambda \)CDM paradigm.

I.3.2.1 Two new degrees of freedom

Any generic modification of the dynamics of scalar perturbations with respect to the simple scenario of a smooth dark-energy component that only alters the background evolution of \(\varLambda \)CDM can be represented by introducing two new degrees of freedom in the Einstein constraint equations. We do this by replacing (I.3.19) with

\[ -k^2\varPhi = 4\pi G a^2 Q(a,k)\,\rho _M\varDelta _M , \qquad \varPhi = \eta (a,k)\,\varPsi . \tag{I.3.23} \]

Non-trivial behavior of the two functions Q and \(\eta \) can be due to a clustering dark-energy component or some modification to GR. In MG models the function Q(a, k) represents a mass screening effect due to local modifications of gravity and effectively modifies Newton’s constant. In dynamical DE models Q represents the additional clustering due to the perturbations in the DE. On the other hand, the function \(\eta (a,k)\) parameterizes the effective anisotropic stress introduced by MG or DE, which is absent in \(\varLambda \)CDM.

Given an MG or DE theory, the scale- and time-dependence of the functions Q and \(\eta \) can be derived and predictions projected into the \((Q,\eta )\) plane. This is also true for interacting dark sector models, although in this case the identification of the total matter density contrast (DM plus baryonic matter) and the galaxy bias becomes somewhat contrived (see, e.g., Song et al. 2010, for an overview of predictions for different MG/DE models).

Using the above-defined modified constraint Eq. (I.3.23), the conservation equations of matter perturbations can be expressed in the following form (see Pogosian et al. 2010)

where we define \(x_Q\equiv k/(aH\sqrt{Q})\), and \(\varOmega _M=\varOmega _M(a)\) is the time-dependent fractional matter density defined above. Notice that it is \(Q/\eta \) that modifies the source term of the \(\theta _M\) equation and therefore also the growth of \(\varDelta _M\). Together with the modified Einstein constraints (I.3.23) these evolution equations form a closed system for \((\varDelta _M,\theta _M,\varPhi ,\varPsi )\) which can be solved for given \((Q,\eta )\).

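The influence of the Hubble scale is modified by Q, such that now the size of \(x_Q\) determines the behavior of \(\varDelta _M\); on “sub-Hubble” scales, \(x_Q\gg 1\), we find

\[ \varDelta _M'' + \left( 2 + \frac{H'}{H}\right) \varDelta _M' - \frac{3}{2}\varOmega _M(a)\,\frac{Q}{\eta }\,\varDelta _M \simeq 0 \]
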
and \(\theta _M=-\,aH\varDelta _M'\). The growth equation is only modified by the factor \(Q/\eta \) on the RHS with respect to \(\varLambda \)CDM (I.3.20). On “super-Hubble” scales, \(x_Q\ll 1\), we have

Q and \(\eta \) now create an additional drag term in the \(\varDelta _M\) equation, except if \(\eta >1\) when the drag term could flip sign. Pogosian et al. (2010) also showed that the metric potentials evolve independently and scale-invariantly on super-Hubble scales as long as \(x_Q\rightarrow 0\) for \(k \rightarrow 0\). This is needed for the comoving curvature perturbation, \(\zeta \), to be constant on super-Hubble scales.
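On sub-Hubble scales the only change to the growth equation is the factor \(Q/\eta \) multiplying the source term, \(\varDelta _M'' + (2 + H'/H)\varDelta _M' = \tfrac{3}{2}(Q/\eta )\varOmega _M\varDelta _M\). The sketch below illustrates the consequence. It compares today's growth rate and the effective \(\gamma = \ln f/\ln \varOmega _M\) for \(\mu \equiv Q/\eta = 1\) (GR) with those for \(\mu = 1.1\). Both the flat \(\varLambda \)CDM background with \(\varOmega _{M,0}=0.3\) and the constant, scale-independent \(\mu \) are illustrative assumptions, and the function name is hypothetical.

```python
import math

OM0 = 0.3  # illustrative flat-LCDM background (assumption)


def omega_m(a):
    matter = OM0 * a ** -3
    return matter / (matter + 1.0 - OM0)


def growth_today(mu, a_init=1e-3, n=4000):
    """Integrate Delta'' + (2 + H'/H) Delta' = 1.5 mu Omega_M Delta (sub-Hubble, d/dln a)
    for a constant mu = Q/eta and return the growth rate f at a = 1."""
    # Matter-era growing mode Delta ~ a^p solves p^2 + p/2 - 1.5 mu = 0
    p = (-0.5 + math.sqrt(0.25 + 6.0 * mu)) / 2.0

    def rhs(lna, y):
        delta, ddelta = y
        om = omega_m(math.exp(lna))
        return (ddelta, -(2.0 - 1.5 * om) * ddelta + 1.5 * mu * om * delta)

    x = math.log(a_init)
    h = -x / n
    y = (1.0, p)  # Delta normalized to 1 initially, Delta' = p * Delta
    for _ in range(n):  # fixed-step RK4 in ln a
        k1 = rhs(x, y)
        k2 = rhs(x + h / 2, tuple(v + h / 2 * k for v, k in zip(y, k1)))
        k3 = rhs(x + h / 2, tuple(v + h / 2 * k for v, k in zip(y, k2)))
        k4 = rhs(x + h, tuple(v + h * k for v, k in zip(y, k3)))
        y = tuple(v + h / 6 * (q1 + 2 * q2 + 2 * q3 + q4)
                  for v, q1, q2, q3, q4 in zip(y, k1, k2, k3, k4))
        x += h
    return y[1] / y[0]


for mu in (1.0, 1.1):
    f = growth_today(mu)
    print(f"mu = {mu}: f(a=1) = {f:.4f}, effective gamma = {math.log(f) / math.log(OM0):.4f}")
```

A larger \(Q/\eta \) enhances growth. As a result \(f\) rises and the inferred \(\gamma \) falls below its \(\varLambda \)CDM value.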

Many different names and combinations of the above defined functions \((Q,\eta )\) have been used in the literature, some of which are more closely related to actual observables and are less correlated than others in certain situations (see, e.g., Amendola et al. 2008b; Mota et al. 2007; Song et al. 2010; Pogosian et al. 2010; Daniel et al. 2010; Daniel and Linder 2010; Ferreira and Skordis 2010).

For instance, as observed above, the combination \(Q/\eta \) modifies the source term in the growth equation. Moreover, peculiar velocities follow gradients of the Newtonian potential, \(\varPsi \), and therefore the comparison of peculiar velocities with the density field is also sensitive to \(Q/\eta \). So we define

\[ \mu \equiv \frac{Q}{\eta } , \qquad -k^2\varPsi = 4\pi G a^2 \mu \, \rho _M \varDelta _M . \]

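Weak lensing and the integrated Sachs–Wolfe (ISW) effect, on the other hand, measure \((\varPhi +\varPsi )/2\), which is related to the density field via

\[ \varSigma \equiv \frac{Q(1+\eta )}{2\eta } , \qquad -k^2\,\frac{\varPhi +\varPsi }{2} = 4\pi G a^2 \varSigma \, \rho _M \varDelta _M . \]
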
A summary of other variables used in the literature was given by Daniel et al. (2010). For instance, the widely used gravitational slip parameter introduced by Caldwell et al. (2007) is related to \(\eta \) through \(\varpi \equiv 1/\eta -1\). Recently, Daniel and Linder (2010) used \(\{{{\mathcal {G}}}\equiv \varSigma ,\ \mu \equiv Q,\ {{\mathcal {V}}}\equiv \mu \}\) (their symbols on the left, ours on the right), while Bean and Tangmatitham (2010) defined \(R\equiv 1/\eta \). All these variables reflect the same two degrees of freedom additional to the linear growth of structure in \(\varLambda \)CDM.

Any combination of two variables out of \(\{Q,\eta ,\mu ,\varSigma ,\ldots \}\) is a valid alternative to \((Q,\eta )\). It turns out that the pair \((\mu ,\varSigma )\) is particularly well suited when CMB, WL and LSS data are combined as it is less correlated than others (see Zhao et al. 2010; Daniel and Linder 2010; Axelsson et al. 2014).
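Once a sign convention is fixed, the conversion between parameter pairs is simple algebra. The sketch below assumes \(-k^2\varPhi = 4\pi G a^2 Q\rho _M\varDelta _M\) with \(\varPhi = \eta \varPsi \). Under this convention \(\varPsi \) is sourced by \(\mu \equiv Q/\eta \) and \((\varPhi +\varPsi )/2\) by \(\varSigma \equiv Q(1+\eta )/(2\eta )\). The sketch also returns the slip \(\varpi = 1/\eta - 1\) and \(R = 1/\eta \) mentioned above, and inverts \((\mu ,\varSigma )\) back to \((Q,\eta )\). The function names are hypothetical.

```python
import math


def from_q_eta(Q, eta):
    """Return (mu, Sigma, varpi, R) for given (Q, eta).

    Assumed convention: -k^2 Phi = 4 pi G a^2 Q rho_M Delta_M and Phi = eta * Psi.
    """
    mu = Q / eta                           # sources Psi (growth, peculiar velocities)
    sigma = Q * (1.0 + eta) / (2.0 * eta)  # sources (Phi + Psi)/2 (lensing, ISW)
    varpi = 1.0 / eta - 1.0                # gravitational slip
    R = 1.0 / eta
    return mu, sigma, varpi, R


def q_eta_from_mu_sigma(mu, sigma):
    """Invert the map: Sigma = mu (1 + eta) / 2 gives eta, then Q = mu * eta."""
    eta = 2.0 * sigma / mu - 1.0
    return mu * eta, eta


mu, sigma, varpi, R = from_q_eta(1.2, 0.8)  # illustrative values
Q, eta = q_eta_from_mu_sigma(mu, sigma)     # round trip back to (1.2, 0.8)
print(mu, sigma, varpi, R, Q, eta)
```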

I.3.2.2 Parameterizations and non-parametric approaches

So far we have defined two free functions that can encode any departure of the growth of linear perturbations from \(\varLambda \)CDM. However, these free functions are not directly measurable; they have to be inferred from their impact on the observables. Therefore, one needs to specify a parameterization of, e.g., \((Q,\eta )\) such that departures from \(\varLambda \)CDM can be quantified. Alternatively, one can use non-parametric approaches to infer the time and scale dependence of the modified growth functions from the observations.

Ideally, such a parameterization should be able to capture all relevant physics with the least number of parameters. Useful parameterizations can be motivated by predictions for specific theories of MG/DE (see Song et al. 2010) and/or by pure simplicity and measurability (see Amendola et al. 2008b). For instance, Zhao et al. (2010) and Daniel et al. (2010) use scale-independent parameterizations that model one or two smooth transitions of the modified growth parameters as a function of redshift. Bean and Tangmatitham (2010) also add a scale dependence to the parameterization, while keeping the time dependence a simple power law:

\[ Q(k,a) = \left[ Q_0\, e^{-k/k_c} + Q_\infty \left( 1 - e^{-k/k_c}\right) - 1\right] a^s + 1 , \qquad R(k,a) = \left[ R_0\, e^{-k/k_c} + R_\infty \left( 1 - e^{-k/k_c}\right) - 1\right] a^s + 1 , \]

with constant \(Q_0\), \(Q_\infty \), \(R_0\), \(R_\infty \), s and \(k_c\). Generally, the problem with any kind of parameterization is that it is difficult—if not impossible—for it to be flexible enough to describe all possible modifications.
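For concreteness, here is a sketch of a parameterization of the Bean and Tangmatitham (2010) type. It assumes the form \(X(k,a) = [X_0 e^{-k/k_c} + X_\infty (1-e^{-k/k_c}) - 1]a^s + 1\) for \(X = Q\) or \(R\), and all parameter values are hypothetical. The form interpolates between \(X_0\) on large scales (\(k\ll k_c\)) and \(X_\infty \) on small scales (\(k\gg k_c\)). For \(s>0\) it tends to the GR value 1 at early times.

```python
import math


def bt_form(k, a, x0, x_inf, s, k_c):
    """Assumed scale- and time-dependent form for Q (or R = 1/eta):
    X(k, a) = [X0 e^{-k/k_c} + X_inf (1 - e^{-k/k_c}) - 1] a^s + 1."""
    weight = math.exp(-k / k_c)  # ~1 for k << k_c, ~0 for k >> k_c
    return (x0 * weight + x_inf * (1.0 - weight) - 1.0) * a ** s + 1.0


params = dict(x0=1.2, x_inf=0.9, s=3.0, k_c=0.1)  # hypothetical values
print(bt_form(1e-6, 1.0, **params))   # large scales today: close to X0
print(bt_form(100.0, 1.0, **params))  # small scales today: close to X_inf
print(bt_form(0.1, 1e-3, **params))   # early times: close to 1 for s > 0
```

Setting \(X_0 = X_\infty = 1\) recovers the \(\varLambda \)CDM value 1 at all \(k\) and \(a\). This illustrates why a handful of constants cannot represent arbitrary modifications.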

Daniel et al. (2010) and Daniel and Linder (2010) investigate the modified growth parameters binned in z and k, with the functions taken to be constant in each bin. This approach is simple and only mildly dependent on the size and number of the bins. However, the bins can be correlated and therefore the data might not be used in the most efficient way with fixed bins. Slightly more sophisticated than simple binning is a principal component analysis (PCA) of the binned (or pixelized) modified growth functions. In a PCA, uncorrelated linear combinations of the original pixels are constructed. In the limit of a large number of pixels the model dependence disappears. At the moment, however, computational cost limits the number of pixels to only a few. Zhao et al. (2009a, 2010) employ a PCA in the \((\mu ,\eta )\) plane and find that the observables are more sensitive to the scale dependence of the modified growth parameters than to their time dependence and average values. This suggests that simple, monotonic or mildly varying parameterizations, as well as purely time-dependent parameterizations, are poorly suited to detect departures from \(\varLambda \)CDM.
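A minimal sketch of the PCA step follows. It assumes a hypothetical covariance for \(\mu \) in four redshift bins, with an error of 0.2 per bin and a correlation of \(0.8^{|i-j|}\) between bins. These numbers are made up to produce strongly correlated bins and are not a Euclid forecast. Diagonalizing the corresponding Fisher matrix yields orthonormal eigenvectors (the principal components) whose amplitudes are uncorrelated. The modes are ordered from best to worst constrained.

```python
import numpy as np

# Hypothetical covariance for mu in 4 redshift bins: sigma = 0.2 per bin and
# correlation 0.8^|i-j| between bins (illustrative numbers, not a forecast).
n_bins = 4
idx = np.arange(n_bins)
cov = 0.04 * 0.8 ** np.abs(idx[:, None] - idx[None, :])
fisher = np.linalg.inv(cov)

# PCA: eigen-decompose the (symmetric) Fisher matrix.
eigvals, eigvecs = np.linalg.eigh(fisher)
order = np.argsort(eigvals)[::-1]  # best-constrained modes first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Amplitudes m = E^T (mu_bins - mu_fid) are uncorrelated, with errors 1/sqrt(eigenvalue).
mode_cov = eigvecs.T @ cov @ eigvecs
mode_errors = 1.0 / np.sqrt(eigvals)
print("errors on principal-component amplitudes:", np.round(mode_errors, 4))
print("leading mode weights per bin:", np.round(eigvecs[:, 0], 3))
```

In practice one keeps only the few best-measured modes. This limits the number of effective parameters while still letting the data select the shape of the deviation.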

I.3.2.3 Trigger relations

A useful and widely used trigger relation is the value of the growth index \(\gamma \) in \(\varLambda \)CDM. It turns out that a value of \(\gamma \) can also be fitted for simple DE models and for sub-Hubble evolution in some MG models (see, e.g., Linder 2005, 2009; Huterer and Linder 2007; Linder and Cahn 2007; Nunes and Mota 2006; Ferreira and Skordis 2010). For example, for a non-clustering perfect fluid DE model with equation of state w(z), the growth factor G(a) given in (I.3.21) with the fitting formula

\[ \gamma = {\left\{ \begin{array}{ll} 0.55 + 0.05\left[ 1+w(z=1)\right] , & w \ge -1,\\ 0.55 + 0.02\left[ 1+w(z=1)\right] , & w < -1, \end{array}\right. } \]

is accurate to the \(10^{-3}\) level compared with the actual solution of the growth Eq. (I.3.20). Generally, for a given solution of the growth equation the growth index can simply be computed using

\[ \gamma (a,k) = \frac{\ln \left( \varDelta _M'/\varDelta _M\right) }{\ln \varOmega _M(a)} . \]

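Conversely, the modified gravity function \(\mu \) can be computed for a given \(\gamma \) on sub-Hubble scales (Pogosian et al. 2010):

\[ \mu = \frac{2}{3}\,\varOmega _M^{\gamma -1}(a)\left[ \varOmega _M^{\gamma }(a) + 2 + \frac{H'}{H} + \gamma \,\frac{\varOmega _M'}{\varOmega _M} + \gamma '\ln \varOmega _M(a)\right] . \]
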
The fact that the value of \(\gamma \) is quite stable in most DE models but strongly differs in MG scenarios means that a large deviation from \(\gamma _\varLambda \) signifies the breakdown of GR, a substantial DE clustering or a breakdown of another fundamental hypothesis like near-homogeneity. Furthermore, using the growth factor to describe the evolution of linear structure is a very simple and computationally cheap way to carry out forecasts and compare theory with data. However, several drawbacks of this approach can be identified:

-

As only one additional parameter is introduced, a second parameter, such as \(\eta \), is needed to close the system and be general enough to capture all possible modifications.

-

The growth factor is a solution of the growth equation on sub-Hubble scales and, therefore, is not general enough to be consistent on all scales.

-

The framework is designed to describe the evolution of the matter density contrast and is not easily extended to describe all other energy–momentum components and integrated into a CMB-Boltzmann code.
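The two directions of the \(\gamma \)–\(\mu \) relation can be checked against each other numerically. For \(f = \varOmega _M^\gamma \) with constant \(\gamma \), the sub-Hubble growth equation \(\varDelta _M'' + (2+H'/H)\varDelta _M' = \tfrac{3}{2}\mu \varOmega _M\varDelta _M\) implies \(\mu = \tfrac{2}{3}\varOmega _M^{\gamma -1}[\varOmega _M^\gamma + 2 + H'/H + \gamma \varOmega _M'/\varOmega _M]\). Feeding this \(\mu (a)\) back into the growth equation should return the input \(\gamma \). The sketch assumes a flat \(\varLambda \)CDM background (\(\varOmega _{M,0}=0.3\)) and a hypothetical \(\gamma = 0.6\). The helper names are our own.

```python
import math

OM0 = 0.3       # illustrative flat-LCDM background (assumption)
GAMMA_IN = 0.6  # hypothetical constant growth index to reproduce


def omega_m(a):
    matter = OM0 * a ** -3
    return matter / (matter + 1.0 - OM0)


def mu_from_gamma(a, gamma):
    """mu such that f = Omega_M^gamma solves the sub-Hubble growth equation (constant gamma)."""
    om = omega_m(a)
    h_ratio = -1.5 * om           # H'/H in flat LCDM
    om_ratio = -3.0 * (1.0 - om)  # Omega_M'/Omega_M for pressureless matter
    return (2.0 / 3.0) * om ** (gamma - 1.0) * (om ** gamma + 2.0 + h_ratio + gamma * om_ratio)


def rhs(lna, y):
    delta, ddelta = y
    a = math.exp(lna)
    om = omega_m(a)
    mu = mu_from_gamma(a, GAMMA_IN)
    return (ddelta, -(2.0 - 1.5 * om) * ddelta + 1.5 * mu * om * delta)


a_init, n = 1e-3, 4000
x, h = math.log(a_init), -math.log(a_init) / n
y = (1.0, omega_m(a_init) ** GAMMA_IN)  # start on f = Omega_M^gamma
for _ in range(n):  # fixed-step RK4 in ln a
    k1 = rhs(x, y)
    k2 = rhs(x + h / 2, tuple(v + h / 2 * k for v, k in zip(y, k1)))
    k3 = rhs(x + h / 2, tuple(v + h / 2 * k for v, k in zip(y, k2)))
    k4 = rhs(x + h, tuple(v + h * k for v, k in zip(y, k3)))
    y = tuple(v + h / 6 * (p1 + 2 * p2 + 2 * p3 + p4)
              for v, p1, p2, p3, p4 in zip(y, k1, k2, k3, k4))
    x += h

gamma_out = math.log(y[1] / y[0]) / math.log(omega_m(1.0))
print(f"input gamma = {GAMMA_IN}, recovered gamma = {gamma_out:.5f}")
print(f"mu today for gamma = {GAMMA_IN}: {mu_from_gamma(1.0, GAMMA_IN):.4f}")
```

During matter domination the formula gives \(\mu \to 1\) for any \(\gamma \). The modified source only matters once \(\varOmega _M\) drops below unity.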

2.3.3 Phantom crossing

In this section, we pay attention to the evolution of the perturbations of a general dark-energy fluid with an evolving equation of state parameter w. Current limits on the equation of state parameter \(w=p{/}\rho \) of the dark energy indicate that \(p\approx -\rho \), and so do not exclude \(p<-\rho \), a region of parameter space often called phantom energy. Even though the region for which \(w<-\,1\) may be unphysical at the quantum level, it is still important to probe it, not least to test for coupled dark energy and alternative theories of gravity or higher dimensional models that can give rise to an effective or apparent phantom energy.

Although there is no problem in considering \(w<-\,1\) for the background evolution, there are apparent divergences appearing in the perturbations when a model tries to cross the limit \(w=-\,1\). This is a potential headache for experiments like Euclid that directly probe the perturbations through measurements of the galaxy clustering and weak lensing. To analyze the Euclid data, we need to be able to consider models that cross the phantom divide \(w=-\,1\) at the level of first-order perturbations (since the only dark-energy model that has no perturbations at all is the cosmological constant).

However, at the level of cosmological first-order perturbation theory, there is no fundamental limitation that prevents an effective fluid from crossing the phantom divide.

As \(w \rightarrow -1\) the terms in Eqs. (I.3.17) and (I.3.18) containing \(1/(1+w)\) will generally diverge. This can be avoided by replacing \(\theta \) with a new variable V defined via \(V=\left( 1+w \right) \theta \). This corresponds to rewriting the 0-i component of the energy–momentum tensor as \(ik_j T_{0}^{j}= \rho V\), which avoids problems if \(T_{0}^{j}\ne 0\) when \(\bar{p}=-\,{\bar{\rho }}\). Replacing the time derivatives by a derivative with respect to the logarithm of the scale factor \(\ln a\) (denoted by a prime), we obtain (Ma and Bertschinger 1995; Hu 2004; Kunz and Sapone 2006):

In order to solve Eqs. (I.3.33) and (I.3.34) we still need to specify the expressions for \(\delta p\) and \(\pi \), quantities that characterize the physical, intrinsic nature of the dark-energy fluid at first order in perturbation theory. While in general the anisotropic stress plays an important role as it gives a measure of how the gravitational potentials \(\varPhi \) and \(\varPsi \) differ, we will set it in this section to zero, \(\pi =0\). Therefore, we will focus on the form of the pressure perturbation. There are two important special cases: barotropic fluids,Footnote 2 which have no internal degrees of freedom and for which the pressure perturbation is fixed by the evolution of the average pressure, and non-adiabatic fluids like, e.g., scalar fields for which internal degrees of freedom can change the pressure perturbation.

I.3.3.1 Parameterizing the pressure perturbation

Barotropic fluids.

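We define a fluid to be barotropic if the pressure p depends strictly only on the energy density \(\rho \): \(p=p(\rho )\). These fluids have only adiabatic perturbations, so that they are often called adiabatic. We can write their pressure as

\[ p(\rho ) = p\left( \bar{\rho } + \delta \rho \right) = p(\bar{\rho }) + \left. \frac{\mathrm {d}p}{\mathrm {d}\rho }\right| _{\bar{\rho }} \delta \rho + {\mathcal {O}}\left( \delta \rho ^2\right) . \tag{I.3.35} \]
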
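Here \(p({\bar{\rho }}) = \bar{p}\) is the pressure of the isotropic and homogeneous part of the fluid. The second term in the expansion (I.3.35) can be re-written as

\[ \left. \frac{\mathrm {d}p}{\mathrm {d}\rho }\right| _{\bar{\rho }} \delta \rho = \frac{\bar{p}'}{\bar{\rho }'}\,\delta \rho = \left( w - \frac{w'}{3(1+w)}\right) \delta \rho \equiv c_a^2\, \delta \rho , \]
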
where we used the equation of state and the conservation equation for the dark-energy density in the background. We notice that the adiabatic sound speed \(c_a^2\) will necessarily diverge for any fluid where w crosses \(-\,1\).

However, for a perfect barotropic fluid the adiabatic sound speed \(c_{a}^2\) turns out to be the physical propagation speed of perturbations. Therefore, it should never be negative: if \(c_{a}^{2}<0\), classical, and possibly quantum, instabilities appear (superluminal propagation, \(c_{a}^{2}>1\), may be acceptable as the fluid is effectively a kind of ether that introduces a preferred frame, see Babichev et al. 2008). Even worse, the pressure perturbation

\[ \delta p = c_a^2\, \delta \rho = \left( w - \frac{w'}{3(1+w)}\right) \delta \rho \]

will necessarily diverge if w crosses \(-\,1\) and \(\delta \rho \ne 0\). Even if we find a way to stabilize the pressure perturbation, for instance an equation of state parameter that crosses the \(-\,1\) limit with zero slope (\(\dot{w}=0\)), there will always be the problem of a negative speed of sound that prevents these models from being viable dark-energy candidates (Vikman 2005; Kunz and Sapone 2006).
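The sketch below makes the divergence and the sign problem explicit. It evaluates the barotropic adiabatic sound speed, written with derivatives in \(\ln a\) as \(c_a^2 = w - w'/[3(1+w)]\), which follows from \(p = w\rho \) and \(\rho ' = -3(1+w)\rho \). The equation of state \(w(a) = w_0 + w_a(1-a)\) and the values \(w_0=-1.1\), \(w_a=0.3\) are illustrative assumptions, chosen so that \(w\) crosses \(-1\) at \(a = 2/3\).

```python
def w_of_a(a, w0, wa):
    """Illustrative equation of state w(a) = w0 + wa (1 - a)."""
    return w0 + wa * (1.0 - a)


def adiabatic_cs2(a, w0, wa):
    """Barotropic c_a^2 = w - w' / (3 (1 + w)), with w' = dw/dln a = -wa * a."""
    w = w_of_a(a, w0, wa)
    w_prime = -wa * a
    return w - w_prime / (3.0 * (1.0 + w))


w0, wa = -1.1, 0.3
a_cross = 1.0 - (-1.0 - w0) / wa  # w(a_cross) = -1, i.e. a_cross = 2/3 here

for da in (-1e-2, -1e-4, 1e-4, 1e-2):
    a = a_cross + da
    print(f"a = {a:.5f}, 1 + w = {1 + w_of_a(a, w0, wa):+.2e}, "
          f"c_a^2 = {adiabatic_cs2(a, w0, wa):+.3e}")
```

Here \(w\) decreases with time. Just before the crossing \(c_a^2\) is therefore large and positive, and just after it is large and negative, which is the instability described above.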

Non-adiabatic fluids

To construct a model that can cross the phantom divide, we therefore need to violate the constraint that p is a unique function of \(\rho \). At the level of first-order perturbation theory, this amounts to changing the prescription for \(\delta p\), which now becomes an arbitrary function of k and t. One way out of this problem is to choose an appropriate gauge where the equations are simple; one choice is, for instance, the rest frame of the fluid where the pressure perturbation reads (in this frame)

\[ \widehat{\delta p} = \hat{c}_s^2\, \widehat{\delta \rho } \tag{I.3.38} \]

where now the \(\hat{c}_{s}^{2}\) is the speed with which fluctuations in the fluid propagate, i.e., the sound speed. We can write Eq. (I.3.38), with an appropriate gauge transformation, in a form suitable for the Newtonian frame, i.e., for Eqs. (I.3.33) and (I.3.34). We find that the pressure perturbation is given by (Erickson et al. 2002; Bean and Dore 2004; Carturan and Finelli 2003)

\[ \delta p = \hat{c}_s^2\, \delta \rho + 3aH\left( \hat{c}_s^2 - c_a^2\right) \frac{\bar{\rho }\,(1+w)\,\theta }{k^2} \tag{I.3.39} \]

The problem here is the presence of \(c_{a}^2\), which goes to infinity at the crossing; this term cannot stay finite unless \(V\rightarrow 0\) fast enough or \(\dot{w}=0\), which is not, in general, the case.

This divergence appears because for \(w=-\,1\) the energy momentum tensor Eq. (I.3.3) reads \(T^{\mu \nu }=pg^{\mu \nu }\). Normally the four-velocity \(u^{\mu }\) is the time-like eigenvector of the energy–momentum tensor, but now all vectors are eigenvectors. So the problem of fixing a unique rest-frame is no longer well posed. Then, even though the pressure perturbation looks fine for the observer in the rest-frame, because it does not diverge, the badly-defined gauge transformation to the Newtonian frame does, as it also contains \(c_{a}^{2}\).
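The eigenvector argument can be checked directly. The sketch below builds the mixed perfect-fluid tensor \(T^{\mu }_{\ \nu } = (\rho +p)u^\mu u_\nu + p\,\delta ^\mu _\nu \) in a local Minkowski frame with signature \((-,+,+,+)\). The fluid velocity \(v=0.3\) is an arbitrary choice. For \(w=-0.9\) the fluid four-velocity is the unique timelike eigenvector, with eigenvalue \(-\rho \), and another observer's four-velocity is not an eigenvector. For \(w=-1\) the tensor reduces to \(p\) times the identity, so every four-velocity is an eigenvector and no preferred rest frame exists.

```python
import numpy as np

ETA = np.diag([-1.0, 1.0, 1.0, 1.0])  # Minkowski metric, signature (-,+,+,+)


def mixed_stress_tensor(rho, p, u_up):
    """Perfect fluid T^mu_nu = (rho + p) u^mu u_nu + p delta^mu_nu (local inertial frame)."""
    u_down = ETA @ u_up
    return (rho + p) * np.outer(u_up, u_down) + p * np.eye(4)


v = 0.3                                         # illustrative fluid velocity along x
lorentz = 1.0 / np.sqrt(1.0 - v ** 2)
u = np.array([lorentz, lorentz * v, 0.0, 0.0])  # normalized: u^mu u_mu = -1
t_obs = np.array([1.0, 0.0, 0.0, 0.0])          # another observer's four-velocity

rho = 1.0
T_quint = mixed_stress_tensor(rho, -0.9 * rho, u)   # w = -0.9
T_lambda = mixed_stress_tensor(rho, -1.0 * rho, u)  # w = -1

# w = -0.9: u is an eigenvector with eigenvalue -rho, t_obs is not
print(np.allclose(T_quint @ u, -rho * u), T_quint @ t_obs)
# w = -1: T is p times the identity, so any vector is an eigenvector
print(np.allclose(T_lambda, -rho * np.eye(4)))
```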

I.3.3.2 Regularizing the divergences

We have seen that neither barotropic fluids nor canonical scalar fields, for which the pressure perturbation is of the type (I.3.39), can cross the phantom divide. However, there is a simple model (the quintom model; Feng et al. 2005; Hu 2005) consisting of two fluids of the same type as in the previous Sect. I.3.3.1, each with a constant w, one on either side of \(w=-\,1\).Footnote 3 The combination of the two fluids then effectively crosses the phantom divide if we start with \(w_{\mathrm {tot}}>-\,1\): the energy density in the fluid with \(w<-\,1\) grows faster, so this fluid eventually dominates and we end up with \(w_{\mathrm {tot}}<-\,1\).
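The quintom argument is easy to verify at the background level. In the sketch below, two non-interacting fluids with constant \(w_1=-0.8\) and \(w_2=-1.2\) (illustrative values) have equal densities at \(a=1\). Their effective equation of state, \(w_{\mathrm {tot}} = \sum _i w_i\rho _i/\sum _i\rho _i\), lies above \(-1\) at earlier times and below \(-1\) afterwards, because \(\rho _i\propto a^{-3(1+w_i)}\) and the phantom component grows relative to the other one.

```python
def w_total(a, w1=-0.8, w2=-1.2, frac1=0.5):
    """Effective w of two non-interacting fluids with constant w1 > -1 and w2 < -1.

    frac1 is the (illustrative) share of fluid 1 in the combined density at a = 1.
    Each density scales as rho_i ~ a^(-3 (1 + w_i)).
    """
    rho1 = frac1 * a ** (-3.0 * (1.0 + w1))
    rho2 = (1.0 - frac1) * a ** (-3.0 * (1.0 + w2))
    return (w1 * rho1 + w2 * rho2) / (rho1 + rho2)


for a in (0.25, 0.5, 1.0, 2.0, 4.0):
    print(f"a = {a}: w_tot = {w_total(a):+.4f}")
```

The background crossing itself is harmless. As discussed next, the subtleties lie in the perturbations.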

The perturbations in this scenario were analyzed in detail in Kunz and Sapone (2006), where it was shown that in addition to the rest-frame contribution, one also has relative and non-adiabatic perturbations. All these contributions apparently diverge at the crossing, but their sum stays finite. When parameterizing the perturbations in the Newtonian gauge as

\[ \delta p = \gamma \, \delta \rho \]

the quantity \(\gamma \) will, in general, have a complicated time and scale dependence. The conclusion of the analysis is that indeed single canonical scalar fields with pressure perturbations of the type (I.3.39) in the Newtonian frame cannot cross \(w=-\,1\), but that this is not the most general case. More general models have a priori no problem crossing the phantom divide, at least not with the classical stability of the perturbations.

Kunz and Sapone (2006) found that a good approximation to the quintom model behavior can be found by regularizing the adiabatic sound speed in the gauge transformation with

\[ \hat{c}_a^2 = w - \frac{w'\,(1+w)}{3\left[ (1+w)^2 + \lambda \right] } \]

where \(\lambda \) is a tunable parameter which determines how close to \(w=-\,1\) the regularization kicks in. A value of \(\lambda \approx 1/1000\) should work reasonably well. However, the final results are not too sensitive to the detailed regularization prescription.
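The sketch below compares the regularized sound speed with the unregularized one. It assumes the regularized form \(\hat{c}_a^2 = w - w'(1+w)/\{3[(1+w)^2+\lambda ]\}\), where primes denote \(\mathrm {d}/\mathrm {d}\ln a\); this form reduces to the barotropic \(c_a^2\) when \((1+w)^2\gg \lambda \). The equation of state is an illustrative \(w(a) = -1.1 + 0.3(1-a)\), which crosses \(-1\) at \(a=2/3\). With \(\lambda = 10^{-3}\) the regularized value stays bounded through the crossing, since \(|(1+w)/[(1+w)^2+\lambda ]|\le 1/(2\sqrt{\lambda })\).

```python
def cs2_bare(a, w0=-1.1, wa=0.3):
    """Unregularized c_a^2 = w - w'/(3(1+w)); diverges where w = -1."""
    w = w0 + wa * (1.0 - a)
    return w - (-wa * a) / (3.0 * (1.0 + w))


def cs2_regularized(a, w0=-1.1, wa=0.3, lam=1e-3):
    """Assumed regularized form: w - w'(1+w) / (3[(1+w)^2 + lam]), with w' = dw/dln a."""
    w = w0 + wa * (1.0 - a)
    w_prime = -wa * a
    return w - w_prime * (1.0 + w) / (3.0 * ((1.0 + w) ** 2 + lam))


grid = [i / 1000 for i in range(10, 1001)]  # a from 0.01 to 1 (avoids a = 2/3 exactly)
largest = max(abs(cs2_regularized(a)) for a in grid)
a_cross = 2.0 / 3.0  # w(a) = -1 for the default parameters
print(f"max |c_a^2| (regularized) on the grid: {largest:.3f}")
print(f"at the crossing: {cs2_regularized(a_cross):.6f}")
print(f"far from the crossing, a = 0.1: {cs2_bare(0.1):.4f} vs {cs2_regularized(0.1):.4f}")
```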

This result also appears to be related to the behavior found for coupled dark-energy models (originally introduced to solve the coincidence problem), in which dark matter and dark energy interact not only through gravity (Amendola 2000a). The effective dark energy in these models can also cross the phantom divide without divergences (Huey and Wandelt 2006; Das et al. 2006; Kunz 2009).

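The idea is to insert (by hand) a term in the continuity equations of the two fluids

\[ \dot{\rho }_m + 3H\rho _m = \lambda , \qquad \dot{\rho }_x + 3H(1+w)\rho _x = -\lambda , \]

where the subscripts m, x refer to dark matter and dark energy, respectively. In this approximation, the adiabatic sound speed \(c_{a}^{2}\) reads

\[ c_a^2 = \frac{\dot{p}_x}{\dot{\rho }_x} = w - \frac{\dot{w}}{3H(1+w) + \lambda /\rho _x} , \]
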
which stays finite at crossing as long as \(\lambda \ne 0\).

However, in this class of models other instabilities arise at the perturbation level regardless of the coupling used (cf. Väliviita et al. 2008).

I.3.3.3 A word on perturbations when \(w=-\,1\)

Although a cosmological constant has \(w=-\,1\) and no perturbations, the converse is not automatically true: \(w=-\,1\) does not necessarily imply that there are no perturbations. It is only when we set from the beginning (in the calculation):

\[ w=-\,1, \qquad \delta p = -\,\delta \rho , \qquad \pi = 0 , \]

i.e., \(T^{\mu \nu } \propto g^{\mu \nu }\), that we have as a solution \(\delta = V =0\).

For instance, if we set \(w=-\,1\) and \(\delta p = \gamma \delta \rho \) (where \(\gamma \) can be a generic function) in Eqs. (I.3.33) and (I.3.34) we have \(\delta \ne 0\) and \(V\ne 0\). However, the solutions are decaying modes due to the \(-\frac{1}{a}\left( 1-3w\right) V\) term so they are not important at late times; but it is interesting to notice that they are in general not zero.

As another example, if we have a non-zero anisotropic stress \(\pi \), then Eqs. (I.3.33) and (I.3.34) will have a source term that will influence the growth of \(\delta \) and V in the same way as \(\varPsi \) does (since the two enter the equations in the same way). The \(\left( 1+w\right) \) term in front of \(\pi \) should not worry us, as we can always define the anisotropic stress through

\[ \bar{\rho }\,(1+w)\,\pi = -\left( \hat{k}_i\hat{k}_j - \frac{1}{3}\delta _{ij}\right) \varPi ^{i}_{\,j} , \]

where \(\varPi ^{i}_{\,j}\ne 0\) when \(i\ne j\) is the real traceless part of the energy momentum tensor, probably the quantity we need to look at: as in the case of \(V=(1+w) \theta \), there is no need for \(\varPi \propto (1+w)\pi \) to vanish when \(w=-\,1\).

It is also interesting to notice that when \(w = -\,1\) the perturbation equations tell us that dark-energy perturbations are not influenced through \(\varPsi \) and \(\varPhi '\) [see Eqs. (I.3.33) and (I.3.34)]. Since \(\varPhi \) and \(\varPsi \) are the quantities directly entering the metric, they must remain finite, and even much smaller than 1 for perturbation theory to hold. Since, in the absence of direct couplings, the dark energy only feels the other constituents through the terms \((1+w)\varPsi \) and \((1+w)\varPhi '\), it decouples completely in the limit \(w=-\,1\) and just evolves on its own. But its perturbations still enter the Poisson equation and so the dark matter perturbation will feel the effects of the dark-energy perturbations.

Although this situation may seem contrived, it might be that the observed acceleration of the universe is a consequence of a modified theory of gravity. As was shown in Kunz and Sapone (2007), any modified gravity theory can be described as an effective fluid both at background and at perturbation level; in such a situation it is imperative to describe its perturbations properly, as this effective fluid may manifest unexpected behavior.

2.4 Generic properties of dark energy and modified gravity models

This section explores some generic issues that are not necessarily a feature of any particular model. We will recall the properties of particular classes of models as examples, leaving the details of the model description to Sect. I.5.

We begin by discussing the general implications of modelling dark energy as an extra degree of freedom, instead of the cosmological constant. We then discuss how the literature tends to categorize models into models of dark energy and models of modified gravity. We focus on the expansion of the cosmological background and ask what precision of measurement is necessary in order to make definite statements about large parts of the interesting model space. Then we address the issue of dark-energy perturbations, their impact on observables and how they can be used to distinguish between different classes of models. Finally, we present some general consistency relations among the perturbation variables that all models of modified gravity should fulfill.

2.4.1 Dark energy as a degree of freedom

De Sitter spacetime, filled with only a cosmological constant, is static: it undergoes no evolution. It is also invariant under Lorentz transformations. When other sources of energy–momentum are added into this spacetime, the dynamics occurs on top of this static background or, better said, on top of this vacuum. This is to say that the cosmological constant is a form of dark energy which has no dynamics of its own and the value of which is fixed for all frames and coordinate choices.

A dynamical model for acceleration implies the existence of some change of the configuration in space or time. It is no longer a gravitational vacuum. In the case of a perfectly homogeneous and isotropic universe, the evolution can only be a function of time. In reality, the universe has neither of these properties and therefore the configuration of any dynamical dark energy must also be inhomogeneous. Whether the inhomogeneities are small is a model-dependent statement.

It is important to stress that there exists no such thing as a modified gravity theory with no extra degrees of freedom beyond the metric. All models which seemingly involve just the metric degrees of freedom in some modified sense (say f(R) or f(G)), in fact can be shown to be equivalent to general relativity plus an extra scalar degree of freedom with some particular couplings to gravity (Chiba 2003; Kobayashi et al. 2011). Modifications such as massive gravity increase the number of polarisations.

In the context of \(\varLambda \)CDM, it has proven fruitful to consider the dynamics of the universe in terms of a perturbation theory: a separation into a background, linear and then higher-order fluctuations, each of increasingly small relevance (see Sect. I.3.1). These perturbations are thought to be seeded with a certain amplitude by an inflationary era at early times. Gravitational collapse then leads to a growth of the fluctuations, eventually leading to a breakdown of the perturbation theory; however, for dark matter in \(\varLambda \)CDM, this growth is only large enough to lead to non-linearity at smaller scales.

When dynamical DE is introduced, it must be described by at least one new (potentially more) degree of freedom. In principle, in order to make any statements about any such theory, one must specify the initial conditions on some space-like hypersurface and then the particular DE model will describe the subsequent evolutionary history. Within the framework of perturbation theory, initial conditions must be specified for both the background and the fluctuations. The model then provides a set of related evolution equations at each order.

We defer the discussion of the freedom allowed at particular orders to the appropriate sections below (Sect. I.4.3 for the background, Sect. I.4.4 for the perturbations). Here, let us just stress that since DE is a full degree of freedom, its initial conditions will contain both adiabatic and isocurvature modes, which may or may not be correlated, depending on their origin and which may or may not survive until today, depending on the particular model. Secondly, the non-linearity in the DE configuration is in principle independent of the non-linearity in the distribution of dark matter and will depend on both the particular model and the initial conditions. For example, the chameleon mechanism present in many non-minimally coupled models of dark energy acts to break down DE perturbation theory in higher-density environments (see Sect. I.5.8). This breakdown of linear theory is environment-dependent and only indirectly related to non-linearities in the distribution of dark matter.

Let us underline that the absolute and unique prediction of \(\varLambda \)CDM is that \(\varLambda \) is constant in space and time and therefore does not contribute to fluctuations at any order. Any violation of this statement at any one order, if it cannot be explained by astrophysics, is sufficient evidence that the acceleration is not caused by vacuum energy.

2.4.2 A definition of modified gravity

In this review we often make reference to DE and MG models. Although in an increasing number of publications a similar dichotomy is employed, there is currently no consensus on where to draw the line between the two classes. Here we will introduce an operational definition for the purpose of this document.

Roughly speaking, what most people have in mind when talking about standard dark energy are models of minimally-coupled scalar fields with standard kinetic energy in 4-dimensional Einstein gravity, the only functional degree of freedom being the scalar potential. Often, this class of model is referred to simply as “quintessence”. However, when we depart from this picture a simple classification is not easy to draw. One problem is that, as we have seen in the previous sections, both at background and at the perturbation level, different models can have the same observational signatures (Kunz and Sapone 2007). This problem is not due to the use of perturbation theory: any modification to Einstein’s equations can be interpreted as standard Einstein gravity with a modified “matter” source, containing an arbitrary mixture of scalars, vectors and tensors (Hu and Sawicki 2007b; Kunz et al. 2008).

Therefore, we could simply abandon any attempt to distinguish between DE and MG, and just analyse different models, comparing their properties and phenomenology. However, there is a possible classification that helps us set targets for the observations, which is often useful in concisely communicating the results of complex arguments. In this review, we will use the following notation:

-

Standard dark energy: these are models in which dark energy lives in standard Einstein gravity, does not cluster appreciably on sub-horizon scales and carries no anisotropic stress. As already noted, the prime example of a standard dark-energy model is a minimally-coupled scalar field with standard kinetic energy, for which the sound speed equals the speed of light.

-

Clustering dark energy: in clustering dark-energy models, there is an additional contribution to the Poisson equation due to the dark-energy perturbation, which induces \(Q \ne 1\). However, in this class we require \(\eta =1\), i.e., no extra effective anisotropic stress is induced by the extra dark component. A typical example is a k-essence model with a low sound speed, \(c_s^2\ll 1\).

-

Modified gravity models: these are models where from the start the Einstein equations are modified, for example scalar–tensor and f(R) type theories, Dvali–Gabadadze–Porrati (DGP) as well as interacting dark energy, in which effectively a fifth force is introduced in addition to gravity. Generically they change the clustering and/or induce a non-zero anisotropic stress. Since our definitions are based on the phenomenological parameters, we also add dark-energy models that live in Einstein’s gravity but that have non-vanishing anisotropic stress into this class since they cannot be distinguished by cosmological observations.

Notice that both clustering dark energy and explicit modified gravity models lead to deviations from what is often called ‘general relativity’ (or, like here, standard dark energy) in the literature when constraining extra perturbation parameters like the growth index \(\gamma \). For this reason we generically call both of these classes MG models. In other words, in this review we use the simple and by now extremely popular (although admittedly somewhat misleading) expression “modified gravity” to denote models in which gravity is modified and/or dark energy clusters or interacts with other fields. Whenever we feel it is useful, we will remind the reader of the actual meaning of the expression “modified gravity” in this review.

Therefore, on sub-horizon scales and at first order in perturbation theory our definition of MG is straightforward: models with \(Q=\eta =1\) (see Eq. I.3.23) are standard DE, otherwise they are MG models. In this sense the definition above is rather convenient: we can use it to quantify, for instance, how well Euclid will distinguish between standard dynamical dark energy and modified gravity by forecasting the errors on \(Q,\eta \) or on related quantities like the growth index \(\gamma \).

On the other hand, it is clear that this definition is only a practical way to group different models and should not be taken as a fundamental one. We do not try to set a precise threshold on, for instance, how much dark energy should cluster before we call it modified gravity: the boundary between the classes is therefore left undetermined but we think this will not harm the understanding of this document.

2.4.3 The background: to what precision should we measure w?

The effect of dark energy on background expansion is to add a new source of energy density. The chosen model will have dynamics which will cause the energy density to evolve in a particular manner. On the simplest level, this evolution is a result of the existence of intrinsic hydrodynamical pressure of the dark energy fluid which can be described by the instantaneous equation of state. Alternatively, an interaction with other species can result in a non-conservation of the DE energy–momentum tensor and therefore change the manner in which energy density evolves (e.g. coupled dark energy). Taken together, all these effects add up to result in an effective equation of state for DE which drives the expansion history of the universe.

It is important to stress that all background observables are geometrical in nature and can therefore only constrain the spacetime geometry. It is not possible to disentangle the dark energy and dark matter in a model-independent manner, and therefore only the Hubble parameter up to a normalization factor, \(H(z)/H_0\), and the spatial curvature \(\varOmega _{k0}\) can be obtained in a DE-model-independent way. In particular, the measurement of the dark-matter density, \(\varOmega _{m0}\), becomes possible only on choosing some parameterization for \(w_\text {eff}\) (e.g., a constant) (Amendola et al. 2013a). One must therefore always be mindful that extracting DE properties from background measurements is limited to constraining the coefficients of a chosen parameterization of the effective equation of state for the DE component, rather than being measurements of the actual effective w and definitely not the intrinsic w of the dark energy.
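A toy reconstruction makes the degeneracy between \(\varOmega _{m0}\) and \(w_\text {eff}\) concrete. Suppose, for illustration, that the measured expansion is exactly flat \(\varLambda \)CDM with \(\varOmega _{m0}=0.3\). Any other assumed matter fraction reproduces the same \(H(z)/H_0\), provided the dark-energy density absorbs the difference: \(\rho _\mathrm {DE}/\rho _{c0} = E^2(z) - \varOmega _{m0}^\text {assumed}(1+z)^3\). The corresponding effective equation of state is \(w_\text {eff} = -1 + \tfrac{1}{3}\,\mathrm {d}\ln \rho _\mathrm {DE}/\mathrm {d}\ln (1+z)\). The function names below are hypothetical.

```python
import math

OM_TRUE = 0.3  # the "measured" expansion is taken to be flat LCDM (assumption)


def e2_measured(z):
    """E^2(z) = H^2(z)/H_0^2, standing in for a perfect measurement."""
    return OM_TRUE * (1.0 + z) ** 3 + 1.0 - OM_TRUE


def rho_de(z, om_assumed):
    """DE density (units of today's critical density) implied by an assumed matter fraction."""
    return e2_measured(z) - om_assumed * (1.0 + z) ** 3


def w_eff(z, om_assumed, step=1e-5):
    """w_eff = -1 + (1/3) dln rho_DE / dln(1+z), by central differences in ln(1+z)."""
    def ln_rho(x):
        return math.log(rho_de(math.expm1(x), om_assumed))

    x = math.log1p(z)
    return -1.0 + (ln_rho(x + step) - ln_rho(x - step)) / (6.0 * step)


for om in (0.25, 0.30, 0.35):
    print(f"assumed Omega_m0 = {om}: w_eff(z=0) = {w_eff(0.0, om):+.4f}")
```

All three cases describe the same \(H(z)\). With the true matter fraction the reconstruction returns \(w_\text {eff}=-1\). Assuming \(\varOmega _{m0}=0.25\) gives \(w_\text {eff}(0)\approx -0.93\), and assuming 0.35 gives an apparently phantom \(w_\text {eff}(0)<-1\).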

Given the above complications, two crucial questions are often asked in the context of dark-energy surveys:

-

Since current measurements of the expansion history appear so consistent with \(w=-\,1\), do we not already know that the dark energy is a cosmological constant?

-

To what precision should we measure w? Or, equivalently, why is the Euclid target precision of about 0.01 on \(w_0\) and 0.1 on \(w_a\) interesting?

We will now attempt to answer these questions at least partially. First, we address the question of what the measurement of w can tell us about the viable model space of DE. Then we examine whether we can draw useful lessons from inflation. Finally, we will look at what we can learn from arguments based on Bayesian model comparison.

In the first part, we will argue that, whereas any detection of a deviation from the \(\varLambda \)CDM expansion history immediately implies that acceleration is not driven by a cosmological constant, the converse is not true, even if \(w=-\,1\) exactly. We will also argue that a detection of a phantom equation of state, \(w<-\,1\), would reveal that gravity is not minimally coupled or that dark energy interacts, and would immediately eliminate perfect-fluid models of dark energy, such as quintessence.

Then we will see that for single-field slow-roll inflation models we effectively measure \(w \sim -\,1\) with percent-level accuracy (see Fig. 1); however, the deviation from a scale-invariant spectrum means that we nonetheless observe a dynamical evolution and thus a deviation from an exact and constant equation of state \(w=-\,1\). Therefore, we know that inflation was not due to a cosmological constant; we also know that, at any precision coarser than the one Euclid will reach, no deviation from de Sitter expansion would have been visible.

In the final part, we will consider the Bayesian evidence in favor of a true cosmological constant if we keep finding \(w=-\,1\); we will see that, for priors on \(w_0\) and \(w_a\) of order unity, a precision comparable to Euclid's is necessary to favor a true cosmological constant decisively. We will also discuss how this conclusion changes depending on the choice of priors.

I.4.3.1 What can a measurement of w tell us?

The prediction of \(\varLambda \)CDM is that \(w=-\,1\) exactly at all times. Any detection of a deviation from this result immediately disqualifies the cosmological constant as a model for dark energy.

The converse is not true, however. The simplest models of dynamical dark energy, such as quintessence (Sect. I.5.1), can approach the vacuum equation of state arbitrarily closely, given sufficiently flat potentials and appropriate initial conditions. An equation of state of exactly \(w=-\,1\) at all times is inconsistent with these models, but the difference may never be detectable.
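
A minimal numerical sketch of this point, assuming a canonical quintessence field whose kinetic energy \(K=\dot\phi^2/2\) and potential energy \(V\) are supplied directly (the function name is hypothetical): since \(\rho = K+V\) and \(p = K-V\), we have \(1+w = 2K/(K+V)\), which tends to zero as the kinetic fraction shrinks but vanishes exactly only for a frozen field.

```python
def quintessence_w(kinetic: float, potential: float) -> float:
    """Equation of state w = p / rho of a canonical scalar field.

    rho = K + V and p = K - V, with K = phidot^2 / 2 (assumed >= 0)
    and V the potential energy (assumed > 0 in this sketch).
    """
    return (kinetic - potential) / (kinetic + potential)


# Illustrative: a smaller K/V mimics a slower roll down a flatter potential.
for ratio in (1e-1, 1e-2, 1e-4, 1e-8):
    w = quintessence_w(ratio, 1.0)
    print(f"K/V = {ratio:.0e}:  1 + w = {1 + w:.3e}")
```

Any finite observational precision therefore leaves room for quintessence models whose \(1+w\) lies below the detection threshold.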

Moreover, there exist classes of models, e.g., shift-symmetric k-essence with de Sitter attractors, whose equation of state is exactly \(w=-\,1\) once the attractor is reached. Even so, the acceleration is not driven by a cosmological constant at all, but by a perturbable fluid that has vanishing sound speed and can cluster. Such models can be differentiated from a cosmological constant only through measurements of perturbations, if at all; see Sect. I.4.4.
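
To see how such an attractor can mimic a cosmological constant, consider a shift-symmetric k-essence Lagrangian \(P(X)\), with \(X \equiv -\tfrac12 g^{\mu\nu}\partial_\mu\phi\,\partial_\nu\phi = \tfrac12\dot\phi^2\) on a homogeneous background (a mostly-plus metric signature is assumed in this sketch). The standard background quantities and sound speed are

\[ \rho = 2XP_{,X} - P, \qquad p = P, \qquad c_\text{s}^2 = \frac{P_{,X}}{P_{,X} + 2XP_{,XX}} . \]

Since \(\rho + p = 2XP_{,X}\), an exact \(w=-\,1\) with a rolling field (\(X\neq 0\)) requires \(P_{,X}=0\). At such a point \(\rho = -P = -p\) and, for \(P_{,XX}\neq 0\), \(c_\text{s}^2=0\): the background is indistinguishable from \(\varLambda \), yet the field still carries perturbations that can cluster.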

Beyond eliminating the cosmological constant as a mechanism for acceleration, measuring \(w>-\,1\) is not by itself very informative about the nature of dark energy. Essentially all classes of models can evolve with such an equation of state given appropriate initial conditions (which is not to say that any evolution history can be produced by any class of models). On the other hand, observing a phantom equation of state, \(w<-\,1\), at any one moment in time would be hugely informative about the nature of gravitational physics. It is well known that any such background made up of either a perfect fluid or a minimally coupled scalar field suffers from gradient instabilities, ghosts, or both (Dubovsky et al. 2006). Such an observation would therefore immediately imply that gravity is non-minimally coupled (and hence that there is a fifth force), that dark energy is not a perfect fluid, that dark energy interacts with other species, or that dynamical ghosts are not forbidden by nature, perhaps being stabilized by a mechanism such as ghost condensation (Arkani-Hamed et al. 2004b). Any of these would be a discovery as significant as excluding a cosmological constant.
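
The reason a phantom measurement is so informative can already be seen for a canonical, minimally coupled scalar field with potential \(V(\phi)\), for which

\[ \rho = \tfrac12\dot\phi^2 + V, \qquad p = \tfrac12\dot\phi^2 - V \quad\Longrightarrow\quad \rho + p = \dot\phi^2 \ge 0 . \]

With \(\rho>0\) this gives \(w\ge -1\) at all times. Reaching \(w<-\,1\) in this setup requires flipping the sign of the kinetic term, so that \(\rho + p = -\dot\phi^2 \le 0\), and a field with a wrong-sign kinetic term is precisely a ghost.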

In conclusion, we aim to measure w since it is the most direct way of disproving that acceleration is caused by a cosmological constant. However, if no significant deviation is detected, this does not imply that the cosmological constant is the mechanism for dark energy. The clustering properties must then be tested and found to be consistent with \(\varLambda \)CDM predictions.

I.4.3.2 Lessons from inflation

In all probability the observed late-time acceleration of the universe is not the first period of accelerated expansion that occurred during its evolution: the current standard model of cosmology incorporates a much earlier phase with \(\ddot{a}>0\), called inflation. Such a period provides a natural mechanism for generating several properties of the universe: spatial flatness, gross homogeneity and isotropy on scales beyond naive causal horizons and nearly scale-invariant initial fluctuations.

The first lesson to draw from inflation is that it cannot have been due to a pure cosmological constant. This is immediately clear since inflation actually ended and therefore there had to be some sort of time evolution. We can go even further: since de Sitter spacetime is static, no curvature perturbations are produced in this case (the fluctuations are just unphysical gauge modes) and therefore an exactly scale-invariant power spectrum would have necessitated an alternative mechanism.

The results obtained by the Planck collaboration from the first year of data imply that the initial spectrum of fluctuations is not scale invariant, but rather has a tilt given by \(n_\text {s} = 0.9608\pm 0.0054\) and is consistent with no running and no tensor modes (Planck Collaboration 2014a). This is consistent with the final results from WMAP (Hinshaw et al. 2013). It is surprisingly difficult to create this observed fluctuation spectrum in alternative scenarios that are strictly causal and only act on sub-horizon scales (Spergel and Zaldarriaga 1997; Scodeller et al. 2009).

Let us now translate what the measured properties of the initial power spectrum of fluctuations imply for an observer existing during the inflationary period. We will assume that inflation was driven by one of the simple models (i.e., with sound speed \(c_\text {s}=1\)). Following the analysis in Ilić et al. (2010), we notice that

\[ 1 + w = \frac{2}{3}\,\epsilon _H, \tag{I.4.1} \]

where \(\epsilon _H \equiv 2 M^2_{\mathrm {Pl}}(H'{/}H)^2\) and where the prime denotes a derivative with respect to the inflaton field.

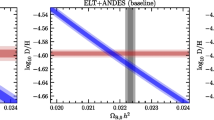

Fig. 1 The evolution of w as a function of the comoving scale k, using only the 5-year WMAP CMB data. Red and yellow are the 95% and 68% confidence regions for the LV formalism; blue and purple are the same for the flow-equation formalism. From the outside inward, the colored regions are red, yellow, blue, and purple. Image reproduced by permission from Ilić et al. (2010); copyright by APS

This equation of state is directly related to the tensor-to-scalar ratio through \(r \sim 24(1+w)\). Since no tensor modes have been detected thus far, no deviation from \(w=-\,1\) has been seen either. In fact the Planck 95% limit is \(r\lesssim 0.1\) implying that \(1+w\lesssim 0.04\). Moreover, the spectral tilt itself is also related to the rate of change of w,

where \(\eta _H \equiv 2 M^2_{\mathrm {Pl}} H''/H \). Thus, if \(n_\text {s}\ne 1\), then either \(\eta _H\ne 0\) or \(\epsilon _H\ne 0\), and consequently either \(w\ne -1\) or w is not constant at the pivot scale.

We can rewrite Eq. (I.4.1) as

Without tuning, it is natural for \(\eta _H\sim \mathcal {O}(\epsilon _H^2)\). However, classes of models exist where \(\eta _H\sim \epsilon _H\). Thus, given the observations of the scale dependence of the initial curvature fluctuations, we can conclude that \(1+w\) should lie between 0.005 and 0.04, which is well within the current experimental bounds on the DE equation of state and roughly at the limit of Euclid’s sensitivity. We have plotted the allowed values of w as a function of scale in Fig. 1.
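
A rough numerical sketch of this estimate follows. It assumes the standard first-order Hamilton–Jacobi slow-roll relation \(n_\text {s}-1 \simeq 2\eta _H - 4\epsilon _H\) together with \(1+w = 2\epsilon _H/3\); this is an illustrative assumption, not necessarily the exact form of the relations used by Ilić et al. (2010). Eliminating \(\epsilon _H\) gives \(1+w \simeq [2\eta _H + (1-n_\text {s})]/6\). The function name is hypothetical.

```python
def one_plus_w_from_tilt(n_s: float, eta_h: float) -> float:
    """1 + w at the pivot scale from the scalar tilt and eta_H.

    Assumes n_s - 1 = 2 eta_H - 4 eps_H (first-order slow roll)
    and 1 + w = (2/3) eps_H.
    """
    eps_h = (2.0 * eta_h - (n_s - 1.0)) / 4.0
    return 2.0 * eps_h / 3.0


n_s = 0.9608  # Planck central value quoted in the text
# "Natural" case: eta_H negligible (of order eps_H^2).
print(one_plus_w_from_tilt(n_s, 0.0))
# Tuned case: eta_H cancels the tilt, so w can sit arbitrarily close to -1.
print(one_plus_w_from_tilt(n_s, (n_s - 1.0) / 2.0))
```

With \(\eta _H\) set to zero the sketch gives \(1+w \approx 0.0065\), of the same order as the lower end of the range quoted above; choosing \(\eta _H = (n_\text {s}-1)/2\) makes \(1+w\) vanish, which is the cancellation between \(\eta _H\) and the tilt.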

We should note that there are classes of models in which the cancellation between \(\eta _H\) and the tilt in Eq. (I.4.3) is indeed natural, which is why one cannot give a lower limit on the amplitude of primordial gravitational waves, and why w can lie arbitrarily close to \(-\,1\). On the other hand, the observed period of inflation is probably in the middle of a long slow-roll phase, and by Eq. (I.4.2) such a cancellation would occur only at one moment in time. We have plotted the typical evolution of w in inflation in Fig. 2.

Although inflation is the only other physically motivated period of acceleration, it occurred at a very different energy scale, somewhere between 1 MeV and the GUT scale of \(10^{16}\) GeV, whereas the energy scale of dark energy is about \(10^{-3}\) eV. We should therefore be wary of pushing the analogy too far.
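
To give a sense of how large this gap is, here is a rough worked comparison, assuming (as is conventional) that each quoted energy scale is the fourth root of the corresponding energy density, \(\rho \sim E^4\):

\[ \frac{\rho _\text{inf}}{\rho _\text{DE}} \sim \left(\frac{E_\text{inf}}{10^{-3}\,\text{eV}}\right)^4 \in \left[\left(\frac{10^{6}\,\text{eV}}{10^{-3}\,\text{eV}}\right)^4,\ \left(\frac{10^{25}\,\text{eV}}{10^{-3}\,\text{eV}}\right)^4\right] = \left[10^{36},\ 10^{112}\right]. \]

The energy density during inflation thus exceeded today's dark-energy density by somewhere between about 36 and 112 orders of magnitude.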

Fig. 2 The complete evolution of w(N), from the flow-equation results accepted by the CMB likelihood. Inflation is made to end at \(N=0\), where \(w(N=0)=-\,1/3\), corresponding to \(\epsilon _H(N=0)=1\). For our choice of priors on the slow-roll parameters at \(N=0\), we find that w decreases rapidly towards \(-\,1\) (see inset) and stays close to it during the period when the observable scales leave the horizon (\(N\approx 40\)–60). Image reproduced by permission from Ilić et al. (2010); copyright by APS

I.4.3.3 When should we stop? Bayesian model comparison

In Sect. I.4.3.1, we explained that a measurement of the equation of state w can exclude some classes of models, including the cosmological constant of \(\varLambda \)CDM. However, most classes of models allow the equation of state to be arbitrarily close to that of vacuum energy, \(w=-\,1\), while still representing completely different physics. Since precision cannot be infinite, we need a criterion for deciding how well this property should be measured. As we showed in Sect. I.4.3.2 above, inflation provides an example of a period of acceleration that, had it occurred at late times, would have been judged consistent with \(w=-\,1\) given today’s constraints. We should therefore require a better measurement, but how much better?

We approach the answer to this question from the perspective of Bayesian evidence: at what precision does the non-detection of a deviation in the background expansion history signify that we should prefer the simpler null hypothesis \(w=-\,1\)?

In our Bayesian framework, the first model, the null hypothesis \(M_0\), posits that the background expansion is due to an extra component of energy density that has equation of state \(w=-\,1\) at all times. The other models assume that the dark energy is dynamical in a way that is well parametrized either by an arbitrary constant w (model \(M_1\)) or by a linear fit \(w(a)=w_0+(1-a) w_a\) (model \(M_2\)).
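
To make the three models concrete, here is a minimal sketch of the expansion rate each predicts, assuming a spatially flat universe with matter and dark energy only (radiation neglected) and an illustrative \(\varOmega _{m0}=0.3\); the function names and the sample \((w_0, w_a)\) values are hypothetical. For \(w(a)=w_0+(1-a)w_a\), integrating the continuity equation gives \(\rho _\text{DE}(a)/\rho _\text{DE,0} = a^{-3(1+w_0+w_a)}\exp [-3w_a(1-a)]\), and \(M_0\) and \(M_1\) are the nested special cases \((w_0,w_a)=(-1,0)\) and \(w_a=0\).

```python
import numpy as np


def de_density_ratio(a, w0=-1.0, wa=0.0):
    """rho_DE(a) / rho_DE(a=1) for w(a) = w0 + (1 - a) * wa.

    M0: (w0, wa) = (-1, 0); M1: wa = 0; M2: w0 and wa both free.
    """
    a = np.asarray(a, dtype=float)
    return a ** (-3.0 * (1.0 + w0 + wa)) * np.exp(-3.0 * wa * (1.0 - a))


def hubble_ratio(z, om=0.3, w0=-1.0, wa=0.0):
    """H(z)/H0 for a flat universe of matter plus (w0, wa) dark energy."""
    a = 1.0 / (1.0 + np.asarray(z, dtype=float))
    return np.sqrt(om * a ** -3 + (1.0 - om) * de_density_ratio(a, w0, wa))


z = np.array([0.0, 0.5, 1.0, 2.0])
models = {"M0": (-1.0, 0.0), "M1": (-0.9, 0.0), "M2": (-0.9, 0.3)}
for label, (w0, wa) in models.items():
    print(label, np.round(hubble_ratio(z, w0=w0, wa=wa), 4))
```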

We use the constant and linear parametrizations of w for two reasons. First, a constant w can be regarded as an effective quantity, averaged over redshift with the weighting appropriate to the observable (see Simpson and Bridle 2006). Second, precision targets for observations are conventionally phrased in terms of the figure of merit (FoM), given by \(1\big /\sqrt{|{\mathrm {Cov}}(w_0,w_a)|}\), where \(|\cdot |\) denotes the determinant of the \(w_0\)–\(w_a\) covariance matrix. We will therefore find a direct link between the model probability and the FoM. It would be an interesting exercise to repeat the calculations with a more general model, e.g., using principal component analysis (PCA), although we would expect to reach a similar conclusion.
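
The link between the FoM and the model probability can be sketched as follows, under simplifying assumptions that are ours, not the text's: the \((w_0,w_a)\) posterior is Gaussian and peaked exactly at \((-1,0)\), and the flat prior on \((w_0,w_a)\) has a total area much larger than the posterior. The Savage–Dickey ratio is then the prior area divided by the posterior peak density, \(B_{01} = A/(2\pi \sqrt{|\mathrm{Cov}|}) = A\cdot \mathrm{FoM}/(2\pi )\). The function names and the prior area of 4 (e.g., \(w_0\in [-2,0]\), \(w_a\in [-1,1]\)) are hypothetical.

```python
import numpy as np


def figure_of_merit(cov):
    """FoM = 1 / sqrt(det Cov(w0, wa)) for a 2x2 covariance matrix."""
    cov = np.asarray(cov, dtype=float)
    return 1.0 / np.sqrt(np.linalg.det(cov))


def ln_bayes_factor_2d(cov, prior_area):
    """Savage-Dickey ln B01 for LambdaCDM nested in (w0, wa) at (-1, 0).

    Assumes a Gaussian posterior peaked exactly at (-1, 0) and a flat
    prior of total area prior_area that is much wider than the posterior,
    so that B01 = prior_area / (2 pi sqrt(det Cov)) = prior_area * FoM / (2 pi).
    """
    return np.log(prior_area * figure_of_merit(cov) / (2.0 * np.pi))


# Target errors quoted earlier (0.01 on w0, 0.1 on wa); correlation ignored.
sigma_w0, sigma_wa, rho = 0.01, 0.1, 0.0
cov = [[sigma_w0**2, rho * sigma_w0 * sigma_wa],
       [rho * sigma_w0 * sigma_wa, sigma_wa**2]]
print(figure_of_merit(cov))
print(ln_bayes_factor_2d(cov, 4.0))
```

Because \(\ln B_{01}\) grows only logarithmically with the FoM, each further unit of evidence requires multiplying the FoM by a factor of about \(e\).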

Bayesian model comparison aims to compute the relative model probability

\[ \frac{P(M_0|d)}{P(M_1|d)} = \frac{P(d|M_0)}{P(d|M_1)}\,\frac{P(M_0)}{P(M_1)} = B_{01}\,\frac{P(M_0)}{P(M_1)}, \tag{I.4.4} \]

where we used Bayes’ theorem and where \(B_{01}\equiv P(d|M_0)/P(d|M_1)\) is called the Bayes factor. The Bayes factor is the amount by which our relative belief in the two models is modified by the data, with \(\ln B_{01} > 0\ (<0)\) indicating a preference for model 0 (model 1). Since model \(M_0\) is nested in \(M_1\) at the point \(w=-\,1\) and in model \(M_2\) at \((w_0=-\,1,w_a=0)\), we can use the Savage–Dickey (SD) density ratio (e.g. Trotta 2007a). Based on SD, the Bayes factor between the two models is just the ratio of the posterior density to the prior density at \(w=-\,1\) or at \((w_0=-\,1,w_a=0)\), marginalized over all other parameters.

Let us start by following Trotta et al. (2010) and consider the Bayes factor \(B_{01}\) between a cosmological constant model \(w=-\,1\) and a free but constant effective w. If we assume that the data are compatible with \(w_{{\mathrm {eff}}}=-\,1\) with an uncertainty \(\sigma \), then the Bayes factor in favor of a cosmological constant is given by

\[ B_{01} = \frac{\varDelta _+ + \varDelta _-}{\sqrt{2\pi }\,\sigma }\left[ \frac{1}{2}\left( \mathrm {erf}\frac{\varDelta _+}{\sqrt{2}\,\sigma } + \mathrm {erf}\frac{\varDelta _-}{\sqrt{2}\,\sigma }\right) \right] ^{-1}, \tag{I.4.5} \]

where for the evolving dark-energy model we have adopted a flat prior in the region \(-\,1 - \varDelta _{-} \le w_{{\mathrm {eff}}}\le -1+\varDelta _+\) and we have made use of the Savage–Dickey density ratio formula (see Trotta 2007a). The prior, of total width \(\varDelta = \varDelta _+ + \varDelta _-\), is best interpreted as a factor describing the predictivity of the dark-energy model under consideration. In what follows we will consider three benchmark models as alternatives to \(w=-\,1\):

-

Fluid-like: we assume that the acceleration is driven by a fluid whose background configuration satisfies the null energy condition (\(w_{{\mathrm {eff}}}\ge -1\)) but, as acceleration requires, violates the strong energy condition (\(w_{{\mathrm {eff}}}\le -1/3\)), i.e., we have that \(\varDelta _+ = 2/3, \varDelta _- = 0\).

-

Phantom: phantom models violate the null energy condition, i.e., are described by \(\varDelta _+ = 0, \varDelta _- > 0\), with the latter being possibly rather large.

-

Small departures: We assume that the equation of state is very close to that of vacuum energy, as seems to have been the case during inflation: \(\varDelta _+ = \varDelta _- = 0.01\).
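
The Bayes factor for these benchmarks can be evaluated with a short sketch. It assumes, as in the setup above, a Gaussian likelihood of width \(\sigma \) centred on \(w_{\mathrm {eff}}=-1\) and a flat prior on \(-1-\varDelta _-\le w_{\mathrm {eff}}\le -1+\varDelta _+\), so that the Savage–Dickey ratio is the prior width divided by the truncated-Gaussian normalization. The function names are hypothetical, and \(\varDelta _- = 10\) for the phantom case is an arbitrary illustrative choice, since the text only says it may be large. A bisection in \(\ln \sigma \) then finds the precision at which \(\ln B_{01}\) reaches the "strong" threshold of 5.

```python
import math


def bayes_factor(sigma, delta_plus, delta_minus):
    """Savage-Dickey B01 for w = -1 against a flat prior on w_eff.

    Prior: -1 - delta_minus <= w_eff <= -1 + delta_plus. Likelihood:
    Gaussian centred on w_eff = -1 with width sigma.
    """
    width = delta_plus + delta_minus
    x = math.sqrt(2.0) * sigma
    norm = 0.5 * (math.erf(delta_plus / x) + math.erf(delta_minus / x))
    return width / (math.sqrt(2.0 * math.pi) * sigma * norm)


def sigma_for_evidence(ln_b_target, delta_plus, delta_minus):
    """Largest sigma with ln B01 >= ln_b_target (bisection in log sigma).

    ln B01 decreases monotonically with sigma, so bisection is safe.
    """
    lo, hi = math.log(1e-10), math.log(1e2)
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if math.log(bayes_factor(math.exp(mid), delta_plus, delta_minus)) > ln_b_target:
            lo = mid
        else:
            hi = mid
    return math.exp(lo)


benchmarks = {
    "fluid-like": (2.0 / 3.0, 0.0),
    "phantom (hypothetical Delta_- = 10)": (0.0, 10.0),
    "small departures": (0.01, 0.01),
}
for name, (dp, dm) in benchmarks.items():
    print(f"{name}: sigma for ln B01 > 5 ~ {sigma_for_evidence(5.0, dp, dm):.2e}")
```

For \(\varDelta _\pm \gg \sigma \) the bracket in the Bayes factor tends to one (or to one half for a one-sided prior), and \(B_{01}\) grows as \(\varDelta /\sigma \): a more predictive model (smaller \(\varDelta \)) needs a proportionally smaller \(\sigma \) before \(w=-\,1\) is strongly preferred over it.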

A model with a large \(\varDelta \) is more generic and less predictive, and is therefore disfavored by the Occam’s razor of Bayesian model selection, see Eq. (I.4.5). According to Jeffreys’ scale for the strength of evidence, we have a moderate (strong) preference for the cosmological constant model for \(2.5< \ln B_{01} < 5.0\) (\(\ln B_{01}>5.0\)), corresponding, for equal prior odds, to posterior odds of 12:1–150:1 (above 150:1).
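
The conversion from \(\ln B_{01}\) to a verbal label and to posterior odds can be sketched as below. Only the two thresholds quoted in the text (2.5 and 5.0) are used; everything below "moderate" is lumped together, and the prior odds default to one. The function names are hypothetical.

```python
import math


def jeffreys_label(ln_b01):
    """Strength of evidence for model 0 using the thresholds in the text:
    moderate for 2.5 < ln B01 < 5.0, strong above 5.0."""
    if ln_b01 > 5.0:
        return "strong"
    if ln_b01 > 2.5:
        return "moderate"
    return "weaker than moderate"


def posterior_odds(ln_b01, prior_odds=1.0):
    """Posterior odds P(M0|d)/P(M1|d) = B01 * P(M0)/P(M1)."""
    return math.exp(ln_b01) * prior_odds


# Odds at the two thresholds: about 12:1 and about 148:1 (quoted as ~150:1).
for ln_b in (2.5, 5.0):
    print(ln_b, f"{posterior_odds(ln_b):.0f}:1")
for ln_b in (3.0, 6.0):
    print(ln_b, jeffreys_label(ln_b))
```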