Abstract

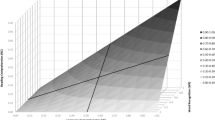

The Wechsler Intelligence Scale for Children-Fifth Edition (WISC-V; Wechsler 2014a) Technical and Interpretive Manual (Wechsler 2014b) devotes only a single page to the 10-subtest WISC-V primary battery across the entire 6 to 16 age range. Users are left to extrapolate the structure of the 10-subtest battery from the 16-subtest structure. As a result, the structure of the 10-subtest WISC-V primary battery remains largely uninvestigated, particularly at different points across the developmental period. Using principal axis factoring and the Schmid–Leiman orthogonalization procedure, the 10-subtest WISC-V primary structure was examined across four standardization sample age groups (ages 6–8, 9–11, 12–14, 15–16). Forced extraction of the publisher’s promoted five factors resulted in a trivial fifth factor at all ages except 15–16. At ages 6 to 14, the results suggested that the WISC-V contains the same four first-order factors as the prior WISC-IV (Verbal Comprehension, Perceptual Reasoning, Working Memory, Processing Speed; Wechsler 2003). These results suggest that interpreting the Visual Spatial and Fluid Reasoning indexes at ages 6 to 14 may be inappropriate because the Visual Spatial and Fluid Reasoning subtests fuse into a single factor. At ages 15–16, the five-factor structure was supported. Results also indicated that the WISC-V provides strong measurement of general intelligence and that clinical interpretation should reside primarily at that level. Regardless of whether a four- or five-factor index structure is emphasized, the group factors reflecting the WISC-V indexes do not account for a sufficient proportion of variance to warrant primary interpretive emphasis.
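As a rough illustration of the Schmid–Leiman orthogonalization named in the abstract, the sketch below apportions subtest variance between a general factor and residualized group factors. It is not the authors' analysis: the function name `schmid_leiman`, the four-subtest pattern matrix, and the second-order loadings are hypothetical values chosen only to show the arithmetic, and the first-order and second-order loadings are assumed to have been estimated already (for example, by principal axis factoring with an oblique rotation).

```python
import numpy as np


def schmid_leiman(pattern, second_order):
    """Orthogonalize a two-level factor solution (Schmid & Leiman, 1957).

    pattern      : (subtests x factors) first-order oblique pattern loadings
    second_order : (factors,) loading of each first-order factor on g
    Returns (general, group): subtest loadings on g and the residualized
    loadings on each group factor.
    """
    pattern = np.asarray(pattern, dtype=float)
    g = np.asarray(second_order, dtype=float).ravel()
    if pattern.ndim != 2 or pattern.shape[1] != g.size:
        raise ValueError("need one second-order loading per first-order factor")
    if np.any(np.abs(g) > 1):
        raise ValueError("second-order loadings must lie in [-1, 1]")
    # Variance a subtest shares with g flows through its first-order factors.
    general = pattern @ g
    # What remains of each first-order factor after g is removed.
    group = pattern * np.sqrt(1.0 - g ** 2)
    return general, group


# Hypothetical loadings (NOT WISC-V estimates): 4 subtests, 2 group factors.
P = np.array([[0.8, 0.0],
              [0.7, 0.0],
              [0.0, 0.6],
              [0.0, 0.5]])
g_load = np.array([0.9, 0.8])

general, group = schmid_leiman(P, g_load)

# Share of the common variance attributable to the general factor.
common_general = np.sum(general ** 2)
common_group = np.sum(group ** 2)
ecv_general = common_general / (common_general + common_group)

print("general loadings:", np.round(general, 3))
print("group loadings:\n", np.round(group, 3))
print("share of common variance due to g:", round(ecv_general, 3))
```

When the general factor's share of common variance dominates in this way, the group factors have little unique variance left, which is the pattern the abstract describes for the WISC-V index scores.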

References

Adams, K. M. (2000). Practical and ethical issues pertaining to test revisions. Psychological Assessment, 12, 281–286. doi:10.1037/1040-3590.12.3.281.

Alexandre, J. S., Morin, A., Arens, A. K., Antoine, T., & Herve, C. (2015). Exploring sources of construct-relevant multidimensionality in psychiatric measurement: a tutorial and illustration using the composite scale of morningness. International Journal of Methods in Psychiatric Research. Advance online publication. doi:10.1002/mpr.1485.

Beaujean, A. A. (2015a). John Carroll’s views on intelligence: bi-factor vs. higher-order models. Journal of Intelligence, 3, 121–136. doi:10.3390/jintelligence3040121.

Beaujean, A. A. (2015b). Adopting a new test edition: psychometric and practical considerations. Research and Practice in the Schools, 3, 51–57.

Beaujean, A. A. (2016). Reproducing the Wechsler Intelligence Scale for Children-Fifth Edition: factor model results. Journal of Psychoeducational Assessment, 34, 404–408. doi:10.1177/0734282916642679.

Bodin, D., Pardini, D. A., Burns, T. G., & Stevens, A. B. (2009). Higher order factor structure of the WISC–IV in a clinical neuropsychological sample. Child Neuropsychology, 15, 417–424.

Brown, T. A. (2015). Confirmatory factor analysis for applied research (2nd ed.). New York: Guilford.

Brunner, M., Nagy, G., & Wilhelm, O. (2012). A tutorial on hierarchically structured constructs. Journal of Personality, 80, 796–846. doi:10.1111/j.1467-6494.2011.00749.x.

Canivez, G. L. (2008). Orthogonal higher-order factor structure of the Stanford–Binet Intelligence Scales for children and adolescents. School Psychology Quarterly, 23, 533–541. doi:10.1037/a0012884.

Canivez, G. L. (2010). Review of the Wechsler Adult Intelligence Test–Fourth Edition. In R. A. Spies, J. F. Carlson, & K. F. Geisinger (Eds.), The eighteenth mental measurements yearbook (pp. 684–688). Lincoln: Buros Institute of Mental Measurements.

Canivez, G. L. (2014a). Review of the Wechsler Preschool and Primary Scale of Intelligence–Fourth Edition. In J. F. Carlson, K. F. Geisinger, & J. L. Jonson (Eds.), The nineteenth mental measurements yearbook (pp. 732–737). Lincoln: Buros Institute of Mental Measurements.

Canivez, G. L. (2014b). Construct validity of the WISC–IV with a referred sample: direct versus indirect hierarchical structures. School Psychology Quarterly, 29, 38–51. doi:10.1037/spq0000032.

Canivez, G. L. (2016). Bifactor modeling in construct validation of multifactored tests: implications for understanding multidimensional constructs and test interpretation. In K. Schweizer & C. DiStefano (Eds.), Principles and methods of test construction: standards and recent advancements (pp. 247–271). Göttingen: Hogrefe.

Canivez, G. L., & Kush, J. C. (2013). WISC–IV and WAIS–IV structural validity: alternate methods, alternate results. Commentary on Weiss et al. (2013a) and Weiss et al. (2013b). Journal of Psychoeducational Assessment, 31, 157–169. doi:10.1177/0734282913478036.

Canivez, G. L., & McGill, R. J. (2016). Factor structure of the Differential Ability Scales–Second Edition: exploratory and hierarchical factor analyses with the core subtests. Psychological Assessment, 28, 1475–1488. doi:10.1037/pas0000279.

Canivez, G. L., & Watkins, M. W. (2010a). Investigation of the factor structure of the Wechsler Adult Intelligence Scale–Fourth Edition (WAIS–IV): exploratory and higher-order factor analyses. Psychological Assessment, 22, 827–836. doi:10.1037/a0020429.

Canivez, G. L., & Watkins, M. W. (2010b). Exploratory and higher-order factor analyses of the Wechsler Adult Intelligence Scale–Fourth Edition (WAIS–IV) adolescent subsample. School Psychology Quarterly, 25, 223–235. doi:10.1037/a0022046.

Canivez, G. L., & Watkins, M. W. (2016). Review of the Wechsler Intelligence Scale for Children–Fifth Edition: Critique, commentary, and independent analyses. In A. S. Kaufman, S. E. Raiford, & D. L. Coalson (Authors), Intelligent testing with the WISC–V (pp. 683–702). Hoboken, NJ: Wiley.

Canivez, G. L., Konold, T. R., Collins, J. M., & Wilson, G. (2009). Construct validity of the Wechsler Abbreviated Scale of Intelligence and Wide Range Intelligence Test: convergent and structural validity. School Psychology Quarterly, 24, 252–265. doi:10.1037/a0018030.

Canivez, G. L., Watkins, M. W., James, T., James, K., & Good, R. (2014). Incremental validity of WISC–IVUK factor index scores with a referred Irish sample: predicting performance on the WIAT–IIUK. British Journal of Educational Psychology, 84, 667–684. doi:10.1111/bjep.12056.

Canivez, G. L., Watkins, M. W., & Dombrowski, S. C. (2016a). Factor structure of the Wechsler Intelligence Scale for Children–Fifth Edition: exploratory factor analyses with the 16 primary and secondary subtests. Psychological Assessment, 28, 975–986. doi:10.1037/pas0000238.

Canivez, G. L., Watkins, M. W., & Dombrowski, S. C. (2016b). Structural validity of the Wechsler Intelligence Scale for Children–Fifth Edition: confirmatory factor analyses with the 16 primary and secondary subtests. Psychological Assessment. Advance online publication. doi:10.1037/pas0000358.

Carretta, T. R., & Ree, M. J. (2001). Pitfalls of ability research. International Journal of Selection and Assessment, 9, 325–335.

Carroll, J. B. (1993). Human cognitive abilities. Cambridge: Cambridge University Press.

Carroll, J. B. (1995). On methodology in the study of cognitive abilities. Multivariate Behavioral Research, 30, 429–452. doi:10.1207/s15327906mbr3003_6.

Carroll, J. B. (1998). Human cognitive abilities: a critique. In J. J. McArdle & R. W. Woodcock (Eds.), Human cognitive abilities in theory and practice (pp. 5–23). Mahwah: Erlbaum.

Carroll, J. B. (2003). The higher-stratum structure of cognitive abilities: current evidence supports g and about ten broad factors. In H. Nyborg (Ed.), The scientific study of general intelligence: tribute to Arthur R. Jensen (pp. 5–21). New York: Pergamon.

Cattell, R. B. (1966). The scree test for the number of factors. Multivariate Behavioral Research, 1, 245–276. doi:10.1207/s15327906mbr0102_10.

Cattell, R. B. (1987). Intelligence: its structure, growth, and action. New York: Elsevier.

Cattell, R. B., & Horn, J. L. (1978). A check on the theory of fluid and crystallized intelligence with description of new subtest designs. Journal of Educational Measurement, 15, 139–164. doi:10.1111/j.1745-3984.1978.tb00065.x.

Chen, F. F., Hayes, A., Carver, C. S., Laurenceau, J.-P., & Zhang, Z. (2012). Modeling general and specific variance in multifaceted constructs: a comparison of the bifactor model to other approaches. Journal of Personality, 80, 219–251. doi:10.1111/j.1467-6494.2011.00739.x.

Chen, H., Zhang, O., Raiford, S. E., Zhu, J., & Weiss, L. G. (2015). Factor invariance between genders on the Wechsler Intelligence Scale for Children–Fifth Edition. Personality and Individual Differences, 86, 1–5. doi:10.1016/j.paid.2015.05.020.

Child, D. (2006). The essentials of factor analysis (3rd ed.). New York: Continuum.

Deary, I. J. (2013). Intelligence. Current Biology, 23, 673–676. doi:10.1016/j.cub.2013.07.021.

DiStefano, C., & Dombrowski, S. C. (2006). Investigating the theoretical structure of the Stanford–Binet–Fifth Edition. Journal of Psychoeducational Assessment, 24, 123–136. doi:10.1177/0734282905285244.

Dombrowski, S. C. (2013). Investigating the structure of the WJ–III cognitive at school age. School Psychology Quarterly, 28, 154–169. doi:10.1037/spq0000010.

Dombrowski, S. C. (2014a). Exploratory bifactor analysis of the WJ–III cognitive in adulthood via the Schmid–Leiman procedure. Journal of Psychoeducational Assessment, 32, 330–341. doi:10.1177/0734282913508243.

Dombrowski, S. C. (2014b). Investigating the structure of the WJ–III cognitive in early school age through two exploratory bifactor analysis procedures. Journal of Psychoeducational Assessment, 32, 483–494. doi:10.1177/0734282914530838.

Dombrowski, S. C. (2015). Psychoeducational assessment and report writing. New York: Springer Science.

Dombrowski, S. C., & Watkins, M. W. (2013). Exploratory and higher order factor analysis of the WJ–III full test battery: a school aged analysis. Psychological Assessment, 25, 442–455. doi:10.1037/a0031335.

Dombrowski, S. C., Ambrose, D. A., & Clinton, A. (2007). Dogmatic insularity in learning disabilities diagnosis and the critical need for a philosophical analysis. International Journal of Special Education, 22(1), 3–10.

Dombrowski, S. C., Watkins, M. W., & Brogan, M. J. (2009). An exploratory investigation of the factor structure of the Reynolds Intellectual Assessment Scales (RIAS). Journal of Psychoeducational Assessment, 27, 494–507. doi:10.1177/0734282909333179.

Dombrowski, S. C., Canivez, G. L., Watkins, M. W., & Beaujean, A. (2015). Exploratory bifactor analysis of the Wechsler Intelligence Scale for Children–Fifth Edition with the 16 primary and secondary subtests. Intelligence, 53, 194–201. doi:10.1016/j.intell.2015.10.009.

Dombrowski, S. C., McGill, R. J., & Canivez, G. L. (2016). Exploratory and hierarchical factor analysis of the WJ IV cognitive at school age. Psychological Assessment. Advance online publication. doi:10.1037/pas0000350.

Fabrigar, L. R., Wegener, D. T., MacCallum, R. C., & Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4, 272–299. doi:10.1037/1082-989X.4.3.272.

Frazier, T. W., & Youngstrom, E. A. (2007). Historical increase in the number of factors measured by commercial tests of cognitive ability: are we overfactoring? Intelligence, 35, 169–182. doi:10.1016/j.intell.2006.07.002.

Gignac, G. E. (2005). Revisiting the factor structure of the WAIS–R: insights through nested factor modeling. Assessment, 12, 320–329. doi:10.1177/1073191105278118.

Gignac, G. E. (2006). The WAIS–III as a nested factors model: a useful alternative to the more conventional oblique and higher-order models. Journal of Individual Differences, 27, 73–86. doi:10.1027/1614-0001.27.2.73.

Gignac, G. (2008). Higher-order models versus direct hierarchical models: g as superordinate or breadth factor? Psychology Science Quarterly, 50, 21–43.

Gignac, G. E., & Watkins, M. W. (2013). Bifactor modeling and the estimation of model-based reliability in the WAIS–IV. Multivariate Behavioral Research, 48, 639–662. doi:10.1080/00273171.2013.804398.

Glutting, J. J., Youngstrom, E. A., Ward, T., Ward, S., & Hale, R. (1997). Incremental efficacy of WISC–III factor scores in predicting achievement: what do they tell us? Psychological Assessment, 9, 295–301.

Glutting, J. J., Watkins, M. W., Konold, T. R., & McDermott, P. A. (2006). Distinctions without a difference: the utility of observed versus latent factors from the WISC–IV in estimating reading and math achievement on the WIAT–II. Journal of Special Education, 40, 103–114. doi:10.1177/00224669060400020101.

Gorsuch, R. L. (1983). Factor analysis (2nd ed.). Hillsdale: Erlbaum.

Gustafsson, J. E., & Snow, R. E. (1997). Ability profiles. In R. F. Dillon (Ed.), Handbook on testing (pp. 107–135). Westport: Greenwood Press.

Horn, J. L. (1965). A rationale and test for the number of factors in factor analysis. Psychometrika, 30, 179–185.

Horn, J. L. (1991). Measurement of intellectual capabilities: A review of theory. In K. S. McGrew, J. K. Werder & R. W. Woodcock (Eds.), Woodcock-Johnson technical manual (Rev. ed., pp. 197–232). Itasca, IL: Riverside.

Horn, J. L., & Blankson, A. N. (2012). Foundations for better understanding of cognitive abilities. In D. P. Flanagan & P. L. Harrison (Eds.), Contemporary intellectual assessment: theories, tests, and issues (3rd ed., pp. 73–98). New York: Guilford.

Horn, J. L., & Cattell, R. B. (1966). Refinement and test of the theory of fluid and crystallized general intelligence. Journal of Educational Psychology, 57, 253–270.

Jensen, A. R. (1998). The g factor: the science of mental ability. Westport: Praeger.

Kaiser, H. F. (1960). The application of electronic computers to factor analysis. Educational and Psychological Measurement, 20, 141–151. doi:10.1177/001316446002000116.

Kaufman, A. S. (1994). Intelligent testing with the WISC–III. New York: Wiley.

Kline, R. B. (2011). Principles and practice of structural equation modeling (3rd ed.). New York: Guilford.

Kline, R. B. (2016). Principles and practice of structural equation modeling (4th ed.). New York: Guilford.

Le, H., Schmidt, F. L., Harter, J. K., & Lauver, K. J. (2010). The problem of empirical redundancy of constructs in organizational research: an empirical investigation. Organizational Behavior and Human Decision Processes, 112, 112–125. doi:10.1016/j.obhdp.2010.02.003.

Lubinski, D. (2000). Scientific and social significance of assessing individual differences: “sinking shafts at a few critical points.” Annual Review of Psychology, 51, 405–444. doi:10.1146/annurev.psych.51.1.405.

Luciana, M., Conklin, H. M., Hooper, C. J., & Yarger, R. S. (2005). The development of nonverbal working memory and executive control processes in adolescents. Child Development, 76, 697–712.

McClain, A. L. (1996). Hierarchical analytic methods that yield different perspectives on dynamics: aids to interpretation. Advances in Social Science Methodology, 4, 229–240. doi:10.1177/0734282915624293.

Nelson, J. M., & Canivez, G. L. (2012). Examination of the structural, convergent, and incremental validity of the Reynolds Intellectual Assessment Scales (RIAS) with a clinical sample. Psychological Assessment, 24, 129–140. doi:10.1037/a0024878.

Nelson, J. M., Canivez, G. L., Lindstrom, W., & Hatt, C. (2007). Higher-order exploratory factor analysis of the Reynolds Intellectual Assessment Scales with a referred sample. Journal of School Psychology, 45, 439–456. doi:10.1016/j.jsp.2007.03.003.

Nelson, J. M., Canivez, G. L., & Watkins, M. W. (2013). Structural and incremental validity of the Wechsler Adult Intelligence Scale–Fourth Edition (WAIS–IV) with a clinical sample. Psychological Assessment, 25, 618–630. doi:10.1037/a0032086.

Oakland, T., Douglas, S., & Kane, H. (2016). Top ten standardized tests used internationally with children and youth by school psychologists in 64 countries: a 24-year follow-up study. Journal of Psychoeducational Assessment, 34, 166–176.

Ree, M. J., Carretta, T. R., & Green, M. T. (2003). The ubiquitous role of g in training. In H. Nyborg (Ed.), The scientific study of general intelligence: tribute to Arthur R. Jensen (pp. 262–274). New York: Pergamon Press.

Reise, S. P. (2012). The rediscovery of bifactor measurement models. Multivariate Behavioral Research, 47, 667–696. doi:10.1080/00273171.2012.715555.

Reise, S. P., Bonifay, W. E., & Haviland, M. G. (2013). Scoring and modeling psychological measures in the presence of multidimensionality. Journal of Personality Assessment, 95, 129–140. doi:10.1080/00223891.2012.725437.

Rodriguez, A., Reise, S. P., & Haviland, M. G. (2016). Evaluating bifactor models: calculating and interpreting statistical indices. Psychological Methods, 21, 137–150. doi:10.1037/met0000045.

Schmid, J., & Leiman, J. M. (1957). The development of hierarchical factor solutions. Psychometrika, 22, 53–61. doi:10.1007/BF02289209.

Spearman, C. (1927). The abilities of man. New York: Cambridge University Press.

Strauss, E., Spreen, O., & Hunter, M. (2000). Implications of test revisions for research. Psychological Assessment, 12, 237–244. doi:10.1037/1040-3590.12.3.237.

Thompson, B. (2004). Exploratory and confirmatory factor analysis: understanding concepts and applications. Washington, DC: American Psychological Association.

Velicer, W. F. (1976). Determining the number of components from the matrix of partial correlations. Psychometrika, 41, 321–327. doi:10.1007/BF02293557.

Watkins, M. W. (2006). Orthogonal higher-order structure of the Wechsler Intelligence Scale for Children–Fourth Edition. Psychological Assessment, 18, 123–125. doi:10.1037/1040-3590.18.1.123.

Watkins, M. W. (2010). Structure of the Wechsler Intelligence Scale for Children–Fourth Edition among a national sample of referred students. Psychological Assessment, 22, 782–787. doi:10.1037/a0020043.

Watkins, M. W. (2013). Omega. [Computer software]. Phoenix, AZ: Ed & Psych Associates.

Watkins, M. W., & Beaujean, A. A. (2014). Bifactor structure of the Wechsler Preschool and Primary Scale of Intelligence–Fourth edition. School Psychology Quarterly, 29, 52–63. doi:10.1037/spq0000038.

Watkins, M. W., Wilson, S. M., Kotz, K. M., Carbone, M. C., & Babula, T. (2006). Factor structure of the Wechsler Intelligence Scale for Children–Fourth Edition among referred students. Educational and Psychological Measurement, 66, 975–983. doi:10.1177/0013164406288168.

Watkins, M. W., Canivez, G. L., James, T., James, K., & Good, R. (2013). Construct validity of the WISC–IVUK with a large referred Irish sample. International Journal of School & Educational Psychology, 1, 102–111. doi:10.1080/21683603.2013.794439.

Wechsler, D. (2003). Wechsler Intelligence Scale for Children–Fourth Edition. San Antonio, TX: The Psychological Corporation.

Wechsler, D. (2014a). Wechsler Intelligence Scale for Children–Fifth Edition. San Antonio: NCS Pearson.

Wechsler, D. (2014b). Wechsler Intelligence Scale for Children–Fifth Edition technical and interpretive manual. San Antonio: NCS Pearson.

Wechsler, D. (2014c). Technical and interpretive manual supplement: special group validity studies with other measures and additional tables. San Antonio: NCS Pearson.

Weiner, I. B. (1989). On competence and ethicality in psychodiagnostic assessment. Journal of Personality Assessment, 53, 827–831. doi:10.1207/s15327752jpa5304_18.

Weiss, L. G., Keith, T. Z., Zhu, J., & Chen, H. (2013b). WISC-IV and clinical validation of the four- and five-factor interpretative approaches. Journal of Psychoeducational Assessment, 31, 114–131.

Wolff, H. G., & Preising, K. (2005). Exploring item and higher order factor structure with the Schmid-Leiman solution: syntax codes for SPSS and SAS. Behavior Research Methods, 37, 48–58.

Yuan, K. H., & Chan, W. (2005). On nonequivalence of several procedures of structural equation modeling. Psychometrika, 70, 791–798. doi:10.1007/s11336-001-0930-9.

Zinbarg, R. E., Revelle, W., Yovel, I., & Li, W. (2005). Cronbach’s alpha, Revelle’s beta, and McDonald’s omega h: their relations with each other and two alternative conceptualizations of reliability. Psychometrika, 70, 123–133. doi:10.1007/s11336-003-0974-7.

Zinbarg, R. E., Yovel, I., Revelle, W., & McDonald, R. P. (2006). Estimating generalizability to a latent variable common to all of a scale’s indicators: a comparison of estimators for ωh. Applied Psychological Measurement, 30, 121–144. doi:10.1177/0146621605278814.

Zoski, K. W., & Jurs, S. (1996). An objective counterpart to the visual scree test for factor analysis: the standard error scree. Educational and Psychological Measurement, 56, 443–451. doi:10.1177/0013164496056003006.

Ethics declarations

Conflict of Interest

Stefan Dombrowski, Gary Canivez, and Marley Watkins declare that they have no conflict of interest.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Electronic supplementary material

ESM 1

(DOCX 86 kb)

About this article

Cite this article

Dombrowski, S.C., Canivez, G.L. & Watkins, M.W. Factor Structure of the 10 WISC-V Primary Subtests Across Four Standardization Age Groups. Contemp School Psychol 22, 90–104 (2018). https://doi.org/10.1007/s40688-017-0125-2

DOI: https://doi.org/10.1007/s40688-017-0125-2