Abstract

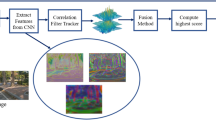

Visual object tracking is one of the most important and challenging research topics in computer vision, and it is relevant to many real-world applications. Recently, discriminative correlation filters (DCF) have been shown to address the problems of visual object tracking efficiently. So far, however, only single-resolution feature maps have been used in DCF, and because of this limitation the potential of DCF has not been fully exploited. Moreover, convolutional features have demonstrated better performance for visual tracking than histogram of oriented gradients (HOG) features and color features. Based on these facts, we propose collaborative learning based on multi-resolution feature maps for DCF, employing convolutional features. The confidence score, which represents the location of the target object, is selected from several candidates based on certain rules. In addition, continuous filters are trained to handle variations in the appearance of the target. Extensive experimental results on the VOT2015 and OTB-100 benchmark datasets show that the proposed algorithm performs favorably against state-of-the-art tracking algorithms.
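For readers unfamiliar with DCF, the following is a minimal sketch of a generic single-channel correlation filter, learned in closed form in the Fourier domain. It is not the method proposed in this paper: it works on raw intensities at a single resolution and uses neither convolutional features, multi-resolution feature maps, nor continuous filters. The function names and the parameters `sigma` and `lam` are illustrative assumptions.

```python
import numpy as np

def gaussian_label(shape, sigma=2.0):
    """Desired filter response: a Gaussian peak centred on the patch."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    cy, cx = h // 2, w // 2
    return np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2.0 * sigma ** 2))

def train_filter(patch, label, lam=1e-2):
    """Closed-form ridge regression in the Fourier domain (single channel)."""
    X = np.fft.fft2(patch)
    Y = np.fft.fft2(label)
    # lam is the regularization weight; it keeps the division well conditioned.
    return (Y * np.conj(X)) / (X * np.conj(X) + lam)

def detect(filt, patch):
    """Return the confidence map and the (row, col) position of its peak."""
    response = np.real(np.fft.ifft2(filt * np.fft.fft2(patch)))
    peak = np.unravel_index(np.argmax(response), response.shape)
    return response, tuple(int(i) for i in peak)

# Usage: train on one patch, then locate the same content after a shift.
rng = np.random.default_rng(0)
patch = rng.standard_normal((64, 64))          # stand-in for a target patch
filt = train_filter(patch, gaussian_label(patch.shape))
shifted = np.roll(patch, (3, -5), axis=(0, 1))  # circular shift: 3 down, 5 left
_, peak = detect(filt, shifted)
print(peak)  # peak moves from the centre by the same displacement
```

The peak of the confidence map plays the role of the target location. In this sketch there is only one candidate map, whereas the abstract describes selecting the confidence score from several candidates.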

Additional information

Recommended by Associate Editor Sung Jin Yoo under the direction of Editor Euntai Kim. This work was supported by the Human Resources Program in Energy Technology of the Korea Institute of Energy Technology Evaluation and Planning (KETEP), granted financial resources from the Ministry of Trade, Industry & Energy, Republic of Korea (No. 20154030200670), and by the Energy Efficiency & Resources Core Technology Program of KETEP, granted financial resources from the Ministry of Trade, Industry & Energy, Republic of Korea (No. 20151110200040).

Suryo Adhi Wibowo received his B.S. and M.S. degrees in Telecommunication Engineering from Telkom Institute of Technology, Indonesia, in 2009 and 2012, respectively. He is currently a Ph.D. candidate at the Department of Electrical and Computer Engineering, Pusan National University, Busan, Korea. His research interests include intelligent systems, computer vision, computer graphics, virtual reality, and machine learning.

Hansoo Lee received his B.S. and M.S. degrees in Electrical and Computer Engineering from Pusan National University, Busan, Korea, in 2010 and 2012, respectively. He is currently a Ph.D. candidate at the Department of Electrical and Computer Engineering, Pusan National University, Busan, Korea. His research interests include intelligent systems, data mining, prediction, and deep learning.

Eun Kyeong Kim received her B.S. and M.S. degrees in Electrical and Computer Engineering from Pusan National University, Busan, Korea, in 2014 and 2016, respectively. She is currently a Ph.D. candidate at the Department of Electrical and Computer Engineering, Pusan National University, Busan, Korea. Her research interests include intelligent systems, object recognition, robot vision, and image processing.

Sungshin Kim received his B.S. and M.S. degrees in Electrical Engineering from Yonsei University, Seoul, Korea, in 1984 and 1986, respectively, and his Ph.D. degree in Electrical and Computer Engineering from Georgia Institute of Technology, Atlanta, in 1996. He is currently a Professor in the School of Electrical and Computer Engineering, Pusan National University, Busan, Korea. His research interests include intelligent systems, fault diagnosis, deep learning, and data mining.

About this article

Cite this article

Wibowo, S.A., Lee, H., Kim, E.K. et al. Collaborative Learning based on Convolutional Features and Correlation Filter for Visual Tracking. Int. J. Control Autom. Syst. 16, 335–349 (2018). https://doi.org/10.1007/s12555-017-0062-x

DOI: https://doi.org/10.1007/s12555-017-0062-x