Abstract

Background

Hospital performance on the 30-day hospital-wide readmission (HWR) metric, as calculated by the Centers for Medicare and Medicaid Services (CMS), is currently reported as a quality measure. Focusing on patient-level factors alone may provide an incomplete picture of readmission risk at the hospital level and may not fully explain variations in hospital readmission rates.

Objective

To evaluate and quantify hospital-level characteristics that track with hospital performance on the current HWR metric.

Design

Retrospective cohort study.

Setting/Patients

A total of 4785 US hospitals.

Metrics

We linked publicly available data on individual hospitals published by CMS on patient-level adjusted 30-day HWR rates from July 1, 2011, through June 30, 2014, to the 2014 American Hospital Association annual survey. The primary outcome was performance in the worst CMS-calculated HWR quartile. Primary hospital-level exposure variables were defined as: size (total number of beds), safety net status (top quartile of disproportionate share), academic status [member of the Association of American Medical Colleges (AAMC)], National Cancer Institute Comprehensive Cancer Center (NCI-CCC) status, and hospital services offered (e.g., transplant, hospice, emergency department). Multilevel regression was used to evaluate the association between 30-day HWR and the hospital-level factors.

Results

Hospital-level characteristics significantly associated with performing in the worst CMS-calculated HWR quartile included: safety net status [adjusted odds ratio (aOR) 1.99, 95% confidence interval (95% CI) 1.61–2.45, p < 0.001], large size (> 400 beds, aOR 1.42, 95% CI 1.07–1.90, p = 0.016), AAMC alone status (aOR 1.95, 95% CI 1.35–2.83, p < 0.001), and AAMC plus NCI-CCC status (aOR 5.16, 95% CI 2.58–10.31, p < 0.001). Hospitals with more critical care beds (aOR 1.26, 95% CI 1.02–1.56, p = 0.033), those with transplant services (aOR 2.80, 95% CI 1.48–5.31, p = 0.001), and those with emergency room services (aOR 3.37, 95% CI 1.12–10.15, p = 0.031) demonstrated significantly worse HWR performance. Hospice service (aOR 0.64, 95% CI 0.50–0.82, p < 0.001) and having a higher proportion of total discharges being surgical cases (aOR 0.62, 95% CI 0.50–0.76, p < 0.001) were associated with better performance.

Limitation

The study approach was not intended to be an alternate readmission metric to compete with the existing CMS metric, which would require a re-examination of patient-level data combined with hospital-level data.

Conclusion

A number of hospital-level characteristics (such as academic tertiary care center status) were significantly associated with worse performance on the CMS-calculated HWR metric, which may have important health policy implications. Until the reasons for readmission variability can be addressed, reporting the current HWR metric as an indicator of hospital quality should be reevaluated.

INTRODUCTION

In efforts to improve clinical outcomes related to patient safety and care quality for hospitalized patients, the Centers for Medicare and Medicaid Services (CMS) have tied hospital reimbursement to performance on 30-day condition-specific readmission rates since 2012. These condition-specific readmission rates have been publicly reported, are often considered markers of hospital quality,1 and more recently have been incorporated into the CMS composite of overall hospital quality (star ratings) to help consumers make informed decisions about where to get their health care.2 Policy makers have continued to expand the catalog of publicly reported readmission metrics to now include an adjusted all-cause 30-day hospital-wide readmissions (HWR) metric. This metric is intended to capture all-condition, unplanned 30-day readmissions at a hospital, thereby providing a broad indication of a hospital’s quality of care.3

To profile differences in hospital performance on the HWR metric, CMS uses a two-step approach commonly used to calculate and report other quality metrics: (1) estimating effects of patient characteristics (e.g., age, clinical diagnoses) on the outcome in question using within-hospital estimates, unconfounded with differences in hospital quality; (2) removing effects of patient characteristics on comparisons between hospitals, ideally leaving only hospital quality effects.4–6 Although this approach has been used historically for assessing hospital performance on other quality metrics, such as mortality, models using patient-level factors have performed relatively poorly in predicting readmissions.6 Broader social, environmental, community, and medical factors contribute more to readmission risk than to mortality risk. Consequently, focusing on patient-level factors may provide an incomplete picture of readmission risk at the hospital level, and hospital characteristics such as larger size, academic status, socioeconomic status, and community characteristics are potentially important causes of variations in hospital readmission rates.7–9

As an exploratory analysis, we sought to extend evaluations of hospital-level factors to the hospital-wide readmission metric, which is featured prominently in the CMS Star rating score. Our overall goal was not to generate an alternate readmission metric to compete with the existing CMS metric; that exercise would require a re-examination of patient-level data combined with hospital-level data. Rather, we sought to characterize how performance on the CMS-calculated HWR metric may be associated with hospital-level factors including size (defined by the total number of beds), safety net status [defined by the disproportionate share hospital (DSH) proportion], academic status [defined as member of the Association of American Medical Colleges (AAMC)], and National Cancer Institute Comprehensive Cancer Center (NCI-CCC) status. We also sought to examine whether offering particular service lines (e.g., transplant, psychiatric, critical care, emergency, hospice services)—often features of larger academic medical centers that have not been previously examined in the context of readmissions—tracks with hospital performance on the HWR metric.

METHODS

Study Design

We analyzed publicly available national data published by CMS from July 1, 2011, through June 30, 2014, which provide adjusted 30-day hospital-wide readmission rates at the hospital level using cited methodology.10 We linked these data with a cohort of US hospitals provided by the American Hospital Association (AHA), which has been previously used in readmissions research,11,12 and contained cross-sectional, hospital-level statistics by year on hospital characteristics, as well as some patient sociodemographic and outcome data. The data set analyzed included 4785 hospitals in total, observed for 1 year as reported in 2014. The objective of using this cross-sectional data set was to create a predictively valid regression model best fit to analyze 30-day hospital-wide readmission rates, which have already been adjusted for patient factors. This study was approved by the Johns Hopkins Medicine Institutional Review Board.
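As a rough illustration of the linkage step, the sketch below joins CMS readmission records to AHA survey records on a shared hospital identifier. The field name `provider_id`, the record layout, and all values are assumptions made for illustration; the text does not state which key was used for linkage.

```python
# Hypothetical records; "provider_id" is an assumed linkage key and the
# values are invented for illustration only.
cms_hwr = [
    {"provider_id": "A001", "hwr_rate": 15.9},
    {"provider_id": "A002", "hwr_rate": 14.7},
    {"provider_id": "A003", "hwr_rate": 16.4},
]
aha_survey = [
    {"provider_id": "A001", "beds": 120, "state": "MD"},
    {"provider_id": "A003", "beds": 640, "state": "NY"},
    {"provider_id": "A004", "beds": 75, "state": "TX"},
]


def link_hospitals(cms_rows, aha_rows, key="provider_id"):
    """Inner-join CMS rows to AHA rows; hospitals missing from either source are dropped."""
    aha_by_id = {row[key]: row for row in aha_rows}
    linked = []
    for row in cms_rows:
        match = aha_by_id.get(row[key])
        if match is not None:
            # Combine survey characteristics with the CMS readmission rate.
            linked.append({**match, **row})
    return linked


linked = link_hospitals(cms_hwr, aha_survey)
for hospital in linked:
    print(hospital)
```

An inner join is only one possible choice; keeping unmatched hospitals and flagging them would be an equally reasonable design when the extent of missing data is itself of interest.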

Data Management

Based on prior research, we started with the hypothesis that readmissions were a function of academic status, cancer center status, size, and socioeconomic status.7,13–15 We then built upon this model, using AHA variables to contribute covariates that fit well within the model while controlling for hypothesized covariates (e.g., variables that may account for an association between readmissions and AAMC status or bed size). All authors discussed the AHA variables and selected by consensus those hypothesized to be potentially meaningful in predicting readmissions related to types of services offered, provided that they did not supply overlapping information. At the hospital level, cross-sectional data were managed across 1 year, and 36 explanatory variables were explored as potentially linked to 30-day readmission. First, hospital status as an AAMC member was explored as a dichotomous covariate. Second, all hospitals and AAMCs were stratified by status as an NCI-CCC. Third, to evaluate socioeconomic status, hospitals were categorized according to the DSH proportion of patients, both continuously and by quartile, using data from the Healthcare Cost Report Information System (HCRIS).16 The Medicare DSH adjustment applies to hospitals that serve a significantly disproportionate number of low-income patients, defined by the disproportionate patient percentage.17 Safety net hospitals were defined as hospitals in the highest quartile of the DSH proportion.

Fourth, hospital size in terms of total bed count from HCRIS was explored both continuously and in categorical ranges of bed totals (1–200; 201–400; 401+), as has been done previously.7,16 Fifth, transplant services were explored in terms of separate services offered (e.g., heart, lung, liver, kidney, pancreas), both categorically (each service present or absent) and as the cumulative number of services offered (ranging from 0 to 6 transplant services offered). Ultimately, this variable was dichotomized as hospitals having four or more transplant services versus three or fewer. Sixth, variables were explored that categorized hospitals according to other services offered. These service-level variables were defined by the presence versus absence of services offered (e.g., wound care, hospice, hemodialysis, emergency, and psychiatric services), by the proportions of beds for specific services (e.g., number of intensive care beds out of the total beds greater than the median value of all hospitals), or by the volumes of services (e.g., number of inpatient surgeries as a proportion of total discharges). Proportions of service-line beds and volumes of services were dichotomized by their median values for analyses.
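The variable definitions above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the field names (`dsh`, `beds`, `icu_beds`, `transplant_services`), the example hospitals, and the use of the default quartile method in `statistics.quantiles` are ours, not details taken from the study.

```python
from statistics import median, quantiles

# Hypothetical hospitals; every value is invented for illustration.
hospitals = [
    {"id": "H1", "dsh": 0.1, "beds": 50, "icu_beds": 2, "transplant_services": 0},
    {"id": "H2", "dsh": 0.2, "beds": 120, "icu_beds": 6, "transplant_services": 0},
    {"id": "H3", "dsh": 0.3, "beds": 200, "icu_beds": 12, "transplant_services": 1},
    {"id": "H4", "dsh": 0.4, "beds": 201, "icu_beds": 20, "transplant_services": 0},
    {"id": "H5", "dsh": 0.5, "beds": 350, "icu_beds": 35, "transplant_services": 2},
    {"id": "H6", "dsh": 0.6, "beds": 400, "icu_beds": 48, "transplant_services": 3},
    {"id": "H7", "dsh": 0.7, "beds": 650, "icu_beds": 90, "transplant_services": 4},
    {"id": "H8", "dsh": 0.8, "beds": 900, "icu_beds": 150, "transplant_services": 5},
]


def bed_category(beds):
    """Bed-size ranges as described in the text: 1-200, 201-400, 401+."""
    if beds <= 200:
        return "1-200"
    if beds <= 400:
        return "201-400"
    return "401+"


def derive_exposures(rows):
    """Build the dichotomous and categorical exposure variables for each hospital."""
    # Third quartile cut point of the DSH proportion (top quartile = safety net).
    dsh_q3 = quantiles([r["dsh"] for r in rows], n=4)[2]
    # Median share of beds that are intensive care beds across all hospitals.
    icu_median = median(r["icu_beds"] / r["beds"] for r in rows)
    derived = []
    for r in rows:
        derived.append({
            "id": r["id"],
            "safety_net": r["dsh"] > dsh_q3,
            "bed_category": bed_category(r["beds"]),
            # Four or more transplant services versus three or fewer.
            "high_transplant": r["transplant_services"] >= 4,
            "high_icu_share": r["icu_beds"] / r["beds"] > icu_median,
        })
    return derived


exposures = derive_exposures(hospitals)
for row in exposures:
    print(row)
```

Whether a hospital exactly at a cut point belongs to the upper or lower group is not specified in the text; the strict inequalities here are one possible convention.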

Analysis Plan

We used a multilevel regression modeling approach to regress 30-day readmission rates on the aforementioned predictors in the AHA data set.18 The multilevel model tested random versus fixed intercepts for hospitals at the state level to account for the possibility of unobserved latent factors that may vary by state.10 In total, all hospitals in the AHA data set were organized at the second level into 55 weighted clusters (i.e., the 50 US states as well as Puerto Rico, Guam, US Virgin Islands, Marshall Islands, and American Samoa).

The model was tested for multiple constructs, including treating the dependent variable for 30-day readmissions as continuous (linear mixed model), categorically according to readmission quartile (ordinal logistic mixed model), and as a dichotomous outcome by comparing the fourth quartile relative to the other three quartiles (logistic mixed model). The purpose of the last model was to examine the effects that set apart the lowest performing hospitals by 30-day hospital-wide readmission rate.
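The three outcome constructs can be illustrated with a short sketch. The readmission rates below are invented, and the quartile cut points come from the default method of Python's `statistics.quantiles`, which may differ slightly from the quartile definition used in the analysis.

```python
from statistics import quantiles

# Hypothetical adjusted 30-day readmission rates (%), one per hospital.
rates = [14.0, 14.5, 15.0, 15.2, 15.5, 16.0, 16.8, 17.5]


def outcome_constructs(values):
    """Return the continuous, ordinal (quartile), and dichotomous outcome for each rate."""
    q1, q2, q3 = quantiles(values, n=4)
    constructs = []
    for rate in values:
        # Quartile 4 holds the highest (worst) readmission rates.
        quartile = 1 + (rate > q1) + (rate > q2) + (rate > q3)
        constructs.append({
            "continuous": rate,                    # linear mixed model
            "quartile": quartile,                  # ordinal logistic mixed model
            "worst_quartile": int(quartile == 4),  # logistic mixed model
        })
    return constructs


outcomes = outcome_constructs(rates)
for row in outcomes:
    print(row)
```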

The multilevel regression models were developed in two iterations using Stata 14 (Stata Corp. Inc. 2016, Texas). The initial model was constructed to test the relationship between 30-day readmission rate and bed totals, DSH, and the separate as well as interaction terms for AAMC and NCI-CCC. Following this initial model, forward stepwise regression was used to test the expansion of a multilevel regression model to include other covariates (among those selected as above by consensus) that showed a statistically significant and potentially meaningful impact in predicting hospital-wide readmission rates.19
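For orientation, the initial random-intercept logistic model can be written roughly as follows. The notation is ours and is only a sketch: hospital i is nested in state j, Y is the indicator for falling in the worst HWR quartile, and the exact coding of each covariate (continuous versus categorical) is an assumption.

```latex
\operatorname{logit}\,\Pr(Y_{ij} = 1)
  = \beta_0
  + \beta_1\,\mathrm{Beds}_{ij}
  + \beta_2\,\mathrm{DSH}_{ij}
  + \beta_3\,\mathrm{AAMC}_{ij}
  + \beta_4\,\mathrm{NCI}_{ij}
  + \beta_5\,(\mathrm{AAMC}_{ij} \times \mathrm{NCI}_{ij})
  + u_j,
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
```

Here the state-level random intercept is u_j; the fixed-intercept model corresponds to setting its variance to zero. Covariates added in the stepwise stage would enter as further terms on the right-hand side.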

The final forms of the fixed and random-intercept models were compared using a chi-square test of the log-likelihood ratios. We also tested several random slopes from the data set using independent and exchangeable autocorrelation constructs, where covariates were well powered (i.e., where there was little missing data) to do so. We assumed these models to be well powered based on a power calculation that could detect a 0.091% change in absolute readmission rate between quartiles based on 4785 hospitals clustered into 55 states. Since the goal of the HWR measure is to evaluate hospital performance versus other hospitals (i.e., to rank them), we used quantile plots to describe changes in readmissions rates between categorical changes in AAMC status, NCI-CCC status, bed total, and DSH quartile. To address missing data, we used a multilevel modeling approach in our analysis, which is able to model unbalanced data across clusters.20 Additionally, we conducted cross-validation to test the sensitivity of regression modeling to missing data by running regression on a 50% sample and then testing the fit of the regression model to the other 50% sample. The correlations and fit were not significantly different between both data samples; thus, we could reject the hypothesis that missing data altered the model results.
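The comparison of the fixed and random-intercept models can be sketched as a likelihood ratio test. The log-likelihood values below are hypothetical, and the single degree of freedom assumes the two models differ only by the state-level intercept variance. Because a variance cannot be negative, the plain chi-square reference distribution is conservative for this kind of comparison, so the p-value should be read as approximate.

```python
from math import erfc, sqrt


def likelihood_ratio_test(ll_fixed, ll_random):
    """Likelihood ratio test for two nested models that differ by one parameter.

    Returns the test statistic and its p-value from a chi-square
    distribution with 1 degree of freedom.
    """
    statistic = max(2.0 * (ll_random - ll_fixed), 0.0)
    # For 1 degree of freedom, P(chi2 > x) equals erfc(sqrt(x / 2)).
    p_value = erfc(sqrt(statistic / 2.0))
    return statistic, p_value


# Hypothetical log-likelihoods, not values from the study.
lr_stat, lr_p = likelihood_ratio_test(ll_fixed=-2450.0, ll_random=-2430.0)
print(f"LR statistic = {lr_stat:.1f}, p = {lr_p:.2e}")
```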

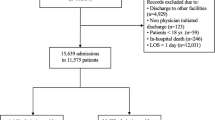

RESULTS

Our analysis of 4785 hospitals showed variability comparable to that seen in other studies of patient outcomes in the US. The unweighted average hospital-wide readmission rate was 15.24%. In addition, the average DSH rate was 0.35, the average bed total was 154, and all 240 AAMC and 52 NCI-CCC hospitals were represented (Table 1). Of the 52 hospitals designated as NCI-CCC, 49 were also categorized as AAMC. In general, higher DSH, larger size, and AAMC/NCI-CCC status were each associated with a higher patient-adjusted 30-day hospital-wide readmission rate compared to hospitals with low DSH, smaller size, or lacking AAMC/NCI-CCC status (Fig. 1a–d).

Fig. 1 Association between hospital-level factors and hospital performance* on the CMS hospital-wide readmission measure. Panels show the unadjusted relationship between national performance on the patient-level adjusted HWR metric and (a) DSH quartiles, (b) bed total categories, (c) AAMC alone status and AAMC plus NCI-CCC status, and (d) safety net alone status and AAMC plus safety net status (safety net defined as hospitals in the highest DSH quartile). Box plots represent the 25th (bottom), 50th (line), and 75th (top) percentiles for each category shown. *Performance defined as the hospital’s percentile rank on the hospital-wide readmission measure. This was obtained by ranking each of the 4785 US hospitals according to the hospital-wide readmission score and assigning a percentile rank to each institution (relative to all other institutions). CMS = Centers for Medicare & Medicaid Services; AAMC = Association of American Medical Colleges; AHA = American Hospital Association; DSH = Disproportionate Share Hospital; NCI-CCC = National Cancer Institute Comprehensive Cancer Center.

After consideration of all constructs for 30-day readmissions, a dichotomous measurement of the fourth quartile 30-day HWR rate relative to the other three quartiles as a binary logistic model was selected based on goodness of fit. When regressed against the 30-day HWR metric, hospitals with higher DSH, larger bed totals, and hospitals designated as AAMC or AAMC + NCI-CCC status had statistically significant odds ratios for falling into the worst performing HWR quartile (Table 2). Furthermore, state-level random effects models (models B and C) reduced the log-likelihood over a fixed-effects model (model A) suggesting that unobserved state-related factors related to 30-day readmissions may play an important role in hospital performance and were important to control.

Compared to the first three DSH quartiles, hospitals in the highest DSH quartile (safety net status) experienced a 1.99 (95% CI 1.61–2.45) adjusted odds ratio (aOR) of falling into the worst performing HWR quartile. Likewise, AAMC appeared to have a similar effect on readmission: aOR 1.95 (95% CI 1.35 to 2.83). The strongest predictor of being in the worst performing HWR quartile was the combination of AAMC status and NCI-CCC: aOR 5.16 (95% CI 2.58 to 10.31). Finally, hospitals with more beds appeared to have a slightly increased risk of falling into the worst performing HWR quartile.
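As a consistency check on the estimates above, an adjusted odds ratio and its 95% CI can be converted back to an approximate log-odds coefficient, standard error, and two-sided p-value. The sketch assumes the reported intervals are Wald intervals that are symmetric on the log-odds scale, which is our assumption and not something stated in the text.

```python
from math import erfc, log, sqrt


def wald_from_odds_ratio(odds_ratio, ci_low, ci_high, z_crit=1.96):
    """Recover (beta, standard error, z, two-sided p) from an odds ratio and its 95% CI."""
    beta = log(odds_ratio)
    # A Wald interval spans 2 * z_crit standard errors on the log-odds scale.
    se = (log(ci_high) - log(ci_low)) / (2 * z_crit)
    z = beta / se
    # Two-sided p-value from the standard normal distribution.
    p = erfc(abs(z) / sqrt(2))
    return beta, se, z, p


# Estimates as reported in the text.
reported = {
    "safety net": (1.99, 1.61, 2.45),
    "AAMC": (1.95, 1.35, 2.83),
    "AAMC + NCI-CCC": (5.16, 2.58, 10.31),
}
results = {name: wald_from_odds_ratio(*vals) for name, vals in reported.items()}
for name, (beta, se, z, p) in results.items():
    print(f"{name}: beta={beta:.3f}, SE={se:.3f}, z={z:.2f}, p={p:.2g}")
```

Under this assumption, all three back-calculated p-values fall below 0.001, in line with the values reported in the abstract.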

Compared to a regression model that was restricted to DSH, bed total, and AAMC/NCI-CCC, stepwise regression produced a model of significantly better fit that conserved DSH, bed count, and AAMC/NCI-CCC. Hospitals with a high proportion of beds with intensive care services, those with emergency room departments, and hospitals with 4 or 5 transplant services—compared to 0, 1, 2 or 3 services—had higher readmission rates. Hospitals with hospice services and those with a higher proportion of total discharges being surgical cases had lower readmission rates.

Several combinations of other interaction terms were tested, but only AAMC and NCI-CCC offered significant prediction of HWR rates. Despite exploration of random slopes among the multilevel models, none of the predictors tested were also significant as random slopes. As such, independent autocorrelation structure was selected for final model versions over exchangeable.

DISCUSSION

We found significant effects of institution characteristics on relative hospital performance on CMS’s hospital-wide readmission measure. Specifically, large, academic safety net hospitals and cancer centers fared worse than other types of facilities. Hospitals with more than one of these factors (e.g., large academic safety net hospitals) were particularly more likely to perform worse on the HWR metric. Additional hospital-level factors associated with worse performance on CMS’s HWR metric included offering transplant services, offering emergency department services, and having a large proportion of total beds devoted to critical care. Conversely, the availability of hospice services and high surgical volumes were associated with better performance on the HWR metric. This is the first evaluation of hospital-level factors on the HWR metric, and, as such, further exploration to understand the underlying reasons for readmission variability between institutions should be addressed.

Potential explanations for our findings come in two broad categories. On one hand, the differences in the HWR metric may be true reflections of hospital quality.21–23 This would help to explain why hospitals with large surgical volumes and those providing hospice services had lower readmission rates; large surgical volumes are associated with the “volume-outcome” relationship in which a higher volume of patients undergoing a particular procedure at a hospital is associated with better outcomes for those patients,24 and offering palliative care reduces inpatient care utilization and costs for patients approaching the end of life.25–27 If indeed variation in hospital quality is the main driver for our findings, greater attention must be placed on the types of care defects that may exist at large, urban tertiary care centers and those that care for high-complexity patient populations (such as those with cancer or organ transplants). Specifically, we should work to identify the reasons these institutions are struggling and devise strategies to help them to improve. On the other hand, the differences in the HWR metric we observed may not represent institutional differences in quality. CMS’s current hospital-wide readmission measure may not adequately account for characteristics of certain types of hospitals, whether because of inadequate adjustment for measurable or unmeasurable patient characteristics or because of factors that cannot be accounted for by adjusting for individual patient characteristics. In that case, we should work to improve the models and, in the meantime, avoid using them to define quality or trigger penalties. Of course, a combination of both explanations may be at play.

The hospital-level factors we considered in this study are broad and likely proxies for a number of underlying processes and potential challenges that institutions may face in affecting readmission outcomes, which may be inadequately accounted for in current models. For instance, bed size may be a surrogate for the number of services that are offered at an institution and may relate to referral patterns.28 Practically, if a middle-aged male with minimal comorbidities presents with an ischemic stroke, he may be managed appropriately at a small community hospital. If that same patient would also benefit from neurosurgical intervention, however, he probably would be better served by a hospital that provides all the services he needs; the more complex treatment plans are probably executed most effectively at centers that ‘see it all and do it all’, often large referral centers. Indeed, we found that hospitals that have a higher proportion of beds providing critical care services have higher readmission rates. Patient-level risk models are intended to capture the severity of illness, but they may fail to adequately account for the complexity and clinical needs of the sickest patients. However, future research should examine the reasons for this finding as higher volumes have generally been associated with reduced mortality and improved outcomes.24,29

AAMC hospitals, by their nature, are more likely to be referral centers, so one possible mechanism to explain their higher adjusted readmission rates would be a form of selection bias. Patients who fail treatment at local hospitals and are referred to AAMCs may be more vulnerable to readmission based on failing first-line treatment. Public teaching hospitals may also have missions to treat disadvantaged populations, such as indigent patients,30 and indeed, this study showed that hospitals that were identified as both safety net and AAMC hospitals were more likely to have higher readmission rates. Additionally, cutting-edge treatments offered at AAMCs may lead to planned readmissions that are not captured by current readmission algorithms. For example, some complex staged procedures that require patients to return to the hospital are classified as unplanned with current algorithms, and novel procedures may not yet be adequately codified. Hospitals that provide these types of services might appear to have excess unplanned readmissions (rather than planned readmissions) based on nuances of coding rather than care defects.

However, we must consider the possibility that some features of academic medical centers might cause higher readmission rates. For instance, market power is higher for large teaching hospitals, and this could allow them to attract patients and succeed financially despite real care defects.31 Additionally, junior physicians may feel less comfortable not sending a patient to the ED when called post-discharge.32 However, academic medical centers are learning environments where there is likely increased attention to detail, frequent use of current medical literature to guide clinical decision making, and redundancy of supervision that might reduce adverse outcomes.33 Recent data also suggest lower mortality rates at AAMCs.34 Finally, a health care facility’s teaching status on its own does not markedly improve or worsen patient outcomes.35

A number of other hospital-level characteristics were associated with poor performance on the HWR metric. A high proportion of DSH payments reflects a hospital’s responsibility for caring for socioeconomically disadvantaged patients. While some prior studies have suggested similar rates for disease-specific 30-day readmission rates between safety net and non-safety net hospitals,12 other studies have demonstrated that socioeconomic factors can play an important role in determining patient risk of readmission.15 Safety net facilities may be less able than other hospitals to invest in quality improvement,36 and their lack of financial resources may limit access to clinical resources. CMS continues to work with groups such as the National Quality Forum to study the effect of socioeconomic status on readmissions, and it is possible that socioeconomic status will be accounted for in updated federal readmission models.37 Having an emergency department may be a surrogate for offering services to patients with low socioeconomic status.38 NCI-CCC hospitals care for high volumes of complex cancer patients, where readmissions commonly represent expected sequelae of treatment or disease progression.14 Consistent with prior literature, we found that organ transplant patients are highly vulnerable to complications and readmissions.39

Current methodology to standardize HWR measures compares each hospital’s observed rate to its expected rate based on an average hospital’s performance caring for a similar mix of patients. A concern with adjusting for hospital-level factors is that this may improve the accuracy of the readmission measures while adjusting away deficits in quality that hospital comparison efforts seek to reveal.6 To address these policy challenges, different approaches have been proposed, such as comparing readmission rates across peer institutions only,4 defining preventable readmissions as ones linked to some process of hospital care when possible (e.g., improved care coordination),40,41 and incentivizing or rating institutions based on improvement rather than relative performance. Further work is needed to determine whether these strategies are helpful in the ultimate goal to improve quality to achieve best outcomes.

The results of this study should be taken within the context of its limitations. First, the HWR metric is focused on a Medicare population; different patterns of hospital-level readmission predictors may emerge if data become available for a larger population. Second, we focused on the HWR metric, and further research would need to investigate whether these results are generalizable to the condition-specific metrics (such as pneumonia, congestive heart failure, or stroke). Third, adjusting for SES is challenging, particularly given that we only had access to hospital-level data, so our use of the disproportionate share hospital measurement as a marker of SES may not have captured residual SES effects that could have been defined by measuring patient-level factors, such as income,42 or more granular regional measures, such as area deprivation index.43 Fourth, CMS outcomes data were calculated from a time period that was longer and in some cases earlier than the AHA annual survey data, which was a limitation of the available data. Fifth, CMS and AHA data were provided only at the hospital level; future derivations and evaluations of readmission measures that include patient-level data would benefit from incorporating these hospital-level factors, such as different service line variables. Sixth, our multilevel model assumes that there are state-level factors that impact readmission rates, but the variability in readmission performance may be better accounted for by other hospital-level factors (only) or in combination with patient-level factors that are not collected by CMS. Seventh, our analyses were limited by the services considered in the AHA survey and the nature of survey data collection; accordingly, we may have failed to identify other services or hospital-level factors associated with performance on the HWR metric.

CONCLUSIONS

Current patient-level risk adjustment methodology intended to allow for ranking hospitals by their relative readmission rates may not account for certain populations and services that impact readmission rates. Large academic medical centers, hospitals that serve a disproportionate share of low socioeconomic patients, and those serving complex patient populations tend to fare far worse on the CMS HWR measure than other types of institutions. While our findings do not offer an alternative to the existing measure, they raise concerns about its interpretation and practical use as a quality measure. Policy makers should reexamine the appropriateness of using the current hospital-wide readmission measure as an indicator of hospital quality.

References

Halfon P, Eggli Y, Pretre-Rohrbach I, Meylan D, Marazzi A, Burnand B. Validation of the potentially avoidable hospital readmission rate as a routine indicator of the quality of hospital care. Med Care. 2006;44(11):972-981. https://doi.org/10.1097/01.mlr.0000228002.43688.c2.

Comprehensive methodology report (v2.0), a description of the overall hospital quality star rating methodology. Yale New Haven Health Services Corporation/Center for Outcomes Research & Evaluation (YNHHSC/CORE) [accessed at www.qualitynet.org on 12 Sept 2017]. May 2016.

National Quality Measures Clearinghouse (NQMC). Unplanned readmission: Hospital-wide all-cause, unplanned readmission rate (HWR). https://www.qualitymeasures.ahrq.gov/summaries/summary/49197/unplanned-readmission-hospitalwide-allcause-unplanned-readmission-rate-hwr. Accessed 12 Sept 2017.

MedPAC. Chapter 4: Refining the hospital readmissions reduction program. Report to Congress [Site accessed on 12 Sept 2017]. 2013 Jun: Available at: www.medpac.gov/-documents-/reports.

Normand SL, Shahian D. Statistical and clinical aspects of hospital outcomes profiling. Stat Sci. 2007;22(2):206-226.

Kansagara D, Englander H, Salanitro A, et al. Risk prediction models for hospital readmission: A systematic review. JAMA. 2011;306(15):1688-1698.

Joynt KE, Jha AK. Characteristics of hospitals receiving penalties under the hospital readmissions reduction program. JAMA. 2013;309(4):342-343. https://doi.org/10.1001/jama.2012.94856.

Herrin J, St Andre J, Kenward K, Joshi MS, Audet AM, Hines SC. Community factors and hospital readmission rates. Health Serv Res. 2015;50(1):20-39. https://doi.org/10.1111/1475-6773.12177.

Tsai TC, Orav EJ, Joynt KE. Disparities in surgical 30-day readmission rates for medicare beneficiaries by race and site of care. Ann Surg. 2014;259(6):1086-1090. https://doi.org/10.1097/SLA.0000000000000326.

Hedeker D, Gibbons R. Longitudinal Data Analysis. Hoboken, NJ: John Wiley & Sons Inc; 2006.

Krumholz HM, Merrill AR, Schone EM, et al. Patterns of hospital performance in acute myocardial infarction and heart failure 30-day mortality and readmission. Circ Cardiovasc Qual Outcomes. 2009;2(5):407-413. https://doi.org/10.1161/CIRCOUTCOMES.109.883256.

Ross JS, Bernheim SM, Lin Z, et al. Based on key measures, care quality for medicare enrollees at safety-net and non-safety-net hospitals was almost equal. Health Aff (Millwood). 2012;31(8):1739-1748. https://doi.org/10.1377/hlthaff.2011.1028.

Sheingold SH, Zuckerman R, Shartzer A. Understanding medicare hospital readmission rates and differing penalties between safety-net and other hospitals. Health Aff (Millwood). 2016;35(1):124-131. https://doi.org/10.1377/hlthaff.2015.0534.

Saunders ND, Nichols SD, Antiporda MA, et al. Examination of unplanned 30-day readmissions to a comprehensive cancer hospital. J Oncol Pract. 2015;11(2):e177-81. https://doi.org/10.1200/JOP.2014.001546.

Kind AJ, Jencks S, Brock J, et al. Neighborhood socioeconomic disadvantage and 30-day rehospitalization: A retrospective cohort study. Ann Intern Med. 2014;161(11):765-774. https://doi.org/10.7326/M13-2946.

Healthcare Cost Report Information System. Cost reports. https://www.cms.gov/Research-Statistics-Data-and-Systems/Downloadable-Public-Use-Files/Cost-Reports/. Accessed 12 Sept 2017.

Medicare disproportionate share hospital. [accessed on September 12, 2017]. 2015;ICN 006741(September):https://www.cms.gov/Outreach-and-Education/Medicare-Learning-Network-MLN/MLNProducts/downloads/Disproportionate_Share_Hospital.pdf.

Snijders T, Bosker R. Multilevel Analysis: an Introduction to Basic and Advanced Multilevel Modeling. 2nd ed. Los Angeles, CA: Sage Publications; 2012.

James G, Witten D, Hastie T, Tibshirani R. An Introduction to Statistical Learning. New York, NY: Springer; 2013.

Daniels MJ, Wang C. Discussion of “missing data methods in longitudinal studies: a review” by ibrahim and molenberghs. Test (Madr). 2009;18(1):51-58. https://doi.org/10.1007/s11749-009-0141-2.

Ashton CM, Del Junco DJ, Souchek J, Wray NP, Mansyur CL. The association between the quality of inpatient care and early readmission: A meta-analysis of the evidence. Med Care. 1997:1044-1059.

Krumholz HM, Lin Z, Keenan PS, et al. Relationship between hospital readmission and mortality rates for patients hospitalized with acute myocardial infarction, heart failure, or pneumonia. JAMA. 2013;309(6):587-593. https://doi.org/10.1001/jama.2013.333.

Hoyer EH, Odonkor CA, Bhatia SN, Leung C, Deutschendorf A, Brotman DJ. Association between days to complete inpatient discharge summaries with all-payer hospital readmissions in Maryland. J Hosp Med. 2016;11(6):393-400. https://doi.org/10.1002/jhm.2556.

Hughes RG, Hunt SS, Luft HS. Effects of surgeon volume and hospital volume on quality of care in hospitals. Med Care. 1987;25(6):489-503.

Obermeyer Z, Makar M, Abujaber S, Dominici F, Block S, Cutler DM. Association between the Medicare hospice benefit and health care utilization and costs for patients with poor-prognosis cancer. JAMA. 2014;312(18):1888-1896. https://doi.org/10.1001/jama.2014.14950.

Brotman DJ, Hoyer EH, Leung C, Lepley D, Deutschendorf A. Associations between hospital-wide readmission rates and mortality measures at the hospital level: Are hospital-wide readmissions a measure of quality? J Hosp Med. 2016;11(9):650-651. https://doi.org/10.1002/jhm.2604.

Morrison RS, Penrod JD, Cassel JB, et al. Cost savings associated with US hospital palliative care consultation programs. Arch Intern Med. 2008;168(16):1783-1790. https://doi.org/10.1001/archinte.168.16.1783.

Loux SL, Payne SMC, Knott A. Comparing patient safety in rural hospitals by bed count. In: Henriksen K, Battles JB, Marks ES, Lewin DI, eds. Advances in Patient Safety: From Research to Implementation (Volume 1: Research Findings). Rockville, MD; 2005. NBK20441.

Kahn JM, Goss CH, Heagerty PJ, Kramer AA, O’Brien CR, Rubenfeld GD. Hospital volume and the outcomes of mechanical ventilation. N Engl J Med. 2006;355(1):41-50.

Ayanian JZ, Weissman JS. Teaching hospitals and quality of care: a review of the literature. Milbank Q. 2002;80(3):569-593.

Vogt WB, Town R, Williams CH. How has hospital consolidation affected the price and quality of hospital care? Synth Proj Res Synth Rep. 2006;(9).

Hajjaj FM, Salek MS, Basra MK, Finlay AY. Non-clinical influences on clinical decision-making: a major challenge to evidence-based practice. J R Soc Med. 2010;103(5):178-187. https://doi.org/10.1258/jrsm.2010.100104.

Pugno PA, Gillanders WR, Kozakowski SM. The direct, indirect, and intangible benefits of graduate medical education programs to their sponsoring institutions and communities. J Grad Med Educ. 2010;2(2):154-159. https://doi.org/10.4300/JGME-D-09-00008.1.

Burke LG, Frakt AB, Khullar D, Orav EJ, Jha AK. Association between teaching status and mortality in US hospitals. JAMA. 2017;317(20):2105-2113. https://doi.org/10.1001/jama.2017.5702.

Papanikolaou PN, Christidi GD, Ioannidis JP. Patient outcomes with teaching versus nonteaching healthcare: A systematic review. PLoS Med. 2006;3(9):e341.

Werner RM, Goldman LE, Dudley RA. Comparison of change in quality of care between safety-net and non-safety-net hospitals. JAMA. 2008;299(18):2180-2187. https://doi.org/10.1001/jama.299.18.2180.

Rep. Upton F. 21st Century Cures Act. 114th Congress (2015–2016); 05/19/2015.

Hong R, Baumann BM, Boudreaux ED. The emergency department for routine healthcare: Race/ethnicity, socioeconomic status, and perceptual factors. J Emerg Med. 2007;32(2):149-158.

Lushaj E, Julliard W, Akhter S, et al. Timing and frequency of unplanned readmissions after lung transplantation impact long-term survival. Ann Thorac Surg. 2016;102(2):378-384. https://doi.org/10.1016/j.athoracsur.2016.02.083.

Halverson AL, Sellers MM, Bilimoria KY, et al. Identification of process measures to reduce postoperative readmission. J Gastrointest Surg. 2014;18(8):1407-1415. https://doi.org/10.1007/s11605-013-2429-5.

Hansen LO, Young RS, Hinami K, Leung A, Williams MV. Interventions to reduce 30-day rehospitalization: a systematic review. Ann Intern Med. 2011;155(8):520-528. Accessed Jan 15 2015.

Daly MC, Duncan GJ, McDonough P, Williams DR. Optimal indicators of socioeconomic status for health research. Am J Public Health. 2002;92(7):1151-1157.

Knighton AJ, Savitz L, Belnap T, Stephenson B, VanDerslice J. Introduction of an area deprivation index measuring patient socioeconomic status in an integrated health system: implications for population health. EGEMS (Wash DC). 2016;4(3):1238. https://doi.org/10.13063/2327-9214.1238.

Acknowledgements

We thank the American Hospital Association (AHA), Nancy Foster, Christopher Vaz, and Sara Beazley, for providing their expertise in interpretation and acquisition of data from the AHA survey.

Ethics declarations

Conflict of Interest

All authors declare no conflicts of interest.

Additional information

We certify that no party having a direct interest in the results of the research supporting this article has or will confer a benefit on us or on any organization with which we are associated AND, if applicable, we certify that all financial and material support for this research (e.g., CMS, NIH, or NHS grants) and work are clearly identified in the title page of the manuscript (Hoyer, Padula, Brotman, Reid, Leung, Lepley, Deutschendorf).

About this article

Cite this article

Hoyer, E.H., Padula, W.V., Brotman, D.J. et al. Patterns of Hospital Performance on the Hospital-Wide 30-Day Readmission Metric: Is the Playing Field Level? J Gen Intern Med 33, 57–64 (2018). https://doi.org/10.1007/s11606-017-4193-9

DOI: https://doi.org/10.1007/s11606-017-4193-9