Abstract

Purpose

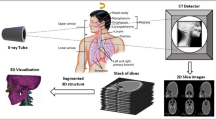

X-ray imaging is widely used to guide minimally invasive surgery. Despite ongoing efforts, in particular toward advanced visualization incorporating mixed reality concepts, correct depth perception from X-ray images is still hampered by their projective nature.

Methods

In this paper, we introduce a new concept for predicting depth information from single-view X-ray images. Patient-specific training data for depth and the corresponding X-ray attenuation information are constructed from readily available preoperative 3D image data. The depth model is learned with a novel label-consistent dictionary learning method that incorporates atlas and spatial prior constraints to enable efficient reconstruction.
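
The idea of learning paired X-ray/depth representations can be illustrated with a simplified sketch. This is not the authors' method. The sketch assumes that co-located X-ray and depth patches have already been extracted from the preoperative volume, for example from digitally reconstructed radiographs and rendered depth maps (an assumption). It replaces the label-consistent formulation with plain coupled dictionary learning: orthogonal matching pursuit (OMP) for sparse coding and the method of optimal directions (MOD) for the dictionary update. It also omits the atlas and spatial priors. The function names `omp`, `learn_coupled_dictionary` and `predict_depth` are hypothetical.

```python
import numpy as np


def omp(D, x, k):
    """Orthogonal matching pursuit: k-sparse code of x over the columns of D.

    Assumes the columns of D have unit norm.
    """
    residual = x.astype(float).copy()
    support = []
    coef = np.zeros(0)
    for _ in range(k):
        j = int(np.argmax(np.abs(D.T @ residual)))  # atom most correlated with the residual
        if j not in support:
            support.append(j)
        coef, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)  # refit on the support
        residual = x - D[:, support] @ coef
    code = np.zeros(D.shape[1])
    code[support] = coef
    return code


def learn_coupled_dictionary(X, Z, n_atoms, k, n_iter=20, seed=0):
    """Learn one dictionary over stacked [X-ray; depth] patches (columns = samples).

    Hypothetical simplification: no label consistency, atlas or spatial priors.
    Returns the X-ray half Dx and the depth half Dz, which share sparse codes.
    """
    Y = np.vstack([X, Z]).astype(float)
    rng = np.random.default_rng(seed)
    D = Y[:, rng.choice(Y.shape[1], n_atoms, replace=False)]  # init from random samples
    D /= np.linalg.norm(D, axis=0, keepdims=True) + 1e-12
    for _ in range(n_iter):
        A = np.column_stack([omp(D, y, k) for y in Y.T])  # one sparse code per sample
        D = Y @ np.linalg.pinv(A)  # MOD: least-squares dictionary for fixed codes
        dead = np.linalg.norm(D, axis=0) < 1e-10  # atoms that no sample used
        D[:, dead] = Y[:, rng.choice(Y.shape[1], int(dead.sum()))]  # re-seed them
        D /= np.linalg.norm(D, axis=0, keepdims=True) + 1e-12
    return D[: X.shape[0]], D[X.shape[0]:]


def predict_depth(Dx, Dz, x, k):
    """Sparse-code an X-ray patch with Dx and synthesize depth with the shared code."""
    norms = np.linalg.norm(Dx, axis=0) + 1e-12
    code = omp(Dx / norms, x, k)  # OMP expects unit-norm atoms
    return Dz @ (code / norms)    # undo the normalization, then map to depth


# Usage on random stand-in data (hypothetical 4x4 X-ray patches and 2x2 depth patches).
rng = np.random.default_rng(1)
X = rng.normal(size=(16, 200))
Z = rng.normal(size=(4, 200))
Dx, Dz = learn_coupled_dictionary(X, Z, n_atoms=32, k=3, n_iter=5)
depth_patch = predict_depth(Dx, Dz, X[:, 0], k=3)
```

The joint dictionary forces each X-ray atom and its depth counterpart to share one sparse code. A code inferred from the X-ray patch alone can therefore be reused to synthesize depth.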

Results

We validated our algorithm on patient data acquired for two anatomical regions (abdomen and thorax). For each of 6 experimental instances, 80 of the 100 image pairs were used for training and 20 for testing. Depth estimation results were compared to ground-truth depth values.
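
The abstract does not state how the 80 training and 20 test pairs were chosen within each instance. A minimal sketch, assuming a seeded random split per instance, keeps such a protocol reproducible. The function `split_instance` and the placeholder file names are hypothetical.

```python
import random


def split_instance(pairs, n_train=80, seed=0):
    """Shuffle one instance's (X-ray, depth) pairs and split them into train and test sets.

    Assumption: a seeded random split; the abstract does not describe the selection.
    """
    if not 0 < n_train < len(pairs):
        raise ValueError("n_train must leave at least one pair for testing")
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)  # local RNG, so the split is reproducible
    return shuffled[:n_train], shuffled[n_train:]


# Six experimental instances of 100 hypothetical image pairs each.
instances = {
    f"instance_{i}": [(f"xray_{i}_{j:03d}.png", f"depth_{i}_{j:03d}.png") for j in range(100)]
    for i in range(6)
}
splits = {name: split_instance(pairs, seed=k) for k, (name, pairs) in enumerate(instances.items())}
```

Using a private `random.Random` instance rather than the module-level generator keeps each split independent of any other random calls in the program.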

Conclusion

We achieved a mean squared error of approximately \(4.40 \pm 2.04\,\%\) on the abdomen datasets and \(11.47 \pm 2.27\,\%\) on the thorax datasets, and the visual results of the proposed method are very promising. We have thus presented a new concept for enhancing depth perception in image-guided interventions.
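
The abstract reports the error as a percentage but does not define the normalization. One plausible reading, assumed here and not confirmed by the source, works in three steps:

* divide each depth error by the ground-truth map's depth range;
* take the mean of the squared scaled errors and multiply by 100;
* summarize the per-image values as mean ± sample standard deviation over the test images.

`percent_mse` and `summarize` are hypothetical helpers.

```python
import statistics


def percent_mse(pred, gt):
    """MSE in percent between two flattened depth maps.

    Assumption: errors are divided by the ground-truth depth range before squaring.
    """
    if len(pred) != len(gt) or not gt:
        raise ValueError("depth maps must be non-empty and of equal size")
    depth_range = max(gt) - min(gt)
    if depth_range == 0:
        raise ValueError("ground-truth depth map is constant")
    sq = sum(((p - g) / depth_range) ** 2 for p, g in zip(pred, gt))
    return 100.0 * sq / len(gt)


def summarize(errors):
    """Mean and sample standard deviation over per-image errors."""
    return statistics.mean(errors), statistics.stdev(errors)


# Worked example with hypothetical depths in mm: range 20, squared scaled errors 0.01, 0, 0.0225.
example = percent_mse([12.0, 20.0, 27.0], [10.0, 20.0, 30.0])  # about 1.083
```

Normalizing by the depth range makes errors comparable across anatomies with different depth extents. Under a different normalization the reported numbers would not be reproducible with this sketch.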

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical standard

This article does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Albarqouni, S., Konrad, U., Wang, L. et al. Single-view X-ray depth recovery: toward a novel concept for image-guided interventions. Int J CARS 11, 873–880 (2016). https://doi.org/10.1007/s11548-016-1360-0

DOI: https://doi.org/10.1007/s11548-016-1360-0