Abstract

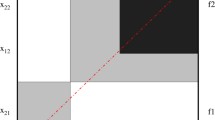

The paper presents inequalities between four descriptive statistics that can be expressed in the form [P−E(P)]/[1−E(P)], where P is the observed proportion of agreement in a k×k table with identical row and column categories, and E(P) is a function of the marginal probabilities. Scott’s π is an upper bound of Goodman and Kruskal’s λ and a lower bound of both Bennett et al.’s S and Cohen’s κ. We introduce a concept for the marginal probabilities of the k×k table called weak marginal symmetry. Using the rearrangement inequality, it is shown that Bennett et al.’s S is an upper bound of Cohen’s κ if the k×k table is weakly marginal symmetric.
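As an illustration of the common form [P−E(P)]/[1−E(P)], the following Python sketch computes the four statistics for a small table and lets one check the unconditional orderings stated above (λ ≤ π, π ≤ κ, π ≤ S). The abstract does not spell out E(P) for each statistic, so the definitions used here are assumptions taken from the standard literature: 1/k for S, the sum of products of row and column marginals for κ, the sum of squared averaged marginals for π, and the largest averaged marginal for λ. The function name `agreement_statistics` and the example counts are hypothetical. Weak marginal symmetry is not checked, because its definition is not given in this text.

```python
def agreement_statistics(table):
    """Return lambda, pi, kappa and S for a square table of counts.

    Assumed definitions of E(P) (not stated in the abstract):
      S      : 1 / k
      kappa  : sum_i p_i+ * p_+i
      pi     : sum_i ((p_i+ + p_+i) / 2) ** 2
      lambda : max_i (p_i+ + p_+i) / 2
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    p = [[count / n for count in row] for row in table]

    row_marg = [sum(p[i]) for i in range(k)]                       # p_i+
    col_marg = [sum(p[i][j] for i in range(k)) for j in range(k)]  # p_+i
    observed = sum(p[i][i] for i in range(k))                      # P
    mean_marg = [(r + c) / 2 for r, c in zip(row_marg, col_marg)]

    expected = {
        "lambda": max(mean_marg),
        "pi": sum(m * m for m in mean_marg),
        "kappa": sum(r * c for r, c in zip(row_marg, col_marg)),
        "S": 1 / k,
    }
    # Every statistic shares the form (P - E(P)) / (1 - E(P)).
    # This is undefined when E(P) == 1, e.g. all counts in one category.
    return {name: (observed - e) / (1 - e) for name, e in expected.items()}


# Hypothetical 3x3 table of counts for two raters.
example = [
    [20, 5, 0],
    [3, 15, 2],
    [1, 4, 10],
]
stats = agreement_statistics(example)
print({name: round(value, 3) for name, value in stats.items()})
```

For this example table the values are roughly λ ≈ 0.577, π ≈ 0.614, κ ≈ 0.615 and S = 0.625. Because (P−E)/(1−E) decreases as E grows whenever P < 1, each ordering reduces to the reverse ordering of the corresponding E(P) values.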

References

Agresti, A. (1990). Categorical data analysis. New York: Wiley.

Agresti, A., & Winner, L. (1997). Evaluating agreement and disagreement among movie reviewers. Chance, 10, 10–14.

Bennett, E.M., Alpert, R., & Goldstein, A.C. (1954). Communications through limited response questioning. Public Opinion Quarterly, 18, 303–308.

Blackman, N.J.-M., & Koval, J.J. (1993). Estimating rater agreement in 2×2 tables: Correction for chance and intraclass correlation. Applied Psychological Measurement, 17, 211–223.

Brennan, R.L., & Prediger, D.J. (1981). Coefficient kappa: Some uses, misuses, and alternatives. Educational and Psychological Measurement, 41, 687–699.

Byrt, T., Bishop, J., & Carlin, J.B. (1993). Bias, prevalence and kappa. Journal of Clinical Epidemiology, 46, 423–429.

Cohen, J.A. (1960). A coefficient of agreement for nominal scales. Educational and Psychological Measurement, 20, 213–220.

Conger, A.J. (1980). Integration and generalization of kappas for multiple raters. Psychological Bulletin, 88, 322–328.

De Mast, J. (2007). Agreement and kappa-type indices. The American Statistician, 61, 148–153.

Dou, W., Ren, Y., Wu, Q., Ruan, S., Chen, Y., Bloyet, D., & Constans, J.-M. (2007). Fuzzy kappa for the agreement measure of fuzzy classifications. Neurocomputing, 70, 726–734.

Feinstein, A.R., & Cicchetti, D.V. (1990). High agreement but low kappa: I. The problems of two paradoxes. Journal of Clinical Epidemiology, 43, 543–548.

Fleiss, J.L. (1971). Measuring nominal scale agreement among many raters. Psychological Bulletin, 76, 378–382.

Fleiss, J.L. (1975). Measuring agreement between two judges on the presence or absence of a trait. Biometrics, 31, 651–659.

Goodman, L.A., & Kruskal, W.H. (1954). Measures of association for cross classifications. Journal of the American Statistical Association, 49, 732–764.

Hardy, G.H., Littlewood, J.E., & Polya, G. (1988). Inequalities (2nd ed.). Cambridge: Cambridge University Press.

Holley, J.W., & Guilford, J.P. (1964). A note on the G index of agreement. Educational and Psychological Measurement, 24, 749–753.

Hubert, L.J., & Arabie, P. (1985). Comparing partitions. Journal of Classification, 2, 193–218.

Janson, S., & Vegelius, J. (1979). On generalizations of the G index and the Phi coefficient to nominal scales. Multivariate Behavioral Research, 14, 255–269.

Krippendorff, K. (1987). Association, agreement, and equity. Quality and Quantity, 21, 109–123.

Krippendorff, K. (2004). Reliability in content analysis: Some common misconceptions and recommendations. Human Communication Research, 30, 411–433.

Maxwell, A.E. (1977). Coefficients between observers and their interpretation. British Journal of Psychiatry, 116, 651–655.

Mitrinović, D.S. (1964). Elementary inequalities. Groningen: Noordhoff.

Scott, W.A. (1955). Reliability of content analysis: The case of nominal scale coding. Public Opinion Quarterly, 19, 321–325.

Steinley, D. (2004). Properties of the Hubert-Arabie adjusted Rand index. Psychological Methods, 9, 386–396.

Visser, H., & De Nijs, T. (2006). The map comparison kit. Environmental Modelling & Software, 21, 346–358.

Warrens, M.J. (2008a). On similarity coefficients for 2×2 tables and correction for chance. Psychometrika, 73, 487–502.

Warrens, M.J. (2008b). Bounds of resemblance measures for binary (presence/absence) variables. Journal of Classification, 25, 195–208.

Warrens, M.J. (2008c). On association coefficients for 2×2 tables and properties that do not depend on the marginal distributions. Psychometrika, 73, 777–789.

Warrens, M.J. (2008d). On the indeterminacy of resemblance measures for (presence/absence) data. Journal of Classification, 25, 125–136.

Warrens, M.J. (2008e). On the equivalence of Cohen’s kappa and the Hubert-Arabie adjusted Rand index. Journal of Classification, 25, 177–183.

Zwick, R. (1988). Another look at interrater agreement. Psychological Bulletin, 103, 374–378.

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Warrens, M.J. Inequalities Between Kappa and Kappa-Like Statistics for k×k Tables. Psychometrika 75, 176–185 (2010). https://doi.org/10.1007/s11336-009-9138-8

DOI: https://doi.org/10.1007/s11336-009-9138-8