Abstract

This paper investigates to what extent laboratory measures of cheating generalise to the field. To this end, we develop a lab measure that allows for individual-level observations of cheating whilst reducing the likelihood that participants feel observed. Decisions made in this laboratory task are then compared to individual choices made in the field, where subjects can lie by misreporting their experimental earnings. We use two field variations that differ in the degree of anonymity of the field decision. Using our measure, we find no correlation of behaviour between the laboratory and the field. We then perform the same analysis using a lab measure that can only detect cheating at the aggregate level. In this case, we do find a weak correlation between the two environments. We discuss the significance and interpretation of these results.

1 Introduction

Cheating permeates many social and economic interactions of daily life (DePaulo et al. 1996; Ariely 2012). Examples include corporate scandals (e.g., Dieselgate, Facebook-Cambridge Analytica), tax evasion (Slemrod 2007) and consumer misbehaviour (Mazar and Ariely 2006). Moreover, efforts to study cheating in natural contexts are hindered by its secretive nature. Controlled experiments therefore represent an attractive instrument for studying individual attitudes towards cheating.

The die-roll paradigm (Fischbacher and Föllmi-Heusi 2013) is the most popular measure of cheating used in the laboratory. Participants are asked to roll a die in private and to report the result to the experimenter. As only the subjects observe the true outcome, there is a monetary incentive to lie by reporting the outcomes associated with higher rewards. Despite its simplicity, this type of task has a considerable limitation: cheating can only be inferred at the aggregate level, by comparing the empirical distribution of reports with its theoretical prediction. Hence, by design, it is not possible to know whether a particular subject lied.Footnote 1
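To illustrate what inferring cheating "at the aggregate level" means, the following Python sketch computes Pearson's chi-square goodness-of-fit statistic for a set of die-roll reports against the uniform distribution expected under honest reporting. It assumes a fair six-sided die; the report counts and the helper name `chi_square_uniform` are hypothetical, and 11.07 is the standard 5% critical value of the chi-square distribution with five degrees of freedom.

```python
# Sketch: aggregate-level detection of cheating in a die-roll task.
# Assumes a fair six-sided die, so each face is expected with probability 1/6.

def chi_square_uniform(counts):
    """Pearson chi-square statistic of observed face counts against a uniform die."""
    n = sum(counts)
    expected = n / len(counts)
    return sum((obs - expected) ** 2 / expected for obs in counts)

# 5% critical value of the chi-square distribution with 5 degrees of freedom
CRITICAL_5PCT_DF5 = 11.07

honest_like = [10, 11, 9, 10, 10, 10]  # hypothetical reports from 60 subjects
skewed = [4, 5, 6, 8, 12, 25]          # hypothetical: reports piled on the top face

for label, counts in [("honest-like", honest_like), ("skewed", skewed)]:
    stat = chi_square_uniform(counts)
    print(label, round(stat, 2), "reject uniform:", stat > CRITICAL_5PCT_DF5)
```

A large statistic signals that the group as a whole over-reports high outcomes, but any single report of the top face remains compatible with an honest roll, so no individual can be classified as a liar.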

Whether laboratory measures of cheating extend to non-controlled environments is still under investigation. For instance, experimenter scrutiny or the artificiality of the lab environment might trigger different ethical norms. If this is the case, laboratory results on cheating might not generalise to the field (Levitt and List 2007). Our paper aims to address these two limitations: the impossibility of observing cheating at the individual level and the uncertain generalisability of laboratory results.

First, we design a novel task that, in contrast to the existing literature, allows us to observe cheating at the individual level. In our task, subjects have five seconds to choose, in their mind, one out of 60 colours (e.g., Yellow) from a list displayed on their screen. Once this list disappears, three new lists containing four colours each (e.g., White, Beige, Milk, Plum) are displayed. Every new list is associated with a different positive payoff. If subjects claim that their chosen colour is in one of the three new lists, they receive the payoff associated with that list; otherwise, they receive nothing. Because, by design, none of the colours in the three new lists appears in the first, larger list, we know that any participant who selects one of these lists has cheated.

Second, we use the fact that in our task cheating is observable at the individual level and ask to what extent cheating in the lab predicts cheating in the field within the same population. Participants are not paid immediately after the experiment. Instead, after a few days, they have the opportunity to cheat in the field by self-reporting their earnings. Subjects are paid according to the amount of money they claim to have earned in the laboratory. We use two field variations that differ in the degree of anonymity of the field decision. In the first, the self-reporting procedure is completely anonymous, while the second field variation requires participants to meet in person with the experimenter.

The main contribution of this paper is twofold: (i) it develops a laboratory task that allows for individual-level observations of cheating, and (ii) it compares both the extensive and intensive margins of cheating between the laboratory and a non-controlled environment.Footnote 2

In line with previous findings on individual dishonesty, we find that a considerable fraction of subjects cheat in our laboratory task, though some do not cheat to the fullest extent. However, we observe no significant correlation of dishonest behaviour between the lab and the field. Although more than half of the subjects cheat to some extent in our task, most of them refrain from over-reporting their experimental earnings. Moreover, among those who do over-report, we find no difference in the extent of cheating between subjects who are honest in the laboratory and those who are not. Interestingly, when using a variation of the die-roll task that only allows cheating to be inferred at the aggregate level, we do find a weak correlation between lab and field behaviour.

To the best of our knowledge, only a few other studies examine the correlation between dishonest behaviour in the lab and cheating in the field within the same population.Footnote 3 Dai et al. (2018) conduct an artefactual field experiment in which passengers of public transportation are asked to play a modified version of the die-roll task. Their main finding is that fare dodgers are, on average, more likely than ticket holders to report the most profitable outcome.

Similar to our study, Potters and Stoop (2016) use a student subject pool to correlate self-reported performance in a mind game implemented in the lab with a field measure of cheating. After the experiment, payments are issued via bank transfer and some subjects are deliberately overpaid by €5. The authors find a significant correlation of 0.31 between performance in the mind game and not reporting the overpayment. In contrast to Potters and Stoop (2016), our study allows cheating to be observed at the individual level, measures cheating at both the extensive and intensive margins, provides full anonymity in the lab and in one of the field tasks, and requires active misreporting in both environments. These features allow for a deeper understanding of whether lab measures of cheating are reliable predictors of dishonesty in other environments.

The extent to which laboratory results on cheating can be generalised to other settings remains unclear.Footnote 4 Laboratory evidence shows persistent patterns of dishonesty across subjects: some individuals are completely honest, while others either lie to the maximum extent possible or forfeit part of the monetary gains when they do cheat (Gneezy et al. 2018; Abeler et al. 2019; Gerlach et al. 2019). By contrast, studies that focus on dishonesty in the field provide mixed results. While some find substantial cheating among subjects (e.g., Drupp et al. 2019; Bucciol and Piovesan 2011), others do not. For example, Abeler et al. (2014) report no evidence of lying in a randomised field experiment in which subjects are called at home and have a monetary incentive to misreport the outcome of a privately tossed coin. Similarly, Cohn et al. (2014) show that bankers cheat in a coin-flip task only when they are reminded of their professional identity; when this cue is not emphasised, reported outcomes do not differ from the truthful distribution.

The remainder of this paper is organised as follows: Section 2 describes the experimental design, Sect. 3 presents the main results of the paper, Sect. 4 discusses the main findings and Sect. 5 concludes.

2 Methods

2.1 Experimental procedure

The experiment was conducted between November 2017 and July 2019 at EssexLab at the University of Essex. In total, 249 participants were recruited using hroot (Bock et al. 2014). Laboratory sessions (12 in total) lasted about 43 minutes, and average total earnings (inclusive of a £4 show-up fee) were £12.62 (s.d. £4.60). The experiment was programmed using z-Tree (Fischbacher 2007).

Before the laboratory session, participants acknowledged that their experimental earnings would be paid after a few days (see Fig. 6 in the appendix). No further details about the payment procedure were given. Subjects entered the lab anonymously and were randomly allocated to terminals, so that it was impossible to link their identity to a particular workstation. At the beginning of the experiment, each subject was informed that the session consisted of five parts and a short final questionnaire. Detailed instructions for each part were displayed on subjects’ screens only upon completion of the previous part (all instructions are reproduced in Appendix A). Where needed, control questions were administered before the actual choices were made. Participants were informed that, at the end of the experiment, two of the five parts would be randomly selected for payment.

2.2 Laboratory experiment

The laboratory experiment consisted of five different parts, whose order was randomised at the session level.

Part 1. In the first part of the experiment, subjects faced our mind game (hereinafter, the list game).Footnote 5 The list game consists of a simple decision problem. First, a random list of 60 colour names (e.g., Yellow) appears on the computer screen for only five seconds. This ensures that no subject can read all of the colours in the given time. Before the timer expires, each participant must choose, in their mind, a colour from the list. After five seconds, the list disappears and three new random lists of four colours each are displayed on the screen (e.g., one of the three lists might be White, Beige, Milk, Plum). Subjects are then asked whether the colour they have in mind appears in one of the three new lists, which are associated with payoffs of £1, £3 and £5, respectively. If so, they must select the list that contains the colour they thought of; otherwise, they must select the alternative option (“Not in the lists”). Participants who claim to have found their colour by selecting one of the three lists earn the corresponding payoff, whereas subjects who choose the alternative option earn £0. By design, the colours displayed in the three new lists are never present in the list from which subjects actually choose. Hence, every positive payoff reported by participants can be classified as a lie.Footnote 6 As the colour choice is made in the subject’s mind, individual cheating appears to be undetectable.Footnote 7 Whether subjects perceive it this way is verified via a control question. After deciding whether to cheat, participants answer the following question:
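The detection logic of the list game can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' z-Tree implementation: the palette of placeholder colour names and the helpers `draw_list_game` and `score_claim` are hypothetical. Because all 72 colours of a round are sampled without replacement, the short lists can never overlap the first list, so every positive claim is classified as a lie.

```python
import random

# Payoffs (in pounds) attached to the three short lists, as described in the text.
LIST_PAYOFFS = (1, 3, 5)

def draw_list_game(palette, rng):
    """Draw one round: a 60-colour first list and three 4-colour short lists.

    All 72 colours are sampled without replacement, so by construction no colour
    in the short lists appears in the first list.
    """
    colours = rng.sample(palette, 60 + 3 * 4)
    first_list = colours[:60]
    short_lists = [colours[60 + 4 * k: 64 + 4 * k] for k in range(3)]
    return first_list, short_lists

def score_claim(choice):
    """Return (payoff, is_lie); choice is None for 'Not in the lists', else list index 0-2."""
    if choice is None:
        return 0, False
    # Any positive claim is a lie, because the short lists are disjoint from the first list.
    return LIST_PAYOFFS[choice], True

palette = [f"colour_{i:03d}" for i in range(120)]  # hypothetical placeholder colour names
rng = random.Random(42)
first_list, short_lists = draw_list_game(palette, rng)
shown_later = {c for lst in short_lists for c in lst}
print("overlap with first list:", shown_later & set(first_list))
print(score_claim(None), score_claim(2))
```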

“Out of 100 participants, how many do you think successfully choose a colour in the first list that is also present in one of the three lists?”

Subjects earn an additional £1 if their answer is within five points of the true value, which is zero. Consequently, any answer less than or equal to five indicates that subjects believe the colours in the three lists are not present in the first one. Such subjects thus realise that cheating could be detected with certainty.Footnote 8
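The scoring rule for the control question, and the way an answer is read as a belief about detectability, can be written as a small function. The function name is hypothetical; the true value of zero and the five-point tolerance come from the text.

```python
TRUE_VALUE = 0  # by design, no colour in the short lists is in the first list
TOLERANCE = 5   # the £1 bonus is paid if the answer is within five points

def control_question(answer):
    """Return (bonus_in_pounds, believes_detectable) for an answer between 0 and 100."""
    within = abs(answer - TRUE_VALUE) <= TOLERANCE
    # Since the true value is 0, 'within five points' is the same as answer <= 5,
    # which is read as believing that cheating could be detected.
    return (1 if within else 0), within

for answer in (0, 5, 20):
    print(answer, control_question(answer))
```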

Part 2. This part consists of a computerised variation of the mind game used by Kajackaite and Gneezy (2017). Subjects roll a virtual five-sided die, each side of which is associated with a colour. First, participants must choose one of the five colours in their mind. Then, the outcome of the die roll is revealed and subjects must report whether the colour they have in mind corresponds to the outcome. If they answer yes, they earn £5; otherwise, they earn £0. This task resembles the list game because the decision is made in the subject’s mind, the difference being that cheating cannot be detected at the individual level. The purpose of this mind game is twofold. First, it can be used to corroborate the list game as a valid measure of cheating. Second, reports in the die roll can be correlated with behaviour in the field, although cheating in this task can only be measured at the aggregate level.

Part 3. In this part, subjects are randomly paired and play a dictator game. Each member of the pair is endowed with £6 and decides how much money to transfer, in steps of £1, to the other group member. After both subjects have made their decisions, one of the two choices is implemented with equal probability. The dictator game is used as a measure of greed and is elicited as a proxy for pro-social behaviour.

Part 4. This part consists of a trust game similar to Burks et al. (2003), in which each participant knows in advance that they will play both the role of sender and that of receiver. Subjects are randomly paired and, after being endowed with £3, choose whether to send £0, £1, £2 or £3 to their counterpart. Any amount sent is tripled. Without knowing the decision of the other player, both subjects decide how much to return for every possible transfer they could receive. After all decisions are made, the computer assigns the roles with equal probability and the corresponding decisions are implemented. We measure trust as a control for social preferences. This measure allows us to investigate whether subjects who trust others more, or who are more trustworthy, are also less likely to lie.
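The payoffs of this trust game under the strategy method can be sketched as below. The helper names are hypothetical, and the code assumes (the text does not state it explicitly) that the receiver keeps their own £3 endowment in addition to the tripled transfer. Each subject submits both a transfer and a full return plan; the role draw then decides which of the two is used.

```python
ENDOWMENT = 3   # pounds, as in the text
MULTIPLIER = 3  # any amount sent is tripled

def trust_payoffs(sent, return_plan, receiver_endowment=ENDOWMENT):
    """Payoffs (sender, receiver) for one sender-receiver match.

    sent: amount the sender transfers (0-3).
    return_plan: receiver's strategy, mapping each possible transfer to the amount returned.
    receiver_endowment: assumption that the receiver keeps their own £3.
    """
    if sent not in range(ENDOWMENT + 1):
        raise ValueError("transfer must be 0, 1, 2 or 3")
    received = MULTIPLIER * sent
    returned = return_plan[sent]
    if not 0 <= returned <= received:
        raise ValueError("cannot return more than was received")
    sender = ENDOWMENT - sent + returned
    receiver = receiver_endowment + received - returned
    return sender, receiver

def expected_payoff(my_sent, my_plan, other_sent, other_plan):
    """Expected payoff before roles are assigned with equal probability."""
    as_sender, _ = trust_payoffs(my_sent, other_plan)    # my transfer, partner's plan
    _, as_receiver = trust_payoffs(other_sent, my_plan)  # partner's transfer, my plan
    return 0.5 * as_sender + 0.5 * as_receiver

plan = {0: 0, 1: 1, 2: 3, 3: 4}  # hypothetical return plan
print(trust_payoffs(2, plan), expected_payoff(3, plan, 0, plan))
```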

Part 5. In the last part, risk preferences are elicited using a slightly modified version of the lottery choice task of Eckel and Grossman (2008). Participants must choose one of five virtual boxes. Every box contains two payoffs that are realised with equal probability (see Table 4 in Appendix C). Starting from a risk-free lottery that yields £2, both the expected payoff and the variance of the subsequent lotteries increase. Hence, the higher the expected payoff, the higher the risk. The main advantage of this task is its simplicity: it is easily understood by participants, yet it can still identify enough heterogeneity in risk attitudes. Eliciting risk attitudes is important because the decision to cheat also depends on the risk of being caught lying. Understanding the relation between individual preferences for honesty and risk attitudes might yield important insights into the decision to cheat.
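Because each box pays two amounts with equal probability, its expected value is the midpoint of the two payoffs and its standard deviation is half their distance. The sketch below checks that a set of boxes forms the intended ladder in which both increase. Apart from the risk-free £2 box, the payoffs used here are hypothetical placeholders, not the values in Table 4, and the function names are hypothetical.

```python
def box_moments(low, high):
    """Expected value and standard deviation of a 50/50 lottery over two payoffs."""
    mean = (low + high) / 2
    sd = abs(high - low) / 2
    return mean, sd

def is_risk_ladder(boxes):
    """True if expected value and standard deviation both strictly increase across boxes."""
    moments = [box_moments(lo, hi) for lo, hi in boxes]
    return all(m1 < m2 and s1 < s2
               for (m1, s1), (m2, s2) in zip(moments, moments[1:]))

# Hypothetical payoffs: only the risk-free £2 box is taken from the text.
hypothetical_boxes = [(2, 2), (1.5, 3), (1, 4), (0.5, 5), (0, 6)]
for box in hypothetical_boxes:
    print(box, box_moments(*box))
print("valid ladder:", is_risk_ladder(hypothetical_boxes))
```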

Upon completion of the five parts, subjects answer an incentivised questionnaire collecting socio-demographic information and a 20-item measure of the Big Five personality traits (Donnellan et al. 2006). Once participants complete the questionnaire, their experimental earnings are calculated and displayed on their screen. Subjects are then asked to write their earnings on a piece of paper (‘reminder card’), fold it into an envelope, and hide their earnings from the screen by clicking a button.Footnote 9 At this point, participants are the only ones who know how much money they have earned.Footnote 10

At the very end of the session, each subject is given a paper sheet entitled ‘Payment form’, which contains detailed instructions about the payment procedure.Footnote 11 Every form contains a hidden code that links it to the corresponding workstation.Footnote 12 Hence, it is possible to link each subject’s behaviour in the lab (but not their identity) to their subsequent choices in the field.

Subjects are then asked to leave the lab without filling in the payment form.

2.3 Field experiment

The field experiment is designed to resemble a variation of the standard payment procedure. Participants are not paid immediately after the laboratory session. Instead, after a few days, they can self-report their earnings using the payment form they were given. Payment is made in cash upon submission of this form. Subjects are free to self-report any integer amount between the minimum and maximum possible payoffs, £5 and £26, respectively.Footnote 13 Thus, there is a monetary incentive to cheat by claiming a higher payment than the amount actually earned in the lab. Note that, at this stage, lies cannot be detected. Cheating in the field can only be inferred after decoding each payment form and comparing the self-reported payment with the actual experimental earnings. Moreover, apart from self-reported earnings and the payment date, the forms contain no personal information. Hence, it is not possible to link the payment forms to individuals’ identities.

We employ two treatment variations to investigate possible factors that might influence cheating outside the laboratory. The first treatment involves no face-to-face (NoFtF) interaction with the experimenter, resembling the full anonymity condition present in the lab. Specifically, at the end of the experiment each participant is randomly assigned to a locker in a university campus building and is given the corresponding key. Subjects must leave their payment forms, containing their self-reported earnings, in their assigned locker. The forms are then collected by the experimenter and replaced with cash corresponding to the amount claimed. After all payments have been made, participants can collect their cash earnings.Footnote 14

In contrast, the second treatment requires participants to meet face-to-face (FtF) with the experimenter in an office. Instead of leaving the payment form in a locker, subjects hand it to the experimenter and are paid immediately.Footnote 15 Despite the personal interaction, a degree of anonymity is preserved in this phase of the experiment, as no personal information is collected.

2.4 Design considerations

The main contribution of this experiment is to allow for individual level observation of cheating. Moreover, the list game makes it possible to correlate both the extensive and intensive margins of cheating between the lab and the field.

Although the laboratory and field tasks differ in their intrinsic nature, the experimental design still allows behaviour to be compared across two similar decision problems. Admittedly, the field experiment differs in many respects from the list game and the die-roll game. The aim of this exercise, however, is to relate a laboratory measure of cheating to dishonesty in a task that might reflect a real-life situation and is thus not overly artificial.

First, in the lab as well as in the field, participants can only cheat by commission. This contrasts with Potters and Stoop (2016), the study closest to our design, where subjects can cheat by not reporting the payment error to the experimenter. The difference between cheating by commission and by omission might lead to differences in behaviour. As one might expect, lying by commission is less tempting than cheating that requires no active choice (Pittarello et al. 2016).

Another important variable that is kept constant between the two environments is anonymity. As Gneezy et al. (2018) suggest, the probability of being caught lying strongly affects dishonesty. In this experiment, although cheating can be detected at the individual level, subjects’ identities can never be linked to their choices. This feature creates conditions similar to real-life situations in which dishonest actions cannot be associated with one’s identity (e.g., not returning a lost wallet).Footnote 16

Finally, the design controls for potential confounds arising from social preferences. The consequences that lying might have for other people are known to affect dishonesty (Gneezy 2005; Erat and Gneezy 2012). For this reason, in the lab as well as in the field, the victim of the lie is always the experimenter.

3 Main results

3.1 Laboratory results

The main results presented in this section focus on choices made in the list game and on how they correlate with choices in the die-roll task. Appendix B provides additional results using choices from the other laboratory tasks. Because the treatment variation pertains only to the field, laboratory observations are pooled to increase the power of the analysis.

Figure 1 shows the choices made in the list game, where each bar represents one of the options available to subjects. The three rightmost bars (£1, £3 and £5) represent the fractions of participants who dishonestly reported having found the colour they had in mind in one of the three subsequent lists. The leftmost bar (£0) corresponds to the percentage of subjects who were honest in the list game. The figure highlights substantial heterogeneity in lying preferences. In contrast to standard economic predictions, 41% of the subjects choose not to cheat at all, selecting the option that pays nothing. Interestingly, although 40% of participants cheat to the maximum extent possible (£5), a substantial proportion (4% and 15%) forfeit the maximal gains from lying by choosing the lists associated with the £1 or £3 payoff, respectively. Hence, dishonest behaviour seems to be driven by heterogeneity in lying preferences: some participants are always honest and others are unconditional liars, whilst the remaining subjects fall between these two categories depending on the relative gains from lying.

-

Result 1: The largest fraction of cheaters in the list game report the payoff-maximising lie. However, a significant proportion of liars do not cheat to the maximum extent possible.

-

Statistical support: When restricting the data to two options at a time, one-sided binomial tests reject the null hypothesis that each of the two options is chosen with probability 0.5. For the pairs (£1, £3), (£3, £5) and (£1, £5), the conditional probability of the option with the higher payoff is significantly above 0.5, at the 1% level for all pairs.Footnote 17
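An exact one-sided binomial test of this kind can be computed directly from the binomial probabilities. The sketch below assumes the standard construction: restrict attention to subjects who chose one of two options and test whether the higher-paying option was chosen more often than half the time. The function name is hypothetical, and no counts from the experiment are reproduced here.

```python
from math import comb

def binom_test_greater(k, n, p=0.5):
    """Exact one-sided p-value P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k, n + 1))
```

For example, for the pair (£3, £5), `k` would be the number of subjects choosing £5 and `n` the number choosing either £3 or £5; a p-value below 0.01 corresponds to rejection at the 1% level.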

Beliefs elicited in the control question. Participants earned £1 if their answer was within 5 points of the correct value (zero). Hence, the vertical dashed line marks the threshold at or below which a subject is classified as believing that the colours in the three lists were not present in the first one. Notably, the most frequent answer is 20. This is consistent with the belief that the 12 colours in the three lists were drawn at random, with equal probability, from the first list of 60 colours \((N = 249)\)

Turning to participants’ beliefs, Fig. 2 presents the answers to the control question elicited after the list game. This question allows us to verify whether participants think their lies cannot be detected. As the figure shows, only about 6% of the subjects reported a belief less than or equal to five.Footnote 18 Thus, almost all participants made their decisions as if it were not possible to detect cheating at the individual level.
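The modal answer of 20 can be derived directly. Assuming a subject believes the 12 colours in the three short lists were drawn uniformly at random, without replacement, from the 60 colours in the first list, the probability that their chosen colour is among them is

```latex
\Pr(\text{chosen colour reappears})
  = \frac{\binom{59}{11}}{\binom{60}{12}}
  = \frac{12}{60}
  = 0.2 ,
\qquad 100 \times 0.2 = 20 ,
```

so such a subject would expect 20 out of 100 participants to succeed.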

One might ask whether the new task introduced here is related to other laboratory measures of cheating that do not allow for individual-level observations. To corroborate our new measure, we examine how choices in the list game correlate with choices in the die-roll game (Part 2). In the latter task, the fraction of positive claims is about 60%, far above the 20% expected under truthful reporting. Hence, about 40% of participants cheated in the die-roll task by answering “Yes”. If the two measures are related, participants who are dishonest in the list game should be more likely to answer “Yes” after rolling the die. As Fig. 3 shows, this seems to be the case: participants who cheat in the list game (right panel) are more likely to obtain a positive payoff in the other mind game (two-sided Fisher’s exact test: \(p = 0.027\), \(N = 225\)).Footnote 19 This result is also confirmed by Table 3 in Appendix B.
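The 40% figure follows from a simple accounting identity. Assuming honest subjects answer “Yes” only when the roll matches their colour (probability 1/5 with a five-sided die), the excess of “Yes” answers over 20% is the share of all participants who lied; dividing by the 80% whose roll did not match gives the share of those with an opportunity to lie who took it. The function name is hypothetical.

```python
def mind_game_cheating(yes_share, p_match=0.2):
    """Aggregate cheating estimates for the five-sided die mind game.

    Assumes honest subjects say 'Yes' only when the roll matches (probability p_match)
    and that every lie is a false 'Yes'.
    Returns (share of all participants who lied,
             share of participants without a matching roll who lied).
    """
    excess = yes_share - p_match
    return excess, excess / (1 - p_match)

liars_overall, liars_given_chance = mind_game_cheating(0.60)
print(round(liars_overall, 2), round(liars_given_chance, 2))
```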

3.2 Field results

In this section, we present results for both the field treatments and their correlations with behaviour in the two cheating tasks measured in the laboratory.Footnote 20

3.2.1 List game

We start by analysing the correlation between cheating in the list game and over-reporting in the field. As the maximum amount by which a subject can over-report depends on their actual experimental earnings, cheating in the field is standardised as follows:

\[ {\textit{Cheat \,field}} = \frac{\text{Claimed earnings} - \text{Actual earnings}}{26 - \text{Actual earnings}} \]

Hence, this variable takes values in the interval [0, 1].Footnote 21 In other words, it measures how many pounds (£) are over-reported relative to the largest over-report a subject could make (£26 minus actual earnings).
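A minimal Python sketch of this standardisation, assuming the denominator is the largest possible over-report (£26 minus actual earnings) and that claims below actual earnings count as zero cheating; how the paper itself treats under-reports is not reproduced here. The function name `cheat_field` is hypothetical.

```python
MAX_CLAIM = 26  # maximum payoff a subject could self-report, in pounds

def cheat_field(claimed, earned, max_claim=MAX_CLAIM):
    """Over-reported amount as a share of the largest possible over-report.

    Assumption: under-reports are set to 0 so that the index stays in [0, 1].
    """
    if earned >= max_claim:
        return 0.0  # no room to over-report
    over = max(claimed - earned, 0)
    return over / (max_claim - earned)

# A subject who earned £12 and claims £19 uses half of the possible £14 over-report.
print(cheat_field(19, 12))
```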

Figure 4 presents the results for both field treatments and their relation with choices made in the list game. The vertical axis measures cheating outside the laboratory as defined in the previous equation. Thus, any observation above zero represents the extent of cheating in the field for a particular subject. The horizontal axis summarises the choices made by participants in the laboratory. Thus, from this graph it is possible to relate both the extensive and intensive margins of cheating between the list game and over-reporting of experimental earnings.

Comparison of cheating between the lab and the field for the NoFtF (left panel, \(n = 123\)) and FtF (right panel, \(n = 103\)) treatments with weighted markers. The smallest circles represent one single participant. The y-axis indicates the extent of cheating in the field. The x-axis represents the choices made in the list game

As the figure shows, the data do not support the generalisability of laboratory results on cheating in either field variation. First, in both cases, most participants refrain from over-reporting their experimental earnings. The percentage of cheaters drops from about 66% (54%) in the lab to slightly below 19% (5%) in the field in the NoFtF (FtF) treatment.Footnote 22 As expected, in the field variation with a weaker degree of anonymity (FtF), the fraction of participants who cheat is significantly lower (two-sided Fisher’s exact test: \(N=226\), \(p=0.002\)). The face-to-face interaction appears to raise the costs associated with lying, thereby reducing dishonest behaviour. A similar result is found in Conrads and Lotz (2015).
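Fisher’s exact test, used here and elsewhere in this section, can be computed from the hypergeometric distribution. The pure-Python sketch below follows the common two-sided convention of summing the probabilities of all tables with the same margins that are no more likely than the observed table (the convention used, for instance, by R’s `fisher.test`). The function name is hypothetical and no cell counts from the experiment are reproduced.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = comb(n, col1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x, given the margins
        return comb(row1, x) * comb(n - row1, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - (n - row1)), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are included
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))
```

For the treatment comparison, the rows would be NoFtF and FtF and the columns whether a subject over-reported in the field.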

Moreover, there appears to be no significant difference in the extent of cheating in the field between those who cheated in the list game and those who did not. The mean value of the \({\textit{Cheat \,field}}\) variable is 0.59 for both honest and dishonest participants in the NoFtF treatment. In the FtF variation, this value is 0.37 for those who were honest and 0.69 for those who lied. However, the low number of observations does not allow us to draw any reliable inference for this treatment.

-

Result 2: There is no significant correlation of cheating between choices in the list game and in the field.

-

Statistical support: Cheaters in the lab are not more likely to cheat in the field in either treatment variation (two-sided Fisher’s exact test: \(N=123\), \(p=0.809\) (NoFtF); \(N=103\), \(p=1.000\) (FtF)). The Spearman correlation between choices in the list game and \({\textit{Cheat \,field}}\) is 0.04 in NoFtF and 0.08 in FtF, and is not statistically significant in either field variation (two-sided test: \(N=123\), \(p=0.658\) (NoFtF); \(N=103\), \(p=0.375\) (FtF)).
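Because both variables take few distinct values (four list-game options and many zeros in the field measure), ties are frequent, so Spearman’s rho is best computed as the Pearson correlation of average ranks. The sketch below is a hypothetical pure-Python implementation of that standard definition; it computes the coefficient only, not the p-values reported above.

```python
def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman's rho as the Pearson correlation of average ranks (handles ties)."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        raise ValueError("Spearman's rho is undefined for a constant variable")
    return cov / (vx * vy) ** 0.5
```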

Table 1 confirms these results.Footnote 23

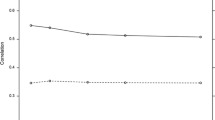

3.2.2 Die-roll game

In this section, we correlate behaviour between the lab and the field using choices made in the die-roll game. This replicates the exercise above, the main difference being that cheating cannot be detected at the individual level. For this reason, we label as cheaters all subjects who answered “Yes” in this mind game. Figure 5 depicts the correlation between choices in this task and the \({\textit{Cheat \,field}}\) variable for both the NoFtF and FtF treatments. With this measure, we do find a weak correlation of cheating in the treatment with no face-to-face interaction.

-

Result 3: There is a significant correlation between choices in the die-roll game and cheating in the field in the NoFtF treatment.

-

Statistical support: Participants who answer “Yes” are more likely to cheat in the field (two-sided Fisher’s exact test: \(N=123\), \(p=0.088\)). The Spearman correlation coefficient between Cheat field and Yes is 0.16 and significant at the 10% level (two-sided test: \(N=123\), \(p=0.070\)).Footnote 24

These results are also confirmed by Table 2: answering “Yes” in the die-roll game is associated with a 10% increase in the likelihood of cheating in the field.

Comparison of choices in die-roll mind game and cheating in the field for the NoFtF (left panel, \(n = 123\)) and FtF (right panel, \(n = 103\)) treatments with weighted markers. The smallest circles represent one single participant. The y-axis indicates the extent of cheating in the field relative to the maximum payoff a subject could claim. The x-axis represents the choices made in the mind game involving the die roll

The evidence from Fig. 5 and Table 2 contrasts with Result 2. Had we relied only on the die-roll measure, we might have reached the opposite conclusion, namely that cheating in the lab predicts cheating in the field.

This section correlated cheating between the lab and the field using two different measures. The results show that individual-level observations of cheating appear to be of paramount importance for understanding such secretive and subtle behaviour. This type of data can provide new insights that cannot be inferred from aggregate statistics.

4 Discussion

Dishonesty can be very sensitive to personal factors (Rosenbaum et al. 2014; Jacobsen et al. 2018), which in turn translates into heterogeneity in lying preferences (Gibson et al. 2013). The data show that cheating within and across the two environments is sensitive to individual preferences. Moreover, while both risk and social preferences are correlated with individual dishonesty in the list game (Table 3 in Appendix B), this does not seem to be the case for cheating in the field. Tables 1 and 2 show that only choices in the trust game are significantly correlated with dishonesty in the payment procedure.

Apart from heterogeneity in preferences, differences in dishonest behaviour might also hinge on the experimental paradigm (Gerlach et al. 2019). For example, while Gächter and Schulz (2016) find a positive correlation between a country-level corruption index and reports in die-roll tasks, no such effect is found using coin-flip tasks (Pascual-Ezama et al. 2015). Hence, differences between the laboratory and field tasks could be another source of variability in cheating.

First, it should be noted that, although all decisions in the lab are computerised, the self-reporting procedure adopted in the field requires participants to lie to the experimenter. Indeed, Cohn et al. (forthcoming) find that interacting with a human induces significantly less cheating than interacting with a machine. Hence, this difference in the communication channel might contribute to the results presented in Sect. 3. However, it cannot explain why subjects who were honest and those who were dishonest in the list game cheat to the same extent (on average) in the FtF treatment. The communication channel alone therefore does not seem to fully explain the main findings.

Another difference between the lab and field tasks might lie in the moral costs associated with cheating. While participants can lie about a random event in the list game, the self-reporting procedure requires them to cheat in the field by claiming a higher payment, i.e., by ‘stealing’ money. In the latter case, cheating may trigger higher moral costs than lying about an artificial outcome, resulting in more honest reports. Hermann and Mußhoff (2019) find that individuals are less willing to steal than to lie in a die-roll experiment. The higher moral costs implied by stealing could therefore partially explain the low number of subjects who over-reported their experimental earnings. However, this effect alone cannot fully explain the lack of correlation between the lab and the field presented in Result 2.

It is also possible that differences in dishonest behaviour depend on the time available to make a decision. While in the laboratory choices are made within a few minutes, this is not the case in the field: subjects could spend several days deciding whether to claim a higher payment. If more time to reflect on the possibility of lying reduces dishonest behaviour, this might explain why only a few subjects lied in the field. To the best of our knowledge, only Andersen et al. (2018) have studied the effect of time on cheating within the die-roll paradigm; they find no difference in dishonesty when participants are given an extra day to decide. In light of this finding, the results seem unlikely to be driven by the difference in the time available to make the decision.

Apart from individual preferences for honesty or differences between experimental paradigms, another explanation for Result 2 might rest on the experimental design as a whole.

As the reader might have noted, one’s willingness to claim a higher payment could depend on one’s actual laboratory choices. Subjects who cheat in the list game are more likely to obtain higher earnings and, in turn, might refrain from self-reporting a higher payment because of an income effect. By a similar argument, participants who remain honest in the lab might be more tempted to cheat in the field due to the higher stakes involved. Thus, we should expect a negative relation between laboratory earnings and over-reporting in the payment procedure.Footnote 25 Although only two randomly drawn parts were used to determine each subject’s payment, if this argument holds, it could explain why no correlation is found between the two environments.

However, field behaviour does not seem to depend on actual laboratory earnings. The coefficient of actual laboratory earnings in Tables 1 and 2 is not statistically significant at any conventional level. Although cheaters in the list game earned, on average, £2.8 (£1.3) more than honest participants in the NoFtF (FtF) treatment, these differences are relatively small. Therefore, the difference in potential gains from over-reporting between those who are honest and those who lie is small. Moreover, two recent meta-analyses find a weak effect of rewards on dishonesty, if any (Abeler et al. 2019; Gerlach et al. 2019). Thus, although the lab and field tasks are not perfectly independent, income effects and stake size do not seem to explain the results shown in the previous section.

This section examined some factors that might have driven the results presented in this paper. Although some of them can partially account for the main findings, none of them alone can fully explain the evidence presented in Sect. 3.2.

5 Conclusions

Even though laboratory experiments on cheating abound in the economic literature, only a few studies explore their generalisability to the field. This paper aims to relate a laboratory measure of cheating to dishonesty in a non-controlled environment within the same population. To this purpose, we develop a laboratory task that allows cheating to be observed at the individual level. Behaviour in the lab is then compared to choices in the field, where subjects have the possibility to cheat by over-reporting their experimental earnings. Payments are not issued immediately after the laboratory experiment. Instead, after a few days, participants are allowed to self-report their earnings to the experimenter, and they are paid the amount of money they claim to have earned. The laboratory data replicate established results such as lying aversion and non-payoff-maximising lies. However, according to our measure, no correlation of cheating between the lab and the field is observed. We then perform the same analysis using a laboratory task that measures cheating at the aggregate level. Using this measure, we do find a weak correlation between the two environments. It is not possible to pinpoint the drivers of these results. Yet it appears that only an interaction between individual preferences and contextual factors can account for the differences in cheating between the lab and the field.

Taken together, these findings underline the importance of being cautious when extending laboratory results regarding dishonesty outside a controlled environment.

Notes

Other existing laboratory tasks that allow individual-level observations of cheating are sender–receiver games (Gneezy 2005), variations of the die-roll task (e.g., Gneezy et al. 2018) and the matrix task (Mazar et al. 2008). However, sender–receiver games involve strategic interaction and, like the variations of the die-roll task, require the observability of lies to be common knowledge, with obvious consequences for dishonest behaviour. The matrix task, instead, requires participants to be explicitly deceived to collect individual-level observations of cheating.

The extensive margin corresponds to the fraction of people who lie, whereas the intensive margin corresponds to the extent of cheating for people who choose to do so.

Usually, in mind games, subjects must ‘predict’, in their mind, the outcome of a random device (e.g., a die roll). Then, they are asked to report whether their prediction was correct. They receive a reward if the answer is yes and nothing otherwise. See Jiang (2013), Potters and Stoop (2016) and Kajackaite and Gneezy (2017) for examples.

It is unlikely that subjects forget their colour. Even if they did, we would expect participants to randomise between the four options, but we find no evidence of this.

We designed our instructions carefully (see Appendix A). Participants are never told that the colours in the three lists do not appear in the first list, nor are they told the opposite; they simply receive no information on this matter. In this regard, our design is similar to other laboratory (e.g., Andreoni 1988; Gächter and Thöni 2005) and field (e.g., Bertrand and Mullainathan 2004; Das et al. 2016) studies that withhold information from participants.

The aim of the question is not to accurately measure subjects’ beliefs. Instead, it provides a rough check of whether participants understood that lying could be detected and, thus, whether our new laboratory task can be interpreted as a mind game. A different and more accurate scoring rule might have made cheating salient as the subject of the study, thereby affecting subsequent behaviour.

The role of the ‘reminder card’ is to ensure that subjects do not forget the amount of money they earned in the experiment.

Earnings were stored in the data, but they could not be linked to a subject’s identity.

This prevents behaviour in the lab from being affected by the subsequent field task.

Note that, as in the list game, participants were never told that it was possible to link lab-field choices. They received no information in this regard.

The purpose of this interval is twofold: (i) to bound the maximal payoff that a dishonest person could claim, and (ii) to minimise possible confounds due to strategic behaviour. For example, a person who earns £12 in the lab and is tempted to report £15 might question whether this payoff was actually earned by some other participant. If not, the lie would be caught immediately, undermining the decision to cheat. Knowing that payoffs are bounded and that the subject pool consists of at least 100 participants should minimise this issue.

Upon payment collection, subjects complete the receipt form left in their locker and leave it, along with the keys, in a separate letterbox together with those of other participants. This procedure ensures complete anonymity even after subjects are paid for their participation.

As in the NoFtF treatment, we adopted a procedure that guarantees that the payment forms can never be linked to participants’ identities.

As Cohn et al. (2019) show, returning a wallet is perceived as a civic honest act.

In detail, \(N=48\), \(p<0.001\) for the pair (£1, £3); \(N=136\), \(p<0.001\) for the pair (£3, £5); and \(N=110\), \(p<0.001\) for the pair (£1, £5).

We acknowledge that some subjects might have misunderstood the question and reported their belief of how many participants actually cheated. As we did not want to emphasise cheating as the subject of the study, no question eliciting this belief was asked.

Due to a computer fault in one session (after the list game had been played), choices in the trust game were not recorded for some subjects. Thus, the observations from that session have been removed when looking at the correlation between choices in the list game and the other laboratory tasks.

Note that the total number of observations used for the lab-field comparison is lower than the one used for the laboratory analysis. This is because, in the FtF treatment, 15 subjects either forgot to collect the payment or were not able to participate in the field experiment. In the NoFtF treatment, some answers in the trust game (played after the list game) were not recorded, and participants whose lab payment was determined by this task have been removed from the lab-field analysis. The conclusions presented in Sect. 3.1 do not change if these observations are fully removed from the whole analysis.

Actual lab earnings range between £5 and £19. Thus, the variable \({\textit{Cheat\, field}}\) is always defined. Further, no subject under-reported their earnings. On average, subjects actually earned £11.84 (SD 3.35) and £11.86 (SD 3.47) in the NoFtF and FtF treatments, respectively. A Mann–Whitney U test does not reject the hypothesis of equality (\(p = 0.897, N = 226\)).

Similar to this result, Gerlach et al. (2019) show in a meta-analysis that dishonesty is significantly more prevalent in lab experiments than in field studies.

In the FtF treatment, these two variables do not correlate: Fisher’s exact test yields \(p=0.649\), while the Spearman correlation coefficient is 0.08 with \(p=0.417\), \(N=103\).

Moral licensing or conscious accounting might generate the same effect but are less likely to play a role in explaining the main results. First, their effect might have been washed out by the dictator and the trust games. Second, the correlation found between the list game and the dice game works in the opposite direction.
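The two statistics reported in the note on the FtF treatment (Fisher’s exact test and Spearman’s correlation) can be computed without specialised software. The sketch below is illustrative only and is not the code used for the paper. It implements Spearman’s rho as the Pearson correlation of average ranks, which handles the many ties that arise when variables are binary, and a two-sided Fisher’s exact test for a 2×2 table that sums the probabilities of all tables with the same margins that are no more likely than the observed one. It does not compute the p-value of the Spearman coefficient, and the function names and data in the example are hypothetical.

```python
from math import comb


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):  # positions i..j share ranks i+1..j+1
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the average ranks.
    Undefined (division by zero) if either variable is constant."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


def fisher_exact_2x2(table):
    """Two-sided p-value of Fisher's exact test for [[a, b], [c, d]]."""
    (a, b), (c, d) = table
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x, given the margins.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance so that tables tied with the observed one are kept.
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))


# Hypothetical binary indicators (1 = cheated) for the same ten subjects.
lab = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]
field = [0, 0, 1, 1, 0, 0, 0, 1, 0, 0]
print(spearman_rho(lab, field))
print(fisher_exact_2x2([[6, 2], [1, 4]]))  # 176/1716, about 0.1026
```

Statistical packages may use different conventions for the two-sided Fisher p-value or for tie handling, so small discrepancies with their output are possible.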

References

Abeler, J., Becker, A., & Falk, A. (2014). Representative evidence on lying costs. Journal of Public Economics, 113, 96–104.

Abeler, J., Nosenzo, D., & Raymond, C. (2019). Preferences for truth-telling. Econometrica, 87(4), 1115–1153.

Al-Ubaydli, O., & List, J. A. (2013). On the generalizability of experimental results in economics: With a response to Camerer (Working Paper No. 19666). National Bureau of Economic Research.

Al-Ubaydli, O., List, J. A., & Suskind, D. L. (2017). What can we learn from experiments? Understanding the threats to the scalability of experimental results. American Economic Review, 107(5), 282–86.

Andersen, S., Gneezy, U., Kajackaite, A., & Marx, J. (2018). Allowing for reflection time does not change behavior in dictator and cheating games. Journal of Economic Behavior & Organization, 145, 24–33.

Andreoni, J. (1988). Why free ride? Strategies and learning in public goods experiments. Journal of Public Economics, 37(3), 291–304.

Ariely, D. (2012). The (honest) truth about dishonesty: How we lie to everyone - especially ourselves. UK: Harper Collins.

Bertrand, M., & Mullainathan, S. (2004). Are Emily and Greg more employable than Lakisha and Jamal? A field experiment on labor market discrimination. American Economic Review, 94(4), 991–1013.

Bock, O., Baetge, I., & Nicklisch, A. (2014). hroot: Hamburg registration and organization online tool. European Economic Review, 71, 117–120.

Bucciol, A., & Piovesan, M. (2011). Luck or cheating? A field experiment on honesty with children. Journal of Economic Psychology, 32(1), 73–78.

Burks, S. V., Carpenter, J. P., & Verhoogen, E. (2003). Playing both roles in the trust game. Journal of Economic Behavior & Organization, 51(2), 195–216.

Camerer, C. F. (2015). The promise and success of lab–field generalizability in experimental economics: A critical reply to Levitt and List. Handbook of Experimental Economic Methodology.

Cohn, A., Fehr, E., & Maréchal, M. A. (2014). Business culture and dishonesty in the banking industry. Nature, 516, 86–89.

Cohn, A., Gesche, T., & Maréchal, M. A. (forthcoming). Honesty in the digital age. Management Science.

Cohn, A., & Maréchal, M. A. (2018). Laboratory measure of cheating predicts school misconduct. The Economic Journal, 128(615), 2743–2754.

Cohn, A., Maréchal, M. A., & Noll, T. (2015). Bad boys: How criminal identity salience affects rule violation. The Review of Economic Studies, 82(4), 1289–1308.

Cohn, A., Maréchal, M. A., Tannenbaum, D., & Zünd, C. L. (2019). Civic honesty around the globe. Science, 365(6448), 70–73.

Conrads, J., & Lotz, S. (2015). The effect of communication channels on dishonest behavior. Journal of Behavioral and Experimental Economics, 58, 88–93.

Dai, Z., Galeotti, F., & Villeval, M. C. (2018). Cheating in the lab predicts fraud in the field: An experiment in public transportation. Management Science, 64(3), 1081–1100.

Das, J., Holla, A., Mohpal, A., & Muralidharan, K. (2016). Quality and accountability in health care delivery: Audit-study evidence from primary care in India. American Economic Review, 106(12), 3765–99.

DePaulo, B. M., Kashy, D. A., Kirkendol, S. E., Wyer, M. M., & Epstein, J. A. (1996). Lying in everyday life. Journal of Personality and Social Psychology, 70(5), 979–995.

Donnellan, M. B., Oswald, F. L., Baird, B. M., & Lucas, R. E. (2006). The Mini-IPIP scales: Tiny-yet-effective measures of the Big Five factors of personality. Psychological Assessment, 18(2), 192–203.

Drupp, M. A., Khadjavi, M., & Quaas, M. F. (2019). Truth-telling and the regulator. Experimental evidence from commercial fishermen. European Economic Review, 120, 103310.

Eckel, C. C., & Grossman, P. J. (2008). Forecasting risk attitudes: An experimental study using actual and forecast gamble choices. Journal of Economic Behavior & Organization, 68(1), 1–17.

Erat, S., & Gneezy, U. (2012). White lies. Management Science, 58(4), 723–733.

Falk, A., & Heckman, J. J. (2009). Lab experiments are a major source of knowledge in the social sciences. Science, 326(5952), 535–538.

Fischbacher, U. (2007). z-Tree: Zurich toolbox for ready-made economic experiments. Experimental Economics, 10(2), 171–178.

Fischbacher, U., & Föllmi-Heusi, F. (2013). Lies in disguise – an experimental study on cheating. Journal of the European Economic Association, 11(3), 525–547.

Gächter, S., & Schulz, J. F. (2016). Intrinsic honesty and the prevalence of rule violations across societies. Nature, 531, 496–499.

Gächter, S., & Thöni, C. (2005). Social learning and voluntary cooperation among like-minded people. Journal of the European Economic Association, 3(2/3), 303–314.

Gerlach, P., Teodorescu, K., & Hertwig, R. (2019). The truth about lies: A meta-analysis on dishonest behavior. Psychological Bulletin, 145(1), 1–44.

Gibson, R., Tanner, C., & Wagner, A. F. (2013). Preferences for truthfulness: Heterogeneity among and within individuals. American Economic Review, 103(1), 532–48.

Gneezy, U. (2005). Deception: The role of consequences. American Economic Review, 95(1), 384–394.

Gneezy, U., Kajackaite, A., & Sobel, J. (2018). Lying aversion and the size of the lie. American Economic Review, 108(2), 419–53.

Hanna, R., & Wang, S.-Y. (2017). Dishonesty and selection into public service: Evidence from India. American Economic Journal: Economic Policy, 9(3), 262–90.

Hermann, D., & Mußhoff, O. (2019). I might be a liar, but I am not a thief: An experimental distinction between the moral costs of lying and stealing. Journal of Economic Behavior & Organization, 163, 135–139.

Hübler, O., Menkhoff, L., & Schmidt, U. (2018). Who is cheating? The role of attendants, risk aversion, and affluence. DIW Berlin Discussion Paper No. 1736.

Jacobsen, C., Fosgaard, T. R., & Pascual-Ezama, D. (2018). Why do we lie? A practical guide to the dishonesty literature. Journal of Economic Surveys, 32(2), 357–387.

Jiang, T. (2013). Cheating in mind games: The subtlety of rules matters. Journal of Economic Behavior & Organization, 93, 328–336.

Kajackaite, A., & Gneezy, U. (2017). Incentives and cheating. Games and Economic Behavior, 102, 433–444.

Kessler, J. B., & Vesterlund, L. (2015). The external validity of laboratory experiments: Qualitative rather than quantitative effects. Handbook of Experimental Economic Methodology.

Levitt, S. D., & List, J. A. (2007). What do laboratory experiments measuring social preferences reveal about the real world? The Journal of Economic Perspectives, 21(2), 153–174.

Mazar, N., Amir, O., & Ariely, D. (2008). The dishonesty of honest people: A theory of self-concept maintenance. Journal of Marketing Research, 45(6), 633–644.

Mazar, N., & Ariely, D. (2006). Dishonesty in everyday life and its policy implications. Journal of Public Policy & Marketing, 25(1), 117–126.

Pascual-Ezama, D., Fosgaard, T. R., Cardenas, J. C., Kujal, P., Veszteg, R., Gil-Gómez de Liaño, B., & Brañas-Garza, P. (2015). Context-dependent cheating: Experimental evidence from 16 countries. Journal of Economic Behavior & Organization, 116, 379–386.

Pittarello, A., Rubaltelli, E., & Motro, D. (2016). Legitimate lies: The relationship between omission, commission, and cheating. European Journal of Social Psychology, 46(4), 481–491.

Potters, J., & Stoop, J. (2016). Do cheaters in the lab also cheat in the field? European Economic Review, 87, 26–33.

Rosenbaum, S. M., Billinger, S., & Stieglitz, N. (2014). Let’s be honest: A review of experimental evidence of honesty and truth-telling. Journal of Economic Psychology, 45, 181–196.

Slemrod, J. (2007). Cheating ourselves: The economics of tax evasion. Journal of Economic Perspectives, 21(1), 25–48.

Funding

Open access funding provided by Università degli Studi di Siena within the CRUI-CARE Agreement.


Friederike Mengel provided precious guidance, help and support. Special thanks to Agne Kajackaite and all the people met at WZB for their helpful comments. I also thank Ennio Bilancini and attendants at the AISC mid-term conference 2019, SABE/IAREP 2018, University of Essex internal seminar, and \(61^{a}\) Riunione Scientifica Annuale Società Italiana Economisti. This work was supported by the EssexLab seedcorn grants and the Economic and Social Research Council [Grant Number 1645890]. All errors are mine.

Appendices


A Experimental instructions

This section provides the experimental instructions for both the laboratory and the field.

2.1 General instructions

Welcome!

You are about to take part in a decision-making experiment. It is important that you do not talk to any of the other participants during the experiment. If you have a question at any time, raise your hand and an assistant will come to your desk to answer it. This experiment consists of five different parts and you will play each of them only once. You will receive detailed instructions for each part on your computer screen as the experiment progresses. In each part you will be asked to make one or more decisions. Decisions made in one part of the experiment will have no consequences for the other parts of the experiment. During the experiment your earnings will be calculated in pounds and you will have the chance to earn an amount of money that can range from £5 to £26. At the end of the main experiment you will have to complete a brief questionnaire. At the end of the experiment the computer will randomly select two parts for each participant. The sum of the earnings in these two selected parts will constitute your payment for this experiment. In addition to this money, we will pay you £4 for showing up today and £1 for completing the questionnaire. Your cash earnings will not be paid immediately. Instead, payments will be issued within a few days (from 23rd to 30th of November). You will receive further instructions about the payment procedure at the end of the experiment. If you have a question now, please raise your hand and a lab assistant will come to your workstation.
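The payment rule in these instructions (two of the five parts drawn at random, plus the £4 show-up fee and £1 for the questionnaire) can be sketched as a short function. This is a minimal illustration, not the software used in the sessions; it assumes the two selected parts are distinct, and the function name and per-part earnings in the example are hypothetical.

```python
import random

SHOW_UP_FEE = 4        # £4 for showing up (from the instructions)
QUESTIONNAIRE_FEE = 1  # £1 for completing the final questionnaire
N_PARTS = 5            # the experiment consists of five parts


def total_payment(part_earnings, rng=random):
    """Return (selected_parts, total_in_pounds) for one participant.

    part_earnings: earnings for each of the five parts (hypothetical values).
    Two distinct parts are drawn uniformly at random (an assumption) and
    their earnings are summed; the fixed fees are then added on top.
    """
    if len(part_earnings) != N_PARTS:
        raise ValueError("expected earnings for exactly five parts")
    selected = rng.sample(range(N_PARTS), 2)  # two distinct part indices
    experiment_pay = sum(part_earnings[i] for i in selected)
    return selected, experiment_pay + SHOW_UP_FEE + QUESTIONNAIRE_FEE


# Usage with made-up earnings; a seeded generator makes the draw reproducible.
parts, pay = total_payment([0, 5, 3, 6, 2], random.Random(42))
print(parts, pay)
```

Paying only a random subset of parts is a common random-incentive device; it keeps each part incentivised while limiting how much earnings in one part can shape behaviour in another.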

2.2 Instructions for the list game

You are about to play an easy game. On the next screen you will see a list of 60 colour names (e.g. tamarind). Once the list appears, a 5-second countdown will start, and the list will be displayed until the countdown reaches zero. Before the list disappears, you will have to choose one of the colour names in the list and keep it in mind. Then, three random lists containing 4 colour names each (12 colour names in total) will appear. If the colour you have in mind is in one of the lists, you will win the amount of money associated with that list, otherwise £0. After you click the OK button, the first list containing 60 colour names will be shown and the 5-second timer will start. Choose a colour in your mind before the timer reaches zero. Click the OK button to start.
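The individual-level detection logic of the list game can be sketched as follows. It relies on the design feature stated in the notes: the colours in the three short lists do not appear in the first list (participants are not told this), so any claim that one’s colour is in a list is a lie. The function checks the general condition (a claim can only be true if the reported list shares a colour with the first list). The mapping of rewards to lists is an assumption inferred from the (£1, £3, £5) pairs mentioned in the notes, and the function name and colour names are hypothetical.

```python
# ASSUMPTION: rewards attached to the three short lists, inferred from the
# (£1, £3, £5) pairs in the notes; their order across lists is hypothetical.
LIST_REWARDS = (1, 3, 5)


def classify_report(first_list, short_lists, reported_list):
    """Classify one list-game report.

    first_list: colour names shown during the 5-second window.
    short_lists: the three lists of four colour names shown afterwards.
    reported_list: index 0-2 of the list the subject claims contains their
        colour, or None if they report that no list contains it.
    Returns (certain_lie, claimed_reward).
    """
    if reported_list is None:
        return False, 0
    shown = set(first_list)
    # The claim can only be true if the reported list shares at least one
    # colour with the first list; otherwise it is a lie with certainty.
    could_be_true = any(colour in shown for colour in short_lists[reported_list])
    return (not could_be_true), LIST_REWARDS[reported_list]


first = [f"colour{i}" for i in range(60)]                            # 60 names shown for 5 s
short = [[f"other{4 * j + k}" for k in range(4)] for j in range(3)]  # 3 x 4 names, disjoint
print(classify_report(first, short, 2))     # (True, 5)
print(classify_report(first, short, None))  # (False, 0)
```

The check uses only the reported list and the first list, which is why a lie can be identified even though the experimenter never learns which colour the subject actually had in mind.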

2.3 Instructions for the dice game

In this part you will have to roll a fair die with 5 sides. Every side corresponds to a colour. Hence, every colour has a probability of 1/5 of coming up. This means that, in expectation, out of 100 rolls every colour will come up 20 times. Before rolling the die, you have to choose in your mind a colour from the ones displayed below. If the outcome of the roll is the same colour you thought of, you will earn £5, otherwise £0.
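Because the dice game reveals only the reported outcome, cheating can be assessed only at the aggregate level, by comparing the share of ‘yes’ reports with the 1/5 expected under honesty. A minimal sketch of this comparison follows, using an exact one-sided binomial test and a common estimator of the share of cheaters. The estimator assumes (our assumption, not stated in the instructions) that a cheater reports ‘yes’ whenever the roll does not match; the function names and counts in the example are hypothetical.

```python
from math import comb

P_MATCH = 1 / 5  # five equally likely colours on the die


def upper_tail(k, n, p):
    """Exact P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def aggregate_cheating(yes_reports, n):
    """Return (share of 'yes', estimated share of cheaters, one-sided p-value)."""
    share_yes = yes_reports / n
    # If a fraction c reports 'yes' whenever the roll does not match, then
    # P(yes) = 1/5 + (4/5) * c, hence c = (share_yes - 1/5) / (4/5).
    cheaters = (share_yes - P_MATCH) / (1 - P_MATCH)
    # H0: everyone is honest, so the number of 'yes' reports is Binomial(n, 1/5).
    p_value = upper_tail(yes_reports, n, P_MATCH)
    return share_yes, cheaters, p_value


# Hypothetical counts: 60 'yes' reports out of 100 participants.
print(aggregate_cheating(60, 100))
```

In small samples the estimated share of cheaters can be negative when fewer than one in five subjects report ‘yes’; it is a population-level estimate and says nothing about any single participant.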

2.4 Instructions for dictator game

In this part, the computer will randomly pair you with another participant. You will remain paired with this person for the whole duration of this part. Once the decisions are made, the pair will be dissolved. You, as well as the person you are paired with, will never learn the identity of each other. In this part, both you and the participant you are paired with will have to split the same amount of money between the two of you. Each of you simultaneously decides the amount to transfer to the other participant; hence, the decision of one participant is not observable by the other. The computer will then choose with equal probability which of the two decisions will be implemented. Your earnings from this part correspond to the money that you keep for yourself (if your choice is implemented) or to the money the other participant decides to transfer to you (if his/her choice is implemented).
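The random-implementation rule of this dictator game can be written as a short payoff function. The endowment is not stated in the instructions, so the £10 in the example is hypothetical, as are the function names.

```python
import random


def dictator_payoffs(endowment, transfer_a, transfer_b, rng=random):
    """Return (payoff_A, payoff_B) when both players choose a transfer
    and one of the two choices is implemented with probability 1/2."""
    for t in (transfer_a, transfer_b):
        if not 0 <= t <= endowment:
            raise ValueError("a transfer must lie between 0 and the endowment")
    if rng.random() < 0.5:
        # A's choice is implemented: A keeps the rest, B receives A's transfer.
        return endowment - transfer_a, transfer_a
    # B's choice is implemented: A receives B's transfer, B keeps the rest.
    return transfer_b, endowment - transfer_b


def expected_payoff(endowment, own_transfer, other_transfer):
    """Ex-ante expected payoff of a player, given both transfers."""
    return 0.5 * (endowment - own_transfer) + 0.5 * other_transfer


# Hypothetical £10 endowment: A transfers £3, B transfers £4.
print(dictator_payoffs(10, 3, 4, random.Random(0)))
print(expected_payoff(10, 3, 4))  # 5.5
```

Because a player's own choice counts only when it is implemented, every participant decides as a dictator, which yields one observation of generosity per subject.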

2.5 Instructions for the lottery choice

In this part, you will have to choose between five options. You will be paid based on the option you choose. Each option involves a simple lottery with two equally likely outcomes. Hence, every lottery returns each of its two amounts with 50% probability.

2.6 Instructions for the trust game

In this part, the computer will randomly pair you with another participant. You will remain paired with this person for the whole duration of this part. Once the decisions are made, the pair will be dissolved. You, as well as the person you are paired with, will never learn the identity of each other. There are two types of player in this part: a sender and a receiver. You will play both roles: first as a sender and then as a receiver. Each person will be allocated the same amount of £X. First, each of you will simultaneously decide as if you were the sender. As a sender, you will have the opportunity to send some of the £X to the other person (the receiver). Each pound sent to the receiver will be tripled. Thus, if the sender sends £x, the other player will receive £3x. Then, without observing the choice of the other sender, you will be asked to decide as if you were the receiver. You will have to decide how much money to send back to the sender for every possible amount of money that you can receive. Once the decisions are made, the computer will choose with equal probability which member of the pair is the sender and which is the receiver, implementing the corresponding choices. The earnings of the sender from this part will correspond to the part of the £X endowment he/she keeps plus the money returned by the receiver. The earnings of the receiver from this part will correspond to the £X endowment, plus three times the transfer from the sender, minus the money returned to the sender.
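The payoff rules of this trust game, with both roles elicited before roles are assigned at random, can be sketched as follows. The endowment £X is kept as a parameter. The restriction that the receiver returns between zero and the tripled amount received is our assumption, and all function names and numbers in the example are hypothetical.

```python
import random

MULTIPLIER = 3  # each pound sent to the receiver is tripled


def trust_payoffs(endowment, sent, return_rule):
    """Return (sender_payoff, receiver_payoff).

    sent: amount the sender transfers out of the endowment.
    return_rule: the receiver's strategy-method choice, a function mapping
        each possible amount received to the amount sent back.
    """
    if not 0 <= sent <= endowment:
        raise ValueError("the sender can send between 0 and the endowment")
    received = MULTIPLIER * sent
    returned = return_rule(received)
    if not 0 <= returned <= received:  # assumption: cannot return more than received
        raise ValueError("the amount returned must lie between 0 and the amount received")
    sender = endowment - sent + returned
    receiver = endowment + received - returned
    return sender, receiver


def play_pair(endowment, choices_a, choices_b, rng=random):
    """choices_x = (amount sent as sender, return rule as receiver).
    Roles are assigned with equal probability; returns (payoff_A, payoff_B)."""
    if rng.random() < 0.5:  # A is the sender, B the receiver
        return trust_payoffs(endowment, choices_a[0], choices_b[1])
    sender_b, receiver_a = trust_payoffs(endowment, choices_b[0], choices_a[1])
    return receiver_a, sender_b


# Hypothetical £10 endowment: A sends £5 and returns half of what it receives;
# B sends nothing and returns nothing.
a = (5, lambda r: r / 2)
b = (0, lambda r: 0)
print(trust_payoffs(10, 5, lambda r: r / 2))  # (12.5, 17.5)
print(play_pair(10, a, b, random.Random(0)))
```

Eliciting a full return rule (the strategy method) means every participant provides a complete receiver decision, whichever role the computer eventually assigns.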

2.7 Documents

2.7.1 Consent forms

See Fig. 6.

2.7.2 Payment forms

See Fig. 7.

Payment forms for the NoFtF (left) and FtF (right) treatments. The unique and hidden code that characterizes each form is given by the combination of the number of dots in the “Payment date” and “Total earnings” fields (subject id) and the length of the line below the email address (session id). To prevent copies, a university logo was stamped in the bottom right corner of the paper sheets.

B Additional results

This section presents additional results and focuses on the relation between behaviour in the list game and the other laboratory tasks. As described in Sect. 2, other individual attitudes, such as risk preferences, individual greed, and trust, were also elicited during the laboratory sessions. Table 3 shows how behaviour in these tasks correlates with cheating. The first three columns report linear average effects on choices in the list game, while specifications 4–6 report marginal effects on a dichotomous variable that takes value one if a subject lied in the same task. The variable Yes represents the report made in the die-roll game. The variable Risk corresponds to the lottery chosen in Part 5 and takes integer values from one (the safe option) to five, with higher numbers associated with higher risk. The last two variables, Transfer dictator and Transfer trust, correspond to the money sent to the receiver in the dictator and trust games, respectively.Footnote 26

Similar to what is found in Hübler et al. (2018), it seems that participants who are more willing to choose risky lotteries are also more likely to lie. As dishonesty strongly depends on the perceived risk of being exposed as a liar (Gneezy et al. 2018), it is reasonable to assume that individuals who are more prone to cheat are also more willing to bear the associated risk.

Focusing on the variables Transfer dictator and Transfer trust, both are inversely related to cheating, although the coefficient on the amount of money sent in the dictator game is not significant in any of the probability models. This pattern points to a relation between social preferences and dishonesty: participants who are more generous or more trusting cheat, on average, by a lower amount and less frequently. This suggests that individuals with stronger social preferences are also those who attach high value to social norms or, in this particular case, honesty.

As for how cheating relates to the demographic covariates elicited in the final questionnaire, no particular effect is found. Tables 6 and 7 (Appendix C) show no robust and significant pattern for any of the individual demographics or personality traits.

C Additional tables

D Screenshots

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Albertazzi, A. Individual cheating in the lab: a new measure and external validity. Theory Decis 93, 37–67 (2022). https://doi.org/10.1007/s11238-021-09841-0

