Abstract

Can an agent deliberating about an action A hold a meaningful credence that she will do A? ‘No’, say some authors, for ‘deliberation crowds out prediction’ (DCOP). Others disagree, but we argue here that such disagreements are often terminological. We explain why DCOP holds in a Ramseyian operationalist model of credence, but show that it is trivial to extend this model so that DCOP fails. We then discuss a model due to Joyce, and show that Joyce’s rejection of DCOP rests on terminological choices about terms such as ‘intention’, ‘prediction’, and ‘belief’. Once these choices are in view, they reveal underlying agreement between Joyce and the DCOP-favouring tradition that descends from Ramsey. Joyce’s Evidential Autonomy Thesis is effectively DCOP, in different terminological clothing. Both principles rest on the so-called ‘transparency’ of first-person present-tensed reflection on one’s own mental states.



1 Introduction

Can an agent hold a meaningful credence about a contemplated action, as she deliberates? Can she believe that it is, say, 70% probable that she will do A, while she chooses whether to do A? Following Spohn (1977) and Levi (1989, 1996), some writers claim that such ‘act credences’ are problematic, or even incoherent—deliberation crowds out prediction (DCOP), as Levi puts it. Some writers take DCOP to be almost a platitude;Footnote 1 others (e.g., Ahmed 2014; Joyce 2002; Rabinowicz 2002) think that the case for it is weak, or that it is clearly false.

Another recent critic of DCOP is Hájek (2016). In Liu and Price (2018), we argue, contra Hájek, that DCOP is a special case of the so-called ‘transparency’ of first-person present-tensed reflection on one’s own mental state. If someone asks me whether I believed yesterday that it would rain today, I consider my evidence (e.g., from memory) about what I believed yesterday. If someone asks me whether I believe that it is raining now, I don’t consider my present state of belief at all, or at least not directly. I simply consider whether it is raining. My enquiry ‘looks through’ the question about belief, to a question about what the belief in question is itself about. This ‘looking through’ is transparency.

Moran (2001) explains transparency in terms of a distinction between two paths to knowledge of one’s own mental state—a theoretical path, which is the one I use to discover what I believed yesterday (or what another person believes); and a deliberative path, where I learn that I now believe that it is raining by asking myself whether it is raining (and concluding that it is). As we gloss Moran’s conclusion, transparency rests on the fact that the deliberative path ‘crowds out’ the theoretical path. The practical case of this is simply DCOP, or so we argue.

Or rather, it is a version of DCOP, for our conclusion comes with a crucial qualification. Several of the relevant terms in this debate, including ‘credence’ itself, turn out to be ill-defined. Hence authors may seem to be disagreeing about DCOP, but simply be using terms in different ways. We give examples of how this can happen, and how DCOP may properly be said to fail, if terms are used differently.

In the present paper we apply these two lessons (the prevalence of terminological disagreements, and the relevance of transparency) to central parts of the literature about DCOP. We focus in particular on the work of Jim Joyce, who presents himself as a strong opponent of DCOP (especially as advocated by Levi). We defend two conclusions. First, Joyce’s disagreement with Levi is essentially terminological. It turns on the fact that Joyce uses terms such as ‘intention’, ‘prediction’, and ‘belief’ in a different way from Levi and other supporters of DCOP. Second, Joyce himself is committed to a principle which, like the version of DCOP we defend in Liu and Price (2018), is a consequence of transparency. Terminological differences aside, Joyce turns out to be a friend of DCOP, on its most interesting and plausible reading.

Given our terminological concerns, we must be careful not to speak of the DCOP thesis. There are several theses on offer, varying in what is meant by various crucial terms, including ‘credence’ itself. With this in mind, we begin with one well-known framework for understanding credence, the classical subjective decision theory (SDT) of writers such as Ramsey and Savage. We point out that within this model there is a clear basis for a DCOP-like thesis. This observation is not new, but it is not as well-known as it should be, and we don’t know of any previous writers who put its significance into the broader context that we offer here.Footnote 2

As we explain, however, there are other models of credence that do admit act credences. We give a simple example, and then explore a sophisticated model proposed by Joyce (2002, 2007). Joyce presents himself as an opponent of DCOP. Comparing Joyce to Ramsey, however, we shall see that the main difference is that Joyce treats as belief-like some components of the decision process that for Ramsey simply live in a different box altogether—in the intention box, rather than the belief box. Both sides agree that there are such items, and that they can have some of the formal properties of credences—a degree and a propositional content. The disagreement, to the extent that there is one, is about whether they deserve to be called ‘beliefs’; and this is largely a terminological matter. (Joyce agrees with Ramsey that there are no act credences in Ramsey’s sense during deliberation.) Joyce’s disagreement with Levi has the same terminological character.

We don’t deny that there is room for argument about the terminological matter in question (i.e., whether to treat intentions as a special kind of belief). But disagreement about this matter should not be allowed to obscure a deeper point of agreement between the two models. This point of agreement is what Joyce terms the Evidential Autonomy Thesis (EAT): “[A] rational agent, while in the midst of her deliberations, is in a position to legitimately ignore any evidence she might possess about what she is likely to do,” (2007, 556–557) as Joyce puts it.

As we shall see, EAT turns out to be more fundamental than DCOP, while embodying much of what recommends DCOP to its proponents. And it, too, turns out to rest on transparency. We thus offer reconciliation in two senses. Not only is Joyce’s disagreement with DCOP much shallower than he and others have assumed, but there is wide agreement on a fundamental and closely-related feature of agency—a feature already on the table in Ramsey, and now well articulated in Moran’s work on transparency.

2 Ramsey’s operational model of credence

Modern subjectivists understand credence, or ‘subjective probability’, in terms of its role in rational decision making. For our purposes, we want to think of this approach as providing a functional definition of credence—in effect, credence is treated as a theoretical notion, which is operationally defined, along with subjective utility, in terms of its role in producing certain specified choices. The idea of formalising the notion of credence, or degree of belief, in this way goes back to Frank Ramsey’s ground-breaking work ‘Truth and Probability’ (Ramsey 1926).Footnote 3

Ramsey sets out to investigate what he calls “the logic of partial belief,” and to treat such a logic as the basis for an understanding of probability. He notes a large obstacle in the path of this project:

It is a common view that belief and other psychological variables are not measurable, and if this is true our inquiry will be vain; and so will the whole theory of probability conceived as a logic of partial belief; for if the phrase ‘a belief two-thirds of certainty’ is meaningless, a calculus whose sole object is to enjoin such beliefs will be meaningless also. Therefore unless we are prepared to give up the whole thing as a bad job we are bound to hold that beliefs can to some extent be measured. (166)

But how to measure degrees of belief? Ramsey says that there are two possibilities. The first, which he dismisses, is that “the degree of a belief is something perceptible by its owner; for instance that beliefs differ in the intensity of a feeling by which they are accompanied”. He argues instead for the second possibility: “that the degree of a belief is a causal property of it, which we can express vaguely as the extent to which we are prepared to act on it”. This is the idea—a functionalist view of degree of belief, as we would now call it—that he then proceeds to develop with characteristic alacrity.

In taking this course, Ramsey is guided by what he calls “the old-established way of measuring a person’s belief,” which is “to propose a bet and see what are the lowest odds which he will accept.” Ramsey finds this method “fundamentally sound”, apart from some deficiencies due to features such as the diminishing marginal utility of money and an agent’s possible disdain for gambling, which can nonetheless be dealt with by stipulating a series of postulates in the formal model. More precisely, Ramsey considers an agent who chooses among gambles of the form

\(\alpha \) if p, \(\beta \) if not-p,

where p is a proposition and \(\alpha ,\beta \) are “goods” that the agent values. The gamble is understood in the usual sense: in accepting this gamble the agent gets \(\alpha \) if p is true, \(\beta \) otherwise. For instance, let p be “The next toss of this coin lands heads” and let \(\alpha \) and \(\beta \) be some monetary rewards (or penalties). An agent who accepts this gamble gets \(\alpha \) if the coin lands heads, \(\beta \) otherwise.

For notational convenience, let us write \(G(p,\alpha ,\beta )\) for the gamble that pays \(\alpha \) if p, \(\beta \) if \(\lnot p\). In Ramsey’s approach, we think of life as continually presenting us with options of this kind. As he puts it, his model

is based fundamentally on betting, but this will not seem unreasonable when it is seen that all our lives we are in a sense betting. Whenever we go to the station we are betting that a train will really run, and if we had not a sufficient degree of belief in this we should decline the bet and stay at home. (183, emphasis added)

Agents are thought of as choosing to accept or reject a given bet, or choosing among different bets, based on their degree of belief, or credence, in p and the utilities they assign to \(\alpha \) and \(\beta \).

The agent is assumed to have preferences among gambles of this form. Then, provided that this preference relation among gambles satisfies a set of coherence axioms, the system yields a probability function P and a utility function U (unique up to a positive linear transformation) such that the “ultimate good” of accepting gamble \(G(p,\alpha ,\beta )\) can be represented by its expected utility, that is:

\[U(G(p,\alpha ,\beta )) = P(p)\,U(\alpha ) + (1-P(p))\,U(\beta ).\]

Ramsey’s theory marks the beginning of a long and fruitful development of probabilistic subjectivism, an effort made by generations of writers. Philosophically, this approach is motivated by the pragmatic thesis that probability is to be understood in terms of the rational decision making that we, qua real-world agents, strive to achieve on a day-to-day basis. Methodologically, it retains at its core Ramsey’s operationalised model of personal probabilities (credences) and utilities. Mathematically, it is built with rigorous representation theorems by means of which probabilities can be numerically defined.Footnote 4

For our purposes, what matters is that Ramsey’s model gives us an account of what it is to hold a credence, or degree of confidence, in a particular proposition. The answer, holistically generated across a space of propositions, in harmony with a simultaneous definition of utility, consists in a disposition to choose certain gambles in preference to others.
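The idea that a credence is read off a choice can be sketched numerically. The Python sketch below is only an illustration under simplifying assumptions that are not part of Ramsey’s own construction: the agent’s utilities for \(\alpha \) and \(\beta \) are taken as already known, and she is assumed to be indifferent between the gamble \(G(p,\alpha ,\beta )\) and some good c received for certain. The function names are hypothetical. Solving the expected-utility equation above for P(p) then yields her credence.

```python
from fractions import Fraction


def expected_utility(credence_p, u_alpha, u_beta):
    """Expected utility of G(p, alpha, beta): alpha if p, beta if not-p."""
    return credence_p * u_alpha + (1 - credence_p) * u_beta


def credence_from_indifference(u_alpha, u_beta, u_certain):
    """Solve U(c) = P(p) U(alpha) + (1 - P(p)) U(beta) for P(p).

    Assumes the agent is indifferent between G(p, alpha, beta) and a
    good c received for certain, and that U(alpha) != U(beta).
    """
    if u_alpha == u_beta:
        raise ValueError("the gamble must discriminate between p and not-p")
    return Fraction(u_certain - u_beta) / (u_alpha - u_beta)


# alpha is worth 10 utils, beta 0, and the agent would swap the gamble
# for a sure 7 utils; her credence in p is then 7/10.
r_p = credence_from_indifference(10, 0, 7)
print(r_p)                           # 7/10
print(expected_utility(r_p, 10, 0))  # 7
```

Every quantity here is fixed by a choice the agent makes: the credence is whatever value makes that choice come out as expected-utility maximising.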

3 Can Ramsey’s model make sense of act credences?

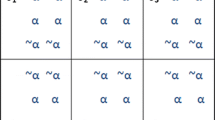

Ramsey says at one point that the agent is assumed to have “certain opinions about all propositions” (174). This cannot be quite right, however, for there is an important class of propositions to which his model cannot assign non-trivial credences. To see this, consider an agent whose current options include the following gamble:

\(A=G(p,\alpha ,\beta )\)

What would it take, in Ramsey’s system, for this agent to have a credence in whether she will accept A, as she decides whether or not to do so? The answer is that the agent would need to include, in her ranked suite of possible actions, gambles of the form:

\(B=G(\hbox { I accept}\ A , \gamma , \delta )\)

For this is the kind of gamble that is relevant to determining whether she has some particular degree of belief in the proposition that she will accept A.

A gamble of the form B is quite unproblematic if it is considered as a measure of the agent’s degree of belief about whether she accepts A on some other occasion (a future occasion, or even a past occasion, if we allow that the agent may have forgotten whether she accepted A at some point in the past). But B makes no sense—or at least, no sense as a measure of credence—as she decides whether to accept A.

Note that in a context in which an agent is considering gamble A, offering her B simply adds to whatever is already at stake a fixed amount of \(\gamma \) or \(\delta \), available to the agent for certain, depending on whether she accepts A. This may give us some information about the agent’s psychological state—in the limit, as \(\gamma \) and \(\delta \) are allowed to be large enough to dominate other considerations, it certainly tells us whether she prefers \(\gamma \) to \(\delta \), or vice versa (and therefore deprives us of the information that the choice would otherwise provide about other matters). But it tells us nothing about any credence on the agent’s part about whether she will do A, as she makes her choice.

To be more precise, consider, for simplicity, the case where the agent has only two gambles to choose from, namely \(A=G(p,\alpha ,\beta )\) and \(B=G(\hbox { I accept}\ A , \gamma , \delta )\) as formulated above.Footnote 5 Now suppose that the agent’s credence in proposition p is \(r_p\) and, for reductio, that her credence in ‘I accept A’ is \(r_A\). Then, given her options, the possible outcomes of her choices are \( A\, \& \,B\), \( \lnot A\, \& \, B\), \( A\, \& \,\lnot B\), and \( \lnot A\, \& \,\lnot B\) (see the table below, where ‘\( A\, \& \, \lnot B\)’ reads “I accept A but reject B,” and so on).

| Act | Utility value |
|---|---|
| \( A\, \& \,B\) | \( \gamma + EU(A)=\gamma + [r_p\alpha + (1-r_p)\beta ]\) |
| \( \lnot A\, \& \,B\) | \(\delta \) |
| \( A\, \& \, \lnot B\) | \(EU(A)=r_p\alpha + (1-r_p)\beta \) |
| \( \lnot A\, \& \,\lnot B\) | status quo |

It is plain that, in this case, the decision problem reduces to a simple choice among the four outcomes of her actions, and the agent should simply choose the outcome of greatest utility. But such choices tell us nothing about any credence on the agent’s part about whether she will do A: the act credence \(r_A\) has nothing to do with the situation.
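The table can be checked mechanically. The Python sketch below uses hypothetical function names and illustrative numbers; like the table, it treats \(\gamma \), \(\delta \) and EU(A) as additive utilities, and it assumes (our simplification) that the status quo is worth 0. The act credence \(r_A\) appears nowhere in the computation: the best combination of choices is fixed by \(r_p\) and the payoffs alone.

```python
from fractions import Fraction
from itertools import product


def outcome_utility(accept_a, accept_b, r_p, alpha, beta, gamma, delta,
                    status_quo=0):
    """Utility of one row of the table (A & B, not-A & B, and so on)."""
    eu_a = r_p * alpha + (1 - r_p) * beta  # EU(A) for A = G(p, alpha, beta)
    if accept_b:
        # B = G(I accept A, gamma, delta) is settled by the agent's own
        # choice about A, so it acts as a fixed reward for that choice.
        return gamma + eu_a if accept_a else delta
    return eu_a if accept_a else status_quo


def best_combination(r_p, alpha, beta, gamma, delta):
    """Return the (accept A?, accept B?) pair with the highest utility."""
    rows = {
        (a, b): outcome_utility(a, b, r_p, alpha, beta, gamma, delta)
        for a, b in product([True, False], repeat=2)
    }
    return max(rows, key=rows.get)


half = Fraction(1, 2)  # r_p = 1/2, so EU(A) = 5 when alpha = 10, beta = 0
print(best_combination(half, 10, 0, 3, 1))    # (True, True)
print(best_combination(half, 10, 0, 0, 100))  # (False, True)
```

In the second call \(\delta \) is large enough to dominate, and the agent declines A merely to collect \(\delta \): the choice reveals a preference, not a credence about her own act.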

We could put the point like this. At the heart of Ramsey’s model is a (formalised) notion of choice. Agents are assumed to have unrestricted access to a range of options—a range of gambles, each of which they may either accept or decline. Think of this as like a bank of toggle switches: for each switch, the agent is free to set it either on or off. The beauty of the model is to choose the gambles so that the resulting pattern of switch settings reveals the agent’s credences over a range of propositions.

Beautiful as it is, this machinery cannot make sense of an assignment of a credence concerning one of the switch settings. The chosen switch settings are the ‘observables’ of the model, on view to the agent concerned as much as to a third party. Until they are fixed, the entire model tells us nothing about the theoretical variables it takes to be underneath (i.e., the agent’s credences and utilities); but once they are fixed, there is no room in the model for uncertainty about them.

As we saw, the attempt to add a new switch representing a gamble conditional on one of the existing switch settings simply becomes a new reward for the choice of that switch setting. In these circumstances the agent’s choice tells us something about their preferences,Footnote 6 but nothing new of an epistemic nature. Where agents make their own truth, choices that would otherwise reflect degrees of uncertainty have no such significance—and there is simply no substitute, within Ramsey’s model.
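The switch-bank picture can be sketched in the same spirit. In this hypothetical Python illustration, switch i is a bet paying 1 util if p and 0 otherwise, offered at a price of prices[i] utils, in the manner of Ramsey’s “lowest odds” test. We assume utility is linear in these stakes and that the agent turns a switch on exactly when her credence exceeds its price (the tie-breaking rule is our assumption). The observed settings then bracket her credence; nothing in the construction yields a switch whose setting could measure her credence about the settings themselves.

```python
from fractions import Fraction


def switch_settings(credence_p, prices):
    """Turn each switch on iff the bet on p is worth more than its price."""
    return [credence_p > price for price in prices]


def credence_bounds(prices, settings):
    """Return the highest accepted and lowest declined prices.

    The credence exceeds every accepted price and is at most every
    declined price, so these two values bracket it.
    """
    accepted = [pr for pr, on in zip(prices, settings) if on]
    declined = [pr for pr, on in zip(prices, settings) if not on]
    return max(accepted, default=0), min(declined, default=1)


prices = [Fraction(i, 10) for i in range(1, 10)]  # 0.1, 0.2, ..., 0.9
settings = switch_settings(Fraction(7, 10), prices)
print(credence_bounds(prices, settings))  # (Fraction(3, 5), Fraction(7, 10))
```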

It is clear that Ramsey recognised this distinction between epistemic matters, on the one hand, and practical matters—things that are up to us—on the other. In a later piece he says this, for example:

When we deliberate about a possible action, we ask ourselves what will happen if we do this or that. If we give a definite answer of the form ‘If I do p, q will result,’ this can properly be regarded as a material implication or disjunction ‘Either not-p or q.’ But it differs, of course, from any ordinary disjunction in that one of its members is not something of which we are trying to discover the truth, but something it is within our power to make true or false. (Ramsey 1929, 142, emphasis added)

A few lines later, Ramsey again emphasises the non-epistemic character of our relation to propositions concerning our present options:

Besides definite answers ‘If p, q will result’, we often get ones ‘If p, q might result’ or ‘q would probably result’. Here the degree of probability is clearly not a degree of belief in ‘Not-p or q’, but a degree of belief in q given p, which it is evidently possible to have without a definite degree of belief in p, p not being an intellectual problem.Footnote 7 (142, emphasis added)

Finally, Ramsey also notes that the lacuna in the agent’s credences concerns only her present actions—matters currently ‘up for decision’, as we might say. As his footnote puts it:

It is possible to take one’s future voluntary action as an intellectual problem: ‘Shall I be able to keep it up?’ But only by dissociating one’s future self. (142)

To summarise, we have shown that there is no room in Ramsey’s model for credences for currently-contemplated gambles, or actions. In other words, DCOP holds, within Ramsey’s model, and subject to the restriction to present actions. So, success of a kind for DCOP, but both qualifications are essential. We have just seen that Ramsey himself allows ‘remote’ act credences; and, as we shall shortly explain, it is a trivial matter to extend Ramsey’s model so that it allows present act credences—i.e., so that DCOP fails completely.Footnote 8

4 From Ramsey to Joyce

As we saw, the incoherence of act credences in Ramsey’s model is related to the objection, often cited in favour of DCOP, that there is something deeply problematic about offering an agent bets on her own actions. This objection is discussed in a classic paper by Rabinowicz (2002), himself an opponent of DCOP. Rabinowicz concedes that there is some merit to the argument, but suggests that it doesn’t establish as much as the proponents of DCOP require:Footnote 9

In those cases when bet offers themselves would influence our probabilities for the events on which the bets are made, probabilities no longer are translatable into betting dispositions. This does not mean, however, that probability estimates are impossible to make in cases like this. The correct conclusion is rather that the connection between probabilities and betting rates is not as tight as one might initially be tempted to think. (Rabinowicz 2002, 110)

We agree with Rabinowicz, if we interpret him simply as noting the possibility of models that extend the Ramsey framework, to allow the existence of act credences in circumstances in which the original framework does not.Footnote 10

Indeed, the point is a rather obvious one, for here is a simple way to construct such an extension. Imagine that our agent carries in her pocket a Personal Digital Assistant, Siri, who attempts to maintain a dynamic assignment of probabilities to a range of the agent’s possible future actions.Footnote 11 Now let our hybrid model use the agent’s own credences, where available, and Siri’s, where not. This hybrid model can certainly assign credences to the agent’s presently-contemplated actions (credences originating in Siri), even though the agent’s own Ramseyian model cannot.
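The hybrid model amounts to a lookup with a fallback. A minimal Python sketch, using the standard library’s collections.ChainMap; the proposition labels and numbers are invented for illustration.

```python
from collections import ChainMap

# The agent's own (Ramseyian) credences: nothing about her present options.
agent_credences = {"it will rain": 0.3}

# Siri's predictions, including one about the agent's present choice.
siri_credences = {"it will rain": 0.5, "I accept A": 0.7}

# Lookups consult the agent's credences first, then Siri's.
hybrid = ChainMap(agent_credences, siri_credences)

print(hybrid["it will rain"])  # 0.3, the agent's own credence
print(hybrid["I accept A"])    # 0.7, supplied by Siri
```

The act credence in the hybrid comes from a second predictor, not from anything in the agent’s own choice dispositions, which is why the extension is trivial.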

This trivial example makes a serious point. Anyone in these debates who, unlike us, takes themselves to be discussing DCOP as a thesis about agents simpliciter, rather than as about agents modelled in some particular way, would do well to ask themselves what they mean by an ‘agent’. Without a well-motivated restriction of the field, it will be trivially true that some agents do satisfy DCOP and others do not, so that there is no general thesis to be had.

Are there non-trivial reasons for entertaining models that modify Ramsey’s framework so as to admit act credences? Certainly, and to illustrate the point we shall now turn to two motivations offered by Joyce. Joyce is a particularly interesting case, from our point of view. He is a strong advocate of DCOP-violating models, but he makes moves within them that have much in common with some of the key insights of those who favour DCOP. This offers the interesting prospect that we might be able to identify an important generic feature of agency, common to DCOP-respecting and DCOP-violating models, and itself of considerably more interest than the choice between such models. As we shall see, this possibility turns on the fact that Joyce is not so much extending Ramsey’s model, as in the Siri case, but relabelling it, by treating as ‘belief-like’ some elements that are present in a Ramseyian model, but classified in a different way.

4.1 Joyce on the role of act credences

Characterising his own version of Causal Decision Theory (CDT), Joyce notes that it “requires deliberating agents to make predictions about their own actions” (Joyce 2002, 69). He notes that Levi maintains that such a decision theory is “incoherent because ‘deliberation crowds out prediction.’” In response, Joyce defends the following conclusion:

[T]he ability of a decision maker to adopt beliefs about her own acts during deliberation is essential to any plausible account of human agency and freedom. While Levi suggests that a deliberating agent cannot see herself as free with respect to acts she tries to predict, precisely the reverse is true. Though they play no part in the rationalization of actions, such beliefs are essential to the agent’s understanding of the causal genesis of her behavior. (70)

Joyce thus presents himself as an opponent of DCOP. However, he provides a helpful summary of arguments in favour of DCOP—as he puts it, “some general worries that one might have about letting agents assign probabilities to their own acts”:

Worry-1 Allowing act probabilities might make it permissible for agents to use the fact that they are likely (or unlikely) to perform an act as a reason for performing it.

Worry-2 Allowing act probabilities might destroy the distinction between acts and states that is central to most decision theories.

Worry-3 Allowing act probabilities “multiplies entities needlessly” by introducing quantities that play no role in decision making. (79)

In each case, Joyce expresses sympathy for the concern, but argues that DCOP is not required in order to meet it. Thus:

As to Worry-1, I entirely agree that it is absurd for an agent’s views about the advisability of performing any act to depend on how likely she takes that act to be. Reasoning of the form “I am likely (unlikely) to A, so I should A” is always fallacious. While one might be tempted to forestall it by banishing act probabilities altogether, this is unnecessary. We run no risk of sanctioning fallacious reasoning as long as A’s probability does not figure into the calculation of its own expected utility, or that of any other act. (79–80)

Note that Ramsey’s model does not allow anything other than “banishing act probabilities altogether”, as Joyce puts it here. In effect, Joyce is agreeing that self-referential gambles—i.e., gambles with descriptions that refer to how likely they themselves are to be accepted or rejected—are incoherent, and proposing to understand ‘credence’ so that act credences do not commit us to such gambles. But for Ramsey there is no such option—credences are defined in terms of gambles, and in the case of act credences that would require the kind of self-referential gambles that Joyce agrees to be absurd. (It is not clear that the absurdity Joyce has in mind is precisely the one we identified in §3—arguably, it can’t be, for Joyce takes it to obtain even in his non-Ramseyian models. We return to this issue in §5.)

Similarly, concerning Worry-2, Joyce concludes:

Even if act probabilities do not figure into the calculation of act utilities, they may have other roles to play in the process of rational decision making. Indeed, we shall soon see that they do. (80)

Again, concerning a presentation by Levi of the apparent unmeasurability of act credences within SDT, Joyce says:

It is quite true that the probabilities [an agent] assigns to acts during her deliberations cannot be elicited using wagers in the usual way, but this does not show that they are incoherent, only that they are difficult to measure. (86–87)

Finally, Joyce makes similar remarks about Worry-3:

As Wolfgang Spohn has long argued, there is no reason to allow act probabilities in decision theory if we cannot find anything useful for them to do. Given that they play no role in the evaluation or justification of acts, it would seem that there is nothing useful for them to do. Why not abolish them? (98)

Joyce responds as follows:

Act probabilities are a kind of epiphenomena in decision theory. Though they do no real explanatory work, they are tied to things that do. We need act probabilities because (i) we need unconditional subjective probabilities for decisions about acts to causally explain action (though not to rationalize it), and (ii) we need Efficacy to explain what it is for an agent to regard acts as being under her control. Efficacy requires that \(\hbox {P}(\hbox {A}\backslash \hbox {dA}) = \hbox {P}(\lnot \hbox {A}\backslash \hbox {d}\lnot \hbox {A}) = 1\), and so \(\hbox {P}(\hbox {A}/\hbox {dA}) = \hbox {P}(\lnot \hbox {A}/\hbox {d}\lnot \hbox {A}) = 1\). One cannot have these latter conditional probabilities and unconditional probabilities for dA and \(\hbox {d}\lnot \hbox {A}\) without also having unconditional probabilities for A and \(\lnot \hbox {A}\). Act probabilities are not only coherent, they are compulsory if we are to adequately explain rational agency. We cannot outlaw them without jettisoning other subjective probabilities that are essential ingredients in the causal processes that result in deliberate actions. When it comes to beliefs about one’s own actions, deliberation does not “crowd out” prediction; it mandates it! (98–99)

This will take a little unpacking. First, it is important to note that Joyce is distinguishing between an agent’s decision to do A, written dA, and the act A itself. When he talks of act probabilities, he means P(A) and P(\(\lnot \)A), not P(dA) and P(d\(\lnot \)A). This is another potential source of talking at cross purposes—some proponents of DCOP may take it for granted that the important issue concerns the latter credences, not the former.

Fortunately this distinction doesn’t matter much in this context, because Joyce is equally committed to the need for unconditional probabilities of both kinds, P(A) and P(dA). They are connected by the principle Efficacy, which Joyce takes to encode the idea that the agent takes A to be under her control—if she chooses dA then A results, and similarly for d\(\lnot \)A and \(\lnot \)A.
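How Efficacy ties P(A) to P(dA) can be made concrete. The sketch below is ours, not Joyce’s: it uses ordinary conditional probabilities P(A/dA) rather than Joyce’s causal P(A\dA), and it assumes, as a simplification, that dA and d\(\lnot \)A are exclusive and exhaustive. The law of total probability gives P(A) = P(A/dA)P(dA) + P(A/d\(\lnot \)A)P(d\(\lnot \)A), and the Efficacy values then force P(A) = P(dA).

```python
from fractions import Fraction


def act_probabilities(p_decide_a, p_a_given_decide_a=1,
                      p_not_a_given_decide_not_a=1):
    """Return (P(A), P(not-A)) by the law of total probability.

    Assumes dA and d-not-A partition the possibilities. The default
    arguments encode Efficacy: P(A/dA) = P(not-A/d-not-A) = 1.
    """
    p_decide_not_a = 1 - p_decide_a
    p_a = (p_a_given_decide_a * p_decide_a
           + (1 - p_not_a_given_decide_not_a) * p_decide_not_a)
    return p_a, 1 - p_a


print(act_probabilities(Fraction(7, 10)))  # (Fraction(7, 10), Fraction(3, 10))
```

Anyone who grants an unconditional P(dA) must therefore grant P(A) as well; the sketch is silent on whether P(dA) should be granted in the first place.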

Let us grant Joyce that, as he puts it here, “Efficacy requires that \(\hbox {P}(\hbox {A}\backslash \hbox {dA}) = \hbox {P}(\lnot \hbox {A}\backslash \hbox {d}\lnot \hbox {A}) = 1\), and so \(\hbox {P}(\hbox {A/dA}) = \hbox {P}(\lnot \hbox {A/d}\lnot \hbox {A}) = 1\).”Footnote 12 It certainly follows that if we allow unconditional probabilities P(dA) and P(d\(\lnot \)A) then we shall have to allow P(A) and P(\(\lnot \)A), as well. But why do these conditional claims require unconditional probabilities in the first place?

We see two possible answers at this point. The first is that the conditional probabilities are defined in terms of unconditional probabilities, so that we cannot have P(A/dA) and \(\hbox {P}(\lnot \hbox {A}/\hbox {d}\lnot \hbox {A})\) without having P(dA) and \(\hbox {P}(\hbox {d}\lnot \hbox {A})\) as well. This is a very familiar move, and needs to be mentioned as a motivation for extending Ramseyian SDT to add unconditional act credences. But it also admits a well-known reply, namely, that there are other reasons for treating conditional probability as primitive, and not defining it as the usual ratio of unconditional probabilities. Ramsey himself favoured this approach. In a passage we quoted above, he refers to one’s “degree of belief in q given p, which it is evidently possible to have without a definite degree of belief in p” (1929, 142, and see also his 1926, 180). Later proponents include Rényi (1970), Price (1986b), Mellor (1993), and Hájek (2003).
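The contrast between the two treatments can be stated formally. On the ratio analysis, the conditional credence is defined from unconditional ones, and exists only if the conditioning proposition has a defined, positive unconditional probability:

\[ P(A \mid dA) \;=_{\text{df}}\; \frac{P(A \wedge dA)}{P(dA)}, \qquad \text{provided } P(dA) \text{ is defined and } P(dA) > 0. \]

On the primitive approach, \(P(\cdot \mid \cdot )\) is a two-place function constrained by axioms such as the multiplication rule

\[ P(q \wedge r \mid p) = P(q \mid r \wedge p)\, P(r \mid p), \]

so that a value such as \(P(A \mid dA) = 1\) can be assigned without assigning any value to \(P(dA)\).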

Whether or not Joyce has this consideration in mind, his main point is a different one. He proposes a detailed model of the deliberative process in which the credences P(dA) and P(d\(\lnot \)A) play a crucial role. As he notes, the model owes much to Velleman. From Joyce’s point of view the attractions of this model provide the strongest case for accepting P(dA) and P(d\(\lnot \)A), and hence for rejecting DCOP. Accordingly, we want to follow Joyce’s explication of the model in some detail. It is crucial to our claim that (apparent) disagreements about DCOP are obscuring deeper agreement about the nature of agency.

4.2 Joyce on “evidential autonomy”

Joyce introduces his discussion of the model in question by articulating yet another concern about act credences:

I am portraying the agent who changes her mind as altering her beliefs about what she will decide on the basis of no evidence whatever. She goes from being certain that she has decided on \(\lnot \)A to being certain that she has decided on A without learning anything. Can this sort of belief change be rational? By letting agents assign subjective probabilities to their own acts it seems that we are also letting them believe whatever they want about them. This means that act probabilities must be radically unlike other probabilities in that they seem not to be at all constrained by the believer’s evidence. ... This, I suspect, gets us to what is really bothering people about act probabilities. (2002, 94–95)

In response, Joyce notes first that when an agent

sees herself as a free agent in the matter of A, Efficacy ensures that all of her evidence about A comes by way of evidence about her decisions. Her justification for claiming that she will do A will always have the form: “here is such-and-such evidence that I will decide on A, and (via Efficacy) deciding on it will cause me to do it.” (95)

As Joyce says, this may seem “to push the problem back from beliefs about acts to beliefs about decisions.” But he argues that “this is not so”:

An agent’s beliefs about her own decisions have a property that most other beliefs lack: under the right conditions they are self-fulfilling, so that if the agent has them then they are true. Understanding this is one of the keys to understanding human agency and freedom. (95)

Joyce explains this point with reference to Velleman’s notion of epistemic freedom:

According to Velleman, ... the believer has a kind of “epistemic freedom” with respect to self-fulfilling beliefs that she lacks for her other opinions; she can justifiably believe whatever she wants about them. If she is sure that believing H will make H true and that believing \(\lnot \)H will make \(\lnot \)H true then, no matter what other evidence she might possess, she is at liberty to believe either H or \(\lnot \)H because she knows that whatever opinion she adopts will be warranted by the evidence she will acquire as a result of adopting it. More generally, any increase or decrease in her confidence in H provides her with evidence in favor of that increase or decrease—the stronger a self-fulfilling belief is, the more evidence one has in its favor. (96)

Like Velleman (1989), Joyce sees this idea of self-fulfilling belief as crucial to a proper understanding of agency: “Velleman holds, as I do, that agents are epistemically free with respect to their own decisions and intentions. ... [T]he idea that agents are epistemically free regarding their own decisions is important and entirely correct.” (96–97)

Finally, Joyce applies these ideas to offer a model of the dynamics of deliberation:

During the course of her deliberations [an agent’s] confidence in “I decide to do A” will wax or wane in response to information about A’s desirability relative to her other options (e.g., information about expected utilities). If A and \(\lnot \)A seem equally desirable at some point in the process, then she will be equally confident of dA and d\(\lnot \)A at that time. If further deliberation leads her to see A as the better option, then her confidence in dA will increase as her confidence in d\(\lnot \)A decreases. These deliberations will ordinarily cease when [the agent] is certain of either dA or d\(\lnot \)A, at which point she will have made her decision about whether or not to perform A by making up her mind what to believe about dA. (97)

Joyce notes that while

this process would be nothing more than an exercise in wishful thinking if [the agent’s] beliefs about dA and d\(\lnot \)A were not self-fulfilling, the fact that they are ensures that her subjective probability for each proposition increases or decreases in proportion to the evidence she has in its favor. (97)

He concludes: “This explains how [the agent’s] beliefs about what she will decide can be both responsive to her preferences and warranted by her evidence at each moment of her deliberations.” (97)
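Joyce's verbal description of these dynamics can be made concrete with a toy simulation. The sketch below is ours, not Joyce's, and every modelling choice in it is an assumption made for illustration. The credence p in dA starts at 0.5, so no antecedent act credence is carried in. Each step supplies a hypothetical estimate of the desirability gap EU(A) minus EU(d\(\lnot \)A's counterpart, \(\lnot \)A). p then moves towards the better option by an amount proportional to that gap. Deliberation ends when p reaches 0 or 1. The self-fulfilling character of the belief is not modelled: reaching certainty is simply treated as making the decision.

```python
from typing import Iterable, Optional, Tuple


def deliberate(eu_gaps: Iterable[float], rate: float = 0.1,
               start: float = 0.5) -> Tuple[float, Optional[str]]:
    """Toy sketch of Joyce-style deliberation dynamics (hypothetical model).

    p is the agent's credence in dA ("I decide to do A"). Each item of
    eu_gaps is an (assumed) estimate of EU(A) - EU(not-A) arriving during
    deliberation. p moves towards the better option by rate * gap, and
    deliberation stops as soon as p reaches certainty (0 or 1).
    """
    p = start  # assumption: deliberation begins from indifference
    for gap in eu_gaps:
        # Clamp so that the credence stays within the probability interval.
        p = min(1.0, max(0.0, p + rate * gap))
        if p == 1.0:
            return p, "A"        # certain of dA: the decision is made
        if p == 0.0:
            return p, "not-A"    # certain of d-not-A: the decision is made
    return p, None               # information ran out before certainty
```

With a steady gap of 1.0 and the default rate, p climbs from 0.5 to certainty in dA within a handful of steps. With a gap of zero, p stays at 0.5 and no decision is reached, in line with Joyce's remark that when A and \(\lnot \)A seem equally desirable the agent is equally confident of dA and d\(\lnot \)A.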

Joyce returns to these ideas in a later piece (Joyce 2007), where he links them to a point made by writers on both sides of the debate between causal and evidential decision theory, and introduces EAT:

[M]any decision theorists (both evidential and causal) have suggested that free agents can legitimately ignore evidence about their own acts. Judea Pearl (a causalist) has written that while “evidential decision theory preaches that one should never ignore genuine statistical evidence ... [but] actions—by their very definition—render such evidence irrelevant to the decision at hand, for actions change the probabilities that acts normally obey.” (2000, p. 109) ...

Huw Price (an evidentialist) has expressed similar sentiments: “From the agent’s point of view contemplated actions are always considered to be sui generis, uncaused by external factors ... This amounts to the view that free actions are treated as probabilistically independent of everything except their effects.” (1993, p. 261) A view somewhat similar to Price’s can be found in Hitchcock (1996).

These claims are basically right: a rational agent, while in the midst of her deliberations, is in a position to legitimately ignore any evidence she might possess about what she is likely to do. ... A deliberating agent who regards herself as free need not proportion her beliefs about her own acts to the antecedent evidence that she has for thinking that she will perform them. Let’s call this the evidential autonomy thesis. (Joyce 2007, 556–557)

Joyce adds a footnote at this point:

It is important to understand that this freedom only extends to propositions that describe actions about which the agent is currently deliberating, and whose performance she sees as being exclusively a matter of the outcome of her decision. It does not, for example, apply to acts that will be the result of future deliberations. (557)

4.3 Comparing Joyce and Ramsey

We are now in a position to appreciate that there are deep similarities between Joyce’s model and Ramsey’s. Ramsey does not admit credences for dA and d\(\lnot \)A, subject to precisely the same qualification articulated in the footnote from Joyce just quoted: the restriction applies only in the context of current deliberation. For Ramsey, the rejection of such credences seems to be a conceptual matter, as well as a consequence of his operational account of credence. The truth of dA and d\(\lnot \)A is simply “not ... an intellectual problem,” as he puts it; it is “not something of which we are trying to discover the truth, but something it is within our power to make true or false.” (1929, 142, emphasis added)

In fact, however, only a hair’s breadth separates this view from Joyce’s. Joyce, too, agrees that the truth of dA and d\(\lnot \)A is not an intellectual problem of the normal sort, and that these propositions are within our power to make true or false. He simply represents this special status in a different way. For Joyce, there is a belief during deliberation, albeit one with a special epistemic status (because it is self-fulfilling). For Ramsey there is no belief until it is licensed by the formation of an intention (or volition, as Ramsey himself puts it), either to dA or d\(\lnot \)A—in other words, until after deliberation. But this is little more than a stylistic preference, at least compared to the points of agreement.

In particular, the (apparent) disagreement between Ramsey and Joyce reflects a difference about the use of the term ‘belief’. Ramsey is taking for granted what we might call an epistemically-grounded conception of belief (and hence of partial belief, or credence). On this conception, as in the standard Bayesian picture, beliefs and credences are only acquired, changed, or updated in the light of new evidence; it is a conceptual truth about beliefs that they are responsive to evidence in this way. One common correlate of this idea is the thesis that beliefs have ‘world-to-mind direction of fit’ (see, e.g., Humberstone 1992). Nothing counts as a belief unless, in some appropriately normative sense, it is ‘trying’ to match the world.

Intentions don’t fit this pattern. As Anscombe (1957) famously pointed out, intentions have mind-to-world direction of fit. For Ramsey, then, intentions don’t count as beliefs, or partial beliefs, or credences. Ramsey will allow that we have beliefs about our own actions, of course, but they are downstream of intentions. When one forms the intention to A, one thereby acquires evidence that one will A (at least in normal cases), and may thereby come to believe that one will A. But the intention itself is not such a belief, according to this epistemically-grounded conception of belief.

In contrast, Joyce, following Velleman, thinks of the intentions we form when we deliberate as involving beliefs—reflexive beliefs about what we ourselves will do.Footnote 13 This is why beliefs about one’s own action are, as Joyce says, “essential” to his model of deliberation. In Joyce’s model the products of the process of deliberation—gradually-strengthening intentions to do something—must involve such beliefs. Unlike other beliefs, however, these particular beliefs are self-fulfilling and not responsive to evidence of the usual sort—that’s what EAT tells us.

There are two ways to get to Joyce’s view from Ramsey’s. One is to modify the epistemically-grounded conception of belief to allow a special class of exceptions, a special class of beliefs whose genesis does not require evidence: namely, the self-justifying beliefs that Joyce takes to be involved in intentions. The other is to stretch the notion of evidence just enough to allow that these special beliefs are supported by evidence after all: self-supported, in effect, by the evidence that they themselves generate or constitute. Whichever way we stretch our terminology, the principle that beliefs have world-to-mind direction of fit gets a little bit stretched, too, but again with the reassurance that these are special cases. Joyce himself is clear that they are special cases. As he remarks, “act probabilities must be radically unlike other probabilities” (2002, 94).

By way of comparison, here is Jenann Ismael’s negotiation of the same terminological boundary, with Wittgenstein in Ramsey’s shoes and Ismael herself in Joyce’s:Footnote 14

Wittgenstein ... thinks that for [one’s own intentions] to count as knowledge, they would have to be subject to the game of certainty and doubt, and that it would have to make sense to doubt their truth. And so for him, these cannot count as genuine knowledge. On the performative model,Footnote 15 they are still knowledge, but degenerate because self-fulfilling. Whereas Wittgenstein is suspicious of the idea of knowledge free of epistemic constraints, the performative model explains it and uses it to understand how it shapes the first-person/third-person asymmetries in predictive opinion. Both of us agree that it is wrong to see the sort of certainty we have about our own beliefs on the model of Cartesian transparency based in an introspective faculty. But the performative model provides an alternative that secures the special epistemic status and integrates it neatly with other truth-bearing discourse without undermining its status as knowledge. (Ismael 2012, 158–159)

In this case, as for Ramsey and Joyce, it is clear that the two views in question are extremely close, easily mapped from one to the other with small variations in terminology. Some readers may feel that there is an interesting question whether the Ramsey/Wittgenstein model or the Joyce/Ismael model comes closer to getting the psychology of decision right, but for our purposes what matters are the similarities. The crucial point of agreement is that the fact that supports DCOP in Ramsey’s model (namely, that in the process of deliberation we come to beliefs about what we will do only after, not before, we form our intention) is mirrored under a different name in Joyce’s picture. For Joyce, it is simply EAT itself, which implies that the beliefs about our own actions that play a role in intentions are not themselves evidentially ‘downstream’ of other beliefs.

Whichever model we choose, deliberation crowds out something. For Ramsey, as we have seen, “making true” crowds out the ordinary evidential process of “discovering true”. For Joyce it also crowds out the ordinary evidential process of discovering true, but in favour of an extraordinary process of this kind—a process that is construed as generating its own evidence for the discovery in question. And EAT holds in both models, with a similar terminological shift: in Ramsey’s case the products of deliberation are evidentially autonomous because they are simply the wrong kind of psychological state to be evidentially constrained. Modulo this terminological difference, the two models are isomorphic.

Similarly for Joyce’s ‘disagreement’ with Levi: as we saw, Joyce says that “[w]hile Levi suggests that a deliberating agent cannot see herself as free with respect to acts she tries to predict, precisely the reverse is true.” (2002, 70) Because Joyce interprets intentions as involving beliefs, he understands deliberation as a process of coming to beliefs about what one will do (coming to predict what one will do, in fact). But Levi would not deny (obviously!) that a free agent can form intentions, and hence ‘try to predict’ her own actions in Joyce’s sense of the term. To make sense of Levi’s claim we must read him as using ‘predict’ in the more restricted Ramseyian sense, of a credence based on (non-degenerate) evidence. Levi denies that a deliberating agent can make predictions in that sense about her contemplated action, and Joyce agrees. (For him this is, effectively, EAT.) Terminological disagreements aside, in other words, Joyce is not disagreeing with Levi; quite the contrary.

4.4 Beyond EAT

To put this irenic diagnosis in context, we want to note that for EAT, as for DCOP, it is a trivial matter to find models of cognitive systems acting in the world that do not satisfy this principle. Our Siri-enhanced Ramseyian agent again provides an example. In that case, Siri does “proportion her beliefs about her [agent’s] acts to the antecedent evidence that she [Siri] has for thinking that [her agent] will perform them” (to paraphrase Joyce’s own statement of EAT). So if we think of Siri and her agent as a kind of composite, extended agent, we do get a formal violation of EAT.
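The composite agent can be sketched in a few lines. The class names, the record of past choices, and the use of Laplace's rule of succession as Siri's estimator are all hypothetical choices made for illustration, and treating the post-choice credence as exactly 1 or 0 is a simplifying assumption. The sketch is meant to show only the structural point: while the deliberating submodule is still choosing, the composite's credence that A will be done is Siri's estimate, which is proportioned to antecedent evidence, whereas afterwards the choice itself settles the matter.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Siri:
    """Hypothetical advisor module that predicts its agent's act from past choices."""
    history: List[bool] = field(default_factory=list)  # True = agent chose A on a past occasion

    def credence_in_a(self) -> float:
        # Laplace's rule of succession: (past A-choices + 1) / (past occasions + 2).
        # This credence is proportioned to antecedent evidence, which is exactly
        # what EAT says the deliberating agent herself need not do.
        return (sum(self.history) + 1) / (len(self.history) + 2)


@dataclass
class CompositeAgent:
    """Hypothetical 'extended agent': a deliberating submodule plus Siri."""
    siri: Siri

    def act_credence_before_choice(self) -> float:
        # While the deliberating submodule is still choosing, the composite's
        # act credence is Siri's evidence-based estimate: a formal violation of EAT.
        return self.siri.credence_in_a()

    def act_credence_after_choice(self, chose_a: bool) -> float:
        # Simplifying assumption: once the choice is made it settles the matter,
        # and the antecedent evidence carries no further authority.
        return 1.0 if chose_a else 0.0
```

For example, with a history of three A-choices in four past occasions, the composite's pre-choice credence in A is (3 + 1)/(4 + 2), roughly 0.67, whatever the agent goes on to choose.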

Defenders of EAT are likely to reply that such composites do not deserve to be called agents (or not just agents—perhaps the addition of Siri produces an extended mind, one submodule of which is properly called an agent). We have considerable sympathy for this viewpoint, but have no need to defend it here. We mention the example for two reasons. First, we want to reiterate our earlier observation that the kind of issues we have been discussing involve a great deal of model-relativity. It is helpful to think about one’s terms. But second, we do think it plausible that EAT marks an important boundary, and take ourselves to be agreeing with both Ramsey and Joyce on this point.

However, our main claim is that DCOP as such does not mark such a boundary in these matters. To paraphrase Sayre’s Law, it may be that the reason that debates about DCOP seem so intractable is that there is nothing of significance at stake.

5 Why EAT?

Where does EAT itself come from? We want to conclude by proposing an answer: in a word, transparency. This will reinforce our conclusion that Ramsey and Joyce are really on the same page, and show what must be denied by anyone who wants to disagree. We’ll introduce this diagnosis by raising a further puzzle about Joyce’s view.

5.1 Queries for Joyce

As we saw, Joyce formulates EAT as follows:

A deliberating agent who regards herself as free need not proportion her beliefs about her own acts to the antecedent evidence that she has for thinking that she will perform them. (2007, 557)

But why is this so, and precisely when is it so? Compare the case of a coin toss. Imagine a coin that says ‘The result is Heads’ on one side and ‘The result is Tails’ on the other. Whichever statement turns out to be visible when the coin is tossed is self-justifying, but that doesn’t stand in the way of our having evidence about the result in advance, let alone give us grounds to ignore such evidence, at that point. Is deliberation different, according to EAT (and Joyce)? In the coin toss, too, we needn’t apportion our beliefs after the toss to the antecedent evidence, but that isn’t news.

If there is to be something distinctive about the case of free action, not present in the coin toss case, EAT needs to apply either before the choice, or somehow during the choice. The latter possibility seems to make most sense, from Joyce’s point of view. Choice is a matter of adopting a belief about what one will do, in Joyce’s model. Read this way, EAT tells us that adoption of belief during the process of choice isn’t constrained by prior evidence. (The coin toss analogy now works in Joyce’s favour. The statement on display after the toss is entirely justified, even if the antecedent evidence made it very unlikely.)

But what is Joyce’s view about an agent’s “beliefs about her own acts” at the beginning of the process of choice? Does she take over credences based on antecedent evidence about how she will act, or does EAT already rule that out? (When does the EATing start, as it were?) There may be a clue in Joyce’s remark that act credences cannot be reasons for acting:

[I]t is absurd for an agent’s views about the advisability of performing any act to depend on how likely she takes that act to be. Reasoning of the form “I am likely (unlikely) to A, so I should A” is always fallacious.

On the face of it, this suggests that Joyce allows that an agent can hold act credences right at the beginning of a deliberation, but thinks that it would be absurd to take credences as reasons for one’s choice. But why should that be so? Thinking that I am likely to do A, I choose to do so in order to confirm my own present prediction. That’s a somewhat ‘self-satisfied’ reason, perhaps, but what makes it absurd?

It might seem that the absurdity follows from EAT. In virtue of EAT, the beliefs formed during deliberation cannot be evidentially constrained by prior evidence. Once I have chosen to A, my reason for thinking that I will A is that I have formed the self-validating belief that I will A, and prior evidence is irrelevant, at this point.

But this can’t be the right diagnosis. When Joyce says that “[r]easoning of the form ‘I am likely (unlikely) to A, so I should A’ is always fallacious,” he isn’t talking about an epistemic fallacy, or a mistaken piece of evidential reasoning. Joyce’s remark is about reasons for acting. Whichever belief I choose (in Joyce’s model), it will be self-validating, but presumably I can have non-epistemic reasons for choosing one action rather than another. Joyce’s claim here is that an act credence can’t be a reason of that non-epistemic sort.

Perhaps EAT is doing the work indirectly? In virtue of EAT, pre-deliberation act credences are liable to be ‘evidentially unstable’—an unreliable guide to future credence on the same matter, as it were. EAT ensures that there is no epistemic constraint that requires that post-choice act credences align with pre-choice act credences. So treating a pre-deliberative act credence as a reason would be sitting on a stool one of the legs of which is liable to collapse under your weight—guaranteed to collapse, perhaps, in the sense that EAT ensures that the pre-choice act credence carries no authority whatsoever, after the choice is made.

Indeed, if we were to allow pre-choice act credences to be reasons they would be liable to undermine themselves before the choice ever got to be made. If my pre-choice credence that I will do A feeds into my decision to do A, then in arriving at that credence I acquire new evidence relevant to whether I will do A—I learn of a new reason relevant to my choice. But this is liable to change my pre-choice credence. At the very least, it means that there is new evidence for me to consider.Footnote 16

These considerations are moving in the right direction, but they don’t get to the heart of the matter. What we need is an explanation for the fact that pre-choice act credences cannot be attached to the deliberative stool in the first place. We propose that such an explanation—indeed, an explanation for EAT itself—can be found in the cognitive phenomenon known as transparency. We turn to some key points from Moran’s (2001) explication of this notion.Footnote 17

5.2 Moran on transparency

Moran describes transparency like this:

Ordinarily, if a person asks himself the question “Do I believe that P?,” he will treat this much as he would a corresponding question that does not refer to him at all, namely, the question “Is P true?” And this is not how he will normally relate himself to the question of what someone else believes. Roy Edgley [1969] has called this feature the “transparency” of one’s own thinking. (2001, §2.6)

He offers the following diagnosis of the phenomenon:

[W]hat ... transparency requires is the deferral of the theoretical question “What do I believe?” to the deliberative question “What am I to believe?” And in the case of the attitude of belief, answering a deliberative question is a matter of determining what is true. (62–3)

This diagnosis involves a distinction between two epistemic stances on one’s own mind, a distinction that Moran describes like this:

In characterizing two sorts of questions one may direct toward one’s state of mind, the term ‘deliberative’ is best seen at this point in contrast to ‘theoretical,’ the primary point being to mark the difference between that inquiry which terminates in a true description of my state, and one which terminates in the formation or endorsement of an attitude. (63)

Moreover, Moran takes the lessons of transparency to apply equally to deliberation about what to avow and deliberation about what to do. As he puts it:

[W]e might ... compare the case of belief with that of knowledge of one’s own future behavior: a person may have a purely predictive basis for knowing what he will do, but in the normal situation of free action it is on the basis of his decision that he knows what he is about to do. In deciding what to do, his gaze is directed “outward,” on the considerations in favor of some course of action, on what he has most reason to do. Thus his stance toward the question, “What am I going to do now?” is transparent to a question about what he is to do, answered by the “outward-looking” consideration of what is good, desirable, or feasible to do. (105)

For action, as for belief, Moran emphasises that transparency does not mean that the agent does not have knowledge of her own state of mind. The point is rather that that knowledge comes from a distinctive source, only available in the first-person present-tensed case—via a deliberative path, rather than a theoretical or empirical path, as Moran puts it. The last passage continues:

When [the agent] answers this question [i.e., “What am I going to do now?”] for himself and announces what he is going to do, ... [w]hat he has gained, and what his statement expresses, is straightforward knowledge about a particular person [i.e., himself], knowledge that can be told and thus transferred to another person who needs to know what he will do. (105–6)

Borrowing a term from our own context, we might characterise Moran’s conclusion as being that from the first-person present-tensed perspective, the deliberative path to knowledge crowds out the theoretical path (though the content of the knowledge achieved is precisely the same).

5.3 From transparency to evidential autonomy

Let us now apply these ideas to our own discussion. In the section before last we were looking for a justification for the thesis (required by Joyce’s claim that “it is absurd for an agent’s views about the advisability of performing any act to depend on how likely she takes that act to be”) that a pre-deliberative act credence cannot be a reason for the action in question. As we put it there, why can’t pre-deliberative act credences support the deliberative stool?

Transparency gives us an answer. In effect, it implies that deliberation turns the stool upside down. It makes our knowledge of what we will do rest on the deliberative seat, and not vice versa. What is absurd about taking act credences to be reasons is that during deliberation, deliberation itself is the source of one’s act credences. At this point, trying to take an act credence to be a reason is simply putting the cart before the horse—one needs one’s reasons in order to generate one’s act credences.Footnote 18

More generally, Moran’s distinction between two paths to knowledge of ourselves, the theoretical path and the deliberative path, offers us a straightforward explanation of EAT itself. EAT simply rests on the fact that when we embark on the deliberative path, we set aside the theoretical path. That’s the core of transparency.

Indeed, this diagnosis suggests that Joyce’s own formulation of EAT is a little too weak. As Joyce expresses it, EAT is this principle:

A deliberating agent who regards herself as free need not proportion her beliefs about her own acts to the antecedent evidence that she has for thinking that she will perform them. (2007, 557, emphasis added)

This suggests a picture in which the antecedent evidence is still sitting there, as it were, but the agent simply has the option of ignoring it in deciding how to proportion her beliefs about her own acts. Moran’s picture is more exclusive, and less voluntary. By deliberating, we move ourselves out of the evidential space altogether. In particular, the agent doesn’t have the option of not ignoring the evidence, because she is no longer playing the evidential game, no longer following the theoretical path.

Once again, various terminological options present themselves at this point. Joyce may prefer to say that the agent doesn’t leave evidential space altogether, but rather enters a special kind of evidential space (one in which she calls the evidential shots, so to speak). Again, we want to bracket these terminological issues, in order to focus on the underlying structural bifurcation that seems agreed on all sides. This is that deliberation involves a distinctive path to knowledge of our own present choices—a path that takes precedence over, indeed ‘crowds out’, the theoretical path that we rely on in third person and non-present-tensed cases.

This bifurcation, or separation between two paths to knowledge of our own actions, is what transparency explains. (Indeed, if Moran is right, it is simply the special practical case of something more general.) We propose that it is the source of EAT, and at the heart of what is correct about DCOP. As promised, Joyce turns out to be a friend of this version of DCOP, once terminological differences are set to one side.Footnote 19

Notes

“Probably anyone will find it absurd to assume that someone has subjective probabilities for things which are under his control and which he can actualize as he pleases” (Spohn 1977, p. 115).

As we shall see, an additional advantage of starting with Ramsey is that he already has the distinction between theoretical and deliberative enquiry that is central to Moran’s explication of transparency, and hence to DCOP understood as a special case of transparency.

Different notions of subjective probability appeared earlier in, for instance, the works of Bernoulli (1713), Laplace (1810), De Morgan (1847) and Borel (1924). However, Ramsey is often credited as the first to provide a systematic account of subjective probability; one of his great contributions was to show that degrees of belief are a species of probability, so long as the agent concerned satisfies certain coherence constraints.

See Appendix A in Gaifman and Liu (2017) for a brief account of Ramsey’s betting system.

It is easy to see that the following argument generalises to cases with more than two options.

Actually not even that, if the gambles are formulated in terms of goods—i.e., payoffs whose rankings to the agent are already assumed to be known.

For future reference, we note that Ramsey’s distinction between making true and discovering true has close affinity to themes in the work of David Velleman and Jenann Ismael, among others. Velleman (1989) holds that agents enjoy ‘epistemic freedom’ with respect to their own actions, and Ismael (2012) that choices are self-validating epistemic ‘wild cards’. Though they express the idea in slightly different ways—more on these differences below – Ramsey, Velleman and Ismael seem to have a common intuition in mind. It is of the essence of choice that it involves a kind of epistemic singularity, a place in which the rules do not apply in the normal way. We shall return to this thought below, and explore its development by Joyce and its connection to transparency.

Spohn (1977) and Levi (1989) argued that the standard betting interpretation of probability collapses when it is applied to action-events. Their arguments, which involve revisions of rewards and events in a bet, have generated heated debates regarding, among other things, what is the “correct” way to apply the betting interpretation [cf. exchanges on this matter from Levi (2000), Joyce (2002), Rabinowicz (2002), Levi (2007) and Spohn (2012)]. Gaifman (1999) provides an analysis of self-reference and cyclic reasoning involved in decision and game theoretic models, and points out that within the classical Bayesian subjective decision/probability theory, act credences turn on certain conceptual circularities that cannot be rationally justified (and hence should be barred from this framework, as Gaifman argues).

Joyce (2002, §3.1) offers a similar response to this argument for DCOP, suggesting that it doesn’t imply that the act credences are incoherent, “only that they are difficult to measure.” We discuss Joyce’s arguments in detail below.

We suspect that Rabinowicz has in mind something stronger, namely that such extensions might be ‘more realistic’, or otherwise preferable, but we set aside that difference for now.

Siri does this in an attempt to keep her agent out of trouble, and is able to do it effectively because she has access to the traditional sources of evidence: the agent’s entire history of, for instance, ‘Likes’ and ‘Dislikes’ on Facebook, dinner reservations on OpenTable, purchasing history on Amazon, and so on. One of the challenges for proponents of DCOP is to explain why the agent himself cannot use such information to generate credences about his own choices, as he makes them (more on this below).

In Joyce’s notation P(A/B) is the conditional probability of A given B. The backslash in ‘P(A\(\backslash \)dA)’ represents what Joyce calls ‘causal probability’: “it represents [the agent’s] beliefs about what her acts will causally promote, so that P(S\(\backslash \) A) will exceed P(S\(\backslash \lnot \)A) only if [the agent] believes that A will causally promote S.” (79)

Strictly, Joyce takes it that such beliefs are one component of an intention; there is also a desire-like component. We set aside the latter element of Joyce’s view in this section, for simplicity. We shall also ignore the fact that like Skyrms (1990), Joyce (2012) offers a stepwise model of decision-making, in which partially-formed intentions progress towards a full intention. Given that he interprets intentions as involving beliefs about the contemplated action, these partial intentions are represented as (or as involving) act credences—hence the core role of act credences in his model. This makes no essential difference to our argument that Joyce’s disagreement with Levi and Ramsey is terminological, but it does introduce a new source of potential terminological confusion. We should not mix up deliberation in the sense of the entire multi-step process with deliberation in the sense of the process at each step whereby the agent updates her partial intention. As we noted above, Ramsey can quite well allow act credences formed in the light of formation of an intention. This will introduce act credences into a multi-step process, even if intentions themselves are not represented as credences. But it won’t touch Ramsey’s distinction between making true and discovering true, at the locus of the individual steps.

Ismael notes the similarity between her view and Joyce’s: “James Joyce comes to much the same conclusion .... He writes ‘an agent’s beliefs about her own decisions are self-fulfilling, and that this can be used to explain away the seeming paradoxical features of act probabilities.’” (Ismael 2012, p. 156)

This is Ismael’s label for the view that decisions are self-fulfilling beliefs.

Ismael (2012, p. 160) notes that Jonathan Bennett makes a similar point about the instability of predictions about our own behaviour, while we deliberate. Price’s (1986a, 1991) defence of Evidential Decision Theory relies on a similar instability argument, motivated by the Principle of Total Evidence. And Gaifman (1999) highlights a similar phenomenon of instability in Bayesian decision and game theory through his analysis of Cassandra’s paradox.

True, one might take the memory of a pre-deliberative credence to provide a reason—I’m doing it because I predicted that I would, and I want to prove myself right. But here the reason is not the prior credence itself, but the belief that one previously held that credence. We are mentioning the credence, not using it, so to speak.

We are grateful to Jim Joyce for helpful feedback on an early version of this paper, and to Haim Gaifman, Isaac Levi, Michael Nielsen, and Rush Stewart for many astute comments. We are also grateful to audiences at the 2017 APA Pacific Division meeting and at Peking University and Zhejiang University, where earlier versions of this paper were presented. This publication was made possible through the support of a grant from Templeton World Charity Foundation (TWCF0128). The opinions expressed in this publication are those of the authors and do not necessarily reflect the views of TWCF.

References

Ahmed, A. (2014). Evidence, decision and causality. Cambridge: Cambridge University Press.

Anscombe, G. E. M. (1957). Intention. Cambridge: Harvard University Press.

Bernoulli, J. (1713). Ars conjectandi. Basel: Impensis Thurnisiorum, Fratrum.

Borel, É. (1924). A propos d’un traité de probabilités. Revue Philosophique, 98, 321–326.

De Morgan, A. (1847). Formal logic: Or, the calculus of inference, necessary and probable. London: Taylor and Walton.

Edgley, R. (1969). Reason in theory and practice. London: Hutchinson.

Gaifman, H. (1999). Self-reference and the acyclicity of rational choice. Annals of Pure and Applied Logic, 96(1–3), 117–140.

Gaifman, H., & Liu, Y. (2017). A simpler and more realistic subjective decision theory. Synthese. https://doi.org/10.1007/s11229-017-1594-6.

Hájek, A. (2003). What conditional probability could not be. Synthese, 137(3), 273–323.

Hájek, A. (2016). Deliberation welcomes prediction. Episteme, 13(4), 507–528.

Hitchcock, C. R. (1996). Causal decision theory and decision-theoretic causation. Noûs, 30(4), 508–526.

Humberstone, I. L. (1992). Direction of fit. Mind, 101(401), 59–83.

Ismael, J. (2012). Decision and the open future. In A. Bardon (Ed.), The future of the philosophy of time (pp. 149–168). London: Routledge.

Joyce, J. M. (2002). Levi on causal decision theory and the possibility of predicting one’s own actions. Philosophical Studies, 110(1), 69–102.

Joyce, J. M. (2007). Are Newcomb problems really decisions? Synthese, 156(3), 537–562.

Joyce, J. M. (2012). Regret and instability in causal decision theory. Synthese, 187(1), 123–145.

Laplace, P.-S. (1810). Analytic theory of probabilities. Paris: Imprimerie Royale.

Levi, I. (1989). Rationality, prediction, and autonomous choice. In The covenant of reason: Rationality and the commitments of thought (pp. 19–39). Cambridge: Cambridge University Press.

Levi, I. (1996). Prediction, deliberation and correlated equilibrium. In The covenant of reason: Rationality and the commitments of thought (Chap. 5). Cambridge: Cambridge University Press.

Levi, I. (2000). Review essay: The foundations of causal decision theory. The Journal of Philosophy, 97(7), 387–402.

Levi, I. (2007). Deliberation does crowd out prediction. In T. Rønnow-Rasmussen, B. Petersson, J. Josefsson, & D. Egonsson (Eds.), Hommage à Wlodek: Philosophical papers dedicated to Wlodek Rabinowicz. Lund: Department of Philosophy, Lund University.

Liu, Y., & Price, H. (2018). Heart of DARCness. Australasian Journal of Philosophy. https://doi.org/10.1080/00048402.2018.1427119.

Mellor, D. H. (1993). How to believe a conditional. The Journal of Philosophy, 90(5), 233–248.

Moran, R. (2001). Authority and estrangement: An essay on self-knowledge. Princeton: Princeton University Press.

Pearl, J. (2000). Causality: Models, reasoning and inference. Cambridge: Cambridge University Press.

Price, H. (1986a). Against causal decision theory. Synthese, 67(2), 195–212.

Price, H. (1986b). Conditional credence. Mind, 95(377), 18–36.

Price, H. (1991). Agency and probabilistic causality. The British Journal for the Philosophy of Science, 42(2), 157–176.

Price, H. (1993). The direction of causation: Ramsey’s ultimate contingency. In Proceedings of the Biennial meeting of the philosophy of science association (Vol. 1992, pp. 253–267).

Rabinowicz, W. (2002). Does practical deliberation crowd out self-prediction? Erkenntnis, 57(1), 91–122.

Ramsey, F. P. (1926). Truth and probability. In R.B. Braithwaite (Ed.), The Foundations of Mathematics and other Logical Essays, chapter VII (pp. 156–198). London: Kegan, Paul, Trench, Trubner & Co., New York: Harcourt, Brace and Company.

Ramsey, F. P. (1929). General propositions and causality. In D. H. Mellor (Ed.), Foundations: Essays in philosophy, logic, mathematics and economics (pp. 133–151). London: Routledge and Kegan Paul.

Rényi, A. (1970). Foundations of probability. San Francisco: Holden-Day Inc.

Skyrms, B. (1990). The dynamics of rational deliberation. Cambridge: Harvard University Press.

Spohn, W. (1977). Where Luce and Krantz do really generalize Savage’s decision model. Erkenntnis, 11(1), 113–134.

Spohn, W. (2012). Reversing 30 years of discussion: Why causal decision theorists should one-box. Synthese, 187(1), 95–122.

Velleman, J. D. (1989). Epistemic freedom. Pacific Philosophical Quarterly, 70(1), 73–97.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Liu, Y., Price, H. Ramsey and Joyce on deliberation and prediction. Synthese 197, 4365–4386 (2020). https://doi.org/10.1007/s11229-018-01926-8

DOI: https://doi.org/10.1007/s11229-018-01926-8