Abstract

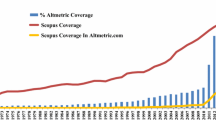

This study compares Spanish and UK research in eight subject fields using a range of bibliometric and social media indicators. For each field, lists of Spanish and UK journal articles published in 2012, together with their citation counts, were extracted from Scopus. The software Webometric Analyst was then used to extract a range of altmetrics for these articles, including patent citations, online presentation mentions, online course syllabus mentions, Wikipedia mentions and Mendeley reader counts, and Altmetric.com was used to extract Twitter mentions. Results show that Mendeley is the altmetric source with the highest coverage, with 80% of sampled articles having one or more Mendeley readers, followed by Twitter (34%). The coverage of each remaining source was lower than 3%. All of the indicators checked either have too little data or increase the overall difference between Spain and the UK, so none can be suggested as an alternative to reduce the bias against Spain in traditional citation indexes.
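
As an illustration of the coverage figures reported above, the following minimal sketch treats coverage as the percentage of sampled articles with at least one mention from a given source. The function name, the data layout and the counts are hypothetical and are not taken from the study's data or software.

```python
from typing import Iterable


def coverage(counts: Iterable[int]) -> float:
    """Return the percentage of articles with at least one mention."""
    counts = list(counts)
    if not counts:
        raise ValueError("the sample contains no articles")
    # An article is "covered" by a source if its count is one or more.
    covered = sum(1 for c in counts if c >= 1)
    return 100.0 * covered / len(counts)


# Hypothetical per-article counts for two sources (illustrative only).
sample = {
    "mendeley": [12, 0, 3, 7, 1],
    "twitter": [0, 2, 0, 0, 1],
}

coverage_by_source = {source: coverage(c) for source, c in sample.items()}
print(coverage_by_source)
```

Computing the same quantity separately for each country and field would show whether a source has enough data to support a comparison at all, which is the first condition the abstract applies to each indicator.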

About this article

Cite this article

Mas-Bleda, A., Thelwall, M. Can alternative indicators overcome language biases in citation counts? A comparison of Spanish and UK research. Scientometrics 109, 2007–2030 (2016). https://doi.org/10.1007/s11192-016-2118-8

DOI: https://doi.org/10.1007/s11192-016-2118-8