Abstract

Whether video games with aggressive or violent content (henceforth aggressive video games) contribute to aggressive behavior in youth remains a matter of significant debate. One concern is that some studies may inadvertently inflate effect sizes through questionable researcher practices, unstandardized assessments of predictors and outcomes, or lack of proper theory-driven controls. In the current article, a large sample of 3034 youth (72.8% male; Mage = 11.2) in Singapore were assessed for links between aggressive game play and seven aggression or prosocial outcomes 2 years later. Theoretically relevant controls for prior aggression, poor impulse control, gender and family involvement were used. Effect sizes were compared to six nonsense outcomes specifically chosen to be theoretically unrelated to aggressive game play. The use of nonsense outcomes allows for a comparison of effect sizes between theoretically relevant and irrelevant outcomes, to help assess whether any statistically significant outcomes may be spurious in large datasets. Preregistration was employed to reduce questionable researcher practices. Results indicate that aggressive video games were unrelated to any of the outcomes using the study criteria for significance. It would take 27 h/day of M-rated game play to produce clinically noticeable changes in aggression. Effect sizes for aggression/prosocial outcomes were little different from those for nonsense outcomes. Evidence from this study does not support the conclusion that aggressive video games are a predictor of later aggression or reduced prosocial behavior in youth.

Introduction

The issue of whether games with aggressive or violent content (henceforth called aggressive video games, AVGs) contribute to aggression or violence in society remains a matter of significant controversy worldwide. In the United States, debates culminated in the Supreme Court decision Brown v EMA (2011) wherein the court majority concluded that evidence could not link aggressive video games to societal harms. This has not ended debates, however, which tend to become most acute following public acts of violence, particularly by minors (Copenhaver 2015; Markey et al. 2015). One concern that has been raised is that many previous studies have not been sufficiently rigorous, employing unstandardized measures (Elson et al. 2014), failing to control for theoretically relevant third variables (Savage and Yancey 2008) or for potential questionable researcher practices such as calculating predictor or outcome variables differently between publications using the same dataset (Przybylski and Weinstein 2019). The current article seeks to address these issues through reanalysis of a dataset employing preregistration, theoretically relevant controls and a clear and standardized method for assessing both predictor and outcome variables.

Aggressive Video Games Research

Decades of research on aggressive video games have failed to produce either consistent evidence or a consensus among scholars about whether such games increase aggression in young players. Indeed, several surveys of scholars have specifically noted the lack of any consensus (Bushman et al. 2015; Ferguson and Colwell 2017; Quandt 2017). According to some of these surveys, opinions among scholars also divide along generational lines (older scholars, particularly those who play no or fewer games, are more suspicious of game effects), discipline (psychologists are more suspicious of game effects than criminologists or communication scholars) and attitudes toward youth themselves (scholars with more negative attitudes toward youth are more suspicious of games).

Regarding violence-related outcomes, evidence appears to be clearer than for milder aggressive behaviors. As noted in a recent US School Safety Commission report (Federal Commission on School Safety 2018), research to date has not linked aggressive video games to violent crime. Indeed, government reports such as those from Australia (Australian Government, Attorney General’s Department 2010) and Sweden (Swedish Media Council 2011) as well as the Brown v EMA (2011) case have been cautious in attributing societally relevant aggression or violence to aggressive video games. Other research has indicated that the release of aggressive video games may be related to reduced violent crime (Beerthuizen et al. 2017; Markey et al. 2015). The most reasonable explanation for this is that popular aggressive video games keep young males busy and out of trouble, consistent with routine activities theory.

On the issue of aggressive behaviors, both evidence and opinions are more equivocal. Several meta-analyses have concluded that aggressive video games may contribute to aggressive behaviors (e.g. Anderson et al. 2010; Prescott et al. 2018). However, reanalysis of Anderson et al. (2010) has suggested that publication bias inflated outcomes, particularly for experimental studies (Hilgard et al. 2017). For Prescott et al. (2018), it is less clear that the evidence supports the authors’ conclusions. Only very small effect sizes were found (approximately r = 0.08). Most included studies relied on self-report and unstandardized measures and were not preregistered, increasing potential for spurious findings. By contrast, other meta-analyses (e.g. Ferguson 2015a; Sherry 2007) have not concluded that sufficient evidence links aggressive video games to aggressive behaviors. These meta-analyses also have resulted in disagreements and criticisms (e.g. Rothstein and Bushman 2015) although the Ferguson (2015a, 2015b) meta-analysis was also independently replicated (Furuya-Kanamori and Doi 2016). Nonetheless, significant disagreements remain among scholars about which pools of evidence are most convincing. The American Psychological Association (APA) has concluded that aggressive video games are not related to violence but may be related to aggression (American Psychological Association 2015), but the APA statement also was critiqued for flawed methods and potential biases (Elson et al. 2019).

Critiques of Aggressive Video Game Research

Disagreements among scholars stem from concerns regarding several issues. These include systematic methodological issues that may influence effect sizes, and the interpretability of those effect sizes and their generalizability to real-world aggression. Critiques of laboratory-based aggression studies have been well-elucidated elsewhere (McCarthy and Elson 2018; Zendle et al. 2018). As the current article focuses on longitudinal effects, this review will focus on that area.

At present, perhaps two dozen longitudinal studies have examined the impact of aggressive video games on long-term aggression in minors (e.g. Breuer et al. 2015; Lobel et al. 2017; von Salisch et al. 2011). Results have been mixed, with effect sizes generally below r = 0.10. However, these studies vary in quality. Some do not adequately control for theoretically relevant third variables (such as gender, given that boys both play more aggressive video games and are more physically aggressive than girls). Concerns have been raised about the unstandardized use of both predictor and outcome variables, such that these variables have been constructed differently between articles by the same research group using the same dataset (Przybylski and Weinstein 2019). This raises the possibility of questionable researcher practices that may be inflating effect sizes. This also raises the possibility that effect sizes in meta-analyses may be inflated in ways that are difficult to detect via traditional publication bias tests. Other issues involve the use of ad hoc measures, which lack standardization or clinical validity, making interpretation of the results difficult.

In addition to the methodological concerns there are also, as noted, disagreements about the interpretability of tiny effect sizes even when “statistically significant”. For decades, it has been understood that relying on statistical significance can produce interpretation errors (Wilkinson and Task Force for Statistical Inference 1999). This is particularly true in large sample size studies, wherein increased power can cause noise or “crud factor” (herein defined as spurious correlations caused by common methods variance, demand characteristics, or other survey research limitations) to become statistically significant, despite having no relation to real-world effects. Thus, the potential for overinterpretation of tiny effect sizes from large sample size studies is significant, and the Type I error rate of such effects is likely high. As such, some scholars have suggested adopting a minimal threshold for interpretation of r = 0.10 in order to minimize the potential for overinterpretation of spurious findings from large studies (Orben and Przybylski 2019a).
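The point about sample size can be made concrete with a rough calculation. The following is a minimal sketch, not part of the original analyses: it uses a normal approximation to the t distribution (reasonable only for large samples), and the function names are illustrative assumptions.

```python
from math import sqrt, erfc

def p_value_for_r(r, n):
    """Two-sided p value for a Pearson correlation r with n cases.

    Assumption: the t statistic is treated as standard normal, which is
    only a good approximation when n is large.
    """
    t = r * sqrt((n - 2) / (1 - r ** 2))
    return erfc(abs(t) / sqrt(2))

def smallest_significant_r(n, z_crit=1.959964):
    """Smallest |r| reaching two-sided p < .05 under the same approximation.

    Solves r * sqrt((n - 2) / (1 - r^2)) = z_crit for r.
    """
    return z_crit / sqrt(n - 2 + z_crit ** 2)
```

Under these assumptions, `smallest_significant_r(3034)` is roughly 0.036, so with a sample the size of the one analyzed here a correlation far below the r = 0.10 interpretive threshold can still be "statistically significant", whereas the same correlation in a sample of 100 would not be.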

The potential for overinterpretation of crud factor results is particularly relevant to meta-analysis. For instance, one recent meta-analysis (Prescott et al. 2018) concluded that aggressive video games are linked longitudinally to aggression based on a very weak effect size (r = 0.08). The basis of this decision seems to have been that this effect was “statistically significant” despite heterogeneity in findings among the individual studies. However, owing to highly enhanced power, almost all meta-analyses are statistically significant, so using this as an index of evidence is dubious. Such tiny effects may not reflect population effect sizes but may simply be the product of systematic methodological limitations and demand characteristics of the included studies.

One approach to examining whether tiny effect sizes are meaningful has been to compare them to nonsense relationships. In other words, compare effect sizes for the relationship of interest (in this case aggressive video games and player aggression) to effect sizes for the theoretical predictor variable (aggressive video games) on outcomes theoretically unrelated (or vice versa, the theoretical outcome with nonsense predictors), where relationships are expected to be practically no different from zero. Orben and Przybylski (2019b) did this with screen time and mental health. Examining several datasets, they demonstrated that, in large samples, screen time tended to produce very tiny but statistically significant relationships with mental health. However, these were no different in magnitude from several nonsense relationships such as the relationship between eating bananas and mental health or between wearing eyeglasses and mental health (both of which were also statistically significant). By making such comparisons, it is possible to come to an understanding of whether an observed statistically significant effect size is meaningful, or likely an artifact that became statistically significant due to the increased power of large samples.

Theoretically Relevant Control Variables

As noted earlier, it is considered the gold standard of media effects research to ensure that theoretically relevant third variables are adequately controlled in multivariate analyses (Przybylski and Mishkin 2016; Savage 2004). Without doing so, bivariate correlations are likely to be spuriously high and misinform. The most obvious third variable is gender, given higher rates of both aggressive video game play and physical aggression in boys (Olson 2010). Without controlling for gender, any correlation between aggressive video games and aggression may simply be a feature of boyness.

The need for proper control variables can be informed by the Catalyst Model (Ferguson and Beaver 2009; Surette 2013) which is a diathesis-stress model of violence. This model posits that violence propensity results from genetic inheritance coupled with early environmental influences, particularly family environment. These lead to development of a personality style particularly prone to aggressiveness and hostile attributions. Decisions whether to engage in violence or aggression can be further hampered by difficulties with self-control. From this theoretical perspective, controlling for variables such as family environment, early aggressiveness and issues related to self-control and impulse control are important.

Thus, control variables have been generally well laid out for aggressive video game studies. These typically include the Time 1 (T1) outcome variable, as well as variables related to family environment (DeCamp 2015), self-control and impulsiveness (Schwartz et al. 2017) as well as intelligence (Jambroes et al. 2018). Multivariate analyses with proper controls can help elucidate the added predictive value of aggressive video game play above well-known risk factors for increased aggression.

The Singapore Dataset

The current study consists of a reanalysis of a large dataset from Singapore (henceforth simply “Singapore dataset”) that has been used several times previously (see Przybylski and Weinstein 2019 for full listing and discussion, pp. 2–3). The validity of previous studies using this dataset has been questioned (Przybylski and Weinstein 2019). This is not because the dataset is inherently of poor quality, but rather because variables, and particularly the aggressive video game variable, had been calculated differently across publications by the same scholars. For instance (see Ferguson 2015b), using the Singapore dataset violent game exposure has been calculated by: (1) multiplying self-rated violent content by hours spent playing for three different games, and averaging scores (Gentile et al. 2009), (2) a 4-item measure of violence exposure in games with no reliability mentioned (Gentile et al. 2011), (3) changing the 4-item measure to a 2-item measure with mean frequency calculated across three games with no involvement of time spent playing (Busching et al. 2013), (4) a 9-item scale comprising gaming frequency and three favorite games with violent and prosocial content (Gentile et al. 2014), and (5) a 6-item scale also comprising gaming frequency, three favorite games and 2-item violent content questions (Prot et al. 2014). In some studies, the authors do not provide enough information to understand how the video game variables were created and whether violent and prosocial video game questions were treated separately or combined (e.g., Gentile et al. 2014). This phenomenon, often described as the “garden of forking paths”, greatly inflates Type I error by giving researchers undesired freedom to manipulate outcomes to fit hypotheses (Gelman and Lokens 2013).

This has raised concern that questionable researcher practices may have caused false positive results from some studies linking aggressive video games to long-term aggression. Relatedly, the dataset includes multiple measures of aggressive and prosocial behavior, but not all were reported in each article. Creating a standardized measurement for aggressive video games and using it consistently with this dataset can reduce false positive results. Careful use of theoretically relevant control variables was also lacking in many published studies, also potentially resulting in false positive results. Lastly, none of the previous studies were preregistered. Thus, there is value in conducting a reexamination of this otherwise fine dataset using a preregistered set of analyses and standardized assessment of key variables, to examine the validity of prior conclusions.

The Current Study

The present study reassesses links between aggressive video games and aggression in a large sample of youth from Singapore. These analyses test the straightforward hypotheses that aggressive video games are related to increased aggression and decreased prosocial behaviors. Seven outcome variables were preregistered, namely: Prosocial Behavior, Physically Aggressive Behavior, Socially Aggressive Behavior, Aggressive Fantasies, Cyberbullying Perpetration, Trait Anger, Trait Forgiveness.

This analysis used several approaches to reduce Type I error. First, this analysis has been preregistered (the preregistration can be found at: https://osf.io/2dwmr). It is certified that the authors preregistered these methods and analysis before conducting any analyses with the dataset. Second, standardized assessments are used for all variables. The aggressive video games variable is calculated in a way typical for most aggressive video game studies and is detailed specifically. Any further analyses or studies using this dataset should use this standardized approach and not vary from it. All other measures used full scale scores unless detailed otherwise. Third, theoretically relevant control variables were preregistered and employed. Lastly, all relevant outcome variables related to aggression and prosocial behavior are reported in this article. All outcome variables were preregistered prior to any analyses. No analyses were excluded or included specifically based on outcome, statistical significance, etc. The current article uses the 21-word statement suggested by Simmons et al. (2012, p. 4): “We report how we determined our sample size, all data exclusions (if any), all manipulations, and all measures in the study”.

As noted, effect sizes have often been very small in aggressive video game research, and their meaningfulness is debated. One way to examine the meaningfulness of effect sizes is to compare hypothesized effect sizes to nonsense effect sizes, that is to say, effect sizes for variables not thought to be practically related to aggressive video games. If nonsense outcomes and aggression/prosocial outcomes are of similar effect size magnitude, this is a further argument that such effect sizes should not be interpreted as meaningful, even if statistically significant. This approach was pioneered by Orben and Przybylski (2019b) in relation to screen time. Further, as recommended by Orben and Przybylski (2019a), an effect size cut-off of r = 0.10 will be employed as the threshold for minimal effects of interpretive value.

Methods

Participants

Participants in the current study were 3034 youth from Singapore. Of the sample 72.8% reported being male. Mean age at time 1 (T1) was 11.21 (SD = 2.06). Mean age at time 3 (T3) was 13.12 (SD = 2.13). The majority of the sample were ethnic Chinese (72.6%), with smaller numbers of Malay (14.2%), Indian (8.7%) and others. This is consistent with the ethnic composition of Singapore. As indicated above, participants were surveyed three times at 1-year intervals.

Materials

All measures discussed below were Likert-scale unless detailed otherwise. Also, full scale scores were averaged across individual items unless otherwise indicated for each measure. All control or predictor variables were assessed at T1 unless otherwise noted, whereas all outcome variables were assessed at T3 unless otherwise noted.

Aggressive video games (AVGs, main predictor)

Assessment of video game exposure can be difficult to do reliably and, as noted above, one concern with past use of this dataset is that assessment of aggressive video games in past studies demonstrated the potential for questionable researcher practices (Przybylski and Weinstein 2019). The current study adopted a standard approach to assessing aggressive video game exposure (Olson et al. 2007). Participants were asked to rate 3 video games they currently played and how often they played them both on weekdays and weekends. The researchers obtained ESRB (Entertainment Software Ratings Board) ratings for each of the games, which have been found to be a reliable and valid estimate of violent content (Ferguson 2011). For each game, the ordinal value of the ESRB rating (1 = ‘EC’ through 5 = ‘M’) was multiplied by average daily hours played. An average of these composite scores for the three games was then computed.
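The composite described above can be sketched as follows. This is an illustration under stated assumptions rather than the study's actual scoring script: the text gives only the endpoints of the rating scale (1 = EC, 5 = M), so the intermediate codes below are assumed from the usual ESRB ordering, and the 5:2 weekday/weekend weighting used to obtain average daily hours is likewise an assumption.

```python
# Ordinal coding of ESRB ratings. Only 1 = 'EC' and 5 = 'M' are stated in the
# text; the intermediate values are an assumption based on ESRB ordering.
ESRB_ORDINAL = {"EC": 1, "E": 2, "E10+": 3, "T": 4, "M": 5}

def average_daily_hours(weekday_hours, weekend_hours):
    """Average hours per day across a week.

    Assumption: five weekdays and two weekend days are weighted 5:2.
    """
    return (5 * weekday_hours + 2 * weekend_hours) / 7

def avg_exposure(games):
    """Mean of (ESRB ordinal x average daily hours) across the listed games.

    `games` is a list of (rating, weekday_hours, weekend_hours) tuples,
    one per game the participant reported.
    """
    scores = [
        ESRB_ORDINAL[rating] * average_daily_hours(weekday, weekend)
        for rating, weekday, weekend in games
    ]
    return sum(scores) / len(scores)
```

For example, a hypothetical participant playing one M-rated, one E-rated and one T-rated game for one hour per day each would score (5 + 2 + 4) / 3, or about 3.67.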

It is noted that this method for computing the scores was preregistered before any data analysis and was not changed from the preregistration. Second, it is certified that any future articles using the aggressive video game variable will maintain these calculated scores. Lastly, it is advised that other authors using this dataset adhere to this standardized method of computing aggressive video games for consistency and to avoid questionable researcher practices. Though no special claim to brilliance is made in devising the best possible scale, using this scale consistently across papers can reduce Type I error due to methodological flexibility and make comparisons across papers more consistent.

Demographics (control variables)

Sex, age at T1 and mother’s reported years of education were used as basic control variables.

T1 aggressiveness (control variables)

In longitudinal analyses it is important to control for the T1 variable in order to limit potential selection effects. In this case, the main outcome variables related to aggressive behavior were not assessed at T1, so to employ a consistent set of T1 selection controls, two variables assessed at T1 related to aggressiveness were employed. These include the Normative Beliefs in Aggression Scale (NOBAGS, Huesmann and Guerra 1997). This was a 20-item scale (alpha = 0.935), that asks youth whether use of aggression is acceptable in varying circumstances. The second measure was a scale for Hostile Attribution Bias (Crick 1995) which presented youth with six ambiguous scenarios and asked youth to rate the aggressive intent of characters in each scenario (alpha = 0.643). Taken together, these two measures appear to function adequately to assess aggressiveness at T1.
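For readers less familiar with the reliability coefficients reported throughout this section, the sketch below shows how a scale score and Cronbach's alpha are conventionally computed. It is a generic illustration, not the code used for this study, and the function names are assumptions.

```python
from statistics import variance

def scale_score(responses):
    """Full scale score as the mean of one respondent's item responses."""
    return sum(responses) / len(responses)

def cronbach_alpha(items):
    """Cronbach's alpha for a set of items.

    `items` is a list of per-item response lists, with respondents in the
    same order in every list and no missing values.
    """
    k = len(items)
    # Total score per respondent, summing across items.
    totals = [sum(resp) for resp in zip(*items)]
    sum_item_variances = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - sum_item_variances / variance(totals))
```

Alpha approaches 1 when items covary strongly and falls toward 0 when they are unrelated, which is why values in the 0.6 range (such as the hostile attribution measure) indicate weaker internal consistency than values above 0.9.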

T1 self-control (control variables)

Given evidence that self-control is associated with aggressive behavior (Schwartz et al. 2017), two measures of initial self-control were included as controls. These included a 6-item measure of self-control (alpha = 0.620), which included items related to handling stress and losing temper, as well as a 14-item measure of impulse control problems, which assessed inattentiveness, impulsive behaviors and excitability (Liau et al. 2011).

T1 intelligence (control variable)

The Ravens Progressive Matrices were used to assess non-verbal intelligence in the youth at T1. The Ravens has generally been found to be a reliable and valid measure of intelligence across cultures (e.g. Shamama-tus-Sabah et al. 2012), although comparisons between cultures may not be advised. Given intelligence is an important factor in serious aggression (Hampton et al. 2014) it was considered important to control for. Full scale scores were used.

Family environment (control variable)

Given evidence family environment can influence aggression (DeCamp 2015), a six-item measure of family environment was included (alpha = 0.772; Glezer 1984). Items asked about whether youth felt it was pleasant living at home, whether they felt accepted or whether there were too many arguments.

Prosocial behavior and empathy (T3 outcome, T1 control)

Prosocial behavior and empathy were assessed using the helping and cooperation subscales (18 items, alpha = 0.827 at T1, 0.834 at T3) of the Prosocial Orientation Questionnaire (Cheung et al. 1998). Items asked about willingness to help or volunteer such as “I would help my friends when they have a problem.” This variable was assessed as a T3 outcome. For that analysis only the T1 variable was included as an additional control variable.

Aggressive behavior (outcome)

Aggressive behavior was assessed using a measure that included both physical (6 items, alpha = 0.869) and relational (6 items, alpha = 0.796) aggression (Linder et al. 2002; Morales and Crick 1998). Physical aggression asked about assaultive behaviors such as “When someone makes me really angry, I push or shove the person” whereas relational aggression was more social in nature rather than physical “When I am not invited to do something with a group of people, I will exclude those people from future activities.” These were assessed as separate outcome measures.

Aggressive fantasies (outcome)

Aggressive fantasies were measured using a 6-item scale (alpha = 0.839) that assessed whether youth harbored fantasies about harming others (Nadel et al. 1996). An example item is “Do you sometimes imagine or have daydreams about hitting or hurting somebody that you don’t like?”

Cyberbullying (outcome)

Cyberbullying perpetration was assessed using six items related to whether youth had been rude to, spread rumors about or threatened others on the internet (alpha = 0.888; Barlett and Gentile 2012).

Trait anger (outcome)

To assess for trait anger, a 6-item scale was employed (alpha = 0.823; Buss and Perry 1992) to assess the degree to which youth felt ongoing anger or reacted to anger badly. A sample item is “I have trouble controlling my temper.” A seventh item (#4) was found to have poor reliability with the other items and was not included in the averaged scale score. This decision was made prior to any data analysis.

Trait forgiveness (outcome)

Trait forgiveness was assessed with a 10-item scale (alpha = 0.668; Berry et al. 2005), which asked about willingness to be merciful or forgiving of others who had done the youth harm. A sample item is “I try to forgive others even when they don’t feel guilty for what they did.”

Nonsense outcomes

Several nonsense outcomes were chosen for lack of theoretical link between them and aggressive video game exposure. These included T3 height, T2 myopia (the only variable taken from T2 as this was not available at T3), age the youth moved to Singapore (if they were not born there) and whether the youth’s father was born in Singapore. Two scale scores were also included, a 17-item scale related to T3 social phobia (alpha = 0.920) and a 10-item scale related to somatic complaints such as back pain, headaches, etc., at T3 (alpha = 0.878). A PsycINFO subject search for “violent video games” and “social phobia” turned up 0 hits. A similar search using the term “somatic” likewise turned up 0 hits. Therefore, it appears reasonable that these two scale scores are suitable nonsense outcomes with little theoretical link to aggressive video games.

Procedures

Participants in the study were 3034 students from 6 primary schools and 6 secondary schools in Singapore. The longitudinal aspect of the study involved following this cohort over the three-year period. The second wave of the longitudinal survey study was conducted a year after the first wave. Procedures were similar to Wave 1. The third wave of the longitudinal survey study was conducted a year after the second.

Four sets of counterbalanced (i.e., presented in differing orders to reduce ordering effects) questionnaires were delivered to all the schools. Letters of parental consent were sent to the parents through the schools. A liaison teacher from each school collated the information and excluded students from the study whose parents refused consent. The questionnaires were administered in the classrooms with the help of schoolteachers at the convenience of the schools. Detailed instructions were given to schoolteachers who helped in the administration of the survey.

Students were told that participation in the survey was voluntary and they could withdraw at any time. Privacy of the students’ responses was assured by requiring the teachers to seal collected questionnaires in the envelopes provided in the presence of the students. It was also highlighted on the questionnaires that the students’ responses would be read only by the researchers.

In the second and third years of the project, students who had to be followed up were no longer in the classes together with their previous cohorts but were distributed across different classes together with other students who did not participate in the project.

All schools involved preferred to administer the questionnaires by classes rather than have the selected students taken out of their classes for the study. As a result of this administrative convenience, students not involved in the project were also surveyed.

All analyses were preregistered. Control variables were consistent across analyses, with the exception of including T1 prosocial/empathy when assessing T3 prosocial/empathy. All regressions used OLS with pairwise deletion for missing data. Analyses of VIF revealed lack of collinearity issues for all analyses, with no VIF outcomes reaching 2.0.
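The following sketch illustrates what pairwise deletion and a VIF check involve. It is deliberately simplified to two predictors so that closed-form expressions can be used; the actual models included many more controls, and the function names are illustrative assumptions rather than the study's code.

```python
from math import sqrt

def pairwise_r(x, y):
    """Pearson r using only cases observed on both variables.

    Missing values are represented as None. Under pairwise deletion each
    correlation uses every case available for that particular pair, so
    different correlations may rest on different subsets of participants.
    """
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    sxx = sum((a - mean_x) ** 2 for a, _ in pairs)
    syy = sum((b - mean_y) ** 2 for _, b in pairs)
    return sxy / sqrt(sxx * syy)

def two_predictor_betas(r_y1, r_y2, r_12):
    """Standardized OLS coefficients for two predictors, from correlations."""
    denom = 1 - r_12 ** 2
    return (r_y1 - r_y2 * r_12) / denom, (r_y2 - r_y1 * r_12) / denom

def vif_two_predictors(r_12):
    """Variance inflation factor when there are exactly two predictors."""
    return 1 / (1 - r_12 ** 2)
```

In the two-predictor case a VIF below 2.0 corresponds to a correlation between the predictors of less than about 0.71 in absolute value; with more predictors, the same bound applies to the multiple correlation of each predictor with the others.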

Results

A correlation matrix of variables is presented as Table 1. Note, all regression models were significant at p < 0.001, including for nonsense outcomes, except for father’s birthplace which was significant at p = 0.003.

Main Study Hypotheses

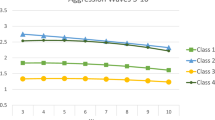

Standardized regression coefficients are presented for all main study outcomes in Table 2. For none of the outcomes was aggressive video game exposure related to aggression or prosocial related outcomes. Although no single predictor was significant across all outcomes, the most consistent predictors of outcomes included female sex (as a protective factor), positive family environment (as a protective factor) and initial problems with impulse control (as a risk factor). Prosocial behavior was also largely consistent across time.

Results for nonsense outcomes are presented in Table 3. Surprisingly, exposure to aggressive video games was a significant predictor of earlier age moved to Singapore. As there is no theoretical reason for such a relationship, this highlights how statistically significant outcomes with even non-trivial effects can sometimes be reported, which may be overinterpreted by scholars favoring their hypotheses.

The mean of the absolute value of effect sizes for aggressive video game exposure on hypothesized outcomes was r = 0.032. The mean of the absolute value of effect sizes for nonsense variables was actually higher at r = 0.039. If the largest value for the nonsense outcomes is removed this reduces the effect size for the nonsense variables to r = 0.022. However, eliminating the largest value from the hypothesized outcomes likewise reduces the mean effect size to r = 0.023. Thus, it appears likely that the effect sizes for the hypothesized effects and nonsense effects are equivalent in approximate value.
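The comparison just described amounts to a few lines of arithmetic, sketched below. The coefficient lists are hypothetical placeholders chosen only to exercise the functions; they are not the values from Tables 2 and 3, and the function names are assumptions.

```python
def mean_abs(effects):
    """Mean of the absolute values of a list of effect sizes."""
    return sum(abs(r) for r in effects) / len(effects)

def mean_abs_without_largest(effects):
    """Mean absolute effect size after dropping the single largest |r|."""
    trimmed = sorted((abs(r) for r in effects), reverse=True)[1:]
    return sum(trimmed) / len(trimmed)

def exceeds_threshold(effects, cutoff=0.10):
    """Flag which effects reach the preregistered interpretive cutoff."""
    return [abs(r) >= cutoff for r in effects]

# Hypothetical coefficients for illustration only (NOT the study's results).
hypothesized = [0.05, -0.02, 0.01]
nonsense = [-0.08, 0.01, 0.02]
```

Taking absolute values matters here because hypothesized effects differ in expected direction (for example, aggression versus prosocial behavior), so signed coefficients would partly cancel.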

Exploratory Analysis not in Preregistration

To examine for methods variance issues, all regressions were rerun with listwise deletion for missing data rather than pairwise. Results did not substantially change, suggesting that methods variance issues are not in play with the results. Effect sizes for some outcomes (such as cyberbullying) were slightly smaller for listwise deletion, but pairwise deletion results are shown in the table, consistent with the preregistration.

Another means by which to consider the practical value of a predictor is to examine how much of that predictor would be required to achieve a clinically observable effect in real life. Orben and Przybylski (2019b) pioneered this approach using screen time and mental health outcomes. In clinical work a clinically significant outcome is typically defined as approximately 1 SD above the mean (more generously for the hypothesis, a 0.5 SD threshold could also be applied). Unstandardized regression coefficients can then be used to calculate how much of the predictor variable is required to push the outcome variable to observable clinical significance.

This is only possible if the predictor variable itself exists in observable metrics such as time. Thus, Orben and Przybylski were able to calculate how many hours per day of screen time were required to create a clinically observable effect on mental health in youth. However, aggressive video game exposure as a combined measure of time and violent content does not really work effectively in this sense. Thus, a new variable was created using only M-rated (the highest rating for commercially sold games) games, calculating time spent playing M-rated games specifically. This allowed calculating a mean hours/day figure for such games. Physical aggression was used as the main outcome, as this was likely the outcome of greatest interest. For this variable the mean value was 1.524, on a range of 1 through 4 (SD = 0.593). Thus, a 1 SD increase would be 2.117, whereas a 0.5 SD increase would be 1.821.

The regression for the physical aggression outcome was then rerun replacing aggressive video game exposure with time spent (hours/day average) on M-rated video games. As with the preregistered regression, the result was non-significant for M-rated game use (β = 0.022). However, if non-significance is ignored and it is assumed that this effect size might nonetheless be meaningful, then the unstandardized regression coefficient (b = 0.022, SE = 0.023) can be used to calculate clinical significance. Under this assumption, each daily hour spent on M-rated video games would correspond to an increase of 0.022 in the measure of physical aggression. Dividing the 1 SD gap of 0.593 by 0.022 indicates that it would take approximately 27 h/day of M-rated video game play to raise aggression to a clinically observable level, assuming effects were causal (approximately 13.5 h/day for half a standard deviation).
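The arithmetic behind these figures can be reproduced with a short sketch. It uses only the values reported in the text (mean, SD and the unstandardized coefficient); the function names are illustrative, and the calculation assumes, as the text does, a linear and causal effect.

```python
# Values reported in the text for physical aggression (scale of 1 through 4).
MEAN = 1.524
SD = 0.593
B_PER_HOUR = 0.022  # unstandardized coefficient per daily hour of M-rated play


def threshold(sd_multiple, mean=MEAN, sd=SD):
    """Outcome score lying sd_multiple standard deviations above the mean."""
    return mean + sd_multiple * sd


def hours_needed(sd_multiple, sd=SD, b=B_PER_HOUR):
    """Daily hours of play needed to shift the outcome by sd_multiple SDs,
    assuming the effect is linear and causal (an assumption, not a finding)."""
    return sd_multiple * sd / b


for multiple in (1.0, 0.5):
    print(
        f"{multiple} SD: threshold = {threshold(multiple):.2f}, "
        f"hours/day = {hours_needed(multiple):.1f}"
    )
```

For the 1 SD criterion the result is roughly 27 h/day, and for the 0.5 SD criterion roughly 13.5 h/day, matching the figures above.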

Discussion

Controversy continues regarding whether aggressive video games contribute to aggression in real life. Neither individual longitudinal studies nor meta-analyses have come to a conclusion regarding whether real-life effects exist. In some cases, undue flexibility in analytic methods may have created false positive results (Przybylski and Weinstein 2019). To assess for this, the current article examined data from a large longitudinal study of youth in Singapore using preregistration and standardized measures. Current results found that aggressive video game exposure was not linked to either aggressive behavior or prosocial behavior two years later among youth. Regarding clinical significance, current results suggest that it would require more hours of M-rated game play to produce clinically significant aggression than exist in a day. Therefore, data from this study do not suggest that aggressive video games contribute to real-world aggression.

These results fit with numerous other recent longitudinal analyses (e.g. Breuer et al. 2015; Lobel et al. 2017; von Salisch et al. 2011) that have found no long-term predictive relationship between aggressive video games and future aggression in youth. To the extent that youth aggression is multi-determined, aggressive video game exposure does not appear to be one of the risk factors for such outcomes. Quotes such as “Violent video games are just one risk factor. They’re not the biggest, and they’re not the smallest. They’re right in the middle, with kind of the same effect size as coming from a broken home,” (Gentile, quoted in Almendraia 2014) appear to be entirely incorrect. Aggressive video game playing does not appear to be a risk factor for future youth aggression at all and certainly should not be compared to the influence of broken homes. It is argued that researchers need to be far more cautious in communicating longitudinal effects for aggressive video games to the general public. Overall, evidence does not appear to support such a link. The current study not only adds to this evidence but also reanalyzes evidence that was sometimes used to support such claims. With preregistration and proper controls, it is clear that the Singapore dataset should not be considered evidentiary in support of long-term aggressive video game influences on youth. Given that few longitudinal studies provide effect sizes above r = 0.10 for any form of deleterious effect, claims for long-term harms from aggressive video game exposure have simply not been substantiated.

The current analyses have several implications. The first is for meta-analyses. Most meta-analyses compile effect sizes from reported articles under the assumption that the reported effect sizes are reasonably accurate and representative of population effect sizes. However, as indicated above, flexibility in methods and unstandardized assessments may cause spuriously high effect size estimates (Przybylski and Weinstein 2019), causing errors in meta-analysis. Recent preregistered studies of aggressive video game effects, of which there are perhaps half a dozen, have generally not found evidence for negative effects (e.g. McCarthy et al. 2016; Przybylski and Weinstein 2019, although see Ivory et al. 2017 for one high-quality exception). Thus, most extant meta-analyses may be compounding the issue of spurious effects reported in individual studies.

The second issue concerns the interpretation of potentially trivial effects. In many studies, including this one, reported effect sizes are below r = 0.10. Nonetheless, with large sample sizes, these may become statistically significant. The current analysis suggests that relying on statistical significance is likely to cause spurious interpretation of trivial effects. In the current analysis, the effect sizes for aggressive video game exposure predicting nonsense outcomes were equivalent to those for predicting aggression or prosocial outcomes. Similar results have been found in other studies which have examined this issue (e.g. Orben and Przybylski 2019b). These findings support the concern that the risk of Type I error in large samples with small effect sizes is intolerably high, often resulting in misinterpretation of findings that do not, in fact, provide evidence for study hypotheses. Given that many such outcomes will have p-values much lower than .05, it is possible that traditional publication bias analyses may have difficulty detecting spurious outcomes, even if they are the result of questionable researcher practices, as has been noted for previous articles using this dataset (Przybylski and Weinstein 2019). Thus, the current article supports Orben and Przybylski (2019a) in recommending against interpreting effect sizes below r = 0.10, at least in this domain.
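How small an effect can reach conventional significance in a sample of this size can be sketched as follows. This is an illustration only: it assumes a simple bivariate correlation computed on the full sample of 3034, a two-tailed alpha of .05, and a normal approximation to the t distribution. It does not reproduce the regressions with covariates or the study's own preregistered criteria, and the function name `critical_r` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def critical_r(n, alpha=0.05):
    """Smallest |r| that reaches two-tailed significance at level alpha
    for a bivariate correlation in a sample of size n.

    Uses the normal approximation to the t distribution, which is close
    for large n. Derived from t = r * sqrt(df) / sqrt(1 - r**2).
    """
    z = NormalDist().inv_cdf(1 - alpha / 2)
    df = n - 2
    return z / sqrt(z * z + df)


N = 3034  # sample size reported for the Singapore dataset
print(f"critical |r| at n = {N}: {critical_r(N):.3f}")  # about 0.036
```

Under these assumptions, correlations well under a third of the r = 0.10 benchmark would already be flagged as statistically significant, which is the concern raised above.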

It is worth noting some of the predictors that were significant. Both female gender and a positive family environment were protective factors, whereas impulse control problems were risk factors for negative outcomes. Thus, public policies that aim to strengthen families and increase youth impulse control are likely to be more productive than those that target video games.

Developmental Implications

Much of the previous few decades of scholarship has evolved with a tacit understanding that children act as passive imitators, with little distinction in their modeling between real-life and fictional events. This has led to sometimes sweeping conclusions about the harmfulness of a variety of media experiences, not limited to violent content. Perhaps most notable in relation to video games was the APA’s recent (2015) resolution connecting aggressive video games to aggression in real life (though not violent crime).

Increasingly, however, research, particularly that which is preregistered and standardized, has had difficulty finding evidence that exposure to fictional media, and aggressive video games specifically, is connected to the development of more aggressive profiles among youth. These newer results suggest that media experiences for youth may be more nuanced and complex than simply connecting “naughty” media to negative outcomes. The current study joins this expanding pool of research in suggesting that resolutions such as that by the APA are not consistent with the cumulative pool of preregistered studies using standardized measures (e.g. Przybylski and Weinstein 2019). Put simply, the APA resolution on aggressive video games does not reflect current best science.

This has important implications for policy insofar as policies aimed at reducing youth exposure to aggressive video games are unlikely to result in positive developmental outcomes. However, such policies may come with significant costs, including restrictions on freedom of speech, limiting youth creative experiences, stigmatizing the use of games in education, and stigmatizing gaming as a hobby and gamers as a community. With little evidence to suggest that policies geared toward reducing aggressive video game exposure are likely to have positive practical outcomes, such policy efforts are not recommended in the future.

Limitations

As with all studies, ours has limitations. All measures were youth self-report. Self-report measures are not always fully reliable and can be subject to single-responder bias. Further studies using multiple responders would be desirable. Data in the current study are correlational and no causal attributions can be made. Lastly, determining a valid measure of aggressive video game exposure based on self-report can be difficult. Here the current study used a standardized and replicable approach, which is an improvement upon some previous approaches. However, quantifying aggressive video game exposure by using time spent on multiple games can cause some measurement error.

Conclusion

The issue of the impact of aggressive video games on youth aggression continues to be debated. There appears to be some confusion among scholars (e.g. Prescott et al. 2018) regarding whether current evidence supports long-term links between aggressive video games and youth aggression, despite most longitudinal studies failing to demonstrate robust results. The current article presents a preregistered, standardized assessment of aggressive video game effects using a large sample of Singapore youth. Results indicate that, when a preregistered and standardized measurement approach is used, this dataset does not support the hypothesis that aggressive video games are a risk factor for aggression in youth. Given some issues with researcher degrees of freedom in previous reports (see Przybylski and Weinstein 2019 for discussion), it is recommended that the current reported effect sizes be used to represent this dataset. The current analyses contribute to a growing number of studies that call into question whether aggressive video games function as a meaningful predictor of aggressive or prosocial behavior. It is hoped that these data further the ongoing debate on this issue.

Notes

There is a separate debate about whether the commonly used term “violent video game” is appropriately scholarly, or emotionally evocative and prejudicial. Other terms such as Kinetic Video Game, Conflict Oriented Game or Aggressive Video Game may be less visceral and more scholarly. The current article uses the last option, but it is suggested that scholars consider moving away from the term “violent video game.”

References

Almendraia, A. (2014). It’s not the video games that are making you angry, you’re just bad at them. Huffington Post. http://huffingtonpost.healthdogs.com/2014/04/08/violent-video-games_n_5112981.html.

American Psychological Association. (2015). Resolution on violent video games. https://www.apa.org/about/policy/violent-video-games.aspx.

Anderson, C., Ihori, N., Bushman, B. J., Rothstein, H. R., Shibuya, A., Swing, E. L., Sakamoto, A., & Saleem, M. (2010). Violent video game effects on aggression, empathy, and prosocial behavior in eastern and western countries: a meta-analytic review. Psychological Bulletin, 136(2), 151–173.

Australian Government, Attorney General’s Department. (2010). Literature review on the impact of playing violent video games on aggression. Canberra, Australia: Commonwealth of Australia.

Barlett, C. P., & Gentile, D. A. (2012). Attacking others online: the formation of cyberbullying in late adolescence. Psychology of Popular Media Culture, 1(2), 123.

Brown v EMA. (2011). http://www.supremecourt.gov/opinions/10pdf/08-1448.pdf.

Beerthuizen, M. G. C. J., Weijters, G., & van der Laan, A. M. (2017). The release of grand theft auto V and registered juvenile crime in the Netherlands. European Journal of Criminology, 14(6), 751–765. https://doi.org/10.1177/1477370817717070.

Berry, J. W., Worthington, E. L., O’Connor, L. E., Parrott, L., & Wade, N. G. (2005). Forgivingness, vengeful rumination, and affective traits. Journal of Personality, 73(1), 183–226.

Breuer, J., Vogelgesang, J., Quandt, T., & Festl, R. (2015). Violent video games and physical aggression: evidence for a selection effect among adolescents. Psychology of Popular Media Culture, 4(4), 305–328. https://doi.org/10.1037/ppm0000035.

Busching, R., Gentile, D. A., Krahé, B., Möller, I., Khoo, A., Walsh, D. A., & Anderson, C. A. (2013). Testing the reliability and validity of different measures of violent video game use in the United States, Singapore, and Germany. Psychology of Popular Media Culture. https://doi.org/10.1037/ppm0000004.

Bushman, B. J., Gollwitzer, M., & Cruz, C. (2015). There is broad consensus: media researchers agree that violent media increase aggression in children, and pediatricians and parents concur. Psychology of Popular Media Culture, 4(3), 200–214. https://doi.org/10.1037/ppm0000046.

Buss, A., & Perry, M. (1992). The aggression questionnaire. Journal of Personality and Social Psychology, 63(3), 452–459.

Cheung, P. C., Ma, H. K., & Shek, D. T. L. (1998). Conceptions of success: their correlates with prosocial orientation and behaviour in Chinese adolescents. Journal of Adolescence, 21(1), 31–42. https://doi.org/10.1006/jado.1997.0127.

Copenhaver, A. (2015). Violent video game legislation as pseudo-agenda. Criminal Justice Studies: A Critical Journal of Crime, Law and Society, 28(2), 170–185. https://doi.org/10.1080/1478601X.2014.966474.

Crick, N. R. (1995). Relational aggression: the role of intent attributions, feelings of distress, and provocation type. Development and Psychopathology, 7(2), 313–322. https://doi.org/10.1017/S0954579400006520.

DeCamp, W. (2015). Impersonal agencies of communication: comparing the effects of video games and other risk factors on violence. Psychology of Popular Media Culture, 4(4), 296–304. https://doi.org/10.1037/ppm0000037.

Elson, M., Ferguson, C. J., Gregerson, M., Hogg, J. L., Ivory, J., Klisanin, D., Markey, P. M., Nichols, D., Siddiqui, S., & Wilson, J. (2019). Do policy statements on media effects faithfully represent the science? Advances in Methods and Practices in Psychological Science, 2(1), 12–25.

Elson, M., Mohseni, M. R., Breuer, J., Scharkow, M., & Quandt, T. (2014). Press CRTT to measure aggressive behavior: the unstandardized use of the competitive reaction time task in aggression research. Psychological Assessment, 26, 419–432. https://doi.org/10.1037/a0035569.

Etchells, P., & Chambers, C. (2014). Violent video games research: consensus or confusion? The Guardian. http://www.theguardian.com/science/head-quarters/2014/oct/10/violent-video-games-research-consensus-or-confusion.

Federal Commission on School Safety. (2018). Final report of the Federal Commission on School Safety. Washington, DC: US Department of Education.

Ferguson, C. J. (2015a). Do angry birds make for angry children? A meta-analysis of video game influences on children’s and adolescents’ aggression, mental health, prosocial behavior, and academic performance. Perspectives on Psychological Science, 10(5), 646–666.

Ferguson, C. J. (2015b). Pay no attention to that data behind the curtain: on angry birds, happy children, scholarly squabbles, publication bias and why betas rule metas. Perspectives on Psychological Science, 10, 683–691.

Ferguson, C. J. (2011). Video games and youth violence: a prospective analysis in adolescents. Journal of Youth and Adolescence, 40(4), 377–391.

Ferguson, C. J., & Beaver, K. M. (2009). Natural born killers: the genetic origins of extreme violence. Aggression and Violent Behavior, 14(5), 286–294.

Ferguson, C. J., & Colwell, J. (2017). Understanding why scholars hold different views on the influences of video games on public health. Journal of Communication, 67(3), 305–327.

Furuya-Kanamori, F., & Doi, S. (2016). Angry birds, angry children and angry meta-analysts. Perspectives on Psychological Science, 11(3), 408–414.

Gelman, A., & Loken, E. (2013). The garden of forking paths: why multiple comparisons can be a problem, even when there is no “fishing expedition” or “p-hacking” and the research hypothesis was posited ahead of time. http://www.stat.columbia.edu/~gelman/research/unpublished/p_hacking.pdf.

Gentile, D. A., Anderson, C. A., Yukawa, S., Saleem, M., Lim, K. M., Shibuya, A., & Sakamoto, A. (2009). The effects of prosocial video games on prosocial behaviors: international evidence from correlational, longitudinal, and experimental studies. Personality and Social Psychology Bulletin, 35, 752–763.

Gentile, D. A., Choo, H., Liau, A., Sim, T., Li, D., Fung, D., & Khoo, A. (2011). Pathological video game use among youths: a two-year longitudinal study. Pediatrics, 127(2), e319–e329. https://doi.org/10.1542/peds.2010-1353.

Gentile, D., Li, D., Khoo, A., Prot, S., & Anderson, C. (2014). Mediators and moderators of long-term effects of violent video games on aggressive behavior practice, thinking, and action. JAMA Pediatrics, 168(5), 450–457. https://doi.org/10.1001/jamapediatrics.2014.63.

Glezer, H. (1984). Antecedents and correlates of marriage and family attitudes in young Australian men and women. In Proceedings of the XXth international committee on family research seminar conference on social change and family policies (Vol 1, pp. 201–255). Melbourne: Australian Institute of Family Studies.

Hampton, A. S., Drabick, D. A. G., & Steinberg, L. (2014). Does IQ moderate the relation between psychopathy and juvenile offending? Law and Human Behavior, 38(1), 23–33.

Hilgard, J., Engelhardt, C. R., & Rouder, J. N. (2017). Overstated evidence for short-term effects of violent games on affect and behavior: a reanalysis of Anderson et al. (2010). Psychological Bulletin, 143(7), 757–774. https://doi.org/10.1037/bul0000074.

Huesmann, L. R., & Guerra, N. G. (1997). Children’s normative beliefs about aggression and aggressive behavior. Journal of Personality and Social Psychology, 72(2), 408–419. https://doi.org/10.1037/0022-3514.72.2.408.

Ivory, A. H., Ivory, J. D., & Lanier, M. (2017). Video game use as risk exposure, protective incapacitation, or inconsequential activity among university students: comparing approaches in a unique risk environment. Journal of Media Psychology: Theories, Methods, and Applications, 29(1), 42–53. https://doi.org/10.1027/1864-1105/a000210.

Ivory, J. D., Markey, P. M., Elson, M., Colwell, J., Ferguson, C. J., Griffiths, M. D., & Williams, K. D. (2015). Manufacturing consensus in a diverse field of scholarly opinions: a comment on Bushman, Gollwitzer, and Cruz (2015). Psychology of Popular Media Culture, 4(3), 222–229. https://doi.org/10.1037/ppm0000056.

Jambroes, T., Jansen, L. M. C., v.d. Ven, P. M., Claassen, T., Glennon, J. C., Vermeiren, R. R. J. M., & Popma, A. (2018). Dimensions of psychopathy in relation to proactive and reactive aggression: does intelligence matter? Personality and Individual Differences, 129, 76–82. https://doi.org/10.1016/j.paid.2018.03.001.

Liau, A. K., Tan, Chow, D., Tan, T. K., & Konrad, S. (2011). Development and validation of the personal strengths inventory using exploratory and confirmatory factor analyses. Journal of Psychoeducational Assessment, 29(1), 14–26.

Linder, J. R., Crick, N. R., & Collins, W. A. (2002). Relational aggression and victimization in young adults’ romantic relationships: associations with perceptions of parent, peer, and romantic relationship quality. Social Development, 11(1), 69–86. https://doi.org/10.1111/1467-9507.00187.

Lobel, A., Engels, R. C. M. E., Stone, L. L., Burk, W. J., & Granic, I. (2017). Video gaming and children’s psychosocial wellbeing: a longitudinal study. Journal of Youth and Adolescence, 46(4), 884–897. https://doi.org/10.1007/s10964-017-0646-z.

Markey, P. M., Markey, C. N., & French, J. E. (2015). Violent video games and real-world violence: rhetoric versus data. Psychology of Popular Media Culture, 4(4), 277–295.

McCarthy, R. J., Coley, S. L., Wagner, M. F., Zengel, B., & Basham, A. (2016). Does playing video games with violent content temporarily increase aggressive inclinations? A pre-registered experimental study. Journal of Experimental Social Psychology, 67, 13–19. https://doi.org/10.1016/j.jesp.2015.10.009.

McCarthy, R. J., & Elson, M. (2018). A conceptual review of lab-based aggression paradigms. Collabra: Psychology, 4(1), 4. https://doi.org/10.1525/collabra.104.

Morales, J. R. & Crick, N. R. (1998). Self-report measure of aggression and victimization. Unpublished measure.

Nadel, H., Spellman, M., Alvarez-Canino, T., Lausell-Bryant, L., & Landsberg, G. (1996). The cycle of violence and victimization: a study of the school-based intervention of a multidisciplinary youth violence-prevention program. American Journal of Preventive Medicine, 12(5, Suppl), 109–119.

Olson, C. K. (2010). Children’s motivations for video game play in the context of normal development. Review of General Psychology, 14(2), 180–187. https://doi.org/10.1037/a0018984.

Olson, C. K., Kutner, L. A., Warner, D. E., Almerigi, J. B., Baer, L., Nicholi, II, A. M., & Beresin, E. V. (2007). Factors correlated with violent video game use by adolescent boys and girls. Journal of Adolescent Health, 41(1), 77–83. https://doi.org/10.1016/j.jadohealth.2007.01.001.

Orben, A., & Przybylski, A. (2019a). Screens, teens, and psychological well-being: evidence from three time-use-diary studies. Psychological Science. (in press).

Orben, A., & Przybylski, A. (2019b). The association between adolescent well-being and digital technology use. Nature Human Behaviour, 3(2), 173–182.

Prescott, A. T., Sargent, J. D., & Hull, J. G. (2018). Metaanalysis of the relationship between violent video game play and physical aggression over time. Proceedings of the National Academy of Sciences of the United States of America, 115(40), 9882–9888. https://doi.org/10.1073/pnas.1611617114.

Prot, S., Gentile, D. A., Anderson, C. A., Suzuki, K., Swing, E., Lim, K. M., & Lam, B. P. (2014). Long-term relations among prosocial-media use, empathy, and prosocial behavior. Psychological Science, 25(2), 358–368.

Przybylski, A. K., & Mishkin, A. F. (2016). How the quantity and quality of electronic gaming relates to adolescents’ academic engagement and psychosocial adjustment. Psychology of Popular Media Culture, 5(2), 145–156. https://doi.org/10.1037/ppm0000070.

Przybylski, A., & Weinstein, N. (2019). Violent video game engagement is not associated with adolescents’ aggressive behaviour: evidence from a registered report. Royal Society Open Science, 6, 171474. https://doi.org/10.1098/rsos.171474.

Quandt, T. (2017). Stepping back to advance: Why IGD needs an intensified debate instead of a consensus: commentary on: chaos and confusion in DSM-5 diagnosis of Internet Gaming Disorder: issues, concerns, and recommendations for clarity in the field (Kuss et al.). Journal of Behavioral Addictions, 6(2), 121–123. https://doi.org/10.1556/2006.6.2017.014.

Rothstein, H. R., & Bushman, B. J. (2015). Methodological and reporting errors in meta-analytic reviews make other meta-analysts angry: a commentary on Ferguson (2015). Perspectives on Psychological Science, 10(5), 677–679. https://doi.org/10.1177/1745691615592235.

Savage, J. (2004). Does viewing violent media really cause criminal violence? A methodological review. Aggression and Violent Behavior, 10, 99–128.

Savage, J., & Yancey, C. (2008). The effects of media violence exposure on criminal aggression: a meta-analysis. Criminal Justice and Behavior, 35, 1123–1136.

Schwartz, J. A., Connolly, E. J., Nedelec, J. L., & Beaver, K. M. (2017). An investigation of genetic and environmental influences across the distribution of self-control. Criminal Justice and Behavior, 44(9), 1163–1182. https://doi.org/10.1177/0093854817709495.

Shamama-tus-Sabah, S., Gilani, N., & Iftikhar, R. (2012). Ravens Progressive Matrices: psychometric evidence, gender and social class differences in middle childhood. Journal of Behavioural Sciences, 22(3), 120–131.

Sherry, J. (2007). Violent video games and aggression: why can’t we find links? In R. Preiss, B. Gayle, N. Burrell, M. Allen & J. Bryant (Eds), Mass media effects research: advances through meta-analysis (pp. 231–248). Mahwah, NJ: L. Erlbaum.

Simmons, J., Nelson, L. & Simonsohn, U. (2012). 21 word solution. https://ssrn.com/abstract=2160588 or https://doi.org/10.2139/ssrn.2160588 or http://spsp.org/sites/default/files/dialogue_26%282%29.pdf.

Surette, R. (2013). Cause or catalyst: the interaction of real world and media crime models. American Journal of Criminal Justice, 38, 392–409.

Swedish Media Council. (2011). Våldsamma datorspel och aggression—en översikt av forskningen 2000–2011. http://www.statensmedierad.se/Publikationer/Produkter/Valdsamma-datorspel-och-aggression/. Accessed 14 Jan 2011.

von Salisch, M., Vogelgesang, J., Kristen, A., & Oppl, C. (2011). Preference for violent electronic games and aggressive behavior among children: the beginning of the downward spiral? Media Psychology, 14(3), 233–258. https://doi.org/10.1080/15213269.2011.596468.

Wilkinson, L., & Task Force on Statistical Inference. (1999). Statistical methods in psychological journals: guidelines and explanations. American Psychologist, 54(8), 594–604. https://doi.org/10.1037/0003-066X.54.8.594.

Zendle, D., Cairns, P., & Kudenko, D. (2018). No priming in video games. Computers in Human Behavior, 78, 113–125. https://doi.org/10.1016/j.chb.2017.09.021.

Authors' Contributions

C.J.F. performed the data analyses and co-wrote the draft of the manuscript; C.K.J.W. conceived of the study, was co-PI on the original grant, conducted data collection and statistical analyses, and co-wrote the draft of the manuscript. All authors read and approved the final manuscript.

Funding

This project is supported by the Ministry of Education, Singapore and Media Development Authority (Project #EPI/06AK).

Data Sharing and Declaration

Data are available from the second author upon reasonable request.

Ethics declarations

Conflict of Interest

The authors have no conflicts of interest, real or imagined, to declare.

Ethical Approval

All procedures described within were approved by local IRB.

Informed Consent

Youth and their parents were provided with informed consent about the survey and its basic nature as part of the panel recruitment.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Ferguson, C.J., Wang, J.C.K. Aggressive Video Games are Not a Risk Factor for Future Aggression in Youth: A Longitudinal Study. J Youth Adolescence 48, 1439–1451 (2019). https://doi.org/10.1007/s10964-019-01069-0

DOI: https://doi.org/10.1007/s10964-019-01069-0