Abstract

During the last two decades, the first generation of beam combiners at the Very Large Telescope Interferometer has proved the importance of optical interferometry for high-angular resolution astrophysical studies in the near- and mid-infrared. With the advent of 4-beam combiners at the VLTI, the u − v coverage per pointing increases significantly, providing an opportunity to use reconstructed images as powerful scientific tools. Therefore, interferometric imaging is already a key feature of the new generation of VLTI instruments, as well as for other interferometric facilities like CHARA and JWST. It is thus imperative to account for the current image reconstruction capabilities and their expected evolutions in the coming years. Here, we present a general overview of the current situation of optical interferometric image reconstruction with a focus on new wavelength-dependent information, highlighting its main advantages and limitations. As an Appendix we include several cookbooks describing the usage and installation of several state-of-the art image reconstruction packages. To illustrate the current capabilities of the software available to the community, we recovered chromatic images, from simulated MATISSE data, using the MCMC software SQUEEZE. With these images, we aim at showing the importance of selecting good regularization functions and their impact on the reconstruction.

1 Introduction

Except perhaps for the most simple objects, interferometric data are hard to interpret directly, and image reconstruction is a powerful tool for scientific analysis of the observations. However, optical long-baseline interferometric instruments have, since their beginnings, faced a series of challenges to produce science-grade images. These challenges are related to:

- Sparse sampling of the measurements due to a limited number of spatial frequencies;

- Non-convex inverse problem to solve (i.e., there may be several local minima);

- Phase disturbance by the atmosphere in front of the telescope or interferometer, which smears out the phase information of the object.

Since direct inversion of the data is neither possible nor recommended, image reconstruction algorithms are based on regularized minimization processes that iteratively solve an ill-posed inverse problem, where the model of the data given the image is compared to the actual data, in order to determine how to better fit the data while respecting some imposed constraints. These constraints are needed to avoid over-fitting of the data (and thus explaining, erroneously, the noise as well as the significant signal) and to compensate for the sparsity of the data, which leads to an under-determined problem. The basic concept of this regularized minimization could be expressed in the following form:

$$x_{ML} = \underset{x}{\operatorname{argmin}} \left[ \chi^2(x) + \sum_i \mu_i R(x)_i \right]$$

where χ²(x) is the likelihood term of our data given an imaging model, R(x)_i are the (prior) regularization functions and μ_i the weighting factors that trade off between the likelihood and the priors. There are several algorithms to perform the minimization and find the most probable image x_ML. Two of the most important are Gradient Descent and Markov Chain Monte Carlo (MCMC). Gradient Descent is an optimization algorithm that takes steps proportional to the negative of the gradient of the cost function with respect to the image pixels in order to find the best solution. This method is fast; however, it may fall into local minima that could lead to a misleading solution in the image reconstruction process. As a consequence, several variants of gradient descent have been developed to improve on the basic algorithm, such as the Active Set Algorithm for Conjugate Gradient Descent (ASA-CG) [9].
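As a minimal sketch of the regularized minimization described above, the following toy example applies plain gradient descent to a linear data model with a quadratic (L2) regularizer. The forward operator `A`, the fixed step size and the function name `regularized_gradient_descent` are illustrative assumptions and do not come from any of the packages discussed here. Real interferometric observables are non-linear in the image, which is what makes the actual problem non-convex; this convex toy case only shows the mechanics of the descent.

```python
import numpy as np

def regularized_gradient_descent(A, y, sigma, mu, n_iter=2000):
    """Minimize chi2(x) + mu * ||x||^2 for a toy linear model y = A @ x.

    chi2(x) = sum(((A @ x - y) / sigma)**2). This is a hypothetical helper,
    not the algorithm of any specific reconstruction package.
    """
    weights = 1.0 / sigma**2
    # Lipschitz constant of the gradient gives a step size that cannot diverge.
    hessian_norm = np.linalg.norm(A.T @ (weights[:, None] * A), 2)
    step = 1.0 / (2.0 * (hessian_norm + mu))
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        residual = A @ x - y
        gradient = 2.0 * A.T @ (weights * residual) + 2.0 * mu * x
        x = x - step * gradient  # step along the negative gradient
    return x

# Under-determined toy problem: 5 measurements, 8 unknown pixels.
rng = np.random.default_rng(0)
A = rng.normal(size=(5, 8))
y = rng.normal(size=5)
x_hat = regularized_gradient_descent(A, y, sigma=np.ones(5), mu=1.0)
```

Without the regularization term (μ = 0) the five measurements cannot constrain eight pixels uniquely, which is the under-determination that the priors are meant to compensate for.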

On the other hand, MCMC is based on a random process which determines the flux element positions in a pixel grid until the desired distribution of pixel flux fits the data and reaches an equilibrium distribution. The great advantage of this method is that it could find a global minimum, at the cost of being significantly slower than Gradient Descent.
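The random process can be illustrated with a short Metropolis sampler in which the image is described by a fixed number of flux elements that hop between pixels. This is only a sketch under simplifying assumptions: a linear toy data model `A`, a uniform proposal, a fixed temperature and no regularization. The function name `metropolis_flux_elements` is hypothetical and this is not the SQUEEZE implementation.

```python
import numpy as np

def metropolis_flux_elements(A, y, sigma, n_elements=200, n_steps=20000, seed=0):
    """Toy Metropolis sampler: flux elements hop between pixels of a grid.

    Returns the mean image over the chain (burn-in included for brevity).
    """
    rng = np.random.default_rng(seed)
    n_pix = A.shape[1]
    positions = rng.integers(0, n_pix, size=n_elements)

    def chi2(pos):
        # Each element carries an equal share of the unit total flux.
        image = np.bincount(pos, minlength=n_pix) / n_elements
        return np.sum(((A @ image - y) / sigma) ** 2)

    current = chi2(positions)
    mean_image = np.zeros(n_pix)
    for _ in range(n_steps):
        k = rng.integers(n_elements)
        old_pixel = positions[k]
        positions[k] = rng.integers(n_pix)  # symmetric proposal
        proposed = chi2(positions)
        # Metropolis rule for a target density proportional to exp(-chi2 / 2).
        accept_prob = np.exp(min(0.0, -0.5 * (proposed - current)))
        if rng.random() < accept_prob:
            current = proposed
        else:
            positions[k] = old_pixel  # reject: restore the previous state
        mean_image += np.bincount(positions, minlength=n_pix) / n_elements
    return mean_image / n_steps
```

Because moves that increase χ² are sometimes accepted, the chain can leave a local minimum, at the cost of evaluating the data model once per proposed move.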

By using these methods, several image reconstruction software packages have been developed to analyse optical/infrared interferometric data, and most of them are available to the community. However, due to the nature of the minimization process, these packages cannot be considered “black boxes” that produce science-grade images without any user interaction. The choice of regularization functions must be made with great care [32], always based on prior knowledge of the source’s brightness distribution. This choice has a significant influence on the resulting image (as we explore later), with some regularization functions emphasizing image smoothness while others emphasize sharp edges or compactness of the brightness distribution. A good image reconstruction is therefore not just a matter of having a good algorithm but also of using it correctly.

While most software can be understood from the principles summarized above, the packages still differ in a number of points. One of the most obvious differences is the kind of regularizations implemented. Some offer many different possibilities, while others are more restricted. Another less obvious, but no less important, difference is the likelihood term. This is mainly because optical interferometers do not provide complex visibilities but other observables such as the powerspectrum, the closure phase and the chromatic differential phase. These quantities are less sensitive to the random delays induced by atmospheric turbulence, whereas the complex visibilities are completely destroyed by them. At this time, there is no consensus on the statistics of these observables, and the image reconstruction algorithms implement different expressions for the likelihood term.
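The robustness of these observables can be checked numerically. The sketch below assumes that the atmosphere adds one random phase error per telescope, so that the visibility on baseline (i, j) is multiplied by exp(i(φ_i − φ_j)). The helper names `corrupt` and `closure_phase` and the numerical values are illustrative.

```python
import numpy as np

def corrupt(v_ij, piston_i, piston_j):
    """Apply telescope-based atmospheric phase errors to a baseline visibility."""
    return v_ij * np.exp(1j * (piston_i - piston_j))

def closure_phase(v12, v23, v31):
    """Argument of the bispectrum V12 * V23 * V31, in radians."""
    return np.angle(v12 * v23 * v31)

# Arbitrary complex visibilities on a closed triangle of baselines.
v12, v23, v31 = 0.8 * np.exp(0.3j), 0.5 * np.exp(-1.1j), 0.6 * np.exp(0.4j)

# One random phase error per telescope.
rng = np.random.default_rng(1)
p1, p2, p3 = rng.uniform(-np.pi, np.pi, size=3)
c12, c23, c31 = corrupt(v12, p1, p2), corrupt(v23, p2, p3), corrupt(v31, p3, p1)

# The individual phases are scrambled, but the powerspectrum and the
# closure phase are unchanged because the telescope terms cancel.
print(np.angle(v12), np.angle(c12))
print(abs(v12) ** 2, abs(c12) ** 2)
print(closure_phase(v12, v23, v31), closure_phase(c12, c23, c31))
```

The cancellation only holds for errors that factorize per telescope, which is the assumption stated above.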

During the last twenty years several algorithms have reached sufficient maturity to produce science-grade images, among them the Building-Block Method [12], BSMEM [5], MiRA [44], SQUEEZE [3], WISARD [19], and IRBis [13]. The capabilities of these packages to recover images from optical/infrared long-baseline interferometric data have been demonstrated several times in the community through the “Interferometric Imaging Beauty Contest” (see e.g., [36]). Additionally, they have been used repeatedly to recover images from Fizeau interferometric data in Sparse Aperture Masking observations. For example, Tuthill et al. [45] used MACIM to recover the first images of Pinwheel nebulae, while [35] used BSMEM to recover images of several bow shocks around WR-stars in the central parsec of our Galaxy.

However, the standard use of several of these software packages was limited to recovering monochromatic images. With the consolidation of the spectro-interferometric capabilities of the second-generation instruments, GRAVITY [8] and MATISSE [17], at the Very Large Telescope Interferometer (VLTI), some of the aforementioned packages have been updated, and new methods are being developed to obtain chromatic images in a systematic way. For example, at the VIII edition of the “Interferometric Imaging Beauty Contest” a benchmark of different algorithms was carried out with simulated chromatic data for the first time [34].

2 The necessity of chromatic imaging

2.1 Scientific cases for optical/infrared interferometry

Optical interferometry allows one to probe the innermost regions of several astrophysical environments. Here we describe three examples, but there are of course many more. Around young stars, where the accretion onto the star itself is almost complete and the gas and dust disk have settled, the process of planet formation occurs with planet accretion, planet migration, gap formation, etc. These regions measure only a few astronomical units across (∼ 10 au); for a system at 100 pc, this corresponds to a hundred milli-arcseconds, i.e. barely the angular resolution of an 8m-class telescope. Therefore, long-baseline interferometry is the only technique with the angular resolution needed to explore the inner regions (at scales of ∼ 1–10 milli-arcseconds) of such environments. However, such environments are quite complex, and properly determining their structure is only possible if image reconstruction techniques are robust.
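The angular scales quoted above follow from simple arithmetic, sketched below. The observing wavelength (2.2 μm) and the 130 m baseline are illustrative assumptions, and the resolution is taken as λ/D without any prefactor (conventions differ, e.g. 1.22 λ/D for the Rayleigh criterion).

```python
import math

# Milli-arcseconds per radian.
RAD_TO_MAS = math.degrees(1.0) * 3600.0 * 1000.0

def angular_size_mas(size_au, distance_pc):
    """Angular size in mas; by definition 1 au at 1 pc subtends 1 arcsec."""
    return 1000.0 * size_au / distance_pc

def resolution_mas(wavelength_m, aperture_m):
    """lambda / D in mas, for a telescope diameter or a projected baseline."""
    return RAD_TO_MAS * wavelength_m / aperture_m

print(angular_size_mas(10.0, 100.0))   # 10 au seen from 100 pc
print(resolution_mas(2.2e-6, 8.0))     # single 8 m telescope in the K band
print(resolution_mas(2.2e-6, 130.0))   # assumed 130 m baseline in the K band
```

With these assumptions the 8 m telescope reaches a few tens of mas, comparable to the full extent of the region, while the long baseline reaches a few mas.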

Another example is the study of the line-emitting regions in massive stars. Here, it is not the photosphere of the star that is imaged to probe its nature and evolution, but the distribution and kinematics of the surrounding gas. These regions are also located only a few au from the star, but the sources typically lie at distances larger than 1 kpc, preventing them from being resolved even with adaptive optics on 8m-class telescopes. While continuum optical interferometric images have been reconstructed for a few of these objects, studies of the line-emitting region have remained difficult. Chromatic imaging, however, was recently attempted for Phi Per and Eta Car [24, 33], where it was shown that the inclusion of differential phase information can vastly improve the image quality.

The third example is asymptotic giant branch (AGB) stars. These objects represent one of the final steps in the evolution of low- and intermediate-mass stars. Since they return most of their processed material to the interstellar medium, they are important for understanding the chemical evolution of galaxies [11]. Therefore, understanding their atmospheric structure is crucial to bridge the physics of the enriched stellar interior and the evolution-driven winds. Chromatic interferometric imaging allows us to resolve the structure of the photosphere together with the inner dust-shell layers, enabling us to investigate convection and the formation of dust at the most compact scales, among other physical phenomena (see e.g., [26, 28]).

2.2 Solving the phase problem

The problem of image reconstruction was already faced a few decades ago in radio astronomy. Several methods like CLEAN and the Maximum Entropy Method (MEM) were developed to produce images out of visibilities and phases. However, it was not until a new set of techniques was developed (e.g., hybrid mapping and self-calibration) that imaging with radio telescopes flourished, allowing the recovery of spectral images (see e.g., [29, 31]). In optical/infrared long-baseline interferometry, it was considered until very recently that the phase information was completely lost due to the turbulent atmosphere. Therefore, the powerspectrum (often called squared visibility) and the argument of the bispectrum (the so-called closure phase) were defined as the main observables of the geometrical information of the source’s brightness distribution. However, the “new” available wavelength-dependent phase (the so-called “differential phase”), accessible through the spectro-interferometric capabilities of instruments like AMBER [30], GRAVITY [8], and MATISSE [17], provides much more information than the geometric information of the closure phase alone.

The differential phase is an interferometric observable that provides information about the flux centroid position of the source’s brightness distribution across the observed bandpass. This observable was not considered in optical/infrared interferometry imaging until [21, 37], and [20]. Indeed, differential phase provides a corrugated phase measurement, which, in theory, can be incorporated into a self-calibration algorithm, in a very similar way to what is done in radio interferometry [29].

As early as 2003, J. Monnier anticipated “revived activity [on self-calibration] as more interferometers with imaging capability begin to produce data”. And indeed, the conceptual bases for using differential phases in image reconstruction were laid in [21]. The Schmitt paper [37] was a first attempt to use differential phases in image reconstruction. They considered that the phase in the continuum was equal to zero, making it possible to use the differential phase (then equal to the phase) in the Hα emission line of the β Lyr system. In this way, they were able to image the shock region between the two stars at different orbital phases.

Millour et al. [20] went one step further, using an iterative process similar to radio-interferometry self-calibration in order to reconstruct the phase of the object from the closure phases and differential phases. In this way, they could recover the image of a rotating gas+dust disc around a supergiant star, whose image is asymmetric even in the continuum (non-zero phase). This method was subsequently used in a few papers to reconstruct images of supergiant stars (see e.g., [25, 27]) and Eta Carinae (e.g., [33, 48]). More recently, Hone et al. [14] published the first chromatic reconstruction of the Brγ structure in a YSO with IRBis. Mourard et al. [24] presented an extended method to tackle the image reconstruction challenges posed by the lack of closure phases and of a proper calibration of the spectrally-dispersed visibilities.

2.3 Going one step further

An enormous effort has been made in the community to develop reconstruction methods one step further. For example, a project named POLCA funded by the French ANR (Agence Nationale de la Recherche) made the following advances on the subject:

- Statistical analysis of AMBER data showed that the interferometric data do not follow the usual assumptions of uncorrelated, Gaussian (Normal) noise. Correlations over time are significant and can be partly disentangled by considering differential visibilities in addition to absolute visibilities. A Student distribution of noise on the visibilities should be used instead of a Normal one; this is expected, since the visibility is calculated as the ratio of two random variables, as in [41]. This could lead to a future improvement of the descent algorithms used in model-fitting or image reconstruction [38].

- New development on the core image reconstruction algorithm to take into account the wavelength-dependence of the data has been achieved and is distributed under the “PAINTER” software [38]. It works on chromatic datasets (i.e. both the bispectrum and the differential phase) and produces chromatic image cubes by using the ADMM descent algorithm and spatio-spectral regularizations. An improved version, presented in [39], uses wavelets for spatial regularization and the Discrete Cosine Transform (DCT) for spectral regularization.

- A future chromatic image reconstruction algorithm is in development by F. Soulez & E. Thiébaut under the name “MIRA-3D” [40]. This algorithm uses a joint spectro-spatial regularisation, and thus will be applicable to objects with components exhibiting different spectra.

- The power of combining chromatic model-fitting and “grey” image reconstruction was demonstrated by [15] for low-spectral resolution datasets. The software “SPARCO” was developed to demonstrate this. The potential of this technique is great and allows one to perform “numerical coronagraphy” on the interferometric data, by removing the (main) contribution from the central star.

On the other hand, Fabien Baron, at Georgia State University, has developed a chromatic imaging algorithm called SQUEEZE [1,2,3]. This software uses MCMC as the engine for the minimization. The code is able to use Simulated Annealing and Parallel Tempering with Metropolis-Hastings moves. SQUEEZE allows us to use (i) visibilities (amplitudes and phases), (ii) powerspectra, (iii) bispectra, or a combination of them as input observables. One of the key characteristics of this software is the large number of regularization functions offered, like the L0-norm, the L2-norm, Entropy, Total Variation, Wavelets, etc.
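To make the regularizers named above concrete, the sketch below gives generic textbook definitions of four of them for a 2-D image. These are assumptions for illustration: the exact definitions, normalizations and options used in SQUEEZE may differ, and the function names are hypothetical.

```python
import numpy as np

def l0_norm(image):
    """Number of non-zero pixels: favours compact, sparse images."""
    return int(np.count_nonzero(image))

def l2_norm_squared(image):
    """Sum of squared pixel values: penalizes isolated bright pixels."""
    return float(np.sum(image ** 2))

def negative_entropy(image, prior):
    """sum(x * log(x / prior)) over positive pixels: favours images close to the prior."""
    mask = image > 0
    return float(np.sum(image[mask] * np.log(image[mask] / prior[mask])))

def total_variation(image):
    """Anisotropic total variation: sum of absolute differences between
    neighbouring pixels; favours flat regions separated by sharp edges."""
    vertical = np.abs(np.diff(image, axis=0)).sum()
    horizontal = np.abs(np.diff(image, axis=1)).sum()
    return float(vertical + horizontal)

# A single bright pixel versus a flat image of the same total flux.
point = np.zeros((3, 3))
point[1, 1] = 1.0
flat = np.full((3, 3), 1.0 / 9.0)
for name, img in (("point", point), ("flat", flat)):
    print(name, l0_norm(img), l2_norm_squared(img), total_variation(img))
```

The point source minimizes the L0-norm while the flat image minimizes the L2-norm and the Total Variation, which illustrates why the choice of regularizer pulls the reconstruction toward different morphologies.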

All these software efforts have had a tremendous impact in the development of chromatic imaging, and they could lead to a new generation of image reconstruction software. For example, combining the new core algorithms with the chromatic model-fitting features of “SPARCO” and self-calibration techniques could produce a leading edge image reconstruction suite suitable to reduce both archival AMBER data, and the new data being taken with the new spectro-imaging interferometric instruments GRAVITY, and MATISSE at the VLTI or MYSTIC [42, 43] at CHARA.

2.4 Toward integrated tools for image reconstruction

With the developments mentioned above, one can produce images by using not only the closure phases but also the wavelength-differential phases. These developments have allowed us to recover chromatic images simultaneously, while additional techniques like self-calibration have made imaging possible even with two telescopes. Nowadays, the number of image reconstruction packages available to the community is large; however, each of these packages uses different interfaces and programming languages, making a comparison relatively difficult in practice.

In this respect, there is a working group at JRA4 aiming to ease the use of image reconstruction software through a web-based interface called OImaging (footnote 1), in a similar way to what has been done for model-fitting with the LITpro (footnote 2) software at JMMC. Similarly, the input/output formats (OIFITS v1 vs OIFITS v2) and visualization tools should be standardized to allow the comparison of different software and different runs in the huge image-reconstruction parameter space.

Also of interest is a series of complementary recipes to produce “science-grade” images like Low Frequency Filling, Monte Carlo analysis [22], and other recipes (which have no specific name) like using central symmetry properties of the object [16], or reducing the field-of-view of the reconstruction to the photosphere of the central star only [23]. The integration of such recipes with the available image reconstruction software, either hard-coding them in the software or with external tools, would be valuable for the coming generation of imaging software.

3 An example application: the future MATISSE image reconstruction capacity, illustrated

So far, we have highlighted the importance of image reconstruction for scientifically assessing the information encoded in optical interferometry data. Characterizing the imaging capabilities of the different interferometric arrays is therefore necessary, especially in the context of the upcoming infrared beam-combiners. MATISSE (Multi-Aperture mid-Infrared SpectroScopic Experiment; [17, 18]) is one of the second-generation interferometric instruments of the VLTI. This instrument is designed to combine up to four telescopes, either the Unit Telescopes (UTs) or the Auxiliary Telescopes (ATs), to capture visibilities, closure phases and differential phases in the mid-infrared. It represents a major advance compared with its predecessor MIDI, mainly because it will allow us to recover, for the first time, the closure phase in three different bands: L and M (together covering 2.8–5.2 μm) and N (8–13 μm).
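The gain from combining four telescopes instead of two can be counted directly. The short sketch below enumerates baselines and closure triangles for an array of `n_tel` telescopes; the function name is illustrative, and the count of independent closure phases uses the standard (N−1)(N−2)/2 relation.

```python
from itertools import combinations

def count_observables(n_tel):
    """Return (baselines, closure triangles, independent closure phases)."""
    baselines = list(combinations(range(n_tel), 2))
    triangles = list(combinations(range(n_tel), 3))
    independent_closure_phases = (n_tel - 1) * (n_tel - 2) // 2
    return len(baselines), len(triangles), independent_closure_phases

print(count_observables(2))  # two-telescope combiner such as MIDI
print(count_observables(4))  # four-telescope combiner such as MATISSE
```

A two-telescope combiner measures a single baseline and no closure phase per pointing, whereas four telescopes provide six baselines and four closure triangles, three of which are independent.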

3.1 Data simulation

One of the major science case studies of MATISSE is the characterization of proto-planetary discs around young stellar objects. In this respect, image reconstruction represents a unique tool to obtain constraints on (i) the physics in the inner discs, (ii) the signatures of interaction between forming planets and the dusty disc, (iii) detection of companions in the disc-like structure, (iv) the signatures tracing different dust mineralogy (e.g., the silicate feature at 10 μm) and (v) the gas disc kinematics, among others. Therefore, we selected a prototypical Herbig Ae star as our image reconstruction source. HD179218 is a B9 star with an effective temperature Teff = 9600 K [7], a stellar mass M = 2.9 M⊙ [46] and located at a distance of 250 pc [47]. Thanks to a large collection of MIDI and Spectral Energy Distribution (SED) data, Menu et al. (in prep.) inferred a disc with an eccentric inner gap. Nevertheless no image of this structure has been obtained so far. A set of radiative transfer images obtained from the Menu et al. model was used to simulate the expected MATISSE u − v coverage. We simulated three different interferometric arrays with the ATs, assuming MATISSE observations in low-resolution mode (R∼35). The three simulated configurations sample some of the small (A0-B2-C1-D0), medium (D0-G2-J3-K0) and large (A0-G1-J2-J3) telescope configurations available at the VLTI. We considered that the target was successfully observed at six different position angles over three different nights, each one with a different AT configuration. The applied noise model was generated using the MATISSE simulator developed at the Observatoire de la Côte d’Azur by Alexis Matter. This simulator uses the pre-computed theoretical interferometric observables and adds two main types of noise: (i) the fundamental noise and (ii) the calibration noise. 
Once the different error contributions are calculated, the theoretical observables are randomly changed following a Gaussian distribution within the computed error-bars. Figure 1 displays an example of the squared visibilities and closure phases recovered for the simulated AT configurations.
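The final perturbation step can be sketched as follows. This is not the MATISSE simulator: the error bars are simply taken as inputs, whereas the simulator computes them from the fundamental and calibration noise terms. The function name `add_gaussian_noise` and the wrapping convention for the closure phases are assumptions.

```python
import numpy as np

def add_gaussian_noise(v2_model, cp_model_deg, v2_err, cp_err_deg, seed=None):
    """Perturb theoretical squared visibilities and closure phases with
    Gaussian noise whose standard deviation equals the given error bars."""
    rng = np.random.default_rng(seed)
    v2_obs = v2_model + rng.normal(0.0, v2_err, size=v2_model.shape)
    cp_obs = cp_model_deg + rng.normal(0.0, cp_err_deg, size=cp_model_deg.shape)
    # Wrap the noisy closure phases back into [-180, 180) degrees.
    cp_obs = (cp_obs + 180.0) % 360.0 - 180.0
    return v2_obs, cp_obs

v2_model = np.array([0.50, 0.20, 0.05])
cp_model = np.array([170.0, -175.0, 10.0])
v2_obs, cp_obs = add_gaussian_noise(v2_model, cp_model, 0.02, 5.0, seed=42)
```

Note that noisy squared visibilities can become slightly negative for well-resolved baselines; this is a normal property of the estimator and such points should not be clipped before reconstruction.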

Fig. 1 The figure displays the simulated squared visibilities (left) and closure phases (right) used to demonstrate the MATISSE imaging capabilities. The plotted observables correspond to three different observing nights, each one of them with a different AT quadruplet. The colors represent the effective wavelength from 8 μm (blue) to 13 μm (red). Notice that the target is completely resolved and that it has a clear deviation from point-symmetry, since the closure phases vary between −180° and 180°.

3.2 Recovering the images

To recover the images from our simulated data set, we used SQUEEZE. One of the key parameters in interferometric image reconstruction is the selection of the regularization functions. An important advantage of SQUEEZE is that it can process multiple regularization functions simultaneously and, because it uses MCMC sampling, they need not have well-defined gradients. The second important parameter for the reconstruction is the hyperparameter μ that controls the trade-off between the likelihood and the prior information on the brightness distribution encoded in the regularizers. Selecting the appropriate value of μ is therefore crucial for the image reconstruction process. One of the most common methods to select the optimum μ is the L-curve [4, 6, 10, 15]. It computes the image solution for several values of μ, characterizing the response of the prior term versus the χ². The optimal value is normally at the first inflection point of the L-curve. Figure 2 displays an example of this method, where R(x)_i (in this case the Total Variation) is compared with χ²(x) for different values of μ. The plot clearly shows three areas: the so-called “under-regularized” region, in which different values of the regularizer produce similar χ²; the inflection point, which corresponds to the optimal value of μ; and the so-called “over-regularized” region, where the χ² increases with each increment in the value of μ. This occurs because the regularizer dominates the convergence criterion of the algorithm. To illustrate these effects, we display the role of μ in the reconstruction of our object in Fig. 3.
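Locating the elbow of an L-curve can be automated with a simple geometric heuristic, sketched below: in normalized log-log space, pick the point farthest from the straight line joining the two ends of the curve. This is one common heuristic and an assumption on our part, not necessarily the criterion used to produce Fig. 2; the input values are made up for illustration.

```python
import numpy as np

def l_curve_elbow(chi2, reg):
    """Index of the L-curve elbow for runs ordered by increasing mu.

    Uses the maximum distance to the chord between the first and last
    points, after log-scaling and normalizing both axes to [0, 1].
    """
    x = np.log10(np.asarray(chi2, dtype=float))
    y = np.log10(np.asarray(reg, dtype=float))
    x = (x - x.min()) / (x.max() - x.min())
    y = (y - y.min()) / (y.max() - y.min())
    chord = np.array([x[-1] - x[0], y[-1] - y[0]])
    chord /= np.linalg.norm(chord)
    # Perpendicular distance of every point to the chord (2-D cross product).
    distance = np.abs((x - x[0]) * chord[1] - (y - y[0]) * chord[0])
    return int(np.argmax(distance))

# Made-up values: chi2 stays flat while the regularizer drops, then rises.
mu_values = [1e1, 1e2, 1e3, 3e3, 1e4, 1e5]
chi2 = [1.00, 1.01, 1.02, 1.05, 10.0, 100.0]
reg = [100.0, 30.0, 10.0, 1.0, 0.9, 0.8]
best = l_curve_elbow(chi2, reg)
print(mu_values[best])
```

In practice each (χ², R) pair requires a full reconstruction, so the grid of μ values is usually coarse and the selected elbow should still be checked by eye.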

Fig. 2 L-curve obtained with different values of μ using the Total Variation as regularizer. The vertical axis displays the value of R(x)_i and the horizontal axis the χ²(x). The optimal value of μ is at the elbow of the curve with the smallest χ²; in this case it is equal to 3000. The optimal value of μ, together with the under-regularized and over-regularized regions, is labelled on the frame.

Fig. 3 The image shows the impact of μ on the image reconstruction process. Eight reconstructed images of the source are shown using the hyperparameter values shown in Fig. 2. Notice that for the first three images (in the under-regularized region) the general morphology of the object is recovered, but the reconstructed maps are clearly noisier than the one with the optimal value of μ = 3000. However, when the value of the hyperparameter is quite large, the structure of the source is completely erased and the code is not able to converge to reproduce the expected brightness distribution of the source.

Figure 4 shows the N-band monochromatic reconstruction obtained with four different SQUEEZE regularization functions, to evaluate their impact. The target was assumed to behave as a grey-body object over all the channels inside the bandpass. Although this assumption does not hold for most astrophysical objects, it represents a good starting point to calibrate the different parameters used for the reconstruction. In this case, we used both the squared visibilities and closure phases to recover the brightness distribution of our object. In this reconstruction, the different regularizers were able to recover the general morphology of the target. Nevertheless, there were still some significant differences among them. For example, while the Total Variation was able to recover a smooth rim morphology, the other regularizers underestimated the brightness distribution of the rim for position angles between 90° and 180° (East of North). Additionally, all the reconstructed images show several bright spots well localized along the rim, instead of a uniform distribution like in the model. This highlights the importance of a good selection of the regularization function and demonstrates the necessity of user interaction with the reconstruction process. It also shows that the reconstructed images are still models of the object’s brightness distribution, and caution must be taken when physical parameters are derived from them.

Fig. 4 Best reconstructed monochromatic images using SQUEEZE with four different regularizers. The leftmost panel displays an average of the model image for comparison. The colormap of the images is normalized to the maximum pixel value in the model frame. Notice the dependence of the reconstructed image on the regularization function used.

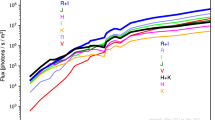

While the monochromatic images are crucial as a first step in the reconstruction, one of the main goals of the new generation of infrared interferometers is to recover the morphological changes of the astrophysical objects along the bandpass of the observations. This aspect is particularly important for MATISSE, which will have a bandpass as large as Δλ ∼ 5 μm in the N-band. Therefore, we explored this capability by performing a polychromatic reconstruction that includes the differential phase information of the data. The initial setup of the reconstruction uses the best image from the first monochromatic reconstruction as a starting point. In the previous reconstructions, the L0-norm and the TV regularizers exhibited the best performance. Therefore, for this new reconstruction, both of them were used together with a transpectral L2-norm regularization. Transpectral in this case means that the regularization was applied in the wavelength direction as well; more information about the implementation can be found in the SQUEEZE documentation. The hyperparameters were tuned manually, with values close to the best ones obtained from the L-curve analysis of the monochromatic case. Nevertheless, we are aware that these values could be optimized by selecting them from a multi-dimensional L-curve of the regularizers used. Fourteen images were recovered, each one corresponding to one of the simulated spectral channels. Figure 5 displays the recovered chromatic images. It is clear that, with this initial setup, the rim morphology was reproduced in all the reconstructed channels. However, the central source was only recovered in the first four of them. It is important to mention that the flux in the central source corresponds to only a small percentage of the total flux in the object. Even for the first spectral channel at 8.18 μm, it only corresponds to 5% of the total flux, decreasing toward longer wavelengths down to ∼0.8% at 12.72 μm.
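One simple way to picture a regularization acting along the wavelength direction is to penalize the squared differences between images in adjacent spectral channels. The sketch below is an assumption for illustration only: the transpectral L2-norm implemented in SQUEEZE may be defined and normalized differently, and the function name is hypothetical.

```python
import numpy as np

def transpectral_l2(cube):
    """Sum of squared differences between adjacent spectral channels.

    `cube` has shape (n_channels, ny, nx). The value is zero for a grey
    object and grows when the morphology changes from channel to channel.
    """
    return float(np.sum(np.diff(cube, axis=0) ** 2))

# Fourteen channels of a 4 x 4 image, as in the simulated data set.
grey = np.tile(np.full((4, 4), 1.0 / 16.0), (14, 1, 1))
chromatic = grey.copy()
chromatic[:4, 0, 0] += 0.05  # extra compact flux in the first four channels only

print(transpectral_l2(grey), transpectral_l2(chromatic))
```

A penalty of this kind favours smooth spectral behaviour, which may partly explain why a faint component present in only a few channels is hard to recover; this interpretation is a conjecture, not a result from the reconstructions above.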

Fig. 5 Best reconstructed polychromatic images. The figure shows the 14 recovered images, one for each of the channels sampled with the simulated data. For comparison, each of the model images is also plotted. The wavelength of each image is shown in the model frames. The colormap of the images is normalized to the maximum pixel value in the model frames. Since the amount of flux in the central source is considerably smaller than the flux in the ring, the central object is only recovered in the first four frames; for the rest of the images, the structure of the ring is dominant.

4 Conclusion

- The recovery of milliarcsecond resolution interferometric images in the infrared will represent a major breakthrough for the coming generation of beam-combiners. For example, on the one hand, MATISSE will allow us to image astrophysical objects in the mid-infrared with unprecedented resolution, representing a tremendous advantage with respect to its predecessor MIDI, which only allowed for parametric modelling of the interferometric data. On the other hand, GRAVITY will make it possible to use referenced-phase information through the so-called dual-field mode. Even the James Webb Space Telescope will include a Sparse Aperture Masking mode on board, which will require mature infrared image reconstruction algorithms to recover the brightness distribution of the imaged objects.

- Our current understanding of the image reconstruction problem and the currently available software allowed us to perform both monochromatic and polychromatic image reconstructions of simulated interferometric data. For the example presented here, we could recover the different components of a prototypical young stellar object. However, we have also shown that image reconstruction is still not trivial and requires a systematic study of the parameters used in the reconstruction, particularly of the different regularizers and the values of the hyperparameters. Therefore, it is necessary to compare the results of the image reconstruction obtained with several software packages and methods to better understand the systematics. To accomplish this task, the benchmarking methods should be standardized so that the outcome of the different methods can be evaluated in a systematic way.

- The better we understand the requirements to achieve science-grade images from interferometric observations, the easier it will be to provide the community with tools and procedures that make the current techniques more accessible. This is a task that should be addressed in the coming years as part of an effort to broaden the field and engage more members of the international community.

- Testing the imaging capabilities of the different algorithms is essential to obtain a full description of them, and it is essential for the future of infrared interferometry in Europe.

Notes

1. Available at http://www.jmmc.fr/oimaging, part of the European Commission’s FP7 Capacities programme (Grant Agreement Number 312430).

2. LITpro software available at http://www.jmmc.fr/litpro

Acknowledgments

Open access funding provided by Max Planck Society.

Additional information

This article is part of the Topical Collection on Future of Optical-infrared Interferometry in Europe

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sanchez-Bermudez, J., Millour, F., Baron, F. et al. Why chromatic imaging matters. Exp Astron 46, 457–473 (2018). https://doi.org/10.1007/s10686-018-9617-y

DOI: https://doi.org/10.1007/s10686-018-9617-y