Abstract

We conduct a large-scale experiment to investigate peer effects in computer assisted learning (CAL). Identification relies on three levels of randomization. We find an average 0.17 standard deviation improvement in math scores among primary school students. This average effect is the same for students treated individually or in pairs, implying that peer effects double the learning benefit from a given amount of equipment. Among paired students, poor performers benefit more from CAL when paired with good performers and vice versa. Average performers benefit equally irrespective of who they are paired with. This suggests that the treatment is dominated by knowledge exchange between peers. We also find that CAL treatment reduces the dispersion in math scores and that the beneficial effects of CAL can be strengthened if weak students are systematically paired with strong students.

Notes

Reverse confirmation bias (Bougheas et al. 2015) could potentially create some confusion among strong students matched with weaker students. The computer software is designed to minimize this occurrence, however.

One of them enjoys the same treatment level as someone treated individually, while the other receives no treatment at all.

Grade 1 and 2 students are not included because they cannot read well enough to use the software.

It has also been observed that increasing the number of high-achieving students in a group may induce low-achieving students to form subgroups among themselves, something they might not have done if high-achieving students were less available (Carrell et al. 2013).

The \(\beta\) coefficients should be understood as capturing both exogenous and endogenous peer effects (Manski 1993), i.e., the effect of being paired with a treated student j, and the multiplier effect of j’s CAL-induced learning on i’s own learning. To estimate endogenous and exogenous effects separately, we would either need to observe paired students who did not receive CAL treatment, or observe students paired with different numbers of peers (e.g., Fafchamps and Vicente 2013; Fafchamps et al. 2014). Neither of these is possible here given the design of our intervention.

Even if relative performance does not matter, we may still want to include average class performance as a regressor to control for class differences that may, in a small sample, be correlated with treatment.

The reader may wonder whether, in model (6), \(\alpha\) can still be interpreted as the ATE of the CAL intervention even though we have not subtracted the mean of \((y_{it}-\overline{y}_{ct})\) from each interaction term. The answer is yes because the mean of \((y_{it}-\overline{y} _{ct})\) is, by construction, equal to 0.

If \(y_{it}\) is omitted from regression (7), \(\beta _{2}\) is affected by exclusion bias (Caeyers and Fafchamps 2016). This bias arises because \(y_{it}\) is positively correlated with \(y_{it+1}\) but negatively correlated with \(y_{jt}\). This negative correlation arises mechanically because high ability individuals are, on average, paired with individuals of lower ability, and vice versa.

This is obtained by using:

$$\begin{aligned} y_{it+1} &= k+\rho y_{it}+\gamma y_{it}T_{it} \\ &\quad +\big(\beta _{0}+\beta _{1}(y_{it}-\overline{y}_{t})+\beta _{2}(y_{jt}-\overline{y}_{t})+\beta _{3}(y_{it}-\overline{y}_{t})(y_{jt}-\overline{y}_{t})\big)P_{t}+u_{it+1} \\ &= (k+\beta _{0}-\beta _{1}\overline{y}_{t})+(\rho +\gamma +\beta _{1})y_{it}+\beta _{2}(y_{jt}-\overline{y}_{t})+\beta _{3}(y_{it}-\overline{y}_{t})(y_{jt}-\overline{y}_{t})+u_{it+1} \end{aligned}$$

from which we get our model in Guryan form:

$$\begin{aligned} y_{it+1} &= (k+\beta _{0}-(\beta _{1}+\beta _{2})\overline{y}_{t}+\beta _{3}\overline{y}_{t}^{2})+(\rho +\gamma +\beta _{1}-\beta _{3}\overline{y}_{t})y_{it} \\ &\quad +(\beta _{2}-\beta _{3}\overline{y}_{t})y_{jt}+\beta _{3}y_{it}y_{jt}+u_{it+1} \end{aligned}$$

Finding an algebraic solution is not feasible because the optimization problem is not differentiable: each classroom contains a finite number of students with different abilities. A numerical approach is thus necessary. In general, the numerical optimum can only be found with certainty by complete enumeration, that is, by computing the welfare gain for each possible way of pairing all the students in the class. Even for a small classroom, the number of possible matches is far too large to enumerate. For instance, for a class size of 30, the number of possible pairings is 8.0949E+27. To illustrate how large this number is, imagine that we could compute the educational gain of one million class pairings per second. Enumerating all the possible combinations would take about 256 trillion years, which is roughly 20,000 times the age of the universe. Moreover, this calculation would have to be done for each classroom separately.
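The orders of magnitude in this note can be checked with a few lines of Python. The sketch below is illustrative only, and its function names are hypothetical. The quoted figure of 8.0949E+27 matches 30!/2^15, a count in which the order of the 15 pairs matters; ignoring that order divides the count by 15!, which the sketch also reports. The rate of one million evaluations per second is the one assumed in the text, and the length of a year is an assumption made for the conversion.

```python
from math import factorial

# Julian year in seconds; an assumption used only to convert seconds to years.
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def ordered_pairings(n):
    """Ways to split n students into n/2 pairs when the order of the pairs matters."""
    if n % 2:
        raise ValueError("n must be even")
    return factorial(n) // 2 ** (n // 2)


def unordered_pairings(n):
    """Same count with the order of the pairs ignored (perfect matchings)."""
    return ordered_pairings(n) // factorial(n // 2)


def years_to_enumerate(count, per_second=1_000_000):
    """Years needed to evaluate `count` pairings at `per_second` evaluations per second."""
    return count / per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    n = 30
    print(f"ordered pairings:   {ordered_pairings(n):.4E}")
    print(f"unordered pairings: {unordered_pairings(n):.4E}")
    print(f"years to enumerate: {years_to_enumerate(ordered_pairings(n)):.3E}")
```

For a class of 30, the ordered count reproduces the figure quoted above and corresponds to roughly 2.6E+14 years of computation at the assumed rate.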

There are other possible pairing rules, depending on the nature of peer effects. See, for instance, Booij et al. (2014), who discuss a variety of rules for assigning university students to tutorial groups.

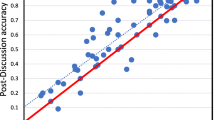

Before pooling we normalize the two distributions to have the same mean by subtracting the ATE of 0.17 from the paired students’ scores.

This finding is similar to that reported by Booij et al. (2014) in the context of tutorial groups for university students.

References

Angrist, J. D., & Lang, K. (2004). Does school integration generate peer effects? Evidence from Boston’s Metco Program. American Economic Review, 94, 1613–1634.

Bacharach, M. (2006). Beyond individual choice: Teams and frames in game theory. Princeton, NJ: Princeton University Press.

Bandiera, O., Barankay, I., & Rasul, I. (2010). Social incentives in the workplace. Review of Economic Studies, 77(2), 417–58.

Booij, A. S., Leuven, E., & Oosterbeek, H. (2014). The effect of ability grouping in university on student outcomes. Amsterdam: University of Amsterdam.

Bougheas, S., Nieboer, J., & Sefton, M. (2015). Risk taking and information aggregation in groups. Journal of Economic Psychology, 51, 34–47.

Bruhn, M., & McKenzie, D. (2009). In pursuit of balance: Randomization in practice in development field experiments. American Economic Journal: Applied Economics, 1(4), 200–232.

Burns, J., Corno, L., & La Ferrara, E. (2013). Interaction, stereotypes and performance: Evidence from South Africa. Mimeo: Bocconi University.

Bursztyn, L., Ederer, F., Ferman, B., & Yuchtman, N. (2014). Understanding mechanisms underlying peer effects: Evidence from a field experiment on financial decisions. Econometrica, 82(4), 1273–1301.

Caeyers, B., & Fafchamps, M. (2016). Exclusion bias in the estimation of peer effects. In NBER Working Paper 22565.

Carrell, S. E., Fullerton, R. L., & West, J. E. (2009). Does your cohort matter? Measuring peer effects in college achievement. Journal of Labor Economics, 27(3), 439–464.

Carrell, S. E., Sacerdote, B. I., & West, J. E. (2013). From natural variation to optimal policy? The importance of endogenous peer group formation. Econometrica, 81(3), 855–882.

China National Bureau of Statistics (CNBS). (2011). China national statistical yearbook, 2011. Beijing: China State Statistical Press.

China National Bureau of Statistics (CNBS). (2013). China national statistical yearbook, 2013. Beijing: China State Statistical Press.

Cooper, D. J., & Kagel, J. H. (2005). Are two heads better than one? Team versus individual play in signaling games. American Economic Review, 95(3), 477–509.

Cooper, D. J., & Rege, M. (2011). Misery loves company: Social regret and social interaction effects in choices under risk and uncertainty. Games and Economic Behavior, 73, 91–110.

Duflo, E., Dupas, P., & Kremer, M. (2011). Peer effects, teacher incentives, and the impact of tracking: Evidence from a randomized evaluation in Kenya. American Economic Review, 101(5), 1739–74.

Epple, D., & Romano, R. E. (2011). Peer effects in education: A survey of the theory and evidence. In J. Benhabib, A. Bisin, & M. O. Jackson (Eds.), Handbook of social economics (Vol. 1B, pp. 1053–1163). Amsterdam: Elsevier.

Fafchamps, M., & Vicente, P. (2013). Political violence and social networks: Experimental evidence from a Nigerian election. Journal of Development Economics, 101, 27–48.

Fafchamps, M., Kebede, B., & Zizzo, D. (2015). Keep up with the winners: Experimental evidence on risk taking, asset integration, and peer effects. European Economic Review, 79, 59–79.

Fafchamps, M., Vaz, A., & Vicente, P. (2014). Voting and peer effects: Evidence from a randomized controlled trial. Stanford University (mimeograph).

Falk, A., & Ichino, A. (2006). Clean evidence on peer effects. Journal of Labor Economics, 24(1), 39–57.

Graham, B. S. (2008). Identifying social interactions through conditional variance restrictions. Econometrica, 76(3), 643–660.

Guryan, J., Kroft, K., & Notowidigdo, M. (2009). Peer effects in the workplace: Evidence from random groupings in professional golf tournaments. American Economic Journal: Applied Economics, 1(4), 34–68.

Hoxby, C. M., & Weingarth, G. (2005). Taking race out of the equation: School reassignment and the structure of peer effects, working paper.

Kocher, M. G., & Sutter, M. (2005). The decision maker matters: Individual versus group behaviour in experimental beauty contest. Economic Journal, 115(500), 200–23.

Kocher, M., Sutter, M., & Wakolbinger, F. (2014). Social learning in beauty-contest games. Southern Economic Journal, 80(3), 586–613.

Lahno, A., & Serra-Garcia, M. (2015). Peer effects in risk taking: Envy or conformity? Journal of Risk and Uncertainty, 50(1), 73–95.

Lai, F., Luo, R., Zhang, L., Huang, X., & Rozelle, S. (2011). Does computer-assisted learning improve learning outcomes? Evidence from a randomized experiment in migrant schools in Beijing. In REAP Working Paper.

Lai, F., Zhang, L., Qu, Q., Hu, X., Shi, Y., Boswell, M., et al. (2012). Does computer-assisted learning improve learning outcomes? Evidence from a randomized experiment in public schools in rural minority areas in Qinghai, China. In REAP Working Paper.

Lai, F., Zhang, L., Qu, Q., Hu, X., Shi, Y., Boswell, M., et al. (2013). Computer assisted learning as extracurricular tutor? Evidence from a randomized experiment in rural boarding schools in Shaanxi. Journal of Development Effectiveness, 5(2), 208–231.

Lee, L.-F., Liu, X., Patacchini, E., & Zenou, Y. (2012). Criminal networks: Who is the key player? In CEPR Discussion Paper No. DP8772.

Lyle, D. (2007). Estimating and interpreting peer and role model effects from randomly assigned social groups at West Point. Review of Economics and Statistics, 89(2), 289–299.

Lyle, D. (2009). The effects of peer group heterogeneity on the production of human capital at West Point. American Economic Journal: Applied Economics, 1, 69–84.

Manski, C. F. (1993). Identification of endogenous social effects: The reflection problem. Review of Economic Studies, 60(3), 531–42.

Mo, D., Zhang, L., Luo, R., Qu, Q., Huang, W., Wang, J., et al. (2014). Integrating computer-assisted learning into a regular curriculum: Evidence from a randomised experiment in rural schools in Shaanxi. Journal of Development Effectiveness, 6(3), 300–323.

Mo, D., Zhang, L., Wang, J., Huang, W., Shi, Y., Boswell, M., et al. (2013). The persistence of gains in learning from computer assisted learning: Evidence from a randomized experiment in rural schools in Shaanxi Province. In REAP Working Paper.

Sacerdote, B. (2001). Peer effects with random assignment: Results for Dartmouth roommates. Quarterly Journal of Economics, 116(2), 681–704.

Sacerdote, B. (2011). Peer effects in education: How might they work, how big are they and how much do we know thus far? Handbook of the Economics of Education, 3, 249–277.

Shue, K. (2012). Executive networks and firm policies: Evidence from the random assignment of MBA Peers. In Working Paper.

Sugden, R. (1993). Thinking as a team: Towards an explanation of nonselfish behaviour. Social Philosophy and Policy, 10, 69–89.

Vigdor, J., & Nechyba, T. (2007). Peer effects in North Carolina public schools. In Schools and the equal opportunity problem. MIT Press.

Wooldridge, J. M. (2002). Econometric analysis of cross-section and panel data. Cambridge: MIT Press.

Zimmerman, D. J. (2003). Peer effects in academic outcomes: Evidence from a natural experiment. Review of Economics and Statistics, 85(1), 9–23.

Acknowledgements

We benefited from comments and suggestions from Paul Glewwe, Jessica Leight, Arun Chandrasekhar, Bet Caeyers, Prashant Loyalka, and Hessel Oosterbeek, from the journal’s editors and referees, from conference participants at the AEA 2016 Conference in San Francisco, and from seminar participants at the Universities of Stanford, Minnesota and Santa Clara. We thank Weiming Huang and Yu Bai for their assistance in data cleaning and program implementation. We would like to acknowledge Dell Inc. and the LICOS Centre for Institutions and Economic Development for their generous support to REAP’s computer assisted learning programs. We are very grateful to Scott Rozelle for his constructive advice on this paper. We acknowledge the assistance of students from the Chinese Academy of Sciences and Northwest University of Xi’an in conducting the surveys.

Appendix: Balancedness and attrition

In column (5) of Table 1 we report the regression coefficient of the baseline characteristic of one student on the baseline characteristic of the other. The estimated regression is of the form:

This random assignment test is subject to exclusion bias: because a student cannot be his/her own peer, a negative correlation between peer characteristics naturally arises under random assignment. Consequently, under the null hypothesis of random assignment, the estimates \(\widehat{\beta }_{2}\) are not centered on 0 but on a negative number. Caeyers and Fafchamps (2016) derive the magnitude of the bias for groups and selection pools of fixed size and show that the bias is particularly large when the randomly assigned group is small, e.g., in pairs.

We cannot use their formula directly because the size of the selection pools varies: class sizes are not constant. To circumvent this problem, we simulate the distribution of \(\widehat{\beta }_{2}\) under the null using a permutation method. This method also delivers a consistent p value for \(\beta _{2}\) and thus offers a way of testing the null of random assignment. It works as follows. The objective is to calculate the distribution of \(\widehat{\beta }_{2}\) under the null that \(y_{it}\) and \(y_{jt}\) are uncorrelated. To simulate \(\widehat{\beta }_{2}\) under the null, we create counterfactual random matches and estimate (9). In practice, this is implemented by scrambling the order of students within each class to reassign them into counterfactual random pairs. By construction, these samples of paired observations satisfy the null of random assignment within classroom. We repeat this process 1000 times to obtain a close approximation of the distribution of \(\widehat{\beta }_{2}\) under the null. We then compare the actual \(\widehat{\beta }_{2}\) to this distribution to get its p value.
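The permutation procedure described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: regression (9) is approximated by a simple OLS slope of one student's class-demeaned score on the partner's (demeaning stands in for classroom fixed effects), each pair enters in both directions, and the names `peer_slope`, `scramble_pairs` and `permutation_test` are hypothetical. The p value is taken as the share of simulated coefficients at or above the actual one.

```python
import random


def demean(scores):
    """Subtract the class mean (an assumed stand-in for classroom fixed effects)."""
    m = sum(scores) / len(scores)
    return [s - m for s in scores]


def peer_slope(pairs):
    """OLS slope of own score on peer score, each pair entered in both directions."""
    own = [a for a, b in pairs] + [b for a, b in pairs]
    peer = [b for a, b in pairs] + [a for a, b in pairs]
    n = len(own)
    mean_own, mean_peer = sum(own) / n, sum(peer) / n
    sxy = sum((p - mean_peer) * (o - mean_own) for p, o in zip(peer, own))
    sxx = sum((p - mean_peer) ** 2 for p in peer)
    return sxy / sxx


def scramble_pairs(class_scores, rng):
    """Shuffle one class and pair off neighbours (a leftover student is dropped)."""
    s = class_scores[:]
    rng.shuffle(s)
    return [(s[k], s[k + 1]) for k in range(0, len(s) - 1, 2)]


def permutation_test(classes, n_draws=1000, seed=0):
    """classes: one list per class of (score_i, score_j) tuples for the actual pairs.

    Returns the actual slope, the simulated slopes under the null of random
    pairing within class, and the share of simulated slopes >= the actual one.
    """
    rng = random.Random(seed)
    demeaned = []
    for pairs in classes:
        flat = demean([s for pair in pairs for s in pair])
        demeaned.append([(flat[2 * k], flat[2 * k + 1]) for k in range(len(pairs))])
    actual = peer_slope([p for cls in demeaned for p in cls])
    simulated = []
    for _ in range(n_draws):
        draw = []
        for cls in demeaned:
            # Re-pair students at random within their own class only.
            draw += scramble_pairs([s for pair in cls for s in pair], rng)
        simulated.append(peer_slope(draw))
    p_value = sum(b >= actual for b in simulated) / n_draws
    return actual, simulated, p_value
```

Because scrambling happens within each class, the simulated slopes inherit the exclusion bias of the actual estimate, so the comparison is made against a distribution centered below zero rather than against zero itself.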

We present in Fig. 2 the simulated distribution of \(\widehat{\beta }_{2}\) for baseline math scores under the null hypothesis of random assignment. These simulated \(\widehat{\beta }_{2}\)’s are centered around \(-0.05\), with very few values at or above 0. As shown in the first line of column (5) in Table 1, the \(\widehat{\beta }_{2}\) estimated from the sample is \(-0.03\). Comparing this number to the histogram of \(\widehat{\beta }_{2}\) under the null reported in Fig. 2, we find that 27% of simulated coefficients are larger than \(-0.03\). From this we conclude that the p value is 0.27: we cannot reject the null hypothesis of random assignment based on baseline math scores.

In columns (5) and (6) of Table 1 we report the coefficient estimates for other baseline characteristics as well as similarly calculated p values for the null hypothesis of random assignment by these characteristics. All p values are above the 10% level. From this we conclude that the random assignment of peers was implemented in a satisfactory manner.

Attrition during the experiment is low. A total of 7536 sample students surveyed in the baseline participated in the endline survey. Only 4% of the students who took the baseline survey did not take the endline survey. Based on information provided by the schools, attrition is mainly due to illness, dropout, and transfers to schools outside of the town. In “Appendix” Table 7 we examine whether attrition is correlated with treatment. Column 1 shows that attrition rates do not differ statistically between CAL school students and control school students. Attrition is also not correlated with being paired or not (Table 7, column 2) or with being assigned to a high or low achieving peer (Table 7, column 3).

As a final check, we repeat the balancedness tests of Table 1 using only the non-attriting sample. Results are shown in “Appendix” Table 8. The same conclusions hold: we cannot reject balance on all baseline characteristics for the first two treatments. We also repeat the permutation tests to check random peer assignment on baseline math scores. We obtain p values all above 0.1 and again fail to reject the random peer assignment hypothesis.

Cite this article

Fafchamps, M., Mo, D. Peer effects in computer assisted learning: evidence from a randomized experiment. Exp Econ 21, 355–382 (2018). https://doi.org/10.1007/s10683-017-9538-z

DOI: https://doi.org/10.1007/s10683-017-9538-z