Abstract

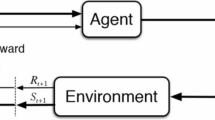

Quantitative finance has had a long tradition of a bottom-up approach to complex systems inference via multi-agent systems (MAS). These statistical tools are based on modelling agents trading via a centralised order book, in order to emulate complex and diverse market phenomena. These past financial models have all relied on so-called zero-intelligence agents, so that the crucial issues of agent information and learning, central to price formation and hence to all market activity, could not be properly assessed. In order to address this, we designed a next-generation MAS stock market simulator, in which each agent learns to trade autonomously via reinforcement learning. We calibrate the model to real market data from the London Stock Exchange over the years 2007 to 2018, and show that it can faithfully reproduce key market microstructure metrics, such as various price autocorrelation scalars over multiple time intervals. Agent learning thus enables accurate emulation of the market microstructure as an emergent property of the MAS.

Notes

Computations were performed on a Mac Pro with a 3.5 GHz 6-core Intel Xeon E5 processor and 16 GB of 1866 MHz DDR memory.

We used the time series feature extraction functions implemented in the tsfresh Python package (Christ et al. 2018).

We used the implementation from the scikit-learn Python package (Pedregosa et al. 2011), with 200 estimators, maximal tree depth equal to 5 and default values for other hyperparameters.

References

Abbeel, P., Coates, A., & Ng, A. Y. (2010). Autonomous helicopter aerobatics through apprenticeship learning. The International Journal of Robotics Research, 29, 1608–1639.

Aloud, M. (2014). Agent-based simulation in finance: Design and choices. In: Proceedings in finance and risk perspectives ‘14.

Andreas, J., Klein, D., & Levine, S. (2017). Modular multitask reinforcement learning with policy sketches.

Bak, P., Norrelykke, S., & Shubik, M. (1999). Dynamics of money. Physical Review E, 60, 2528–2532.

Bak, P., Norrelykke, S., & Shubik, M. (2001). Money and goldstone modes. Quantitative Finance, 1, 186–190.

Barde, S. (2015). A practical, universal, information criterion over nth order Markov processes (p. 04). School of Economics Discussion Papers, University of Kent.

Bavard, S., Lebreton, M., Khamassi, M., Coricelli, G., & Palminteri, S. (2018). Reference-point centering and range-adaptation enhance human reinforcement learning at the cost of irrational preferences. Nature Communications, 9(1), 4503. https://doi.org/10.1038/s41467-018-06781-2.

Benzaquen, M., & Bouchaud, J. (2018). A fractional reaction–diffusion description of supply and demand. The European Physical Journal B, 91, 23. https://doi.org/10.1140/epjb/e2017-80246-9D.

Bera, A. K., Ivliev, S., & Lillo, F. (2015). Financial econometrics and empirical market microstructure. Berlin: Springer.

Bhatnagar, S., & Panigrahi, J. R. (2006). Actor-critic algorithms for hierarchical decision processes. Automatica, 42, 637–644.

Biondo, A. E. (2018a). Learning to forecast, risk aversion, and microstructural aspects of financial stability. Economics, 12(2018–20), 1–21.

Biondo, A. E. (2018b). Order book microstructure and policies for financial stability. Studies in Economics and Finance, 35(1), 196–218.

Biondo, A. E. (2018c). Order book modeling and financial stability. Journal of Economic Interaction and Coordination, 14(3), 469–489.

Boero, R., Morini, M., Sonnessa, M., & Terna, P. (2015). Agent-based models of the economy, from theories to applications. New York: Palgrave Macmillan.

Bouchaud, J., Cont, R., & Potters, M. (1997). Scale invariance and beyond. In Proceeding CNRS Workshop on Scale Invariance, Les Houches. Springer.

Bouchaud, J. P. (2018). Handbook of computational economics (Vol. 4). Amsterdam: Elsevier.

Chiarella, C., Iori, G., & Perelló, J. (2007). The impact of heterogeneous trading rules on the limit order book and order flows. arXiv:0711.3581.

Christ, M., Braun, N., Neuffer, J., & Kempa-Liehr, A. W. (2018). Time series feature extraction on basis of scalable hypothesis tests, tsfresh-a python package. Neurocomputing, 307, 72–77.

Cont, R. (2001). Empirical properties of asset returns: Stylized facts and statistical issues. Quantitative Finance, 1, 223–236.

Cont, R. (2005). Chapter 7-Agent-based models for market impact and volatility. In A. Kirman & G. Teyssiere (Eds.), Long memory in economics. Berlin: Springer.

Cont, R., & Bouchaud, J. P. (2000). Herd behavior and aggregate fluctuations in financial markets. Macroeconomic Dynamics, 4, 170–196.

Cristelli, M. (2014). Complexity in financial markets. Berlin: Springer.

Current dividend impacts of FTSE-250 stocks. Retrieved May 19, 2020 from https://www.dividenddata.co.uk.

Delbaen, F., & Schachermayer, W. (2004). What is a free lunch? Notices of the AMS, 51(5), 526–528.

Deng, Y., Bao, F., Kong, Y., Ren, Z., & Dai, Q. (2017). Deep direct reinforcement learning for financial signal representation and trading. IEEE Transactions on Neural Networks and Learning Systems, 28(3), 653–64.

de Vries, C., & Leuven, K. (1994). Stylized facts of nominal exchange rate returns. Working papers from Purdue University, Krannert School of Management—Center for International Business Education and Research (CIBER).

Ding, Z., Engle, R., & Granger, C. (1993). A long memory property of stock market returns and a new model. Journal of Empirical Finance, 1, 83–106.

Dodonova, A., & Khoroshilov, Y. (2018). Private information in futures markets: An experimental study. Managerial and Decision Economics, 39, 65–70.

Donangelo, R., Hansen, A., Sneppen, K., & Souza, S. R. (2000). Modelling an imperfect market. Physica A, 283, 469–478.

Donangelo, R., & Sneppen, K. (2000). Self-organization of value and demand. Physica A, 276, 572–580.

Duan, Y., Schulman, J., Chen, X., Bartlett, P. L., Sutskever, I., & Abbeel, P. (2016). Rl-squared: Fast reinforcement learning via slow reinforcement learning. arXiv:1611.02779.

Duncan, K., Doll, B. B., Daw, N. D., & Shohamy, D. (2018). More than the sum of its parts: A role for the hippocampus in configural reinforcement learning. Neuron, 98, 645–657.

Eickhoff, S. B., Yeo, B. T. T., & Genon, S. (2018). Imaging-based parcellations of the human brain. Nature Reviews Neuroscience, 19, 672–686.

Eisler, Z., & Kertesz, J. (2006). Size matters: Some stylized facts of the stock market revisited. European Physical Journal B, 51, 145–154.

Engle, R. F. (1982). Autoregressive conditional heteroscedasticity with estimates of the variance of United Kingdom inflation. Econometrica, 50(4), 987–1007.

Erev, I., & Roth, A. E. (2014). Maximization, learning and economic behaviour. PNAS, 111, 10818–10825.

Fama, E. (1970). Efficient capital markets: A review of theory and empirical work. Journal of Finance, 25, 383–417.

Franke, R., & Westerhoff, F. (2011). Structural stochastic volatility in asset pricing dynamics: Estimation and model contest. BERG working paper series on government and growth (Vol. 78).

Fulcher, B. D., & Jones, N. S. (2014). Highly comparative feature-based time-series classification. IEEE Transactions on Knowledge and Data Engineering, 26, 3026–3037.

Ganesh, S., Vadori, N., Xu, M., Zheng, H., Reddy, P., & Veloso, M. (2019). Reinforcement learning for market making in a multi-agent dealer market. arXiv:1911.05892.

Gode, D., & Sunder, S. (1993). Allocative efficiency of markets with zero-intelligence traders: Market as a partial substitute for individual rationality. Journal of Political Economy, 101(1), 119–137.

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., et al. (2014). Generative adversarial nets. In Advances in neural information processing systems (pp. 2672–2680).

Green, E., & Heffernan, D. M. (2019). An agent-based model to explain the emergence of stylised facts in log returns. arXiv:1901.05053.

Greene, W. H. (2017). Econometric analysis (8th ed.). London: Pearson.

Grondman, I., Busoniu, L., Lopes, G., & Babuska, R. (2012). A survey of actor-critic reinforcement learning: Standard and natural policy gradients. IEEE Transactions on Systems Man and Cybernetics, 42, 1291–1307.

Gualdi, S., Tarzia, M., Zamponi, F., & Bouchaud, J. P. (2015). Tipping points in macroeconomic agent-based models. Journal of Economic Dynamics and Control, 50, 29–61.

Heinrich, J. (2017). Deep RL from self-play in imperfect-information games. Ph.D. thesis, University College London.

Hu, Y. J., & Lin, S. J. (2019). Deep reinforcement learning for optimizing portfolio management. In 2019 Amity international conference on artificial intelligence.

Huang, W., Lehalle, C. A., & Rosenbaum, M. (2015). Simulating and analyzing order book data: The queue-reactive model. Journal of the American Statistical Association, 110, 509.

Huang, Z. F., & Solomon, S. (2000). Power, Lévy, exponential and Gaussian-like regimes in autocatalytic financial systems. European Physical Journal B, 20, 601–607.

IG fees of Contracts For Difference. Retrieved May 19, 2020 from https://www.ig.com.

Katt, S., Oliehoek, F. A., & Amato, C. (2017). Learning in Pomdps with Monte Carlo tree search. In Proceedings of the 34th international conference on machine learning.

Keramati, M., & Gutkin, B. (2011). A reinforcement learning theory for homeostatic regulation. NIPS.

Keramati, M., & Gutkin, B. (2014). Homeostatic reinforcement learning for integrating reward collection and physiological stability. Elife, 3, e04811.

Kim, G., & Markowitz, H. M. (1989). Investment rules, margin and market volatility. Journal of Portfolio Management, 16, 45–52.

Konovalov, A., & Krajbich, I. (2016). Gaze data reveal distinct choice processes underlying model-based and model-free reinforcement learning. Nature Communications, 7, 12438.

Lefebvre, G., Lebreton, M., Meyniel, F., Bourgeois-Gironde, S., & Palminteri, S. (2017). Behavioural and neural characterization of optimistic reinforcement learning. Nature Human Behaviour, 1(4), 1–19.

Levy, M., Levy, H., & Solomon, S. (1994). A microscopic model of the stock market: Cycles, booms, and crashes. Economics Letters, 45, 103–111.

Levy, M., Levy, H., & Solomon, S. (1995). Microscopic simulation of the stock market: The effect of microscopic diversity. Journal de Physique, I(5), 1087–1107.

Levy, M., Levy, H., & Solomon, S. (1997). New evidence for the power-law distribution of wealth. Physica A, 242, 90–94.

Levy, M., Levy, H., & Solomon, S. (2000). Microscopic simulation of financial markets: From investor behavior to market phenomena. New York: Academic Press.

Levy, M., Persky, N., & Solomon, S. (1996). The complex dynamics of a simple stock market model. International Journal of High Speed Computing, 8, 93–113.

Levy, M., & Solomon, S. (1996a). Dynamical explanation for the emergence of power law in a stock market model. International Journal of Modern Physics C, 7, 65–72.

Levy, M., & Solomon, S. (1996b). Power laws are logarithmic Boltzmann laws. International Journal of Modern Physics C, 7, 595–601.

Liang, H., Yang, L., Tu, H. C. W., & Xu, M. (2017). Human-in-the-loop reinforcement learning. In 2017 Chinese automation congress.

Lipski, J., & Kutner, R. (2013). Agent-based stock market model with endogenous agents’ impact. arXiv:1310.0762.

Lobato, I. N., & Savin, N. E. (1998). Real and spurious long-memory properties of stock-market data. Journal of Business & Economic Statistics, 16, 261–283.

Lux, T., & Marchesi, M. (1999). Scaling and criticality in a stochastic multi-agent model of a financial market. Nature, 397, 498–500.

Lux, T., & Marchesi, M. (2000). Volatility clustering in financial markets: A microsimulation of interacting agents. Journal of Theoretical and Applied Finance, 3, 67–70.

Mandelbrot, B. (1963). The variation of certain speculative prices. The Journal of Business, 36, 394–419.

Mandelbrot, B., Fisher, A., & Calvet, L. (1997). A multifractal model of asset returns. Cowles Foundation for Research and Economics.

Martino, A. D., & Marsili, M. (2006). Statistical mechanics of socio-economic systems with heterogeneous agents. Journal of Physics A, 39, 465–540.

McInnes, L., Healy, J., & Melville, J. (2018). Umap: Uniform manifold approximation and projection for dimension reduction. arXiv preprint arXiv:1802.03426.

Mnih, V., Badia, A. P., Mirza, M., Graves, A., Lillicrap, T. P., Harley, T., et al. (2016). Asynchronous methods for deep reinforcement learning. arXiv:1602.01783.

Momennejad, I., Russek, E., Cheong, J., Botvinick, M., Daw, N. D., & Gershman, S. J. (2017). The successor representation in human reinforcement learning. Nature Human Behaviour, 1, 680–692.

Murray, M. P. (1994). A drunk and her dog: An illustration of cointegration and error correction. The American Statistician, 48(1), 37–39.

Mota Navarro, R., & Larralde, H. (2016). A detailed heterogeneous agent model for a single asset financial market with trading via an order book. arXiv:1601.00229.

Naik, P. K., Gupta, R., & Padhi, P. (2018). The relationship between stock market volatility and trading volume: Evidence from South Africa. The Journal of Developing Areas, 52(1), 99–114.

Neuneier, R. (1997). Enhancing q-learning for optimal asset allocation. In Proceeding of the 10th international conference on neural information processing systems.

Ng, A. Y., Harada, D., & Russell, S. (1999). Theory and application to reward shaping.

Pagan, A. (1996). The econometrics of financial markets. Journal of Empirical Finance, 3, 15–102.

Palminteri, S., Khamassi, M., Joffily, M., & Coricelli, G. (2015). Contextual modulation of value signals in reward and punishment learning. Nature Communications, 6, 1–14.

Palminteri, S., Lefebvre, G., Kilford, E., & Blakemore, S. (2017). Confirmation bias in human reinforcement learning: Evidence from counterfactual feedback processing. PLoS Computational Biology, 13(8), e1005684.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., et al. (2011). Scikit-learn, machine learning in python. Journal of Machine Learning Research, 12, 2825–2830.

Pinto, L., Davidson, J., Sukthankar, R., & Gupta, A. (2017). Robust adversarial reinforcement learning. arXiv:1703.02702.

Plerou, V., Gopikrishnan, P., Amaral, L. A., Meyer, M., & Stanley, H. E. (1999). Scaling of the distribution of fluctuations of financial market indices. Physical Review E, 60(6), 6519.

Potters, M., & Bouchaud, J. P. (2001). More stylized facts of financial markets: Leverage effect and downside correlations. Physica A, 299, 60–70.

Preis, T., Golke, S., Paul, W., & Schneider, J. J. (2006). Multi-agent-based order book model of financial markets. Europhysics Letters, 75(3), 510–516.

Ross, S., Pineau, J., Chaib-draa, B., & Kreitmann, P. (2011). A Bayesian approach for learning and planning in partially observable Markov decision processes. Journal of Machine Learning Research, 12, 1729–1770.

Sbordone, A. M., Tambalotti, A., Rao, K., & Walsh, K. J. (2010). Policy analysis using DSGE models: An introduction. Economic Policy Review, 16(2), 23–43.

Schreiber, T., & Schmitz, A. (1997). Discrimination power of measures for nonlinearity in a time series. Physical Review E, 55(5), 5443.

Silver, D., Huang, A., Maddison, C. J., Guez, A., Sifre, L., van den Driessche, G., et al. (2016). Mastering the game of go with deep neural networks and tree search. Nature, 529, 484–489.

Silver, D., Hubert, T., Schrittwieser, J., Antonoglou, I., Lai, M., Guez, A., et al. (2018a). A general reinforcement learning algorithm that masters chess, shogi and go through self-play. Science, 362(6419), 1140–1144.

Silver, D., Lever, G., Heess, N., Degris, T., Wierstra, D., & Riedmiller, M. (2014). Deterministic policy gradient algorithms. In Proceedings of the 31st international conference on machine learning (Vol. 32).

Silver, D., Schrittwieser, J., Simonyan, K., Antonoglou, I., Huang, A., Guez, A., et al. (2018b). Mastering the game of go without human knowledge. Nature, 550, 354–359.

Sirignano, J., & Cont, R. (2019). Universal features of price formation in financial markets: Perspectives from deep learning. Quantitative Finance, 19(9), 1449–1459.

Solomon, S., Weisbuch, G., de Arcangelis, L., Jan, N., & Stauffer, D. (2000). Social percolation models. Physica A, 277(1), 239–247.

Spooner, T., Fearnley, J., Savani, R., & Koukorinis, A. (2018). Market making via reinforcement learning. In Proceedings of the 17th AAMAS.

Sutton, R., & Barto, A. (1998). Reinforcement learning: An introduction. Cambridge, MA: MIT Press.

Sutton, R. S., McAllester, D., Singh, S., & Mansour, Y. (2000). Policy gradient methods for reinforcement learning with function approximation. Advances in Neural Information Processing Systems, 12, 1057–1063.

Szepesvari, C. (2010). Algorithms for reinforcement learning. San Rafael: Morgan and Claypool Publishers.

Tessler, C., Givony, S., Zahavy, T., Mankowitz, D. J., & Mannor, S. (2016). A deep hierarchical approach to lifelong learning in minecraft. arXiv:1604.07255.

UK one-year gilt reference prices. Retrieved May 19, 2020 from https://www.dmo.gov.uk.

Vandewalle, N., & Ausloos, M. (1997). Coherent and random sequences in financial fluctuations. Physica A, 246, 454–459.

Vernimmen, P., Quiry, P., Dallocchio, M., Fur, Y. L., & Salvi, A. (2014). Corporate finance: Theory and practice (4th ed.). New York: Wiley.

Wang, J. X., Kurth-Nelson, Z., Kumaran, D., Tirumala, D., Soyer, H., Leibo, J. Z., et al. (2018). Prefrontal cortex as a meta-reinforcement learning system. Nature Neuroscience, 21, 860–868.

Watkins, C. J. C. H., & Dayan, P. (1992). Q-learning. Machine Learning, 8(3–4), 279–292.

Way, E., & Wellman, M. P. (2013). Latency arbitrage, market fragmentation, and efficiency: A two-market model. In Proceedings of the fourteenth ACM conference on electronic commerce (pp. 855–872).

Wellman, M. P., & Way, E. (2017). Strategic agent-based modeling of financial markets. The Russell Sage Foundation Journal of the Social Sciences, 3(1), 104–119.

Weron, R. (2001). Levy-stable distributions revisited: Tail index \(> 2\) does not exclude the levy-stable regime. International Journal of Modern Physics C, 12, 209–223.

Wiering, M., & van Otterlo, M. (2012). Reinforcement learning: State-of-the-art. Berlin: Springer.

Acknowledgements

We gratefully acknowledge that this work was supported by the RFFI Grant No. 16-51-150007 and CNRS PRC No. 151199, and received support from FrontCog ANR-17-EURE-0017. I. L.’s work was supported by the Russian Science Foundation, Grant No. 18-11-00294. S. B.-G. received funding within the framework of the HSE University Basic Research Program funded by the Russian Academic Excellence Project No. 5-100.

Appendix

1.1 Classification Accuracy

Fig. 16 shows the classification accuracy on the testing (left) and training (right) sets as a function of time-series sample size, for samples containing more timestamps than those of Fig. 13. Accuracy saturates on both sets: with the value distribution feature set, testing accuracy does not exceed \(70\%\) and training accuracy does not exceed \(75\%\), whereas with the full time-series feature set, testing accuracy saturates above \(90\%\) and training accuracy above \(95\%\). Training accuracy is generally higher than testing accuracy, and its saturation is less pronounced. Training accuracy remains limited because the trees of the random forest are regularized (maximal depth equal to 5), which we found necessary for good generalization on the testing set.
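The kind of experiment described here can be sketched as follows. This is a minimal illustration and not the code used for Fig. 16: the synthetic AR(1) and white-noise series, the three toy features, and the helper names `ar1_series`, `features` and `accuracy_vs_length` are all assumptions made for the example. Only the forest settings (200 estimators, maximal depth 5) come from the text.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def ar1_series(n, phi, rng):
    """Unit-variance AR(1) series of length n (phi = 0 gives white noise)."""
    noise = rng.standard_normal(n) * np.sqrt(1.0 - phi**2)
    x = np.empty(n)
    x[0] = rng.standard_normal()
    for t in range(1, n):
        x[t] = phi * x[t - 1] + noise[t]
    return x


def features(x):
    """Toy feature vector (hypothetical): mean, variance, lag-1 autocorrelation."""
    xc = x - x.mean()
    acf1 = np.dot(xc[:-1], xc[1:]) / np.dot(xc, xc)
    return np.array([x.mean(), x.var(), acf1])


def accuracy_vs_length(lengths, n_per_class=150, seed=0):
    """Return {series length: (training accuracy, testing accuracy)}."""
    rng = np.random.default_rng(seed)
    results = {}
    for n in lengths:
        # Class 0: white noise; class 1: AR(1) with phi = 0.4 (balanced classes).
        X = np.array([features(ar1_series(n, phi, rng))
                      for phi in (0.0, 0.4) for _ in range(n_per_class)])
        y = np.repeat([0, 1], n_per_class)
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=0.3, stratify=y, random_state=seed)
        # Depth-limited forest, as stated in the text.
        clf = RandomForestClassifier(n_estimators=200, max_depth=5,
                                     random_state=seed)
        clf.fit(X_tr, y_tr)
        results[n] = (clf.score(X_tr, y_tr), clf.score(X_te, y_te))
    return results


scores = accuracy_vs_length([25, 50, 100, 200])
for n, (train_acc, test_acc) in scores.items():
    print(f"length={n:4d}  train={train_acc:.3f}  test={test_acc:.3f}")
```

Replacing `max_depth=5` by `max_depth=None` in this sketch is a simple way to see how the depth limit affects the gap between training and testing accuracy.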

Fig. 16 Accuracy of the testing set (left) and training set (right), as a function of time-series sample size. The value distribution features include only features that do not depend on the order of values in the time-series (e.g. mean, median, variance, kurtosis, skewness of the value distribution, etc.), whereas all time-series features correspond to the total set of time-series features including those that depend on the temporal structure of the series (e.g. autocorrelation, entropy, FFT coefficients, etc.). Both testing and training subsets are balanced in terms of class distribution, and their respective accuracy is achieved with samples containing up to 200 timestamps. The simulations are generated with parameters \(I=500\), \(J=1\), \(T=2875\), and \(S=20\).
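The distinction between the two feature sets can be illustrated by shuffling a series: order-independent features are unchanged, while order-dependent ones are not. The sketch below uses plain NumPy; the noisy sine series and the function names `value_distribution_features` and `temporal_features` are assumptions made for illustration, not the feature set of the paper.

```python
import numpy as np


def value_distribution_features(x):
    """Features that depend only on the values, not on their order."""
    mu, sigma = x.mean(), x.std()
    z = (x - mu) / sigma
    return {
        "mean": mu,
        "median": np.median(x),
        "variance": sigma**2,
        "skewness": np.mean(z**3),
        "kurtosis": np.mean(z**4) - 3.0,  # excess kurtosis
    }


def temporal_features(x):
    """Features that depend on the temporal structure of the series."""
    xc = x - x.mean()
    return {
        "autocorr_lag1": np.dot(xc[:-1], xc[1:]) / np.dot(xc, xc),
        "mean_abs_change": np.mean(np.abs(np.diff(x))),
    }


rng = np.random.default_rng(0)
t = np.arange(500)
# Hypothetical series with strong temporal structure: a noisy sine wave.
series = np.sin(2 * np.pi * t / 50) + 0.1 * rng.standard_normal(t.size)
shuffled = rng.permutation(series)  # same values, temporal order destroyed

dist_orig = value_distribution_features(series)
dist_shuf = value_distribution_features(shuffled)
temp_orig = temporal_features(series)
temp_shuf = temporal_features(shuffled)

print("value distribution:", dist_orig, dist_shuf)
print("temporal:", temp_orig, temp_shuf)
```

A classifier restricted to the first group therefore cannot exploit any difference in temporal structure between real and simulated series, which is consistent with the lower accuracy reported for the value distribution feature set.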

1.2 Top Statistical Features

We provide a general grouping and examples of the top statistical features used in the dimensionality reduction performed in Sect. 4.2. The importance metric \(\varTheta\) of a feature is the sum of its importance over 30 random forest models trained on different random splits of the training/testing sets. The exact ranking of the features found in our experiments, together with their importance metric values \(\varTheta\), is as follows.

1. Partial autocorrelation value of lag 1, \(\varTheta =1.2240\).
2. First coefficient of the fitted AR(10) process, \(\varTheta =1.0777\).
3. Kurtosis of the FFT coefficient distribution, \(\varTheta =1.0214\).
4. Skewness of the FFT coefficient distribution, \(\varTheta =1.0001\).
5. Autocorrelation value of lag 1, \(\varTheta =0.9861\).
6. 60th percentile of the value distribution, \(\varTheta =0.9044\).
7. Kurtosis of the FFT coefficient distribution, \(\varTheta =0.7347\).
8. Mean of consecutive changes in the series for values in between the 0th and the 80th percentiles of the value distribution, \(\varTheta =0.6349\).
9. Variance of consecutive changes in the series for values in between the 0th and the 20th percentiles of the value distribution, \(\varTheta =0.5948\).
10. Approximate entropy value (length of compared run of data is 2, filtering level is 0.1), \(\varTheta =0.5878\).
11. 70th percentile of the value distribution, \(\varTheta =0.5589\).
12. Variance of absolute consecutive changes in the series for values in between the 0th and the 20th percentiles of the value distribution, \(\varTheta =0.5584\).
13. Mean of consecutive changes in the series for values in between the 40th and the 100th percentiles of the value distribution, \(\varTheta =0.4755\).
14. Ratio of values that are more than 1 standard deviation away from the mean value, \(\varTheta =0.3282\).
15. Median of the value distribution, \(\varTheta =0.2957\).
16. Skewness of the value distribution, \(\varTheta =0.2894\).
17. Measure of time series nonlinearity from Schreiber and Schmitz (1997) of lag 1, \(\varTheta =0.2867\).
18. Second coefficient of the fitted AR(10) process, \(\varTheta =0.2726\).
19. Partial autocorrelation value of lag 1, \(\varTheta =0.2575\).
20. Time reversal symmetry statistic from Fulcher and Jones (2014) of lag 1, \(\varTheta =0.2418\).
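For readers unfamiliar with some of the listed statistics, the sketch below gives NumPy versions of four of them, written from their commonly used definitions. Whether these match the exact parameterization of the feature extraction used in the paper is an assumption, and the function names are chosen for this example only. Note that the partial autocorrelation at lag 1 coincides with the ordinary autocorrelation at lag 1.

```python
import numpy as np


def autocorrelation(x, lag):
    """Sample autocorrelation at the given lag (also the lag-1 partial
    autocorrelation when lag = 1)."""
    x = np.asarray(x, dtype=float)
    xc = x - x.mean()
    return np.mean(xc[:-lag] * xc[lag:]) / x.var()


def c3_nonlinearity(x, lag):
    """Third-order statistic <x[t+2*lag] * x[t+lag] * x[t]>, commonly
    associated with Schreiber and Schmitz (1997)."""
    x = np.asarray(x, dtype=float)
    return np.mean(x[2 * lag:] * x[lag:-lag] * x[:-2 * lag])


def time_reversal_asymmetry(x, lag):
    """<x[t+2*lag]^2 * x[t+lag] - x[t+lag] * x[t]^2>; it changes sign when
    the series is reversed in time."""
    x = np.asarray(x, dtype=float)
    a, b, c = x[2 * lag:], x[lag:-lag], x[:-2 * lag]
    return np.mean(a**2 * b - b * c**2)


def ratio_beyond_r_sigma(x, r=1.0):
    """Fraction of values more than r standard deviations from the mean."""
    x = np.asarray(x, dtype=float)
    return np.mean(np.abs(x - x.mean()) > r * x.std())


rng = np.random.default_rng(0)
demo = np.cumsum(rng.standard_normal(300))  # hypothetical price-like series
print("autocorrelation (lag 1):", autocorrelation(demo, 1))
print("c3 nonlinearity (lag 1):", c3_nonlinearity(demo, 1))
print("time reversal asymmetry (lag 1):", time_reversal_asymmetry(demo, 1))
print("ratio beyond 1 sigma:", ratio_beyond_r_sigma(demo, 1.0))
```

The time reversal statistic is the only one of the four that distinguishes a series from its time-reversed copy, which is why it is grouped with the features that depend on temporal structure.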

The top-10 features referenced in Sect. 4.2 are the first 10 features taken from the list above. The PCA and UMAP mappings of the top-10 features onto a two-dimensional space demonstrated some separability between the two classes (real vs. simulated data), as measured by training a linear classifier on these two-dimensional data representations (see Sect. 4.2 for details), as well as by calculating the Kolmogorov–Smirnov (KS) statistic for each embedding component. The KS statistic value between the two classes is 0.24 and 0.11 for PCA (for the first and second component, respectively) and 0.30 and 0.25 for UMAP.
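The per-component KS comparison can be sketched as follows for the PCA case. The synthetic 10-feature data, the shift between the two classes, and the function names `pca_2d` and `ks_statistic` are assumptions made for this example; UMAP is left out because it requires a separate package.

```python
import numpy as np


def pca_2d(X):
    """Project the rows of X onto the first two principal components."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ vt[:2].T


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest absolute
    difference between the two empirical CDFs."""
    a, b = np.sort(a), np.sort(b)
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return np.max(np.abs(cdf_a - cdf_b))


rng = np.random.default_rng(1)
# Hypothetical stand-ins for the top-10 features of the two classes.
real = rng.standard_normal((300, 10))
simulated = rng.standard_normal((300, 10)) + 0.3
labels = np.repeat([0, 1], 300)

embedding = pca_2d(np.vstack([real, simulated]))
for k in range(2):
    d = ks_statistic(embedding[labels == 0, k], embedding[labels == 1, k])
    print(f"PCA component {k + 1}: KS statistic = {d:.2f}")
```

A KS statistic of 0 means the two classes have identical empirical distributions along that component, and a value of 1 means they do not overlap at all, so values such as those reported above indicate partial separability.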

Cite this article

Lussange, J., Lazarevich, I., Bourgeois-Gironde, S. et al. Modelling Stock Markets by Multi-agent Reinforcement Learning. Comput Econ 57, 113–147 (2021). https://doi.org/10.1007/s10614-020-10038-w

DOI: https://doi.org/10.1007/s10614-020-10038-w