Abstract

An increasingly used method for the engineering of software systems with strict quality-of-service (QoS) requirements involves the synthesis and verification of probabilistic models for many alternative architectures and instantiations of system parameters. Using manual trial-and-error or simple heuristics for this task often produces suboptimal models, while the exhaustive synthesis of all possible models is typically intractable. The EvoChecker search-based software engineering approach presented in our paper addresses these limitations by employing evolutionary algorithms to automate the model synthesis process and to significantly improve its outcome. EvoChecker can be used to synthesise the Pareto-optimal set of probabilistic models associated with the QoS requirements of a system under design, and to support the selection of a suitable system architecture and configuration. EvoChecker can also be used at runtime, to drive the efficient reconfiguration of a self-adaptive software system. We evaluate EvoChecker on several variants of three systems from different application domains, and show its effectiveness and applicability.

Similar content being viewed by others

1 Introduction

Software systems used in application domains including healthcare, finance and manufacturing must comply with strict reliability, performance and other quality-of-service (QoS) requirements. The software engineers developing these systems must use rigorous techniques and processes at all stages of the software development life cycle. In this way, the engineers can continually assess the correctness of a system under development (SUD) and confirm its compliance with the required levels of reliability and performance.

Probabilistic model checking (PMC) is a formal verification technique that can assist in establishing the compliance of a SUD with QoS requirements through mathematical reasoning and rigorous analysis (Baier and Katoen 2008; Clarke et al. 1999). PMC supports the analysis of reliability, timeliness, performance and other QoS requirements of systems exhibiting stochastic behaviour, e.g. due to unreliable components or uncertainties in the environment (Kwiatkowska 2007). The technique has been successfully applied to the engineering of software for critical systems (Alur et al. 2015; Woodcock et al. 2009). In PMC, the behaviour of a SUD is defined formally as a finite state-transition model whose transitions are annotated with information about the likelihood or timing of events taking place. Examples of probabilistic models that PMC operates with include discrete and continuous-time Markov chains, and Markov decision processes (Kwiatkowska et al. 2007). QoS requirements are expressed formally using probabilistic variants of temporal logic, e.g., probabilistic computation tree logic and continuous stochastic logic (Kwiatkowska et al. 2007). Through automated exhaustive analysis of the underlying low-level model, PMC proves or disproves compliance of the probabilistic model of the system with the formally specified QoS requirements.

Recent advances in PMC reinforced its applicability to the cost-effective engineering of software both at design time (Baier et al. 2010; Calinescu et al. 2016; Kwiatkowska et al. 2010) and at runtime (Calinescu et al. 2012; Draeger et al. 2014). The design-time use of the technique involves the verification of alternative designs of a SUD. The objectives are to identify designs whose quality attributes comply with system QoS requirements and also to eliminate early in the design process errors that could be hugely expensive to fix later (Damm and Lundberg 2007). Designs that meet these objectives can then be used as a basis for the implementation of the system. Alternatively, software engineers can construct probabilistic models of existing systems and employ PMC to assess their QoS attributes. Within the last decade, PMC has also been used to drive the reconfiguration of self-adaptive systems (Baresi and Ghezzi 2010; Calinescu and Kwiatkowska 2009; Epifani et al. 2009) by supporting the “analyse” and “plan” stages of the monitor-analyse-plan-execute control loop (Calinescu et al. 2017d; Salehie and Tahvildari 2009) of these systems. In this runtime use, PMC provides formal guarantees that the reconfiguration plan adopted by the self-adaptive system meets the QoS requirements (Calinescu et al. 2017a, 2012). We discuss related research on using PMC at runtime, including our recent work from Calinescu et al. (2015), Gerasimou et al. (2014), in Sect. 8.

Notwithstanding the successful applications of PMC at both design time and runtime, the synthesis and verification of probabilistic models that satisfy the QoS requirements of a system remains a very challenging task. The complexity of this task increases significantly when the search space is large and/or the QoS requirements ask for the optimisation of conflicting QoS attributes of the system (e.g. the maximisation of reliability and minimisation of cost). Existing approaches such as exhaustive search and simple heuristics like manual trial-and-error and automated hill climbing can only tackle this challenge for small systems. Exhaustively searching the solution space for an optimal probabilistic model is intractable for most real-world systems. On the other hand, trial-and-error requires manual verification of numerous alternative instantiations of the system parameters, while simple heuristics do not generalise well and are often biased towards a particular area of the problem landscape (e.g. through getting stuck at local optima).

The EvoChecker search-based software engineering approach presented in our paper addresses these limitations of existing approaches by automating the synthesis of probabilistic models and by considerably improving the outcome of the synthesis process. EvoChecker achieves these improvements by using evolutionary algorithms (EAs) to guide the search towards areas of the search space more likely to comprise probabilistic models that meet a predefined set of QoS requirements. These requirements can include both constraints, which specify bounds for QoS attributes of the system (e.g. “Workflow executions must complete successfully with probability at least 0.98”), and optimisation objectives (e.g. “The workflow response time should be minimised”).

Given this set of QoS requirements and a probabilistic model template that encodes the configuration parameters (e.g., alternative architectures, parameter ranges) of the software system, EvoChecker supports both the identification of suitable architectures and configurations for a software system under design, and the runtime reconfiguration of a self-adaptive software system.

When used at design time, EvoChecker employs multi-objective EAs to synthesise (i) a set of probabilistic models that closely approximates the Pareto-optimal model set associated with the QoS requirements of a software system; and (ii) the corresponding approximate Pareto front of QoS attribute values. Given this information, software designers can inspect the generated solutions to assess the tradeoffs between multiple QoS requirements and make informed decisions about the architecture and parameters of the SUD.

When used at runtime, EvoChecker drives the reconfiguration of a self-adaptive software system by synthesising probabilistic models that correspond to configurations which meet the QoS requirements of the system. To speed up this runtime search, we use incremental probabilistic model synthesis. This novel technique involves maintaining an archive of specific probabilistic models synthesised during recent reconfiguration steps, and using these models as the starting point for each new search. As reconfiguration steps are triggered by events such as changes to the environment that the system operates in, the EvoChecker archive accumulates “solutions” to past events that often resemble new events experienced by the system. Therefore, starting new searches from the archived “solutions” can achieve significant performance improvement for the model synthesis process.

The main contributions of our paper are:

-

The EvoChecker approach for the search-based synthesis of probabilistic models for QoS software engineering, and its application to the synthesis of models that meet QoS requirements both at design time and at runtime.

-

The EvoChecker high-level modelling language, which extends the modelling language used by established probabilistic model checkers such as PRISM (Kwiatkowska et al. 2011) and Storm (Dehnert et al. 2017).

-

The definition of the probabilistic model synthesis problem.

-

An incremental probabilistic model synthesis technique for the efficient runtime generation of probabilistic models that satisfy the QoS requirements of a self-adaptive system.

-

An extensive evaluation of EvoChecker in three case studies drawn from different application domains.

-

A prototype open-source EvoChecker tool and a repository of case studies, both of which are freely available from our project webpage at http://www-users.cs.york.ac.uk/simos/EvoChecker.

These contributions significantly extend the preliminary results from our conference paper on search-based synthesis of probabilistic models (Gerasimou et al. 2015) in several ways. First, we introduce an incremental probabilistic model synthesis technique that extends the applicability of EvoChecker to self-adaptive software systems. Second, we devise and evaluate different strategies for selecting the archived solutions used by successive EvoChecker synthesis tasks at runtime. Third, we extend the presentation of the EvoChecker approach with additional technical details and examples. Fourth, we use EvoChecker to develop two self-adaptive systems from different application domains (service-based systems and unmanned underwater vehicles). Finally, we use the systems and models from our experiments to assemble a repository of case studies available on our project website.

The rest of the paper is organised as follows. Section 2 presents the software system used as a running example. Section 3 introduces the EvoChecker modelling language, and Sect. 4 presents the specification of EvoChecker constraints and optimisation objectives using the QoS requirements of a software system. Section 5 describes the use of EvoChecker to synthesise probabilistic models at design time and at runtime. Section 6 summarises the implementation of the EvoChecker prototype tool. Section 7 presents the empirical evaluation carried out to assess the effectiveness of EvoChecker, and an analysis of our findings. Finally, Sects. 8 and 9 discuss related work and conclude the paper, respectively.

2 Running example

We will illustrate the EvoChecker approach for synthesising probabilistic models using a real-world service-based system from the domain of foreign exchange trading. The system, which for confidentiality reasons we anonymise as FX, is used by a European foreign exchange brokerage company and implements the workflow in Fig. 1.

An FX trader can use the system to carry out trades in expert or normal mode. In the expert mode, the trader can provide her objectives or action strategy. FX periodically analyses exchange rates and other market activity, and automatically executes a trade once the trader’s objectives are satisfied. In particular, a Market watch service retrieves real-time exchange rates of selected currency pairs. A Technical analysis service receives this data, identifies patterns of interest and predicts future variation in exchange rates. Based on this prediction and if the trader’s objectives are “satisfied”, an Order service is invoked to carry out a trade; if they are “unsatisfied”, execution control returns to the Market watch service; and if they are “unsatisfied with high variance”, an Alarm service is invoked to notify the trader about opportunities not captured by the trading objectives. In the normal mode, FX assesses the economic outlook of a country using a Fundamental analysis service that collects, analyses and evaluates information such as news reports, economic data and political events, and provides an assessment of the country’s currency. If the trader is satisfied with this assessment, she can sell/buy currency by invoking the Order service, which in turn triggers a Notification service to confirm the successful completion of a trade.

(modified with the permission from Gerasimou et al. (2015))

Workflow of the FX system.

The FX system uses \(m_i \!\ge \!1\) functionally equivalent implementations of the ith service. For any service i, the jth implementation, \(1 \!\le \! j\! \le m_i\) is characterised by its reliability \(r_{ij} \!\in \! [0,1]\) (i.e., probability of successful invocation), invocation cost \(c_{ij}\! \in \! {\mathbb {R}}^+\) and response time \(t_{ij}\! \in \! \mathbb {R^+}\).

FX is required to satisfy the QoS requirements from Table 1. For each service, FX must select one of two invocation strategies by means of a configuration parameter \(\textit{str}_i \in \{\text {PROB, SEQ}\}\), where

-

if \(\textit{str}_i=\text {PROB}\), FX uses a probabilistic strategy to randomly select one of the service implementations based on an FX-specified discrete probability distribution \(p_{i1}, p_{i2},\ldots , p_{im_i}\); and

-

if \(\textit{str}_i=\text {SEQ}\), FX uses a sequential strategy that employs an execution order to invoke one after the other all enabled service implementations until a successful response is obtained or all invocations fail.

For the SEQ strategy, a parameter \(ex_{i} \in \{1,2, \ldots , m_i!\}\) establishes which of the \(m_i!\) permutations of the \(m_i\) implementations should be used, and a configuration parameter \(x_{ij} \in \{0,1\}\) indicates if implementation j is enabled (\(x_{ij}=1\)) or not (\(x_{ij}=0\)).

3 EvoChecker modelling language

EvoChecker uses an extension of the modelling language that leading model checkers such as PRISM (Kwiatkowska et al. 2011) and Storm (Dehnert et al. 2017) use to define probabilistic models. This language is based on the Reactive Modules formalism (Alur and Henzinger 1999), which models a system as the parallel composition of a set of modules. The state of a module is defined by a set of finite-range local variables, and its state transitions are specified by probabilistic guarded commands that modify these variables:

where guard is a boolean expression over all model variables. If the guard is true, the arithmetic expression \(e_i, 1 \le i \le n\), gives the probability (for discrete-time models) or rate (for continuous-time models) with which the update\(_i\) change of the module variables occur. The action is an optional element of type ‘string’; when used, all modules comprising commands with the same action must synchronise by performing one of these commands simultaneously. For a complete description of the modelling language, we refer the reader to the PRISM manual at www.prismmodelchecker.org/manual.

EvoChecker extends this language with the following three constructs that support the specification of the possible configurations of a system.

1. Evolvable parameters EvoChecker uses the syntax

to define model parameters of type ‘int’ and ‘double’, respectively, and acceptable ranges for them. These parameters can be used in any field of command (1) other than action.

2. Evolvable probability distributions The syntax

where \([\textit{min}_i,\textit{max}_i]\subseteq [0,1]\) for all \(1\le i\le n\), is used to define an n-element discrete probability distribution, and ranges for the n probabilities of the distribution. The name of the distribution with \(1, 2, \ldots , n\) appended as a suffix (i.e., dist\(_1\), dist\(_2\), etc.) can then be used instead of expressions \(e_1\), \(e_2\), ..., \(e_n\) from an n-element command (1).

3. Evolvable modules EvoChecker uses the syntax

to define \(n\ge 2\) alternative implementations of a module modName.

The interpretation of the three EvoChecker constructs within a probabilistic model template is described by the following definitions.

Definition 1

(Probabilistic model template) A valid probabilistic model augmented with EvoChecker evolvable parameters (2), probability distributions (3) and modules (4) is called a probabilistic model template.

Definition 2

(Valid probabilistic model) A probabilistic model M is an instance of a probabilistic model template \({\mathcal {T}}\) if and only if it can be obtained from \({\mathcal {T}}\) using the following transformations:

-

Each evolvable parameter (2) is replaced by a ‘const int param = val;’ or ‘const double param = val;’ declaration (depending on the type of the parameter), where \(val\in \{min, \ldots , max\}\) or \(val\in [min..max]\), respectively.

-

Each evolvable probability distribution (3) is removed, and the n occurrences of its name instead of expressions \(e_1\), ..., \(e_n\) of a command (1) are replaced with values from the ranges \([\textit{min}_1\textit{..max}_1]\), ..., \([\textit{min}_n\textit{..max}_n]\), respectively. For a discrete-time model, the sum of the n values is 1.0.

-

Each set of evolvable modules with the same name is replaced with a single element from the set, from which the keyword ‘evolve’ was removed.

As the EvoChecker modelling language is based on the modelling language of established probabilistic model checkers such as PRISM and Storm, our approach can handle templates of all types of probabilistic models supported by these model checkers. Table 2 shows these types of probabilistic models, and the probabilistic temporal logics available to specify the QoS requirements of the modelled software systems.

Example 1

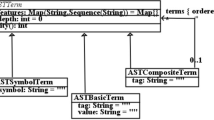

Figure 2 presents the discrete-time Markov chain (DTMC) probabilistic model template of the FX system introduced in Sect. 2. The template comprises a \(\mathsf {WorkflowFX}\) module modelling the FX workflow, and two modules modelling the alternative implementations of each service. These two service modules correspond to the probabilistic invocation strategy and the sequential invocation strategy, respectively. Due to space constrains, Fig. 2 shows in full only the \(\textsf {MarketWatch}\) module for the probabilistic strategy of the Market watch service; the complete FX probabilistic model template is available on our project webpage.

The local variable state from the \(\mathsf {WorkflowFX}\) module (line 5 in Fig. 2) encodes the state of the system, i.e. the service being invoked, the success or failure of that service invocation, etc. The local variable mw from the MarketWatch implementations (line 39) records the internal state of the Market watch service invocation.The WorkflowFX module synchronises with the service modules through ‘start’-, ‘failed’- and ‘succ’-prefixed actions, which are associated with the invocation, failed execution, and successful execution of a service, respectively. For instance, the synchronisation with the MarketWatch module occurs through the actions startMW, failedMW and succMW (lines 9–11).

Each service module models a specific invocation strategy, e.g. a probabilistic selection is made between three available Market watch service implementations in line 41 of the first MarketWatch module. Then, the selected service implementation is invoked (lines 43–45) and either completes successfully (line 47) or fails (line 48). The FX system continues with the rest of its workflow (lines 12–31 from WorkflowFX) if the service executed successfully, or terminates (line 32) otherwise.

All three EvoChecker constructs (2)–(4) are used by the FX probabilistic model template:

-

four evolvable parameters specify the enabled Market watch service implementations and their execution order (lines 50–53) associated with the sequential invocation strategy;

-

an evolvable distribution specifies the discrete probability distribution for the probabilistic invocation strategy of the first MarketWatch module (line 36);

-

two alternative implementations of the MarketWatch module are provided in lines 37–49 and 54–57, respectively.

4 EvoChecker specification of QoS requirements

4.1 Quality-of-service attributes

Given the probabilistic model template \({\mathcal {T}}\) of a system, QoS attributes such as the response time, throughput and reliability of the system can be expressed in the probabilistic temporal logics from Table 2, and can be evaluated by applying probabilistic model checking to relevant instances of \({\mathcal {T}}\). Formally, given the probabilistic temporal logic formula \({\varPhi }\) for a QoS attribute \( attr \) and an instance M of \({\mathcal {T}}\) (i.e. a probabilistic model corresponding to a system configuration being examined), the value of the QoS attribute is

where \( PMC \) is the probabilistic model checking “function” implemented by tools such as PRISM and Storm.

Example 2

The QoS requirements of the FX system from our running example (shown in Table 1) are based on three QoS attributes. Requirements R1 and R3 refer to the probability of successful completion (i.e. the reliability) of FX workflow executions. This QoS attribute corresponds to the probabilistic computation tree logic (PCTL) formula \(P_{=?} [F\; state \!=\!15]\) from the first row of Table 3. This PCTL formula expresses the probability that the probabilistic model template from Fig. 2 reaches its success state.

The QoS attributes for the other two requirements can be specified using rewards PCTL formulae (Andova et al. 2004; Katoen et al. 2005; Kwiatkowska 2007). For this purpose, positive values are associated with specific states and transitions of the model template from Fig. 2 by adding the following two

structures to the template:

structures to the template:

These structures support the computation of the total service response time for requirement R2 and of the workflow invocation cost for requirement R4. To this end, the two structures associate the mean response time \(t_{ij}\) and the invocation cost \(c_{ij}\) of the jth implementation of FX service i with the transition that models the execution of this service implementation. The corresponding PCTL formulae, shown in the last two rows of Table 3, represent the reward (i.e. the response time and cost, respectively) “accumulated” before reaching a state where the workflow execution terminates. In these formulae, \( state \!=\!15\) denotes a successful termination, and \( state \!=\!5\) an unsuccessful one.

Before describing the formalisation of QoS requirements in EvoChecker, we note that a software system has two types of parameters:

-

1.

Configuration parameters, which software engineers can modify to select between alternative system architectures and configurations. The EvoChecker constructs (2)–(4) are used to define these parameters and their acceptable values. The set of all possible combinations of configuration parameter values forms the configuration space \( Cfg \) of the system.

-

2.

Environment parameters, which specify relevant characteristics of the environment in which the system will operate or is operating. These parameters cannot be modified, and need to be estimated based on domain knowledge or observations of the actual system. The set of all possible combinations of environment parameter values forms the environment space \( Env \) of the system.

A probabilistic model template \({\mathcal {T}}\) of a system with configuration space \( Cfg \) and environment space \( Env \) corresponds to a specific combination of environment parameter values \(e\in Env \) and to the entire configuration space \( Cfg \). Furthermore, each instance M of \({\mathcal {T}}\) is associated with the same environment state e and with a specific combination of configuration parameter values \(c\in Cfg \). We will use the notation M(e, c) to refer to this specific instance of \({\mathcal {T}}\), and the notation \( attr (e,c)\) for the value of a QoS attribute (5) computed for this instance.

Example 3

The environment parameters of the FX system comprise:

-

the probabilities \( pExpert \), \( pSat \), \( pNotSat \), \( pTrade \) and \( pRetry \) from module WorkflowFX in Fig. 2;

-

the success probabilities \(r_{ij}\), response times \(t_{ij}\) and costs \(c_{ij}\) of the FX service implementations.

The FX configuration parameters defined by the EvoChecker constructs from Fig. 2 are:

-

the invocation strategies \( str _i\) used for the ith FX service;

-

the probabilities \(p_{ij}\) of invoking the jth implementation of service i when the probabilistic invocation strategy is used;

-

the \(x_{ij}\) and \( ex _i\) parameters specifying which implementations of service i are used by the sequential invocation strategy and their execution order.

4.2 Quality-of-service requirements

EvoChecker supports the engineering of software systems that need to satisfy two types of QoS requirements:

-

1.

Constraints, i.e. requirements that specify bounds for the acceptable values of QoS attributes such as response time, throughput, reliability and cost.

-

2.

Optimisation objectives, i.e. requirements which specify QoS attributes that should be minimised or maximised.

Formally, EvoChecker considers systems with \(n_1\ge 0\) constraints \(R^C_1, R^C_2, \ldots \), \(R^C_{n_1}\), and \(n_2\ge 1\) optimisation objectives \(R^O_1, R^O_2, \ldots , R^O_{n_2}\). The ith constraint, \(R^C_i\), has the form

and, assuming that all optimisation objectives require the minimisation of QoS attributes,Footnote 1 the jth optimisation objective, \(R^O_j\), has the form

where \(\bowtie _i \in \! \{<,\le ,\ge ,>,=\}\) is a relational operator, \( bound _i \in {\mathbb {R}}\), \(0 \le i \le n_1\), \(1 \le j \le n_2\), and \( attr _1\), \( attr _2\), ..., \( attr _{n_1+n_2}\) represent \(n_1+n_2\) QoS attributes (5) of the software system.

Example 4

The QoS requirements of the FX system (Table 1) comprise one constraint (R1) and three optimisation objectives (R2–R4). Table 4 shows the formalisation of these requirements for the design time use of EvoChecker using the FX QoS attributes from Table 3.

5 EvoChecker probabilistic model synthesis

EvoChecker supports both the selection of a suitable architecture and configuration for a software system under design, and the runtime reconfiguration of a self-adaptive software system. There are three key differences between these two uses of EvoChecker .

First, the use of EvoChecker during system design requires the specification of the (fixed) environment state that the system will operate in by a domain expert, while for its runtime use the environment state is continually updated based on monitoring information.

Second, the EvoChecker use at design time can handle multiple optimisation objectives and yields multiple Pareto-optimal solutions (i.e. probabilistic models). In contrast, EvoChecker at runtime yields a single solution (as synthesising multiple solutions is not useful without a software engineer to examine them) by operating with a single optimisation objective (i.e. a “loss” function).

Finally, the use of EvoChecker for the design of a system is a one-off activity, whereas for a self-adaptive system the approach is used to select new system configurations on frequent intervals or after each environment change. The latter use involves the incremental synthesis of probabilistic models by generating each new configuration efficiently based on previously synthesised ones.

Given these differences between the design time and runtime EvoChecker uses, we present them separately in Sects. 5.1 and 5.2, respectively.

5.1 Using EvoChecker at design time

5.1.1 Probabilistic model synthesis problem

Consider a SUD with environment space \( Env \), an environment state \(e \in Env \) provided by a domain expert, and a probabilistic model template \({\mathcal {T}}\) associated with the configuration space \( Cfg \) of the system. Given \(n_1 \ge 0\) constraints (6) and \(n_2 \ge 1\) optimisation objectives (7), the probabilistic model synthesis problem involves finding the Pareto-optimal set \( PS \) of configurations from \( Cfg \) that satisfy the \(n_1\) constraints and are non-dominated with respect to the \(n_2\) optimisation objectives:

with the dominance relation \(\prec \; : Cfg \!\times Cfg \!\!\rightarrow \! \mathbb {B}\) defined by

Finally, given the Pareto-optimal set \( PS \), the Pareto front PF is defined by

because the system designers need this information in order to choose between the configurations from the set PS.

5.1.2 Probabilistic model synthesis approach

Obtaining the Pareto-optimal set of a SUD, given by Eq. (8), is usually unfeasible, as the configuration space \( Cfg \) is typically extremely large or (when the probabilistic model template \({\mathcal {T}}\) includes evolvable distributions or evolvable parameters of type double) infinite. Therefore, EvoChecker synthesises a close approximation of the Pareto-optimal set by using standard multiobjective evolutionary algorithms such as the genetic algorithms NSGA-II (Deb et al. 2002), SPEA2 (Zitzler et al. 2001) and MOCell (Nebro et al. 2009).

Evolutionary algorithms (EAs) encode each possible solution of a search problem as a sequence of genes, i.e. binary representations of the problem variables. For EvoChecker , each use of an ‘evolvable’ construct (2)–(4) within the probabilistic model template \({\mathcal {T}}\) contributes to this sequence with the gene(s) specified by the encoding rules in Table 5. EvoChecker uses these rules to obtain the value ranges \(V_1, V_2, \ldots , V_k\) for the \(k\!\ge \! 1\) genes of \({\mathcal {T}}\), and to assemble the SUD configuration space \( Cfg =V_1\times V_2\times \cdots \times V_k\).

The high-level architecture of EvoChecker is shown in Fig. 3. The probabilistic model template \({\mathcal {T}}\) of the SUD is processed by a Template parser component. The Template parser converts the template into an internal representation (i.e. a parametric probabilistic model) and extracts the configuration space \( Cfg \) as described above. The configuration space \( Cfg \) and the \(n_1\) QoS constraints and \(n_2\) optimisation objectives of the SUD are used to initialise the Multi-objective evolutionary algorithm component at the core of EvoChecker . This component creates a random initial population of individuals (i.e. a set of random gene sequences corresponding to different \( Cfg \) elements), and then iteratively evolves this population into populations containing “fitter” individuals by using the standard EA approach summarised next.

The EA approach involves evaluating different individuals (i.e., potential new system configurations) through invoking an Individual analyser. This component combines an individual and the parametric model created by the Template parser to produce a probabilistic model M in which all configuration parameters are fixed using values from the genes of the analysed individual. Next, the Individual analyser invokes a Quantitative verification engine that uses probabilistic model checking to determine the QoS attributes \( attr _i\), \(1\le i\le n_1+n_2\), of the analysed individual. To this end, the Quantitative verification engine analyses the model M and each of the probabilistic temporal logic formulae \({\varPhi }_1\), \({\varPhi }_2\), ..., \({\varPhi }_{n_1+n_2}\) corresponding to the \(n_1+n_2\) QoS attributes. These attributes are then used by the Multi-objective evolutionary algorithm to establish whether the individual satisfies the \(n_1\) QoS constraints and to assess its “fitness” based on the QoS attribute values associated with the \(n_2\) QoS optimisation objectives.

Once all individuals have been evaluated, the Multi-objective evolutionary algorithm performs an assignment, reproduction and selection step. During assignment, the algorithm establishes the fitness of each individual (e.g., its rank in the population). Fit individuals have higher probability to enter a “mating” pool and to be chosen for reproduction and selection. With reproduction, the algorithm creates new individuals from the mating pool by means of crossover and mutation. Crossover randomly selects two fit individuals and exchanges genes between them to produce offspring with potentially higher fitness values. Mutation, on the other hand, introduces variation in the population by selecting an individual from the pool and creating an offspring by randomly changing a subset of its genes. Finally, through selection, a subset of the individuals from the current population and offspring becomes the new population that will evolve in the next generation.

The Multi-objective evolutionary algorithm uses elitism, a strategy that directly propagates into the next population a subset of the fittest individuals from the current population. This strategy ensures the iterative improvement of the Pareto-optimal approximation set PS assembled by EvoChecker . Furthermore, the multi-objective EAs used by EvoChecker maintain diversity in the population and generate a Pareto-optimal approximation set spread as uniformly as possible across the search space. This uniform spread is achieved using algorithm-specific mechanisms for diversity preservation. One such mechanism involves combining the nondomination level of each evaluated individual and the population density in its area of the search space.Footnote 2

The evolution of fitter individuals continues until one of the following termination criteria is met:

-

1.

the allocated computation time is exhausted;

-

2.

the maximum number of individual evaluations has been reached;

-

3.

no improvement in the quality of the best individuals has been detected over a predetermined number of successive iterations.

Once the evolution terminates, the set of nondominated individuals from the final population is returned as the Pareto-optimal set approximation \( PS \). The values of the QoS attributes associated with the \(n_2\) optimisation objectives and with each individual from \( PS \) are used to assemble the Pareto front approximation \( PF \). System designers can analyse the \( PS \) and \( PF \) sets to select the design to use for system implementation.

5.2 Using EvoChecker at runtime

5.2.1 EvoChecker -based self-adaptive systems

The use of EvoChecker to drive the runtime reconfiguration of self-adaptive software systems is illustrated in Fig. 4. The approach uses system Sensors to continually monitor the system and identify the parameters of the environment it operates in. Changes in the environment state e lead to updates of the probabilistic model template \({\mathcal {T}}\) used by EvoChecker and to the incremental synthesis of a probabilistic model specifying a new configuration c that enables the system to meet its QoS requirements in the changed environment. This configuration is applied using an Effectors interface of the self-adaptive system.

To speed up the search for a new configuration, the use of EvoChecker at runtime builds on the principles of incrementality and exploits the fact that changes in a self-adaptive system are typically localised (Ghezzi 2012). As reported in other domains (Helwig and Wanka 2008; Kazimipour et al. 2014), and also discussed in our related work section (Sect. 8), an effective initialisation of the EA search can speed up its convergence and can yield better-quality solutions. Accordingly, EvoChecker maintains an Archive (cf. Fig. 4) of “effective” configurations identified during recent reconfigurations of the self-adaptive system. This Archive is used to “seed” each new search with a subset of recent configurations that encode solutions to potentially similar environment states experienced in the past.

To fully automate the EvoChecker operation, its runtime use combines the QoS requirements that target the optimisation of QoS attributes into a composite single objective. Similar to other approaches for developing self-adaptive systems (Ramirez et al. 2011), this objective requires the minimisation of a generalised \( loss \) function given by

where \(w_j \ge 0\) are weight coefficients and at least one of them is strictly positive.Footnote 3

These weight coefficients express the desired trade-off between the j QoS attributes. Fonseca and Fleming (Fonseca and Fleming 1997) show that for any positive set of coefficient values, the identified solution is always Pareto optimal (compared to all other solutions generated during the search). Selecting appropriate values for the coefficients is a responsibility of system designers. To this end, they can use domain knowledge to determine the value range of the QoS attributes comprising the loss function and assign appropriate coefficient values that reflect their relative importance (Coello et al. 2006). Note that although more complex, loss is just another QoS attribute which can still be specified in the latest version of the probabilistic temporal logic languages supported by model checkers like PRISM (Kwiatkowska et al. 2011), so it fits the definition of an attribute from Eq. (5).

Example 5

To use EvoChecker in a self-adaptive variant of the FX system from our running example, the QoS attributes from Table 3 need to be combined into a \( loss \) function (10) that the self-adaptive system should minimise, e.g.:

with the weights \(w_1\), \(w_2\) and \(w_3\) chosen based on the value ranges and on the relative importance of the three attributes. Note that the first attribute from the \( loss \) function is actually the reciprocal of the reliability attribute \( attr _1\) from Table 3, as we want decreases in reliability to lead to rapid increases in \( loss \). Using the failure probability \(1\!-\! attr _1\) as the first attribute is also an option, although this choice yields a \( loss \) function that increases only linearly with the failure probability.

5.2.2 Runtime probabilistic model synthesis

When EvoChecker is used at runtime, the synthesis of probabilistic models is performed incrementally, i.e. by exploiting previously generated solutions, to speed up the synthesis of new solutions. This incremental synthesis is enabled by the Archive component shown in Fig. 4.

The use of EvoChecker within a self-adaptive system starts with an empty Archive, which is updated at the end of each reconfiguration step using an archive updating strategy. This strategy selects individuals from the final EA population synthesised by EvoChecker in the current reconfiguration step. Several criteria are used to enable this selection:

-

1.

an individual that meets all \(n_1\) constraints is preferred over an individual that violates one or more constraints;

-

2.

from two individuals that satisfy all constraints, the individual with the lowest \( loss \) is preferred;

-

3.

from two individuals that both violate at least one constraint, the individual with the lowest overall “level of violation” is preferred.Footnote 4

While EvoChecker is not prescriptive about the calculation of the level of violation from the last criterion, the current version of our tool uses the following definition.

Definition 3

(Constraints violation) For each combination of an environment state \(e \in Env \) and a configuration \(c \in Cfg \) of a self-adaptive system, the level of violation of the \(n_1\) QoS constraints is given by

where \(\alpha _i>0\) is a violation weight associated with the ith attribute.

Note that according to this definition, \( violation (e,c)=0\) for all (e, c) combinations that violate none of the \(n_1\) bounds.

Example 6

Consider the QoS requirements of the FX system from Table 1. The only QoS constraint, R1, requires that workflow executions are at least \( bound _1=0.98\) reliable. Hence, for any \((e,c) \in Env \times Cfg \),

The value of \(\alpha _1\) (i.e., \(\alpha _1=100\) in our experiments from Sect. 7.2) is provided to EvoChecker by simply annotating the constraint R1 with this value. EvoChecker automatically parses all such annotations and constructs the violation function for the system.

EvoChecker employs a preference relation based on criteria 1–3 to select configurations for storing in its archive. This relation and the EvoChecker archive updating strategy are formally defined below.

Definition 4

(Preference relation) Let \(e \in Env \) be an environment state of a self-adaptive system. Then, given two configurations \(c,c'\in Cfg \), configuration c is preferred over configuration \(c'\) (written \(c\prec c'\)) iff

\(\bigl (\forall 1 \le i \le n_1 \bullet attr _i(e,c) \bowtie _i bounds_i \;\wedge \;\) | |

\(\exists 1 \le i \le n_1 \bullet \lnot ( attr _i(e,c') \bowtie _i bounds_i)\bigr )\;\vee \) | ① |

\(\bigl (\forall 1 \le i \le n_1 \bullet attr _i(e,c) \bowtie _i bounds_i \;\wedge \; attr _i(e,c') \bowtie _i bounds_i\) | |

\(\wedge \; loss (e,c) < loss (e,c')\bigr )\;\vee \) | ② |

\(\bigl (\exists 1\!\!\le \!i,j\!\!\le n_1 \bullet \lnot ( attr _i(e,c)\!\bowtie _i bounds_i) \wedge \lnot ( attr _j(e,c')\!\bowtie _j bounds_j)\) | |

\(\wedge \; violation (e,c) < violation (e,c')\bigr )\) | ③ |

Definition 5

(Archive updating strategy) Let \(C_{e} \subseteq Cfg \) be the set of configurations synthesised for the new environment state \(e \in Env \) and \( Arch \) be the archive before the change. Then an archive updating strategy is a function \(\sigma : Cfg \rightarrow \mathbb {B}\) such that the updated archive at the end of the reconfiguration step is given by

We formally define four archive updating strategies that we will use to evaluate EvoChecker in Sect. 7:

-

1.

The prohibitive strategy retains no configurations in the archive:

$$\begin{aligned} \sigma (c) = false , \forall c \in Arch \cup C_{e} \end{aligned}$$(13) -

2.

The complete recent strategy uses the entire population from the current adaptation step and removes the previous configurations from the archive:

$$\begin{aligned} \sigma (c)= {\left\{ \begin{array}{ll} true , &{} \text {if } c \in C_{e}\\ false , &{} \text {otherwise} \end{array}\right. } \end{aligned}$$(14) -

3.

The limited recent strategy keeps the \(x, 0< x < \#C_{e}\), best configurations from the current adaptation step and removes the previous configurations from the archive:

$$\begin{aligned} \sigma (c)= {\left\{ \begin{array}{ll} true , &{} \text {if } c \in C_e \text { and } position (c) \le x\\ false , &{} \text {otherwise} \end{array}\right. }, \end{aligned}$$(15)where \( position : C_e \rightarrow \{1, 2, \ldots , \#C_e\}\) is a function that gives the position of a configuration \(c \in C_e\), i.e. \( position (c) = \#\{c' \in C_e \setminus \{c\} \mid c' \prec c \} +1\).

-

4.

The limited deep strategy accumulates the \(x, 0 \le x \le \#C_{e}\) best configurations from all previous adaptation steps, given by

$$\begin{aligned} \sigma (c)= {\left\{ \begin{array}{ll} true , &{} \text {if } c \in C_e \text { and } position (c) \le x\\ true , &{} \text {if } c \in Arch \\ false , &{} \text {otherwise} \end{array}\right. } \end{aligned}$$(16)

As the limited deep strategy yields archives that grow in size after each reconfiguration step, some of the archive elements must be evicted when the archive size exceeds the size of the EA population. Possible eviction methods include: (i) discarding the “oldest” individuals (e.g. by implementing the archive as a circular buffer of size equal to that of the EA population); and (ii) performing a random selection.

Using the archive \( Arch \) to create the initial EA population is carried out by importing configurations from the archive into the population (cf. Fig. 4). If a complete population cannot be created in this way (e.g. because \( Arch \) is empty at the beginning of the first reconfiguration step and may not contain sufficient individuals for a few more steps), additional individuals are generated randomly to form a complete initial population.

The assignment, reproduction and selection operations applied during the iterative evolution of the population, and the EA termination criteria are similar to those from the design-time use of EvoChecker . However, a standard single-objective (generational) evolutionary algorithm is used instead of the multi-objective evolutionary algorithm, since there is only one optimisation objective (10).

6 Implementation

To ease the evaluation and adoption of the EvoChecker approach, we have implemented a tool that automates its use at both design time and runtime. Our EvoChecker tool uses the leading probabilistic model checker PRISM (Kwiatkowska et al. 2011) as its Quantitative verification engine, and the established Java-based framework for multi-objective optimization with metaheuristics jMetal (Durillo and Nebro 2011) for its (Multi-objective) Evolutionary algorithm component. We developed the remaining EvoChecker components in Java, using the AntlrFootnote 5 parser generator to build the Template parser, and implementing dedicated versions of the Individual analyser, Monitor, Sensor and Effector components.

The open-source code of EvoChecker, the full experimental results summarised in the following section, additional information about EvoChecker and the case studies used for its evaluation are available at http://www-users.cs.york.ac.uk/simos/EvoChecker.

7 Evaluation

We performed a wide range of experiments to evaluate the effectiveness of EvoChecker at both design time and runtime. The design-time use of Evo-Checker employs multi-objective genetic algorithms (MOGAs), while the runtime use of EvoChecker is based on a single-objective (generational) Genetic algorithm (GA). Experimenting with other types of evolutionary algorithms (e.g. evolution strategies, differential evolution) is part of our future work (Sect. 9). In Sects. 7.1 and 7.2, we describe the evaluation procedure and the results obtained for the design-time and runtime use of EvoChecker, respectively. For each use, we introduce the research questions that guided the experimental process, we describe the experimental setup, we summarise the methodology followed for obtaining and analysing the results, and finally, we present and discuss our findings. We conclude the evaluation with a review of threats to validity (Sect. 7.3).

7.1 Evaluating EvoChecker at design time

7.1.1 Research questions

The aim of our evaluation was to answer the following research questions.

- RQ1 :

-

(Validation): How does the design-time use of EvoChecker perform compared to random search? We used this research question to establish if the application of EvoChecker at design time “comfortably outperforms a random search” (Harman et al. 2012a), as expected of effective search-based software engineering solutions.

- RQ2 :

-

(Comparison): How do EvoChecker instances using different MOGAs perform compared to each other? Since we devised EvoChecker to work with any MOGA, we examined the results produced by EvoChecker instances using three established such algorithms [i.e., NSGA-II (Deb et al. 2002), SPEA2 (Zitzler et al. 2001), MOCell (Nebro et al. 2009)].

- RQ3 :

-

(Insights): Can EvoChecker provide insights into the tradeoffs between the QoS attributes of alternative software architectures and instantiations of system parameters? To support system experts in their decision making, EvoChecker must provide insights into the tradeoffs between multiple QoS objectives. To address this question, we identified a range of decisions suggested by the EvoChecker results for the software systems considered in our evaluation.

7.1.2 Experimental setup

The experimental evaluation comprised multiple scenarios associated with two software systems from different application domains. The first is the foreign exchange (FX) service-based system described in Sect. 2. The second is a software-controlled dynamic power management (DPM) system adapted from Qiu et al. (2001), Sesic et al. (2008) and described on our project webpage.

We performed a wide range of experiments using the system variants from Table 6. The column ‘Details’ reports the number of third-party implementations for each service of the FX systemFootnote 6; and the capacity of the two request queues (\(Qmax_H\) and \(Qmax_L\)) and the number of power managers available (\(m=2\)) for the DPM system. The column ‘Size’ lists the configuration space size assuming a two-decimal points discretisation of the real parameters and probability distributions of the probabilistic model template (cf. Table 5). Given the nonlinearity of most probabilistic models, this is the minimum precision we could assume as an 0.01 increase or decrease in one of these parameters can have a significant effect in the evaluation of a QoS attribute. Finally, the column ‘T\(_\text {run}\)’ shows the average running time per system variant for evaluating a configuration. Note that the EvoChecker run time depends on the size of model \(\mathcal {M}\) and the time consumed by the probabilistic model checker to establish the \(n_1 + n_2\) QoS attributes from Eq. (5) and on the computer used for the evaluation.

We conducted a two-part evaluation for EvoChecker. First, to assess the stochasticity of the approach when different MOGAs are adopted and also to eliminate the possibility that any observations may have been obtained by chance, we used specific scenarios for the system variants from Table 6. For the FX system variants, we chose realistic values for reliability, performance and invocation cost of third-party services implementations, while the values of parameters for the DPM system variants (i.e., power usage and transition rates) correspond to the real-world system from Qiu et al. (2001), Sesic et al. (2008). Second, to mitigate further the risk of accidentally choosing values that biased the EvoChecker evaluation, we defined a set of 30 different scenarios per FX system variant with varied services characteristics for each scenario.

7.1.3 Evaluation methodology

We used the following established MOGAs to evaluate the use of EvoChecker at design time: NSGA-II (Deb et al. 2002), SPEA2 (Zitzler et al. 2001) and MOCell (Nebro et al. 2009).

In line with the standard practice for evaluating the performance of stochastic optimisation algorithms (Arcuri and Briand 2011), we performed multiple (i.e., 30) independent runs for each system variant from Table 6 and each multiobjective optimisation algorithm, i.e., NSGA-II, SPEA2, MOCell and random search. Each run comprised 10,000 evaluations, each using a different initial population of 100 individuals, single-point crossover with probability \(p_c = 0.9\), and single-point mutation with probability \(p_{m} = 1/n_p\), where \(n_p\) is the number of configuration parameters for a particular system variant. All the experiments were run on a CentOS Linux 6.5 64bit server with two 2.6GHz Intel Xeon E5-2670 processors and 32GB of memory.

Obtaining the actual Pareto front for our system variants is unfeasible because of their very large configuration spaces. Therefore, we adopted the established practice (Zitzler et al. 2008) of comparing the Pareto front approximations produced by each algorithm with the reference Pareto front comprising the nondominated solutions from all the runs carried out for the analysed system variant. For this comparison, we employed the widely-used Pareto-front quality indicators below, and we will present their means and box plots as measures of central tendency and distribution, respectively:

- \(I_\epsilon \) :

-

(Unary additive epsilon) (Zitzler et al. 2003). This is the minimum additive term by which the elements of the objective vectors from a Pareto front approximation must be adjusted in order to dominate the objective vectors from the reference front. This indicator presents convergence to the reference front and is Pareto compliant.Footnote 7 Smaller \(I_{\epsilon }\) values denote better Pareto front approximations.

- \(I_{HV}\) :

-

(Hypervolume) (Zitzler and Thiele 1999). This indicator measures the volume in the objective space covered by a Pareto front approximation with respect to the reference front (or a reference point). It measures both convergence and diversity, and is strictly Pareto compliant (Zitzler et al. 2007). Larger \(I_{HV}\) values denote better Pareto front approximations.

- \(I_{IGD}\) :

-

(Inverted Generational Distance) (Van Veldhuizen 1999). This indicator gives an “error measure” as the Euclidean distance in the objective space between the reference front and the Pareto front approximation. \(I_{IGD}\) shows both diversity and convergence to the reference front. Smaller \(I_{IGD}\) values signify better Pareto front approximations.

We used inferential statistical tests to compare these quality indicators across the four algorithms (Arcuri and Briand 2011; Harman et al. 2012b). As is typical of multiobjective optimisation (Zitzler et al. 2008), the Shapiro–Wilk test showed that the quality indicators were not normally distributed, so we used the Kruskal–Wallis non-parametric test with 95% confidence level (\(\alpha \!=\! 0.05\)) to analyse the results without making assumptions about the distribution of the data or the homogeneity of its variance. We also performed a post-hoc analysis with pairwise comparisons between the algorithms using Dunn’s pairwise test, controlling the family-wise error rate with the Bonferroni correction \(p_{crit} = \alpha /k\), where k is the number of comparisons.

7.1.4 Results and discussion

RQ1 (Validation) We carried out the experiments described in the previous section and we report their results in Table 7 and Fig. 5. The ‘+’ from the last column of the table entries indicate that the Kruskal–Wallis test showed significant difference among the four algorithms (p value<0.05) for all six system variants and all Pareto-front quality indicators.

For both systems, EvoChecker using any MOGA achieved considerably better results than random search, for all quality indicators and system variants. The post hoc analysis of pairwise comparisons between random search and the MOGAs provided statistical evidence about the superiority of the MOGAs for all system variants and for all quality indicators. The best and, when obtained, the second best outcomes of this analysis per system variant and quality indicator are shaded and lightly shaded in the result tables, respectively. This superiority of the results obtained using EvoChecker with any of the MOGAs over those produced by random search can also be seen from the boxplots in Fig. 5.

We qualitatively support our findings by showing in Figs. 6 and 7 the Pareto front approximations achieved by EvoChecker with each of the MOGAs and by random search, for a typical run of the experiment for the DPM and FX system variants, respectively. We observe that irrespective of the MOGA, EvoChecker achieves Pareto front approximations with more, better spread and higher quality nondominated solutions than random search.

Boxplots for a specific scenario of the FX system variants (left) and DPM system variants (right) from Table 6, evaluated with quality indicators \(I_{\epsilon }\), \(I_{HV}\) and \(I_{IGD}\)

As explained earlier, the parameters we used for the DPM system variants (power usage, transition rates, etc.) correspond to the real-world system (Qiu et al. 2001; Sesic et al. 2008). In contrast, for the FX system variants we chose realistic values for the reliability, performance and cost of the third-party services. To mitigate the risk of accidentally choosing values that biased the EvoChecker evaluation, we performed additional experiments comprising 300 independent runs per FX system variant (900 runs in total) in which these parameters were randomly instantiated. To allow for a fair comparison across the experiments comprising the 30 different FX scenarios, and to avoid undesired scaling effects, we normalise the results obtained for each quality indicator per experiment within the range [0,1]. The results of this further analysis, shown in Table 8 and Fig. 8, validate our findings.

Considering all these results, we have strong empirical evidence that the EvoChecker significantly outperforms random search, for a range of system variants from two different domains, and across multiple widely-used MOGAs. This also confirms the challenging and well-formulated nature of the multi-objective probabilistic model synthesis problem we introduced in Sect. 5.1.1.

Typical Pareto front approximations for the FX system variants and optimisation objectives R2–R4 from Table 4. a FX_Small, b FX_Medium, c FX_Large

Typical Pareto front approximations for the DPM system variants. The DPM optimisation objectives involve minimising the steady-state power utilisation (“Power use”), minimising the number of requests lost at the steady state (“Lost requests”), and minimising the capacity of the DPM queues (“Queue length”). a DPM_Small, b DPM_Medium, c DPM_Large

Boxplots for the FX system variants (Table 6) across 30 different scenarios, evaluated using the quality indicators \(I_{\epsilon }\), \(I_{HV}\) and \(I_{IGD}\)

RQ2 (Comparison) To compare EvoChecker instances based on different MOGAs, we first observe in Table 7 that NSGA-II and SPEA2 outperformed MOCell for all system variant–quality indicator combinations except DPM_Small (\(I_{IGD}\)). Between SPEA2 and NSGA-II, the former achieved slightly better results for the smaller configuration spaces of the DPM system variants (across all indicators) and for the \(I_{HV}\) indicator (across all system variants), whereas NSGA-II yielded Pareto-front approximations with better \(I_{\epsilon }\) and \(I_{IGD}\) indicators for the larger configuration spaces of the FX system variants (except the combination FX_Small (\(I_\epsilon \))).

Additionally, we carried out the post-hoc analysis described in Sect. 7.1.3, for 9 system variants (counting separately the FX system variants with chosen services characteristics and those comprising the adaptation scenarios) \(\times \) 3 quality indicators = 27 tests. Out of these tests, 22 tests (i.e., a percentage of 81.4%) showed high statistical significance in the differences between the performance achieved by EvoChecker with different MOGAs (Table 9). The five system variant–quality indicator combinations for which the tests were unsuccessful are: FX_Medium (\(I_\epsilon \)), FX_Small_Adapt (\(I_\epsilon \)), FX_ Medium_Adapt(\(I_\epsilon \)), FX_Small(\(I_{IGD}\)) and FX_Medium(\(I_{IGD}\)).

These results show that if the probabilistic model synthesis problem can be formulated as a multi-objective optimisation problem, then several MOGAs can be used to synthesise the Pareto approximation sets PF and PS effectively. Selecting between alternative MOGAs entails using domain knowledge about the synthesis problem, and analysing the individual strengths of the MOGAs (Harman et al. 2012a). The results also confirm the generality of the EvoChecker approach, showing that its functionality can be realised using multiple established MOGAs.

RQ3 (Insights) We performed qualitative analysis of the Pareto front approximations produced by EvoChecker, in order to identify actionable insights. We present this for the FX and DPM Pareto front approximations from Figs. 6 and 7, respectively.

First, the EvoChecker results enable the identification of the “point of diminishing returns” for each system variant. The results from Fig. 6 show that configurations with costs above approximately 52 for FX_Small, 61 for FX_Medium and 94 for FX_Large provide only marginal response time and reliability improvements over the best configurations achievable for these costs. Likewise, the results in Fig. 7 show that DPM configurations with power use above 1.7W yield insignificant reductions in the number of lost requests, whereas configurations with even slightly lower power use lead to much higher request loss. This key information helps system experts to avoid unnecessarily expensive solutions.

Second, we note the high density of solutions in the areas with low reliability (below 0.95) for the FX system in Fig. 6, and with high request loss (above 0.09) for the DPM system in Fig. 7. For the FX system, for instance, these areas correspond to the use of the probabilistic invocation strategy, for which numerous service combinations can achieve similar reliability and response time with relatively low, comparable costs. Opting for a configuration from this area will make the FX system susceptible to failures, as when the only implementation invoked for an FX service fails, the entire workflow execution will also fail. In contrast, reliability values above 0.995 correspond to expensive configurations that use the sequential selection strategy; e.g., FX_Small must use the sequential strategy for the Market watch and Fundamental analysis services in order to achieve 0.996 reliability.

Third, the EvoChecker results reveal configuration parameters that QoS attributes are particularly sensitive to. For the FX system, for example, we noticed a strong dependency of the workflow reliability on the service invocation strategy and the number of implementations used for each service. Configurations from high-reliability areas of the Pareto front not only use the sequential strategy, but also require multiple services per FX service (e.g., three FX service providers are needed for success rates above 0.99).

Finally, we note EvoChecker’s ability to produce solutions that: (i) cover a wide range of values for the QoS attributes from the optimisation objectives of the FX and DPM systems; and (ii) include alternatives with different tradeoffs for fixed values of one of these attributes. Thus, for 0.99 reliability, the experiment from Fig. 6 generated four alternative FX_Large configurations, each with a different cost and execution time. Similar observations can be made for a specific value of either of the other two QoS attributes. These results support the system experts in their decision making.

7.2 Evaluating EvoChecker at runtime

7.2.1 Research questions

We evaluated the runtime use of EvoChecker to answer the research questions below.

- RQ4 :

-

(Effectiveness): Can EvoChecker support dependable adaptation? With this research question we examine whether our approach can identify new effective configurations at runtime.

- RQ5 :

-

(Validation): How does EvoChecker perform compared to random search? Following the standard practice in search-based software engineering (Harman et al. 2012b), with this research question we aim to determine whether our approach performs better than random search.

- RQ6 :

-

(Archive-strategy comparison): How do EvoChecker instances based on different archive updating strategies compare to each other? We used this research question to analyse the impact of various archive updating strategies in the performance of EvoChecker. To this end, we study whether specific strategies improve the quality of a search and/or help identifying faster an effective configuration. We also investigate possible relationships between archive updating strategies and specific adaptation events.

7.2.2 Experimental setup

For the experimental evaluation, we used two self-adaptive software systems from different application domains. The first is the FX service-based system from Sect. 2 and the second is an embedded system from the area of unmanned underwater vehicles (UUVs) adapted from Calinescu et al. (2015), Gerasimou et al. (2014), Gerasimou et al. (2017) and described on our project webpage.

We applied EvoChecker at runtime to the system variants from Table 10, aiming to assess its behaviour for multiple configuration space sizes. As before (cf. Table 6), the column ‘Details’ shows for the UUV system the number of sensors, their measurement rates and the UUV speed, while for the FX system the number of third-party implementations for each service. The column ‘Size’ reports the size of the configuration space that an exhaustive search would need to explore using two-decimal precision for the real parameters and probability distributions of the probabilistic model template (cf. Table 5). Finally, the column ‘T\(_{\text {run}}\)’ shows the average time required by EvoChecker to evaluate a configuration on a 2.6GhZ Intel Core i5 Macbook Pro computer with 16GB memory, running Mac OSX 10.9.

To evaluate EvoChecker at runtime, we identified several changes that can cause each UUV and FX system variant to adapt. These changes cover a wide range of the possible values that the environment parameters of each system variant can take (Table 12). Due to these changes, the systems experience problems while providing service (e.g., service degradation, violation of QoS requirements) and therefore are forced to adapt. Sensors in the UUV variants, beyond normal behaviour, encounter periods of unexpected changes (C1–C12) during which their rates change dramatically, including sensor failures and recovery from these failures, and significant variation in measurement rates. Changes C1–C13 in FX comprise sudden minor or significant increase in response time and decline in reliability of service implementations, and complete failure or recovery of service implementations. For instance, change C7 in FX_Small represents a deviation from the nominal reliability of the first and third service implementations of the \( Market\_Watch \) service (cf. Fig. 2): before the change, \(r_{11}=0.98\) and \(r_{13}=0.993\); and, after the change, \(r_{11}=0.89\) and \(r_{13}=0.93\). This is a significant change because the FX system cannot meet the reliability requirement (Table 1) using only the degraded service implementations (i.e., \(x_{11}\!=\!1, x_{12}\!=\!0, x_{13}\!=1\!\)). Instead, a valid configuration should always realise the functionality of the \( Market\_Watch \) service by selecting its second service implementation (thus setting \(x_{12}=1\)).Footnote 8 We make available the EvoChecker templates for the changes in Table 12 on our project webpage.

Answering research question RQ1 entails making the configuration space size tractable for exhaustive search. Searching exhaustively through the configuration space of the UUV_Medium variant (which has the smallest configuration space and the shortest average time per evaluation) would take an estimated \(2.19\cdot 10^{13}\) h (given the \(1.04\cdot 10^{19}\) configurations to analyse, and a mean analysis time of 0.0076 s). Thus, we used the UUV_Medium variant but disabled three of its sensors, leaving just under \(2.56\cdot 10^9\) possible configurations. We also disregarded the adaptation time, since it is too large for exhaustive search. For the same reason, we performed this assessment on a subset of the UUV changes (i.e. C1, C3, C4); these changes correspond to a representative sample of the UUV changes from Table 12.

For using EvoChecker at runtime, we define the QoS optimisation objectives as a \( loss \) function; see Eq. (10) in Sect. 5.2. We used the \( loss \) function from Example 5 with \(w_1=0.2\), \(w_2=0.004\) and \(w_3=0.016\) for the FX system variants, and a similarly defined loss function (provided on our project webpage) for the UUV system variants.

Since EvoChecker at runtime employs a single optimisation objective, we employ an elitist single objective GA. Recall that an elitist GA propagates the best individuals to the next generation. With elitism, if the GA discovers the best solution, then the entire population will eventually converge to this solution (Coello et al. 2006).

To investigate whether different archive updating strategies (cf. Def. 5) can improve the efficiency of EvoChecker, we realised the strategies from (13)–(16). To this end, we created four different GA variants, each enhanced with one of the following archive updating strategies:

- PGA::

-

a prohibitive strategy (13) that does not keep any configurations in the archive. Thus, a search for a new configuration starts without using any prior knowledge.

- CRGA::

-

a complete recent strategy (14) that puts in the archive the entire population from the current adaptation step and discards all previous configurations.

- LRGA::

-

a limited recent strategy (15) that stores in the archive the two best configurations (i.e. \(x=2\)) from the current adaptation step, and removes all the other configurations from the archive.

- LDGA::

-

a limited deep strategy (16) that accumulates in the archive the two best configurations (i.e. \(x=2\)) from all previous adaptation steps. If the archive size exceeds the initial size of the GA population, then a random selection is carried out to select the configurations that will comprise the seed for the next search.

7.2.3 Evaluation methodology

We adopted the established procedure in search-based software engineering for the analysis of optimisation algorithms (Arcuri and Briand 2011). Thus, for each system variant from Table 10 we carried out 30 independent runs per optimisation algorithm using the adaptation events (changes) in Table 12 sequentially. We assume that the time interval between successive changes is long enough that enables running EvoChecker. All algorithms used a population of 50 individuals. The GAs used single-point crossover with probability \(p_c=0.9\) and single-point mutation with probability \(p_m=1/n_{k}\), where \(n_{k}\) is the number of system configuration parameters from the configuration space Cfg. Each algorithm was executed for 5000 iterations. When no improvement was detected for 1000 successive iterations (i.e. 20% of the allocated evolution time), the evolution terminated early. The solution corresponding to the best individual from the last population was used to reconfigure the system. After normalisation, and for ease of presentation, we assigned the maximum loss of 1.00 for each event in which an algorithm failed to find a configuration satisfying the QoS constraints of the system. We used the loss corresponding to the selected configuration as a quality indicator to compare the effectiveness of the optimisation algorithms and answer research questions RQ4–RQ6. Furthermore, we combined these quality results with data about the number of iterations executed by the algorithms (for all 30 independent runs) to assess their ability both to identify good solutions and converge (or stagnate, if no effective solution was found within the available time).

Following the standard advice for assessing the performance of optimisation algorithms, we used inferential statistical tests (Arcuri and Briand 2011; Coello et al. 2006). First, we analysed the normality of data and confirmed its deviation from the normal distribution using the Shapiro–Wilk test. Then, we used the non-parametric tests Mann–Whitney and Kruskal–Wallis with 95% confidence level (\(\alpha \) = 0.05) to analyse the results without making assumptions about the data distribution or the homogeneity of its variance. Also, to compare the EvoChecker instantiations with different archive updating strategies, we ran a post-hoc analysis using Dunn’s pairwise test, controlling the family-wise error rate using the Bonferroni correction \(p_{ crit }\!=\!\alpha /k\), where k is the number of comparisons.

Finally, when statistical significance exists, we establish the practical importance of the observed effect. Therefore, we used the Varga and Delaney’s effect size measure (Vargha and Delaney 2000; Arcuri and Briand 2011). When comparing algorithms A and B, this measure returns the probability \(A_{AB} \in [0,1]\) that algorithm A will yield better results than algorithm B. For instance, if \(A_{AB}=0.5\) then the algorithms are equivalent, while if \(A_{AB}=0.8\) then algorithm A will achieve better results 80% of the time.

7.2.4 Results and discussion

RQ4 (Effectiveness) We begin the presentation of our results by examining whether EvoChecker at runtime can identify new effective configurations in response to unexpected environment and/or system events. To answer this research question we performed two types of experiments.

First, we used the UUV_Medium system variant and assessed the effectiveness of the selected configurations using PGA compared to those generated by exhaustive search. We reduced the configuration space of UUV_Medium, using the process described in Sect. 7.2.2, to make it tractable for exhaustive search. For all the events, the EvoChecker with PGA found configurations satisfying system QoS constraints R1 and R2 (cf. Table 11) with average loss not more than 9% of the optimal loss given by the configurations found by exhaustive search. Both time and memory overheads incurred by exhaustive search were approximately two orders of magnitude larger than PGA.

For the second experiment, we analysed how the events in FX_Small system variant from Table 12 affected its compliance with QoS requirement R1 (i.e. workflow reliability) and varied the loss before (using the current configuration) and after (using the new configuration) each adaptation. Figure 9 depicts a typical run (timeline) of these changes and the impact of the configurations selected by the no-archive version of EvoChecker (i.e. PGA) in workflow reliability and loss.

Irrespective of the change in environment state, either being a serious decrease in workflow reliability or a moderate increase in response time, EvoChecker always managed to successfully self-adapt the system by identifying configurations that met QoS constraint R1 (cf. Table 1). Furthermore, EvoChecker maintained a balanced system loss of approximately 0.845. Given that searching exhaustively the configuration space is unfeasible and that the average running time for evaluating a single configuration is less than 1s (cf. Table 10), these experimental results indicate that our approach can support system adaptation.

We also analysed changes C3, C5, C7 and C13, in which the system exhibited a significant decrease in workflow reliability, caused by decrease in reliability of the service implementations used at various points in time. Due to this abrupt change, the currently used service implementations failed to meet requirement R1 and EvoChecker was invoked to carry out the search for a new configuration. As an example, for change C13, the system experienced a serious disruption in about 50% of the available service implementations. As a result, workflow reliability fell to only 72%. The newly found configuration restored compliance with R1 (i.e. approximately 98.5%), but increased the probabilities of using more expensive implementations, yielding a significantly higher expected loss of 0.935.

Another interesting observation concerns change C10 (cf. Table 12) in which two previously under-performing service implementations (those with increased response time \(t_{41}\) and \(t_{42}\)) recover. Although no requirement violation occurs, i.e. workflow reliability R1 is not affected by this change, the system loss corresponding to the new configuration selected by EvoChecker is slightly higher compared to the configuration before the change. Since for each change PGA starts a new search and does not use any knowledge gained from previous adaptation steps, this is expected. As we explain in research question RQ6, this issue can be addressed using one of the other archive updating strategies which seed a new GA search with configurations from the archive.

Variation in workflow reliability and system loss of the FX_Small variant due to the changes from Table 12 and system adaptation using EvoChecker with no archive use (i.e. PGA)

RQ5 (Validation). To answer this research question we compared the no-archive version of incremental EvoChecker (i.e. PGA) with random search (RS). For conciseness, we include a representative sample of reconfiguration events. Thus, Figs. 10 and 11 show the evolution of the algorithms every 500 iterations (i.e., 10 generations) for the FX_Small variant for changes C4, C7, C11 and C13, and for the UUV_Large variant for changes C7 and C12, respectively. When an algorithm terminated early, we propagated the final loss to the remaining evolution stages (i.e. until the 5000th iteration). An asterisk ‘\(*\)’ next to each algorithm’s boxplot denotes when the algorithm terminated for all 30 runs.

For both variants of the FX and UUV systems and for all 25 events, the EvoChecker employing PGA identified configurations that met QoS requirements and achieved lower loss than RS. We obtained statistical significance (p value < 0.05) using the Mann–Whitney test for all system variants and for all events, with the p value being in the range [1.689E−02, 1.669E−11]. In fact, as the size of the system increases (cf. Table 10), PGA’s ability to outperform RS becomes more evident.

We also measured the improvement magnitude using the \(A_{\text {PGA,RS}}\) effect size metric (Vargha and Delaney 2000). For all evaluated events and evolution stages, the effect size was large with \(A_{\text {PGA,RS}} \in [0.696, 1.00]\). Thus, PGA achieved better results than RS at least 69.6% of the time, while in some events, especially for the larger system variants FX_Medium and UUV_Large, the dominance reached 100%.

Another interesting finding concerns the evolution of the populations of these algorithms. Despite the overall performance difference, at the beginning of the evolution, i.e. 200–300 iterations, both the p value and effect size are on the lower end of their respective value ranges. During these iterations, PGA operates pseudo-randomly and the impact of its selection and reproduction mechanisms, i.e. crossover and mutation, are not strong yet. As the evolution progresses, the performance gap between PGA and RS increases, reaching eventually the upper end of the p value and effect size ranges.

Considering these results, we conclude that EvoChecker instantiated with GA-based algorithm that uses a prohibitive selection strategy (PGA) significantly outperforms random search (RS) with large effect size in all adaptation steps and for all FX and UUV system variants. Thus, the use of evolutionary search-based approaches produces configuration with better quality.