Abstract

We consider the thresholding scheme, a time discretization for mean curvature flow introduced by Merriman et al. (Diffusion generated motion by mean curvature. Department of Mathematics, University of California, Los Angeles 1992). We prove a convergence result in the multi-phase case. The result establishes convergence towards a weak formulation of mean curvature flow in the BV-framework of sets of finite perimeter. The proof is based on the interpretation of the thresholding scheme as a minimizing movements scheme by Esedoğlu et al. (Commun Pure Appl Math 68(5):808–864, 2015). This interpretation means that the thresholding scheme preserves the structure of (multi-phase) mean curvature flow as a gradient flow w. r. t. the total interfacial energy. More precisely, the thresholding scheme is a minimizing movements scheme for an energy functional that \(\Gamma \)-converges to the total interfacial energy. In this sense, our proof is similar to the convergence results of Almgren et al. (SIAM J Control Optim 31(2):387–438, 1993) and Luckhaus and Sturzenhecker (Calculus Var Partial Differ Equ 3(2):253–271, 1995), which establish convergence of a more academic minimizing movements scheme. Like the one of Luckhaus and Sturzenhecker, ours is a conditional convergence result, which means that we have to assume that the time-integrated energy of the approximation converges to the time-integrated energy of the limit. This is a natural assumption, which however is not ensured by the compactness coming from the basic estimates.



1 Introduction

1.1 Context

The thresholding scheme, a time discretization for mean curvature flow introduced by Merriman et al. [26], has become a very popular scheme because of its conceptual and practical simplicity; see Algorithm 1 for its definition in a more general context. It has a natural extension from the two-phase case to the multi-phase case with triple junctions in local equilibrium, which has been well known for some time in the case of equal surface tensions [27]. Multi-phase mean-curvature flow models the slow relaxation of grain boundaries in polycrystals (called grain growth), where each grain corresponds to a phase. Elsey et al. have shown that (a modification of) the thresholding scheme is practical for handling a large number of grains over time intervals long enough to extract significant statistics of the coarsening (also called aging) of the grain configuration [11–13]. In grain growth, the surface tension (and the mobility) of a grain boundary depends both on the misorientation between the crystal lattices of the two adjacent grains and on the orientation of its normal. In other words, the surface tension \(\sigma _{ij}\) of an interface is indexed by the pair (i, j) of phases it separates, and it is anisotropic. Esedoğlu and the second author have shown in [14] that the thresholding scheme can be extended to handle the first of these two dependencies in a very general way, including in particular the most popular Ansatz for a misorientation-dependent grain boundary energy [33]. How to handle general anisotropies in the framework of the thresholding scheme, even in the case of two phases, does not yet seem to be completely settled; see however [5] and [19]. Hence, in this work, we focus on the isotropic case and ignore mobilities, but attempt to be as general as [14] when it comes to the dependence of \(\sigma _{ij}\) on the pair (i, j).

In the two-phase case, the convergence of the thresholding scheme is well understood: two-phase mean curvature flow satisfies a geometric comparison principle, and it is easy to see that the thresholding scheme preserves this structure. Partial differential equations and geometric motions that allow for a comparison principle can typically even be characterized by comparison with very simple solutions. This opens the way for the definition of a very robust notion of weak solutions, namely what bears the somewhat misleading name of viscosity solutions. If one allows for what the experts know as fattening, two-phase mean-curvature flow is well-posed in this framework [16]. Barles and Georgelin [4] and Evans [15] proved independently that the thresholding scheme converges to mean-curvature flow in this sense. Hence the main novelty of this work is a (conditional) convergence result in the multi-phase case, where a geometric comparison principle is clearly absent. However, the result is also of some interest in the two-phase case, since it establishes convergence even in situations where the viscosity solution features fattening. Together with Drew Swartz [22], the first author uses similar arguments to treat another version of mean curvature flow that does not allow for a comparison principle even in the two-phase case, namely volume-preserving mean-curvature flow. They prove (conditional) convergence of a scheme introduced by Ruuth and Wetton in [35]. We also draw the reader’s attention to the recent work of Mugnai et al. [32], where they prove a (conditional) convergence result as in [24] for a modification of the scheme in [2, 24] to volume-preserving mean curvature flow. Note that, because the convergence is only conditional, our result does not provide a long-time existence result for (weak solutions of) multi-phase mean curvature flow. Short-time existence results for smooth solutions go back to the work of Bronsard and Reitich [7]. Mantegazza et al. [25] and Schnürer et al. [38] were able to construct long-time solutions close to a self-similar singularity.

For the present work, the structural substitute for the comparison principle is the gradient flow structure. Folklore says that mean curvature flow, also in its multi-phase version, is the gradient flow of the total interfacial energy. It is by now well appreciated that the gradient flow structure also requires fixing a Riemannian structure, that is, an inner product on the tangent space, which here is given by the space of normal velocities. Mean curvature flow is then the gradient flow with respect to the \(L^2\)-inner product, in the case of grain growth weighted by grain-boundary-dependent and anisotropic mobilities. Loosely speaking, Brakke’s existence proof in the framework of varifolds [6] is based on this structure in the sense that the solution monitors weighted versions of the interfacial energy. Recently, Kim and Tonegawa [21] improved this work by deriving the continuity of the volumes of the grains in the case of grain growth with equal surface tensions, which ensures that the solution is non-trivial. Ilmanen’s convergence proof of the Allen–Cahn equation to mean curvature flow also makes use of the gradient flow structure [18]; the Allen–Cahn equation is a diffuse interface approximation of computational relevance in the world of phase-field models. It was only discovered recently that the thresholding algorithm also preserves this gradient flow structure [14]. In that paper, this structure was taken as a guiding principle to extend the scheme to surface tensions \(\sigma _{ij}\) and mobilities that depend on the phase pair (i, j). In this paper, we take the gradient flow structure, which we make more precise in the following paragraphs, as a guiding principle for the convergence proof.

On the abstract level, every gradient flow has a natural discretization in time, which comes in the form of a sequence of variational problems: the configuration \(\Sigma ^n\) at time step n is obtained by minimizing \(\frac{1}{2}\mathrm{dist}^2(\Sigma , \Sigma ^{n-1}) +h E(\Sigma )\), where \(\Sigma ^{n-1}\) is the configuration at the preceding time step, h is the time-step size and \(\mathrm{dist}\) denotes the induced distance on the configuration space endowed with the Riemannian structure. In the Euclidean case, the Euler–Lagrange equation (i. e. the first variation) of this variational problem yields the implicit (or backwards) Euler scheme. This variational scheme was named “minimizing movements” by De Giorgi [10]. It has recently gained popularity because it allows one to interpret diffusion equations as gradient flows of an entropy functional w. r. t. the Wasserstein metric, an otherwise unrelated problem ([20]; see [3] for the abstract framework). However, the formal Riemannian structure in the case of mean curvature flow is completely degenerate: \(\mathrm{dist}^2(\Sigma ,{\tilde{\Sigma }})\), defined as the infimal energy of curves in configuration space that connect \(\Sigma \) to \({\tilde{\Sigma }}\), vanishes identically, cf. [28].
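The Euclidean statement can be checked directly on a toy example. The sketch below is our own illustration, and the function names are hypothetical. It takes the quadratic energy \(E(x)=\frac{1}{2}\lambda x^2\) on the real line and computes one minimizing movements step by ternary search on the convex objective \(\frac{1}{2}(x-x^{n-1})^2+hE(x)\). The result is compared with the implicit Euler update \(x^n=x^{n-1}/(1+h\lambda )\), which solves \((x^n-x^{n-1})/h=-E'(x^n)\).

```python
def mm_step(x_prev, h, lam, lo=-10.0, hi=10.0, iters=200):
    """One minimizing movements step for E(x) = lam * x**2 / 2:
    minimize 0.5 * (x - x_prev)**2 + h * E(x) by ternary search (the objective is convex)."""
    def objective(x):
        return 0.5 * (x - x_prev) ** 2 + h * 0.5 * lam * x * x
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if objective(m1) < objective(m2):
            hi = m2          # the minimizer cannot lie to the right of m2
        else:
            lo = m1          # the minimizer cannot lie to the left of m1
    return 0.5 * (lo + hi)

def implicit_euler_step(x_prev, h, lam):
    """Backward Euler for x' = -E'(x) = -lam * x, i.e. (x - x_prev) / h = -lam * x."""
    return x_prev / (1.0 + h * lam)

x_mm = x_ie = 1.0
for _ in range(5):
    x_mm = mm_step(x_mm, h=0.1, lam=3.0)
    x_ie = implicit_euler_step(x_ie, h=0.1, lam=3.0)
print(x_mm, x_ie)   # the two sequences agree up to the search tolerance
```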

Hence, when formulating a minimizing movements scheme for mean curvature flow, one has to come up with a proxy for \(\mathrm{dist}^2(\Sigma ,{\tilde{\Sigma }})\). This was achieved independently by Almgren et al. [2] on one side of the Atlantic and by Luckhaus and Sturzenhecker [24] on the other. For \(\Sigma =\partial \Omega \) and \({\tilde{\Sigma }} ={\partial {\tilde{\Omega }}}\), the expression \(2\int _{\Omega \triangle {\tilde{\Omega }}}d(x,\Sigma )dx\) is one possible substitute for \(\mathrm{dist}^2(\Sigma ,{\tilde{\Sigma }})\) in the minimizing movements scheme, where \(d(x,\Sigma )\) denotes the unsigned distance of the point x to the surface \(\Sigma \). It is easy to see that, to leading order in the proximity of \({\tilde{\Omega }}\) to \(\Omega \), this expression behaves like the metric tensor \(\int _{\Sigma }V^2dx\), where V is the normal velocity leading from \(\Omega \) to \({\tilde{\Omega }}\) in one unit of time. Their work makes this point by proving that this minimizing movements scheme converges to mean curvature flow. To be more precise, they consider a time-discrete solution \(\{\Omega ^n\}_n\) of the minimizing movements scheme, interpolated as a piecewise constant function \(\Omega ^h\) in time. They assume that, for a subsequence \(h\downarrow 0\), the time-dependent sets \(\Omega ^h\) converge to \(\Omega \) in a stronger sense than the given compactness provides. Almgren et al. assume that \(\Sigma ^h(t)\) converges to \(\Sigma (t)\) in the Hausdorff distance and show that \(\Sigma \) solves the mean curvature flow equation in the above-mentioned viscosity sense. The argument was later substantially simplified by Chambolle and Novaga in [9]. Luckhaus and Sturzenhecker start from a weaker convergence assumption than the one in [2]: they assume that, for the finite time horizon T under consideration, \(\int _0^T|\Sigma ^h(t)|dt\) converges to \(\int _0^T|\Sigma (t)|dt\). Then they show that \(\Omega \) evolves according to a weak formulation of mean curvature flow, using a distributional formulation of mean curvature that is available for sets of finite perimeter; see Definition 1.1 for the multi-phase case of this formulation. Incidentally, weak-strong uniqueness of this formulation does not seem to be understood, even in the two-phase case. Both are only conditional convergence results. The natural estimates coming from the minimizing movements scheme are the uniform boundedness of \(\sup _n|\Sigma ^n|\) and \(\sum _{n}2\int _{\Omega ^n\triangle \Omega ^{n+1}}d(x,\Sigma ^n)dx\). They are enough to ensure \(\int _0^T|\Omega ^h(t)\triangle \Omega (t)|dt \rightarrow 0\) and \(\int _0^T|\Sigma (t)|dt\le \liminf \int _0^T|\Sigma ^h(t)|dt\). However, they are not sufficient to yield \(\limsup \int _0^T|\Sigma ^h(t)|dt\le \int _0^T|\Sigma (t)|dt\), let alone the convergence of \(\Sigma ^h(t)\) to \(\Sigma (t)\) in the Hausdorff distance. Our result will be a conditional convergence result very much in the same sense as the one in [24]. However, it turns out that our convergence result for the thresholding scheme, in contrast to the one of [24], requires no regularity theory for (almost) minimal surfaces. It is therefore not restricted to low spatial dimensions \(d\le 7\). Although the time discretization scheme in [2, 24] seems rather academic from a computational point of view, it has been adapted for numerical simulations by Chambolle in [8]. Nevertheless, even in that variant, one has to compute a (signed) distance function and solve a convex optimization problem in each step.
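The leading-order claim can be sanity-checked on a shrinking disk. This is our own toy computation, and the function names are hypothetical. Let \(\Omega \) be a disk of radius r in the plane and \({\tilde{\Omega }}\) the concentric disk of radius \(r-\delta \). Then \(V=\delta \) is constant and \(\int _{\Sigma }V^2=2\pi r\delta ^2\). The sketch evaluates \(2\int _{\Omega \triangle {\tilde{\Omega }}}d(x,\Sigma )dx\) in polar coordinates by the midpoint rule. Its exact value is \(2\pi r\delta ^2-\frac{4\pi }{3}\delta ^3\), so the ratio of the two quantities is \(1-\frac{2\delta }{3r}\rightarrow 1\).

```python
import math

def ls_proxy_disk(r, delta, n=10000):
    """2 * integral over the annulus r - delta < |x| < r of the distance to the circle |x| = r,
    computed in polar coordinates (area element 2*pi*s ds) with the midpoint rule."""
    ds = delta / n
    total = 0.0
    for k in range(n):
        s = r - delta + (k + 0.5) * ds          # radius of the k-th thin ring
        total += (r - s) * 2.0 * math.pi * s * ds
    return 2.0 * total

def metric_tensor_disk(r, delta):
    """Integral over the circle |x| = r of V^2 for the constant normal velocity V = delta."""
    return 2.0 * math.pi * r * delta ** 2

for delta in (0.1, 0.01, 0.001):
    print(delta, ls_proxy_disk(1.0, delta) / metric_tensor_disk(1.0, delta))
```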

Following [14], we now explain in which sense the thresholding scheme may be considered as a minimizing movements scheme for mean curvature flow. Each step in Algorithm 1 is equivalent to minimizing a functional of the form \(E_h(\chi ) - E_h(\chi -\chi ^{n-1})\), where the functional \(E_h\), defined below in (3), is an approximation to the total interfacial energy. It is a little more subtle to see that the second term, \(-E_h(\chi ^n-\chi ^{n-1})\), is comparable to the metric tensor \(\int _{\Sigma }V^2dx\). The \(\Gamma \)-convergence of functionals of the type (3) to the area functional has a long history; for the two-phase case, cf. Alberti and Bellettini [1] and Miranda et al. [29]. The multi-phase case, also for arbitrary surface tensions, was investigated by Esedoğlu and the second author in [14]. Incidentally, it is easy to see that \(\Gamma \)-convergence of the energy functionals is not sufficient for the convergence of the corresponding gradient flows. Sandier and Serfaty [36] have identified sufficient conditions on both the functional and the metric tensor for this to be true.
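The equivalence can be checked numerically on a toy discretization. The sketch assumes the form \(E_h(u)=\frac{1}{\sqrt{h}}\sum _{i,j}\sigma _{ij}\int u_i\, G_h*u_j\,dx\), in line with [14]. It replaces the torus by a periodic one-dimensional grid with a normalized, symmetric discrete Gaussian, and all names are hypothetical. Because \(\sigma \) and \(G_h\) are symmetric, expanding the quadratic form shows that \(E_h(\chi )-E_h(\chi -\chi ^{n-1})\) equals \(\frac{2}{\sqrt{h}}\sum _i\int \chi _i\,\phi _i\,dx\) minus a constant, with \(\phi _i\) as in the convolution step. Thresholding therefore minimizes it pointwise. The script compares the thresholded partition with random competitors.

```python
import math
import random

def kernel(n, h):
    """Symmetric discrete Gaussian of variance h on n cells of the periodic interval [0,1), summing to 1."""
    w = [math.exp(-(min(k, n - k) / n) ** 2 / (2.0 * h)) for k in range(n)]
    total = sum(w)
    return [wk / total for wk in w]

def conv(f, w):
    """Periodic discrete convolution G_h * f."""
    n = len(w)
    return [sum(w[k] * f[(x - k) % n] for k in range(n)) for x in range(n)]

def energy(u, sigma, w, h):
    """Discrete E_h(u) = h^{-1/2} sum_ij sigma_ij int u_i (G_h * u_j) dx with dx = 1/n."""
    n, P = len(w), len(u)
    Gu = [conv(u_j, w) for u_j in u]
    pairing = sum(sigma[i][j] * sum(a * b for a, b in zip(u[i], Gu[j]))
                  for i in range(P) for j in range(P))
    return pairing / (n * math.sqrt(h))

def movement_functional(chi, prev, sigma, w, h):
    """E_h(chi) - E_h(chi - prev), the functional minimized in each step."""
    diff = [[a - b for a, b in zip(ci, pr)] for ci, pr in zip(chi, prev)]
    return energy(chi, sigma, w, h) - energy(diff, sigma, w, h)

def one_hot(labels, P):
    return [[1.0 if lab == i else 0.0 for lab in labels] for i in range(P)]

def threshold_step(prev, sigma, w):
    """Convolution step (1) and thresholding step (2); ties go to the smallest index."""
    P, n = len(prev), len(w)
    phi = [conv([sum(sigma[i][j] * prev[j][x] for j in range(P)) for x in range(n)], w)
           for i in range(P)]
    return one_hot([min(range(P), key=lambda i: phi[i][x]) for x in range(n)], P)

random.seed(0)
n, P, h = 64, 3, 0.005
sigma = [[0.0, 1.0, 1.3], [1.0, 0.0, 0.8], [1.3, 0.8, 0.0]]
w = kernel(n, h)
prev = one_hot([random.randrange(P) for _ in range(n)], P)
new = threshold_step(prev, sigma, w)
best = movement_functional(new, prev, sigma, w, h)
competitors = [movement_functional(one_hot([random.randrange(P) for _ in range(n)], P),
                                   prev, sigma, w, h) for _ in range(50)]
print(best, min(competitors))   # the thresholded partition is never beaten
```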

Similarly, the approach of Saye and Sethian [37] for multi-phase evolutions can be seen as coming from the gradient flow structure when applied to mean-curvature flow with \(P\) phases. More precisely, it can be understood as a time splitting of an \(L^2\)-gradient flow with an additional phase whose volume is strongly penalized. The first step is the \((P+1)\)-phase gradient flow w. r. t. the total interfacial energy, and the second step is the \((P+1)\)-phase gradient flow w. r. t. the volume penalization (that is, geometrical optics, leading to the Voronoi construction).

1.2 Informal summary of the proof

We now give a summary of the main steps and ideas of the convergence proof. In Sect. 2, we draw consequences from the basic estimate (10) in a minimizing movements scheme, like compactness, Proposition 2.1, coming from a uniform (integrated) modulus of continuity in space, Lemma 2.4, and in time, Lemma 2.5. We also draw the first consequence from the strengthened convergence (8) in the case of equal surface tensions in Proposition 2.2. We strongly advise the reader to familiarize him- or herself with the argument for the modulus of continuity in time, Lemma 2.5, since it is there that the mesoscopic time scale \(\sqrt{h}\) appears for the first time in a simple context before being used in Sect. 4 in a more complex context. In the same vein, the fudge factor \(\alpha \) in the mesoscopic time scale \(\alpha \sqrt{h}\), which will be crucial in Sect. 4, will first be introduced and used in the simple context when estimating the normal velocity V of the limit in Proposition 2.2.

Starting from Sect. 3, we also use the Euler–Lagrange equation (34) of the minimizing movements scheme. By Euler–Lagrange equation we understand the first variation w. r. t. the independent variables, as generated by a test vector field \(\xi \). In Sect. 3, we pass to the limit in the energetic part of the first variation, recovering the mean curvature H via the term \(\int _{\Sigma }H\,\xi \cdot \nu =\int _{\Sigma }\nabla \cdot \xi - \nu \cdot \nabla \xi \,\nu \). This amounts to showing that, under our assumption of strengthened convergence (8), the \(\Gamma \)-convergence of the functionals can be upgraded to a distributional convergence of their first variations, cf. Proposition 3.1. It is a classical result credited to Reshetnyak [34] that, under the strengthened convergence of sets of finite perimeter, the measure-theoretic normals also converge, and thus so does the distributional expression for mean curvature. That this convergence of the first variation may also hold when combined with a diffuse interface approximation is known, for instance, in the case of the Ginzburg–Landau approximation of the area functional, see [23]. This approximation is also known by the names of Modica and Mortola, who established its \(\Gamma \)-convergence [30, 31]. In our case, the convergence of the first variations relies on a localization of the ingredients for the \(\Gamma \)-convergence worked out in [14], like the consistency, i. e. the pointwise convergence of these functionals.
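The identity \(\int _{\Sigma }H\,\xi \cdot \nu =\int _{\Sigma }\nabla \cdot \xi - \nu \cdot \nabla \xi \,\nu \) for a closed surface can be sanity-checked on a circle of radius r. There, with the outer normal, the sign convention implicit in the identity gives \(H=1/r\). In the sketch below, the test field \(\xi \) is an arbitrary choice of ours, the helper names are hypothetical, and derivatives are taken by central differences. For this \(\xi \), \(\nabla \cdot \xi = 3x\). By the divergence theorem, both sides therefore equal \(3\cdot 0.3\cdot \pi \cdot 0.7^2/0.7=0.63\pi \) for the circle of radius 0.7 centred at (0.3, -0.2).

```python
import math

def xi(x, y):
    """A smooth test vector field (arbitrary choice for this illustration)."""
    return (x * x + math.sin(y), x * y + math.cos(x))

def jacobian(f, x, y, eps=1e-6):
    """J[i][j] = d f_i / d x_j by central differences."""
    fxp, fxm = f(x + eps, y), f(x - eps, y)
    fyp, fym = f(x, y + eps), f(x, y - eps)
    return [[(fxp[i] - fxm[i]) / (2 * eps), (fyp[i] - fym[i]) / (2 * eps)] for i in range(2)]

def both_sides(cx, cy, r, n=2000):
    """Return (int div_Sigma xi, int H xi.nu) over the circle of radius r centred at (cx, cy)."""
    ds = 2 * math.pi * r / n
    lhs = rhs = 0.0
    for k in range(n):
        t = 2 * math.pi * k / n
        nu = (math.cos(t), math.sin(t))                      # outer unit normal
        x, y = cx + r * nu[0], cy + r * nu[1]
        J = jacobian(xi, x, y)
        div = J[0][0] + J[1][1]
        nu_J_nu = sum(nu[i] * J[i][j] * nu[j] for i in range(2) for j in range(2))
        lhs += (div - nu_J_nu) * ds                          # tangential divergence
        v = xi(x, y)
        rhs += (v[0] * nu[0] + v[1] * nu[1]) / r * ds        # H = 1/r for the outer normal
    return lhs, rhs

lhs, rhs = both_sides(0.3, -0.2, 0.7)
print(lhs, rhs, 0.63 * math.pi)
```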

Section 4 constitutes the central and, as we believe, most innovative piece of the paper; we pass to the limit in the dissipation/metric part of the first variation, recovering the normal velocity V via the term \(\int _{\Sigma }V\,\xi \cdot \nu \). In fact, we think of the test-field \(\xi \) as localizing this expression in time and space, and recover the desired limiting expression only up to an error that measures how well the limiting configuration can be approximated by a configuration with only two phases and a flat interface in the space–time patch under consideration; this is measured both in terms of area (leading to a multi-phase excess in the language of the regularity theory of minimal surfaces) and volume, see Proposition 4.1. The main difficulty of recovering the metric term \(\int _{\Sigma }V\,\xi \cdot \nu \) in comparison to recovering the distributional form \(\int _{\Sigma }\nabla \cdot \xi - \nu \cdot \nabla \xi \,\nu \) of the energetic term is that one has to recover both the normal velocity V, which is distributionally characterized by \(\partial _t\chi -V|\nabla \chi |=0\) on the level of the characteristic function \(\chi \), and the (spatial) normal \(\nu \). In short: one has to pass to the limit in a product. More precisely, the main difficulty is that there is no good bound on the discrete normal velocity V at hand on the level of the microscopic time scale h; only on the level of the above-mentioned mesoscopic time scale \(\sqrt{h}\), such an estimate is available. This comes from the fact that the basic estimate yields control of the time derivative of the characteristic function \(\chi \) only when mollified on the spatial scale \(\sqrt{h}\) in \(u=G_h*\chi \). 
The main technical ingredient to overcome this lack of control in Proposition 4.1 is presented in Lemma 4.2 in the two-phase case and in Lemma 4.5 in the general setting: if one of the two (spatial) functions \(u,\tilde{u}\) is not too far from being strictly monotone in a given direction (a consequence of the control of the tilt excess, see Lemma 4.4), then the spatial \(L^1\)-difference between the level sets \(\chi =\{u>\frac{1}{2}\}\) and \(\tilde{\chi }=\{\tilde{u}>\frac{1}{2}\}\) is controlled by the squared \(L^2\)-difference between u and \(\tilde{u}\).

In Sect. 5, we combine the results of the previous two sections, yielding the weak formulation of \(V=H\) on some space–time patch up to an error expressed in terms of the above-mentioned (multi-phase) tilt excess of the limit on that patch. Complete localization in time and a partition of unity in space allow us to assemble these local statements into \(V=H\) globally, up to an error expressed by the time integral of the sum of the tilt excess over the spatial patches of finite overlap. De Giorgi’s structure theorem for sets of finite perimeter (cf. Theorem 4.4 in [17]) is adapted to a multi-phase situation but used only for a fixed time slice. It implies that the error expression can be made arbitrarily small by sending the length scale of the spatial patches to zero.

1.3 Notation

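We denote by

$$\begin{aligned} G_h(z) := \frac{1}{(2\pi h)^{d/2}} \exp \left( -\frac{|z|^2}{2h}\right) \end{aligned}$$

the Gaussian kernel of variance h. Note that \(G_{2t}(z)\) is the fundamental solution to the heat equation and thus

$$\begin{aligned} \partial _h G_h = \frac{1}{2}\Delta G_h . \end{aligned}$$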

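We recall some basic properties, such as the normalization, non-negativity, boundedness and the factorization property:

$$\begin{aligned} \int G_h(z)\,dz = 1,\qquad G_h\ge 0,\qquad G_h(z)\le \frac{1}{(2\pi h)^{d/2}},\qquad G_h(z) = G^1_h(z_1)\,G^{d-1}_h(z_2,\ldots ,z_d), \end{aligned}$$

where \(G^1\) denotes the 1-dimensional and \(G^{d-1}\) the \((d-1)\)-dimensional Gaussian kernel. Let us also mention the semi-group property

$$\begin{aligned} G_s*G_t = G_{s+t} \quad \text {for } s,t>0. \end{aligned}$$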
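Thanks to the factorization, these properties can be checked numerically in one dimension. The sketch below is an illustration only, and the helper names are hypothetical. It evaluates the normalization and the semi-group property \(G_s*G_t=G_{s+t}\) at a sample point by quadrature. It also checks the relation \(\partial _hG_h=\frac{1}{2}\Delta G_h\), which follows from \(G_{2t}\) being the heat kernel, by finite differences.

```python
import math

def G(h, z):
    """1D Gaussian kernel of variance h."""
    return math.exp(-z * z / (2.0 * h)) / math.sqrt(2.0 * math.pi * h)

def integrate(f, a, b, n=20000):
    """Midpoint rule on [a, b]; very accurate for rapidly decaying smooth integrands."""
    dz = (b - a) / n
    return sum(f(a + (k + 0.5) * dz) for k in range(n)) * dz

s, t, x = 0.3, 0.5, 0.7
mass = integrate(lambda z: G(s, z), -20.0, 20.0)                          # normalization
semigroup_lhs = integrate(lambda y: G(s, x - y) * G(t, y), -20.0, 20.0)   # (G_s * G_t)(x)
semigroup_rhs = G(s + t, x)

eps = 1e-4
dG_dh = (G(s + eps, x) - G(s - eps, x)) / (2 * eps)                       # derivative in the variance
laplace = (G(s, x + eps) - 2 * G(s, x) + G(s, x - eps)) / eps ** 2        # second derivative in z
print(mass, semigroup_lhs - semigroup_rhs, dG_dh - 0.5 * laplace)
```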

Throughout the paper, we will work on the flat torus \({[0,\Lambda )^d}\). The thresholding scheme for multiple phases, introduced in [14], for arbitrary surface tensions \(\sigma _{ij}\) and mobilities \(\mu _{ij}=1/\sigma _{ij}\) is the following.

Algorithm 1

Given the partition \(\Omega ^{n-1}_1,\ldots ,\Omega ^{n-1}_P\) of \({[0,\Lambda )^d}\) at time \(t=(n-1)h\), obtain the new partition \(\Omega ^n_1,\ldots ,\Omega ^n_P\) at time \(t=nh\) by:

1. Convolution step:

$$\begin{aligned} \phi _i := G_h*\left( \sum _{j=1}^P\sigma _{ij}\mathbf {1}_{\Omega ^{n-1}_j}\right) . \end{aligned}$$(1)

2. Thresholding step:

$$\begin{aligned} \Omega ^n_i := \left\{ x\in {[0,\Lambda )^d}:\phi _i(x) < \phi _j(x) \text { for all } j\ne i \right\} . \end{aligned}$$(2)
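The following is a minimal, unoptimized sketch of Algorithm 1 on a uniform grid of the unit torus \([0,1)^2\), i. e. \(\Lambda =1\) and \(d=2\). The grid size, time step and initial data are our own choices, the helper names are hypothetical, and phases are numbered from 0 in the code. The convolution uses the factorization of \(G_h\) into one-dimensional kernels, and the discrete kernel is renormalized to sum to one. If \(x\in \Omega _k\), then \(\sum _j\sigma _{ij}\mathbf {1}_{\Omega _j}(x)=\sigma _{ik}\), so the function convolved in (1) is simply \(\sigma _{i,\mathrm{label}(x)}\). The strict inequality in (2) leaves ties unassigned; here they are broken in favour of the smaller index.

```python
import math

def periodic_gaussian_1d(n, h):
    """Discrete 1D Gaussian of variance h on n cells of the unit circle, renormalized to sum to 1."""
    w = [math.exp(-(min(k, n - k) / n) ** 2 / (2.0 * h)) for k in range(n)]
    total = sum(w)
    return [wk / total for wk in w]

def convolve_rows(field, w):
    """Periodic convolution of every row of a square array with the 1D kernel w."""
    n = len(w)
    return [[sum(w[k] * row[(x - k) % n] for k in range(n)) for x in range(n)] for row in field]

def transpose(field):
    return [list(col) for col in zip(*field)]

def gaussian_blur(field, w):
    # G_h factorizes into 1D Gaussians: convolve along x, then along y.
    return transpose(convolve_rows(transpose(convolve_rows(field, w)), w))

def thresholding_step(labels, sigma, h):
    """One step of Algorithm 1 on an n x n grid; labels[y][x] is the phase index at (x, y)."""
    n, P = len(labels), len(sigma)
    w = periodic_gaussian_1d(n, h)
    phi = []
    for i in range(P):
        # Convolution step (1): sum_j sigma_ij 1_{Omega_j}(x) = sigma[i][labels[y][x]]
        field = [[sigma[i][labels[y][x]] for x in range(n)] for y in range(n)]
        phi.append(gaussian_blur(field, w))
    # Thresholding step (2): each grid point joins the phase with the smallest phi_i
    return [[min(range(P), key=lambda i: phi[i][y][x]) for x in range(n)] for y in range(n)]

n, h = 48, 0.02
sigma = [[0.0, 1.0], [1.0, 0.0]]                    # two phases with sigma_12 = 1
labels = [[1 if (x / n - 0.5) ** 2 + (y / n - 0.5) ** 2 < 0.3 ** 2 else 0
           for x in range(n)] for y in range(n)]    # a disk of phase 1
areas = [sum(map(sum, labels))]                     # number of grid cells in phase 1
for _ in range(3):
    labels = thresholding_step(labels, sigma, h)
    areas.append(sum(map(sum, labels)))
print(areas)
```

For two phases, this step reduces to the original scheme of Merriman et al. [26]: phase 1 is kept where \(G_h*\chi _1>\frac{1}{2}\), and the disk shrinks. The grid has to resolve \(\sqrt{h}\) by several cells. Otherwise the interface gets pinned to the grid, a known artifact of thresholding on coarse grids.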

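We will denote the characteristic functions of the phases \(\Omega ^n_i\) at the \(n\text {th}\) time step by \(\chi _i^n\) and interpolate these functions piecewise constantly in time, i. e.

$$\begin{aligned} \chi ^h(t) := \chi ^n = (\chi ^n_1,\ldots ,\chi ^n_P) \quad \text {for } t\in [nh,(n+1)h). \end{aligned}$$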

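As in [14], we define the approximate energies

$$\begin{aligned} E_h(\chi ) := \frac{1}{\sqrt{h}}\sum _{i,j}\sigma _{ij}\int \chi _i\, G_h*\chi _j\,dx \end{aligned}$$(3)

for admissible measurable functions:

$$\begin{aligned} \chi :{[0,\Lambda )^d}\rightarrow [0,1]^P \quad \text {with} \quad \sum _{i=1}^P\chi _i = 1 \text { a. e.} \end{aligned}$$(4)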

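Here and in the sequel, \(\int dx\) is short for \(\int _{{[0,\Lambda )^d}}dx\), whereas \(\int dz\) is short for \(\int _{\mathbb {R}^d} dz\). The minimal assumption on the matrix of surface tensions \(\{\sigma _{ij}\}\), next to the obvious

$$\begin{aligned} \sigma _{ij} = \sigma _{ji} > 0 \text { for } i\ne j, \qquad \sigma _{ii} = 0, \end{aligned}$$

is the following triangle inequality:

$$\begin{aligned} \sigma _{ij} \le \sigma _{ik} + \sigma _{kj} \quad \text {for all } i,\, j,\, k. \end{aligned}$$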

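It is known (e. g. [14]) that, under the conditions above, these energies \(\Gamma \)-converge w. r. t. the \(L^1\)-topology to the optimal partition energy given by

$$\begin{aligned} E(\chi ) := c_0\sum _{i,j}\sigma _{ij}\,\frac{1}{2}\int \left( \left| \nabla \chi _i \right| + \left| \nabla \chi _j \right| - \left| \nabla (\chi _i+\chi _j) \right| \right) \end{aligned}$$

for admissible \(\chi \):

$$\begin{aligned} \chi :{[0,\Lambda )^d}\rightarrow \{0,1\}^P \quad \text {with} \quad \sum _{i=1}^P\chi _i = 1 \text { a. e.} \end{aligned}$$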

The constant \(c_0\) is given by

$$\begin{aligned} c_0 := \frac{1}{\sqrt{2\pi }}. \end{aligned}$$

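For our purpose, we ask the matrix of surface tensions \(\sigma \) to satisfy a strict triangle inequality:

$$\begin{aligned} \sigma _{ij} < \sigma _{ik} + \sigma _{kj} \quad \text {for pairwise different } i,\, j,\, k. \end{aligned}$$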

We recall the minimizing movements interpretation from [14], which is easy to check. The combination of convolution and thresholding step in Algorithm 1 is equivalent to solving the following minimization problem:

$$\begin{aligned} \chi ^n = \mathop {\mathrm{arg\,min}}_{\chi } \left\{ E_h(\chi ) - E_h(\chi -\chi ^{n-1})\right\} , \end{aligned}$$(5)

where \(\chi \) runs over (4). The proof will mostly be based on the interpretation (5). It uses the original form (1) and (2) only in Lemmas 4.2 and 4.4, respectively. Following [14], we will additionally assume that \(\sigma \) is conditionally negative-definite, i. e.

$$\begin{aligned} \sum _{i,j}\sigma _{ij}\,\omega _i\,\omega _j \le -\underline{\sigma }\,|\omega |^2 \quad \text {for all } \omega \in \mathbb {R}^P \text { with } \sum _i \omega _i = 0, \end{aligned}$$

where \(\underline{\sigma }>0\) is a constant. That means that \(\sigma \) is negative definite as a bilinear form on \((1,\ldots ,1)^\perp \). This ensures that \(-E_h(\chi -\chi ^{n-1})\) in (5) is non-negative and penalizes the distance to the previous step.
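Conditional negative-definiteness can be tested by restricting \(\sigma \) to \((1,\ldots ,1)^\perp \) and checking that the negated restricted matrix admits a Cholesky factorization. The sketch below is our own illustration, and the helper names are hypothetical. For equal surface tensions \(\sigma _{ij}=1-\delta _{ij}\), one has \(\sum _{i,j}\sigma _{ij}\omega _i\omega _j=(\sum _i\omega _i)^2-|\omega |^2=-|\omega |^2\), so \(\underline{\sigma }=1\). The tensions \(\sigma _{12}=\sigma _{23}=1\), \(\sigma _{13}=2\) of Example 2.9 also pass. By contrast, the four-phase matrix in the test below, which violates the triangle inequality, fails: \(\omega =(1,1,-1,-1)\) gives a positive value.

```python
import math

def basis_orthogonal_to_ones(P):
    """Orthonormal basis of (1,...,1)^perp, by Gram-Schmidt on e_k - e_P, k = 1,...,P-1."""
    basis = []
    for k in range(P - 1):
        v = [0.0] * P
        v[k], v[P - 1] = 1.0, -1.0
        for b in basis:
            c = sum(vi * bi for vi, bi in zip(v, b))
            v = [vi - c * bi for vi, bi in zip(v, b)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        basis.append([vi / norm for vi in v])
    return basis

def restricted_form(sigma):
    """Matrix of (omega, omega') -> sum_ij sigma_ij omega_i omega'_j on (1,...,1)^perp."""
    P = len(sigma)
    B = basis_orthogonal_to_ones(P)
    return [[sum(sigma[i][j] * b[i] * c[j] for i in range(P) for j in range(P)) for c in B]
            for b in B]

def is_positive_definite(M):
    """Attempt a Cholesky factorization; it succeeds iff the symmetric matrix M is positive definite."""
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True

def conditionally_negative_definite(sigma):
    Q = restricted_form(sigma)
    return is_positive_definite([[-q for q in row] for row in Q])

print(conditionally_negative_definite([[0.0, 1.0, 2.0], [1.0, 0.0, 1.0], [2.0, 1.0, 0.0]]))
```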

In the following we write \(A \lesssim B\) to express that \(A\le C B\) for a (possibly large) generic constant \(C<\infty \) that only depends on the dimension d, the total number of phases \(P\) and on the matrix of surface tensions \(\sigma \) through \(\sigma _{\min }=\min _{i\ne j} \sigma _{ij}\), \(\sigma _{\max } = \max \sigma _{ij}\), \(\underline{\sigma }\) and \(\min \{ \sigma _{ik} + \sigma _{kj} - \sigma _{ij}:i,\, j,\, k\, \text {pairwise different}\}\). Furthermore, we say a statement holds for \(A \ll B\) if the statement holds for \(A \le \frac{1}{C} B\) for some generic constant \(C<\infty \) as above.

1.4 Main result

The definition of our weak notion of mean-curvature flow is a distributional formulation which is suited to the framework of functions of bounded variation.

Definition 1.1

(Motion by mean curvature) Fix some finite time horizon \(T<\infty \), a matrix of surface tensions \(\sigma \) as above and initial data \(\chi ^0:{[0,\Lambda )^d}\rightarrow \{0,1\}^P\) with \(E_0 := E(\chi ^0) <\infty \). We say that the network

$$\begin{aligned} \chi = (\chi _1,\ldots ,\chi _P):(0,T)\times {[0,\Lambda )^d}\rightarrow \{0,1\}^P \end{aligned}$$

with \(\sum _i \chi _i = 1\) a. e. and

moves by mean curvature if there exist functions \(V_i:(0,T)\times {[0,\Lambda )^d}\rightarrow \mathbb {R}\) with

which satisfy

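for all \(\xi \in C^\infty _0((0,T)\times {[0,\Lambda )^d}, \mathbb {R}^d)\) and which are normal velocities in the sense that, for all \(\zeta \in C^\infty ([0,T]\times {[0,\Lambda )^d})\) with \(\zeta (T)=0\) and all \(i\in \left\{ 1,\ldots ,P\right\} \),

$$\begin{aligned} -\int _0^T\int \partial _t \zeta \,\chi _i\, dx\,dt - \int \zeta (0)\,\chi _i^0\, dx = \int _0^T\int \zeta \, V_i \left| \nabla \chi _i \right| dt. \end{aligned}$$(7)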

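Note that (7) also encodes the initial conditions, just as (6) encodes the Herring angle condition. Indeed, for a smooth evolution, since for any interface \(\Sigma \) we have

$$\begin{aligned} \int _{\Sigma }\left( \nabla \cdot \xi - \nu \cdot \nabla \xi \,\nu \right) = \int _{\Sigma } H\,\xi \cdot \nu + \int _{\Gamma } \xi \cdot b, \end{aligned}$$

where \(\Gamma = \partial \Sigma \), b denotes the conormal and H the mean curvature of \(\Sigma \), we obtain not only the equation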

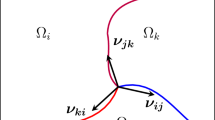

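along the smooth parts of the interfaces, but also the Herring angle condition at triple junctions. If three phases \(\Omega _1\), \(\Omega _2\) and \(\Omega _3\) meet at a point x, then we have

$$\begin{aligned} \sigma _{12}\,b_{12}(x) + \sigma _{13}\,b_{13}(x) + \sigma _{23}\,b_{23}(x) = 0, \end{aligned}$$

where \(b_{ij}(x)\) denotes the conormal at x of the interface between \(\Omega _i\) and \(\Omega _j\).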

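In terms of the opening angles \(\theta _1\), \(\theta _2\) and \(\theta _3\) at the junction, this condition reads

$$\begin{aligned} \frac{\sin \theta _1}{\sigma _{23}} = \frac{\sin \theta _2}{\sigma _{13}} = \frac{\sin \theta _3}{\sigma _{12}}, \end{aligned}$$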

so that the opening angles at triple junctions are determined by the surface tensions.
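The Herring condition can be turned into explicit opening angles. The three vectors \(\sigma _{ij}b_{ij}\) balance, so they form a closed triangle with side lengths \(\sigma _{12},\sigma _{13},\sigma _{23}\). The interior angle of this triangle opposite the side \(\sigma _{jk}\) is \(\pi -\theta _i\), where \(\{i,j,k\}=\{1,2,3\}\), and the law of cosines yields the angles. The sketch below is our own planar illustration, and the function name is hypothetical. Such a triangle exists only if the surface tensions satisfy the triangle inequality. In the borderline case \(\sigma _{12}=\sigma _{23}=1\), \(\sigma _{13}=2\) of Example 2.9, the opening angle of phase 2 degenerates to zero.

```python
import math

def herring_angles(s12, s13, s23):
    """Opening angles (theta_1, theta_2, theta_3) of phases 1, 2, 3 at a planar triple junction.

    The vectors sigma_ij * b_ij balance, so they form a triangle with sides s12, s13, s23;
    the interior angle opposite the side sigma_jk equals pi - theta_i."""
    def opening(a, b, opposite):
        c = (a * a + b * b - opposite * opposite) / (2.0 * a * b)   # law of cosines
        if not -1.0 <= c <= 1.0:
            raise ValueError("surface tensions violate the triangle inequality")
        return math.pi - math.acos(c)
    theta1 = opening(s12, s13, s23)   # phase 1 lies between interfaces 12 and 13
    theta2 = opening(s12, s23, s13)   # phase 2 lies between interfaces 12 and 23
    theta3 = opening(s13, s23, s12)   # phase 3 lies between interfaces 13 and 23
    return theta1, theta2, theta3

print([math.degrees(t) for t in herring_angles(1.0, 1.0, 1.0)])   # equal tensions: about 120 degrees each
```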

Remark 1.2

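To prove the convergence of the scheme, we will need the following convergence assumption:

$$\begin{aligned} \int _0^T E_h(\chi ^h)\,dt \rightarrow \int _0^T E(\chi )\,dt . \end{aligned}$$(8)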

This assumption makes sure that there is no loss of area in the limit \(h\rightarrow 0\) as in Fig. 1.

Theorem 1.3

Let \(P\in \mathbb {N}\), let the matrix of surface tensions \(\sigma \) satisfy the strict triangle inequality and be conditionally negative-definite, let \(T<\infty \) be a finite time horizon and let \(\chi ^0\) be given with \(E(\chi ^0) < \infty \). Then for any sequence \(h\downarrow 0\) there exist a subsequence and a \(\chi :(0,T)\times {[0,\Lambda )^d}\rightarrow \{0,1\}^P\) with \(E(\chi (t))\le E_0\) such that the approximate solutions \(\chi ^h\) obtained by Algorithm 1 converge to \(\chi \) along this subsequence. If, in addition, the convergence assumption (8) holds, then \(\chi \) moves by mean curvature in the sense of Definition 1.1 with initial data \(\chi ^0\).

Remark 1.4

An upcoming result of the authors will show that under the assumption (8) the limit \(\chi \) solves a localized energy inequality and is thus a weak solution in the sense of Brakke.

Remark 1.5

Our proof uses the following three different time scales (Fig. 2):

1. the macroscopic time scale, \(T<\infty \), given by the finite time horizon,

2. the mesoscopic time scale, \(\tau =\alpha \sqrt{h}\sim \sqrt{h}>0\), and

3. the microscopic time scale, \(h>0\), coming from the time discretization.

The mesoscopic time scale arises naturally from the scheme. Due to the parabolic scaling, the microscopic time scale h corresponds to the length scale \(\sqrt{h}\), as can be seen from the kernel \(G_h\). For a smooth evolution, the normal velocity V is of order 1, so an interface needs a time of order \(\sqrt{h}\) to move a distance comparable to this length scale. This singles out the mesoscopic time scale \(\sqrt{h}\).

The parameter \(\alpha \) will be kept fixed most of the time until the very end, where we send \(\alpha \rightarrow 0\). Therefore, it is natural to think of \(\alpha \sim 1\), but small.

These three time scales go hand in hand with the following numbers, which we will for simplicity assume to be natural numbers throughout the proof:

1. \(N\): the total number of microscopic time steps in a macroscopic time interval (0, T),

2. \(K\): the number of microscopic time steps in a mesoscopic time interval \((0,\tau )\), and

3. \(L\): the number of mesoscopic time intervals in a macroscopic time interval.

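The following simple identities linking these different parameters will be used frequently:

$$\begin{aligned} Nh = T,\qquad Kh = \tau ,\qquad L\tau = T,\qquad \text {so that } N = KL. \end{aligned}$$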
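A few lines of arithmetic illustrate how the three counts relate. The parameter values below are our own choice, made so that all counts are integers, as the text assumes; the function name is hypothetical. For fixed \(\alpha \), both \(K=\alpha /\sqrt{h}\) and \(L=T/(\alpha \sqrt{h})\) diverge as \(h\downarrow 0\).

```python
import math

def scale_counts(T, h, alpha):
    """Step counts linking the macroscopic, mesoscopic and microscopic time scales."""
    tau = alpha * math.sqrt(h)     # mesoscopic time scale
    N = round(T / h)               # microscopic steps in (0, T)
    K = round(tau / h)             # microscopic steps in (0, tau)
    L = round(T / tau)             # mesoscopic intervals in (0, T)
    return tau, N, K, L

tau, N, K, L = scale_counts(T=1.0, h=1e-4, alpha=0.5)
print(tau, N, K, L, N == K * L)
```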

2 Compactness

In this section we prove the compactness of the approximate solutions, construct the normal velocities and derive bounds on these velocities. In the first subsection we present all results of this section; the proofs can be found in the subsequent subsection.

2.1 Results

The first main result of this section is the following compactness statement.

Proposition 2.1

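(Compactness) There exists a sequence \(h\downarrow 0\) and a limit \(\chi :(0,T)\times {[0,\Lambda )^d}\rightarrow \{0,1\}^P\) such that

$$\begin{aligned} \chi ^h \rightarrow \chi \quad \text {in } L^1((0,T)\times {[0,\Lambda )^d}) \end{aligned}$$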

and the limit satisfies \( E(\chi (t)) \le E_0 \) and \(\chi (t)\) is admissible in the sense of (4) for a.e. \(t\in (0,T)\).

The second main result of this section is the following construction of the normal velocities and the square-integrability under the convergence assumption (8).

Proposition 2.2

If the convergence assumption (8) holds, the limit \(\chi =\lim _{h\rightarrow 0} \chi ^h\) has the following properties.

(i) \(\partial _t \chi \) is a Radon measure with

$$\begin{aligned} \iint \left| \partial _t \chi _i\right| \lesssim (1+T)E_0 \end{aligned}$$

for each \(i\in \{1,\ldots ,P\}.\)

(ii) For each \(i\in \{1,\ldots ,P\}\), \(\partial _t \chi _i\) is absolutely continuous w. r. t. \(\left| \nabla \chi _i \right| dt\). In particular, there exists a density \(V_i\in L^1(\left| \nabla \chi _i \right| dt)\) such that

$$\begin{aligned} -\int _0^T\int \partial _t \zeta \,\chi _i\, dx\,dt = \int _0^T\int \zeta \, V_i \left| \nabla \chi _i \right| dt \end{aligned}$$

for all \(\zeta \in C_0^\infty ((0,T)\times {[0,\Lambda )^d})\).

(iii) We have a strong \(L^2\)-bound: for each \(i\in \{1,\ldots ,P\}\)

$$\begin{aligned} \int _0^T \int V_i^2 \left| \nabla \chi _i \right| dt \lesssim \left( 1+T\right) E_0. \end{aligned}$$
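The sign convention in (ii) can be seen in the simplest setting: take d = 1 and let phase i occupy the interval [0, X(t)) of the unit torus. Then \(\left| \nabla \chi _i \right| \) consists of Dirac masses at the fixed endpoint 0, where \(V_i=0\), and at X(t), where \(V_i=X'(t)\) is positive when the phase grows. The sketch below is our own toy example; X, \(\zeta \) and all names are hypothetical choices. It compares both sides of the identity by quadrature. The chosen \(\zeta \) vanishes at t = 0 and t = T, which is all the underlying integration by parts needs.

```python
import math

T = 1.0

def X(t):
    """Moving endpoint: phase i occupies [0, X(t)) on the torus [0, 1)."""
    return 0.5 + 0.2 * math.sin(3.0 * t)

def dX(t):
    return 0.6 * math.cos(3.0 * t)

def g(t):
    return math.sin(math.pi * t / T) ** 2          # vanishes at t = 0 and t = T

def dg(t):
    return (math.pi / T) * math.sin(2.0 * math.pi * t / T)

def zeta(t, x):
    return g(t) * math.cos(2.0 * math.pi * x)

def dzeta_dt(t, x):
    return dg(t) * math.cos(2.0 * math.pi * x)

def weak_lhs(nt=800, nx=800):
    """-int_0^T int chi_i d_t zeta dx dt with chi_i(t, .) the indicator of [0, X(t))."""
    dt = T / nt
    total = 0.0
    for a in range(nt):
        t = (a + 0.5) * dt
        dx = X(t) / nx
        total += sum(dzeta_dt(t, (b + 0.5) * dx) for b in range(nx)) * dx * dt
    return -total

def weak_rhs(nt=800):
    """int_0^T int zeta V_i |grad chi_i| dt; only the moving endpoint contributes (V_i = X')."""
    dt = T / nt
    return sum(zeta(t, X(t)) * dX(t) for t in ((a + 0.5) * dt for a in range(nt))) * dt

print(weak_lhs(), weak_rhs())
```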

Both results essentially stem from the following basic estimate, a direct consequence of the minimizing movements interpretation (5).

Lemma 2.3

(Energy-dissipation estimate) The approximate solutions satisfy

\(\sqrt{-E_h}\) defines a norm on the process space \(\{ \omega :{[0,\Lambda )^d}\rightarrow \mathbb {R}^P| \sum _i \omega _i = 0 \}. \) In particular, the algorithm dissipates energy.
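For orientation, the telescoping argument behind an estimate of this type can be read off directly from (5). This short derivation is our own and stops at \(E_h(\chi ^0)\); it leaves aside how this quantity is compared with \(E_0\). Testing (5) at step n with the admissible competitor \(\chi =\chi ^{n-1}\), and using \(E_h(0)=0\), gives

$$\begin{aligned} E_h(\chi ^n) - E_h(\chi ^n-\chi ^{n-1}) \le E_h(\chi ^{n-1}). \end{aligned}$$

Summing over \(n=1,\ldots ,N\) yields

$$\begin{aligned} E_h(\chi ^N) + \sum _{n=1}^N \left( -E_h(\chi ^n-\chi ^{n-1})\right) \le E_h(\chi ^0). \end{aligned}$$

By the conditional negative-definiteness of \(\sigma \), every summand \(-E_h(\chi ^n-\chi ^{n-1})\) is non-negative. In particular, \(E_h(\chi ^n)\) is non-increasing in n.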

In order to prove Proposition 2.1, we derive estimates on time and space variations of the approximations, using only the basic estimate (10).

The estimate (10) bounds the (approximate) energies \(E_h(\chi ^h)\), which in turn control \(\int \left| \nabla G_h*\chi ^h\right| dx\) and thus variations of \(G_h*\chi ^h\) in space. On length scales greater than \(\sqrt{h}\), this estimate also survives for the approximations \(\chi ^h\).

Lemma 2.4

(Almost BV in space) The approximate solutions satisfy

for any \(\delta >0\) and \(e\in {{S}^{d-1}}\).

Variations in time are controlled by the following lemma coming from interpolating the (unbalanced) estimate (10) on time scales of order \(\sqrt{h}\).

Lemma 2.5

(Almost BV in time) The approximate solutions satisfy

for any \(\tau >0\).

Let us also mention that with the same methods we can prove \(C^{1/2}\)-Hölder-regularity of the volumes, i. e. \(\left| \Omega (s)\Delta \Omega (t)\right| \lesssim \left| s-t\right| ^{\frac{1}{2}}\). For the approximations this estimate of course only holds on time scales larger than the time-step size h.

Lemma 2.6

(\(C^{1/2}\)-bounds) We have uniform Hölder-type bounds for the approximate solutions, i. e. for any pair \(s,t\in [0, T]\) with \(|s-t|\ge h\) we have

In particular, \(\chi \in C^{1/2}([0,T],L^1({[0,\Lambda )^d}))\): for almost every \(s,t\in (0, T)\), we have

For the proof of the second main result of this section, Proposition 2.2, and also for later use in Sect. 4, it is useful to define certain measures that are induced by the metric term. These measures allow us to localize the result of Lemma 2.5. In the two-phase case, this is enough to prove that the measure \(\partial _t \chi \) is absolutely continuous w. r. t. the perimeter, and to establish the existence and integrability of the normal velocity, cf. (i) and (ii) of Proposition 2.2. The square-integrability then follows from a refinement of these estimates, obtained by localizing the fudge factor \(\alpha \) (cf. Remark 1.5) after passage to the limit \(h\rightarrow 0\).

Definition 2.7

(Dissipation measure) For \(h>0\), we define the approximate dissipation measures (associated to the approximate solution \(\chi ^h\)) \(\mu _h\) on \([0,T]\times {[0,\Lambda )^d}\) by

where \(\zeta \in C^\infty ([0,T]\times {[0,\Lambda )^d})\) and \(\overline{\zeta }^n\) is the time average of \(\zeta \) on the interval \([nh,(n+1)h)\). By the monotonicity of \(h\mapsto \Vert G_h*u\Vert _{L^2}\) and the energy-dissipation estimate (10), we have

and \(\mu _h \rightharpoonup \mu \) after passage to a further subsequence for some finite, non-negative measure \(\mu \) on \([0,T]\times {[0,\Lambda )^d}\) with \(\mu ([0,T]\times {[0,\Lambda )^d}) \lesssim E_0\). We call \(\mu \) the dissipation measure.

In order to prove Proposition 2.2 also in the multi-phase case we have to ensure that the convergence assumption implies the convergence of the individual interfacial areas \(\frac{1}{2} \int \left( \left| \nabla \chi _i \right| + \left| \nabla \chi _j \right| - \left| \nabla (\chi _i+\chi _j) \right| \right) \).

Lemma 2.8

(Implications of convergence assumption) The convergence assumption (8) ensures that for any pair \(i\ne j\) and any \(\zeta \in C^\infty ([0,T]\times {[0,\Lambda )^d})\),

as \(h\rightarrow 0\).

The proof of Lemma 2.8 relies heavily on the fact that \(\sigma \) satisfies the strict triangle inequality, which allows us to preserve the triangle inequality after perturbing the energy functional. The following example shows that this is not merely a technical assumption but a necessary condition for the lemma to hold; it thus plays a crucial role in identifying the normal velocities \(V_i\).

Example 2.9

To fix ideas, let us consider three sets \(\Omega _1,\,\Omega _2\) and \(\Omega _3\) in dimension \(d=2\) with surface tensions \(\sigma _{12}= \sigma _{23}=1\), \(\sigma _{13}=2\), as illustrated in Fig. 3. These surface tensions satisfy the triangle inequality only with equality, \(\sigma _{13}=\sigma _{12}+\sigma _{23}\). The total energy is constant in h and, due to this choice of the surface tensions, the convergence assumption is fulfilled. Nevertheless, we clearly have

This example also illustrates that although the energy functional E is lower semi-continuous, the individual interfacial energies \(\frac{1}{2}\int \left( |\nabla \chi _i| +|\nabla \chi _j| - |\nabla (\chi _i+\chi _j)| \right) \) are not.

As \(h\rightarrow 0\), the two interfaces \(\Sigma ^h_{12}\) and \(\Sigma ^h_{23}\) merge into one interface \(\Sigma _{13}\) between phases 1 and 3. Therefore the area \(|\Sigma ^h_{13}|\) jumps up in the limit \(h\rightarrow 0\), although the total interfacial energy converges due to the choice of surface tensions.
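The role of the strict triangle inequality can be made concrete on the level of the matrix \(\sigma \) alone. The Python sketch below computes the smallest slack \(\sigma _{ik}+\sigma _{kj}-\sigma _{ij}\) over distinct triples and compares equal surface tensions with the tensions of Example 2.9. It also models the perturbation \(\chi \mapsto E_h(\chi )-\epsilon F_h(\chi _l)\) from the proof of Lemma 2.8 by lowering the tensions \(\sigma _{lj}\), \(j\ne l\), by \(\epsilon \); this identification, with normalization constants absorbed into \(\epsilon \), is our assumption, and the function names are hypothetical. Phases are indexed from 0 in the code, so the middle phase \(\Omega _2\) of Example 2.9 has index 1.

```python
import itertools
import numpy as np

def triangle_slack(sigma):
    """min of sigma[i,k] + sigma[k,j] - sigma[i,j] over pairwise distinct i, j, k.

    Positive: strict triangle inequality. Zero: equality for some triple.
    Negative: the triangle inequality fails.
    """
    P = sigma.shape[0]
    return min(sigma[i, k] + sigma[k, j] - sigma[i, j]
               for i, j, k in itertools.permutations(range(P), 3))

def perturb(sigma, eps, phase):
    """Hypothetical model of E_h - eps * F_h(chi_phase): lower all tensions between
    `phase` and the other phases by eps (normalization constants absorbed into eps)."""
    s = sigma.astype(float).copy()
    for j in range(s.shape[0]):
        if j != phase:
            s[phase, j] -= eps
            s[j, phase] -= eps
    return s

equal = np.ones((3, 3)) - np.eye(3)      # equal surface tensions
example = np.array([[0.0, 1.0, 2.0],     # Example 2.9 (phases indexed from 0)
                    [1.0, 0.0, 1.0],
                    [2.0, 1.0, 0.0]])

print(triangle_slack(equal))                       # 1.0: strict
print(triangle_slack(example))                     # 0.0: sigma_13 = sigma_12 + sigma_23
print(triangle_slack(perturb(equal, 0.1, 0)))      # still positive
print(triangle_slack(perturb(example, 0.1, 1)))    # negative: perturbing the middle phase fails
```

With equal tensions the slack stays positive for small \(\epsilon \), so the perturbed functional remains admissible for Lemmas 2.10 and 2.11; in Example 2.9 the slack is zero, and perturbing the middle phase destroys the triangle inequality for every \(\epsilon >0\).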

2.2 Proofs

Before proving the statements of this section, we cite two results from [14], which will be used frequently in the proofs.

The following monotonicity statement is a key tool for the \(\Gamma \)-convergence in [14]. We will use it throughout our proofs, although we do not seem to rely on it heavily.

Lemma 2.10

(Approximate monotonicity) For all \(0<h\le h_0\) and any admissible \(\chi \), we have

Another important tool for the \(\Gamma \)-convergence in [14] is the following consistency, or pointwise convergence of the functionals \(E_h\) to E, which we will refine in Sect. 3.

Lemma 2.11

(Consistency) For any admissible \(\chi \in BV\), we have

Taking the limit \(h\rightarrow 0\) in (18) with \(\chi =\chi ^0\) and using (19), we see that the interfacial energy \(E_0\) of the initial data \(\chi (0)\equiv \chi ^0\) bounds the approximate energy of the initial data:

We first prove Proposition 2.1, which follows directly from the estimates in Lemmas 2.4 and 2.5. Then we give the proofs of the lemmas used for Proposition 2.1. We present the proof of Proposition 2.2 at the end of this section, since it relies heavily on the techniques developed in the proofs of the lemmas, especially that of Lemma 2.5.

Proof of Proposition 2.1

The proof is an adaptation of the Riesz–Kolmogorov \(L^p\)-compactness theorem. By Lemmas 2.4 and 2.5, we have

for any \(\delta ,\tau >0\) and \(e\in {{S}^{d-1}}\). For \(\delta >0\) consider the mollifier \(\varphi _\delta \) given by the scaling \(\varphi _\delta (t,x) := \frac{1}{\delta ^{d+1}}\varphi (\frac{t}{\delta },\frac{x}{\delta })\), where \(\varphi \in C_0^\infty ( (-1,0)\times B_1)\) is such that \(0\le \varphi \le 1\) and \(\int _{-1}^0 \int _{B_1} \varphi =1\). We have the estimates

Hence, on the one hand, the mollified functions are equicontinuous and by Arzelà–Ascoli precompact in \(C^0([0,T]\times {[0,\Lambda )^d})\): for given \(\epsilon ,\delta >0\) there exist functions \(u_i\in C^0([0,T]\times {[0,\Lambda )^d})\), \(i=1,\ldots ,n(\epsilon ,\delta )\) such that

where the balls \(B_\epsilon (u_i)\) are given w. r. t. the \(C^0\)-norm. On the other hand, for any function \(\chi \) we have

Using this for \(\chi ^h\) and plugging in (20) yields

Given \(\rho >0\), fix \(\delta ,h_0>0\) such that

Then set \(\epsilon :=\frac{\rho }{T \Lambda ^d}\) and find \(u_1,\ldots ,u_n\) from above. Note that only finitely many of the elements in the sequence \(\{h\}\) are greater than \(h_0\). Therefore,

is a finite covering by balls (w. r. t. the \(L^1\)-norm) of given radius \(\rho >0\). Therefore, \(\{\chi ^h\}_h\) is totally bounded and hence relatively compact in \(L^1\). Hence we can extract a converging subsequence. After passing to another subsequence, we can w. l. o. g. assume that we also have pointwise convergence almost everywhere in \( (0,T)\times {[0,\Lambda )^d}\). \(\square \)

Proof of Lemma 2.3

By the minimality condition (5), we have in particular

for each \(n=1,\ldots ,N\). Iterating this estimate yields (10) with \(E_h(\chi ^0)\) instead of \(E_0= E(\chi ^0)\). Then (10) follows from the short argument after Lemma 2.11.

We claim that the pairing \( - \frac{1}{\sqrt{h}}\int \omega \cdot \sigma \left( G_h*\tilde{\omega }\right) dx \) defines a scalar product on the process space. It is bilinear and symmetric thanks to the symmetry of \(\sigma \) and \(G_h\). Since \(\sigma \) is conditionally negative-definite,

Furthermore, we have equality only if \(\omega \equiv 0\). Thus, \(\sqrt{-E_h}\) is the induced norm on the process space. \(\square \)
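Whether a given matrix \(\sigma \) is conditionally negative-definite, i. e. \(\omega \cdot \sigma \omega <0\) for all \(\omega \ne 0\) with \(\sum _i\omega _i=0\), can be checked by restricting the quadratic form to the hyperplane orthogonal to \((1,\ldots ,1)\). The Python sketch below, with hypothetical function names, performs this check for equal surface tensions and for the tensions of Example 2.9.

```python
import numpy as np

def restricted_form(sigma):
    """Matrix of omega -> omega . sigma omega on {omega : sum_i omega_i = 0},
    written in an orthonormal basis of that hyperplane."""
    P = sigma.shape[0]
    projector = np.eye(P) - np.ones((P, P)) / P    # orthogonal projection onto the hyperplane
    _, vecs = np.linalg.eigh(projector)             # eigenvalues in ascending order: 0, 1, ..., 1
    basis = vecs[:, 1:]                             # drop the eigenvector (1, ..., 1) / sqrt(P)
    return basis.T @ sigma @ basis

def is_conditionally_negative_definite(sigma, tol=1e-12):
    """True if omega . sigma omega < 0 for every omega != 0 with sum_i omega_i = 0."""
    return bool(np.all(np.linalg.eigvalsh(restricted_form(sigma)) < -tol))

equal = np.ones((3, 3)) - np.eye(3)                 # equal surface tensions
example = np.array([[0.0, 1.0, 2.0],                # Example 2.9
                    [1.0, 0.0, 1.0],
                    [2.0, 1.0, 0.0]])
for name, s in (("equal", equal), ("Example 2.9", example)):
    print(name, np.linalg.eigvalsh(restricted_form(s)), is_conditionally_negative_definite(s))
```

Both matrices pass. Since the tensions of Example 2.9 do not satisfy the strict triangle inequality, conditional negative-definiteness and the strict triangle inequality are genuinely separate assumptions.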

Proof of Lemma 2.4

Step 1 We claim that

Indeed, for any characteristic function \(\chi : {[0,\Lambda )^d}\rightarrow \{0,1\}\) we have

Therefore, since \(\left| \nabla G_h(z)\right| \lesssim \frac{1}{\sqrt{h}}\left| G_{2h}(z)\right| \),

Since \(\chi \in \{0,1\}\), we have \(\left| \chi (x+z)-\chi (x) \right| = \chi (x)\left( 1-\chi \right) (x+z) + \left( 1-\chi \right) (x)\chi (x+z)\) and thus, by symmetry of \(G_{2h}\):

Applying this to \(\chi ^h_i\), summing over \(i=1,\ldots ,P\), and using \(\chi ^h_i = 1-\sum _{j\ne i} \chi ^h_j\) and \(\sigma _{ij}\ge \sigma _{\min }>0\) for \(i\ne j\), we obtain

where we used the approximate monotonicity of \(E_h\), cf. Lemma 2.10. Using the energy-dissipation estimate (10), we have

and integration in time yields (21).
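The kernel bound \(\left| \nabla G_h(z)\right| \lesssim \frac{1}{\sqrt{h}}G_{2h}(z)\) used in Step 1 is a one-line computation once a normalization is fixed. Assuming \(G_h(z)=(2\pi h)^{-d/2}\exp (-|z|^2/(2h))\), we have \(\nabla G_h(z)=-\frac{z}{h}G_h(z)\), and with \(s=|z|/\sqrt{h}\), \(\sqrt{h}\left| \nabla G_h(z)\right| /G_{2h}(z)=2^{d/2}s\,e^{-s^2/4}\le 2^{d/2}\sqrt{2}\,e^{-1/2}\). The Python sketch below checks this constant numerically (it does not depend on h) and also checks the pointwise identity for \(\{0,1\}\)-valued \(\chi \) used in the same step; the helper names are illustrative.

```python
import numpy as np

def G(h, r, d):
    """Assumed kernel G_h(z) = (2 pi h)^(-d/2) exp(-|z|^2 / (2h)), written in r = |z|."""
    return (2 * np.pi * h) ** (-d / 2) * np.exp(-r**2 / (2 * h))

def max_ratio(h, d, samples=20001):
    """Maximum over |z| <= 10 sqrt(h) of sqrt(h) |grad G_h(z)| / G_{2h}(z)."""
    r = np.linspace(0.0, 10 * np.sqrt(h), samples)
    grad_norm = r / h * G(h, r, d)       # |grad G_h(z)| = (|z| / h) G_h(z)
    return np.max(np.sqrt(h) * grad_norm / G(2 * h, r, d))

d = 3
bound = 2 ** (d / 2) * np.sqrt(2) * np.exp(-0.5)
for h in (1e-3, 1e-2, 1e-1):
    print(h, max_ratio(h, d), bound)

# |chi(x+z) - chi(x)| = chi(x)(1 - chi(x+z)) + (1 - chi(x)) chi(x+z) for chi in {0, 1}
for a in (0, 1):          # a = chi(x)
    for b in (0, 1):      # b = chi(x+z)
        assert abs(b - a) == a * (1 - b) + (1 - a) * b
```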

Step 2 By (21) and Hadamard’s trick, we have on the one hand

Since \(\chi \in \{0,1\}\), we have on the other hand

which yields

Using the translation invariance and (22) for the components of \(\chi ^h\), we have

\(\square \)

Proof of Lemma 2.5

In this proof, we make use of the mesoscopic time scale \(\tau =\alpha \sqrt{h}\), see Remark 1.5 for the notation. First we argue that it is enough to prove

for \(\alpha \in [1,2]\). If \(\alpha \in (0,1)\), we can apply (23) twice, once for \(\tau = \sqrt{h}\) and once for \(\tau = (1+\alpha )\sqrt{h}\) and obtain (12). If \(\alpha >2\), we can iterate (23). Thus we may assume that \(\alpha \in [1,2]\). We have

Thus, it is enough to prove

for any \(k=0,\ldots ,\textit{K}-1\). By the energy-dissipation estimate (10), we have \(E_h(\chi ^k) \le E_0\) for all these k’s. Hence we may assume w. l. o. g. that \(k=0\) and prove only

Note that for any two characteristic functions \(\chi ,\tilde{\chi }\) we have

Now we post-process the energy-dissipation estimate (10). Using the triangle inequality for the norm \(\sqrt{-E_h}\) on the process space and Jensen’s inequality, we have

Using (25) for \(\chi ^{\textit{K}l}_i\) and \(\chi ^{\textit{K}(l-1)}_i\), we estimate the second and third right-hand side terms by (22), and the first right-hand side term by the conditional negative-definiteness of \(\sigma \) and the above inequality; we obtain

Since \((1-\chi _i^n) = \sum _{j\ne i} \chi _j^n\) a.e. and \(\sigma _{ij}\ge \sigma _{\min }>0\) for all \(i\ne j\), the energy-dissipation estimate (10) yields

which establishes (24) and thus concludes the proof. \(\square \)

Proof of Lemma 2.6

First note that (14) follows directly from (13) since we also have \(\chi ^h(t) \rightarrow \chi (t)\) in \(L^1\) for almost every t. The argument for (13) comes in two steps. Let \(s>t\), \(\tau := s-t\) and \(t\in [nh,(n+1)h)\).

Step 1 Let \(\tau \) be a multiple of h. We may assume w. l. o. g. that \(\tau = m^2h\) for some \(m\in \mathbb {N}\). As in the proof of Lemma 2.5, using (25) and (26) we derive

As before, we sum these estimates:

Step 2 Let \(\tau \ge h\) be arbitrary. Take \(m\in \mathbb {N}\) such that \(s\in [(m+n)h, (m+n+1)h)\). From Step 1 we obtain the bound in terms of mh instead of \(\tau \). If \(\tau \ge mh\), we are done. If \(h\le \tau < mh\), then \(m\ge 2\) and, since \(\tau >(m-1)h\), we have \(mh\le \frac{m}{m-1}\tau \le 2\tau \). \(\square \)
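The case distinction in Step 2 is elementary, but easy to get wrong since t and s sit in different time-step intervals. The Python sketch below, with a hypothetical helper name, replays it in exact rational arithmetic: with \(t\in [nh,(n+1)h)\) and \(s\in [(m+n)h,(m+n+1)h)\) one always has \(\tau >(m-1)h\), so in the case \(h\le \tau <mh\) one gets \(m\ge 2\) and \(mh\le \frac{m}{m-1}\tau \le 2\tau \).

```python
import math
import random
from fractions import Fraction

def step2_case(t, s, h):
    """Replay Step 2 for times t < s with s - t >= h, using exact rationals.

    Returns True in the nontrivial case h <= tau < m h, False if tau >= m h.
    """
    tau = s - t
    assert tau >= h
    n = math.floor(t / h)                 # t in [n h, (n+1) h)
    m = math.floor(s / h) - n             # s in [(m+n) h, (m+n+1) h)
    assert tau > (m - 1) * h              # always true by the choice of n and m
    if tau >= m * h:
        return False                      # the bound in terms of m h already controls tau
    assert m >= 2
    assert m * h <= Fraction(m, m - 1) * tau <= 2 * tau
    return True

random.seed(1)
h = Fraction(1, 7)
nontrivial = 0
for _ in range(10_000):
    t = Fraction(random.randint(0, 700), 100)
    s = t + h + Fraction(random.randint(0, 700), 100)
    nontrivial += step2_case(t, s, h)
print(nontrivial, "of 10000 samples fall into the case h <= tau < m h")
```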

Proof of Lemma 2.8

W. l. o. g. let \(i=1\), \(j=2\). We prove the statement in three steps. In the first step we reduce the statement to a time-independent one. In the second step, we show that due to the strict triangle inequality, the convergence of the energies implies the convergence of the individual perimeters. In the third step, we conclude by showing that this convergence still holds true if we localize with a test function \(\zeta \), which proves the time-independent statement formulated in the first step.

Step 1: reduction to a time-independent problem It is enough to prove that \(\chi ^h\rightarrow \chi \) in \(L^1({[0,\Lambda )^d},\mathbb {R}^P)\) and \( E_h(\chi ^h)\rightarrow E(\chi )\) imply

for any \(\zeta \in C^\infty ({[0,\Lambda )^d})\).

Given \(\chi ^h\rightarrow \chi \) in \(L^1((0,T)\times {[0,\Lambda )^d})\), for a subsequence we clearly have \(\chi ^h(t)\rightarrow \chi (t)\) in \(L^1({[0,\Lambda )^d})\) for a. e. t. We further claim that for a subsequence

Writing \( \big |E_h(\chi ^h) - E(\chi )\big | = 2 \big ( E(\chi )- E_h(\chi ^h)\big )_+ + E_h(\chi ^h) - E(\chi ) \) and using the \(\liminf \)-inequality of the \(\Gamma \)-convergence of \(E_h\) to E, we have

Then Lebesgue’s dominated convergence theorem, cf. (10), and the convergence assumption (8) yield

and thus (28) after passage to a subsequence. Therefore, we can apply (27) for a. e. t and the time-dependent version follows from the time-independent one by Lebesgue’s dominated convergence theorem and (10).

Step 2: convergence of perimeters We claim that given \(\chi ^h\rightarrow \chi \) in \(L^1({[0,\Lambda )^d},\mathbb {R}^P)\) and \( E_h(\chi ^h)\rightarrow E(\chi )\), the individual perimeters converge in the following sense: we have

where \(F_h\) and F are the two-phase analogues of the (approximate) energies:

We will prove this claim by perturbing the functional \(E_h\). We recall that the functionals \(F_h\) \(\Gamma \)-converge to F (see e. g. [29] or [14]). Since the argument works in the same way for all three cases, we restrict ourselves to the first case, \(F_h(\chi _1^h) \rightarrow F(\chi _1)\). Since the matrix of surface tensions \(\sigma \) satisfies the strict triangle inequality, we can perturb the functionals \(E_h\) in the following way: for sufficiently small \(\epsilon >0\), the associated surface tensions for the functional \( \chi \mapsto E_h(\chi ) - \epsilon F_h(\chi _1) \) satisfy the triangle inequality, so that approximate monotonicity (Lemma 2.10) and consistency (Lemma 2.11) still apply. Therefore, by Lemma 2.10, we have for any \(h_0\ge h\)

By assumption, the left-hand side converges to \(E(\chi )\). Since for fixed \(h_0\), \(\chi \mapsto E_{h_0}(\chi )-\epsilon F_{h_0}(\chi _1)\) is clearly a continuous functional on \(L^2\), the first right-hand side term converges as \(h\rightarrow 0\). Thus, for any \(h_0>0\),

As \(h_0 \rightarrow 0\), Lemma 2.11 yields

By the \(\Gamma \)-convergence we also have

and thus the convergence \( F_h(\chi ^h_1) \rightarrow F(\chi _1). \)

Step 3: conclusion We claim that given \(\chi ^h\rightarrow \chi \) in \(L^1({[0,\Lambda )^d},\mathbb {R}^P)\) and \( E_h(\chi ^h)\rightarrow E(\chi )\), for any \(\zeta \in C^\infty ({[0,\Lambda )^d})\) we have (27).

We will not prove (27) directly but prove that for any \( \zeta \in C^\infty ({[0,\Lambda )^d})\)

for the localized functionals

instead. This is indeed sufficient since for any \(\chi _1,\chi _2\), we clearly have

and (29) therefore implies (27).

Now we give the argument for (29). As before, we only prove one of the statements, namely \(F_h(\chi ^h_1,\zeta ) \rightarrow F(\chi _1,\zeta )\). For this we use two lemmas that we will prove in Sect. 3. First, applying Lemma 3.6, the localized version of Lemma 2.11, to the functional \(F_h\) instead of \(E_h\), we obtain \( F_h(\chi _1,\zeta ) \rightarrow F(\chi _1,\zeta ). \) Then, by Lemma 3.7, we can estimate \( \left| F_h(\chi _1,\zeta ) - F_h(\chi ^h_1,\zeta )\right| \rightarrow 0 \), which concludes the proof.

Let us mention that one can also follow a different line of proof by localizing the monotonicity statement of Lemma 2.10 with a test function \(\zeta \). Since Lemma 3.7 seems more robust, we only prove the statement in this fashion. \(\square \)

Proof of Proposition 2.2

We make use of the mesoscopic time scale \(\tau \), see Remark 1.5 for the notation.

Argument for (i) Let \(\zeta \in C_0^\infty ((0,T)\times {[0,\Lambda )^d})\). We have to show that

In this part we choose \(\alpha = 1\). Using the notation \(\partial ^{\tau }\zeta \) for the discrete time derivative \( \frac{1}{\tau }\left( \zeta (t+\tau ) - \zeta (t)\right) \), by the smoothness of \(\zeta \),

Since \(\chi ^h \rightarrow \chi \) in \(L^1((0,T)\times {[0,\Lambda )^d}) \), the product converges:

Since \(\text {supp}\zeta \) is compact, by Lemma 2.5 we have

for sufficiently small h.

Argument for (ii) First we prove

for any \(\alpha >0\) and any \(\zeta \in C_0^\infty ((0,T)\times {[0,\Lambda )^d})\). We fix \(\zeta \) and by linearity we may assume that \(\zeta \ge 0\) if we prove the inequality with absolute values on the left-hand side. We use the identity from above

Setting

to be the time average over a microscopic time interval, we have

Now fix \(k\in \{1,\ldots , \textit{K}\}\). For simplicity, we will ignore k at first. We can argue as in the proof of Lemma 2.5, here with the localization \(\zeta \): By (22) we have for any \(\chi \in \{0,1\}\)

with \(F_h\) as in (30) and furthermore

Therefore, using (25) we obtain

where the last right-hand side term vanishes as \(h\downarrow 0\) by (10). For the first right-hand side term we note that for any \(\zeta \in C^\infty ({[0,\Lambda )^d})\) and any \(\chi ,\,\tilde{\chi }\in \{0,1\}\) we have

so that we can replace the first right-hand side term by

up to an error that vanishes as \(h\downarrow 0\), due to the above calculation and e. g. Lemma 2.6. As in (26) for \(-E_h\), we can use the triangle inequality and Jensen’s inequality for this localized version to bound this term by

as \(h\downarrow 0\), where \(\mu _h\) is the (approximate) dissipation measure defined in (15). Therefore we have

as \(h\downarrow 0\). Taking the mean over the k’s we obtain

Passing to the limit \(h\rightarrow 0\), (17), which is guaranteed by the convergence assumption (8), implies (31).

Now let \(U \subset (0,T)\times {[0,\Lambda )^d}\) be open such that

If we take \(\zeta \in C_0^\infty (U)\), the first term on the right-hand side of (31) vanishes and therefore

Since the left-hand side does not depend on \(\alpha \), we have

Taking the supremum over all \(\zeta \in C_0^\infty (U)\) yields

Thus, \(\partial _t \chi _i\) is absolutely continuous w. r. t. \(\left| \nabla \chi _i\right| dt\) and the Radon–Nikodym theorem completes the proof.

Argument for (iii) We refine the estimate in the argument for (ii). Instead of estimating the right-hand side of (31) and optimizing afterwards, which leads to weak \(L^2\)-bounds, we localize. Starting from (31), we notice that we can localize with the test function \(\zeta \). Thus, we can post-process the estimate and obtain

for any integrable \(\zeta :(0,T)\times {[0,\Lambda )^d}\rightarrow \mathbb {R}\), any measurable \(\alpha :(0,T)\times {[0,\Lambda )^d}\rightarrow (0,\infty )\) and some constant \(C<\infty \) which depends only on the dimension d, the number of phases \(P\) and the matrix of surface tensions \(\sigma \). Now choose

where we set \(\alpha := 1\) if \(V_i=0\), in which case all other integrands vanish. Then, the first term on the right-hand side can be absorbed in the left-hand side and we obtain

\(\square \)

3 Energy functional and curvature

It is a classical result by Reshetnyak [34] that the convergence \(\chi ^h\rightarrow \chi \) in \(L^1\) and

imply convergence of the first variation

A result by Luckhaus and Modica [23] shows that this may extend to a \(\Gamma \)-convergence situation, namely in case of the Ginzburg–Landau functional

We show that this also extends to our \(\Gamma \)-converging functionals \(E_h\). Let us first address why the first variation of the approximate energies is of interest in view of our minimizing movements scheme. We recall (5): the approximate solution \(\chi ^n\) at time nh minimizes \(E_h(\chi ) - E_h(\chi -\chi ^{n-1})\) among all \(\chi \). The natural variations of such a minimization problem are inner variations, i. e. variations of the independent variable. Given a vector field \(\xi \in C^\infty ({[0,\Lambda )^d},\mathbb {R}^d)\) and an admissible \(\chi \), we define the deformation \(\chi _s\) of \(\chi \) along \(\xi \) by the distributional equation

which means that the phases are deformed by the flow generated through \(\xi \). The inner variation \(\delta E_h\) of the energy \(E_h\) at \(\chi \) along the vector field \(\xi \) is then given by

For an admissible \(\tilde{\chi }\) the inner variation of the metric term \(-E_h(\chi -\tilde{\chi })\) is given by

The (chosen and not necessarily unique) minimizer \(\chi ^n\) in Algorithm 1 therefore satisfies the Euler–Lagrange equation

for any vector field \(\xi \in C^\infty ({[0,\Lambda )^d},\mathbb {R}^d)\).
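For orientation, here is a minimal Python sketch of one step of the multi-phase thresholding scheme as we read Algorithm 1 (following [14]): convolve each phase with \(G_h\), form the comparison functions \(\phi _i=\sum _j\sigma _{ij}G_h*\chi ^{n-1}_j\), and assign each point to a phase with the smallest \(\phi _i\). It assumes a periodic square grid and the normalization \(G_h(z)=(2\pi h)^{-d/2}\exp (-|z|^2/(2h))\), and it resolves ties by taking the lowest phase index, which is one particular choice of the not necessarily unique minimizer. The function names are illustrative and do not come from any library.

```python
import numpy as np

def heat_convolve(u, h, L=1.0):
    """Periodic convolution with G_h(z) = (2 pi h)^(-d/2) exp(-|z|^2/(2h)) via FFT
    (uniform grid with the same number of points in every direction)."""
    n = u.shape[0]
    k = 2 * np.pi * np.fft.fftfreq(n, d=L / n)
    k2 = sum(g**2 for g in np.meshgrid(*([k] * u.ndim), indexing="ij"))
    return np.real(np.fft.ifftn(np.fft.fftn(u) * np.exp(-h * k2 / 2)))

def thresholding_step(chi, sigma, h, L=1.0):
    """One thresholding step.

    chi   : array of shape (P, n, ..., n) with entries in {0, 1}, summing to 1 over axis 0
    sigma : (P, P) symmetric matrix of surface tensions with zero diagonal
    """
    conv = np.stack([heat_convolve(c, h, L) for c in chi])   # G_h * chi_j
    phi = np.tensordot(sigma, conv, axes=(1, 0))             # phi_i = sum_j sigma_ij G_h * chi_j
    winner = np.argmin(phi, axis=0)                          # ties: lowest index wins
    return np.stack([(winner == i).astype(float) for i in range(chi.shape[0])])

# Example: a disc of phase 0 inside phase 1 (two phases, sigma_01 = 1) shrinks.
n = 128
x = (np.arange(n) + 0.5) / n
X, Y = np.meshgrid(x, x, indexing="ij")
disc = ((X - 0.5) ** 2 + (Y - 0.5) ** 2 < 0.25**2).astype(float)
chi0 = np.stack([disc, 1 - disc])
sigma = np.array([[0.0, 1.0], [1.0, 0.0]])
chi1 = thresholding_step(chi0, sigma, h=1e-2)
print(chi0[0].mean(), chi1[0].mean())    # area of the disc before and after one step
```

In the two-phase case the rule reduces to the classical one: phase 0 is kept where \(G_h*\chi _0>\frac{1}{2}\).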

3.1 Results

The goal of this section is to prove the following statement about the convergence of the first term in the Euler–Lagrange equation.

Proposition 3.1

Let \(\chi ^h,\, \chi :(0,T)\times {[0,\Lambda )^d}\rightarrow \{0,1\}^P\) be such that \(\chi ^h(t),\, \chi (t)\) are admissible in the sense of (4) and \(E(\chi (t)) < \infty \) for a.e. t. Let

and furthermore assume that

Then, for any \(\xi \in C_0^\infty ((0,T)\times {[0,\Lambda )^d},\mathbb {R}^d)\), we have

It is easy to reduce the statement to the following time-independent statement.

Proposition 3.2

Let \(\chi ^h,\, \chi :{[0,\Lambda )^d}\rightarrow \{0,1\}^P\) be admissible in the sense of (4) with \(E(\chi ) <\infty \) such that

and furthermore assume that

Then, for any \(\xi \in C^\infty ({[0,\Lambda )^d},\mathbb {R}^d)\), we have

Remark 3.3

Proposition 3.2 and all other statements in this section hold also in a more general context. We do not need the approximations \(\chi ^h\) to be characteristic functions. In fact the statements hold for any sequence \(u^h :{[0,\Lambda )^d}\rightarrow [0,1]^P\) with \(\sum _i u_i^h =1\) a.e. converging to some \(\chi :{[0,\Lambda )^d}\rightarrow \{0,1\}^P\) with \(E(\chi ) <\infty \) in the sense of (37)–(38).

The following first lemma brings the first variation \(\delta E_h\) of \(E_h\) into a more convenient form, up to an error vanishing as \(h\rightarrow 0\) because of the smoothness of \(\xi \). Already at this stage one can see the structure

in the first variation of E in the form of \(\nabla \xi :( G_h Id-h\nabla ^2 G_h)\) on the level of the approximation.

Lemma 3.4

Let \(\chi \) be admissible and \(\xi \in C^\infty ({[0,\Lambda )^d},\mathbb {R}^d)\) then

We have already seen in Lemma 2.8 that we can pass to the limit in the term involving only the kernel \(G_h Id\):

where now \(\zeta =\nabla \cdot \xi \). The next proposition shows that we can also pass to the limit in the term involving the second derivatives \(h \nabla ^2G_h\) of the kernel, which yields the projection \(\nu \otimes \nu \) onto the normal direction in the limit.

Proposition 3.5

Let \(\chi ^h,\, \chi \) satisfy the convergence assumptions (37) and (38). Then for any \(A\in C^\infty ([0,\Lambda )^d,\mathbb {R}^{d\times d})\)

The following two lemmas are used to prove Proposition 3.5. The first one yields in particular the construction part of the \(\Gamma \)-convergence of \(E_h\) to E. We need it in a localized form; the proof closely follows that of Lemma 4 in Section 7.2 of [14].

Lemma 3.6

(Consistency) Let \(\chi \in BV({[0,\Lambda )^d},\{0,1\}^P)\) be admissible in the sense of (4). Then for any \(\zeta \in C^\infty ({[0,\Lambda )^d})\)

and for any \(A\in C^\infty ({[0,\Lambda )^d},\mathbb {R}^{d\times d})\)

The next lemma shows that, under our convergence assumption of \(\chi ^h\) to \(\chi \), the corresponding spatial covariance functions \(f_h\) and f are very close, which allows us to pass from Lemmas 3.4 and 3.6 to Proposition 3.2.

Lemma 3.7

(Error estimate) Let \(\chi ^h,\, \chi \) satisfy the convergence assumptions (37) and (38) and let k be a non-negative kernel such that

for some polynomial p. Then

where

3.2 Proofs

Proof of Proposition 3.1

The proposition is an immediate consequence of the time-independent analogue, Proposition 3.2. Indeed, according to Step 1 in the proof of Lemma 2.8 we have \(E_h(\chi ^h) \rightarrow E(\chi )\) for a. e. t. Thus all conditions of Proposition 3.2 are fulfilled. Proposition 3.1 follows then from Lebesgue’s dominated convergence theorem. \(\square \)

Proof of Proposition 3.2

We may apply Lemma 3.4 for \(\chi ^h\) and obtain by the energy-dissipation estimate (10) that

Applying Proposition 3.5 for the kernel \(\nabla ^2 G\) with \(\nabla \xi \) playing the role of the matrix field A and Lemma 2.8 for the kernel G with \(\zeta = \nabla \cdot \xi \), we can conclude the proof. \(\square \)

Proof of Lemma 3.4

Recall the definition of \(\delta E_h\) in (32). Since \( -\nabla \tilde{\chi }\cdot \xi = -\nabla \cdot (\tilde{\chi }\,\xi ) +\tilde{\chi }\left( \nabla \cdot \xi \right) \) for any function \(\tilde{\chi }:{[0,\Lambda )^d}\rightarrow \mathbb {R}\), we can rewrite the integral on the right-hand side of (32):

Let us first turn to the first right-hand side term. For fixed (i, j), we can collect the two terms in the sum that belong to the interface between phases i and j and obtain by the antisymmetry of the kernel \(\nabla G_h\) that the resulting term with the prefactor \(\frac{2\sigma _{ij}}{\sqrt{h}}\) is

A Taylor expansion of \(\xi \) around x gives the first-order term

Now we argue that the second-order term is controlled by \(\Vert \nabla ^2 \xi \Vert _\infty E_h(\chi ) \sqrt{h}\). Indeed, since \(|z|^3G(z) \lesssim G_2(z)\), the contribution of the second-order term is controlled by

Using the approximate monotonicity (18) of \(E_h\), we have suitable control over this term. After distributing the first-order term on both summands (i, j) and (j, i), we therefore have

and since \(\nabla ^2 G(z) = -Id\, G - z \otimes \nabla G(z)\), we conclude the proof. \(\square \)
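The identity \(\nabla ^2 G(z) = -Id\, G - z \otimes \nabla G(z)\) follows from \(\nabla G(z)=-zG(z)\) for the standard Gaussian \(G(z)=(2\pi )^{-d/2}\exp (-|z|^2/2)\), the normalization consistent with \(\frac{d}{dr}G(r)=-rG(r)\) in this section; its (1, 1) entry is the formula \(\partial _1^2 G(z) = \left( z_1^2-1\right) G(z)\) used in the proof of Lemma 3.6. The Python sketch below compares the formula with a central finite-difference Hessian at one arbitrarily chosen point, and also checks numerically that \(|z|^3G(z)/G_2(z)\) is bounded, where \(G_2(z)=(4\pi )^{-d/2}\exp (-|z|^2/4)\); this is the bound \(|z|^3G(z) \lesssim G_2(z)\) used for the second-order Taylor term. Helper names and the test point are illustrative.

```python
import numpy as np

def G(z):
    """Standard Gaussian G(z) = (2 pi)^(-d/2) exp(-|z|^2 / 2) for z of shape (d,)."""
    return (2 * np.pi) ** (-z.size / 2) * np.exp(-(z @ z) / 2)

def hessian_fd(f, z, eps=1e-4):
    """Central finite-difference Hessian of the scalar function f at z."""
    d = z.size
    E = np.eye(d) * eps
    H = np.empty((d, d))
    for i in range(d):
        for j in range(d):
            H[i, j] = (f(z + E[i] + E[j]) - f(z + E[i] - E[j])
                       - f(z - E[i] + E[j]) + f(z - E[i] - E[j])) / (4 * eps**2)
    return H

z = np.array([0.3, -1.2, 0.7])                        # arbitrary test point, d = 3
grad = -z * G(z)                                      # grad G(z) = -z G(z)
formula = -np.eye(z.size) * G(z) - np.outer(z, grad)  # -Id G - z (x) grad G
print(np.max(np.abs(hessian_fd(G, z) - formula)))     # small: finite-difference error only

# |z|^3 G(z) <= C G_2(z): the ratio depends only on r = |z| and peaks at r^2 = 6.
d = 3
r = np.linspace(0.0, 20.0, 200001)
ratio = r**3 * (2 * np.pi) ** (-d / 2) * np.exp(-r**2 / 2) / ((4 * np.pi) ** (-d / 2) * np.exp(-r**2 / 4))
print(ratio.max(), 2 ** (d / 2) * 6**1.5 * np.exp(-1.5))
```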

Proof of Proposition 3.5

By Lemma 3.6 we know that the term converges if we take \(\chi \) instead of the approximation \(\chi ^h\) on the left-hand side of the statement. Lemma 3.7 in turn controls the error made by replacing \(\chi ^h\) with \(\chi \) on the left-hand side. \(\square \)

Proof of Lemma 3.6

Our main focus in this proof lies on the anisotropic kernel \(\nabla ^2 G\). The statement for G is—up to the localization—already contained in the proof of Lemma 4 in Section 7.2 of [14].

Step 1: reduction of the statement to a simpler kernel Since \(\nabla ^2G(z)\) is a symmetric matrix, the inner product

depends only on the symmetric part \(A^{sym }\) of A; hence w. l. o. g. let A be a symmetric matrix field. But then there exist functions \(\zeta _{ij}\in C^\infty ({[0,\Lambda )^d})\) such that

We also note

Hence by linearity it is enough to prove the statement for A of the form

for some \(\xi \in {{S}^{d-1}}\). By rotational invariance we may assume

Hence the statement can be reduced to

for any \(\zeta \in C^\infty ([0,\Lambda )^d).\) In the following we will show that for any such \(\zeta \) and \(\chi ,\, \tilde{\chi }\in BV({[0,\Lambda )^d},\{0,1\})\) such that

and for the anisotropic kernel \(k(z) = z_1^2 G(z)\) we have

The analogous statement for the Gaussian kernel G instead of the anisotropic kernel k is, up to the localization with \(\zeta \), contained in [14]. In that case the right-hand side of (43) turns into the localized energy, i.e. the anisotropic term \((\nu _1^2+1)\) is replaced by 1. Since \(\partial _1^2 G(z) = \left( z_1^2-1\right) G(z)\), it is indeed sufficient to prove (43). We will prove this in five further steps. Before starting, we introduce spherical coordinates \(z=r\xi \) on the left-hand side:

In the following two steps of the proof, we simplify the problem by disintegrating in r (Step 2) and \(\xi \) (Step 3). Then we explicitly calculate an integral that arises in the second reduction and which translates the anisotropy of the kernel k into a geometric information about the normal (Step 4). We simplify further by disintegration in the vertical component (Step 5) and conclude by solving the one-dimensional problem (Step 6).

Step 2: disintegration in r We claim that it is sufficient to show

Indeed, note that since \(G(z)=G(|z|)\) and \(\frac{d}{dr} G(r) = -r G(r)\) we have, using integration by parts,

Replacing \(\sqrt{h}\) by \(\sqrt{h}\,r\) on the left-hand side of (45) and integrating w. r. t. the non-negative measure \(G(r)r^{d}dr\) and using the equality from above shows that (45), in view of (44), formally implies (43). To make this step rigorous, we use Lebesgue’s dominated convergence theorem. A dominating function can be obtained as follows:

which is finite and independent of r. Hence, it is integrable w. r. t. the finite measure \(G(r)r^{d+2}dr\).
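For the reader's convenience, here is the elementary integration by parts behind Step 2, written for a general exponent \(m\ge 0\); we do not reproduce the displayed identity of the proof itself. Since \(\frac{d}{dr}G(r)=-rG(r)\),

```latex
\int_0^\infty r^{m+2}\,G(r)\,dr
  = \int_0^\infty r^{m+1}\,\bigl(-G'(r)\bigr)\,dr
  = \Bigl[-r^{m+1}G(r)\Bigr]_0^\infty + (m+1)\int_0^\infty r^{m}\,G(r)\,dr
  = (m+1)\int_0^\infty r^{m}\,G(r)\,dr ,
```

where the boundary terms vanish because \(r^{m+1}=0\) at \(r=0\) and G decays like a Gaussian. The case \(m=d\) relates the two measures \(G(r)r^{d+2}dr\) and \(G(r)r^{d}dr\) mentioned in Step 2.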

Step 3: disintegration in \(\xi \) We claim that it is sufficient to show that for each \(\xi \in {{S}^{d-1}}\),

Indeed, if we integrate w. r. t. the non-negative measure \(\frac{1}{2} \xi _1^2 d\xi \), we obtain the left-hand side of (45) from the left-hand side of (46). At least formally, this is obvious because of the symmetry under \(\xi \mapsto -\xi \). The dominating function needed to interchange limit and integration is obtained as in Step 2:

For the passage from the right-hand side of (46) to the right-hand side of (45) we note that since

and \(|\nu |=1 \) \(|\nabla \chi |\)- a. e. it is enough to prove

to obtain the equality for the right-hand side.

Step 4: argument for (47) By symmetry of \(\int _{{S}^{d-1}}d\xi \) under the reflection that maps \(e_1\) into \(\nu \), we have

Applying the divergence theorem to the vector field \(|\xi _1|\left( \xi \cdot \nu \right) \nu \), we have

Since \(\nabla \cdot \left( |\xi _1|\left( \xi \cdot \nu \right) \nu \right) = \text {sign}\xi _1 \left( \xi \cdot \nu \right) \nu _1 + |\xi _1|\), the right-hand side is equal to

By symmetry of \(d\xi \) under rotations that leave \(e_1\) invariant, we see that \(\int _B \text {sign}\xi _1 \,\xi \, d\xi \) points in direction \(e_1\), so that the above reduces to

We conclude by observing

Step 5: one-dimensional reduction The problem reduces to the one-dimensional analogue, namely: for all \(\chi ,\,\tilde{\chi }\in BV([0,\Lambda ),\{0,1\})\) such that

and every \(\zeta \in C^\infty ([0,\Lambda ))\) we have

Indeed, by symmetry, it suffices to prove (46) for \(\xi =e_1\). Using the decomposition \(x= se_1 + x'\) we see that (46) follows from (49) using the functions \( \chi _{x'}(s):=\chi (se_1 + x')\), \(\tilde{\chi }_{x'}\), \(\zeta _{x'}\) in (49) and integrating w. r. t. \(dx'\). For the left-hand side, this is formally clear. For the right-hand side, one uses BV-theory: if \(\chi \in BV({[0,\Lambda )^d})\), we have \(\chi _{x'}\in BV([0,\Lambda ))\) for a. e. \(x'\in [0,\Lambda )^{d-1}\) and

for any \(\zeta \in C^\infty ({[0,\Lambda )^d}).\) To make the argument rigorous, we again use Lebesgue’s dominated convergence theorem. As before, using (48), we obtain

Since

this is indeed an integrable dominating function.

Step 6: argument for (49) Since \(\chi ,\,\tilde{\chi }\) are \(\{0,1\}\)-valued, every jump has height 1, and since \(\chi ,\, \tilde{\chi }\in BV([0,\Lambda ))\), the total number of jumps is finite. Let \(J,\,\tilde{J}\subset [0,\Lambda )\) denote the jump sets of \(\chi \) and \(\tilde{\chi }\), respectively. Now, if \(\sqrt{h}\) is smaller than the minimal distance between two different points in \(J\cup \tilde{J}\), then in view of (48), the only contribution to the left-hand side of (49) comes from neighborhoods of points where both \(\chi \) and \(\tilde{\chi }\) jump:

Note that \(\chi (\sigma +\sqrt{h}) + \chi (\sigma -\sqrt{h}) \equiv 1\) on each of these intervals and that

for intervals of the form

Since \(|I_s^h|=\sqrt{h}\), we have

Note that by (48), \(\chi +\tilde{\chi }\) jumps precisely where either \(\chi \) or \(\tilde{\chi }\) jumps. Thus

Therefore, (49) holds, which concludes the proof. \(\square \)
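Step 4 of the proof of Lemma 3.6 can be sanity-checked numerically in the plane. Combining the reflection symmetry with the divergence-theorem computation of Step 4 gives, for every unit vector \(\nu \), \(\int _{{S}^{d-1}}\xi _1^2|\xi \cdot \nu |\,d\xi =\int _{{S}^{d-1}}|\xi _1|(\xi \cdot \nu )^2\,d\xi =(\nu _1^2+1)\int _{B_1}|\xi _1|\,d\xi \), and in \(d=2\) the last integral equals 4/3. The Python sketch below evaluates both circle integrals by a Riemann sum. It checks these identities as we reconstruct them from the steps described in the text, not the displayed formula (47) itself.

```python
import numpy as np

N = 400_000
theta = 2 * np.pi * np.arange(N) / N          # uniform grid on the unit circle S^1
xi = np.stack([np.cos(theta), np.sin(theta)])
dtheta = 2 * np.pi / N
BALL_ABS_XI1 = 4.0 / 3.0                      # int_{B_1} |xi_1| d xi in d = 2, computed by hand

def circle_integrals(nu):
    """Return (int xi_1^2 |xi.nu|, int |xi_1| (xi.nu)^2) over S^1 by a Riemann sum."""
    proj = nu @ xi                            # xi . nu at every grid point
    a = np.sum(xi[0] ** 2 * np.abs(proj)) * dtheta
    b = np.sum(np.abs(xi[0]) * proj**2) * dtheta
    return a, b

for angle in (0.0, 0.4, np.pi / 3, np.pi / 2):
    nu = np.array([np.cos(angle), np.sin(angle)])
    a, b = circle_integrals(nu)
    print(f"{angle:.3f}: {a:.8f} {b:.8f} {(nu[0] ** 2 + 1) * BALL_ABS_XI1:.8f}")
```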

Proof of Lemma 3.7

The proof is divided into two steps. First, we prove the claim for \(k=G\); in the second step, we generalize this result to arbitrary kernels k.

Step 1: \(k=G\) By Lemma 3.6 and the convergence assumption (38), we already know

Hence, it is sufficient to show that

Fix \(h_0>0\) and \(N\in \mathbb {N}\) and set \(h:=\frac{1}{N^2}h_0\). We will make use of the following triangle inequality for \(f_{(h)}=f,\, f_h\):

This inequality has been proven in the proof of Lemma 3 in Section 7.1 of [14]. For the convenience of the reader we reproduce the argument here: using the admissibility of \(\chi \) in the form of \(\sum _k \chi _k =1\), we obtain the following identity for any pair \(1\le i,j\le P\) of phases and any points \(x,x',x''\in {[0,\Lambda )^d}\):

Note that the contribution of \(k\in \{i,j\}\) to the sum has a sign:

We now fix \(z,\,w\in \mathbb {R}^d\) and use the above inequality for \(x'=x+z\), \(x''= x+z+w\) so that after multiplication with \(\sigma _{ij}\), summation over \(1\le i,j\le P\) and integration over x, we obtain \(f(z+w) - f(z) - f(w)\) on the left-hand side. Indeed, using the translation invariance for the term appearing in \(f_\zeta (w)\), we have

Using the triangle inequality for the surface tensions, we see that the first right-hand side integral is non-positive:

Indeed, the first and third terms cancel, as do the second and last terms, since the index domains of the sums are symmetric; thus we have (50).
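The triangle inequality (50) for the covariance function can be tested on a discrete configuration. The Python sketch below assumes the form \(f(z)=\sum _{i,j}\sigma _{ij}\int \chi _i(x)\chi _j(x+z)\,dx\) used in [14] (the precise definition in this paper is in a display not reproduced here). It takes a random partition of a periodic one-dimensional grid into three phases and checks \(f(z+w)\le f(z)+f(w)\) for all integer shifts, together with the iterated form \(f(Nz)\le Nf(z)\). Since the argument above is pointwise in x, it applies on the grid as well. A second configuration with \(\sigma _{13}>\sigma _{12}+\sigma _{23}\) shows that the inequality can fail without the triangle inequality. All names are illustrative.

```python
import numpy as np

def covariance(chi, sigma, z):
    """f(z) = sum_{i,j} sigma_ij sum_x chi_i(x) chi_j(x + z) on a periodic 1D grid.

    chi has shape (P, n) with one-hot columns; z is an integer shift; each grid
    cell is given unit volume.
    """
    shifted = np.roll(chi, -z, axis=1)                 # shifted[j, x] = chi[j, x + z]
    return float(np.einsum("ij,ix,jx->", sigma, chi, shifted))

rng = np.random.default_rng(3)
P, n = 3, 40
labels = rng.integers(0, P, size=n)                    # random partition into P phases
chi = (labels[None, :] == np.arange(P)[:, None]).astype(float)
sigma = np.array([[0.0, 1.0, 1.5],                     # satisfies the triangle inequality
                  [1.0, 0.0, 1.0],
                  [1.5, 1.0, 0.0]])

f = np.array([covariance(chi, sigma, z) for z in range(n)])
worst = max(f[(z + w) % n] - f[z] - f[w] for z in range(n) for w in range(n))
print("largest value of f(z+w) - f(z) - f(w):", worst)    # <= 0 up to rounding

# Without the triangle inequality: phases 0 and 2 separated by thin layers of phase 1.
k = 5
bad_labels = np.array([0] * k + [1] + [2] * k + [1])
bad_chi = (bad_labels[None, :] == np.arange(P)[:, None]).astype(float)
sigma_bad = np.array([[0.0, 1.0, 3.0],                 # sigma_02 > sigma_01 + sigma_12
                      [1.0, 0.0, 1.0],
                      [3.0, 1.0, 0.0]])
f1, f2 = covariance(bad_chi, sigma_bad, 1), covariance(bad_chi, sigma_bad, 2)
print(f1, f2)                                          # 4.0 10.0, so f(2) > 2 f(1)
```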

By iterating the triangle inequality (50) for \(f_{(h)}=f,\, f_h\) we have

Hence, by the definition of h,

Therefore, using (51) for \(f_h\), the subadditivity of \(u \mapsto u_+\) and finally (51) for f, we obtain

Integrating w. r. t. the positive measure \(G(z)\,dz\) yields

Given \(\delta >0\), by Lemma 3.6 we may first choose \(h_0>0\) such that for all \(0<h<h_0\):

We note that we may now choose \(N\in \mathbb {N}\) so large that for all \(0<h<\frac{1}{N^2}h_0\):

Indeed, using the triangle inequality and translation invariance we have

which tends to zero as \(h\rightarrow 0\) by Lebesgue’s dominated convergence theorem and (37). Hence also the second term on the right-hand side of (52) is small:

Step 2: \(k=p\,G\) Fix \(\epsilon >0\). Since G is exponentially decaying, we can find a number \(M= M(\epsilon )< \infty \) such that

Hence we can split the integral into two parts. On the one hand, using (40) for \(k=G\),

and on the other hand, using (53) and the approximate monotonicity in Lemma 2.10,

By the convergence assumption (38) and the consistency, cf. Lemma 2.11, we can take the limit \(h\rightarrow 0\) on the right-hand side and obtain

Since the left-hand side does not depend on \(\epsilon >0\), this implies (40). \(\square \)

4 Dissipation functional and velocity

As for any minimizing movements scheme, the time derivative of the solution should arise from the metric term in the minimization scheme. For the minimizing movements scheme of our interfacial motion, the time derivative is the normal velocity. The goal of this section, which is the core of the paper, is to compare the first variation of the dissipation functional to the normal velocity.

4.1 Idea of the proof

Let us first give an idea of the proof in a simplified setting with only two phases, a constant test vector field \(\xi \) and no localization. Then the first variation (33) of the metric term reads

Using the distributional equation \(\nabla \chi \cdot \xi = \nabla \cdot (\chi \xi ) - \left( \nabla \cdot \xi \right) \chi \), this is equal to

as \(h\rightarrow 0\). We will prove this in Lemma 4.7. Since \(\partial ^{-h}_t \chi ^h = \frac{\chi ^n-\chi ^{n-1}}{h} \rightharpoonup V \left| \nabla \chi \right| dt\) and \(\sqrt{h}\nabla G_h *\chi ^n \approx c_0 \nu \) hold only in a weak sense, we cannot pass to the limit directly. Our strategy is to freeze the normal and to control

by the excess

where \(\chi ^*= \mathbf {1}_{\{x\cdot \nu ^*>\lambda \}}\) is a half space in direction \(\nu ^*\). By the convergence assumption, \(\varepsilon ^2\) converges to

as \(h\rightarrow 0\), which is small by De Giorgi’s structure theorem, at least after localization in space and time: sets of finite perimeter have (approximate) tangent planes almost everywhere. To keep the paper self-contained, we will prove this application of De Giorgi’s result in Sect. 5.

The main difficulty in controlling (54) lies in finding good bounds on

For the sake of simplicity we set \(E_0 =T= \Lambda =1\) and write \(\chi \) instead of \(\chi ^h\) in the following. In Sect. 2 we have seen the bound

For this, we used the energy-dissipation estimate (10) to bound the dissipation

and Jensen’s inequality gave us control over the function

by the fudge factor \(\alpha \) appearing in the definition of the mesoscopic time scale \(\tau =\alpha \sqrt{h}\):

This estimate is the reason for the slight abuse of notation: we call the function in (56) \(\alpha ^2(t)\) in order to keep the relation (57) between the two quantities in mind. In the following we always carry along the argument t of the function \(\alpha ^2(t)\) to distinguish it from the constant \(\alpha ^2\). Writing \(\chi ^\tau \) as shorthand for \(\chi (\, \cdot \,+\tau )\), we have shown in the proof of Lemma 2.5 that (55) holds in the more precise form of

In this section we will derive the following more subtle bound:

While the argument for (55) was based on

we now start from the thresholding scheme:

We will use an elementary one-dimensional estimate, Lemma 4.2 (cf. Corollary 4.3 for its rescaled version), in direction \(\nu ^*= e_1\) (w. l. o. g.) and integrate transversally to obtain

The first right-hand side term measures the monotonicity of the phase function u in normal direction in the transition zone \(\{\frac{1}{3} \le u \le \frac{2}{3}\}\). It is clear that this term vanishes for \(\chi ^{-h} = \chi ^*\), provided the universal constant \(\overline{c}>0\) is sufficiently small. In Lemma 4.4 we will indeed bound this term by the excess

at the previous time step. Compared to the first approach, which yielded (58) and whose limiting factor is that the first right-hand side term is only \(O(\sqrt{h})\), this second approach yields the improvement

for an arbitrary (small) parameter \(s>0\). We now show how to use the bound (61) to estimate (54). First, in Lemma 4.7, by freezing time for \(\chi \) on the mesoscopic time scale \(\tau = \alpha \sqrt{h}\) and using a telescoping sum for the first term \(\partial _t^h \chi \), we will show that

By (61) the error term is controlled by

by choosing \(s\sim \alpha ^{\frac{2}{3}}\). Second, in Lemma 4.8 we will show how to use the algebraic relation \((\chi ^\tau -\chi )(\chi ^\tau + \chi ) = \chi ^\tau -\chi \) for the product \((\chi ^\tau -\chi )\sqrt{h}\nabla G_h*(\chi ^\tau + \chi ) \) so that we can rewrite the right-hand side of (62) as

for some kernel k. Third, in Lemma 4.9 we will control the first error term by using its quadratic structure and the estimate (61) before the transversal integration in \(x'\):

by choosing \(\tilde{s} \sim \alpha ^{\frac{2}{3}}\) and \(s \sim \alpha ^{\frac{4}{9}}\). The values of the exponents of \(\alpha \) in (63) and (65) play no role and can easily be improved. We only need the extra terms, here \(\alpha ^{\frac{1}{3}}\) and \(\alpha ^{\frac{1}{9}}\), to be o(1) as \(\alpha \rightarrow 0\); the prefactor of the excess \(\varepsilon ^2\), here \(\frac{1}{\alpha }\), may be large. Indeed, after sending \(h\rightarrow 0\) we obtain the error \(\frac{1}{\alpha }\mathscr {E}^2+ \alpha ^{\frac{1}{9}}\). We handle this term in Sect. 5 by first sending the fineness of the localization to zero, so that \(\mathscr {E}^2\) vanishes, and then sending \(\alpha \rightarrow 0\).
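The algebraic relation used in the second step of this outline relies only on the fact that \(\chi \) and \(\chi ^\tau \) take values in \(\{0,1\}\), so that \(\chi ^2=\chi \) and \((\chi ^\tau )^2=\chi ^\tau \):

\[
(\chi ^\tau -\chi )(\chi ^\tau +\chi ) = (\chi ^\tau )^2-\chi ^2 = \chi ^\tau -\chi .
\]

Equivalently, \((\chi ^\tau -\chi )(\chi ^\tau +\chi -1)=0\): wherever \(\chi ^\tau \ne \chi \), exactly one of the two values equals 1, so \(\chi ^\tau +\chi =1\) there.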

In the following we make the above steps rigorous and give a full proof in the multi-phase case. We first state the main result, Proposition 4.1, and then explain the tools more carefully in the subsequent lemmas. We begin with the two-phase case and present the one-dimensional estimate (60) in Lemma 4.2, its rescaled and localized version in Corollary 4.3, and the estimate for the error term in Lemma 4.4. We then state the corresponding results for the multi-phase case in Lemma 4.5 and Corollary 4.6. These estimates are the core of the proof of Proposition 4.1 and use the explicit structure of the scheme. Note that they use the two steps of the scheme, the convolution step (1) and the thresholding step (2), in a well-separated way: the one-dimensional estimate, Lemma 4.5, analyzes the thresholding step (2), whereas Corollary 4.6 analyzes the convolution step (1) to bring the (transversally integrated) error term into the form of the excess \(\varepsilon ^2\) at the previous time step.
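The separation of the two steps can be made concrete in a short sketch of one step of the multi-phase scheme on a periodic grid, following the definitions used in Lemmas 4.4 and 4.5: \(u_j=G_h*\chi _j\), \(\phi _i=\sum _j\sigma _{ij}u_j\), and each point is assigned to the phase with the smallest \(\phi _i\). The following are assumptions of the sketch, not taken from the paper: \(G_h\) is the heat kernel at time h, applied via the FFT on the torus \([0,1)^2\) (the paper works on \([0,\Lambda )^d\)); exact ties are broken by the lowest index; and the function names are hypothetical.

```python
import numpy as np

def heat_convolve(f, h, length=1.0):
    """Periodic convolution G_h * f on the torus [0, length)^d via the FFT.

    Assumption: G_h is the heat kernel at time h, whose Fourier multiplier is
    exp(-4 pi^2 |k|^2 h) for frequencies k in cycles per unit length.
    The grid must have the same number of points in every direction.
    """
    n = f.shape[0]
    freqs = np.fft.fftfreq(n, d=length / n)
    grids = np.meshgrid(*([freqs] * f.ndim), indexing="ij")
    k2 = sum(g ** 2 for g in grids)
    return np.real(np.fft.ifftn(np.fft.fftn(f) * np.exp(-4.0 * np.pi ** 2 * k2 * h)))

def thresholding_step(chi, sigma, h, length=1.0):
    """One multi-phase thresholding step (hypothetical helper).

    chi:   array of shape (P, n, ..., n) with 0/1 entries summing to 1 pointwise.
    sigma: symmetric (P, P) array of surface tensions with zero diagonal.
    """
    # Convolution step: u_j = G_h * chi_j for each phase j.
    u = np.stack([heat_convolve(c, h, length) for c in chi])
    # phi_i = sum_j sigma_ij u_j
    phi = np.tensordot(sigma, u, axes=(1, 0))
    # Thresholding step: each point joins the phase with the smallest phi_i
    # (np.argmin breaks exact ties by the lowest index).
    winner = np.argmin(phi, axis=0)
    return np.stack([(winner == i).astype(float) for i in range(chi.shape[0])])

# Usage: two phases with sigma_12 = 1 on a 128 x 128 grid of the unit torus.
n = 128
x = (np.arange(n) + 0.5) / n
X, Y = np.meshgrid(x, x, indexing="ij")
sigma = np.array([[0.0, 1.0], [1.0, 0.0]])

disk = ((X - 0.5) ** 2 + (Y - 0.5) ** 2 < 0.25 ** 2).astype(float)
chi = np.stack([disk, 1.0 - disk])
areas = [chi[0].mean()]
for _ in range(3):
    chi = thresholding_step(chi, sigma, h=0.005)
    areas.append(chi[0].mean())   # area of phase 1 after each step

stripe = ((X >= 0.25) & (X < 0.75)).astype(float)
stripe_chi = np.stack([stripe, 1.0 - stripe])
stripe_after = thresholding_step(stripe_chi, sigma, h=0.005)   # flat interfaces
```

With \(\sigma _{12}=1\), the rule keeps phase 1 exactly where \(G_h*\chi _1>\frac{1}{2}\), so the disk shrinks as under curvature flow while the flat stripe is stationary. The FFT is used only because it is a convenient way to discretize the periodic convolution.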

4.2 Results

The main result of this section is the following proposition. It will be applied on small time intervals in Sect. 5, where we control the limiting error terms appearing here by soft arguments from geometric measure theory. In view of the definition of \(\mathscr {E}^2\) below, the proposition assumes that \(\chi _3,\ldots ,\chi _P\) are the minority phases in the space–time cylinder \((0,T)\times B_r\) (Fig. 4); likewise, it assumes that the normal between \(\chi _1\) and \(\chi _2\) is close to the first unit vector \(e_1\). Neither assumption is a restriction: on the one hand, we can relabel the phases if we want to treat another pair of phases as the majority phases; on the other hand, by rotational invariance we may assume that \(e_1\) is the approximate normal.

Proposition 4.1

For any \(\alpha \ll 1\), \(T>0\), \(\xi \in C_0^\infty ((0,T)\times B_r,\mathbb {R}^d)\) and any radially symmetric, radially non-increasing cut-off \(\eta \in C_0^\infty (B_{2r})\) for \(B_r\) in \(B_{2r}\) with \(\left| \nabla \eta \right| \lesssim \frac{1}{r}\) and \(\left| \nabla ^2 \eta \right| \lesssim \frac{1}{r^2}\), we have

Here we use the notation

where the infimum is taken over all half spaces \(\chi ^*= \mathbf {1}_{\{ x_1 > \lambda \}}\) in direction \(e_1\).

The exponents of \(\alpha \) in this statement are of no importance and can easily be improved. It is only relevant that the two extra error terms, i. e. \(r^{d-1}T\) and \(\iint \eta \, d \mu \), come with prefactors that vanish as \(\alpha \rightarrow 0\). In Sect. 5 we will show that, even after summation, the excess vanishes as the fineness of the localization, i. e. the radius r of the ball in the statement of Proposition 4.1, tends to zero. There we will first take the limit \(r\rightarrow 0\) and then \(\alpha \rightarrow 0\) to prove Theorem 1.3. The prefactor of the excess, here \(\frac{1}{\alpha ^2}\), differs from the one in the two-phase case since the one-dimensional estimate is slightly different in the multi-phase case.

Let us comment on the structure of \(\mathscr {E}^2\). The first term, describing the surface area of phases \(3,\ldots ,P\) inside the ball \(B_{2r}\), will be small in the application when \(\chi _3,\ldots ,\chi _P\) are indeed the minority phases. The second term, sometimes called the excess energy, describes how far \(\chi _1\) and \(\chi _2\) are from being half spaces in direction \(e_1\) and \(-e_1\), respectively. The terms comparing the surface energy inside \(B_{2r}\) do not see the orientation of the normal, whereas the bulk terms measuring the \(L^1\)-distance inside the ball \(B_{2r}\) do.
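The role of the bulk terms can be seen in a toy computation. Take \(\chi =\mathbf {1}_{\{x_1>0\}}\) and \(\chi =\mathbf {1}_{\{x_1<0\}}\): both have the same interface \(\{x_1=0\}\), hence the same surface area in the ball, but opposite orientations. The sketch below computes \(\inf _\lambda \) of the area of \(\{\chi \ne \mathbf {1}_{\{x_1>\lambda \}}\}\) in the unit disk. It is only an illustration under stated assumptions: the weight is the indicator of the unit disk rather than the cut-off \(\eta \), only the \(L^1\) part of the excess is computed, and the function name is hypothetical.

```python
def bulk_excess(chi, n=80, n_lambda=81):
    """Toy bulk term: min over lambda of |{x in B_1 : chi(x) != 1_{x_1 > lambda}}| in 2D.

    Assumptions: weight = indicator of the unit disk (not eta); only the L^1 part.
    """
    dx = 2.0 / n
    cells = []   # (x_1, chi(x)) for cell midpoints inside the unit disk
    for i in range(n):
        for j in range(n):
            x1, x2 = -1.0 + (i + 0.5) * dx, -1.0 + (j + 0.5) * dx
            if x1 * x1 + x2 * x2 < 1.0:
                cells.append((x1, bool(chi(x1, x2))))
    best = float("inf")
    for k in range(n_lambda):
        lam = -1.0 + 2.0 * k / (n_lambda - 1)   # candidate half spaces {x_1 > lam}
        mismatch = sum(1 for x1, c in cells if c != (x1 > lam))
        best = min(best, mismatch * dx * dx)
    return best

aligned = bulk_excess(lambda x1, x2: x1 > 0)   # same orientation as the half spaces
flipped = bulk_excess(lambda x1, x2: x1 < 0)   # same interface, opposite orientation
```

The aligned configuration has vanishing bulk term, while the flipped one costs about half the area of the disk, \(\frac{\pi }{2}\), even though the surface terms cannot distinguish the two.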

The estimates in Sect. 2 are not sufficient to understand the link between the first variation of the metric term and the normal velocities. For this, we need refined estimates, which we first present for the two-phase case, where only one interface evolves. The main tool of the proof is the following one-dimensional lemma. For two functions \(u,\,\tilde{u}\), it estimates the \(L^1\)-distance between the characteristic functions \(\chi = \mathbf {1}_{\{u\ge \frac{1}{2}\}}\) and \(\tilde{\chi }= \mathbf {1}_{\{\tilde{u}\ge \frac{1}{2}\}}\) in terms of the \(L^2\)-distance between u and \(\tilde{u}\), at the expense of a term that measures the strict monotonicity of one of the two functions. We will apply it in a rescaled version with \(x_1\) being the normal direction.
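The need for a monotonicity term can be seen from linear profiles. The following sketch is an illustration only, not a proof of Lemma 4.2, and the function name is hypothetical. On \(I=[-1,1]\), take \(u(x_1)=\frac{1}{2}+mx_1\) and \(\tilde{u}=u+\delta \). The \(L^2\)-distance between u and \(\tilde{u}\) does not depend on the slope m, while the \(L^1\)-distance between \(\chi \) and \(\tilde{\chi }\) equals \(\delta /m\) and blows up as the profile becomes flat.

```python
def level_set_distances(u, u_tilde, a, b, n=100000):
    """Midpoint-rule values of int_I |chi - chi_tilde| and int_I (u - u_tilde)^2 on I = [a, b].

    chi = 1_{u >= 1/2} and chi_tilde = 1_{u_tilde >= 1/2}, as in Lemma 4.2.
    """
    dx = (b - a) / n
    l1 = 0.0
    l2sq = 0.0
    for k in range(n):
        x = a + (k + 0.5) * dx
        l1 += abs(int(u(x) >= 0.5) - int(u_tilde(x) >= 0.5))
        l2sq += (u(x) - u_tilde(x)) ** 2
    return l1 * dx, l2sq * dx

delta = 0.01
results = {}
for m in (1.0, 0.1):   # slope of the linear profile u(x) = 1/2 + m x
    results[m] = level_set_distances(lambda x: 0.5 + m * x,
                                     lambda x: 0.5 + delta + m * x, -1.0, 1.0)
    print(f"slope {m}: L1 distance {results[m][0]:.4f}, squared L2 distance {results[m][1]:.6f}")
```

Decreasing the slope by a factor of 10 leaves the squared \(L^2\)-distance at \(2\delta ^2\) but multiplies the \(L^1\)-distance by 10, so no bound in terms of \(\int (u-\tilde{u})^2\) alone can hold without information on the slope of u near the level \(\frac{1}{2}\).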

Lemma 4.2

Let \(I\subset \mathbb {R}\) be an interval, let \(u,\, \tilde{u}\in C^{0,1}(I)\), \(\chi := \mathbf {1}_{\{u \ge \frac{1}{2}\}}\) and \(\tilde{\chi }:= \mathbf {1}_{\{\tilde{u} \ge \frac{1}{2}\}}\). Then

for every \(s>0\).

The following modified version of Lemma 4.2 is the estimate one would use in the two-phase case.

Corollary 4.3

Let \(u,\, \tilde{u}\in C^{0,1}(I)\), \(\chi := \mathbf {1}_{\{u \ge \frac{1}{2}\}}\), \(\tilde{\chi }:= \mathbf {1}_{\{\tilde{u} \ge \frac{1}{2}\}}\) and \(\eta \in C_0^\infty (\mathbb {R})\), \(0\le \eta \le 1\) radially non-increasing. Then

for any \(s>0\).

In the previous corollary, it was crucial to control the strict monotonicity of one of the two functions via the term

In the following lemma, we consider the d-dimensional version, i. e. \(dx_1\) replaced by dx, of this term in case of \(u=G_h*\chi \). We show that this term can be controlled in terms of the excess, measuring the energy difference to a half space \(\chi ^*\) in direction \(e_1\).
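For the half space \(\chi ^*=\mathbf {1}_{\{x_1>0\}}\), the function \(u=G_h*\chi ^*\) depends on \(x_1\) only, and \(\partial _1 u\) is the one-dimensional kernel. Assuming, as a convention for this sketch, that \(G_h\) is the heat kernel at time h, we have \(u(x_1)=\frac{1}{2}\big (1+\operatorname{erf}(x_1/(2\sqrt{h}))\big )\). The code computes \(\min \sqrt{h}\,\partial _1 u\) over the transition zone \(\{\frac{1}{3}\le u\le \frac{2}{3}\}\). The minimum equals \(e^{-z^2/2}/\sqrt{4\pi }\approx 0.257\), where \(\Phi (z)=\frac{2}{3}\), independently of h. This is consistent with the statement that the monotonicity term vanishes for a half space once the universal constant \(\overline{c}\) is small enough; the precise form of that term is not reproduced here.

```python
import math
from statistics import NormalDist

def min_scaled_slope(h, n=2001):
    """min of sqrt(h) * d/dx1 (G_h * 1_{x1 > 0}) over the zone {1/3 <= u <= 2/3}.

    Assumption: G_h is the heat kernel at time h, so the 1D profile is
    u(x1) = (1 + erf(x1 / (2 sqrt(h)))) / 2 and its derivative is the 1D heat kernel.
    """
    z = NormalDist().inv_cdf(2.0 / 3.0)    # u(x1) = 2/3  <=>  x1 = z * sqrt(2h)
    x_star = z * math.sqrt(2.0 * h)        # by symmetry the zone is |x1| <= x_star
    best = math.inf
    for k in range(n):
        x1 = -x_star + 2.0 * x_star * k / (n - 1)
        slope = math.exp(-x1 * x1 / (4.0 * h)) / math.sqrt(4.0 * math.pi * h)
        best = min(best, math.sqrt(h) * slope)
    return best

values = {h: min_scaled_slope(h) for h in (1e-2, 1e-4, 1e-6)}
```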

Lemma 4.4

Let \(\chi :{[0,\Lambda )^d}\rightarrow \{0,1\}\), \(\chi ^*=\mathbf {1}_{\{x_1>\lambda \}}\) a half space in direction \(e_1\) and \(\eta \in C_0^\infty (B_{2r})\) a cut-off of \(B_r\) in \(B_{2r}\) with \(\left| \nabla \eta \right| \lesssim \frac{1}{r}\) and \(\left| \nabla ^2 \eta \right| \lesssim \frac{1}{r^2}\). Then there exists a universal constant \(\overline{c}>0\) such that

where \(\varepsilon ^2\) is defined via

and the integral on the left-hand side of (68), with the two cases \(<,+\) and \(>,-\), is shorthand for the sum of the two corresponding integrals.

In our application, instead of Lemma 4.2 or Corollary 4.3, we use the following lemma, which is valid for any number of phases with arbitrary surface tensions. Nevertheless, the core of the proof is already contained in the two-phase estimates above. As in Proposition 4.1, we assume that \(\chi _1\) and \(\chi _2\) are the majority phases and that \(e_1\) is the approximate normal to \(\Omega _1=\{\chi _1=1\}\).

Lemma 4.5

Let \(I\subset \mathbb {R}\) be an interval, \(h>0\), \(\eta \in C_0^\infty (\mathbb {R})\), \(0\le \eta \le 1\) radially non-increasing and \(u,\,\tilde{u} :I \rightarrow \mathbb {R}^{P}\) be two smooth maps into the standard simplex \(\{U_i\ge 0,\,\sum _i U_i = 1\} \subset \mathbb {R}^P\). We define \(\phi _i := \sum _j \sigma _{ij} u_j\), \(\tilde{\phi }_i := \sum _j \sigma _{ij} \tilde{u}_j\), \(\chi _i := \mathbf {1}_{\{\phi _i < \phi _j\,\forall j\ne i\}}\) and \(\tilde{\chi }_i := \mathbf {1}_{\{\tilde{\phi }_i < \tilde{\phi }_j\,\forall j\ne i\}}\). Then

for any \(s\ll 1\).
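The pointwise rule in Lemma 4.5 reduces to familiar cases. For equal surface tensions \(\sigma _{ij}=1-\delta _{ij}\), one has \(\phi _i=\sum _{j\ne i}u_j=1-u_i\) on the simplex, so \(\chi _i=1\) exactly where \(u_i\) is the largest component. For two phases with \(\sigma _{12}=1\), the rule becomes \(\chi _1=\mathbf {1}_{\{u_1>\frac{1}{2}\}}\) off the tie set. The sketch checks both reductions on random points of the simplex. Breaking ties by the lowest index is an assumption of the sketch, since the strict inequalities in Lemma 4.5 leave ties unassigned. The helper names are hypothetical, and Python index 0 stands for phase 1.

```python
import random

def threshold_point(u, sigma):
    """Index i minimizing phi_i = sum_j sigma[i][j] * u[j]; ties go to the lowest index."""
    P = len(u)
    phi = [sum(sigma[i][j] * u[j] for j in range(P)) for i in range(P)]
    return min(range(P), key=lambda i: phi[i])

def random_simplex_point(P, rng):
    """Uniform sample from the standard simplex via normalized exponential variables."""
    w = [rng.expovariate(1.0) for _ in range(P)]
    total = sum(w)
    return [x / total for x in w]

rng = random.Random(0)
P = 4
equal = [[0.0 if i == j else 1.0 for j in range(P)] for i in range(P)]
two = [[0.0, 1.0], [1.0, 0.0]]

# Equal tensions: phi_i = 1 - u_i, so the winner should be the largest component u_i.
mismatches_equal = 0
for _ in range(1000):
    u = random_simplex_point(P, rng)
    if threshold_point(u, equal) != max(range(P), key=lambda i: u[i]):
        mismatches_equal += 1

# Two phases with sigma_12 = 1: phase 1 wins exactly where u_1 > 1/2 (off the tie set).
mismatches_two = 0
for _ in range(1000):
    u = random_simplex_point(2, rng)
    if (threshold_point(u, two) == 0) != (u[0] > 0.5):
        mismatches_two += 1
```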

As Lemma 4.4 can be used to estimate the integrated version of the error in Corollary 4.3 against the excess, the following corollary shows that the integrated version of the corresponding error term in the multi-phase version, Lemma 4.5, can be estimated against a multi-phase version of the excess \(\varepsilon ^2\).

Corollary 4.6

Let \(\chi \) be admissible, \(\chi ^*=\mathbf {1}_{\{x_1>\lambda \}}\) a half space in direction \(e_1\) and \(\eta \in C_0^\infty (B_{2r})\) a cut-off of \(B_r\) in \(B_{2r}\) with \(\left| \nabla \eta \right| \lesssim \frac{1}{r}\) and \(\left| \nabla ^2 \eta \right| \lesssim \frac{1}{r^2}\). Then there exists a universal constant \(\overline{c}>0\) such that for \(u=G_h*\chi \)

where the functional \(\varepsilon ^2(\chi )\) is defined via

and the functional \(F_h\) is the following localized version of the approximate energy in the two-phase case

With these tools we can now turn to the rigorous proof of (62)–(65) in the following lemmas. In the next two lemmas, we approximate the first variation of the metric term by an expression that makes the normal velocity appear. The main idea is to work, as for Lemma 2.5, on a mesoscopic time scale \(\tau \sim \sqrt{h}\), introducing a fudge factor \(\alpha \), cf. Remark 1.5. The first lemma shows that we may coarsen the first variation from the microscopic time scale h to the mesoscopic time scale \(\alpha \sqrt{h}\) and is therefore the rigorous analogue of (62). It also shows that we may pull the test vector field \(\xi \) out of the convolution.
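The coarsening rests on a telescoping identity. Averaging the microscopic difference quotients over one block of K consecutive steps, with \(\tau =Kh\), gives exactly the mesoscopic difference quotient:
\(\frac{1}{K}\sum _{n=K(l-1)+1}^{Kl}\frac{\chi ^n-\chi ^{n-1}}{h}=\frac{\chi ^{Kl}-\chi ^{K(l-1)}}{\tau }\).
The sketch checks this on a random 0/1 sequence, which plays the role of the values of \(\chi \) at a single point. The rounding \(K=\lceil \alpha /\sqrt{h}\rceil \), which makes \(\tau \approx \alpha \sqrt{h}\), is a hypothetical choice for this illustration, and the helper names are hypothetical as well.

```python
import math
import random

def micro_steps_per_block(alpha, h):
    """Hypothetical rounding: K = ceil(alpha / sqrt(h)), so tau = K * h is about alpha * sqrt(h)."""
    return math.ceil(alpha / math.sqrt(h))

def averaged_micro_derivative(chi, h, K, l):
    """(1/K) * sum of (chi^n - chi^{n-1}) / h over the microscopic steps of block l."""
    return sum((chi[n] - chi[n - 1]) / h for n in range(K * (l - 1) + 1, K * l + 1)) / K

def mesoscopic_quotient(chi, h, K, l):
    """(chi^{Kl} - chi^{K(l-1)}) / tau with tau = K * h."""
    return (chi[K * l] - chi[K * (l - 1)]) / (K * h)

alpha, h = 0.1, 1e-4
K = micro_steps_per_block(alpha, h)
rng = random.Random(1)
chi = [rng.randint(0, 1) for _ in range(5 * K + 1)]   # chi^n at one point, n = 0, ..., 5K
gaps = [abs(averaged_micro_derivative(chi, h, K, l) - mesoscopic_quotient(chi, h, K, l))
        for l in range(1, 6)]
```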

Lemma 4.7

Let \(\xi \in C_0^\infty ((0,T)\times B_r,\mathbb {R}^d)\). Then

in the sense that the error is controlled by

where \(\eta \in C_0^\infty (B_{2r})\) is a radially symmetric, radially non-increasing cut-off for \(B_r\) in \(B_{2r}\) with \(\left| \nabla \eta \right| \lesssim \frac{1}{r}\) and the functional \(\varepsilon ^2(\chi )\) is defined in Corollary 4.6.

While the first lemma made the mesoscopic time derivative \(\frac{1}{\tau }\big ( \chi _i^{\textit{K}l} - \chi _i^{\textit{K}(l-1)}\big )\) appear, the upcoming second lemma makes the approximate normal, here \(e_1\), appear. This is the analogue of (64).

Lemma 4.8