Abstract

We investigate DC (Difference of Convex functions) programming and DCA (DC Algorithm) for a class of online learning techniques, namely prediction with expert advice, in which the learner’s prediction is based on the weighted average of the experts’ predictions. The problem of predicting the experts’ weights is formulated as a DC program, for which an online version of DCA is investigated. Two variants of online DCA-based schemes, called approximate and complete, are designed, and their regrets are proved to be either logarithmic or sublinear. The four proposed algorithms for online prediction with expert advice are then applied to online binary classification. Experiments on various benchmark datasets show that they perform well and outperform three standard algorithms for online prediction with expert advice: the well-known weighted majority algorithm and two online convex optimization algorithms.

References

Alexander L, Das SR, Ives Z, Jagadish H, Monteleoni C (2017) Research challenges in financial data modeling and analysis. Big Data 5(3):177–188

Angluin D (1988) Queries and concept learning. Mach Learn 2(4):319–342

Azoury K, Warmuth MK (2001) Relative loss bounds for on-line density estimation with the exponential family of distributions. Mach Learn 43(3):211–246

Barzdin JM, Freivald RV (1972) On the prediction of general recursive functions. Sov Math Doklady 13:1224–1228

Cesa-Bianchi N (1999) Analysis of two gradient-based algorithms for on-line regression. J Comput Syst Sci 59(3):392–411

Cesa-Bianchi N, Freund Y, Haussler D, Helmbold DP, Schapire RE, Warmuth MK (1997) How to use expert advice. J ACM 44(3):427–485

Cesa-Bianchi N, Lugosi G (2003) Potential-based algorithms in on-line prediction and game theory. Mach Learn 51(3):239–261

Cesa-Bianchi N, Lugosi G (2006) Prediction, learning, and games. Cambridge University Press, New York

Cesa-Bianchi N, Mansour Y, Stoltz G (2007) Improved second-order bounds for prediction with expert advice. Mach Learn 66(2):321–352

Chung TH (1994) Approximate methods for sequential decision making using expert advice. In: Proceedings of the seventh annual conference on computational learning theory, COLT ’94, pp 183–189. ACM, New York, NY, USA

Collobert R, Sinz F, Weston J, Bottou L (2006) Large scale transductive SVMs. J Mach Learn Res 7:1687–1712

Collobert R, Sinz F, Weston J, Bottou L (2006) Trading convexity for scalability. In: Proceedings of the 23rd international conference on machine learning, ICML ’06, pp 201–208. New York, NY, USA

Conover WJ (1999) Practical nonparametric statistics, 3rd edn. Wiley, Hoboken

Crammer K, Dekel O, Keshet J, Shalev-Shwartz S, Singer Y (2006) Online passive-aggressive algorithms. J Mach Learn Res 7:551–585

Dadkhahi H, Shanmugam K, Rios J, Das P, Hoffman SC, Loeffler TD, Sankaranarayanan S (2020) Combinatorial black-box optimization with expert advice. In: Proceedings of the 26th ACM SIGKDD international conference on knowledge discovery and data mining, pp 1918–1927. Association for Computing Machinery, New York, NY, USA

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

DeSantis A, Markowsky G, Wegman MN (1988) Learning probabilistic prediction functions. In: Proceedings of the first annual workshop on computational learning theory, COLT ’88, pp 312–328. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA

Devaine M, Gaillard P, Goude Y, Stoltz G (2013) Forecasting electricity consumption by aggregating specialized experts. Mach Learn 90(2):231–260

Freund Y, Schapire RE (1997) A decision-theoretic generalization of on-line learning and an application to boosting. J Comput Syst Sci 55(1):119–139

Friedman M (1937) The use of ranks to avoid the assumption of normality implicit in the analysis of variance. J Am Stat Assoc 32(200):675–701

Friedman M (1940) A comparison of alternative tests of significance for the problem of \(m\) rankings. Ann Math Stat 11(1):86–92

García S, Herrera F (2009) An extension on “statistical comparisons of classifiers over multiple data sets” for all pairwise comparisons. J Mach Learn Res 9:2677–2694

Gentile C (2002) A new approximate maximal margin classification algorithm. J Mach Learn Res 2:213–242

Gentile C (2003) The robustness of the \(p\)-norm algorithms. Mach Learn 53(3):265–299

Gollapudi S, Panigrahi D (2019) Online algorithms for rent-or-buy with expert advice. In: Chaudhuri K, Salakhutdinov R (eds) Proceedings of the 36th International Conference on Machine Learning, vol. 97, pp 2319–2327. PMLR, Long Beach, California, USA

Gramacy RB, Warmuth MK, Brandt SA, Ari I (2003) Adaptive caching by refetching. In: Becker S, Thrun S, Obermayer K (eds) Advances in neural information processing systems, vol 15. MIT Press, Cambridge, pp 1489–1496

Grove AJ, Littlestone N, Schuurmans D (2001) General convergence results for linear discriminant updates. Mach Learn 43(3):173–210

Hao S, Hu P, Zhao P, Hoi SCH, Miao C (2018) Online active learning with expert advice. ACM Trans Knowl Discov Data 12(5):1–22

Haussler D, Kivinen J, Warmuth MK (1995) Tight worst-case loss bounds for predicting with expert advice. In: Vitányi P (ed) Computational learning theory, Lecture notes in computer science, vol 904. Springer, Berlin, pp 69–83

Hazan E (2016) Introduction to online convex optimization. Found Trends Optim 2(3–4):157–325

Ho VT, Le Thi HA, Bui DC (2016) Online DC optimization for online binary linear classification. In: Nguyen TN, Trawiński B, Fujita H, Hong TP (eds) Intelligent information and database systems: 8th Asian conference, ACIIDS 2016, proceedings, Part II. Springer, Berlin, pp 661–670

Hoi SCH, Wang J, Zhao P (2014) LIBOL: a library for online learning algorithms. J Mach Learn Res 15(1):495–499

Jamil W, Bouchachia A (2019) Model selection in online learning for times series forecasting. In: Lotfi A, Bouchachia H, Gegov A, Langensiepen C, McGinnity M (eds) Advances in computational intelligence systems. Springer, Cham, pp 83–95

Kivinen J, Warmuth MK (1997) Exponentiated gradient versus gradient descent for linear predictors. Inf Comput 132(1):1–63

Kivinen J, Warmuth MK (2001) Relative loss bounds for multidimensional regression problems. Mach Learn 45(3):301–329

Kveton B, Yu JY, Theocharous G, Mannor S (2008) Online learning with expert advice and finite-horizon constraints. In: Proceedings of the twenty-third AAAI conference on artificial intelligence, AAAI 2008, pp 331–336. AAAI Press

Le Thi HA (1994) Analyse numérique des algorithmes de l’optimisation D.C. Approches locale et globale. Codes et simulations numériques en grande dimension. Applications. Ph.D. thesis, University of Rouen, France

Le Thi HA (2020) DC programming and DCA for supply chain and production management: state-of-the-art models and methods. Int J Prod Res 58(20):6078–6114

Le Thi HA, Ho VT, Pham Dinh T (2019) A unified DC programming framework and efficient DCA based approaches for large scale batch reinforcement learning. J Glob Optim 73(2):279–310

Le Thi HA, Le HM, Phan DN, Tran B (2020) Stochastic DCA for minimizing a large sum of DC functions with application to multi-class logistic regression. Neural Netw 132:220–231

Le Thi HA, Moeini M, Pham Dinh T (2009) Portfolio selection under downside risk measures and cardinality constraints based on DC programming and DCA. Comput Manag Sci 6(4):459–475

Le Thi HA, Pham Dinh T (2001) DC programming approach to the multidimensional scaling problem. In: Migdalas A, Pardalos PM, Värbrand P (eds) From local to global optimization. Springer, Boston, pp 231–276

Le Thi HA, Pham Dinh T (2003) Large-scale molecular optimization from distance matrices by a D.C. optimization approach. SIAM J Optim 14(1):77–114

Le Thi HA, Pham Dinh T (2005) The DC (difference of convex functions) programming and DCA revisited with DC models of real world nonconvex optimization problems. Ann Oper Res 133(1–4):23–48

Le Thi HA, Pham Dinh T (2014) DC programming in communication systems: challenging problems and methods. Vietnam J Comput Sci 1(1):15–28

Le Thi HA, Pham Dinh T (2018) DC programming and DCA: thirty years of developments. Math Program Spec Issue DC Program Theory Algorithms Appl 169(1):5–68

Li Y, Long P (2002) The relaxed online maximum margin algorithm. Mach Learn 46(1–3):361–387

Littlestone N, Warmuth MK (1994) The weighted majority algorithm. Inf Comput 108(2):212–261

Nayman N, Noy A, Ridnik T, Friedman I, Jin R, Zelnik-Manor L (2019) XNAS: neural architecture search with expert advice. In: Advances in neural information processing systems 32: annual conference on neural information processing systems 2019, NeurIPS 2019, pp 1975–1985

Novikoff AB (1963) On convergence proofs for perceptrons. In: Proceedings of the symposium on the mathematical theory of automata 12:615–622

Ong CS, Le Thi HA (2013) Learning sparse classifiers with difference of convex functions algorithms. Optim Methods Softw 28(4):830–854

Pereira DG, Afonso A, Medeiros FM (2014) Overview of Friedman’s test and post-hoc analysis. Commun Stat Simul Comput 44(10):2636–2653

Pham Dinh T, Le HM, Le Thi HA, Lauer F (2014) A difference of convex functions algorithm for switched linear regression. IEEE Trans Autom Control 59(8):2277–2282

Pham Dinh T, Le Thi HA (1997) Convex analysis approach to D.C. programming: theory, algorithm and applications. Acta Math Vietnam 22(1):289–355

Pham Dinh T, Le Thi HA (1998) DC optimization algorithms for solving the trust region subproblem. SIAM J Optim 8(2):476–505

Pham Dinh T, Le Thi HA (2014) Recent advances in DC programming and DCA. In: Nguyen NT, Le Thi HA (eds) Transactions on computational intelligence XIII, vol 8342. Springer, Berlin, pp 1–37

Rosenblatt F (1958) The perceptron: a probabilistic model for information storage and organization in the brain. Psychol Rev 65(6):386–408

Shalev-Shwartz S (2007) Online learning: theory, algorithms, and applications. Ph.D. thesis, The Hebrew University of Jerusalem

Shalev-Shwartz S (2012) Online learning and online convex optimization. Found Trends Mach Learn 4(2):107–194

Shor NZ (1985) Minimization methods for non-differentiable functions, 1st edn. Springer Series in Computational Mathematics 3. Springer, Berlin

Valadier M (1969) Sous-différentiels d’une borne supérieure et d’une somme continue de fonctions convexes. CR Acad Sci Paris Sér AB 268:A39–A42

Van Der Malsburg C (1986) Frank Rosenblatt: principles of neurodynamics: perceptrons and the theory of brain mechanisms. In: Palm G, Aertsen A (eds) Brain theory. Springer, Berlin, pp 245–248

Vovk V (1990) Aggregating strategies. In: Proceedings of the third annual workshop on computational learning theory. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, pp 371–386

Vovk V (1998) A game of prediction with expert advice. J Comput Syst Sci 56(2):153–173

Wang W, Carreira-Perpiñán MÁ (2013) Projection onto the probability simplex: an efficient algorithm with a simple proof, and an application. arXiv:1309.1541

Wilcoxon F (1945) Individual comparisons by ranking methods. Biometr Bull 1(6):80–83

Wu P, Hoi SCH, Zhao P, Miao C, Liu Z (2016) Online multi-modal distance metric learning with application to image retrieval. IEEE Trans Knowl Data Eng 28(2):454–467

Yaroshinsky R, El-Yaniv R, Seiden SS (2004) How to better use expert advice. Mach Learn 55(3):271–309

Zinkevich M (2003) Online convex programming and generalized infinitesimal gradient ascent. In: Fawcett T, Mishra N (eds) Proceedings of the 20th international conference on machine learning (ICML-03), pp 928–936

Acknowledgements

This research is funded by the Foundation for Science and Technology Development of Ton Duc Thang University (FOSTECT), website: http://fostect.tdtu.edu.vn, under Grant FOSTECT.2017.BR.10.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Appendices

Appendix 1: DC decomposition of \({f}^{(i)}_t\)

We present the following proposition to get a DC decomposition of \({f}^{(i)}_t\).

Proposition 3

Let \(a > 0\), b and c be constants, and let x be a given vector. The function

is a DC function with DC components

Proof

It is known from [54] that if \(f = g - h\) is a DC function, then the function

is DC too. We see that the function

is DC. Therefore, applying (23) for \(g = b\langle w, x \rangle + c\) and \(h = \max \{0,b\langle w, x \rangle + c - a \}\), we get immediately a DC decomposition of f, that is,

However, since \(a > 0\), we have

Hence, we complete the proof. \(\square\)
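The decomposition can be checked numerically. The sketch below assumes that the function in Proposition 3 has the form \(f(w) = \max \{0, \min \{a, b\langle w, x \rangle + c\}\}\); this form is inferred from the choice of g and h in the proof and is an assumption, as are the function names. Writing \(y = b\langle w, x \rangle + c\), it verifies that f coincides with the difference of the two convex functions \(\max \{0, y\}\) and \(\max \{0, y - a\}\).

```python
def clipped_affine(y, a):
    """Assumed form of f: max{0, min{a, y}}, where y stands for b*<w, x> + c."""
    return max(0.0, min(a, y))


def dc_components(y, a):
    """Return (g, h) with g = max{0, y} and h = max{0, y - a}.

    Both are maxima of affine functions of w, hence convex in w.
    """
    return max(0.0, y), max(0.0, y - a)


# Check f = g - h on the three regimes y < 0, 0 <= y <= a and y > a.
a = 1.0
for y in [-2.0, -0.5, 0.0, 0.3, 1.0, 1.7, 5.0]:
    g, h = dc_components(y, a)
    assert abs(clipped_affine(y, a) - (g - h)) < 1e-12
```

The condition \(a > 0\) is what makes \(\max \{y, 0, y - a\}\) collapse to \(\max \{0, y\}\), since \(y - a < y\).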

Appendix 2: Proof of Lemma 1

Proof

First, we observe that when \(\tilde{\mathtt {p}}_{t-1} = 0\) or \(\tau ^{(1)}_{t-1} > \langle w^{t-1}, \tilde{\mathtt {p}}_{t-1} \rangle\) (resp. \(\tau ^{(2)}_{t-1} < \langle w^{t-1}, \tilde{\mathtt {p}}_{t-1} \rangle\)), our algorithms do not update the weight, and thus, the result in Lemma 1 is straightforward. Hence, we need to consider only the cases where, at each step \(t \in \mathcal {M}\),

Since \(u^*\) satisfies (18) and \(\tilde{\mathtt {p}}_t \ne 0\), Assumption 1 (i) is satisfied for DC functions (8). We see that \(\Vert u^* - w^t \Vert _2 \ne 0\) for all t because if there is some step t such that \(u^* = w^t\), then \(\langle u^*,\tilde{\mathtt {p}}_t \rangle = \langle w^t,\tilde{\mathtt {p}}_t \rangle\), which contradicts (18).

Next, we verify Assumptions 1 (ii)–(iv) for the DC functions \(f^{(1)}_t\).

\(\bullet\) Let us define the function \(\overline{g}^{(1)}_t := {g}^{(1)}_t - \langle z^t, \cdot \rangle .\) From (24), we derive

Thus, there exists a positive number \(\alpha \le \min \limits _{t \in \mathcal {M}}{\frac{2 }{ \Vert u^*-w^t\Vert _2^2}}\) such that Assumption 1 (ii) is satisfied.

\(\bullet\) For any \(\beta \ge 0\), we have

Thus, Assumption 1 (iii) is satisfied.

\(\bullet\) Assumption 1 (iv) is also verified with \(\gamma \le \min \limits _{t \in \mathcal {M}}{\frac{2(\langle u^*, \tilde{\mathtt {p}}_t \rangle -\rho )}{(\rho - \tau ^{(1)}_{t})\Vert u^*-w^t\Vert _2^2}}\) since

Similarly, as for the DC functions \(f_t^{(i)}\) (\(i=2,3\)), Assumptions 1 (i)–(iv) are satisfied if

The proof of Lemma 1 is established. \(\square\)

Appendix 3: Proof of Theorem 1

Proof

First of all, we analyze the regret bound of ODCA-SG.

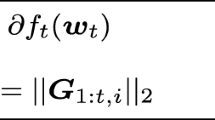

From the definition (17), we have

It follows from Assumption 1 (i) that

where the convex function \(\overline{g}_t := g_t - \langle z^t, \cdot \rangle\) for \(t=1,\ldots ,T\).

From (25), (26) and Assumptions 1 (ii)–(iii) with the choice \(\beta = \alpha\), we obtain

The last inequality holds as \(s^{t,k} \in \partial \overline{g}_t(w^{t,k})\), \(k=0,1,\ldots ,K_t-1\).

Similarly to Theorem 3.1 in [30], we can derive from (13) an upper bound of \(\langle s^{t,K_t-1}, w^{t,K_t-1} - u^* \rangle\) as follows:

Combining (19) and the fact that

we obtain

This implies

Similarly, we get

We deduce from (28), (31), (32) that

where, by convention, \(\frac{1}{\eta _0}:=0\).

Let us define \(\eta _t := \dfrac{1}{\sqrt{t}\sqrt{3K^2-4K+2}}\) for all \(t=1,\ldots ,T\). We have

As for ODCA-SGk, since Assumption 1 (iv) is also satisfied, we can derive from (27) that

Defining \(\eta _t := \frac{1}{\gamma t}\) for all \(t=1,\ldots ,T\), we obtain

The proof of Theorem 1 is established. \(\square\)
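The two step-size schedules used in the proof can be sketched in Python. This is an illustration only: the function names are hypothetical, and K and \(\gamma\) are treated as plain numeric inputs with the meaning they have in the text. The final assertions check the standard estimates \(\sum _{t \le T} 1/\sqrt{t} \le 2\sqrt{T}\) and \(\sum _{t \le T} 1/t \le 1 + \ln T\), which are the usual reason such schedules lead to sublinear and logarithmic growth, respectively.

```python
import math


def eta_sqrt(t, K):
    """eta_t = 1 / (sqrt(t) * sqrt(3K^2 - 4K + 2)), the schedule defined for ODCA-SG."""
    return 1.0 / (math.sqrt(t) * math.sqrt(3 * K * K - 4 * K + 2))


def eta_inverse(t, gamma):
    """eta_t = 1 / (gamma * t), the schedule defined for ODCA-SGk."""
    return 1.0 / (gamma * t)


T = 1000
sum_sqrt = sum(eta_sqrt(t, 1) for t in range(1, T + 1))      # K = 1 gives 1/sqrt(t)
sum_inv = sum(eta_inverse(t, 1.0) for t in range(1, T + 1))  # gamma = 1 gives 1/t
assert sum_sqrt <= 2 * math.sqrt(T)
assert sum_inv <= 1 + math.log(T)
```

Note that \(3K^2 - 4K + 2 > 0\) for every real K, so the first schedule is always well defined.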

Appendix 4: Proof of Proposition 1

Proof

(i) From the condition (7) and Theorem 1, we have, for any \(w \in \mathcal {S}\),

Here, \(|\mathcal {M}|\) is the number of the steps in \(\mathcal {M}\).

This implies that \(|\mathcal {M}| \le \overline{a} + \overline{c}\sqrt{|\mathcal {M}|}\). Thus, the proof of (i) is complete.

(ii) From the definition of \(\gamma _{\mathrm {SG}}\), we derive that for any \(w \in \mathcal {S}\),

This implies

Consider the strictly convex function \(r : (0,+\infty ) \rightarrow \mathbb {R}\),

Since \(\lim \nolimits _{x \rightarrow 0^+}r(x) = \lim \nolimits _{x \rightarrow +\infty }r(x) = +\infty\) and \(r(\overline{b}) \le 0\), the equation \(r(x) = 0\) has two roots \(\overline{x}_1\), \(\overline{x}_2\) such that \(0 < \overline{x}_2 \le \overline{b} \le \overline{x}_1\). The proof of (ii) is established. \(\square\)
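For part (i), the step from the inequality \(|\mathcal {M}| \le \overline{a} + \overline{c}\sqrt{|\mathcal {M}|}\) to an explicit bound can be spelled out as a short worked computation. It assumes \(\overline{a} \ge 0\) and \(\overline{c} \ge 0\); the closed form below is an illustration and may be written differently in the statement of Proposition 1. Setting \(x := \sqrt{|\mathcal {M}|} \ge 0\), the inequality is a quadratic one in x:

\[
x^2 - \overline{c}\,x - \overline{a} \le 0
\;\Longrightarrow\;
x \le \frac{\overline{c} + \sqrt{\overline{c}^{\,2} + 4\overline{a}}}{2}
\;\Longrightarrow\;
|\mathcal {M}| \le \left( \frac{\overline{c} + \sqrt{\overline{c}^{\,2} + 4\overline{a}}}{2} \right)^{2}.
\]

The first implication holds because x cannot exceed the larger root of the quadratic \(x^2 - \overline{c}\,x - \overline{a}\).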

Appendix 5: Euclidean projection onto the probability simplex
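A minimal Python sketch of the sort-based projection described by Wang and Carreira-Perpiñán (2013), which appears in the reference list, is given below. Whether the algorithms in this paper use exactly this variant is an assumption, and the function name is hypothetical. The routine sorts the input in decreasing order, finds the largest index \(\rho\) for which the shifted coordinate stays positive, and shifts and clips every coordinate by the corresponding amount \(\lambda\).

```python
def project_onto_simplex(y):
    """Euclidean projection of y onto {x : x_i >= 0, sum_i x_i = 1}."""
    u = sorted(y, reverse=True)  # coordinates in decreasing order
    cumulative = 0.0
    lam = 0.0
    for j, u_j in enumerate(u, start=1):
        cumulative += u_j
        candidate = (1.0 - cumulative) / j
        # Keep the shift for the largest j with u_j + candidate > 0.
        # The condition always holds for j = 1, so lam is always set.
        if u_j + candidate > 0:
            lam = candidate
    return [max(y_i + lam, 0.0) for y_i in y]


weights = project_onto_simplex([0.9, 0.8, -0.3])
assert abs(sum(weights) - 1.0) < 1e-12
assert all(w_i >= 0.0 for w_i in weights)
```

The cost is dominated by the sort, so the projection takes \(O(n \log n)\) operations for n experts.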

Appendix 6: Description of the experts

We give here a description of the five experts used in the numerical experiments. They are well-known online classification algorithms: the perceptron [50, 57, 62], the relaxed online maximum margin algorithm [47], the approximate maximal margin classification algorithm [23], the passive-aggressive learning algorithm [14], and the classic online gradient descent algorithm [69].

Note that, in this paper, we consider the outcome space \(\mathcal {Y} = \{0,1\}\) and the prediction label \(\tilde{p}_{i,t} = \mathbb {1}_{\langle u_i, x \rangle \ge 0}(x_t) \in \{0,1\}\), where \(u_i\) is the linear classifier of the ith expert. Therefore, in the description below, the labels \(\{0,1\}\) are used instead of \(\{-1,1\}\), which are often used in linear classification algorithms.
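The label convention can be illustrated with a small Python sketch. The function names and the example numbers are hypothetical; the sketch only shows how a linear expert produces a label in \(\{0,1\}\) and how the experts' labels are combined into the weighted average \(\langle w, \tilde{p}_t \rangle\). How the learner turns this average into its final prediction is not shown here.

```python
def expert_label(u, x):
    """Label in {0, 1} of a linear expert u on instance x: 1 if <u, x> >= 0, else 0."""
    score = sum(u_k * x_k for u_k, x_k in zip(u, x))
    return 1 if score >= 0 else 0


def weighted_average(w, labels):
    """<w, p>: weighted average of the experts' labels, with w on the probability simplex."""
    return sum(w_i * p_i for w_i, p_i in zip(w, labels))


# Three hypothetical experts voting on one instance.
experts = [[1.0, -1.0], [0.5, 0.5], [-1.0, 0.0]]
x_t = [2.0, 1.0]
labels = [expert_label(u, x_t) for u in experts]
average = weighted_average([0.5, 0.25, 0.25], labels)
```

With labels in \(\{0,1\}\) and weights on the simplex, the average always lies in \([0,1]\).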

First, the perceptron algorithm is known as the earliest and simplest approach for online binary linear classification [57].
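A minimal sketch of one perceptron round with \(\{0,1\}\) labels is shown below. It uses the textbook mistake-driven update; the function name and the unit step size are assumptions, and the implementation used in the experiments may differ in details.

```python
def perceptron_step(u, x, y):
    """One perceptron round with y in {0, 1}; returns (prediction, updated classifier)."""
    score = sum(u_k * x_k for u_k, x_k in zip(u, x))
    prediction = 1 if score >= 0 else 0
    if prediction != y:
        sign = 2 * y - 1  # map the label from {0, 1} to {-1, +1}
        u = [u_k + sign * x_k for u_k, x_k in zip(u, x)]
    return prediction, u


# The zero classifier predicts 1 (score 0 >= 0), errs on a 0-labelled
# instance, and is moved away from it.
prediction, u = perceptron_step([0.0, 0.0], [1.0, 2.0], 0)
```

The classifier changes only on rounds where the predicted label is wrong.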

Second, the relaxed online maximum margin algorithm [47] is an incremental algorithm for classification using a linear threshold function. It can be seen as a relaxed version of the algorithm that searches for the separating hyperplane which maximizes the minimum distance from previous instances classified correctly.

Third, the approximate maximal margin classification algorithm [23] approximates the maximal margin hyperplane with respect to the \(\ell _p\)-norm (\(p \ge 2\)) for a set of linearly separable data. The algorithm proposed in [23] is called the Approximate Large Margin Algorithm.

Fourth, the passive-aggressive learning algorithm [14] computes the classifier from the analytical solution of a simple constrained optimization problem, which minimizes the distance from the current classifier \(u^t\) to the half-space of vectors that attain zero hinge loss on the current sample.
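The closed-form update can be sketched as follows. The sketch assumes the basic passive-aggressive variant without slack variables and a hinge loss with unit margin; the function name is hypothetical, and the variant used in the experiments is not specified here.

```python
def passive_aggressive_step(u, x, y):
    """One basic passive-aggressive update with y in {0, 1}; returns the new classifier."""
    sign = 2 * y - 1  # map the label from {0, 1} to {-1, +1}
    margin = sign * sum(u_k * x_k for u_k, x_k in zip(u, x))
    loss = max(0.0, 1.0 - margin)  # hinge loss on the current sample
    sq_norm = sum(x_k * x_k for x_k in x)
    if loss > 0.0 and sq_norm > 0.0:
        tau = loss / sq_norm  # step length of the projection onto the half-space
        u = [u_k + tau * sign * x_k for u_k, x_k in zip(u, x)]
    return u


u_new = passive_aggressive_step([0.0, 0.0], [3.0, 4.0], 1)
new_margin = sum(u_k * x_k for u_k, x_k in zip(u_new, [3.0, 4.0]))
assert abs(new_margin - 1.0) < 1e-12  # zero hinge loss after the update
```

The update is passive when the hinge loss is already zero and otherwise moves the classifier just far enough to reach margin 1 on the current sample.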

Finally, the classic online gradient descent algorithm [69] uses the gradient descent method for minimizing the hinge-loss function.
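One round of online gradient descent on the hinge loss might look like the sketch below. The step size \(\eta _t = c/\sqrt{t}\), the parameter c and the function name are assumptions made for illustration; the source does not state the schedule used in the experiments.

```python
import math


def ogd_hinge_step(u, x, y, t, c=1.0):
    """One online (sub)gradient step on the hinge loss, with y in {0, 1} and round t >= 1."""
    sign = 2 * y - 1  # map the label from {0, 1} to {-1, +1}
    margin = sign * sum(u_k * x_k for u_k, x_k in zip(u, x))
    if margin < 1.0:
        eta = c / math.sqrt(t)  # assumed step-size schedule
        # A subgradient of max{0, 1 - sign * <u, x>} is -sign * x when margin < 1.
        u = [u_k + eta * sign * x_k for u_k, x_k in zip(u, x)]
    return u


u_next = ogd_hinge_step([0.0, 0.0], [1.0, -1.0], 0, t=4)
```

Unlike the passive-aggressive update, the step length here does not depend on the size of the loss, only on the round index.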

Cite this article

Le Thi, H.A., Ho, V.T. DCA for online prediction with expert advice. Neural Comput & Applic 33, 9521–9544 (2021). https://doi.org/10.1007/s00521-021-05709-0

DOI: https://doi.org/10.1007/s00521-021-05709-0