Abstract

The change in the mean temperature in Finland is investigated with a dynamic linear model in order to define the sign and the magnitude of the trend in the temperature time series within the last 166 years. The data consists of gridded monthly mean temperatures. The grid has a 10 km spatial resolution, and it was created by interpolating a homogenized temperature series measured at Finnish weather stations. Seasonal variation in the temperature and the autocorrelation structure of the time series were taken account in the model. Finnish temperature time series exhibits a statistically significant trend, which is consistent with human-induced global warming. The mean temperature has risen very likely over 2 °C in the years 1847–2013, which amounts to 0.14 °C/decade. The warming after the late 1960s has been more rapid than ever before. The increase in the temperature has been highest in November, December and January. Also spring months (March, April, May) have warmed more than the annual average, but the change in summer months has been less evident. The detected warming exceeds the global trend clearly, which matches the postulation that the warming is stronger at higher latitudes.

Similar content being viewed by others

1 Introduction

The global average temperature has increased by about 0.8 °C since the mid-19th century. It has been shown (e.g., Bloomfield 1992; Gao and Hawthorne 2006; Wu and Zhao 2007; Keller 2009) that this increase is statistically significant and that it can, for the most part, be attributed to human-induced climate change (IPCC 2013; Foster and Rahmstorf 2011). A temperature increase is obvious also in regional and local temperatures in many parts of the world. However, compared with the global average temperature, the regional and local temperatures exhibit higher levels of noise, which has largely been removed from the global temperature due to the higher level of averaging. It is therefore not always clear that a regional or local warming signal, although apparent “to the naked eye” in the temperature data, can, under strict assumptions, be considered statistically significant. Because climate change is one of the most serious environmental issues today, the question of statistical significance in local and regional temperature trends is not only of scientific but also of public interest.

In this article, we consider the time series of Finnish average temperatures in 1847–2013. Because Finland is located in northern latitudes, it is subject to the polar amplification of climate change-induced warming, which is due to the enhanced melting of snow and ice and other feedback mechanisms (see, e.g., Screen and Simmonds 2010; Serreze and Barry 2011). Therefore, warming in Finland is expected to be approximately 50 % higher than the global average. Conversely, the location of Finland between the Atlantic Ocean and continental Eurasia causes the weather to be very variable, and thus the temperature signal is rather noisy.

The concept of trend in itself is not completely free of ambiguity (e.g., Wu et al. 2007). Ambient temperature time series, for example, exhibit autocorrelation created by processes that are not completely understood. Therefore, the choice of the autocorrelation model is somewhat arbitrary, which is reflected in the obtained trend and its significance level. It is relatively straightforward to calculate different averages and linear trends from the observed temperatures. However, to evaluate the significance of the observed changes relative to the natural year-to-year variability and to give realistic uncertainty estimates of the trends we need statistical modeling. In this paper we have used dynamic regression to model the seasonality and the background level of the average temperature in Finland for the years 1847–2013. As our model fits the observed data well and the non-modeled part of the variability, the model residuals, can be seen to consisting of independent Gaussian noise, we can safely say that the uncertainty attributed to the trend values given here is well justified.

2 Data

Tietäväinen et al. (2010) created an over 160-year-long time series of monthly mean temperature grids with 10 km resolution for Finland. Homogenized station values of monthly mean temperature (Tuomenvirta 2001) from Finnish weather stations as well as monthly mean temperatures from selected weather stations in Sweden, Norway, and Russia near the Finnish border were used for the spatial interpolation. A kriging interpolation method (Matheron 1963; Ripley 1981), especially developed for climatological applications in Finland (Henttonen 1991), was used for creating the monthly mean temperature grids. As external forcing parameters, the kriging method took into account the geographical coordinates, elevation of the terrain, and the percentage of lakes and sea in each grid box. At the 10 km resolution, a total of 3,829 grid boxes were needed to cover the whole of Finland. Besides, according to Tietäväinen et al. (2010), this spatial model has previously been applied in climatological research projects conducted by Venäläinen and Heikinheimo (1997), Vajda and Venäläinen (2003), Venäläinen et al. (2005), Vajda (2007), and Ylhäisi et al. (2010).

The spatial representativeness of the observation station network is highly dependent on time. A meteorological observation network was initiated in 1846 by the Societas Scientiarum Fennica—The Finnish Society of Sciences and Letters (Finska Vetenskaps-Societeten). The extent of data is limited to temperature measurements from six stations in the first year of the time series in 1847. After decades of slow growth in the number of observation stations, in the 1880s, many new observation stations were established in different parts of southern and central Finland; however, in northern Finland, the first weather stations were not set up until the early 20th century. Therefore, data from Sweden and Norway is crucial. The number of stations used for the interpolation process increased continuously until the 1970s, when there were 179 stations in the network, after which it has slowly decreased. The density of the station network is still higher in southern and central Finland than in the northern part of the country. Stations outside of Finnish borders were removed from the kriging interpolation after 2002 and currently there are more than 120 stations in the network. More details on the station network can be found in Tietäväinen et al. (2010).

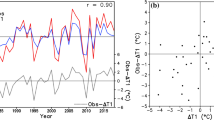

The limited amount and uneven distribution of the observation stations is the main source of uncertainty in the interpolated temperature fields. Tietäväinen et al. (2010) determined the errors and uncertainties in the annual and seasonal mean temperatures calculated from the monthly grids for the whole of Finland. According to their study, the uncertainty in annual and seasonal mean temperatures of Finland during the 19th century was large, with a maximum of more than ±2.0 °C in wintertime in the mid-1800s. At the beginning of the 20th century, the uncertainty related to the limited station network was in wintertime less than ±0.4 °C and during other seasons less than ±0.2 °C. For the monthly mean temperature grids, corresponding uncertainty calculations have not been made. Even though the Finnish station values of monthly mean temperatures were homogenized, minor uncertainties may have been introduced into the temperature grids both by inaccuracies in the homogenization process and possible remaining heterogeneities in the station time series (Tietäväinen et al. 2010). Figure 1a shows the annual mean levels of the temperature in Finland and 1b shows the monthly values from the last decade in order to demonstrate the yearly variation in the time series. In this paper, we use the data set of Tietäväinen et al. (2010) that has been extended to the end of year 2013.

3 Statistical methods

A trend is a change in the statistical properties of the background state of a system (Chandler and Scott 2011). The simplest case is a linear trend, in which, when applicable, we need to specify only the trend coefficient and its uncertainty. Natural systems evolve continuously over time, and it is not always appropriate to approximate the background evolution with a constant trend. Furthermore, the time series can include multiple time dependent cycles, and they are typically non-stationary, i.e., their distributional properties change over time.

In this work, we apply dynamic regression analysis by using dynamic linear model (DLM) approach to time series analysis of Finnish temperatures. DLM is used to statistically describe the underlying processes that generate variability in the observations. The method will effectively decompose the series into basic components, such as level, trend, seasonality, and noise. The components can be allowed to change over time, and the magnitude of this change can be modeled and estimated. The part of the variability that is not explained by the chosen model is assumed to be uncorrelated noise and we can evaluate the validity of this assumption by statistical model residual diagnostics.

Our model is, of course, just one possibility to describe the evolution of the observed temperatures. We see it as a very natural extension to non-dynamic multiple linear regression model. The method allows us to estimate both the model states (e.g. time-varying trends) and the model parameters (e.g. variances related to temporal variability), and we can assess the uncertainties and statistical significance of the underlying features. In this study, we are not trying to use the model to predict future temperatures, but to detect trends by finding a description that is consistent with the observed temperature variability. To study the adequacy of our chosen model, we examine the model residuals to see if the modeling assumptions are fulfilled.

With a properly set-up and estimated DLM model, we can detect significant changes in the background state and estimate the trends. The magnitude of the trend is not prescribed by the modeling formulation, and the method does not favor finding a “statistically significant” trend. The statistical model provides a method to detect and quantify trends, but it does not directly provide explanations for the observed changes, i.e., whether for example natural variability or solar effects could explain the changes in the background level. Model diagnostics and the increase in the observational data will eventually falsify incorrect models and other poorly selected prior specifications (see e.g. Tarantola 2006).

Dynamic linear models are linear regression models whose regression coefficients can depend on time. This dynamic approach is well known and documented in time series literature (Chatfield 1989; Harvey 1991; Hamilton 1994; Migon et al. 2005). These models are sometimes called structural time series models or hidden Markov models. The latter comes from the fact that dynamic regression is best described by the state space approach where the hidden state variables describe the time evolution of the components of the system. Modern computationally oriented references of the state space approach include Petris et al. (2009) and Durbin and Koopman (2012). The first describes a software package dlm for R statistical language that can be used to do the calculations described in this paper. We have used the Matlab software and computer code described in Laine et al. (2014). In this work, we use a DLM to explain variability in the temperature time series using components for a smooth varying locally linear mean level, for a seasonal effect, and for noise that is allowed to have autoregressive correlation. The autoregressive stochastic error term is used to account for long-range dependencies, irregular cycles, and the effects of different forcing mechanisms that a model with only second order random walk for mean and stochastic seasonality does not suffice to explain.

A DLM can be formulated as a general linear state space model with Gaussian errors and written with an observation equation and a state evolution equation as

where \( y_{t} \) are the observations and \( x_{t} \) is a vector of unobserved states of the system at time t. Matrix \( F_{t} \) is the observation operator that maps the hidden states to the observations and matrix \( G_{t} \) is the model evolution operator that provides the dynamics of the hidden states. We assume that the uncertainties, represented by observation uncertainty \( v_{t} \) and model error \( w_{t} \) are Gaussian, with observation uncertainty covariance \( V_{t} \) and model error covariance \( W_{t} \). The time index \( t \) will go from 1 to n, the length of the time series to be analyzed. In this work, we analyze univariate temperature time series, but the framework would also allow the modeling of multivariate series. We use notation common to many time series textbooks, e.g., Petris et al. (2009).

Trend will be defined as a change in the mean state of the system after all known systematic effects, such as seasonality, have been accounted for. To build a DLM for the trend we start with a simple local level and trend model that has two hidden states \( x_{t} = \left[ {\begin{array}{*{20}c} {\mu_{t} } & {\alpha_{t} } \\ \end{array} } \right]^{T} \), where \( \mu_{t} \) is the mean level and \( \alpha_{t} \) is the change in the level from time t-1 to time t. This system can be written by the equations

The Gaussian stochastic “ε” terms are used for the observation uncertainty and for random dynamics of the level and the trend. In terms of the state space Eqs. (1) and (2) this model is written as

Note that only the state vector \( x_{t} \) and the observation uncertainty covariance (a \( 1 \times 1 \) matrix) depend on time t. Depending on the choice of the variances \( \sigma_{\text{level}}^{2} \) and \( \sigma_{\text{trend}}^{2} \), the mean state \( \mu_{t} \) will define a smoothly varying background level of the time series. In our analyses, we will set \( \sigma_{\text{level}}^{2} = 0 \) and estimate \( \sigma_{\text{trend}}^{2} \) from the observations. As noted by Durbin and Koopman (2012), this will result in an integrated random walk model for the mean level \( \mu_{t} \), which can be interpreted as a cubic spline smoother, with well-based statistical descriptions of the stochastic components.

Temperature time series exhibit strong seasonal variability. In our DLM, the monthly seasonality is modeled with 11 state variables, which carry information of the seasonal effects of individual months. In general, the number of states is one less than the number of observations for each seasonal cycle when the model already has the mean level term. The corresponding matrices \( G_{\text{seas}} \), \( F_{\text{seas}} \) (\( 11 \times 11 \) and \( 1 \times 11 \) matrices) and the error covariance matrix \( W_{\text{seas}} \) (\( 11 \times 11 \)) for the time-wise variability in the seasonal components are modeled as (Durbin & Koopman, 2012):

We allow autocorrelation in the residuals using a first order autoregressive model (AR(1)). In DLM settings, we can estimate the autocorrelation coefficient and the extra variance term \( \sigma_{\text{seas}}^{2} \) together with the other model parameters. For a first order autoregressive component with a coefficient ρ and an innovation variance, \( \sigma_{AR}^{2} \), we simply define

and both ρ and \( \sigma_{AR}^{2} \) can be estimated from the observations.

The next step in the DLM model construction is the combination of the selected individual model components into larger model evolution and observation equations by

and the analysis then proceeds to the estimation of the variance parameters and other parameters in model formulation (e.g. the AR coefficient ρ in the matrix \( G_{\text{AR}} \)), and to the estimation of the model states by state space Kalman filter methods.

To get more intuitive meaning of the model and the stochastic error terms involved, we write the observation equation for our model as

where \( y_{t} \) is the monthly temperature at time t, \( \mu_{t} \) is the mean temperature level, \( \gamma_{t} \) is the seasonal component for monthly data, \( \eta_{t} \) is an autoregressive error component, and \( \varepsilon_{t} \) is the error term for the uncertainty in the observed temperature values. The simplification \( \sigma_{\text{level}}^{2} = 0 \) in Eq. (4) allows us to write a second difference process for the mean level \( \mu_{t} \) as

see e.g. Durbin and Koopman (2012) Sect. 2.3.1. For the seasonal component \( \gamma_{t} \), we have a condition that the 12 consecutive monthly effects sum to zero on the average, so for each t:

The term \( \eta_{t} \) follows a first order autoregressive process, AR(1), with coefficient ρ:

Finally, the observation uncertainty term \( \varepsilon_{t} \) is assumed to be zero mean Gaussian as

where the observation standard deviations \( \sigma_{t} \) are assumed to be known and correspond to the uncertainties from the spatial representativeness of the observations and from the averaging and the homogenization processes (Tietäväinen et al. 2010). The additional error terms \( \sigma_{\text{trend}}^{2} \), \( \sigma_{\text{seas}}^{2} \), and \( \sigma_{AR}^{2} \) account for the modeling error in the components of the model and are estimated from the data.

In the model construction above, we have four unknown model parameters: the three variances for stochastic model evolution, \( \sigma_{\text{trend}}^{2} \), \( \sigma_{\text{seas}}^{2} \), \( \sigma_{AR}^{2} \) and the autoregressive coefficient ρ. If the values of these parameters are known, the state space representation and the implied Markov properties of the processes allow estimation of the marginal distributions of the states given the observations and parameter by the Kalman filter and Kalman smoother formulas (Durbin & Koopman, 2012). The Kalman smoother gives efficient recursive formulas to calculate the marginal distribution of model states at each time t given the whole set of observations \( y_{t} , t = 1, \ldots ,n \). In a DLM these distributions are Gaussian, so defined by a mean vector and a covariance matrix. In addition, the auxiliary parameter vector θ = [\( \sigma_{\text{trend}}^{2} \), \( \sigma_{\text{seas}}^{2} \), \( \sigma_{AR}^{2} \), ρ] can be estimated using a marginal likelihood function that is provided as a side product of the Kalman filter recursion. This likelihood can be used to estimate the parameter θ using maximum likelihood method and the obtained estimates can be plugged back to the equations. We use Bayesian approach and Markov chain Monte Carlo (MCMC) simulation to estimate the posterior distribution of θ and to account for its uncertainty in the trend analysis.

The level component \( \mu_{t} \) models the evolution of the mean temperature after the seasonal and irregular noise components have been filtered out. It allows us to study the temporal changes in the temperature. The trends can be studied visually, or by calculating trend related statistics from the estimated mean level component \( \mu_{t} \). Statistical uncertainty statements can be given by simulating realizations of the level component using MCMC and the Kalman simulation smoother (Durbin and Koopman 2012, Laine et al., 2014).

The strength of the DLM method is its ability to estimate all model components, such as trends and seasonality, in one estimation step and to provide a conceptually simple decomposition of the observed variability. Furthermore, the analysis does not require assumptions about the stationarity of the series in the sense required, e.g., in classical ARIMA time series analyses and ARIMA analyses can be seen as special cases of the DLM analyses. For example, the simple local level and trend DLM of Eqs. (3–5) is equivalent to the ARIMA (0,2,2) model. In addition, the state space methods can easily handle missing observations; they are extendible to non-linear state space models, to hierarchical parameterizations, and to non-Gaussian errors (e.g. Durbin and Koopman 2012 and Gonçalves and Costa 2013). Details of the construction procedure of a DLM model and estimations of model states and parameters can be found in Gamerman (2006) and in Petris et al. (2009). We use an efficient adaptive MCMC algorithm by Haario et al. (2006) and the Kalman filter likelihood to estimate the four parameters in θ. The details of the estimation procedure can be found in Laine et al. (2014) who use similar DLM model to study trends in stratospheric ozone concentrations. We also conducted our analyses with dlm-package in R-software (Petris 2010) to verify the computations.

4 Results and discussion

We used a dynamic linear model with a local linear trend, a 12-month dummy type seasonal component, and an AR(1) autocorrelated error term to decompose the temperature time series. The time series consisted of 2004 monthly observations from years 1847–2013. Figure 2 shows the measurement series and the modeled mean background temperature \( \mu_{t} \). For clarity, the observations in the figure are annual averages, but in all of the statistical analyses monthly data are used. The mean temperature has risen in two periods, from the 1850s to the late 1930s and from the end of the 1960s to the present day, and was close to a constant between 1940 and 1970. It has been suggested that the global mean temperature oscillates quasiperiodically on a multidecadal time scale either globally (e.g., Henriksson et al. 2012, and references therein) or regionally (e.g., Sleschinger and Ramankutty 1994). The multidecadal oscillation is suggested to provide part of the explanation both for the near-constant global mean temperatures in recent years, despite the warming effect of increasing greenhouse gas concentrations, and for the declining global mean temperature in the 1950s and 1960s, along with the cooling caused by postwar anthropogenic aerosol emissions. Therefore, we tested the data for 60–80-year oscillations in order to see whether a multidecadal oscillation is present also in our data and whether the observed changes in the trend of the time series are due to this phenomenon. The results (not shown) indicated that taking account the multidecadal oscillations did not improve the model, as the change in BIC-value used in model comparison was almost negligible, thus we decided not to include them in the final model.

The variance parameters in matrices \( V_{t} \) and \( W_{t} \) and the autocorrelation coefficient ρ used in the DLM were estimated using the MCMC simulation algorithm. The length of the MCMC chain was 10,000, the last half of the chain was used for calculating the posterior values, and the convergence of the MCMC algorithm was assessed using plots of the MCMC chain, by calculating convergence diagnostics statistics, and by estimating the Monte Carlo error of the posterior estimates.

From the first to the last 10-year period of the data (from 1847–1856 to 2004–2013), the average temperature in Finland has risen by a total of 2.3 ± 0.4 °C (95 % probability limits). This equals to an average change of 0.14 °C/decade. The number of measurement stations in the first years of the measurement period was rather low, which is accounted for in the observational error \( \sigma_{t}^{2} \), but this causes only a small increase in the uncertainty estimates at the beginning of the series. Figure 2 also shows the Finnish decadal average temperatures estimated from the model as grey bars for 50 and 95 % probability limits, and the actual numbers are presented in Table 1. The temperature change was negligible in the middle of the 20th century, but the current temperatures show an indisputably rising trend. The mean temperature within 2000–2010 was almost one degree higher than in the 1960s and more than two degrees higher than in the 1850s.

Figure 3 shows the prior and posterior probability distributions for the unknown parameters and the numeric values for prior and posterior means are shown in Table 2 with corresponding relative standard errors.

The residual diagnostics for the DLM model are shown in Fig. 4. The distribution of residuals agrees well with the normality assumption and there is no significant autocorrelation.

The same model, but without the seasonal component, was fitted for observations of each month separately. Figure 5 shows that the change in the temperature has not been even between the months. The increase in temperature has been highest in late autumn and in spring but the change in summer months, especially in July and August, has been smaller. The temperature changes from 1847–1856 to 2004–2013 for each month have been collected in Table 3.

5 Conclusions

By using advanced statistical time series approach, a dynamic linear model (DLM), we were able to model the uncertainty caused by year-to-year natural variability and the uncertainty caused by the incomplete data and non-uniform sampling in the early observational years, and to estimate the uncertainty limits for the increase of the mean temperature in Finland. The Finnish temperature time series exhibits a statistically significant trend, which is consistent with the human-induced global warming. Our analysis shows that the mean temperature has risen by a total of 2.3 ± 0.4 °C (95 % probability limits) during the years 1847–2013, which amounts to 0.14 °C/decade. The warming trend before the 1940s was close to linear for the whole period, whereas the temperature change in the mid-20th century was negligible. However, the warming after the late 1960 s has been more rapid than ever before. Within the last 40 years the rate of change has varied between 0.2 and 0.4 °C/decade. The highest increases were seen in November, December and January. Also spring months (March, April, May) have warmed more than the annual average. Impacts of long-term cold season and spring warming have been documented e.g. in later freeze-up and earlier ice break-up in Finnish lakes (Korhonen 2006) and advancement in the timing of leaf bud burst and flowering of native deciduous trees growing in Finland (Linkosalo et al. 2009). Although warming during the growing season months has been small in centigrade it has resulted in attributable growth in growth of boreal forests in Finland in addition to other drivers (forest management, nitrogen deposition, CO2 concentration) since the 1960s (Kauppi et al. 2014). The analysis of a 166-year-long time series shows that the temperature change in Finland follows the global warming trend, which can be attributed to anthropogenic activities (IPCC: Climate Change 2013). The observed warming in Finland is almost twice as high as the global temperature increase (0.74 °C/100 years), which is in line with the notion that warming is stronger in higher latitudes.

References

Kauppi PE et al (2014) Large impacts of climatic warming on growth of Boreal Forests since 1960. PloS One (in press)

Bloomfield P (1992) Trends in global temperature. Clim Change 21:1–16. doi:10.1007/BF00143250

Chandler RE, Scott EM (2011) Statistical methods for trend detection and analysis in the environmental sciences. John Wiley & Sons, New York

Chatfield C (1989) The analysis of time series, an introduction, 4th edn. Chapman & Hall, London

Durbin TJ, Koopman SJ (2012) Time series analysis by state space methods. Oxford statistical science series, 2nd edn. Oxford University Press, Oxford

Foster G, Rahmstorf S (2011) Global temperature evolution 1979–2010. Environ Res Lett 6:044022. doi:10.1088/1748-9326/6/4/044022

Gamerman D (2006) Markov chain Monte Carlo—stochastic simulation for Bayesian inference, 2nd edn. Chapman & Hall, London

Gao J, Hawthorne K (2006) Semiparametric estimation and testing of the trend of temperature series. Econometr J 9:332–355. doi:10.1111/j.1368-423X.2006.00188.x

Gonçalves AM, Costa M (2013) Predicting seasonal and hydro-meteorological impact in environmental variables modelling via Kalman filtering. Stoch Environ Res Risk Assess 27:1021–1038. doi:10.1007/s00477-012-0640-7

Hamilton JD (1994) Time series analysis. Princeton University Press, Princeton

Haario H, Laine M, Mira A, Saksman E (2006) DRAM: efficient adaptive MCMC. Stat Comp 16:339–354. doi:10.1007/s11222-006-9438-0

Harvey AC (1991) Forecasting, structural time series models and the Kalman filter. Cambridge University Press, Cambridge

Henriksson SV, Räisänen P, Silén J, Laaksonen A (2012) Quasiperiodic climate variability with a period of 50–80 years: fourier analysis of measurements and earth system model simulations. Clim Dynam 39:1999–2011. doi:10.1007/s00382-012-1341-0

Henttonen H (1991) Kriging in interpolating July mean temperatures and precipitation sums. Reports from the Department of Statistics. University of Jyväskylä, 12

IPCC: Climate Change 2013 (2013) The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change [Stocker TF, Qin D, Plattner G-K, Tignor M, Allen SK, Boschung J, Nauels A, Xia Y, Bex V, and Midgley PM (eds.)], Cambridge University Press, Cambridge and New York

Keller CF (2009) Global warming: a review of this mostly settled issue. Stoch Environ Res Risk Assess 23:643–676

Korhonen J (2006) Long-term changes in lake ice cover in Finland. Nord Hydrol 37:347–363

Laine M, Latva-Pukkila N, Kyrölä E (2014) Analysing time-varying trends in stratospheric ozone time series using the state space approach. Atmos Chem Phys 14:9725–9797. doi:10.5194/acp-14-9707-2014

Linkosalo T, Häkkinen R, Terhivuo J, Tuomenvirta H, Hari P (2009) The time series of flowering and leaf bud burst of boreal trees (1846–2005) support the direct temperature observations of climatic warming. Agric For Meteorol 149:453–461

Matheron G (1963) Principles of geostatistics. Econ Geol 58:1246–1266. doi:10.2113/gsecongeo.58.8.1246

Migon HS, Gamerman D, Lopes HF, and Ferreira MAR (2005) Handbook of statistics, Vol. 25, Bayesian thinking: modeling and computation, chapter Dynamic models. Elsevier. doi: 10.1016/S0169-7161(05)25019-8

Petris G (2010). An R package for dynamic linear models. J Stat Softw, 36(12):1–16. URL http://www.jstatsoft.org/v36/i12/

Petris G, Petrone S, Campagnoli P (2009) Dynamic linear models with R. Springer, New York

Ripley BD (1981) Spatial statistics. John Wiley & Sons, New York

Screen JA, Simmonds I (2010) The central role of diminishing sea ice in recent Arctic temperature amplification. Nature 464:1334–1337. doi:10.1038/nature09051

Serreze MC, Barry RG (2011) Processes and impacts of Arctic amplification: a research synthesis. Glob Planet Change 77:85–96

Sleschinger ME, Ramankutty N (1994) An oscillation in the global climate system of period 65–70 years. Nature 367:723–726. doi:10.1038/367723a0

Tarantola Albert (2006) Popper, Bayes and the inverse problem. Nat Phys 2:492–494

Tietäväinen H, Tuomenvirta H, Venäläinen A (2010) Annual and seasonal mean temperatures in Finland during the last 160 years based on gridded temperature data. Int J Climatol 30(15):2247–2256. doi:10.1002/joc.2046

Tuomenvirta H (2001) Homogeneity adjustments of temperature and precipitation series—Finnish and Nordic data. Int J Climatol 21:495–506. doi:10.1002/joc.616

Vajda A (2007) Spatial variation of climate and the impact of disturbances on local climate and forest recovery in northern Finland, vol 64. Finnish Meteorological Institute Contributions, Helsinki

Vajda A, Venäläinen A (2003) The influence of natural conditions on the spatial variation of climate in Lapland, northern Finland. Int J Climatol 23:1011–1022. doi:10.1002/joc.928

Venäläinen A, Heikinheimo M (1997) The spatial variation of long-term mean global radiation in Finland. Int J Climatol 17:415–426. doi:10.1002/(SICI)1097-0088(19970330)17:4<415:AID-JOC138>3.0.CO;2-#

Venäläinen A, Tuomenvirta H, Pirinen P, Drebs A (2005) A basic Finnish climate data set 1961–2000—Description and illustrations. Finnish Meteorological Institute Reports 5

Wu WB, Zhao Z (2007) Inference of trends in time series. J R Statist Soc B 69:391–410. doi:10.1111/j.1467-9868.2007.00594.x

Wu Z, Huang NE, Long SE, Peng C-K (2007) On the trend, detrending, and variability of nonlinear and nonstationary time series. Proc Nat Acad Sci 104:14889–14894. doi:10.1073/pnas.0701020104

Ylhäisi J, Tietäväinen H, Peltonen-Sainio P, Venäläinen A, Eklund J, Räisänen J, Jylhä K (2010) Growing season precipitation in Finland under recent and projected climate. Nat Hazards Earth Sys 10:1563–1574. doi:10.5194/nhess-10-1563-2010

Acknowledgments

This work was funded by University of Eastern Finland strategic funding (Project ACRONYM), the Academy of Finland Centre of Excellence program (Project No. 1118615) and the Academy of Finland INQUIRE Project.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Mikkonen, S., Laine, M., Mäkelä, H.M. et al. Trends in the average temperature in Finland, 1847–2013. Stoch Environ Res Risk Assess 29, 1521–1529 (2015). https://doi.org/10.1007/s00477-014-0992-2

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00477-014-0992-2