Abstract

Key message

UAV-based photogrammetry can provide detailed, accurate, and consistent structural models of open-grown trees across different species and growth forms. Environmental and ambient light conditions impact the results.

Abstract

Our empirical knowledge of the complicated and highly dynamic three-dimensional structure of trees is limited. Drone-based photogrammetry offers a powerful and effective tool for capturing, analyzing, and monitoring adult trees, particularly individuals growing outside of the closed canopy forest (subsequently termed “open-grown”) in both natural and built environments. Here, we test the accuracy and consistency of high-resolution models of individual open-grown trees of three structurally distinct species. To validate model accuracy, we compared model estimates with direct measurements of branch diameter and internode length. We also examined the consistency of models of the same individual given different ambient light conditions by comparing corresponding measurements from two models of each tree. We use readily available equipment and software, so our protocol can be rapidly adopted by professional and citizen scientists alike. We found that the models captured diameter and interior internode length well (r² = 0.87 and 0.98, respectively). The difference between measurements from different models of the same tree was less than 3 cm for diameters and interior internode lengths of most size classes. Thin distal branches were not captured well: measurements of terminal internodes in most size classes were underestimated by 50–60 cm. Models from overcast days were more accurate than models from sunny days (p = 0.0056), and a high-contrast background helps capture thin branches. Our approach constructs accurate and consistent three-dimensional models of individual open-grown trees that can provide the foundation for a wide range of physiological, behavioral, and growth studies.

Introduction

The three-dimensional structure of a tree’s aboveground biomass is a complex, dynamic, and adaptive response of the inherent growth patterns of the species to the environment in which it grows. Early descriptions of characteristic growth patterns of different tree groups (Halle et al. 1978) illustrated the great diversity of emergent structures possible from a relatively simple and modular process (Prusinkiewicz and Lindenmayer 1990). Algorithmic modeling of trees can generate realistic functional–structural models of specific types of tree, for example to examine source–sink interactions of a carbon allocation model through computer simulation (Allen et al. 2005), but detailed empirical knowledge of tree structure is lacking for most trees in many environmental settings. Better empirical information about tree structure could significantly contribute to a wide range of scientific research, including our understanding of tree physiology, resource allocation, short- and long-term behavior, and biomechanics (Malhi et al. 2018).

The structure of trees grown in closed forests differs dramatically from that of trees grown in more open environments (Hirons and Thomas 2018), like cities and parks (Sterck et al. 2005). Trees in closed forests directly compete with their immediate neighbors for light and underground resources. The mature shape that emerges over decades of growth is a response to these interactions. Open environments potentially allow trees to express their inherent, genetically determined growth patterns more clearly, without the influence of competition, which can provide insight into the intrinsic whole-tree biology of the species. With over 5 billion trees planted in US cities alone and projections that this number will continue to grow rapidly (Nowak and Greenfield 2018), single individuals growing outside of closed canopy forest (“open-grown” trees) in both natural and built environments will become critical to the sustainability and health of both human and tree populations. These isolated trees can play an important role in supporting biodiversity in a variety of environments (Sebek et al. 2016; Parmain and Bouget 2018). Although solitary trees in the built environment do not experience direct competition for resources, their growth is influenced by harsh urban environments (Quigley 2004), where they are often heavily managed and which can be considerably hotter and have poorer soils than the surrounding countryside (Arnfield 2003; Lehmann and Stahr 2007). Understanding these responses could reveal a great deal about their phenotypic plasticity and ability to adapt to climate and land use change. Here, we assess a protocol to produce accurate and consistent high-resolution models with which individual open-grown trees can be studied.

Several approaches are used to produce point clouds and remotely obtain structural metrics from trees, primarily based on LiDAR and photogrammetry techniques. LiDAR technology builds point clouds by calculating the distance from a sensor to a target object based on the elapsed time between an emitted laser pulse and its return to the sensor, and has been used to capture 3D structures in many ecological contexts (Lefsky et al. 2002; Disney et al. 2018). Digital photographs can also be used to build point clouds through photogrammetry, which finds the 3D coordinates of points by comparing multiple images of the points from different angles (Colomina and Molina 2014; Pádua et al. 2017). Photogrammetry can only build point clouds of objects captured clearly in the input photographs, meaning handheld cameras can be used to produce models of subjects within reach, like excavated root systems (Koeser et al. 2016), small trees (Miller et al. 2015), and the base of adult trees (Morgenroth and Gomez 2014). UAVs have been used to capture photographs of forests from above to build point clouds through photogrammetry (Lisein et al. 2013), and Gatziolis et al. (2015) demonstrated the feasibility of constructing models of individual adult trees using UAV-based photogrammetry.

Point clouds generated by both LiDAR and photogrammetry can capture tree structure and obtain basic measurements, such as tree height, diameter at breast height, and canopy volume (Henning and Radtke 2006; Baltsavias et al. 2008; Côté et al. 2009; Rosell et al. 2009; Fritz et al. 2013; Lisein et al. 2013; Morgenroth and Gomez 2014; Zarco-Tejada et al. 2014; Miller et al. 2015; Torres-Sánchez et al. 2018). Comparisons of forest and individual tree metrics captured by terrestrial LiDAR, airborne LiDAR, and photogrammetry show that LiDAR captures forest structure below the canopy more accurately than photogrammetry (Wallace et al. 2016; Brede et al. 2017; Roşca et al. 2018). Methods to reconstruct fine branches that were not captured in point clouds have been developed, but the reconstructions are based on assumptions about growth patterns and do not necessarily reflect the actual branch structure (Tan et al. 2007; Côté et al. 2009; Wu et al. 2013). Few studies have validated detailed measurements of branches throughout the crown captured by LiDAR (Dassot et al. 2010; Lau et al. 2018), and no previous validations of the accuracy and consistency of measurements from models captured by photogrammetry exist. We propose using photogrammetry as a less expensive and more widely available alternative to LiDAR to capture detailed records of tree structure that can be monitored over time, which requires a thorough evaluation of the accuracy of fine measurements in adult trees with complex structures. Here we use Agisoft Photoscan to produce models from photogrammetrically generated point clouds to validate our measurements, but the point clouds built in the first part of our protocol can be processed with other tree-model building software developed for LiDAR point clouds (Raumonen et al. 2013; Hackenberg et al. 2015).

In this paper, we describe a workflow for using aerial photos to build point clouds and produce models of individual adult trees, and test the accuracy and consistency of these models by comparing them to the actual tree. The workflow covers the entire process, from designing flight paths and capturing photos to processing the photos and measuring the models. This analysis does not include thorough optimization of processing parameters, but rather tests the accuracy of models produced using parameters that we determined to be reliable through extensive trial and error of methods described in peer-reviewed articles, in online forums and tutorials, and through personal communications. We tested this protocol for three trees of different species, with varying sizes and growth forms. We validate the models by comparing branch diameter and internode measurements from our models with direct measurements of the corresponding points in the actual trees, which were felled for this purpose. We also examine the consistency of the models produced from photographs taken in different environmental conditions by comparing independently built models of the same tree.

Methods

Site and focal trees

Three open-grown trees of different species with varying size, shape, and branching complexity were chosen within the grounds of The Morton Arboretum in Lisle, Illinois. Two trees from the arboricultural research area of the Arboretum were selected: an American elm (Ulmus americana cultivar ‘New Horizon’) and a black walnut (Juglans nigra). A white oak (Quercus alba), grown in the living collections, was included in the study because it was being removed due to its decline and death. Photo sets of each tree were captured in the winter when the trees had no leaves. Photo sets were captured once on a sunny day and once on an overcast day, for a total of six photo sets that were then processed into models (Fig. 1).

Photo capture

Flight paths were planned using Litchi Online Hub and iOS app (VC Technology Ltd, 22.99 USD), based on optimal paths determined by Gatziolis et al. (2015). For simplicity, paths were designed as stacked orbits rather than vertical spirals. Two concentric stacks of orbits were planned for each tree, with staggered heights (Fig. 2). The inner stack of orbits was as close to the focal tree as possible, generally 1–2 m from the outer edge of the crown. The lowest orbit was 4–5 m above the ground. Each subsequent orbit was placed 4–5 m above the last, continuing until the highest orbit was 1–3 m higher than the top of the tree. The outer stack of orbits was designed so that approximately 85% of the tree was visible in most photos. The lowest orbit was 6 m above the ground, and each subsequent orbit was 4–5 m above the last, continuing until the highest orbit was 5–10 m above the top of the tree. The drone was set to fly at approximately 2.5 km/h.
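The stacked-orbit layout described above can be sketched in a few lines of Python. This is a minimal illustration and not the planning tool used in the study: the function name `orbit_altitudes`, its parameters, and the 15 m tree height are hypothetical, and a single fixed spacing is assumed where the text gives a 4–5 m range.

```python
def orbit_altitudes(first_m, spacing_m, tree_height_m, min_clearance_m):
    """Return orbit altitudes (m above ground) for one stack of orbits.

    Orbits start at first_m and are added every spacing_m until the
    highest orbit is at least min_clearance_m above the top of the tree.
    All names and arguments here are illustrative assumptions.
    """
    if spacing_m <= 0:
        raise ValueError("spacing_m must be positive")
    altitudes = [first_m]
    while altitudes[-1] < tree_height_m + min_clearance_m:
        altitudes.append(altitudes[-1] + spacing_m)
    return altitudes


# Hypothetical 15 m tall tree, 4 m spacing in both stacks.
inner = orbit_altitudes(first_m=4.0, spacing_m=4.0,
                        tree_height_m=15.0, min_clearance_m=1.0)
outer = orbit_altitudes(first_m=6.0, spacing_m=4.0,
                        tree_height_m=15.0, min_clearance_m=5.0)
print("inner stack:", inner)
print("outer stack:", outer)
```

Starting the two stacks at different heights with the same spacing gives the staggered arrangement. The loop only enforces the minimum clearance above the tree top, so the spacing may need adjusting within the 4–5 m range to keep the top orbit inside the stated 1–3 m or 5–10 m window.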

Fig. 2 Schematic of target placement and orbit locations during photo capture. Green circle represents tree canopy. Squares represent targets placed near the base of the tree (light blue), in an inner circle (yellow) and in an outer circle (dark blue). Lines connecting squares represent distances measured for scale bars. Dashed circles indicate inner and outer orbits. Orbits were stacked at 4–5 m intervals

Fourteen circular coded targets (available from Agisoft Photoscan, Agisoft LLC) were printed in gray at 46.5 cm diameter and placed on 49.5 × 76 cm black poster board (~ 20 USD). Two targets were placed flat on the ground at the base of the tree. The remaining targets were placed in two concentric circles around the tree (Fig. 2). The inner circle consisted of 7 targets, propped up at a 6.8° angle and placed 2–3 m wider than the crown of the tree. The outer circle consisted of 5 targets, propped up at a 30.4° angle and placed 2–3 m wider than the inner circle. Distances between the two targets at the base of the tree and three additional pairs of targets were measured to be used to scale the model. All targets were secured in place with garden stakes.

A DJI Inspire 2 (2999 USD + 954 USD for two sets of batteries) with Zenmuse X5S camera and gimbal (1899 USD) was flown using the automatic flight paths. The camera and gimbal angle were controlled manually to capture photos of as much of the tree as possible every 2–4 s. Litchi waypoint planning gives the option of stopping at each waypoint to take a photo automatically; however, this function was not used because frequent stops greatly decrease battery life. The drone was then flown manually to capture additional photos from ~ 1 to 2 m off the ground with the camera gimbal pointed slightly upwards, and to capture photos from any areas that were avoided during the automatic flight paths (often due to the presence of a nearby object).

Photo processing

All models were processed using a workstation with dual Intel Xeon E5-2667 3.2 GHz CPUs, 128 GB of DDR4-2400 RAM, and four NVIDIA GeForce GTX 1080 GPUs, running the Windows 10 Pro 64-bit operating system (13,760 USD).

Automatic photo alignment

Each photo set was processed individually using Agisoft Photoscan version 1.4.3 (Agisoft LLC, 550 USD) in its own .psx file. The ground altitude was estimated based on the altitude of the lowest orbit and entered into the software. The “estimate image quality” feature was used to identify photos with poor quality. Photos with a quality score below 0.6 were disabled. Because the Zenmuse X5S camera uses a rolling shutter, the “allow rolling shutter compensation” feature was enabled. Photos were aligned using the parameters listed in Table 1.

Alignment review and manual realignment

After initial alignment, coded targets were marked manually in the photos, because the handmade targets did not have the precise patterns needed to be recognized automatically by the software. After a target is manually marked in two photos, the software projects the placement of that target onto all aligned photos, with incorrect projections indicating poor alignment. With perfect alignment, all projections would be in the exact center of the target, but after extensive trial and error, we determined that the time necessary to achieve perfect alignment is not worth the benefits to the model. Here, we consider a projection correct if it is placed on the target board, even if it is not exactly in the center of the target. The first target was marked in two photos taken from high angles that were assumed to be aligned correctly based on initial placement relative to the reconstructed point cloud. High-angle photos were used for initial target placement, because they align correctly more often than low-angle photos during automatic alignment. Each subsequent target was marked in any two high-angle photos onto which all previously marked targets were correctly projected. Ensuring that existing targets are projected correctly in a photo before marking new targets prevents alignment within separate clusters of photos that are not aligned with each other. Once all fourteen targets were marked in two photos, all photos were examined for incorrect projections, which would indicate poor alignment. Alignment was reset in any photo in which projections were missing or placed off the target board. After alignment was reset in all incorrectly aligned photos, the cameras were optimized. Targets were marked in additional aligned photos until each target was marked in at least 10% of the total number of photos, the average error for all targets was less than 0.8 pixels, and the error for each target was less than 1.0 pixel.

Additional photos were aligned manually by marking up to four visible targets in each unaligned photo, leaving at least one target unmarked as a control, and then aligning the photo. If the photo aligned and projections of control targets were correct, the alignment was accepted. If any target projection in the newly aligned photo was incorrect, the alignment was rejected and reset. The cameras were optimized throughout this process. After manual alignment was attempted in each unaligned photo once, the remaining unaligned photos were worked through again, using the same criteria to accept or reject alignment. Any photos that did not correctly align after two attempts were not used to build the final model. Targets were marked in additional aligned photos until once again each target was marked in at least 10% of the total number of photos, the average error for all targets was less than 0.8 pixels, and the error for each target was less than 1.0 pixel.
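The three stopping criteria for target marking (coverage of at least 10% of photos, mean error below 0.8 pixels, and per-target error below 1.0 pixel) can be expressed as a simple check. The sketch below is illustrative only: the function `targets_meet_criteria`, the dictionary layout, and the example values are assumptions, and in practice the counts and errors would be read from the photogrammetry software.

```python
def targets_meet_criteria(marks_per_target, errors_px, n_photos,
                          min_fraction=0.10,
                          max_mean_error_px=0.8,
                          max_target_error_px=1.0):
    """Check the three stopping criteria for target marking.

    marks_per_target: target label -> number of photos it is marked in
    errors_px:        target label -> projection error in pixels
    n_photos:         total number of photos in the photo set
    """
    if not errors_px or not marks_per_target:
        raise ValueError("at least one target is required")
    enough_marks = all(n >= min_fraction * n_photos
                       for n in marks_per_target.values())
    mean_error = sum(errors_px.values()) / len(errors_px)
    each_ok = all(e < max_target_error_px for e in errors_px.values())
    return enough_marks and mean_error < max_mean_error_px and each_ok


# Hypothetical photo set of 300 photos with two targets.
marks = {"target 1": 35, "target 2": 30}
good = targets_meet_criteria(marks, {"target 1": 0.4, "target 2": 0.9}, 300)
bad = targets_meet_criteria(marks, {"target 1": 0.4, "target 2": 1.2}, 300)
print(good, bad)
```

In the second call, the 1.2 pixel error on one target exceeds the per-target limit, so marking would continue.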

Cleaning sparse cloud

The sparse cloud was cleaned using the “gradual selection” tool. Points with reprojection error greater than 0.5 were selected and deleted, and the cameras were optimized. Points with reconstruction uncertainty greater than 70 were then selected and deleted, and the cameras were optimized. Finally, points with projection accuracy greater than 8 were selected and deleted, and the cameras were optimized.
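The order of the three filters can be illustrated with a short sketch. It applies the thresholds from the text to hypothetical tie-point records, and it is not the software's own "gradual selection" implementation. In particular, the camera optimization between steps, which changes the statistics of the remaining points, is not modeled; the field names are assumptions.

```python
# Thresholds from the text, applied in this order.
THRESHOLDS = [
    ("reprojection_error", 0.5),
    ("reconstruction_uncertainty", 70.0),
    ("projection_accuracy", 8.0),
]


def clean_sparse_cloud(points, thresholds=THRESHOLDS):
    """Drop tie points exceeding each threshold, one criterion at a time.

    points: list of dicts holding the three statistics (hypothetical layout).
    Returns the surviving points and the number removed per criterion.
    """
    removed = {}
    for key, limit in thresholds:
        kept = [p for p in points if p[key] <= limit]
        removed[key] = len(points) - len(kept)
        points = kept
        # In the real workflow the cameras are optimized at this point;
        # this sketch leaves the remaining statistics unchanged.
    return points, removed


# Hypothetical tie points.
cloud = [
    {"id": 1, "reprojection_error": 0.3, "reconstruction_uncertainty": 20.0, "projection_accuracy": 3.0},
    {"id": 2, "reprojection_error": 0.7, "reconstruction_uncertainty": 20.0, "projection_accuracy": 3.0},
    {"id": 3, "reprojection_error": 0.4, "reconstruction_uncertainty": 90.0, "projection_accuracy": 3.0},
    {"id": 4, "reprojection_error": 0.4, "reconstruction_uncertainty": 50.0, "projection_accuracy": 10.0},
]
kept, removed = clean_sparse_cloud(cloud)
print([p["id"] for p in kept], removed)
```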

Model scaling

Scale bars were created between four pairs of targets using the measurements taken at the time of photo capture. After the distances were entered, scaling was updated.
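To show what scaling with several scale bars amounts to, the sketch below fits one scale factor to pairs of model and field distances by least squares through the origin, then reports the residual for each bar. This is an assumed stand-in for illustration; the procedure used internally by the software is not described in this protocol, and the distances are hypothetical.

```python
def scale_from_bars(bars):
    """Estimate a single scale factor from scale bars.

    bars: list of (model_distance, measured_distance_m) pairs, where
    model_distance is in arbitrary model units.
    Returns (scale, residuals_m), where each residual is the scaled model
    distance minus the field measurement.
    """
    if not bars:
        raise ValueError("at least one scale bar is required")
    num = sum(model * measured for model, measured in bars)
    den = sum(model * model for model, _ in bars)
    scale = num / den
    residuals = [scale * model - measured for model, measured in bars]
    return scale, residuals


# Four hypothetical scale bars, as in the protocol (values are made up).
bars = [(2.0, 4.0), (3.0, 6.0), (5.0, 10.0), (1.5, 3.0)]
scale, residuals = scale_from_bars(bars)
print(scale, residuals)
```

With real data the residuals would not be zero, and their size indicates how well the scaled model agrees with the field measurements.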

Dense cloud generation and cleaning

The sparse cloud was split into two chunks to generate the dense cloud. In one chunk, the bounding box was centered around the crown of the tree, but did not extend to the ground, and therefore did not include the base of the trunk. The second chunk included only the base of the trunk and a small portion of the ground around it. Including only a small portion of the ground in dense cloud generation greatly reduces the processing time and increases the quality of the model. Unaligned photos were disabled in both chunks. A dense cloud was generated in both chunks using the parameters listed in Table 1. After the dense clouds were built, the two chunks were merged by checking “merge targets,” “merge tie points,” and “merge dense cloud.” The final dense cloud was cleaned manually to remove extraneous points surrounding the focal tree, and the “select by color” feature was used to remove white, gray, and blue points from the sky that often appear as noise in the tree crown. There was no attempt to manually clean noise within the crown.

Mesh generation

The bounding box was resized to include the entire dense cloud. The mesh was then generated using the parameters listed in Table 1.

Validating accuracy

We labeled branches growing from the trunk as primary, branches growing from primary branches as secondary, and so on. Half of the primary branches of each tree were selected, and the branching points (nodes) were labeled. A subset of the labeled nodes was randomly selected to be measured. One model of the elm captured only 30 nodes in the selected primary branches, so all 30 were used. When the walnut was felled, several of the selected branches broke off and could not be correctly identified, so two additional secondary branches were randomly selected to be measured.

The models were measured using the ruler tool in Agisoft Photoscan. Four measurements were made at each selected node: two diameter and two length measurements (Fig. 3). The diameters of both branches were measured perpendicular to the branch just past the branching point. Length measurements were taken on each branch from the selected node to a subsequent node (“interior internode”), or to the end of the branch when there was no subsequent node (“terminal internode”). Internodes were measured as the shortest distance from the selected node to a subsequent node and did not take into account the curvature of the branch segment.
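Because internodes were measured as straight-line distances, the reported length of a curved segment is shorter than its length along the branch. The following sketch, with hypothetical coordinates and function names, contrasts the two quantities.

```python
import math


def straight_internode(node_a, node_b):
    """Straight-line distance between two nodes given as (x, y, z)."""
    return math.dist(node_a, node_b)


def path_length(points):
    """Length along a polyline of (x, y, z) points that follows the branch."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


# Hypothetical curved branch segment sampled at three points (units arbitrary).
branch = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (6.0, 0.0, 0.0)]
chord = straight_internode(branch[0], branch[-1])
along = path_length(branch)
print(chord, along)
```

Here the straight-line internode is 6 units while the path along the branch is 10 units, so the measure used in this study is a lower bound on true segment length.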

The focal trees were felled, and the nodes were labeled to match the labeling of the models. Diameter and length measurements were taken using a DBH tape and measuring tape, respectively. Calipers were used to measure diameters of branches thinner than 3 cm and of branches that the DBH tape could not be wrapped around due to obstructions. Measurements from nodes that could not be confidently matched between models and the actual tree were excluded from the analysis. Measurements from branches that broke when the tree was felled were also excluded.

Results

Photo capture and processing

Two photo sets were captured of each tree without leaves, for a total of six photo sets. Although the flight paths were consistent for the two models of each tree, the photos were taken manually, resulting in photo sets ranging in size from 248 to 581 photos. Automating photo capture in the flight paths would help maintain consistency in photo number across photo sets. After processing, the average error of target projections was 0.38 pixels. The average error between our scale bar measurements and the measurements estimated by the software was 0.02 m. The mean number of points per cloud was 10,274,940, and ranged from 2,428,312 to 23,769,356. A small portion of the points is noise that was not removed or points that are duplicated in the portion of the trunk that overlapped in the two separate chunks when the dense cloud was processed.

Processing took 33.8 h on average (Fig. 4), of which 24.3 h was automatic processing. Manual alignment of photos made up the majority of the manual processing time (~ 7 h, ~ 73%). Using coded targets that the software automatically recognizes should greatly reduce the time required for this step; the coded targets used for these models were not made with the precision required for consistent automatic recognition.
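The breakdown of processing time can be checked with simple arithmetic on the figures reported above; the variable names are ours.

```python
total_h = 33.8      # mean total processing time (from the text)
automatic_h = 24.3  # mean automatic processing time (from the text)
alignment_h = 7.0   # approximate manual alignment time (from the text)

manual_h = total_h - automatic_h
alignment_share = alignment_h / manual_h
print(f"manual processing: {manual_h:.1f} h")
print(f"alignment share of manual time: {alignment_share:.2f}")
```

This gives about 9.5 h of manual processing, with manual alignment taking a little under three quarters of it. That is in line with the reported ~ 73%; the small gap presumably reflects rounding of the ~ 7 h figure.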

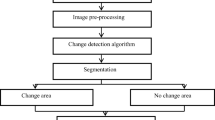

Fig. 4 Flow chart showing model production process and time required for each step. Yellow boxes are automated, while blue boxes require manual input. Time required for automated steps is listed in hours as mean [first quartile, third quartile]. Time required for manual steps is listed in hours as estimated mean

Model accuracy

Overall, the accuracy of our models for both diameters and interior internodes was high, even for the smallest size classes (r² = 0.87 and 0.98, respectively). In general, branch diameter was overestimated, while segment lengths were consistently but only slightly underestimated for all size classes (Table 2). The average relative error of diameter measurements was greater for branches smaller than 5 cm in diameter (p < 2.2 × 10⁻¹⁶), but the average error magnitude changed by less than 1 cm across all size classes (Fig. 5).

Fig. 5 Accuracy of tree structural model estimates for diameters, interior internodes, and terminal internodes. In each panel, the errors of the two model estimates are connected by a vertical line for a diameter; b interior internode; and c terminal internode. y-axes show the error of model estimates compared to direct measurements, and x-axes show branch diameter. Values are color coded by tree species: elm (red), oak (blue), and walnut (gold)

The relative errors in interior internode estimates were greatest for branches less than 5 cm in diameter (p = 0.0332), although there was not a clear trend in underestimates across size classes (Table 2). Average error magnitude of interior internodes for branches greater than 10 cm in diameter was less than 3.6 cm (2.99–8.74%), and was slightly but significantly larger for branches smaller than 10 cm in diameter (4.11–4.20 cm, p < 5.7 × 10⁻¹²), resulting in 11.16–16.81% errors.
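The distinction between error magnitude and relative error drawn above can be made concrete with a small sketch. It compares paired model and direct measurements and reports signed error, absolute error, relative error, and r² computed as the squared Pearson correlation. The function name and data are hypothetical, and the exact formulas used in the analysis are not stated in the text, so these definitions are assumptions.

```python
def accuracy_summary(model, direct):
    """Compare paired model estimates with direct measurements.

    Positive signed errors mean the model overestimates.
    Returns a dict with mean signed error, mean absolute error,
    mean relative error (%), and r^2 (squared Pearson correlation).
    """
    if len(model) != len(direct) or len(model) < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")
    n = len(model)
    errors = [m - d for m, d in zip(model, direct)]
    mean_m = sum(model) / n
    mean_d = sum(direct) / n
    cov = sum((m - mean_m) * (d - mean_d) for m, d in zip(model, direct))
    var_m = sum((m - mean_m) ** 2 for m in model)
    var_d = sum((d - mean_d) ** 2 for d in direct)
    return {
        "mean_error": sum(errors) / n,
        "mean_abs_error": sum(abs(e) for e in errors) / n,
        "mean_rel_error_pct": 100 * sum(abs(e) / d for e, d in zip(errors, direct)) / n,
        "r_squared": cov ** 2 / (var_m * var_d),
    }


# Hypothetical diameters (cm): every branch is overestimated by 1 cm.
direct = [2.0, 4.0, 10.0]
model = [3.0, 5.0, 11.0]
summary = accuracy_summary(model, direct)
print(summary)
```

In this made-up example the absolute error is the same for every branch, yet the relative error is 50% for the 2 cm branch and 10% for the 10 cm branch. This is the pattern described for thin branches.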

The models did not capture the full length of branches and therefore the full crown extent, likely due to the resolution of the images and the complexity of small distal branches, resulting in high terminal internode estimate errors (Table 2 and Fig. 5c). The length to the end of the branch was underestimated for most size classes and the errors were between 43 and 63 cm. The correlation coefficient was 0.20. Size class was determined by the node where the length measurement starts, so terminal internodes in larger size classes start closer to the base of the branch. The largest node at the beginning of a terminal internode was 14.5 cm in diameter, meaning that all branches thicker than 14.5 cm in diameter were captured in the models.

Model consistency

Models were consistent for the same tree in different light conditions (Figs. 1, 6), with the difference between models being less than the error between the model and the tree in almost every instance (Table 3). The difference between diameter measurements was greatest for branches larger than 20 cm (p = 0.0128), although the relative difference was lower for branches larger than 10 cm (p = 0.2115). There were also greater differences in larger branches for interior internode length. The difference and relative difference for terminal internodes peaked in the 5–10 cm size class (32.17 cm, 122.95%).
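A consistency comparison of this kind reduces to pairing the corresponding measurement from each model and taking the difference. In the sketch below, the relative difference is expressed against the mean of the two values. That definition is an assumption, since the text does not state the denominator used, and the measurements are hypothetical.

```python
def model_difference(model_a, model_b):
    """Absolute and relative difference between paired measurements.

    model_a, model_b: corresponding measurements from two models of one tree.
    Relative difference (%) is taken against the mean of the two values,
    which is an assumed definition.
    """
    if len(model_a) != len(model_b):
        raise ValueError("measurements must be paired")
    results = []
    for a, b in zip(model_a, model_b):
        diff = abs(a - b)
        rel_pct = 100 * diff / ((a + b) / 2)
        results.append((diff, rel_pct))
    return results


# Hypothetical diameters (cm) from a sunny and an overcast model.
sunny = [10.0, 30.0]
overcast = [12.0, 30.0]
differences = model_difference(sunny, overcast)
print(differences)
```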

Fig. 6 Consistency of tree structural model estimates for diameters, interior internodes, and terminal internodes. The median, first and third quartiles, and 95% confidence interval of the error in measurements from models made under two ambient light conditions are shown for a diameters; b interior internodes; and c terminal internodes. y-axes show the error of model estimates. Values are color coded by tree species: elm (red), oak (blue), and walnut (gold). Dark colors indicate overcast conditions; light colors indicate sunny conditions

Branch diameter was more accurately estimated in models captured during overcast conditions than sunny conditions (Fig. 6) across measurements from all trees (p = 0.0056). This pattern was significant between the two models of the elm (p = 0.0129), and between the two models of the oak (p = 0.0042). Diameter measurements from the overcast walnut model were slightly but not significantly more accurate than those from the sunny walnut model (p = 0.075). Light conditions had no significant effect on internode errors across trees. The magnitude of errors in terminal internode length estimates in the elm was greater in the sunny model than the overcast model (p = 0.0110).

Discussion

Our validation study demonstrates that models obtained through drone-based photogrammetry can capture the diameter and length of interior internodes down to 3 cm in diameter to within 2.50 cm and 3.37 cm error, respectively, but underestimate the length of thin, distal branches that make up the full extent of the crown by up to 63.31 cm (Table 2). The accuracy of measurements from LiDAR models of fully leafed-out trees in dense forests, reported only for branches greater than 10 cm in diameter (Lau et al. 2018), and from LiDAR models where only branch volume and diameter were measured (Dassot et al. 2010), was similar to or slightly lower than our results. In our models, diameters were consistently overestimated and interior internode lengths were underestimated, which can both be explained by noise within acute angle branches being incorporated into the model (Fig. 7). Manual cleaning of the dense point cloud may reduce these errors, but it is an extremely time-consuming process. These results were consistent across three different growth forms and in different light conditions. The elm, with dense and slender branches, had larger errors (p < 2.2 × 10⁻¹⁶), greatly underestimating the lengths of terminal internodes, which was likely due to the large number of overlapping thin distal branches.

Fig. 7 Diameter overestimation and internode underestimation. Screenshots of dense point cloud (a), where gray noise points (indicated by arrow) are visible and separate from the branch, and mesh (b), where noise (indicated by arrow) is included in the branch structure. Lines show where diameter (orange) and internode (blue) should have been measured (a), and where they were measured (b)

Ambient light conditions (Fig. 1) affected contrast and shadows and slightly impacted the accuracy of the model. For both the oak and the elm, errors in diameter measurements were 0.53 cm smaller in models created under overcast conditions (p = 0.0009). One model of the walnut was made from photos in conditions that changed between overcast and sunny, which prevents comparison of the walnut models as two ends of a spectrum, but it provides an example of an intermediate condition. The overcast–sunny model had slightly but not significantly lower diameter errors than the sunny model, supporting the trend indicated by the oak and elm models. The sunny elm model had greater error in terminal internode measurements than the overcast model (p = 0.0110). Although the differences were minimal, preliminary results indicate that environmental conditions are more important than the size of the photo set: a larger photo set (286 photos) taken in sunny conditions produced a worse model of the elm than a smaller photo set (248 photos) taken in overcast conditions.

Based on our analyses, we propose that the best conditions for modeling a leafless tree are an overcast day with no wind when the ground is covered with snow (Fig. 8). On sunny days, the glare from the sun can distort the appearance of branches (Fig. 8a) and the movement of shadows throughout the course of photo capture causes changes in color, exposure, and focal length that can cause alignment problems (Fig. 8b, c). Snow on the ground can greatly improve the capture of thin distal branches, as the solid white background creates high contrast against the dark branches (Fig. 8d–g). The errors of terminal internodes were slightly lower (p = 0.45) in the sunny oak model, which was captured with a snowy background.

Fig. 8 Environmental factors impacting model reconstruction. a Photo taken looking into the sun. Red circle indicates branches that are distorted due to glare. b, c Photos of a target taken 9 min apart. Photo of a tree with a grass background (d) and the model it produces (f); the same tree with a snowy background (e) and the model it produces (g). Orange and blue circles show corresponding branches in photos and models. h, i Photos of a tree taken 43 min apart. Purple circles show change in snow cover

Our protocol for producing models would likely be most improved by standardization and intensification of the contrast between the tree’s branches and the environmental background, as the results from the model obtained with a snow-covered background show (Fig. 8d–g). Even-colored ground covers can be placed around the base of the tree for high-angle photos, but low-angle oblique views of the tree include broad landscapes. Using snow as a solid background is complicated by the fact that the snow may melt during photo capture due to rapidly rising temperatures and direct sunlight (Fig. 8h, i), resulting in a changing background that may be worse for photo alignment than an unchanging but low-contrast background. Low temperatures while snow is present should not restrict drone use, as the DJI Inspire 2 and Zenmuse X5S function at temperatures as low as −20 and −10 °C, respectively.

The ability to quickly and affordably obtain accurate high-resolution models through the use of drone-based photogrammetry techniques has the potential to enable rapid advances in our understanding of tree structure, form, and growth, and how they relate to environmental factors through time. With a total cost of 20,205 USD for equipment and software, this protocol is not cost prohibitive. While the use of drones is limited in closed canopy forested environments, this aerial platform provides a powerful tool for building models of open-grown trees. Ideally, this protocol would be used for trees with no objects or passersby within ten meters of the edge of the tree’s crown. Objects within ten meters make flight planning and execution more difficult, but can be avoided. The presence of pedestrians or any sort of traffic within ten meters may require special precautions and legal waivers, as legal restrictions prohibit commercial drone use in densely populated areas. We do not foresee legal restrictions greatly limiting the use of this protocol, as the intended focal trees are relatively isolated, often located in gardens, parks, or on private property. These models can be applied to the study of many aspects of tree biology and questions regarding their health in open environments that are both natural and built. Photogrammetric models also easily capture colors that can indicate health and phenology. Empirical information about tree structure has been widely neglected in numerous questions in ecology and plant ecophysiology, despite having considerable relevance to management issues (James et al. 2014) related to assessment of failure risk (James et al. 2006), impact of diseases, and pruning practices, and research questions ranging from whole-tree hydraulics to calculation of carbon stocks (Malhi et al. 2018).
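The total cost quoted above can be reproduced by summing the itemized prices given in the Methods. The item labels below are ours, and the poster board price is the approximate figure from the text.

```python
# Itemized prices in USD, as listed in the Methods.
costs_usd = {
    "Litchi flight planning app": 22.99,
    "poster board for targets (approximate)": 20.00,
    "DJI Inspire 2": 2999.00,
    "two sets of batteries": 954.00,
    "Zenmuse X5S camera and gimbal": 1899.00,
    "processing workstation": 13760.00,
    "Agisoft Photoscan license": 550.00,
}

total_usd = sum(costs_usd.values())
print(f"total: {total_usd:,.0f} USD")
```

The workstation accounts for roughly two thirds of the total, so access to existing computing resources would lower the cost of entry considerably.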

We demonstrate that models capturing branches down to 3 cm in diameter can be obtained through drone-based photogrammetry for open-grown trees with different growth forms. Models of trees in the living collections of The Morton Arboretum will form the foundation of a “tree observatory” that will incorporate numerous streams of data, obtained simultaneously, into a “whole tree” perspective of the tree’s behavior, growth, and interaction with the abiotic and biotic environment (Cannon et al. 2018). Continuous monitoring of baseline and foundational aspects of tree biology over long periods of time will provide insights into a number of basic and applied questions in the arboriculture industry, urban forestry, detection and control of pests and diseases, phenology, and overall health of open-grown trees. Understanding open-grown trees will be increasingly important, as these trees continue to play a large role in the health of people in built environments and provide key biodiversity resources in pastures and marginal areas.

Author contribution statement

CC and CS devised the idea. CS and EG executed the project. CS analyzed the results. CS, CC, and EG wrote the manuscript.

References

Allen MT, Prusinkiewicz P, DeJong TM (2005) Using L-systems for modeling source-sink interactions, architecture and physiology of growing trees: the L-PEACH model. New Phytol 166(3):869–880

Arnfield AJ (2003) Two decades of urban climate research: a review of turbulence, exchanges of energy and water, and the urban heat island. Int J Climatol 23(1):1–26

Baltsavias E, Gruen A, Eisenbeiss H, Zhang L, Waser LT (2008) High-quality image matching and automated generation of 3D tree models. Int J Remote Sens 29(5):1243–1259

Brede B, Lau A, Bartholomeus HM, Kooistra L (2017) Comparing RIEGL RiCOPTER UAV LiDAR derived canopy height and DBH with terrestrial LiDAR. Sensors 17(10):2371

Cannon CH, Scher CL, Gao AT, Khan T, Kua CS (2018) Building a tree observatory. Acta Hortic 1222:85–92

Colomina I, Molina P (2014) Unmanned aerial systems for photogrammetry and remote sensing: a review. ISPRS J Photogramm Remote Sens 92:79–97

Côté J-F, Widlowski J-L, Fournier RA, Verstraete MM (2009) The structural and radiative consistency of three-dimensional tree reconstructions from terrestrial lidar. Remote Sens Environ 113(5):1067–1081

Dassot M, Barbacci A, Colin A, Fournier M, Constant T (2010) Tree architecture and biomass assessment from terrestrial LiDAR measurements: a case study for some beech trees (Fagus sylvatica). SilviLaser Freiburg 2010:206–215

Disney MI, Boni Vicari M, Burt A, Calders K, Lewis SL, Raumonen P, Wilkes P (2018) Weighing trees with lasers: advances, challenges and opportunities. Interface Focus 8(2):20170048

Fritz A, Kattenborn T, Koch B (2013) UAV-based photogrammetric point clouds—tree stem mapping in open stands in comparison to terrestrial laser scanner point clouds. Int Arch Photogramm Remote Sens Spat Inf Sci 40:141–146

Gatziolis D, Lienard JF, Vogs A, Strigul NS (2015) 3D tree dimensionality assessment using photogrammetry and small unmanned aerial vehicles. PLoS One 10(9):e0137765

Hackenberg J, Spiecker H, Calders K, Disney M, Raumonen P (2015) SimpleTree—an efficient open source tool to build tree models from TLS clouds. Forests 6(11):4245–4294

Halle F, Oldeman RAA, Tomlinson PB (1978) Tropical trees and forests: an architectural analysis, 1st edn. Springer, Berlin

Henning JG, Radtke PJ (2006) Detailed stem measurements of standing trees from ground-based scanning lidar. For Sci 52(1):67–80

Hirons A, Thomas PA (2018) Applied tree biology. Wiley, New York

James KR, Haritos N, Ades PK (2006) Mechanical stability of trees under dynamic loads. Am J Bot 93:1522–1530

James KR, Dahle GA, Grabosky J, Kane B, Detter A (2014) Tree biomechanics literature review: dynamics. Arboricult Urban For 40:1–15

Koeser AK, Roberts JW, Miesbauer JW, Lopes AB, Kling GJ, Lo M, Morgenroth J (2016) Testing the accuracy of imaging software for measuring tree root volumes. Urban For Urban Green 18:95–99

Lau A, Bentley LP, Martius C, Shenkin A, Bartholomeus H, Raumonen P, Malhi Y, Jackson T, Herold M (2018) Quantifying branch architecture of tropical trees using terrestrial LiDAR and 3D modelling. Trees 32(5):1219–1231

Lefsky MA, Cohen WB, Parker GG, Harding DJ (2002) Lidar remote sensing for ecosystem studies: lidar, an emerging remote sensing technology that directly measures the three-dimensional distribution of plant canopies, can accurately estimate vegetation structural attributes and should be of particular interest to forest, landscape, and global ecologists. Bioscience 52(1):19–30

Lehmann A, Stahr K (2007) Nature and significance of anthropogenic urban soils. J Soils Sediments 7(4):247–260

Lisein J, Pierrot-Deseilligny M, Bonnet S, Lejeune P (2013) A photogrammetric workflow for the creation of a forest canopy height model from small unmanned aerial system imagery. Forests 4(4):922–944

Malhi Y, Jackson T, Patrick Bentley L, Lau A, Shenkin A, Herold M, Calders K, Bartholomeus H, Disney MI (2018) New perspectives on the ecology of tree structure and tree communities through terrestrial laser scanning. Interface Focus 8(2):20170052

Miller J, Morgenroth J, Gomez C (2015) 3D modelling of individual trees using a handheld camera: accuracy of height, diameter and volume estimates. Urban For Urban Green 14(4):932–940

Morgenroth J, Gomez C (2014) Assessment of tree structure using a 3D image analysis technique—a proof of concept. Urban For Urban Green 13(1):198–203

Nowak DJ, Greenfield EJ (2018) US urban forest statistics, values, and projections. J For 116(2):164–177

Pádua L, Vanko J, Hruška J, Adão T, Sousa JJ, Peres E, Morais R (2017) UAS, sensors, and data processing in agroforestry: a review towards practical applications. Int J Remote Sens 38(8–10):2349–2391

Parmain G, Bouget C (2018) Large solitary oaks as keystone structures for saproxylic beetles in European agricultural landscapes. Insect Conserv Divers 11(1):100–115

Prusinkiewicz P, Lindenmayer A (1990) The algorithmic beauty of plants. Springer, Berlin

Quigley MF (2004) Street trees and rural conspecifics: will long-lived trees reach full size in urban conditions? Urban Ecosyst 7(1):29–39

Raumonen P, Kaasalainen M, Åkerblom M, Kaasalainen S, Kaartinen H, Vastaranta M, Holopainen M, Disney M, Lewis P (2013) Fast automatic precision tree models from terrestrial laser scanner data. Remote Sens 5(2):491–520

Roşca S, Suomalainen J, Bartholomeus H, Herold M (2018) Comparing terrestrial laser scanning and unmanned aerial vehicle structure from motion to assess top of canopy structure in tropical forests. Interface Focus 8(2):20170038

Rosell JR, Llorens J, Sanz R, Arnó J, Ribes-Dasi M, Masip J, Escolà A, Camp F, Solanelles F, Gràcia F, Gil E, Val L, Planas S, Palacín J (2009) Obtaining the three-dimensional structure of tree orchards from remote 2D terrestrial LIDAR scanning. Agric For Meteorol 149(9):1505–1515

Sebek P, Vodka S, Bogusch P, Pech P, Tropek R, Weiss M, Zimova K, Cizek L (2016) Open-grown trees as key habitats for arthropods in temperate woodlands: the diversity, composition, and conservation value of associated communities. For Ecol Manag 380:172–181

Sterck FJ, Schieving F, Lemmens A, Pons TL (2005) Performance of trees in forest canopies: explorations with a bottom-up functional-structural plant growth model. New Phytol 166(3):827–843

Tan P, Zeng G, Wang J, Kang SB, Quan L (2007) Image-based tree modeling. ACM Trans Gr 26(3):87

Torres-Sánchez J, de Castro IA, Peña JM, Jiménez-Brenes FM, Arquero O, Lovera M, López-Granados F (2018) Mapping the 3D structure of almond trees using UAV acquired photogrammetric point clouds and object-based image analysis. Biosyst Eng 176:172–184

Wallace L, Lucieer A, Malenovský Z, Turner D, Vopěnka P (2016) Assessment of forest structure using two UAV techniques: a comparison of airborne laser scanning and structure from motion (SfM) point clouds. Forests 7(3):62

Wu J, Cawse-Nicholson K, van Aardt J (2013) 3D tree reconstruction from simulated small footprint waveform lidar. Photogramm Eng Remote Sens 79(12):1147–1157

Zarco-Tejada PJ, Diaz-Varela R, Angileri V, Loudjani P (2014) Tree height quantification using very high resolution imagery acquired from an unmanned aerial vehicle (UAV) and automatic 3D photo-reconstruction methods. Eur J Agron 55:89–99

Acknowledgements

We thank Marvin Lo, Taskeen Khan, and Catherine Luo for their help in collecting field measurements. We thank Marvin Lo additionally for his guidance as we improved our protocol using Agisoft Photoscan. We are very grateful to Ann Schultz for her help in processing photos and building models. Funding for this research was provided by the Center for Tree Science with support from the Hamill Family Foundation.

Additional information

Communicated by Thierry Fourcaud.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Scher, C.L., Griffoul, E. & Cannon, C.H. Drone-based photogrammetry for the construction of high-resolution models of individual trees. Trees 33, 1385–1397 (2019). https://doi.org/10.1007/s00468-019-01866-x

DOI: https://doi.org/10.1007/s00468-019-01866-x