Abstract

The first CT scanners in the early 1970s already used iterative reconstruction algorithms; however, lack of computational power prevented their clinical use. In fact, it took until 2009 for the first iterative reconstruction algorithms to become commercially available and replace conventional filtered back projection. Since then, this technique has generated considerable excitement in the field of radiology. Within a few years, all major CT vendors introduced iterative reconstruction algorithms for clinical routine, which evolved rapidly into increasingly advanced reconstruction algorithms. The complexity of algorithms ranges from hybrid and model-based to fully iterative algorithms. As a result, the number of scientific publications on this topic has skyrocketed over the last decade. But what exactly has this technology brought us so far? And what can we expect from future hardware as well as software developments, such as photon-counting CT and artificial intelligence? This paper will try to answer those questions by taking a concise look at the overall evolution of CT image reconstruction and its clinical implementations. Subsequently, we will give an outlook on future developments in this domain.

Key Points

• Advanced CT reconstruction methods are indispensable in the current clinical setting.

• IR is essential for photon-counting CT, phase-contrast CT, and dark-field CT.

• Artificial intelligence will potentially further increase the performance of reconstruction methods.

Since its introduction in 1972 [1, 2], computed tomography (CT) has evolved into a highly successful and indispensable diagnostic tool. The success story of CT is reflected by the number of annual CT exams, which increased by 6.5% per year over the last decade, resulting in a total of 80 million CT scans in 2015 in the USA [3]. After this first tomographic imaging modality was introduced, its technological development advanced rapidly. The first clinical CT scan took about 5 min, and image reconstruction took approximately the same time [2]. Despite long reconstruction times, image resolution was poor with only 80 × 80 pixels [2]. Nowadays, rotation times have shortened to approximately a quarter of a second per rotation, and detector coverage along the patient axis has increased up to 16 cm in high-end systems, allowing for imaging the whole heart in a single heartbeat [4]. Resolution of cross-sectional images has increased to 512 × 512 pixels for most clinical applications and to 1024 × 1024 pixels or more for state-of-the-art CT scanners [5, 6].

The increasing number of CT exams, however, has a major drawback. Radiation exposure to society has significantly increased since the introduction of CT imaging, which is especially problematic for younger patients. The combination of growing community awareness of exposure-associated health risks [7] and CT communities’ efforts to tackle them has already led to a significant reduction in CT dose. The most important way to reduce CT radiation exposure is clearly to use this technique only when the benefits outweigh the risks as well as the costs [8]. However, dose-reduction techniques are necessary whenever a CT scan is clinically indicated. Multiple dose-reduction methods have been introduced, including tube current modulation [9], organ-specific care [10], beam-shaping filters [11], and, most importantly, optimization of CT parameters. Essential parameters of every CT protocol include tube current (mA), tube voltage (kV), pitch, voxel size, slice thickness, reconstruction filters, and the number of rotations. It is essential to realize that different combinations of parameters yield significantly different image qualities while delivering the same radiation dose to the patient. For example, the combination of large pixels with a smooth filter can provide diagnostic quality for specific indications, while the same acquisition reconstructed with smaller pixels and a sharper filter would provide non-diagnostic quality because of a higher level of noise and artifacts. In the clinical routine, radiation exposure is frequently controlled by adjusting the tube current. When the tube current is decreased, image noise increases (for quantum-limited data, approximately with the inverse square root of the tube current). Thus, another dose-reduction technique concerns the proper treatment of image noise and artifacts during the reconstruction of three-dimensional data from raw projection data. Originally, CT images were reconstructed with an iterative method called algebraic reconstruction technique (ART) [12]. 
Due to a lack of computational power, this technique was quickly replaced by simple analytic methods such as filtered back projection (FBP). FBP was the method of choice for decades, until the first iterative reconstruction (IR) technique was clinically introduced in 2009. This generated considerable excitement in the CT-imaging domain. Within a few years, all major CT vendors introduced IR algorithms for clinical use, which evolved rapidly into increasingly advanced reconstruction algorithms. In this paper, we will take a concise look at the overall evolution of CT image reconstruction and its clinical implementations. Subsequently, we will give an outlook on future developments in sparse-sampling CT [13], photon-counting CT [14], phase-contrast/dark-field CT [15, 16], and artificial intelligence [17].
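As a rough illustration of the relation between tube current and image noise discussed above, the following minimal Python sketch assumes purely quantum-limited (Poisson) statistics, so that noise scales with the inverse square root of the tube current-time product. Electronic noise, reconstruction filters, and IR are deliberately ignored; the function name `relative_noise` and the reference value of 200 mAs are hypothetical choices made only for this example.

```python
import math


def relative_noise(mas: float, reference_mas: float = 200.0) -> float:
    """Image noise relative to a reference protocol.

    Assumption: purely Poisson (quantum) noise, so noise ~ 1 / sqrt(mAs).
    The reference value is a hypothetical protocol setting.
    """
    if mas <= 0 or reference_mas <= 0:
        raise ValueError("tube current-time products must be positive")
    return math.sqrt(reference_mas / mas)


# Compare a few hypothetical settings against the reference protocol.
for mas in (200.0, 100.0, 50.0):
    print(f"{mas:5.0f} mAs -> noise x {relative_noise(mas):.2f}")
```

Under this assumption, halving the tube current raises the noise level by a factor of about 1.41, and a 75% reduction doubles it, which is why lowering the tube current alone quickly degrades FBP images.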

From concept to clinical necessity

In December 1970, Gordon et al presented initial work on ART [18], a method belonging to the class of IR algorithms that was initially applied to reconstruct cross-sectional images. However, due to a lack of computational power, ART was not clinically applicable, and a simpler algorithm, namely FBP, was the standard for decades. With FBP, CT slices are reconstructed from projection data (sinograms) by applying a high-pass filter followed by a backward projection step (Fig. 1A). With the fast progress in CT technology, FBP-based algorithms were improved and extended to keep up with hardware progress, for example with Feldkamp et al’s 1984 solution for the reconstruction of data from large area detectors [19]. In most circumstances, FBP works well and results in images with high diagnostic quality. However, due to increasing concerns about exposing (younger) patients to ionizing radiation, more CT scans were being acquired at a lower radiation dose. Unfortunately, this resulted in significantly reduced image quality, because image noise increases as radiation exposure decreases (for quantum-limited data, approximately with the inverse square root of the dose). Also, with the growing prevalence of obesity [20], the image quality of CT scans reconstructed with FBP deteriorated. With a larger body size, x-ray photon attenuation increases, so fewer photons reach the CT detector, which significantly reduces image quality. The benefit of FBP is the short reconstruction time; the major disadvantage is that it treats the raw data as input to a “black box” in which only very limited model and prior information can be applied, for example to properly model image noise when only a small number of photons reach the CT detector.
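The two FBP steps named above (high-pass filtering of each projection, then backward projection) can be sketched in a few lines of plain Python. This is a minimal illustration under simplifying assumptions, not a clinical implementation: it uses an idealized parallel-beam geometry with unit detector spacing, a discrete Ram-Lak (ramp) kernel, and linear interpolation, whereas clinical scanners use fan- or cone-beam geometries and vendor-specific filters. All function names are hypothetical.

```python
import math


def ramp_kernel(half_width):
    """Discrete Ram-Lak (ramp) high-pass kernel for unit detector spacing."""
    kernel = []
    for n in range(-half_width, half_width + 1):
        if n == 0:
            kernel.append(0.25)
        elif n % 2 == 0:
            kernel.append(0.0)
        else:
            kernel.append(-1.0 / (math.pi * n) ** 2)
    return kernel


def filter_projection(projection, kernel):
    """Step 1: convolve one projection with the ramp kernel (same-size output)."""
    half = len(kernel) // 2
    n_det = len(projection)
    filtered = [0.0] * n_det
    for i in range(n_det):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = i - (k - half)
            if 0 <= j < n_det:
                acc += weight * projection[j]
        filtered[i] = acc
    return filtered


def fbp(sinogram, angles, size):
    """Step 2: smear every filtered projection back across a size x size image."""
    n_det = len(sinogram[0])
    kernel = ramp_kernel(n_det - 1)
    center_det = (n_det - 1) / 2.0
    center_img = (size - 1) / 2.0
    image = [[0.0] * size for _ in range(size)]
    for projection, theta in zip(sinogram, angles):
        filtered = filter_projection(projection, kernel)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        for row in range(size):
            y = center_img - row
            for col in range(size):
                x = col - center_img
                # Detector position hit by the ray through pixel (x, y).
                t = x * cos_t + y * sin_t + center_det
                lo = math.floor(t)
                frac = t - lo
                if 0 <= lo < n_det - 1:
                    image[row][col] += (1.0 - frac) * filtered[lo] + frac * filtered[lo + 1]
    scale = math.pi / len(angles)  # angular integration weight over [0, pi)
    return [[value * scale for value in row] for row in image]


# Usage: the sinogram of a point object at the rotation center is a delta
# at the central detector element for every view angle.
n_det, n_angles = 33, 60
angles = [math.pi * k / n_angles for k in range(n_angles)]
point_projection = [1.0 if i == n_det // 2 else 0.0 for i in range(n_det)]
sinogram = [point_projection[:] for _ in angles]
image = fbp(sinogram, angles, size=n_det)
```

In this toy case the reconstructed image peaks at the central pixel. Note that noise never enters the computation explicitly: the filter is fixed, which is the "black box" limitation described above.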

Fig. 1 Filtered back projection (FBP), hybrid iterative reconstruction (IR), and model-based IR. With FBP, images are reconstructed from projection data (sinograms) by applying a high-pass filter followed by a backward projection step (left column). In hybrid IR, the projection data are iteratively filtered to reduce artifacts, and after the backward projection step, the image data are iteratively filtered to reduce image noise (middle column). In model-based IR, the projection data are backward projected into the cross-sectional image space. Subsequently, image space data are forward projected to calculate artificial projection data. The artificial projection data are compared to the true projection data in order to update the cross-sectional image. In parallel, image noise is removed via a regularization step (right column).

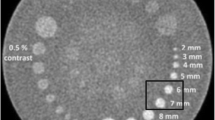

While clinical scanners operated with FBP, the CT research community invested significant effort in the development of advanced IR algorithms, with the goal of enabling low-dose CT with high diagnostic quality. These developments fall loosely into three basic approaches: (i) sinogram-based [21,22,23], (ii) image domain-based [24,25,26], and (iii) fully iterative algorithms [18, 27,28,29,30]. A parallel development was the increasing availability of cost-efficient computational tools, such as programmable graphics processing units (GPUs) for accelerated CT reconstruction [31, 32]. This combination of developments triggered the medical device industry to develop advanced reconstruction algorithms. In 2009, the first IR algorithm, called IRIS (iterative reconstruction in image space, Siemens Healthineers), received FDA clearance [33]. This was a simple method that, similar to FBP, applied only a single backward projection step to create a cross-sectional image from raw data. Image noise was iteratively reduced in image space [34]. Within 2 years, four more advanced IR algorithms received FDA clearance: ASIR (adaptive statistical iterative reconstruction, GE Healthcare), SAFIRE (sinogram-affirmed iterative reconstruction, Siemens Healthineers), iDose4 (Philips Healthcare), and Veo (GE Healthcare) [35,36,37,38]. The first three methods are so-called hybrid IR algorithms. Similar to FBP and IRIS, a single backward projection step is used. However, hybrid IR methods are more advanced since they iteratively filter the raw data to reduce artifacts, and after the backward projection, the image data are iteratively filtered to reduce image noise (Fig. 1B). Veo was the first clinical fully iterative IR algorithm and one of the most advanced algorithms to date [39]. In fully IR, raw data are backward projected into the cross-sectional image space. Subsequently, image space data are forward projected to calculate artificial raw data. 
The forward projection step is a core module of IR algorithms, since it enables physically correct modeling of the data acquisition process (including system geometry and noise models). The artificial raw data are compared to the true raw data in order to update the cross-sectional image. In parallel, image noise is removed via a regularization step (Fig. 1, right column). The process of backward and forward projection is repeated until the difference between true and artificial raw data is minimized. Fully IR is computationally more demanding than hybrid IR, which results in longer reconstruction times. Due to these long reconstruction times, the vendor decided to develop a different advanced algorithm called ASIR-V (GE Healthcare), which received FDA clearance in 2014. In the meantime, other hybrid and model-based IR algorithms were introduced by other vendors, including AIDR3D (adaptive iterative dose reduction 3D, Canon Healthcare), ADMIRE (advanced modeled iterative reconstruction, Siemens Healthineers), and IMR (iterative model reconstruction, Philips Healthcare) (Table 1). Most recently, in 2016, the model-based IR algorithm FIRST (forward projected model-based iterative reconstruction solution, Canon Healthcare) received FDA clearance. The introduction of IR for clinical CT imaging resulted in a substantial number of studies evaluating the possibilities of these methods (Fig. 2). Overall, these studies showed improved image quality and diagnostic value with IR compared to FBP. Radiation dose can be reduced with IR by 23 to 76% without compromising image quality [40]. Some studies compared the different approaches of multiple vendors, and in general, these studies found that radiation dose can be reduced further with model-based IR compared to hybrid IR and FBP [39, 41] (Fig. 3). Multiple studies evaluated the effect of IR on image quality of specific body parts. 
A relatively low-hanging fruit is the CT examination of high-contrast body regions such as the lungs. Due to the low attenuation of x-rays passing through the air in the lungs, and due to the high natural contrast between air and lung tissue, the radiation dose of chest CT examinations was already relatively low to begin with. In a systematic review of 24 studies, Den Harder et al [42] found that the average radiation dose of 2.6 (1.5–21.8) mSv for chest CT scans reconstructed with FBP could be reduced to 1.4 (0.7–7.8) mSv by applying IR. Similarly, the radiation dose in another high-contrast application, CT angiography of the heart, could be reduced substantially. With FBP, the average radiation dose of ten coronary CT angiography studies was 4.2 (3.5–5.0) mSv, which could be reduced to 2.2 (1.3–3.1) mSv by using IR, with preserved objective and subjective image quality [43]. Reducing the CT radiation dose of body regions with low contrast, such as the abdomen, is, however, more problematic [44]. Detectability of low-contrast lesions cannot always be improved with IR at lower radiation doses [45]. However, most studies found that IR does allow for radiation dose reduction of abdominal CT exams without compromising image quality [46, 47].
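The fully iterative loop described earlier in this section (forward projection of the current image, comparison with the measured raw data, backward projection of the difference, and a regularization step) can be sketched as follows. This is a generic Landweber-type update on a toy 2 × 2 image, chosen for brevity; it is not the algorithm of any vendor product named above. The system matrix, the step size, and the simple penalty that pulls pixels towards the image mean are all assumptions made for illustration.

```python
def forward_project(system, image):
    """Simulate raw data: one weighted ray sum per row of the system matrix."""
    return [sum(w * v for w, v in zip(ray, image)) for ray in system]


def back_project(system, residual):
    """Distribute each ray's residual back onto the pixels that ray crosses."""
    n_pix = len(system[0])
    return [
        sum(system[r][p] * residual[r] for r in range(len(system)))
        for p in range(n_pix)
    ]


def iterative_reconstruction(system, measured, n_iter=60, step=0.25, beta=0.0):
    """Landweber-type loop with an optional smoothing penalty (weight beta)."""
    image = [0.0] * len(system[0])
    for _ in range(n_iter):
        simulated = forward_project(system, image)              # artificial raw data
        residual = [m - s for m, s in zip(measured, simulated)]  # compare to true data
        update = back_project(system, residual)
        mean = sum(image) / len(image)
        # Data-fidelity update minus the gradient of 0.5 * beta * sum((x - mean)^2).
        image = [v + step * (u - beta * (v - mean)) for v, u in zip(image, update)]
    return image


# Toy geometry: a 2 x 2 image [a, b, c, d] measured by row, column, and diagonal sums.
system = [
    [1, 1, 0, 0], [0, 0, 1, 1],  # rows
    [1, 0, 1, 0], [0, 1, 0, 1],  # columns
    [1, 0, 0, 1], [0, 1, 1, 0],  # diagonals
]
truth = [1.0, 2.0, 3.0, 4.0]
measured = forward_project(system, truth)
reconstruction = iterative_reconstruction(system, measured)
```

Each pass costs one forward and one backward projection, which is why fully IR takes so much longer than the single backward projection of FBP or hybrid IR. In a realistic setting the forward projector would also encode scanner geometry and a noise model, and `beta` would be greater than zero.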

Fig. 3 One ex vivo human heart, scanned at 4 mGy and 1 mGy (75% dose reduction) with high-end CT scanners from four vendors. Images are reconstructed with filtered back projection (FBP), hybrid iterative reconstruction, and model-based iterative reconstruction. Numbers represent noise levels (standard deviations) in air. Images are derived from a previously published study by Willemink et al [39].

Current and future developments

While the number of clinical IR-related publications and the speed of introducing novel clinical algorithms have slowed down, the challenge of reducing radiation exposure remains a topic of high interest. So far, most dose-reduction strategies have remained in the domain of decreasing tube current or tube voltage while IR algorithms ensure an acceptable diagnostic image quality. A fundamentally different way to reduce radiation exposure is to acquire fewer projection images, e.g., to acquire only every second or fourth projection. This strategy, inspired by compressed sensing [48, 49], is widely known as sparse-sampling CT. This approach acquires a reduced number of projections, while the radiation exposure remains high for each individual projection image. The clear benefit of sparse-sampling acquisitions is an improved quality of each individual projection (e.g., increased signal-to-noise ratio) while circumventing the influence of electronic readout noise. Those benefits allow for an additional dose reduction by a factor of two or more compared to dose levels achieved with current technology. However, to reconstruct a cross-sectional image from such highly under-sampled data, a fully IR algorithm is imperative. Over the last decade, several investigators have presented IR solutions [50,51,52,53,54,55], which have the potential to be clinically introduced in the future. Translation into the clinical routine depends heavily on when sparse-sampling capable hardware, e.g., novel x-ray tubes, will become available. However, first evaluations of the clinical potential have been published [56]. One example is the possibility to quantitatively determine bone mineral density (BMD) from the combination of ultra-low-dose sparse-sampling acquisitions and a fully IR algorithm [57].
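The argument about electronic readout noise can be made concrete with a simple detector model. The sketch below assumes Poisson photon noise plus additive, signal-independent electronic noise, and compares the signal-to-noise ratio (SNR) of a single projection reading for two ways of reaching the same total dose: skipping projections (sparse sampling) versus lowering the tube current for all projections. The detector model, the function names, and all numbers are hypothetical.

```python
import math


def projection_snr(photons, electronic_noise_std):
    """SNR of one detector reading: Poisson variance plus electronic noise variance."""
    return photons / math.sqrt(photons + electronic_noise_std ** 2)


def compare_strategies(full_photons, reduction_factor, electronic_noise_std):
    """Per-projection SNR at equal total dose for both dose-reduction strategies.

    Sparse sampling keeps the photon count per projection and drops views;
    tube current reduction keeps all views and divides the photon count.
    """
    sparse = projection_snr(full_photons, electronic_noise_std)
    low_current = projection_snr(full_photons / reduction_factor, electronic_noise_std)
    return sparse, low_current


# Hypothetical example: fourfold dose reduction, 1000 photons per reading at full dose.
sparse_snr, low_current_snr = compare_strategies(1000.0, 4, 10.0)
print(f"sparse sampling: {sparse_snr:.1f}, reduced tube current: {low_current_snr:.1f}")
```

In this model the fixed electronic noise term weighs more heavily as the photon count per reading drops, so the remaining sparse-sampling projections keep a higher SNR. The price is angular under-sampling, which is why a fully IR algorithm is needed for the reconstruction.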

The integration of advanced prior knowledge into IR algorithms has been a parallel development over the last years. In contrast to conventional FBP, IR allows prior knowledge to be integrated into the reconstruction process. One idea is to utilize previous examinations as part of the image formation process. For example, during an oncological follow-up, many patients undergo sequential studies of the same anatomical region. Because anatomical information is shared between the scans, an IR algorithm can exploit it to significantly improve diagnostic image quality while reducing radiation exposure [58,59,60,61]. A different example of prior knowledge is to integrate information concerning orthopedic implants into the reconstruction process. Metal artifacts, which can introduce extensive noise and streaks, are caused by implants consisting of materials with a high atomic number (Z). However, if the shape and material composition of the implant are known a priori (e.g., from a computer-aided design (CAD) model or a spectral analysis), integrating this information into the image formation has been shown to reduce artifacts and improve diagnostic image quality [62, 63].

Another technology that has found its way into the clinical environment is dual energy CT (DECT). DECT enables material decomposition, which is the quantification of an object's composition by exploiting measurements of the material- and energy-dependent x-ray attenuation of various materials using a low- and a high-energy spectrum (Fig. 4) [64,65,66]. This technology has the potential to improve contrast and reduce artifacts compared to conventional CT. While those advances are becoming clinically available, the issue of radiation exposure remains, especially for this CT modality. The material decomposition step can significantly amplify image noise when data are acquired with a low radiation exposure. Further, the direct implementation of model-based or fully IR requires several modifications to account for the statistical dependencies between the material-decomposed data. These dependencies include anti-correlated noise, which plays a significant role in the overall image quality of material images. IR algorithms make it possible to model anti-correlated noise, resulting in significantly improved diagnostic image quality [67,68,69]. Over the last years, this class of DECT-specific IR has been introduced into the clinical routine. The results can be observed when considering the contrast-to-noise ratio in virtual monoenergetic images (VMI). In theory, a strong increase in noise should be observed towards low VMI (keV) settings and a moderate increase at high VMI settings [66, 70]. In DECT scanners with the latest IR, one can observe almost no increase in noise for low or high VMI settings [71, 72]. Different DECT acquisition approaches are available, including two x-ray tubes with different voltages, one x-ray tube switching between voltages, one x-ray tube with a partly filtered beam, and detector-based spectral separation. Dedicated IR algorithms, accounting for differences in CT design, are necessary for each of these DECT schemes. 
Further improvements for DECT-specific IR can be expected, for example with the integration of learning algorithms, such as dictionaries [73,74,75].
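To illustrate why material decomposition produces anti-correlated noise, the following sketch performs a projection-domain two-material decomposition by inverting a 2 × 2 linear system and propagates independent measurement noise through it. It assumes an idealized, monoenergetic attenuation model for each spectrum; the attenuation coefficients, material labels, and function names are hypothetical illustration values, not calibrated data.

```python
def decompose(p_low, p_high, mu):
    """Solve p = M a for the two basis-material line integrals a = (a1, a2).

    mu = ((m1_low, m2_low), (m1_high, m2_high)) holds the effective attenuation
    coefficients of material 1 and 2 for the low- and high-energy spectrum.
    """
    (m1_low, m2_low), (m1_high, m2_high) = mu
    det = m1_low * m2_high - m2_low * m1_high
    a1 = (m2_high * p_low - m2_low * p_high) / det
    a2 = (-m1_high * p_low + m1_low * p_high) / det
    return a1, a2


def material_covariance(sigma_low, sigma_high, mu):
    """Covariance of (a1, a2) for independent noise in p_low and p_high.

    First-order error propagation through the matrix inverse used in decompose().
    """
    (m1_low, m2_low), (m1_high, m2_high) = mu
    det = m1_low * m2_high - m2_low * m1_high
    return (
        m2_high * (-m1_high) * sigma_low ** 2
        + (-m2_low) * m1_low * sigma_high ** 2
    ) / det ** 2


# Hypothetical coefficients (1/cm): material 1 is soft-tissue-like, material 2 is denser.
mu = ((0.25, 0.60), (0.20, 0.30))
a1_true, a2_true = 10.0, 0.5
p_low = 0.25 * a1_true + 0.60 * a2_true
p_high = 0.20 * a1_true + 0.30 * a2_true

a1, a2 = decompose(p_low, p_high, mu)
covariance = material_covariance(0.01, 0.01, mu)
```

For positive attenuation coefficients the covariance is always negative: a noise fluctuation that raises one material estimate lowers the other. DECT-specific IR algorithms can exploit this known structure instead of treating the two material images as independent.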

Fig. 4 Reconstructions in dual-energy and photon-counting computed tomography. Differentiation of energy levels of x-ray photons allows for the reconstruction of energy-selective images. Material-selective images are reconstructed based on interaction of materials at varying energy levels. Finally, a combined image with different colors per material is reconstructed.

An upcoming spectral CT technology, which is gaining clinical interest, is photon-counting CT (PCCT). This technology is capable of counting individual x-ray photons while rejecting noise, rather than simply integrating the electrical signal in each pixel. Also, these detectors can perform “color” x-ray detection; they can discriminate the energy of individual photons and divide them into several pre-defined but selectable energy bins, thereby providing a spectral analysis of the transmitted x-ray beam [76,77,78,79]. First clinical evaluations showed promising performance with respect to quantitative imaging, material-specific (K-edge) imaging, high-resolution imaging, and a new level of diagnostic image quality in combination with a significant reduction in radiation exposure [80,81,82,83,84,85,86]. While first results demonstrate the potential benefits of this technology, challenges concerning hardware and software remain. IR plays a central role in overcoming those challenges. However, IR algorithms that are used in conventional CT are not optimal for PCCT, for reasons similar to the statistical dependencies in DECT. For example, PCCT data are more complex than conventional CT data since additional multi-energetic information is present, and additional detector elements can be employed to achieve high spatial resolution images, depending on detector configuration and hardware and software settings. These variations in image acquisition as well as differences in the noise model of PCCT data need to be integrated into the model of the forward projector to fully utilize the power of IR algorithms. One of the reconstruction challenges in PCCT is that the steps of material decomposition and image reconstruction are performed separately. This separation implies a loss of information, for which the second step cannot compensate. To adapt IR for this higher level of complexity, image reconstruction and material decomposition can be performed jointly [87]. 
This can be accomplished by a forward model, which directly connects the (expected) spectral projection measurements and the material-selective images [88,89,90,91,92]. First results illustrated the possibility of overcoming challenges related to PCCT, but current IR algorithms are still too computationally intensive, and therefore reconstruction times are too long for clinical use. Further development towards IR solutions with clinically feasible reconstruction times is imperative.
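A forward model of the kind mentioned above maps material line integrals directly to the expected photon counts in each energy bin. The sketch below shows one common textbook form of such a model for a single ray, assuming an ideal detector: each energy bin has its own effective spectrum, and attenuation follows the Beer-Lambert law summed over basis materials. Detector effects such as pulse pile-up and charge sharing are ignored, and the spectra, coefficients, and function name are hypothetical.

```python
import math


def expected_bin_counts(bin_spectra, attenuation, thicknesses):
    """Expected photon counts per energy bin for one ray.

    bin_spectra[b][e] : photons emitted at energy index e that bin b would register
    attenuation[m][e] : attenuation coefficient of basis material m at energy index e
    thicknesses[m]    : line integral (path length) of material m along the ray
    """
    counts = []
    for spectrum in bin_spectra:
        total = 0.0
        for e, photons in enumerate(spectrum):
            line_integral = sum(mu[e] * a for mu, a in zip(attenuation, thicknesses))
            total += photons * math.exp(-line_integral)  # Beer-Lambert attenuation
        counts.append(total)
    return counts


# Hypothetical example: three energy samples, two bins, two basis materials.
bin_spectra = [[100.0, 50.0, 0.0], [0.0, 50.0, 100.0]]
attenuation = [[0.3, 0.2, 0.1], [0.9, 0.4, 0.2]]

open_beam = expected_bin_counts(bin_spectra, attenuation, [0.0, 0.0])
attenuated = expected_bin_counts(bin_spectra, attenuation, [1.0, 0.5])
```

In a joint reconstruction, the `thicknesses` would themselves be forward projections of the material-selective images, and the algorithm would adjust those images until the expected counts match the measured counts under a Poisson noise model.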

Besides spectral CT, other fundamental CT developments are currently being investigated, namely phase-contrast and dark-field CT. Image contrast in current CT imaging is based on a particle model describing the physical interactions of photoelectric absorption and Compton scattering. Phase-contrast and dark-field CT are based on an electromagnetic wave model, and thus image contrast represents wave-optical interactions such as phase shift or small-angle scattering. These novel imaging methods make use of the wave-optical characteristics of x-rays, for example by applying a grating interferometer to x-ray imaging [93,94,95,96,97,98]. Compared to conventional CT, additional and complementary information becomes available. Phase-contrast CT offers significantly higher soft-tissue contrast [99,100,101], and dark-field CT offers structural information below the spatial resolution of the imaging system [102,103,104]. When considering clinical translation, clinical standards, for example with respect to radiation dose and acquisition time, need to be maintained. One path to ensuring those clinical standards is to reconstruct raw data with tailored IR algorithms. Initial investigations have illustrated the high potential of IR algorithms to enhance image quality in phase-contrast as well as dark-field CT [105,106,107,108]. One challenge was that a CT with continuous rotation seemed not to be feasible; however, the latest developments in fully IR algorithms have made a continuously rotating gantry possible [16, 109, 110]. This is a significant step towards clinical translation of phase-contrast and dark-field CT.

Another emerging technique is artificial intelligence (AI). Besides classification of images, detection of objects, and playing games [111, 112], AI has gained substantial interest for its potential to improve the reconstruction of CT images [17]. AI, and more specifically machine learning, is a group of methods that is able to produce a mapping from raw inputs, such as intensities of individual pixels, to specific outputs, such as the classification of a disease [113]. With conventional machine learning, the input is based on hand-engineered features, while deep learning is able to learn these features itself directly from data. Multiple research groups are working on applying AI to improve the reconstruction of CT images. One application is image-space-based reconstruction, in which convolutional neural networks are trained with low-dose CT images to reconstruct routine-dose CT images [17, 114, 115]. Another approach is to optimize IR algorithms [116]. Generally, IR algorithms are based on manually designed prior functions resulting in low-noise images without loss of structures [117]. Deep learning methods allow for implementing more complex functions, which have the potential to enable lower-dose CT [117,118,119,120] and sparse-sampling CT [121]. These AI techniques have the potential to reduce CT radiation doses while speeding up reconstruction times. Also, deep learning can be used to optimize image quality without reducing the radiation dose, e.g., by more advanced DECT monochromatic image reconstruction [122] and metal artifact reduction [123, 124]. These methods are not yet ready for clinical implementation; however, it is expected that AI will play a major role in CT image reconstruction and restoration in the near future. We expect that AI will fit into the current clinical CT imaging workflow by enhancing current reconstruction methods, for example by significantly accelerating the reconstruction process, since the application of a trained network can be nearly instantaneous.

In conclusion, IR is a powerful technique that has arrived in clinical practice, and even more exciting advances can be expected from IR in the near future.

Abbreviations

- ADMIRE: Advanced modeled iterative reconstruction
- AI: Artificial intelligence
- AIDR3D: Adaptive iterative dose reduction 3D
- ART: Algebraic reconstruction technique
- ASIR: Adaptive statistical iterative reconstruction
- BMD: Bone mineral density
- CAD: Computer-aided design
- CT: Computed tomography
- DECT: Dual energy CT
- FIRST: Forward projected model-based iterative reconstruction solution
- GPU: Graphics processing unit
- IMR: Iterative model reconstruction
- IR: Iterative reconstruction
- IRIS: Iterative reconstruction in image space
- PCCT: Photon-counting CT
- SAFIRE: Sinogram-affirmed iterative reconstruction
- VMI: Virtual monoenergetic images

References

Ambrose J, Hounsfield G (1973) Computerized transverse axial tomography. Br J Radiol 46:148–149

Hounsfield GN (1973) Computerized transverse axial scanning (tomography). 1. Description of system. Br J Radiol 46:1016–1022

OECD (2017) Health at a glance 2017: OECD indicators. OECD Publishing, Paris

de Graaf FR, Schuijf JD, van Velzen JE et al (2010) Diagnostic accuracy of 320-row multidetector computed tomography coronary angiography in the non-invasive evaluation of significant coronary artery disease. Eur Heart J 31:1908–1915

Hata A, Yanagawa M, Honda O et al (2018) Effect of matrix size on the image quality of ultra-high-resolution CT of the lung: comparison of 512 x 512, 1024 x 1024, and 2048 x 2048. Acad Radiol. https://doi.org/10.1016/j.acra.2017.11.017

Takagi H, Tanaka R, Nagata K et al (2018) Diagnostic performance of coronary CT angiography with ultra-high-resolution CT: comparison with invasive coronary angiography. Eur J Radiol 101:30–37

ESR (2018) ESR EuroSafe imaging Campaign. Available via http://eurosafeimaging.org. Accessed 3 Oct 2018

Macias CG, Sahouria JJ (2011) The appropriate use of CT: quality improvement and clinical decision-making in pediatric emergency medicine. Pediatr Radiol 41(Suppl 2):498–504

Kalender WA, Wolf H, Suess C (1999) Dose reduction in CT by anatomically adapted tube current modulation. II. Phantom measurements. Med Phys 26:2248–2253

Vollmar SV, Kalender WA (2008) Reduction of dose to the female breast in thoracic CT: a comparison of standard-protocol, bismuth-shielded, partial and tube-current-modulated CT examinations. Eur Radiol 18:1674–1682

Weis M, Henzler T, Nance JW Jr et al (2017) Radiation dose comparison between 70 kVp and 100 kVp with spectral beam shaping for non-contrast-enhanced pediatric chest computed tomography: a prospective randomized controlled study. Invest Radiol 52:155–162

Fleischmann D, Boas FE (2011) Computed tomography--old ideas and new technology. Eur Radiol 21:510–517

Abbas S, Lee T, Shin S, Lee R, Cho S (2013) Effects of sparse sampling schemes on image quality in low-dose CT. Med Phys 40:111915

Willemink MJ, Persson M, Pourmorteza A, Pelc NJ, Fleischmann D (2018) Photon-counting CT: technical principles and clinical prospects. Radiology:172656. https://doi.org/10.1148/radiol.2018172656

Gromann LB, De Marco F, Willer K et al (2017) In-vivo x-ray dark-field chest radiography of a pig. Sci Rep 7:4807

Teuffenbach MV, Koehler T, Fehringer A et al (2017) Grating-based phase-contrast and dark-field computed tomography: a single-shot method. Sci Rep 7:7476

Wolterink JM, Leiner T, Viergever MA, Isgum I (2017) Generative adversarial networks for noise reduction in low-dose CT. IEEE Trans Med Imaging 36:2536–2545

Gordon R, Bender R, Herman GT (1970) Algebraic reconstruction techniques (ART) for three-dimensional electron microscopy and x-ray photography. J Theor Biol 29:471–481

Feldkamp LA, Davis LC, Kress JW (1984) Practical cone-beam algorithm. J Opt Soc Am A 1:612–619

Ng M, Fleming T, Robinson M et al (2014) Global, regional, and national prevalence of overweight and obesity in children and adults during 1980–2013: a systematic analysis for the Global Burden of Disease Study 2013. Lancet 384:766–781

La Rivière PJ, Bian J, Vargas PA (2006) Penalized-likelihood sinogram restoration for computed tomography. IEEE Trans Med Imaging 25:1022–1036

Lu H, Hsiao I, Li X, Liang Z (2001) Noise properties of low-dose CT projections and noise treatment by scale transformations. 2001 IEEE Nuclear Science Symposium Conference Record 3:1662–1666

Xie Q, Zeng D, Zhao Q et al (2017) Robust low-dose CT sinogram preprocessing via exploiting noise-generating mechanism. IEEE Trans Med Imaging 36:2487–2498

Kachelriess M, Watzke O, Kalender WA (2001) Generalized multi-dimensional adaptive filtering for conventional and spiral single-slice, multi-slice, and cone-beam CT. Med Phys 28:475–490

Kalra MK, Wittram C, Maher MM et al (2003) Can noise reduction filters improve low-radiation-dose chest CT images? Pilot study. Radiology 228:257–264

Zhang Y, Rong J, Lu H, Xing Y, Meng J (2017) Low-dose lung CT image restoration using adaptive prior features from full-dose training database. IEEE Trans Med Imaging 36:2510–2523

Andersen AH, Kak AC (1984) Simultaneous algebraic reconstruction technique (SART): a superior implementation of the art algorithm. Ultrason Imaging 6:81–94

Erdogan H, Fessler JA (1999) Ordered subsets algorithms for transmission tomography. Phys Med Biol 44:2835–2851

Lange K, Carson R (1984) EM reconstruction algorithms for emission and transmission tomography. J Comput Assist Tomogr 8:306–316

Thibault JB, Sauer KD, Bouman CA, Hsieh J (2007) A three-dimensional statistical approach to improved image quality for multislice helical CT. Med Phys 34:4526–4544

Noël PB, Walczak AM, Xu J, Corso JJ, Hoffmann KR, Schafer S (2010) GPU-based cone beam computed tomography. Comput Methods Programs Biomed 98:271–277

Xu F, Mueller K (2007) Real-time 3D computed tomographic reconstruction using commodity graphics hardware. Phys Med Biol 52:3405–3419

Willemink MJ, de Jong PA, Leiner T et al (2013) Iterative reconstruction techniques for computed tomography part 1: technical principles. Eur Radiol 23:1623–1631

Nelson RC, Feuerlein S, Boll DT (2011) New iterative reconstruction techniques for cardiovascular computed tomography: how do they work, and what are the advantages and disadvantages? J Cardiovasc Comput Tomogr 5:286–292

Noël PB, Fingerle AA, Renger B, Munzel D, Rummeny EJ, Dobritz M (2011) Initial performance characterization of a clinical noise-suppressing reconstruction algorithm for MDCT. AJR Am J Roentgenol 197:1404–1409

Scheffel H, Stolzmann P, Schlett CL et al (2012) Coronary artery plaques: cardiac CT with model-based and adaptive-statistical iterative reconstruction technique. Eur J Radiol 81:e363–e369

Singh S, Kalra MK, Gilman MD et al (2011) Adaptive statistical iterative reconstruction technique for radiation dose reduction in chest CT: a pilot study. Radiology 259:565–573

Winklehner A, Karlo C, Puippe G et al (2011) Raw data-based iterative reconstruction in body CTA: evaluation of radiation dose saving potential. Eur Radiol 21:2521–2526

Willemink MJ, Takx RA, de Jong PA et al (2014) Computed tomography radiation dose reduction: effect of different iterative reconstruction algorithms on image quality. J Comput Assist Tomogr 38:815–823

Willemink MJ, Leiner T, de Jong PA et al (2013) Iterative reconstruction techniques for computed tomography part 2: initial results in dose reduction and image quality. Eur Radiol 23:1632–1642

Sauter A, Koehler T, Fingerle AA et al (2016) Ultra low dose CT pulmonary angiography with iterative reconstruction. PLoS One 11:e0162716

den Harder AM, Willemink MJ, de Ruiter QM et al (2015) Achievable dose reduction using iterative reconstruction for chest computed tomography: a systematic review. Eur J Radiol 84:2307–2313

den Harder AM, Willemink MJ, de Ruiter QM et al (2016) Dose reduction with iterative reconstruction for coronary CT angiography: a systematic review and meta-analysis. Br J Radiol 89:20150068

Park JH, Jeon JJ, Lee SS et al (2018) Can we perform CT of the appendix with less than 1 mSv? A de-escalating dose-simulation study. Eur Radiol 28:1826–1834

Schindera ST, Odedra D, Raza SA et al (2013) Iterative reconstruction algorithm for CT: can radiation dose be decreased while low-contrast detectability is preserved? Radiology 269:511–518

Ellmann S, Kammerer F, Allmendinger T et al (2018) Advanced modeled iterative reconstruction (ADMIRE) facilitates radiation dose reduction in abdominal CT. Acad Radiol. https://doi.org/10.1016/j.acra.2018.01.014

Noël PB, Engels S, Köhler T et al (2018) Evaluation of an iterative model-based CT reconstruction algorithm by intra-patient comparison of standard and ultra-low-dose examinations. Acta Radiol. https://doi.org/10.1177/0284185117752551

Candes EJ, Romberg J, Tao T (2006) Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans Inf Theory 52:489–509

Donoho DL (2006) Compressed sensing. IEEE Trans Inf Theory 52:1289–1306

Han XA, Bian JG, Eaker DR et al (2011) Algorithm-enabled low-dose micro-CT imaging. IEEE Trans Med Imaging 30:606–620

Khaled AS, Beck TJ (2013) Successive binary algebraic reconstruction technique: an algorithm for reconstruction from limited angle and limited number of projections decomposed into individual components. J Xray Sci Technol 21:9–24

Liu Y, Ma J, Fan Y, Liang Z (2012) Adaptive-weighted total variation minimization for sparse data toward low-dose x-ray computed tomography image reconstruction. Phys Med Biol 57:7923–7956

Sidky EY, Kao CM, Pan XH (2006) Accurate image reconstruction from few-views and limited-angle data in divergent-beam CT. J Xray Sci Technol 14:119–139

Sidky EY, Pan X (2008) Image reconstruction in circular cone-beam computed tomography by constrained, total-variation minimization. Phys Med Biol 53:4777–4807

Tang J, Nett BE, Chen GH (2009) Performance comparison between total variation (TV)-based compressed sensing and statistical iterative reconstruction algorithms. Phys Med Biol 54:5781–5804

Kopp FK, Bippus R, Sauter AP et al (2018) Diagnostic value of sparse sampling computed tomography for radiation dose reduction: initial results. Proc. SPIE, Medical Imaging 2018: Physics of Medical Imaging 10573:40

Mei K, Kopp FK, Bippus R et al (2017) Is multidetector CT-based bone mineral density and quantitative bone microstructure assessment at the spine still feasible using ultra-low tube current and sparse sampling? Eur Radiol 27:5261–5271

Chen GH, Tang J, Leng S (2008) Prior image constrained compressed sensing (PICCS): a method to accurately reconstruct dynamic CT images from highly undersampled projection data sets. Med Phys 35:660–663

Dang H, Wang AS, Sussman MS, Siewerdsen JH, Stayman JW (2014) dPIRPLE: a joint estimation framework for deformable registration and penalized-likelihood CT image reconstruction using prior images. Phys Med Biol 59:4799–4826

Pourmorteza A, Dang H, Siewerdsen JH, Stayman JW (2016) Reconstruction of difference in sequential CT studies using penalized likelihood estimation. Phys Med Biol 61:1986–2002

Tang J, Hsieh J, Chen GH (2010) Temporal resolution improvement in cardiac CT using PICCS (TRI-PICCS): performance studies. Med Phys 37:4377–4388

Nasirudin RA, Mei K, Penchev P et al (2015) Reduction of metal artifact in single photon-counting computed tomography by spectral-driven iterative reconstruction technique. PLoS One 10:e0124831

Stayman JW, Otake Y, Prince JL, Khanna AJ, Siewerdsen JH (2012) Model-based tomographic reconstruction of objects containing known components. IEEE Trans Med Imaging 31:1837–1848

Alvarez RE, Macovski A (1976) Energy-selective reconstructions in x-ray computerized tomography. Phys Med Biol 21:733–744

Johnson TR, Krauss B, Sedlmair M et al (2007) Material differentiation by dual energy CT: initial experience. Eur Radiol 17:1510–1517

Kalender WA, Perman WH, Vetter JR, Klotz E (1986) Evaluation of a prototype dual-energy computed tomographic apparatus. I. Phantom studies. Med Phys 13:334–339

Kalender WA, Klotz E, Kostaridou L (1988) An algorithm for noise suppression in dual energy CT material density images. IEEE Trans Med Imaging 7:218–224

McCollough CH, Van Lysel MS, Peppler WW, Mistretta CA (1989) A correlated noise reduction algorithm for dual-energy digital subtraction angiography. Med Phys 16:873–880

Richard S, Siewerdsen JH (2008) Cascaded systems analysis of noise reduction algorithms in dual-energy imaging. Med Phys 35:586–601

Yu L, Leng S, McCollough CH (2012) Dual-energy CT-based monochromatic imaging. AJR Am J Roentgenol 199:S9–S15

Ehn S, Sellerer T, Muenzel D et al (2018) Assessment of quantification accuracy and image quality of a full-body dual-layer spectral CT system. J Appl Clin Med Phys 19:204–217

Sellerer T, Noël PB, Patino M et al (2018) Dual-energy CT: a phantom comparison of different platforms for abdominal imaging. Eur Radiol. https://doi.org/10.1007/s00330-017-5238-5

Li L, Chen Z, Jiao P (2012) Dual-energy CT reconstruction based on dictionary learning and total variation constraint. 2012 IEEE Nuclear Science Symposium and Medical Imaging Conference Record (NSS/MIC), 2358–2361

Mechlem K, Allner S, Ehn S et al (2017) A post-processing algorithm for spectral CT material selective images using learned dictionaries. Biomed Phys Eng Express 3:025009

Zhao B, Ding HJ, Lu Y, Wang G, Zhao J, Molloi S (2012) Dual-dictionary learning-based iterative image reconstruction for spectral computed tomography application. Phys Med Biol 57:8217–8229

Iwanczyk JS, Nygård E, Meirav O et al (2009) Photon counting energy dispersive detector arrays for x-ray imaging. IEEE Trans Nucl Sci 56:535–542

Schlomka JP, Roessl E, Dorscheid R et al (2008) Experimental feasibility of multi-energy photon-counting K-edge imaging in pre-clinical computed tomography. Phys Med Biol 53:4031–4047

Steadman R, Herrmann C, Mülhens O et al (2010) ChromAIX: a high-rate energy-resolving photon-counting ASIC for spectral computed tomography. Proc SPIE. https://doi.org/10.1117/12.844222

Taguchi K, Iwanczyk JS (2013) Vision 20/20: single photon counting x-ray detectors in medical imaging. Med Phys 40:100901

Cormode DP, Si-Mohamed S, Bar-Ness D et al (2017) Multicolor spectral photon-counting computed tomography: in vivo dual contrast imaging with a high count rate scanner. Sci Rep 7:4784

Gutjahr R, Halaweish AF, Yu Z et al (2016) Human imaging with photon counting-based computed tomography at clinical dose levels: contrast-to-noise ratio and cadaver studies. Invest Radiol 51:421–429

Muenzel D, Bar-Ness D, Roessl E et al (2017) Spectral photon-counting CT: initial experience with dual-contrast agent K-edge colonography. Radiology 283:723–728

Pourmorteza A, Symons R, Sandfort V et al (2016) Abdominal imaging with contrast-enhanced photon-counting CT: first human experience. Radiology 279:239–245

Symons R, Cork TE, Sahbaee P et al (2017) Low-dose lung cancer screening with photon-counting CT: a feasibility study. Phys Med Biol 62:202–213

Symons R, Reich DS, Bagheri M et al (2018) Photon-counting computed tomography for vascular imaging of the head and neck: first in vivo human results. Invest Radiol 53:135–142

Dangelmaier J, Bar-Ness D, Daerr H et al (2018) Experimental feasibility of spectral photon-counting computed tomography with two contrast agents for the detection of endoleaks following endovascular aortic repair. Eur Radiol. https://doi.org/10.1007/s00330-017-5252-7

Mory C, Sixou B, Si-Mohamed S, Boussel L, Rit S (2018) Comparison of five one-step reconstruction algorithms for spectral CT. HAL archives ouvertes, Lyon. Available via https://hal.archives-ouvertes.fr/hal-01760845v2. Accessed 3 Oct 2018

Cai C, Rodet T, Legoupil S, Mohammad-Djafari A (2013) A full-spectral Bayesian reconstruction approach based on the material decomposition model applied in dual-energy computed tomography. Med Phys 40:111916

Foygel Barber R, Sidky EY, Gilat Schmidt T, Pan X (2016) An algorithm for constrained one-step inversion of spectral CT data. Phys Med Biol 61:3784–3818

Long Y, Fessler JA (2014) Multi-material decomposition using statistical image reconstruction for spectral CT. IEEE Trans Med Imaging 33:1614–1626

Mechlem K, Ehn S, Sellerer T et al (2018) Joint statistical iterative material image reconstruction for spectral computed tomography using a semi-empirical forward model. IEEE Trans Med Imaging 37:68–80

Sawatzky A, Xu Q, Schirra CO, Anastasio MA (2014) Proximal ADMM for multi-channel image reconstruction in spectral x-ray CT. IEEE Trans Med Imaging 33:1657–1668

Momose A (2005) Recent advances in x-ray phase imaging. Jpn J Appl Phys 44:6355–6367

Momose A, Kawamoto S, Koyama I, Hamaishi Y, Takai K, Suzuki Y (2003) Demonstration of x-ray Talbot interferometry. Jpn J Appl Phys 42:L866–L868

Momose A, Yashiro W, Takeda Y, Suzuki Y, Hattori T (2006) Phase tomography by x-ray Talbot interferometry for biological imaging. Jpn J Appl Phys 45:5254–5262

Pfeiffer F, Kottler C, Bunk O, David C (2007) Hard x-ray phase tomography with low-brilliance sources. Phys Rev Lett 98:108105

Pfeiffer F, Weitkamp T, Bunk O, David C (2006) Phase retrieval and differential phase-contrast imaging with low-brilliance x-ray sources. Nat Phys 2:258–261

Weitkamp T, Diaz A, David C et al (2005) X-ray phase imaging with a grating interferometer. Opt Express 13:6296–6304

Donath T, Pfeiffer F, Bunk O et al (2010) Toward clinical x-ray phase-contrast CT: demonstration of enhanced soft-tissue contrast in human specimen. Invest Radiol 45:445–452

Pfeiffer F, Bunk O, David C et al (2007) High-resolution brain tumor visualization using three-dimensional x-ray phase contrast tomography. Phys Med Biol 52:6923–6930

Stampanoni M, Wang Z, Thüring T et al (2011) The first analysis and clinical evaluation of native breast tissue using differential phase-contrast mammography. Invest Radiol 46:801–806

Bech M, Bunk O, Donath T, Feidenhans'l R, David C, Pfeiffer F (2010) Quantitative x-ray dark-field computed tomography. Phys Med Biol 55:5529–5539

Bech M, Tapfer A, Velroyen A et al (2013) In-vivo dark-field and phase-contrast x-ray imaging. Sci Rep 3:3209

Velroyen A, Yaroshenko A, Hahn D et al (2015) Grating-based x-ray dark-field computed tomography of living mice. EBioMedicine 2:1500–1506

Burger K, Koehler T, Chabior M et al (2014) Regularized iterative integration combined with non-linear diffusion filtering for phase-contrast x-ray computed tomography. Opt Express 22:32107–32118

Hahn D, Thibault P, Fehringer A et al (2015) Statistical iterative reconstruction algorithm for x-ray phase-contrast CT. Sci Rep 5:10452

Köhler T, Brendel B, Roessl E (2011) Iterative reconstruction for differential phase contrast imaging using spherically symmetric basis functions. Med Phys 38:4542–4545

Langer M, Cloetens P, Peyrin F (2010) Regularization of phase retrieval with phase-attenuation duality prior for 3-D holotomography. IEEE Trans Image Process 19:2428–2436

Brendel B, von Teuffenbach M, Noël PB, Pfeiffer F, Koehler T (2016) Penalized maximum likelihood reconstruction for x-ray differential phase-contrast tomography. Med Phys 43:188

Ritter A, Bayer F, Durst J et al (2013) Simultaneous maximum-likelihood reconstruction for x-ray grating based phase-contrast tomography avoiding intermediate phase retrieval. arXiv:1307.7912. Available via: https://arxiv.org/abs/1307.7912. Accessed 3 Oct 2018

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521:436–444

Silver D, Huang A, Maddison CJ et al (2016) Mastering the game of Go with deep neural networks and tree search. Nature 529:484–489

Chartrand G, Cheng PM, Vorontsov E et al (2017) Deep learning: a primer for radiologists. Radiographics 37:2113–2131

Chen H, Zhang Y, Kalra MK et al (2017) Low-dose CT with a residual encoder-decoder convolutional neural network. IEEE Trans Med Imaging 36:2524–2535

Chen H, Zhang Y, Zhang W et al (2017) Low-dose CT via convolutional neural network. Biomed Opt Express 8:679–694

Kopp FK, Catalano M, Pfeiffer D, Rummeny EJ, Noël PB (2018) Evaluation of a machine learning based model observer for x-ray CT. Proc SPIE. https://doi.org/10.1117/12.2293582

Wu D, Kim K, El Fakhri G, Li Q (2017) Iterative low-dose CT reconstruction with priors trained by artificial neural network. IEEE Trans Med Imaging 36:2479–2486

Chen Y, Liu J, Xie L et al (2017) Discriminative prior - prior image constrained compressed sensing reconstruction for low-dose CT imaging. Sci Rep 7:13868

Kang E, Min J, Ye JC (2017) A deep convolutional neural network using directional wavelets for low-dose x-ray CT reconstruction. Med Phys 44:e360–e375

Yi X, Babyn P (2018) Sharpness-aware low-dose CT denoising using conditional generative adversarial network. J Digit Imaging. https://doi.org/10.1007/s10278-018-0056-0

Lee H, Lee J, Kim H, Cho B, Cho S (2018) Deep-neural-network based sinogram synthesis for sparse-view CT image reconstruction. arXiv:1803.00694. Available via: https://arxiv.org/abs/1803.00694. Accessed 3 Oct 2018

Cong W, Wang G (2017) Monochromatic CT image reconstruction from current-integrating data via deep learning. arXiv:1710.03784. Available via: https://arxiv.org/abs/1710.03784. Accessed 3 Oct 2018

Gjesteby L, Yang Q, Xi Y et al (2017) Reducing metal streak artifacts in CT images via deep learning: pilot results. Fully3D Proc. https://doi.org/10.12059/Fully3D.2017-11-3202009

Zhang Y, Yu H (2018) Convolutional neural network based metal artifact reduction in x-ray computed tomography. IEEE Trans Med Imaging. https://doi.org/10.1109/TMI.2018.2823083

Funding

Martin Willemink is financially supported by the American Heart Association (18POST34030192) and a Stanford-Philips Fellowship Training Award. Peter B. Noël acknowledges support through the German Department of Education and Research (BMBF) under grant IMEDO (13GW0072C) and the German Research Foundation (DFG) within the Research Training Group GRK 2274.

Ethics declarations

Guarantor

The scientific guarantor of this publication is Peter B. Noël, PhD.

Conflict of interest

The authors of this manuscript declare no relationships with any companies whose products or services may be related to the subject matter of the article.

Statistics and biometry

One of the authors has significant statistical expertise; however, no complex statistical methods were necessary for this paper.

Informed consent

Written informed consent was not required for this study because this concerns a review paper.

Ethical approval

Institutional Review Board approval was not required because this concerns a review paper.

Methodology

• Review paper

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Willemink, M.J., Noël, P.B. The evolution of image reconstruction for CT—from filtered back projection to artificial intelligence. Eur Radiol 29, 2185–2195 (2019). https://doi.org/10.1007/s00330-018-5810-7

DOI: https://doi.org/10.1007/s00330-018-5810-7