Abstract

A suite of vast stellar surveys mapping the Milky Way, culminating in the Gaia mission, is revolutionizing the empirical information about the distribution and properties of stars in the Galactic stellar disk. We review and lay out what analysis and modeling machinery needs to be in place to test mechanism of disk galaxy evolution and to stringently constrain the Galactic gravitational potential, using such Galactic star-by-star measurements. We stress the crucial role of stellar survey selection functions in any such modeling; and we advocate the utility of viewing the Galactic stellar disk as made up of ‘mono-abundance populations’ (MAPs), both for dynamical modeling and for constraining the Milky Way’s evolutionary processes. We review recent work on the spatial and kinematical distribution of MAPs, and point out how further study of MAPs in the Gaia era should lead to a decisively clearer picture of the Milky Way’s dark-matter distribution and formation history.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the world of galaxies, the Milky Way is in many ways as typical as it gets: half of the Universe’s present-day stars live in galaxies that match our Milky Way in stellar mass, size, chemical abundance, etc. within factors of a few (e.g., Mo et al. 2010). But for us, it is the only galaxy whose stellar distribution we can see in its full dimensionality: star-by-star we can obtain 3D positions and 3D velocities (v los,μ ℓ ,μ b ), coupled with the stars’ photospheric element abundances and constraints on their ages. We know that in principle this enormous wealth of information about the stellar body of our Galaxy holds a key to recognizing and understanding some of the mechanisms that create and evolve disk galaxies. And it holds a key to mapping the three-dimensional gravitational potential and by implication the dark-matter distribution in the Milky Way. A sequence of ongoing photometry and spectroscopy surveys has recently hundred-folded the number of stars with good distances, radial and transverse velocities, and abundance estimates; this only forebodes the data wealth expected from ESA’s flagship science Mission Gaia to be launched next year.

Yet, practical approaches to extract the enormous astrophysical information content of these data remain sorely underdeveloped. It is not even qualitatively clear at this point what will limit the accuracy of any galaxy-formation or dark-matter inferences: the sample sizes, the fraction of the Milky Way’s stellar body covered (cf. Fig. 1), the precision of the x–v phase-space measurements, the quality and detail of the abundance information, or the (lack of) stellar age estimates. Are dynamical analyses limited by the precision with which sample selection functions can be specified, or by the fact that dust obscuration and crowding will leave the majority (by stellar-mass-weighted volume) of Milky Way stars unobserved even if all currently planned experiments worked our perfectly? Or are dynamical inferences limited by the fact that the symmetry and equilibrium assumptions, which underlie most dynamical modeling, are only approximations?

The ongoing data deluge and the continuing recognition how much our Milky Way, despite being ‘just one galaxy’, may serve as a Rosetta Stone for galaxy studies, have triggered a great deal of preparatory work on how to analyze and model these data. Many of the scientific and practical issues have been laid out, e.g., in Turon et al. (2008) or Binney (2011). Yet, it appears (at least to the authors) that the existing survey interpretation and modeling approaches are still woefully inadequate to exploit the full information content of even the existing Galactic stellar surveys, let alone the expected information content of Gaia data.

And while the science theme of ‘understanding the current structure of the Galaxy and reconstructing its formation history’ remains of central interest to astrophysics, the specific questions that should be asked of the data have evolved since the original Gaia mission science case was laid out, through advances in our understanding of galaxy formation in the cosmological framework and recent work on secular galaxy evolution. The aim of simply ‘reconstructing’ the formation history of the Galaxy in light of even idealized Gaia data, now seems naive.

The review here will restrict itself to the Galactic stars (as opposed to the Galactic interstellar medium), and in particular to the Milky Way’s dominant stellar component, its Disk, which contains about three quarters of all Galactic stars. As a shorthand, we will use the term Disk (capitalized) to refer to the ‘Milky Way’s stellar disk’. All other disks will be labeled by qualifying adjectives. The discussion will focus on the stellar disk of our Galaxy and dark matter in the central parts of the overall Milky Way halo (≤0.05×R virial∼12 kpc), with only cursory treatment of the other stellar components, the gas and dust in the Milky Way, and the overall halo structure. Perhaps our neglect of the Galactic bar is most problematic in drawing scope boundaries for this review, as the interface between disk and bar is both interesting and unclear, and because it is manifest that the present-day Galactic bar has an important dynamical impact on the dynamics and evolution of the Disk; yet, the Galactic bar’s role and complexity warrants a separate treatment.

The questions about galaxy disk formation that a detailed analysis of the Milky Way may help answer are manyfold. What processes might determine galaxy disk structure? In particular, what processes set the exponential radial and vertical profiles seen in the stellar distributions of galaxy disks? Were all or most stars born from a well-settled gas disk near the disk plane and acquired their vertical motions subsequently? Or was some fraction of disk stars formed from very turbulent gas early on (e.g., Bournaud et al. 2009; Ceverino et al. 2012), forming a primordial thick disk? Are there discernible signatures of the stellar energy feedback to the interstellar medium that global models of galaxy formation have identified as a crucial ‘ingredient’ of (disk) galaxy formation (Nath and Silk 2009; Hopkins et al. 2012)? What was the role of internal heating in shaping galaxy disks? What has been the role of radial migration (Sellwood and Binney 2002; Roškar et al. 2008a, 2008b; Schönrich and Binney 2009a; Minchev et al. 2011), i.e., the substantive changes in the stars’ mean orbital radii that are expected to occur without boosting the orbits’ eccentricity? What was the disk-shaping role of minor mergers (e.g., Abadi et al. 2003), which are deemed an integral part of the ΛCDM cosmogony? How much did in-falling satellites impulsively heat the Milky Way’s disk, potentially leading to a distinct thick disk (Villalobos and Helmi 2008)? How much stellar debris did they deposit in this process? Is the radial orbit migration induced by satellite infall (Bird et al. 2012) distinguishable from purely internal processes? All of these questions are not only relevant for the Milky Way in particular, but lead generically to the question of how resilient stellar disks are to tidal interactions; it has been claimed (e.g., Kormendy et al. 2010; Shen et al. 2010) that the existence of large, thin stellar disks poses a challenge to the merger-driven ΛCDM picture.

In the end, answers to these questions require a multi-faceted comparison of the Disk’s observable status quo to the expectations from ab initio formation models (see e.g. Fig. 2), in practice for the most part hydrodynamical simulations. However, the current generation of ‘cosmological’ disk galaxy formation models is more illustrative than exhaustive in their representation of possible disk galaxy formation histories; therefore the question of how to test for the importance of galaxy formation ingredients through comparison with observational data is actively under way.

Output of the ERIS disk galaxy formation simulation aimed at following the formation history of a Milky-Way-like galaxy ab initio. The figure shows a projection resembling the 2MASS map of the Galaxy, and shows that simulations have now reached a point where, with very quiescent merging histories, disk-dominated galaxies can result

The maximal amount of empirical information about the Disk that one can gather from data is a joint constraint on the gravitational potential in which stars orbit Φ(x,t) and on the chemo-orbital distribution function of the Disk’s stars. How to best obtain such a joint constraint is a problem solved in principle, but not in practice (see Sects. 2.3 and 5).

It is in this context that this review sets out to work towards three broad goals:

-

Synthesize what the currently most pertinent questions about dark matter, disk galaxy formation, and evolution are that may actually be addressed with stellar surveys of the Galactic disk.

-

Lay out what ‘modeling’ of large stellar samples means and emphasize some of the practical challenges that we see ahead.

-

Describe how recent work may change our thinking about how to best address these questions.

Compared to Turon et al. (2008) and Ivezić et al. (2012) for an empirical description of the Milky Way disk, and compared to a series of papers by Binney and collaborators on dynamical modeling of the Galaxy (Binney 2010, 2012a; Binney and McMillan 2011; McMillan and Binney 2012), we place more emphasis two aspects that we deem crucial in Galactic disk modeling:

-

The consideration of ‘mono-abundance’ stellar sub-populations (MAPs), asking the question of ‘what our Milky Way disk would look like if we had eyes for stars of only a narrow range of photospheric abundances’. The importance of ‘mono-abundance components’ arises from the fact that in the presence of significant radial migration, chemical abundances are the only lifelong tags (see Freeman and Bland-Hawthorn 2002) that stars have, which can be used to isolate sub-groups independent of presuming a particular dynamical history. In a collisionless system, such populations can be modeled completely independently; yet they have to ‘feel’ the same gravitational potential.

-

The central importance of the (foremost spatial) selection function of any stellar sample that enters modeling. Dynamical modeling links the stars’ kinematics to their spatial distribution. Different subsets of Disk stars (differing e.g. by abundance) have dramatically different spatial and kinematical distributions. If the spatial selection function of any subset of stars with measured kinematics is not known to better than some accuracy, this will pose a fundamental limitation on the dynamical inferences, irrespective of how large the sample is; with ever larger samples emerging, understanding the selection function is increasingly probably to be a limiting factor in the analysis.

The remainder of the review is structured as follows: in Sect. 2 we discuss in more detail the overall characterization of the Milky Way’s disk and treat in detail the open questions of stellar disk formation and evolution in a cosmological context. In Sect. 3 we provide an overview of the existing and emerging stellar Galactic surveys, and in Sect. 4 we describe how the survey selection function can and should be rigorously handled in modeling. In Sects. 5 and 6 we present recent results in dynamical and structural modeling of the Disk, and their implications for future work. In the closing Sect. 7, we discuss what we deem the main practical challenges and promises for this research direction in the next years.

2 Galactic Disk studies: an overview

‘Understanding’ the Disk could mean having a comprehensive empirical characterization for it and exploring which—possibly competing—theoretical concepts that make predictions for these characteristics match, or do not. As the Milky Way is only one particular galaxy and as disk galaxy formation is a complex process that predicts broad distributions for many properties, it may be useful to consider which Disk characteristics generically test formation concepts, rather than simply representing one of many possible disk formation outcomes.

2.1 Characterizing the current structure of the Disk

In a casual, luminosity or mass-weighted average, the Disk can be characterized as a highly flattened structure with an (exponential) radial scale length of 2.5–3 kpc and scale height of ≃0.3 kpc (e.g., Kent et al. 1991; López-Corredoira et al. 2002; McMillan 2011), which is kinematically cold in the sense that the characteristic stellar velocity dispersions near the Sun of σ z ≃σ ϕ ≃σ R /1.5≃25 km s−1 are far less than v circ≃220 km s−1. Current estimates for the overall structural parameters of the Milky Way are compiled in Table 1.2 of Binney and Tremaine (2008); more specifically estimates for the mass of the Disk are ≃5×1010 M⊙ (Flynn et al. 2006; McMillan 2011), though the most recent data sets have not yet been brought to bear on this basic number. No good estimates for the globally averaged metallicity of the Disk exist, though 〈[Fe/H]〉, being about the solar value, seems likely.

With these bulk properties, the Milky Way and its Disk are very ‘typical’ in the realm of present-day galaxies: comparable numbers of stars in the low-redshift Universe live in galaxies larger and smaller (more and less metal-rich) than the Milky Way. For its stellar mass, the structural parameters of the Disk are also not exceptional (e.g., van der Kruit and Freeman 2011). Perhaps the most unusual aspect of the Milky Way is that its stellar disk is so dominant, with a luminosity ratio of bulge-to-disk of about 1:5 (Kent et al. 1991): most galaxies of M∗>5×1010 M⊙ are much more bulge dominated (Kauffmann et al. 2003).

But describing the Disk by ‘characteristic’ numbers, as one is often forced to do in distant galaxies, does not even begin to do justice to the rich patterns that we see in the Disk: it has been long established that positions, velocities, chemical abundances, and ages are very strongly and systematically correlated. This is in the sense that younger and/or more metal-rich stars tend to be on more nearly circular orbits with lower velocity dispersions. Of course, stellar populations with lower (vertical) velocity dispersions will form a thinner disk component. This has led to the approach of defining subcomponents of the Disk on the basis of the spatial distribution, kinematics, or chemical abundances. Most common has been to describe the Disk in terms of a dominant thin disk and a thick disk, with thin–thick disk samples of stars defined spatially, kinematically, or chemically. While these defining properties are of course related, they do not isolate identical subsets of stars. Whether it is sensible to parse the Disk structure into only two distinct components is discussed below.

Much of what we know about these spatial, kinematical, and chemical correlations within the Disk has come until very recently from very local samples of stars, either from studies at R≃R ⊙ or from the seminal and pivotal Hipparcos/Geneva–Copenhagen sample of stars drawn from within ≃100 pc (ESA 1997; Nordström et al. 2004). As dynamics links local and global properties, it is perfectly possible and legitimate to make inferences about larger volumes than the survey volume itself; yet, it is important to keep in mind that the volume-limited Geneva–Copenhagen sample encompasses a volume that corresponds to two-millionths of the Disk’s half-mass volume. Only recently have extensive samples beyond the solar neighborhood with \(p(\boldsymbol{x},\boldsymbol{v},[\overrightarrow{\mathrm{X}/\mathrm{H}}])\) become available.

While a comprehensive empirical description of the Disk (spatial, kinematical, and as regards abundances) in the immediate neighborhood of the Sun has revealed rich correlations that need explaining, an analogous picture encompassing a substantive fraction of the disk with direct observational constraints is only now emerging.

But the Disk is neither perfectly smooth nor perfectly axisymmetric, as the above description implied. This is most obvious for the youngest stars that still remember their birthplaces in star clusters and associations and in spiral arms. But is also true for older stellar populations. On the one hand, the spiral arms and the Galactic bar are manifest non-axisymmetric features, with clear signatures also in the Solar neighborhood (e.g., Dehnen 1998, 2000; Fux 2001; Quillen 2003; Quillen and Minchev 2005), as shown in Fig. 3. By now the strength of the Galactic bar and its pattern speed seem reasonably well established, both on the basis of photometry (Binney et al. 1997; Bissantz and Gerhard 2002) and on the basis of dynamics (Dehnen 1999; Minchev et al. 2007). But we know much less about spiral structure in the stellar disk. While the location of the nearby Galactic spiral arms have long been located on the bases of the dense gas geometry and distance measurements to young stars, the existence and properties of dynamically important stellar spiral structure is completely open: neither has there been a sound measure of a spiral stellar over-density that should have dynamical effects, nor has there been direct evidence for any response of the Disk to stellar spirals. Clarifying the dynamical role of spiral arms in the Milky Way presumably has to await Gaia.

Distribution in v x –v z -space of very nearby stars in the Disk with distances from Hipparcos (≃100 pc; Dehnen 1998). The sample shows rich substructure of ‘moving groups’, some of which reflect stars of common birth origin, others are the result of resonant orbit trapping

In addition there is a second aspect of non-axisymmetric substructure, which is known to be present in the Disk, but which is far from being sensibly characterized: there are groups of chemically similar stars on similar but very unusual orbits (streams, e.g., Helmi et al. 1999; Navarro et al. 2004; Klement et al. 2008, 2009) that point towards an origin where they were formed in a separate satellite galaxy and subsequently disrupted in a (minor) merger, spending now at least part of their orbit in the disk. The process of Disk heating and Disk augmentation through minor mergers has been simulated extensively, both with collisionless and with hydrodynamical simulations (e.g., Velazquez and White 1999; Abadi et al. 2003; Kazantzidis et al. 2008; Moster et al. 2010): these simulations have shown that galaxy disks can absorb considerably more satellite infall and debris than initial estimates had suggested (Toth and Ostriker 1992). However, these simulations also indicated that—especially for prograde satellite infall—it is not always easy to distinguish satellite debris by its orbit from ‘disk groups’ formed in situ, once the satellite debris has been incorporated into the disk.

2.2 The formation and evolution of the Disk

Explaining the formation and evolution of galaxy disks has a 50-year history, gaining prominence with the seminal papers by Mestel (1963) and Eggen et al. (1962). Yet, producing a galaxy through ab initio calculations that in its disk properties resembles the Milky Way has remained challenging to this day.

Exploring the formation of galaxy disks through simplified (semi-)analytic calculations has yielded seemingly gratifying models, but at the expense of ignoring ‘detail’ that is known to play a role. Milestones in this approach were in particular the work by Fall and Efstathiou (1980) that put Mestel’s idea of gas collapse under angular momentum conservation into the cosmological context of an appropriately sized halo that acquired a plausible amount of angular momentum through interactions with its environment: this appeared as a cogent explanation for galactic disk sizes. These concepts were married with the Press and Schechter (Press and Schechter 1973; Bond et al. 1991) formalism and its extensions by Mo et al. (1998), to place disk formation in the context of halos that grew by hierarchical merging. This approach performed well in explaining the overall properties of the present-day galaxy disk population and also its redshift evolution (e.g., Somerville et al. 2008).

Yet, trouble in explaining disks came with the efforts to explain galaxy disk formation using (hydrodynamical) simulations in a cosmological context. The first simulations (Katz and Gunn 1991), which started from unrealistically symmetrical and quiescent initial conditions, yielded end-products that resembled observed galaxy disks. But then the field entered a 15 year period in which almost all simulations produced galaxies that were far too bulge-dominated and whose stellar disks were either too small, or too anemic (low mass fraction), or both. Of course, it was clear from the start that this was a particularly hard problem to tackle numerically: the initial volume from which material would come (≃500 kpc) and the thinness of observed disks (≃0.25 kpc) implied very large dynamic ranges, and the ‘sub-grid’ physics issue of when to form stars from gas and how this star-formation would feed back on the remaining gas played a decisive role.

As of 2012, various groups (Agertz et al. 2011; Guedes et al. 2012; Martig et al. 2012; Stinson et al. 2013) have succeeded in running simulations that can result in large, disk-dominated galaxies, resembling the Milky Way in many properties (cf. Fig. 2). This progress seems to have—in good part—arisen from the explicit or implicit inclusion of physical feedback processes that had previously been neglected. In particular, ‘radiative feedback’ or ‘early stellar feedback’ from massive stars before they explode as Supernovae seems to have been an important missing feedback ingredient and must be added in simulations to the well-established supernovae feedback (Nath and Silk 2009; Hopkins et al. 2012; Brook et al. 2012). With such—physically expected—feedback implemented, disk dominated galaxies can be made in ab initio simulations that have approximately correct stellar-to-halo mass fractions for a wide range of mass scales. In some of the other cases, cf. Guedes et al. (2012), the radiative feedback is not implemented directly, but cooling-suppression below 104 K has a similar effect. It appears that the inclusion of this additional feedback not only makes disk dominated galaxies a viable simulation outcome, but also improves the match to the galaxy luminosity function and Tully–Fisher relations.

This all constitutes long-awaited success, but is certainly far from having any definitive explanation for the formation of any particular galaxy disk, including ours. In particular, a very uncomfortable dependence of the simulation outcome on various aspects of the numerical treatment remains, which has recently been summarized by Scannapieco et al. (2012).

In addition to the fully cosmological simulations, a large body of work has investigated processes relevant to disk evolution from an analytic or numerical perspective, with an emphasis on chemical evolution and the formation and evolution of thick-disk components. The chemical evolution of the Milky Way has been studied with a variety of approaches (e.g., Matteucci and Francois 1989; Gilmore et al. 1989; Chiappini et al. 2001) mostly with physically motivated but geometrically simplified models (not cosmological ab initio simulations): these studies have aimed at constraining the cosmological infall of ‘fresh’ gas (e.g., Fraternali and Binney 2008; Colavitti et al. 2008), explain the origin of radial abundance gradients (e.g., Prantzos and Aubert 1995), and explore the role of ‘galactic fountains’, i.e. gas blown from the disk, becoming part of a rotating hot corona, and eventually returning to the disk (e.g., Marinacci et al. 2011). Recent work by Minchev et al. (2012b) has introduced a new approach to modeling the evolution of the Disk by combining detailed chemical evolution models with cosmological N-body simulations.

To explain in particular the vertical disk structure qualitatively different models have been put forward, including cosmologically motivated mechanisms where stars from a disrupted satellite can be directly accreted (Abadi et al. 2003), or the disk can be heated through minor mergers (Toth and Ostriker 1992; Quinn et al. 1993; Kazantzidis et al. 2008; Villalobos and Helmi 2008; Moster et al. 2010) or experience a burst of star formation following a gas-rich merger (Brook et al. 2004). Alternatively, the disk could have been born with larger velocity dispersion than is typical at z≈0 (Bournaud et al. 2009; Ceverino et al. 2012); or purely internal dynamical evolution due to radial migration may gradually thicken the disk (Schönrich and Binney 2009b; Loebman et al. 2011; Minchev et al. 2012a). It is likely that a combination of these mechanisms is responsible for the present-day structure of the Disk, but what relative contributions of these effects should be expected has yet to be worked out in detail, and none of these have been convincingly shown to dominate the evolution of the Disk.

Radial migration is likely to have a large influence on the observable properties of the Disk, if its role in shaping the bulk properties of the disk were sub-dominant. The basic process as described by Sellwood and Binney (2002) consists of the scattering of stars at the corotation radius of transient spiral arms; at corotation such scattering changes the angular momentum, L z , of the orbit (≃mean radius) without increasing the orbital random energy. A similar—and potentially more efficient—process was later shown to happen when the bar and spiral structure’s resonances overlap (Minchev and Famaey 2010; Minchev et al. 2011). Such changes in the mean orbital radii are expected to be of order unity within a few Gyrs, and to have a profound effect on the interpretation of the present-day structure of the Disk: e.g. present-day L z can no longer be used as a close proxy for the birth L z , even for stars on near-circular orbits. Quantifying the strength of radial migration in the Milky Way is one of the most pertinent action items for the next-generation of Milky Way surveys.

A brief synthesis of the predictions from all these efforts is as follows:

-

Stellar disks should generically form from the inside out. More specifically, it is the low angular momentum gas that settles first near the centers of the potential wells forming stars at small radii.

-

Disk-dominated galaxies with disk sizes in accord with observations can emerge, if, and only if, there is no major merger since z≃1.

-

The luminosity- or mass-weighted radial stellar density profiles at late epochs resemble exponentials.

-

Stars that formed at earlier epochs (z≥1), when the gas fraction was far higher, do not form from gas disks that are as well-settled and thin as they are at z≃0 with dispersions of ≤10 km s−1.

-

Characteristic disk thicknesses or vertical temperatures of 400 pc and σ z ≃25 km s−1 are plausible.

-

Material infall, leading to fresh gas supply and dynamical disk heating, and star formation is not smooth but quite variable, even episodic, with a great deal of variation among dark-matter halos with the same overall properties. It is rare that one particular infall or heating event dominates.

-

Throughout their formation histories galaxies exhibit significant non-axisymmetries, which at epochs later than z≃1 resemble bars and spiral arms. Through resonant interactions, these structures may have an important influence on the evolution of stellar disks.

2.3 The Disk and the Galactic gravitational potential

Learning about the orbits of different Disk stellar sub-populations as a galaxy formation constraint and learning from these stars about the gravitational potential, Φ(x,t), are inexorably linked. Those orbits are generally described by a chemo-orbital distribution function, \(p(\boldsymbol{J},\boldsymbol{\phi},[\overrightarrow {\mathrm{X}/\mathrm{H}}], t_{\mathrm{age}}| \varPhi (\boldsymbol{x}))\) that quantifies the probability of being on an orbit labeled by J,ϕ for each subset of stars (characterized by, e.g., by their ages t age or their chemical abundances \([\overrightarrow{\mathrm{X}/\mathrm{H}}]=[\mathrm{Fe}/\mathrm{H}], [\alpha/\mathrm{Fe}], \ldots\)). Here, we chose to characterize the orbit (the argument of the distribution function) by J, actions or integrals of motion (which depend on both its observable instantaneous phase-space coordinates p(x,v) and on Φ(x,t)). Each star then also has an orbital phase (or angle), ϕ, whose distribution is usually assumed to be uniform in [0,2π].

Unless direct accelerations are measured for stars in many parts of the Galaxy, many degenerate combinations of Φ(x,t) and \(p(\boldsymbol{J},\boldsymbol{\phi},[\overrightarrow{\mathrm{X}/\mathrm{H}}], t_{\mathrm {age}})\) exist, unless astrophysically plausible constraints and/or assumptions are imposed: time-independent steady-state solutions, axisymmetric solutions, uniform phase distributions of stars on the same orbit, etc. This is the art and craft of stellar dynamical modeling (Binney and Tremaine 2008; Binney and McMillan 2011).

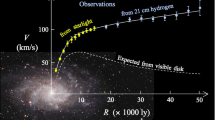

Learning about the Galactic potential is one central aspect of such dynamical modeling. On scales larger than individual galaxies, the so-called standard ΛCDM cosmology has been tremendously successful in its quantitative predictions. If certain characteristics for dark energy and dark matter are adopted the large-scale matter and galaxy distribution can be well explained (including baryon acoustic oscillations) and be linked to the fluctuations in the cosmic microwave background. On the scales of galaxies and smaller, theoretical predictions are more complex, both because all of these scales are highly non-linear and because the cooling baryons constitute an important, even dominant mass component. Indeed, the ΛCDM paradigm seems to make at least two predictions that are unsubstantiated by observational evidence, or even in seeming contradiction. Not only are numerous low-mass dark-matter halos predicted, almost completely devoid of stars (Kauffmann et al. 1993; Klypin et al. 1999; Moore et al. 1999), but ΛCDM simulations also predict that dark-matter profiles are cuspy, i.e., have divergent dark-matter densities towards their centers (Dubinski and Carlberg 1991; Navarro et al. 1996). In galaxies as massive as our Milky Way, baryonic processes could not easily turn the dark-matter cusp into a core, as seems viable in low mass galaxies (e.g., Flores and Primack 1994; Pontzen and Governato 2012). Therefore, we should expect for the Milky Way that more than half of the mass within a sphere of ≃R 0 should be dark matter. Yet microlensing towards the Milky Way bulge (Popowski et al. 2005; Hamadache et al. 2006; Sumi et al. 2006) indicates that in our own Galaxy most of the in-plane column density is made up of stars (e.g., Binney and Evans 2001). Measurements in individual external galaxies remain inconclusive, because dynamical tracers only measure the total mass, but cannot separate the stellar and DM contributions. In the Milky Way, almost all stellar mass beyond the bulge is in a stellar disk and hence very flat, while the DM halos emerging from ΛCDM simulations are spheroidal or ellipsoidal. So, mapping the Milky Way’s mass near the disk plane as a function of radius through the vertical kinematics will break the so-called disk—halo degeneracy, when combined with the rotation curve and the outer halo mass profile: one can then separate the flat from the round-ish mass contributions.

Perhaps the most immediate goal of dynamical Disk modeling is to determine how much DM there is within the Solar radius: as little as implied by the microlensing results, or as much as predicted by ΛCDM cosmology. A second goal for the Galactic potential is to determine whether the disk-like total mass distribution is as thin as the stellar counts imply; this is interesting, because the possibility of a thick dark-matter disk has been raised (Read et al. 2008). Finally, we can combine precise constraints from near the Galactic disk with constraints from stellar halo streams (e.g., Koposov et al. 2010) to get the shape of the potential as a function of radius. This can then be compared to the expectations, e.g., of alternative gravity models, and provide another, qualitatively new test for the inevitability of some form of dark matter.

On the other hand, the best possible constraints on Φ(x) are necessary to derive the (chemo-)orbital distribution of stars (the ‘distribution function’, DF), as the orbit characteristic, such as the actions depend of course on both (x,v) of the stars and on Φ(x).

3 Stellar surveys of the Milky Way

3.1 Survey desiderata

The ideal survey would result in an all-encompassing catalog of stars throughout the Disk, listing their 3-D positions and 3-D velocities (x,v), elemental abundances \(([\overrightarrow{\mathrm {X/H}}])\), individual masses (M ∗), ages (t age), binarity, and line-of-sight reddening (A V ), along with the associated uncertainties. Furthermore, these uncertainties should be ‘small’, when compared to the scales on which the multi-dimensional mean number density of stars \(n(\boldsymbol{x}, \boldsymbol{v}, [\overrightarrow{\mathrm{X}/\mathrm{H}}], M_{*}, t_{\mathrm{age}})\) has structure. In practice, this is neither achievable in the foreseeable future, nor is it clear that a ‘fuller’ sampling of \(n(\boldsymbol{x}, \boldsymbol{v}, [\overrightarrow{\mathrm{X}/\mathrm{H}}], M_{*}, t_{\mathrm{age}})\) is always worth the additional effort. Such an all-encompassing survey would imply that the probability of entering the catalog is \(p_{\mathrm {complete}}(\boldsymbol{x}, \boldsymbol{v}, [\overrightarrow{\mathrm{X}/\mathrm{H}}], M_{*}, t_{\mathrm{age}})\simeq1\) across the entire relevant domainFootnote 1 of x, v, \([\overrightarrow{\mathrm{X}/\mathrm{H}}]\), M ∗, and t age.

Any realistic survey is a particular choice of compromise in this parameter space. Fundamentally, p complete of any survey, i.e., the probability of any given star having ended up in the catalog, is always limited by quantities in the space of ‘immediate observables’, foremost by the stars’ fluxes (or signal-to-noise) vis-à-vis a survey’s flux limit or image-crowding limit. But these ‘immediate observables’ are usually not the quantities of foremost astrophysical interest, say \(\boldsymbol{x}, \boldsymbol{v}, [\overrightarrow {\mathrm{X}/\mathrm{H}}], m, t_{\mathrm{age}}\). While full completeness is practicable with respect to some quantities, e.g., the angular survey coverage (ℓ,b) and the velocities v, it is not in other respects. The effective survey volume will always be larger for more luminous stars, the survey’s distance limit will always be greater in directions of lower dust extinction at the observed wavelength; and an all-sky survey at fixed exposure time will go less deep towards the bulge because of crowding.

So, in general, ‘completeness’ is an unattainable goal. And while samples that are ‘complete’ in some physical quantity such as volume or mass are immediately appealing and promise easy analysis, their actual construction in many surveys comes at the expense of discarding a sizable (often dominant) fraction of the pertinent catalog entries. With the right analysis tools, understanding the survey (in-)completeness and its mapping into the physical quantities of interest are more important than culling ‘complete’ samples. We will return to this in Sect. 4.

3.2 Observable quantities and physical quantities of interest

The physical attributes about a star (\(\boldsymbol{x}, \boldsymbol{v}, [\overrightarrow{\mathrm{X}/\mathrm{H}}], m, t_{\mathrm{age}}\)) that one would like to have for describing and modeling the Disk are in general not direct observables. This starts out with the fact that the natural coordinates for (x, v) in the space of observables are (ℓ,b,D) and (v los,μ ℓ ,μ b ), respectively, with a heliocentric reference system in position and velocity.

In general, Galactic star surveys fall into two seemingly disjoint categories: imaging and spectroscopy. Imaging surveys get parsed into catalogs that provide angular positions and fluxes (typically in 2 to 10 passbands) for discrete sources, once photometric solutions (e.g., Schlafly et al. 2012) and astrometric solutions (Pier et al. 2003) have been obtained. Multi-epoch surveys, or the comparison of different surveys from different epochs, then provide proper motions, and—with sufficient precision—useful parallaxes (e.g., Perryman et al. 1997; Munn et al. 2004). Spectroscopic surveys are carried out with different instrumentation or even as disjoint surveys, usually based on a pre-existing photometric catalog. These spectra, usually for vastly fewer objects, provide v los on the one hand and the spectra that allow estimates of the ‘stellar parameters’, T eff, logg, and \([\overrightarrow{\mathrm{X}/\mathrm{H}}]\) (e.g., Nordström et al. 2004; Yanny et al. 2009). But ‘imaging’ and ‘spectroscopic’ surveys are only seemingly disjoint categories, because multi-band photometry may be re-interpreted as a (very) low resolution spectrum and some stellar parameters (e.g., T eff and [Fe/H]) can be constrained by photometry and/or spectra (e.g., Ivezić et al. 2008).

The practical link between ‘observables’ and ‘quantities of interest’ warrants extensive discussion: along with the survey selection function (Sect. 4) it is one of the two key ingredients for any rigorous survey analysis. For some quantities, such as (ℓ,b) and v los this link is quite direct; but even there a coordinate transformation is required, which involves R ⊙, v LSR and of course the distances, as most dynamical or formation models are framed in some rest-frame system with an origin at the Galactic center. Similarly, converting estimates of μ ℓ ,μ b to two components of v (and δ v) requires knowledge of the distance. In Fig. 4, we show a graphical model overview over the task of ‘astrophysical parameter determination’ in Galactic surveys. In the subsequent subsections, we discuss different aspects of this model.

Constraining Stellar Parameters from Observables in Milky Way Surveys: this figure provides a schematic overview, in the form of a simplified graphical model, of the logical dependencies between the stellar observables in Galactic surveys (thick dotted ovals) and the main desiderata for each star (thin dashed ovals), its stellar parameters and distance, given various prior expectations about galaxy formation, star formation and the Galactic dust distribution (top). The basic observables are: line-of-sight-velocity, v los, proper motions, μ, parallax π, multi-band photometry \(m_{\lambda_{i}}\) and photospheric parameters derived from spectra (T eff, logg, abundances, Z); most of them depend on the Sun’s position x ⊙ through, Δx. The main desiderata are the star’s mass M ∗, age t age and abundances Z, along with its distance D from the Sun and the (dust) extinction along the line of sight, A V . The prior probabilities of M ∗, age t age, Z, D, and A V are informed by our notions about star formation (the IMF) the overall structure of the Galaxy and various constraints on the dust distribution. Overall the goal of most survey analysis is to determine the probability of the stellar observables for a given set of desiderata, which requires both isochrones and stellar atmospheric models (see Burnett and Binney 2010). In practice, most existing Galactic surveys analyses can be mapped onto this scheme, with logical dependencies often replaced by assumed logical conditions (e.g. ‘using dereddened fluxes’, ‘presuming the star is on the main sequence’, etc.). This graphical model still makes a number of simplifications on the velocities

3.2.1 Distance estimates

Clearly, a ‘direct’ distance estimate, one that is independent of any intrinsic property of the stars, such as parallax measurements, is to be preferred. At present, good parallax distance estimates exist for about 20,000 stars within ≃100 pc from Hipparcos (Perryman et al. 1997; ESA 1997). And a successful Gaia mission will extend this to ≃109 stars within ≃10 kpc (de Bruijne 2012). However, a widely usable catalog with Gaia parallaxes is still 5 years away as of this writing, and even after Gaia most stars in the already existing wide-field photometric surveys (e.g., SDSS or PS1) will not have informative Gaia parallax estimates, simply because they are too faint.

Therefore, it is important to discuss the basics and the practice of (spectro-) photometric distance estimates to stars, which has turned out to be quite productive with the currently available data quality. Such distance estimates, all based on the comparison of the measured flux to an inferred intrinsic luminosity or absolute magnitude, have a well-established track record in the Galaxy, when using so-called standard candles, such as BHB stars (Sirko et al. 2004; Xue et al. 2008) or ‘red clump stars’ in near-IR surveys (e.g., Alves et al. 2002). But, of course, as the ‘physical HR diagram’ (L vs. T eff) in Fig. 5 shows, the distance-independent observables greatly constrain luminosity and hence distance for basically all stars. Foremost, these distance-independent observables are T eff or colors as well \([\overrightarrow{\mathrm{X}/\mathrm{H}}]\), potentially augmented by spectroscopic logg estimates.

‘Physical’ color–magnitude diagram, shown by BaSTI isochrones for two metallicities, which are spaced 0.2 dex in age between 109 and 1010 yr. This diagram serves as a reference for the discussion of how to derive physical parameters (distances, ages, abundances) for stars from observables (cf. Sect. 3.2): the age-independence of the luminosity on the MS makes for robust photometric distance estimates; the age and abundance sensitivity of L and T eff near the turn-off and on the (sub-)giant branch makes for good age determinations, but only if parallax-based distances exist; etc.

A general framework for estimating distances in the absence of parallaxes is given in Burnett et al. (2011) or Breddels et al. (2010), and captured by the graphical model in Fig. 4. Basically, the goal is to determine the distance modulus likelihood

where the m i are the apparent magnitudes of the star in various passbands (i.e., also the ‘colors’) and M ∗ is its stellar mass, which predicts the star’s luminosity and colors (at a given [Fe/H] and t age, see Fig. 4). Note that [Fe/H] appears both as an observational constraint and a model parameter, which determines isochrone locations. The prior on ([Fe/H],t age) may depend on the distance modulus to use the different spatial distribution of stars of different metallicities (in this case, the position of the sky would also be included to compute the 3D position).

To get distance estimates, one needs to marginalize over the nuisance parameters (M ∗, [Fe/H], and t age in the case above), and one needs to spell out the external information (i.e. priors) on the relative probability of these nuisance parameters (e.g., Bailer-Jones 2011). As Fig. 5 makes obvious, the precision of the resulting distance estimates depends on the evolutionary phase, the quality of observational information, and any prior information. To give a few examples: for stars on the lower main sequence, the luminosity is only a function of T eff or color, irrespective of age; hence it can be well determined (≃5–10 %) if the metallicity is well constrained (δ[Fe/H]≤0.2) and if the probability of the object being a (sub-)giant is low, either on statistical grounds or through an estimate of logg. Jurić et al. (2008) showed that such precisions can be reached even if the metallicity constraints only come from photometry. On the giant branch, colors reflect metallicity (and also age) as much as they reflect luminosity; therefore photometric distances are less precise. Nonetheless, optical colors good to ≃0.02 mag and metallicities with δ[Fe/H]≤0.2 can determine giant star distances good to ≃15 %, if there is a prior expectation that the stars are old (Xue et al. 2012, in preparation). The power of spectroscopic data comes in determining logg, T eff especially in the case of significant reddening, and in constraining the abundances, especially [Fe/H]. While only high-dispersion and high-S/N spectra will yield tight logg estimates, even moderate-resolution and moderate-S/N spectra (as for the SEGUE and LAMOST surveys; see Sect. 3.3.2) suffice to separate giants from dwarfs (e.g., Yanny et al. 2009), thereby discriminating the multiple branches of L(color,[Fe/H]) in the isochrones. While main-sequence turn-off stars have been widely used to make 3D maps of the Milky Way (Fig. 4; e.g., Belokurov et al. 2006; Jurić et al. 2008; Bell et al. 2008), they are among the stellar types abundantly found in typical surveys, the ones for which precise (<20 %) spectrophotometric distances are hardest to obtain (e.g., Schönrich et al. 2011).

The absolute scale for such spectrophotometric distance estimates is in most cases tied to open or globular clusters, which are presumed to be of known distance, age and metallicity (e.g., Pont et al. 1998; An et al. 2009). Because the number of open and globular clusters is relatively small and, in particular, old open clusters are rare, the sampling in known age and metallicity is sparse and non-uniform. Therefore, care is necessary to make this work for Disk stars of any age or metallicity. Interestingly, the panoptic sky surveys with kinematics offer the prospect of self-calibration of the distance scale (Schönrich et al. 2012) through requiring dynamical consistency between radial velocities and proper motions.

3.2.2 Chemical abundances

Chemical abundances are enormously important astrophysically, as they are witnesses of the Disk’s enrichment history and as they are lifelong tags identifying various stellar sub-populations. Broadly speaking, metals are produced in stars as by-products of nuclear burning and are dispersed into the interstellar medium by supernova explosions and winds. This leads to a trend toward higher metallicity as time goes on, with inside-out formation leading to faster chemical evolution or metal-enrichment in the inner part of the Disk. While all supernovae produce iron, α-element enrichment occurs primarily through type II supernovae of massive stars with short lifetimes. Therefore, until type Ia supernovae, with typical delay times of 2 Gyr (e.g., Matteucci and Recchi 2001; Dahlen et al. 2008; Maoz et al. 2011), start occurring, the early disk’s ISM, and stars formed out of it, has [α/Fe] that is higher than it is today. For a temporally smooth star-formation history, [α/Fe] decreases monotonically with time. However, a burst of star formation, e.g., following a gas-rich merger, could re-instate a higher [α/Fe], such that the relation between [α/Fe] and age is dependent on the star-formation history (e.g., Gilmore and Wyse 1991).

Beyond the astrophysical relevance of abundance information, it is also of great practical importance in obtaining distances (Sect. 3.2.1) and ages (Sect. 3.2.3). Among the ‘abundances’ \([\overrightarrow{\mathrm{X}/\mathrm{H}}]\), the ‘metallicity’ [Fe/H] is by far of the greatest importance for distance estimates. Practical determinations of \([\overrightarrow{\mathrm{X}/\mathrm{H}}]\) fall into three broad categories: estimates (usually just [Fe/H], or [M/H] as a representative of the overall metal content) from either broad- or narrow-band photometry; estimates based on intermediate-resolution spectra (R=2000 to 3000); or estimates from high-resolution spectra (R=10,000 and higher) that enable individual element abundance determinations. We discuss here briefly abundances obtained in these three different regimes.

-

Stellar Abundances from Photometry: That abundances can be constrained through photometry—at least for some stellar types—has been established for half a century (Wallerstein 1962; Strömgren 1966). The level of overall metal-line blanketing or the strength of particular absorption features varies with [Fe/H] (e.g., Böhm-Vitense 1989), which appreciably changes the broad-band fluxes and hence colors. How well this can be untangled from changes in T eff or other stellar parameters, depends of course on the mass and evolutionary phase of the star. The most widely used applications are the ‘color of the red giant branch’, which is a particularly useful metallicity estimate if there are priors on age and distance (e.g. McConnachie et al. 2010 for the case of M31). In the Galaxy, variants of the ‘UV excess method’ (Wallerstein 1962) have been very successfully used to estimate [Fe/H] for vast numbers of stars. In particular, Ivezić et al. (2008) calibrated the positions of F & G main sequence stars in the SDSS (u−g)–(g−r) color plane against spectroscopic metallicity estimates. Based on SDSS photometry, they showed that metallicity precisions of δ[Fe/H]≃0.1 to 0.3 can be reached, depending on the temperature and metallicity of the stars: in this way they determined [Fe/H] for over 2,000,000 stars.

Some narrow- or medium-band photometric systems (foremost Strömgren filters; Strömgren 1966; Bessell et al. 2011) can do even better in estimating chemical abundances, foremost [Fe/H], by placing filters on top of strong absorptions features. Recently, this has been well illustrated by Árnadóttir et al. (2010) and Casagrande et al. (2011), who compared Strömgren photometry to abundances from high-resolution spectra, showing that [Fe/H] precisions of ≤0.1 dex are possible in some T eff regimes. Casagrande et al. (2011) also showed that precise Strömgren photometry can constrain [α/Fe] to ≃0.1 dex. In addition, narrow band photometry can provide good metallicity estimates (δ[Fe/H]≃0.2) for a wider range of stellar parameters than SDSS photometry.

Indeed, the literature is scattered with disputes that a certain photometric abundance determination precision is untenable, because the same approach yields poor [Fe/H] estimates for a sample at hand. In many cases, this seeming controversy can be traced back to the fact that different approaches vary radically in their accuracy across the T eff, logg, t age parameter plane.

The abundance information from Gaia will be based on a spectral resolution of only R=15 to 80 for most stars; and while these data are produced through a dispersive element, they are probably best thought of as many-narrow-band photometry; for FGKM stars with g<19 Gaia data are expected to yield δ[Fe/H]≤0.2 (Liu et al. 2012).

-

Stellar Abundances from High-Resolution Spectroscopy: High-resolution spectroscopy (R≥10,000), either in the optical or in the near-IR, is an indispensable tool to obtain individual element abundances and to anchor any abundance scale in physically motivated models of stellar photospheres (e.g., Asplund et al. 2009; Edvardsson et al. 1993; Reddy et al. 2003; Bensby et al. 2005; Allende Prieto et al. 2008). The abundances are then fit by either modeling the spectrum directly in pixel or flux space, or more commonly by parsing the observed and the model spectrum into a set of line equivalent widths which are compared in a χ 2-sense. From typical existing spectra, about 10 to 20 individual abundances are derived, with relative precisions of 0.05 to 0.2 (e.g., Reddy et al. 2003; Boeche et al. 2011). High resolution spectroscopy has remained the gold standard for investigating chemo-dynamical patterns in the Milky Way in detail.

-

Stellar Abundances from Moderate-Resolution Spectroscopy: In recent years, large data sets at moderate spectral resolutionFootnote 2 have become available, foremost by SDSS/SEGUE (Yanny et al. 2009) in the last years, but in the future also from LAMOST data (Deng et al. 2012). Such data can provide robust metallicities [Fe/H], good to δ[Fe/H]≤0.2, and constrain [α/Fe] to δ[α/Fe]≤0.15 (Lee et al. 2008a, 2011), for F, G and K stars. The accuracy of these data has been verified against high-resolution spectroscopy and through survey spectra in globular clusters of known metallicity (Lee et al. 2008b, 2011). Recently, Bovy et al. (2012c) have pursued an alternate approach to determine the precision (not accuracy) of abundance determinations [Fe/H], [α/Fe], through analyzing the abundance-dependent kinematics of Disk stars. They found that for plausible kinematical assumptions, the SDSS spectra of G-dwarfs must be able to rank stars in [Fe/H] and [α/Fe] at the (0.15,0.08) dex level, respectively. These precisions should be compared to the range of [Fe/H] (about 1.5 dex) and [α/Fe] (about 0.45 dex) of abundances found in the Disk, which illustrates that moderate resolution spectroscopy is very useful for isolating abundance-selected subsamples of stars in the Disk.

3.2.3 Stellar ages

Constraints on stellar ages are of course tremendously precious information for understanding the formation of the Disk, yet are very hard to obtain in practice. The review by Soderblom (2010) provides an excellent exposition of these issues, and we only summarize a few salient points here. For the large samples under discussion here, the absolute age calibration is not the highest priority, but the aim is to provide age constraints, even if only relative-age constraints, for as many stars as possible. In the terminology of Soderblom (2010), it is the ‘model-based’ or ‘empirical’ age determinations that are relevant here: three categories of them matter most for the current context (0.5 Gyr≤t age≤13 Gyr), depending on the type of stars and the information available. First, the chromospheric activity or rotation decays with increasing age of stars, leading to empirical relations that have been calibrated against star clusters (e.g., Baliunas et al. 1995), especially for stars younger than a few Gyr. Soderblom et al. (1991) showed that the expected age precision for FGK stars is about 0.2 dex. Second, stellar seismology, probing the age-dependent internal structure can constrain ages well; the advent of superb light-curves from the Kepler mission, has just now enabled age constraints—in particular for giant stars—across sizable swaths of the Disk (Van Grootel et al. 2010).

The third, and most widely applicable approach in the Disk context, is the comparison between isochrones and the position of a star in the observational or physical Hertzsprung–Russell diagram, i.e., the L (or logg)–T eff,[Fe/H] plane (see Fig. 5). Ideally, the set of observational constraints for a star, {data}obs, would be precise determinations of {data}obs={L,T eff,[Fe/H]}, or perhaps precise estimates of logg,T eff,[Fe/H]. But until good parallaxes to D≥1 kpc exist, L is poorly constrained (without referring to T eff and [Fe/H] etc.) and the error bars on logg are considerable (≃0.5 dex), leaving T eff,[Fe/H] as the well-determined observables.

All these observable properties of a star depend essentially only on a few physical model parameters for the star M ∗,[Fe/H],t age (Fig. 4), and the observables (at a given age) can be predicted through isochrones (e.g., Girardi et al. 2002; Pietrinferni et al. 2004). For any set of observational constraints, the probability of a star’s age is then given by a similar expression as that for photometric distances in Eq. (1) (cf. Takeda et al. 2007; Burnett and Binney 2010):

where p({data}obs|M ∗,[Fe/H],t age) is the probability of the data given the model parameters (i.e., the likelihood); the shape of that distribution is where the uncertainties on the observables are incorporated. Further, not all combinations of M ∗,[Fe/H],t age are equally likely, since we have prior information on p p (M ∗|t age) and p p ([Fe/H]|t age). Those prior expectations come from our overall picture of Galaxy formation or from our knowledge of the stellar mass function; also they may reflect the sample properties, when analyzing any one individual star. To be specific, p p (M ∗|t age) simply reflects the mass function, truncated at M ∗,max(t age), if one accepts that there has been an approximately universal initial mass function in the Disk.

The integration, or marginalization, over M ∗ and [Fe/H], of course involves the isochrone-based prediction of observables {params}ic, as illustrated in Fig. 4, so that

where {params}ic could for example be {L,T eff,[Fe/H]}ic(M ∗,[Fe/H],t age).

To get the relative probabilities of different presumed ages, \(\mathcal{L}(\{\mathrm{data}\}_{\mathrm{obs}}|t_{\mathrm{age}})\), one simply goes over all combinations of M ∗ and [Fe/H] at t age and one integrates up how probable the observations are for each combination of M ∗ and [Fe/H]. This has been put into practice for various large samples, where luminosity constraints either come from parallaxes or from logg (see Nordström et al. 2004; Takeda et al. 2007; Burnett and Binney 2010).

The quality of the age constraints depends dramatically both on the stellar evolutionary phase and on the quality of the observational constraints. It is worth looking at a few important regimes, using the isochrones for two metallicities in Fig. 5 as a guide. Note that stars with ages >1 Gyr, which make up over 90 % of Disk, correspond to the last four isochrones in this figure.

(1) For stars on the lower main sequence (where main sequence lifetimes exceed 10 Gyr; L≤L ⊙), isochrone fitting provides basically no age constraints at all; in turn, photometric distance estimates are most robust there. For stars on the upper main sequence, Eq. (2) ‘automatically’ provides a simple upper limit on the age, given by the main sequence lifetime.

(2) For stars near the main-sequence turn-off and on the horizontal branch, the isochrones for a given metallicity are widely spread enabling good age determinations: Takeda et al. (2007) obtained a relative age precision for their stars with logg<4.2 of ≃15 %. Similarly, the parallax-based luminosities of the stars in the Geneva–Copenhagen Survey (GCS) provide age estimate for stars off the main sequence of ≃20 %. Note that the [Fe/H] marginalization in Eq. (2) and the strong metallicity dependence of the isochrones in Fig. 5 illustrate how critical it is to have good estimates of the metallicity: without very good metallicities, even perfect Gaia parallaxes (and hence perfect luminosities) will not yield good age estimates across much of the color–magnitude diagram.

(3) On the red giant branch, there is some L–T eff spread in the isochrones of a given metallicity; but for ages >1 Gyr the metallicity dependence of T eff is so strong as to preclude precise age estimates.

Note that the formalism of Eq. (2) provides at least some age constraints even in the absence of good independent L constraints (e.g., Burnett and Binney 2010), as the different stellar phases vary vastly in duration, which enters through the strong M ∗ dependence in Eq. (2).

3.2.4 Interstellar extinction

The complex dust distribution in the Galaxy is of course very interesting in itself (e.g., Jackson et al. 2008), delineating spiral arms, star formation locations, and constraining the Galactic matter cycle. For the study of the overall stellar distribution of the Milky Way, it is foremost a nuisance and in some regimes, e.g., at very low latitudes, a near-fatal obstacle to seeing the entire Galaxy in stars (e.g., Nidever et al. 2012). Unlike in the analysis of galaxy surveys, where dust extinction is always in the foreground and can be corrected by some integral measure A λ (ℓ,b) (from, e.g., Schlegel et al. 1998), one needs to understand the 3D dust distribution in the Galaxy, A λ (ℓ,b,D). On the one hand, one needs to know, and marginalize out, the extinction to each star in a given sample, foremost to get its intrinsic properties. On the other hand, for many modeling applications one needs the full 3D extinction information A λ (ℓ,b,D), also in directions where there are no stars, e.g., in order to determine the ‘effective survey volume’ (see below): it obviously makes a difference whether there are no stars in the sample with a given (ℓ,b,D) because they are truly absent or because they have been extinguished below some sample flux threshold.

Practical ways to constrain the extinction to any given star, given multi-band photometry or spectra, have recently been worked out by, e.g., Bailer-Jones (2011) and Majewski et al. (2011) for different regimes of A v . In the absence of parallax distances or spectra for the stars, estimates of A λ (ℓ,b) inevitably involve mapping the stars’ SED back to a plausible unreddened SED, the so-called stellar locus. This de-reddening constrains the amount of extinction, but only through the wavelength variation of the extinction, the reddening.

Estimates of T eff or L from spectra or from well-known parallax distances, allow of course far tighter constraints, because the dereddened colors are constrained a priori and because also the ‘extinction’ not just the ‘reddening’ appears as constraints.

How to take that star-by-star extinction information, potentially combine it with maps of dust emissivity (e.g., Planck Collaboration et al. 2011), to get a continuous estimate of A λ (ℓ,b,D) has not yet been established. This task is not straightforward, as emission line maps indicate that the fractal nature of dust (and hence) extinction distribution continues to very small scales. Presumably, some form of interpolation between the A λ (ℓ,b,D) to a set of stars, exploiting the properties of Gaussian processes, is a sensible way forward.

3.2.5 Towards an optimal Disk survey analysis

The task ahead is now to lay the foundation for an optimal survey analysis that will allow optimal dynamical modeling, by rigorously constructing the PDF for the physical quantities of interest, \(\boldsymbol{x}, \boldsymbol{v}, t_{\mathrm{age}}, [\overrightarrow{\mathrm{X}/\mathrm{H}}], M_{*}\) from the direct observables. The preceding subsections show that many sub-aspects of the overall approach (Fig. 4) have been carried out and published; and a general framework has been spelled out by Burnett and Binney (2010). What is still missing is a comprehensive implementation that uses the maximal amount of information and accounts for all covariances, especially one that puts data sets from different surveys on the same footing.

3.3 Existing and current Disk surveys

There is a number of just-completed, ongoing, and imminent surveys of the stellar content of our Galaxy, all of which have different strengths and limitations. Tables 1 and 2 provide a brief overview of the most pertinent survey efforts. The (ground-based) surveys fall into two categories: wide-area multi-color imaging surveys and multi-object, fiber-fed spectroscopy. Broadly speaking, the ground-based imaging surveys provide the angular distribution of stars with complete (magnitude-limited) sampling, proper motions at the ≃3 mas yr−1 level (in conjunction with earlier imaging epochs), and photometric distances; for stars of certain temperature ranges (F through K dwarfs) they can also provide metallicity estimates.

The ground-based spectroscopic surveys have all been in ‘follow-up’ mode, i.e., they select their spectroscopic targets from one of the pre-existing photometric surveys, using a set of specific targeting algorithms. In most cases, the photometric samples are far larger than the number of spectra that can be taken, so target selection is a severe downsampling, in sky area, in brightness or in color range. The survey spectra provide foremost radial velocities, along with good stellar photospheric parameters, including more detailed and robust elemental abundances.

In this section we restrict ourselves to surveys that have started taking science-quality data as of Summer 2012, with the exception of Gaia.

3.3.1 Individual photometric surveys

-

2MASS (Skrutskie et al. 2006): Designed as an ‘all purpose’ near-infrared (JHK) imaging survey, it has produced the perhaps most striking and clearest view of the Milky Way’s stellar distribution to date with half a Billion stars, owing to its ability to penetrated dust extinction better than optical surveys. Its imaging depth is sufficient to see, albeit not necessarily recognize, modestly extinct giant stars to distances >10 kpc. It has been very successfully used to map features in the Milky Way’s outskirts, e.g., the Sagittarius stream (Majewski et al. 2003) and the Monoceros feature in the outer Disk (Rocha-Pinto et al. 2003). Looking towards the Galactic center, 2MASS has been able to pin down the geometry of the extended stellar bar (e.g., Cabrera-Lavers et al. 2007; Robin et al. 2012b). 2MASS has also provided constraints on the scale heights and lengths of the Disk components (Cabrera-Lavers et al. 2005). It has been the photometric basis for several spectroscopic surveys, in the Disk-context most notably RAVE and APOGEE (see below). However, as a stand-alone survey, 2MASS has been hampered in precise structural analyses of the disk by the difficulties to deriving robust distances and abundances from its data. But 10 years after its completion, it is still the only all-sky survey in the optical/near-IR region of the electromagnetic spectrum. In imaging at ≥10,000 square degree coverage, 2MASS is now being surpassed (by 4 magnitudes) by the Vista Hemisphere Survey (VHS) (McMahon et al., 2012, in preparation).

-

SDSS (e.g., York et al. 2000; Stoughton et al. 2002; Abazajian et al. 2009): The primary science goals of the (imaging & spectroscopy) Sloan Digital Sky Survey were focused on galaxy evolution, large-scale structure and quasars, and the 5-band imaging survey ‘avoided’ much of the Milky Way by largely restricting itself to |b|>30∘. Nonetheless, SDSS imaging has had tremendous impact on mapping the Galaxy (see Fig. 6). Like 2MASS, its impact has been most dramatic for understanding the outskirts of the Milky Way, where its imaging depth (giant stars to 100 kpc, old main-sequence turn-off stars to 25 kpc), its precise colors and its ability to get photometric metallicity constraints (Ivezić et al. 2008) have been most effective. On this basis, SDSS has drawn up a state-of the art picture of the overall stellar distribution (Jurić et al. 2008), drawn the clearest picture of stellar streams in the Milky Way halo (Belokurov et al. 2006), and expanded the known realm of low-luminosity galaxies by two orders of magnitude (e.g., Willman et al. 2005). SDSS photometry and proper motions (δ μ≃3 mas yr−1 through comparison with USNOB, Munn et al. (2004) have allowed a kinematic exploration of the Disk (Fuchs et al. 2009; Bond et al. 2010). However, the bright flux limit of SDSS (g≃15) makes an exploration of the Solar neighborhood within a few 100 pc actually difficult with these data.

Fig. 6 Stellar number density map of the Disk and halo from Jurić et al. (2008), for K-stars with colors 0.6≤r−i≤0.65. The map, averaged over the ϕ-direction was derived drawing on SDSS photometry, and applying photometric distance estimates that presume (sensibly) that the vast majority of stars are on the main sequence, not giants. Note that for these colors the main sequence stars will sample all ages fairly, as their MS luminosity remains essentially unchanged. With increasing |z| and R, however, the mean metallicity of the stars changes, and the stars within that color range represent different masses and luminosities

-

PanSTARRS1 (Kaiser et al. 2010): PanSTARRS 1 (PS1) is carrying out a time-domain imaging survey that covers 3/4 of the sky (δ>−30∘) in five bands to an imaging depth and photometric precision comparable to SDSS (e.g., Schlafly et al. 2012). PS1 has imaging in the y band, but not in the u band, which limits its ability to determine photometric metallicities. However, it is the first digital multi-band survey in the optical to cover much of the Disk, including at b=0 both the Galactic Center and the Galactic Anticenter.

-

SkyMapper (Keller et al. 2007): The Southern Sky Survey with the SkyMapper telescope in Australia is getting under way, set to cover the entire Southern celestial hemisphere within 5 years to a depth approaching that of SDSS. Through the particular choice of its five filters, SkyMapper is a survey designed for stellar astrophysics, constraining metallicities and surface gravities through two blue medium band filters (u,v). Together with PanSTARRS 1, SkyMapper should finally provide full-sky coverage in the optical to g≤21.

-

UKIDSS (Lawrence et al. 2007; Lucas et al. 2008; Majewski 1994): A set of near-IR sky surveys, of which the Galactic Plane Survey (GPS) has covered a significant fraction in Galactic latitude at |b|<5∘ in JHK, 3 magnitudes deeper than 2MASS. It has not yet been used for studies of the overall Disk structure.

3.3.2 Spectroscopic surveys

-

Geneva–Copenhagen Survey (GCS) (Nordström et al. 2004): This has been the first homogeneous spectroscopic survey of the Disk that encompasses far more than 1000 stars. For ≃13,000 stars in the Galactic neighborhood (within a few 100 pc) that have Hipparcos parallaxes, it obtained Strömgren photometry and radial velocities through cross-correlation spectrometry, and derived T eff, [Fe/H], logg, ages, and binarity information from them. It has been the foundation for studying the Galactic region around the Sun for a decade.

-

SEGUE (Yanny et al. 2009): Over the course of its first decade, the SDSS survey facility has increasingly shifted its emphasis towards more systematically targeting stars, resulting eventually in R≈2000 spectra from 3800 Å to 9200 Å for ≃350,000 stars. This survey currently provides the best extensive sample of Disk stars beyond the Solar neighborhood with good distances (≃10 %) and good abundances ([Fe/H],[α/Fe]), see Fig. 7.

Fig. 7 The geometry of ‘mono-abundance populations’ (MAPs), as derived from SDSS/SEGUE data in Bovy et al. (2012d). The figure shows lines of constant stellar number density (red, green and blue) for MAPs of decreasing chemical age (i.e. decreasing [α/Fe] and increasing [Fe/H], with color coding analogous to Fig. 10), illustrating the sequence of MAPs from ‘old, thick, centrally concentrated’ to ‘younger, thin, radially extended’. The figure also puts the SDSS/SEGUE survey geometry into perspective of the overall Galaxy (here represented by an image of NGC 891)

-

RAVE (Steinmetz et al. 2006): RAVE is a multi-fiber spectroscopic survey, carried out at the AAO 1.2 m Schmidt telescope, which, as of 2012, has obtained R≈7000 spectra in the red CaII-triplet region (8410 Å<λ<8795 Å) for nearly 500,000 bright stars (9<I<13). RAVE covers the entire Southern celestial hemisphere except, regions at low |b| and low |ℓ|. The spectra deliver velocities to ≤2 km s−1, T eff to ≃200 K, logg to 0.3 dex, and seven individual element abundances to 0.25 dex (Boeche et al. 2011). At present, precise distance estimates, even for main-sequence stars, are limited by the availability of precise (at the 1–2 %-level) optical colors.

-

APOGEE (Allende Prieto et al. 2008): The APO Galactic Evolution Experiment (APOGEE), is the only comprehensive near-IR spectroscopic survey of the Galaxy; it started in the Spring of 2011 taking R≈22,500 spectra in the wavelength region 1.51 μm<λ<1.70 μm for stars preselected to likely be giants with H<13.8 mag. It aims to obtain spectra for eventually 100,000 stars that yield velocities to better than 1 km s−1, individual element abundances and logg; the APOGEE precisions for \([\overrightarrow{\mathrm{X}/\mathrm{H}}]\) and logg have yet to be verified. The lower extinction in the near-IR A H ≃A V /6 enables APOGEE to focus on low latitude observations, with the majority of spectra taken with |b|<10∘.

-

Gaia-ESO (Gilmore et al. 2012): The Gaia-ESO program, is a 300-night ESO public survey, which commenced in early 2012, and will obtain 100,000 high-resolution spectra with the GIRAFFE and UVES spectrographs at the VLT. It will sample all Galactic components, and in contrast to all the other surveys, will obtain an extensive set of analogous spectra for open clusters with a wide rage of properties; this will constitute the consummate calibration data set for ‘field’ stars in the Disk. The stars targeted by Gaia-ESO will typically be 100-times fainter than those targeted by APOGEE, including a majority of stars on the main sequence; however, taking spectra at ≈0.5 μm, Gaia-ESO will not penetrate the dusty low-latitude parts of the Disk.

-

LAMOST (Deng et al. 2012): The most extensive ground-based spectroscopic survey of the Galaxy currently under way is being carried out with the LAMOST telescope. The Galactic Survey, LEGUE, has just started towards obtaining moderate-resolution spectra (R≈2000) for 2.5 million stars with r≤18. In the context of the Disk, LEGUE is expected to focus on the Milky Way’s outer disk, carrying out the majority of its low-latitude observations towards the Galactic anticenter.

3.3.3 The survey road ahead: Gaia

Gaia is an astrometric space mission currently scheduled to launch in September 2013 that will survey the entire sky down to 20th magnitude in a broadband, white-light filter, G. A recent overview of the spacecraft design and instruments, and the expected astrometric performance is given in de Bruijne (2012); the expected performance of Gaia’s stellar parameters and extinction measurements is given in Liu et al. (2012). For Disk studies in particular, Gaia will obtain 10 percent measurements of parallaxes and proper motions out to about 4 kpc for F- and G-type dwarfs, down to (non-extinguished) G≃V=15, for which the Gaia spectro-photometry also provides line-of-sight velocities good to ≃5 to 10 km s−1 and logg and [M/H] good to 0.1 to 0.2 dex. Overall, Gaia will observe approximately 400 million stars with G RVS<17—where G RVS is the integrated flux of the Radial Velocity Spectrometer (RVS)—for which line-of-sight velocities and stellar parameters can be measured (Robin et al. 2012a).

While high-precision samples from Gaia will provide an enormous improvement over current data, it is important to realize that most Gaia projections are in the non-extinguished, non-crowded limit and Gaia’s optical passbands will be severely hampered by the large extinctions and crowding in the Galactic plane. In practice, this will limit most Disk tracers to be within a few kpc from the Sun, and Gaia will in particular have a hard time constraining large-scale Disk asymmetries that are only apparent when looking beyond the Galactic center (the ‘far side’ of the Galaxy, D≥10 kpc,|ℓ|<45∘). Gaia’s lack of detailed abundance information beyond [M/H] also means that it probably will need to be accompanied by spectroscopic follow-up to reach its full potential for constraining Disk formation and evolution. Some follow-up is being planned (e.g., 4MOST; de Jong 2011), but no good studies of the trade-offs between, for example, sample size and abundance-precision have been performed to date.

4 From surveys to modeling: characterizing the survey selection functions

Spectroscopic surveys of the Milky Way are always affected by various selection effects, commonly referred to as ‘selection biases’.Footnote 3 In their most benign form, these are due to a set of objective and repeatable decisions of what to observe (necessitated by the survey design). Selection biases typically arise in three forms: (a) the survey selection procedure, (b) the relation between the survey stars and the underlying stellar population, and (c) the extrapolation from the observed (spatial) volume and the ‘global’ Milky Way volume. Different analyses need not be affected by all three of these biases.

In many existing Galactic survey analyses emphasis has been given—in the initial survey design, the targeting choices, and the subsequent sample culling—to getting as simple a selection function as possible, e.g. Kuijken and Gilmore (1989a), Nordström et al. (2004), Fuhrmann (2011), Moni Bidin et al. (2012). This then lessens, or even obviates the need to deal with the selection function explicitly in the subsequent astrophysical analysis. While this is a consistent approach, it appears not viable, or at least far from optimal, for the analyses of the vast data sets that are emerging from the current ‘general purpose’ surveys. In the context of Galactic stellar surveys, a more general and rigorous way of guarding against these biases and correcting for them has been laid our perhaps most explicitly and extensively in Bovy et al. (2012d), and in the interest of a coherent exposition we focus on this case.

Therefore, we use the example of the spectroscopic SEGUE G-dwarf sample used in Bovy et al. (2012d)—a magnitude-limited, color-selected sample part of a targeted survey of ≃150 lines of sight at high Galactic latitude (see Yanny et al. 2009). We consider the idealized case that the sample was created using a single g−r color cut from a sample of pre-existing photometry and that objects were identically and uniformly sampled and successfully observed over a magnitude range r min<r<r max. We assume that all selection is performed in dereddened colors and extinction-corrected magnitudes. At face value, such a sample suffers from the three biases mentioned in the previous paragraph: (a) the survey selection function (SSF) is such that only stars in a limited magnitude range are observed spectroscopically, and this range corresponds to a different distance range for stars of different metallicities; (b) a g−r color cut selects more abundant, lower-mass stars at lower metallicities, such that different ranges of the underlying stellar population are sampled for different metallicities; (c) the different distance range for stars of different metallicities combined with different spatial distributions means that different fractions of the total volume occupied by a stellar population are observed.

We assume that a spectroscopic survey is based on a pre-existing photometric catalog, presumed complete to potential spectroscopic targets. The survey selection procedure can then ideally be summarized by (a) the cuts on the photometric catalog to produce the potential spectroscopic targets, (b) the sampling method, and (c) potential quality cuts for defining a successfully observed spectrum (for example, a signal-to-noise ratio cut on the spectrum). We will assume that the sampling method is such that targets are selected independently from each other. If targets are not selected independently from each other (such as, for example, in systematic sampling techniques where each ‘N’th item in an ordered list is observed), then correcting for the SSF may be more complicated. For ease of use of the SSF, spectroscopic targets should be sampled independently from each other.